Potato Visual Navigation Line Detection Based on Deep Learning and Feature Midpoint Adaptation

Abstract

1. Introduction

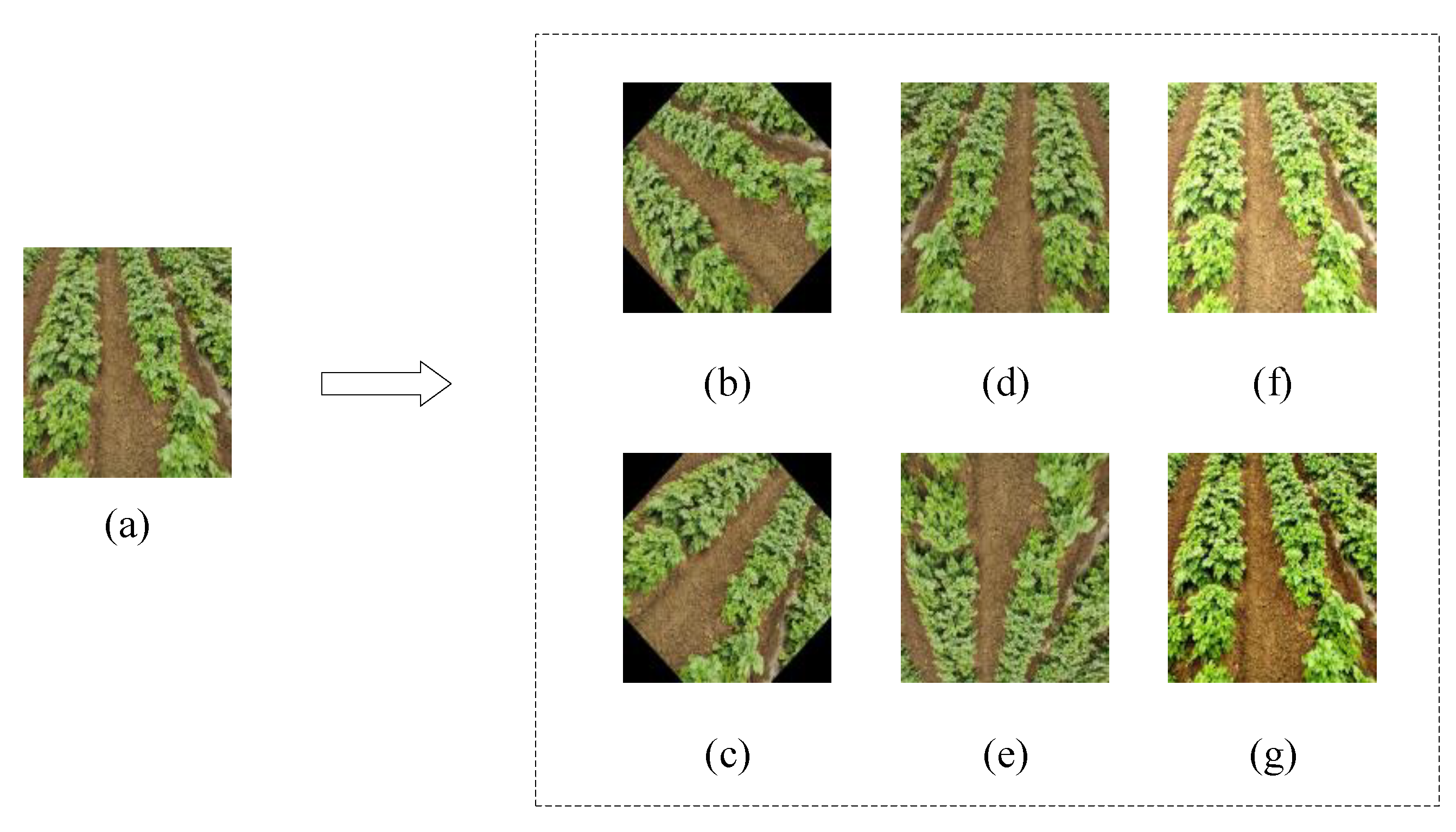

- A potato crop row dataset was established under various growth periods and lighting conditions.

- Based on an improved U-Net, a segmentation and recognition model for potato crop rows was constructed.

- A complete detection scheme for the potato visual navigation line suitable for multiple growth periods was proposed.

2. Materials and Methods

- Potato crop row segmentation and prediction: First, a dataset of potato crop rows under various working conditions is established. An improved U-Net semantic segmentation model is then trained on this dataset to obtain the model weights, with data augmentation applied during training to prevent overfitting. Finally, newly acquired images are segmented using the trained weights to obtain masks that separate the potato crop rows from the background.

- Feature midpoint adaptation fitting: First, the ROI is set on the segmented binary mask. Second, the edges of the potato crop rows within the ROI are extracted, and the center of each crop row is located from the extracted boundary points. Because the segmentation is occasionally unsatisfactory and the crop row center may then be positioned incorrectly, a simple k-means algorithm is introduced to correct it, although this situation is extremely rare. The crop row centers are then used to locate the lane center points, and finally the least squares method is used to fit the navigation line to the lane center points.
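
The last two steps of the second stage (lane centers from crop row centers, then a least squares fit) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the sample coordinates, and the choice to fit x as a function of y are all assumptions made here for clarity.

```python
import numpy as np

def lane_centers(row_centers):
    """Midpoints between horizontally adjacent crop-row centers on one scan line.

    row_centers: list of (x, y) crop-row center points found on the same image row.
    """
    pts = sorted(row_centers)  # order left to right by x
    return [((x0 + x1) / 2.0, (y0 + y1) / 2.0)
            for (x0, y0), (x1, y1) in zip(pts, pts[1:])]

def fit_navigation_line(points):
    """Least-squares fit of x = a*y + b through the lane center points.

    Fitting x against y (an assumption of this sketch) avoids an infinite
    slope when the navigation line is close to vertical in the image.
    """
    pts = np.asarray(points, dtype=float)
    a, b = np.polyfit(pts[:, 1], pts[:, 0], deg=1)
    return a, b

# Hypothetical crop-row centers on three scan lines (two crop rows per line).
scan_lines = [
    [(100.0, 300.0), (220.0, 300.0)],
    [(110.0, 200.0), (210.0, 200.0)],
    [(120.0, 100.0), (200.0, 100.0)],
]
centers = [c for line in scan_lines for c in lane_centers(line)]
a, b = fit_navigation_line(centers)
print(f"navigation line: x = {a:.3f} * y + {b:.3f}")
```

In this made-up example the two crop rows converge symmetrically, so the lane centers all lie on a vertical line and the fitted slope is zero.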

2.1. Data Collection and Annotation

2.2. Semantic Segmentation

2.3. Model Training and Data Augmentation

2.4. Crop Row Detection Based on Feature Midpoint Adaptation

2.4.1. ROI Determination

2.4.2. Crop Row Feature Point Extraction

2.4.3. K-Means Clustering

2.4.4. Least Squares Fitting

| Algorithm 1. Adaptive Midpoint Fitting Algorithm |
|---|
| (1) Define $l_n$, $r_n$, $b_n$, and $c_{n/2}$ as four sets to store the left borders of the crop rows, the right borders of the crop rows, the center points of the crop rows, and the coordinates of the navigation reference points, respectively. |
| (2) Input the image after semantic segmentation; set the interlacing interval k and the threshold α. |
| (3) Convert the image to a single-channel binary image and set the ROI. |
| (4) Perform an interlaced traversal scan (every k-th row). |
| (5) Scan all pixel coordinates of one row. |
| (6) If α/2 consecutive pixels have a value of 255 and the following α/2 consecutive pixels have a value of 0: |
| (7) Output the coordinate of the (α/2)th pixel and store it in $l_n$. |
| (8) If α/2 consecutive pixels have a value of 0 and the following α/2 consecutive pixels have a value of 255: |
| (9) Output the coordinate of the (α/2)th pixel and store it in $r_n$. |
| (10) Traverse $l_n$ and $r_n$, calculate the midpoint coordinates of the green feature of the crop, and store them in $b_n$. |
| (11) When the number of green feature points in a horizontal row is greater than 2, perform k-means clustering to obtain the centroid coordinates. |
| (12) Remove the original feature points from the set and put the obtained centroid coordinates into the original feature point set. |
| (13) Calculate the center position of each pair of adjacent coordinate points in $b_n$ and store it in $c_{n/2}$. |
| (14) Use least squares fitting to obtain the navigation line. |
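
Steps (4) to (12) of Algorithm 1 can be sketched in Python as below. This is an illustrative reading of the pseudocode, not the authors' code. Several points are assumptions: the mask passed in is already cropped to the ROI, crop pixels are 0 and background pixels are 255 (which is what the 255-then-0 left-border test in step (6) implies; swap the values for the opposite polarity), the number of clusters equals the expected number of crop rows in the ROI (`n_rows`, set to 2 here), and all function names are hypothetical.

```python
import numpy as np

def find_transitions(row, half, first, second):
    """Indices where `half` pixels equal to `first` are followed by `half` pixels
    equal to `second`. Returns the index of the last pixel of the first run,
    i.e. the (alpha/2)th pixel of the window."""
    hits, i, n = [], 0, len(row)
    while i + 2 * half <= n:
        window = row[i:i + 2 * half]
        if np.all(window[:half] == first) and np.all(window[half:] == second):
            hits.append(i + half - 1)
            i += half  # jump to the start of the second run
        else:
            i += 1
    return hits

def kmeans_1d(values, n_clusters=2, iters=20):
    """Plain Lloyd's k-means on scalar x positions; returns sorted centroids."""
    values = np.asarray(values, dtype=float)
    # Initialise centroids spread evenly between the smallest and largest value.
    centroids = np.quantile(values, np.linspace(0.0, 1.0, n_clusters))
    for _ in range(iters):
        labels = np.argmin(np.abs(values[:, None] - centroids[None, :]), axis=1)
        new = np.array([values[labels == j].mean() if np.any(labels == j)
                        else centroids[j] for j in range(n_clusters)])
        if np.allclose(new, centroids):
            break
        centroids = new
    return np.sort(centroids)

def row_midpoints(mask, k=10, alpha=8, n_rows=2):
    """Scan every k-th row of the ROI mask and return crop-row centers (x, y)."""
    half = alpha // 2
    points = []
    for y in range(0, mask.shape[0], k):                  # step (4)
        row = mask[y]                                     # step (5)
        lefts = find_transitions(row, half, 255, 0)       # steps (6)-(7)
        rights = find_transitions(row, half, 0, 255)      # steps (8)-(9)
        mids = []
        for l in lefts:                                   # step (10)
            later = [r for r in rights if r > l]
            if later:
                mids.append((l + later[0]) / 2.0)
        if len(mids) > n_rows:                            # steps (11)-(12)
            mids = list(kmeans_1d(mids, n_clusters=n_rows))
        points.extend((x, float(y)) for x in mids)
    return points

# Synthetic ROI mask: background 255, two crop rows (value 0) at columns 20-29 and 70-79.
mask = np.full((40, 100), 255, dtype=np.uint8)
mask[:, 20:30] = 0
mask[:, 70:80] = 0
points = row_midpoints(mask)
```

With a clean mask each scanned row yields exactly two midpoints, so the k-means branch is not triggered; it only runs when a fragmented segmentation produces extra feature points on a row.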

3. Results and Discussion

3.1. Semantic Segmentation Experiment

3.2. Feature Midpoint Adaptation Fitting

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Department of Economic and Social Affairs of the United Nations. World Population Prospects: The 2012 Revision; Population Division of the Department of Economic and Social Affairs of the United Nations Secretariat: New York, NY, USA, 2013; p. 18.

- Lu, J. The Mechanism and Parameter Optimization of the Key Device of Pneumatic Precision Seeding of Potatoes. Ph.D. Thesis, Heilongjiang Bayi Agricultural Reclamation University, Daqing, China, 2020.

- Zhai, Z.; Zhu, Z.; Du, Y.; Song, Z.; Mao, E. Multi-crop-row detection algorithm based on binocular vision. Biosyst. Eng. 2016, 150, 89–103.

- Chen, W. Research on Stubble Avoidance Technology of No-Till Planter Based on Machine Vision. Ph.D. Thesis, China Agricultural University, Beijing, China, 2018.

- Josiah, R.; Julie, C.; Duke, M. Machine vision for orchard navigation. Comput. Ind. 2018, 98, 165–171.

- Ruotsalainen, L.; Morrison, A.; Mäkelä, M.; Rantanen, J.; Sokolova, N. Improving Computer Vision-Based Perception for Collaborative Indoor Navigation. IEEE Sens. J. 2022, 22, 4816–4826.

- Adamkiewicz, M.; Chen, T.; Caccavale, A.; Gardner, R.; Culbertson, P.; Bohg, J.; Schwager, M. Vision-Only Robot Navigation in a Neural Radiance World. IEEE Robot. Autom. Lett. 2022, 7, 4606–4613.

- Huang, P.; Zheng, Q.; Liang, C. Overview of Image Segmentation Methods. J. Wuhan Univ. (Sci. Ed.) 2020, 66, 519–531.

- Zhou, Y.; Yang, Y.; Zhang, B.L.; Wen, X.; Yue, X.; Chen, L. Autonomous detection of crop rows based on adaptive multi-ROI in maize fields. Int. J. Agric. Biol. Eng. 2021, 14, 1934–6344.

- Søgaard, H.T.; Olsen, H.J. Determination of crop rows by image analysis without segmentation. Comput. Electron. Agric. 2003, 38, 141–158.

- Li, M.; Zhang, M.; Meng, Q. Rapid detection method of agricultural machinery visual navigation baseline based on scanning filtering. Trans. Chin. Soc. Agric. Eng. 2013, 29, 41–47.

- Yu, Y.; Bao, Y.; Wang, J.; Chu, H.; Zhao, N.; He, Y.; Liu, Y. Crop Row Segmentation and Detection in Paddy Fields Based on Treble-Classification Otsu and Double-Dimensional Clustering Method. Remote Sens. 2021, 13, 901.

- Montalvo, M.; Pajares, G.; Guerrero, J.M.; Romeo, J.; Guijarro, M.; Ribeiro, A.; Ruz, J.J.; Cruz, J.M. Automatic detection of crop rows in maize fields with high weeds pressure. Expert Syst. Appl. 2012, 39, 11889–11897.

- Gai, J.; Xiang, L.; Tang, L. Using a depth camera for crop row detection and mapping for under-canopy navigation of agricultural robotic vehicle. Comput. Electron. Agric. 2021, 188, 106301.

- Konstantinos, C.; Ioannis, K.; Antonios, G. Thorough robot navigation based on SVM local planning. Robot. Auton. Syst. 2015, 70, 166–180.

- Ulrich, B.; Marian, H.; Erik, M. An Autonomous Forklift with 3D Time-of-Flight Camera-Based Localization and Navigation. In Proceedings of the 2018 15th International Conference on Control, Automation, Robotics and Vision (ICARCV), Singapore, 18–21 November 2018; pp. 1739–1746.

- Fue, K.; Porter, W.; Barnes, E.; Li, C.; Rains, G. Evaluation of a Stereo Vision System for Cotton Row Detection and Boll Location Estimation in Direct Sunlight. Agronomy 2020, 10, 1137.

- Wang, J.; Liu, Y.; Niu, S.; Song, H. Bio-inspired routing for heterogeneous Unmanned Aircraft Systems (UAS) swarm networking. Comput. Electr. Eng. 2021, 95, 107401.

- Yang, X.; Li, X. Research on Autonomous Driving Technology Based on Deep Reinforcement Learning. Netw. Secur. Technol. Appl. 2021, 1, 136–138.

- Yang, Y.; Mei, G. Pneumonia Recognition by Deep Learning: A Comparative Investigation. Appl. Sci. 2022, 12, 4334.

- Hwang, J.H.; Seo, J.W.; Kim, J.H.; Park, S.; Kim, Y.J.; Kim, K.G. Comparison between Deep Learning and Conventional Machine Learning in Classifying Iliofemoral Deep Venous Thrombosis upon CT Venography. Diagnostics 2022, 12, 274.

- Kastrati, Z.; Dalipi, F.; Imran, A.S.; Pireva Nuci, K.; Wani, M.A. Sentiment Analysis of Students’ Feedback with NLP and Deep Learning: A Systematic Mapping Study. Appl. Sci. 2021, 11, 3986.

- Niu, S.; Liu, Y.; Wang, J.; Song, H. A Decade Survey of Transfer Learning (2010–2020). Trans. Artif. Intell. 2020, 1, 151–166.

- Zhao, C.; Wen, C.; Lin, S.; Guo, W.; Long, J. A method for identifying and detecting tomato flowering period based on cascaded convolutional neural network. Trans. Chin. Soc. Agric. Eng. 2020, 36, 143–152.

- Xiang, R.; Zhang, M.; Zhang, J. Recognition for Stems of Tomato Plants at Night Based on a Hybrid Joint Neural Network. Agriculture 2022, 12, 743.

- Jiang, H.; Zhang, C.; Zhang, Z.; Mao, W.; Wang, D.; Wang, D.-W. Maize field weed detection method based on Mask R-CNN. Trans. Chin. Soc. Agric. Mach. 2020, 6, 220–228, 247.

- Fan, X.; Zhou, J.; Xu, Y.; Li, K.; Wen, D. Identification and location of weeds in cotton seedling based on optimized Faster R-CNN. Trans. Chin. Soc. Agric. Mach. 2021, 5, 26–34.

- Yang, S.; Feng, Q.; Zhang, J.; Sun, W.; Wang, G. Potato disease recognition method based on deep learning and compound dictionary. Trans. Chin. Soc. Agric. Mach. 2020, 7, 22–29.

- Xi, R.; Jiang, K.; Zhang, W.; Lu, Z.; Hou, J. Potato sprout eye recognition method based on improved Faster R-CNN. Trans. Chin. Soc. Agric. Mach. 2020, 51, 216–223.

- Bansal, P.; Kumar, R.; Kumar, S. Disease Detection in Apple Leaves Using Deep Convolutional Neural Network. Agriculture 2021, 11, 617.

- Wang, L.; Yue, X.; Wang, H.; Ling, K.; Liu, Y.; Wang, J.; Hong, J.; Pen, W.; Song, H. Dynamic Inversion of Inland Aquaculture Water Quality Based on UAVs-WSN Spectral Analysis. Remote Sens. 2020, 12, 402.

- Lin, Y.; Chen, S. Development of Navigation System for Tea Field Machine Using Semantic Segmentation. IFAC Pap. 2019, 52, 108–113.

- Li, J.; Yin, J.; Deng, L. A robot vision navigation method using deep learning in edge computing environment. EURASIP J. Adv. Signal Processing 2021, 2021, 22.

- Adhikari, S.P.; Kim, G.; Kim, H. Deep Neural Network-based System for Autonomous Navigation in Paddy Field. IEEE Access 2020, 8, 71272–71278.

- Adhikari, S.; Yang, H.; Kim, H. Learning Semantic Graphics Using Convolutional Encoder–Decoder Network for Autonomous Weeding in Paddy. Front. Plant Sci. 2019, 10, 1404.

- Ponnambalam, V.R.; Bakken, M.; Moore, R.J.D.; Glenn Omholt Gjevestad, J.; Johan From, P. Autonomous Crop Row Guidance Using Adaptive Multi-ROI in Strawberry Fields. Sensors 2020, 20, 5249.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Bah, M.; Hafiane, A.; Canals, R. CRowNet: Deep Network for Crop Row Detection in UAV Images. IEEE Access 2020, 8, 5189–5200.

- Zhang, Q.; Wang, J.; Li, B. Extraction method for centerlines of rice seedings based on YOLOv3 target detection. Trans. Chin. Soc. Agric. Mach. 2020, 51, 34–43.

- Gao, Z. Method for Kiwi Trunk Detection and Navigation Line Fitting Based on Deep Learning. Master’s Thesis, Northwest A & F University, Xianyang, China, 2020.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention, Proceedings of the 18th International Conference, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015.

- Han, Z.; Li, J.; Yuan, Y. Path Recognition of Orchard Visual Navigation Based on U Net. Trans. Chin. Soc. Agric. Mach. 2021, 52, 30–39.

- Yang, Y.; Zhang, B.; Zha, J.; Wen, X.; Chen, L.; Zhang, T.; Dong, X.; Yang, X. Real-time extraction of navigation line between corn row. Trans. Chin. Soc. Agric. Eng. 2020, 36, 162–171.

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

| Working Conditions: Growth Period | Working Conditions: Time of Day | Number of Acquired Images |
|---|---|---|
| Seedling period | 8:00–9:00 | 167 |
| Seedling period | 10:30–11:00 | 156 |
| Seedling period | 17:00–18:30 | 178 |
| Tillering period | 8:30–9:30 | 210 |
| Tillering period | 14:00–15:00 | 292 |
| Tuber period | 7:30–8:30 | 158 |
| Tuber period | 16:00–17:00 | 175 |

| Model | MPA/% | MIOU/% | FPS/(f/s) |
|---|---|---|---|
| VU-Net | 97.29 | 93.94 | 12.62 |
| U-Net | 94.35 | 90.06 | 18.30 |
| SegNet | 90.52 | 86.54 | 11.15 |
| PSPNet | 92.37 | 87.45 | 15.59 |
| DeepLab V3 | 93.71 | 90.94 | 10.79 |
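
The MPA and MIOU columns are pixel-level segmentation metrics. The excerpt does not give the exact formulas used, so the sketch below uses the conventional definitions as an assumption: mean pixel accuracy averages the per-class recall, and mean intersection over union averages TP / (TP + FP + FN) per class. The function names and the tiny label arrays are made up for illustration.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes=2):
    """Rows are ground-truth classes, columns are predicted classes."""
    idx = n_classes * y_true.ravel().astype(int) + y_pred.ravel().astype(int)
    return np.bincount(idx, minlength=n_classes ** 2).reshape(n_classes, n_classes)

def mpa_miou(cm):
    """Mean pixel accuracy and mean IoU from a confusion matrix.

    Assumes every class occurs at least once in the ground truth,
    otherwise the per-class ratios divide by zero.
    """
    tp = np.diag(cm).astype(float)
    per_class_pa = tp / cm.sum(axis=1)                           # TP / (TP + FN)
    per_class_iou = tp / (cm.sum(axis=1) + cm.sum(axis=0) - tp)  # TP / (TP + FP + FN)
    return per_class_pa.mean(), per_class_iou.mean()

# Toy 2x4 label maps: 0 = background, 1 = crop row.
truth = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1]])
pred = np.array([[0, 1, 1, 1],
                 [0, 0, 1, 0]])
cm = confusion_matrix(truth, pred)
mpa, miou = mpa_miou(cm)
print(f"MPA = {mpa:.2%}, MIoU = {miou:.2%}")
```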

| Growth Periods | Lighting Conditions | MPA/% | MIOU/% | FPS/(f/s) |
|---|---|---|---|---|
| Seedling period | weak light intensity | 97.72 | 90.36 | 12.78 |
| Seedling period | strong light intensity | 96.44 | 89.63 | 12.77 |
| Tillering period | weak light intensity | 95.46 | 86.64 | 12.83 |
| Tillering period | strong light intensity | 93.25 | 84.56 | 12.83 |
| Tuber period | weak light intensity | 97.35 | 93.21 | 12.52 |
| Tuber period | strong light intensity | 95.27 | 86.12 | 12.50 |

| Index | This Paper | Literature [37] |
|---|---|---|
| Segment Objects | Potato crop row with background | Orchard road and background |
| Model | U-Net | U-Net |
| Backbone | VGG16 | Unused |
| Dataset size | 1200 | 1200 |
| Accuracy/% | 97.72 | 94.51 |

| Season of Growth | Hough Transform: Average Angular Deviation | Hough Transform: Execution Time | Least Squares: Average Angular Deviation | Least Squares: Execution Time |
|---|---|---|---|---|
| Seedling period | 2.35 | 0.712 ± 0.05 | 2.03 | 0.625 ± 0.03 |
| Tillering period | 1.87 | 0.701 ± 0.05 | 1.32 | 0.532 ± 0.03 |
| Tuber period | 3.56 | 0.776 ± 0.05 | 3.13 | 0.654 ± 0.03 |
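
The excerpt does not define how the average angular deviation is measured. One plausible reading, assumed here, is the acute angle between each detected navigation line and a manually annotated reference line, averaged over the test images. The sketch below illustrates that calculation for lines written as x = a·y + b; the function name and the slope values are hypothetical.

```python
import math

def angular_deviation_deg(slope_detected, slope_reference):
    """Acute angle in degrees between two lines given as x = a*y + b."""
    d = abs(math.degrees(math.atan(slope_detected) - math.atan(slope_reference)))
    return min(d, 180.0 - d)

# Hypothetical slopes of detected and reference lines for two images.
detected = [0.0, 1.0]
reference = [0.0, 0.0]
deviations = [angular_deviation_deg(d, r) for d, r in zip(detected, reference)]
mean_dev = sum(deviations) / len(deviations)
print(f"average angular deviation: {mean_dev:.2f} degrees")
```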

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, R.; Zhai, Y.; Zhang, J.; Zhang, H.; Tian, G.; Zhang, J.; Huang, P.; Li, L. Potato Visual Navigation Line Detection Based on Deep Learning and Feature Midpoint Adaptation. Agriculture 2022, 12, 1363. https://doi.org/10.3390/agriculture12091363
