Sichuan Pepper Recognition in Complex Environments: A Comparison Study of Traditional Segmentation versus Deep Learning Methods

Abstract

1. Introduction

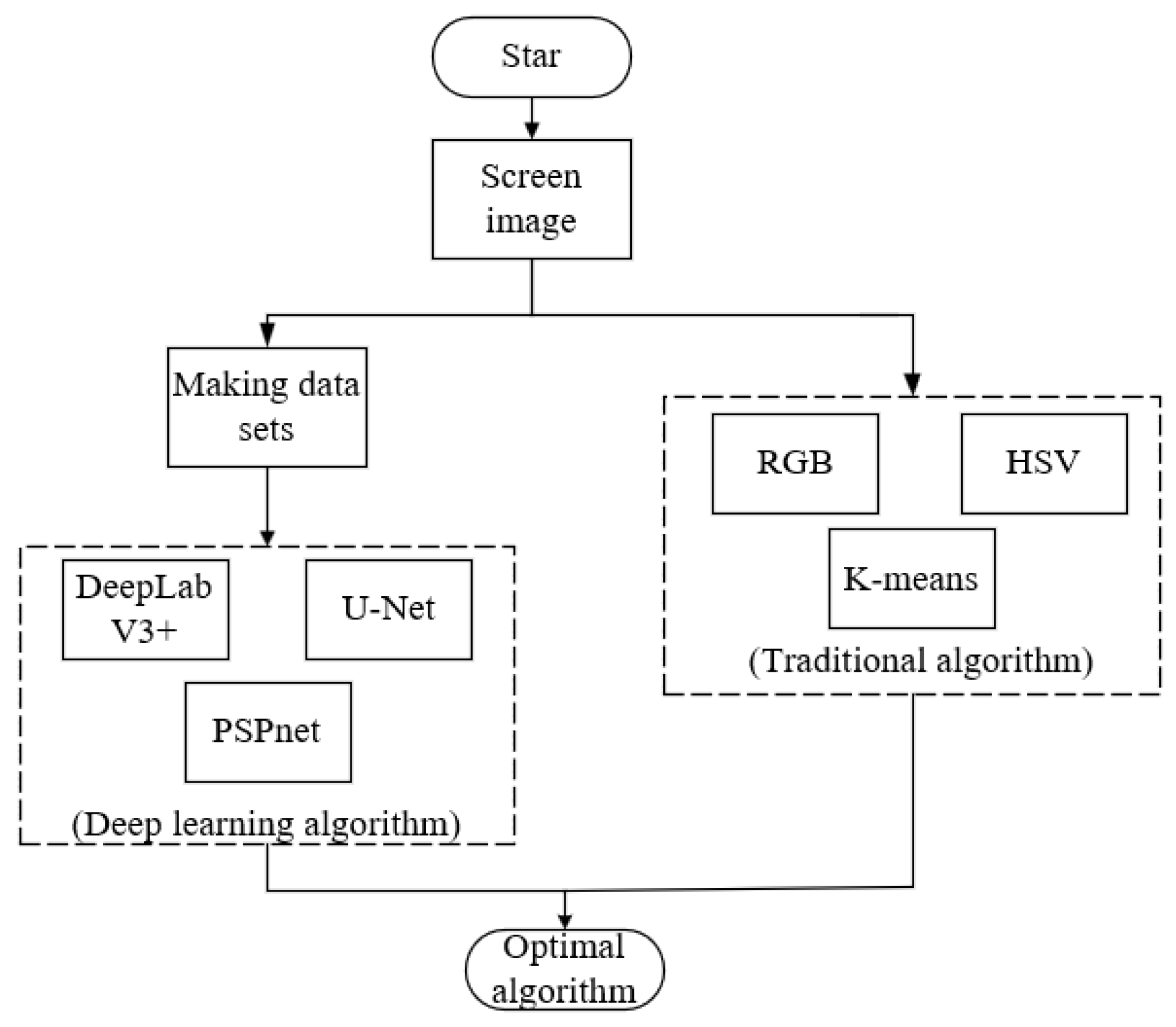

2. Materials and Methods

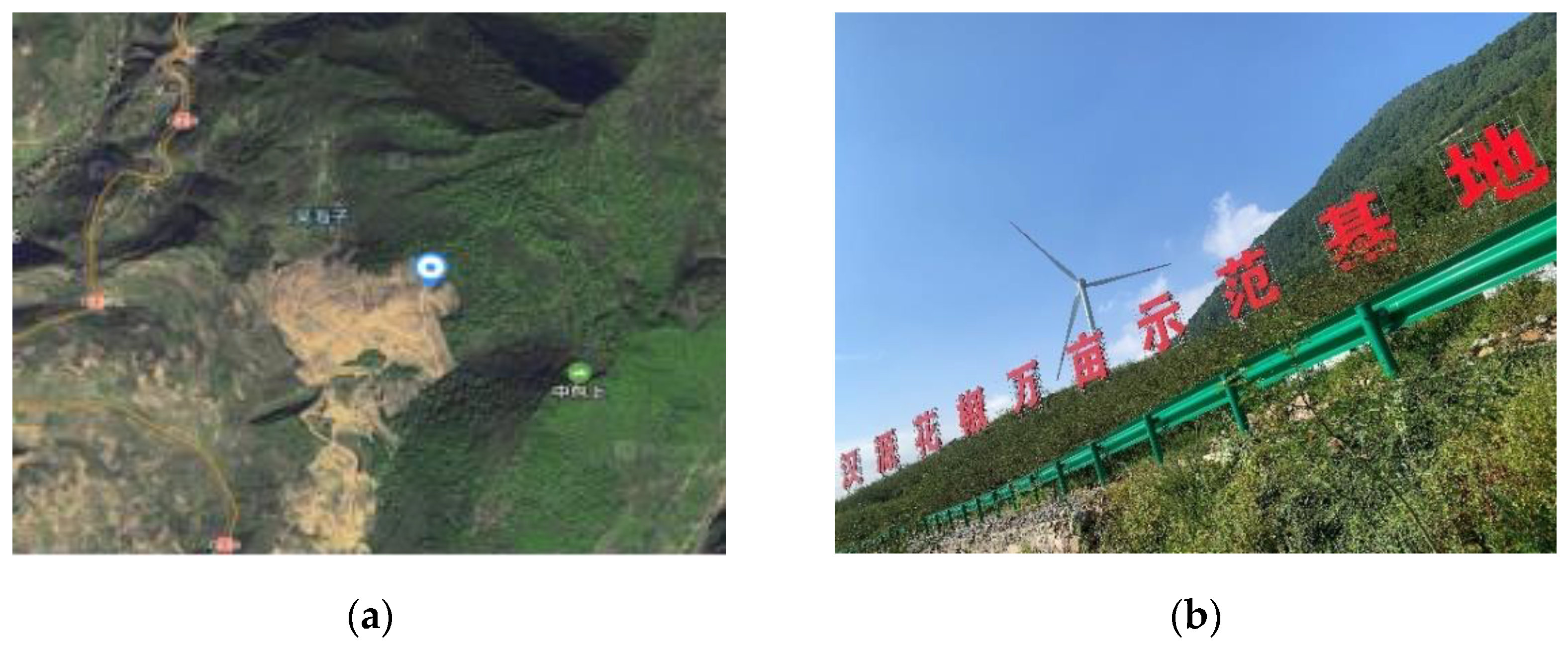

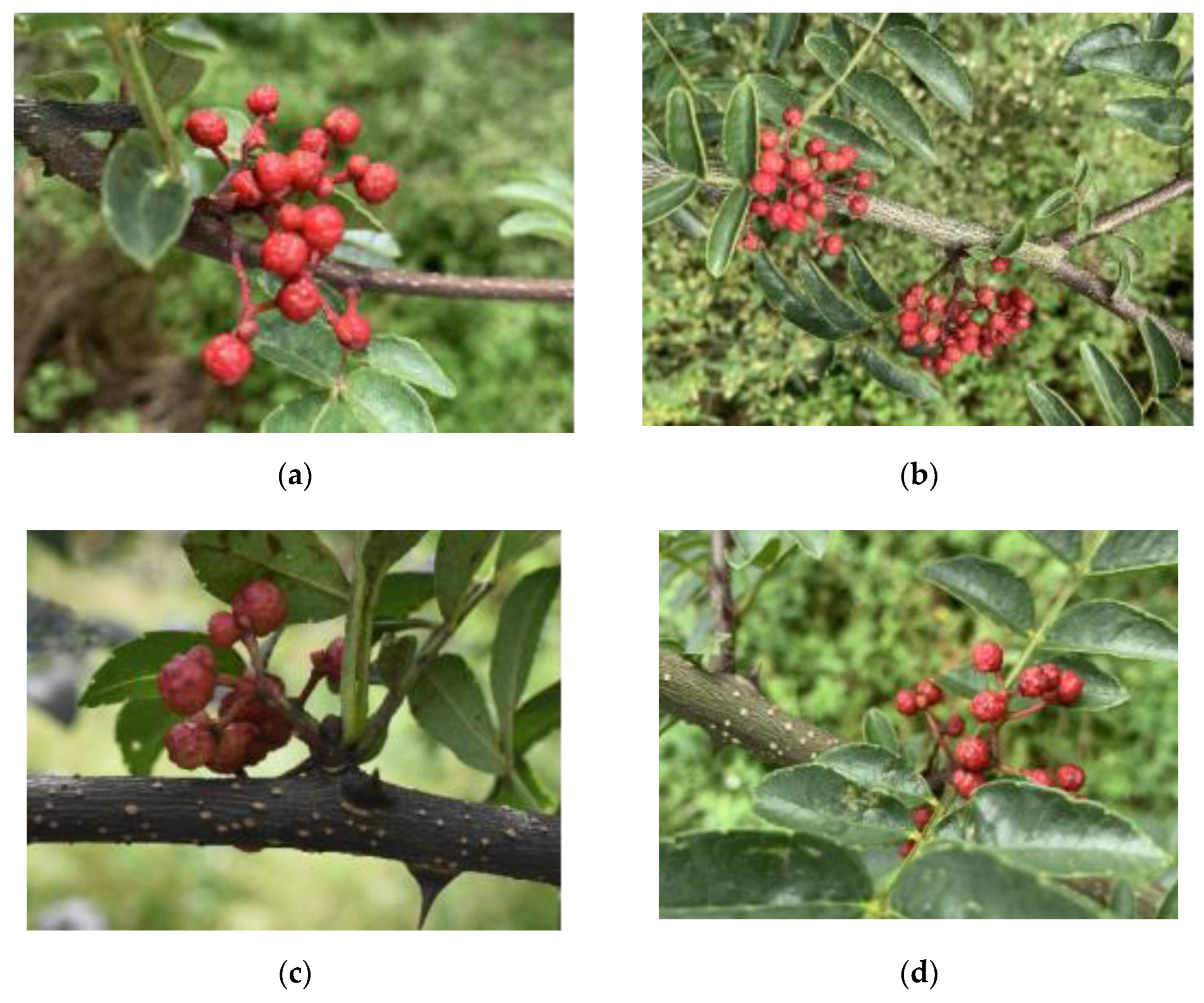

2.1. Image Acquisition

2.2. Sichuan Pepper Datasets

2.3. Traditional Segmentation Algorithm

2.3.1. RGB Color Space Algorithm

2.3.2. HSV Color Space Algorithm

2.3.3. K-Means Clustering Segmentation

- (1) Select appropriate initial central values for the K classes.

- (2) In the nth iteration, compute the distance from each sample to the K cluster centers and assign the sample to the class whose center is nearest.

- (3) Update the central value of each class using the mean of the samples assigned to it.

- (4) For all K cluster centers, repeat steps (2) and (3); the iteration ends when the cluster center values no longer change, otherwise it continues.
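The four steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not the implementation used in the study: the function name `kmeans`, the random choice of initial centers, the Euclidean distance, and the `max_iter` and `seed` parameters are all assumptions made for the example.

```python
import numpy as np

def kmeans(samples, k, max_iter=100, seed=0):
    """Cluster an (N, D) array into k classes, following steps (1)-(4)."""
    samples = np.asarray(samples, dtype=float)
    rng = np.random.default_rng(seed)
    # Step (1): pick k distinct samples as initial centers (assumed strategy).
    centers = samples[rng.choice(len(samples), size=k, replace=False)]
    for _ in range(max_iter):
        # Step (2): Euclidean distance from every sample to every center,
        # then assign each sample to its nearest center.
        dists = np.linalg.norm(samples[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Step (3): move each center to the mean of its members
        # (an empty class keeps its previous center).
        new_centers = np.array([
            samples[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        # Step (4): stop once the centers no longer change.
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers
```

For image segmentation, the pixels of an H x W x 3 image can be passed as `image.reshape(-1, 3)`, and the returned labels reshaped back to H x W to obtain a class map.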

2.4. Deep Learning Algorithm

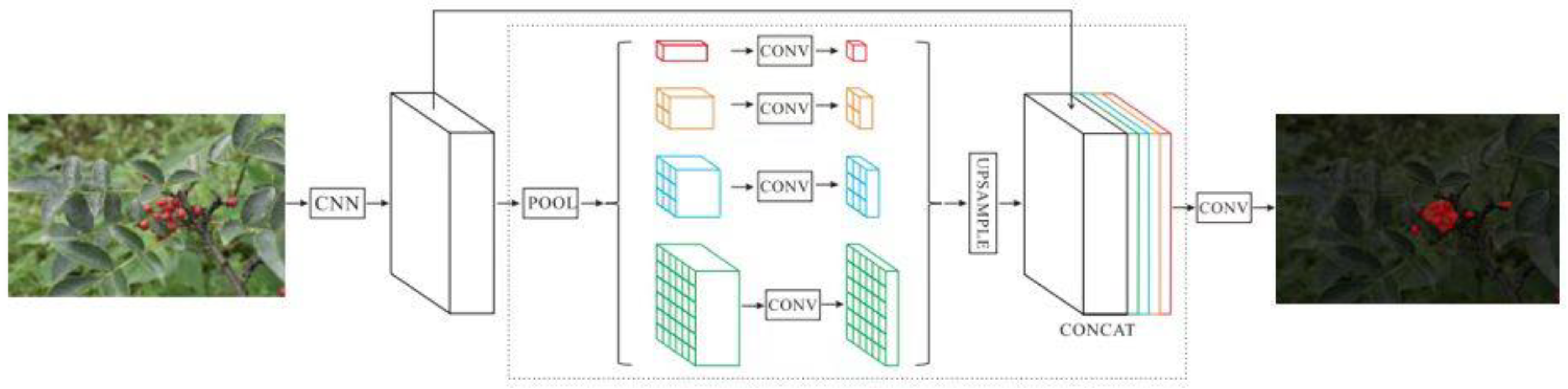

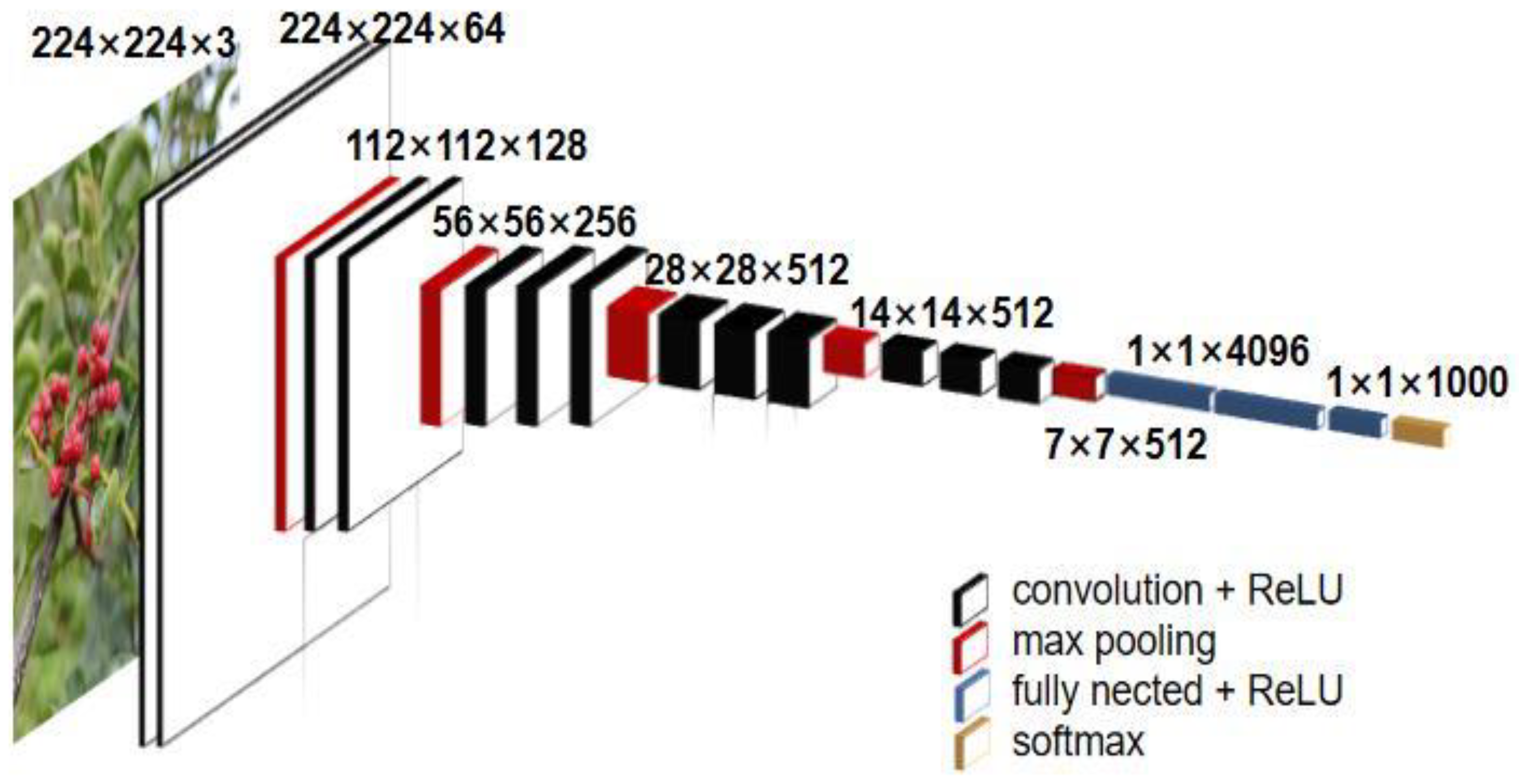

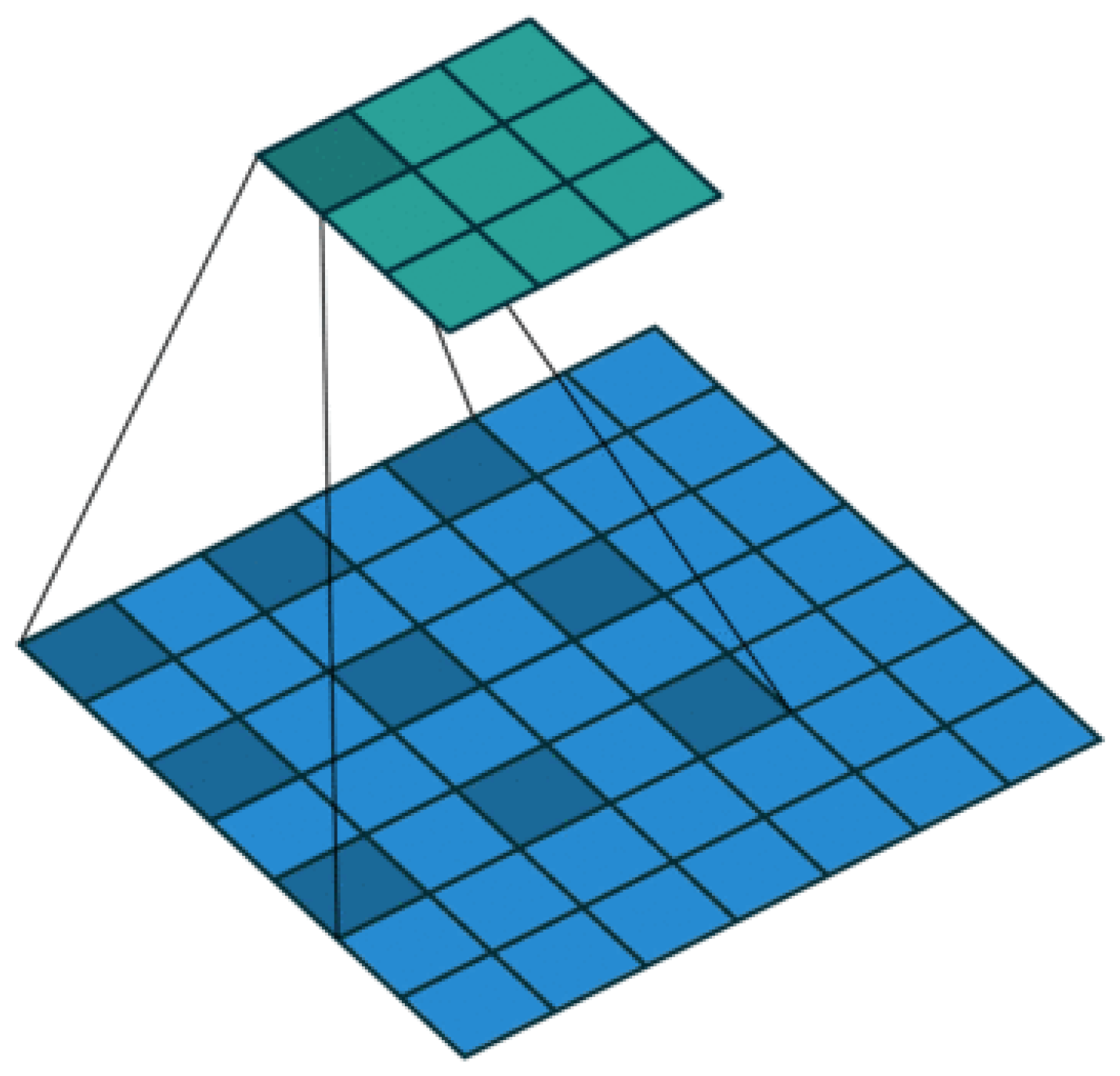

2.4.1. PSPnet Algorithm

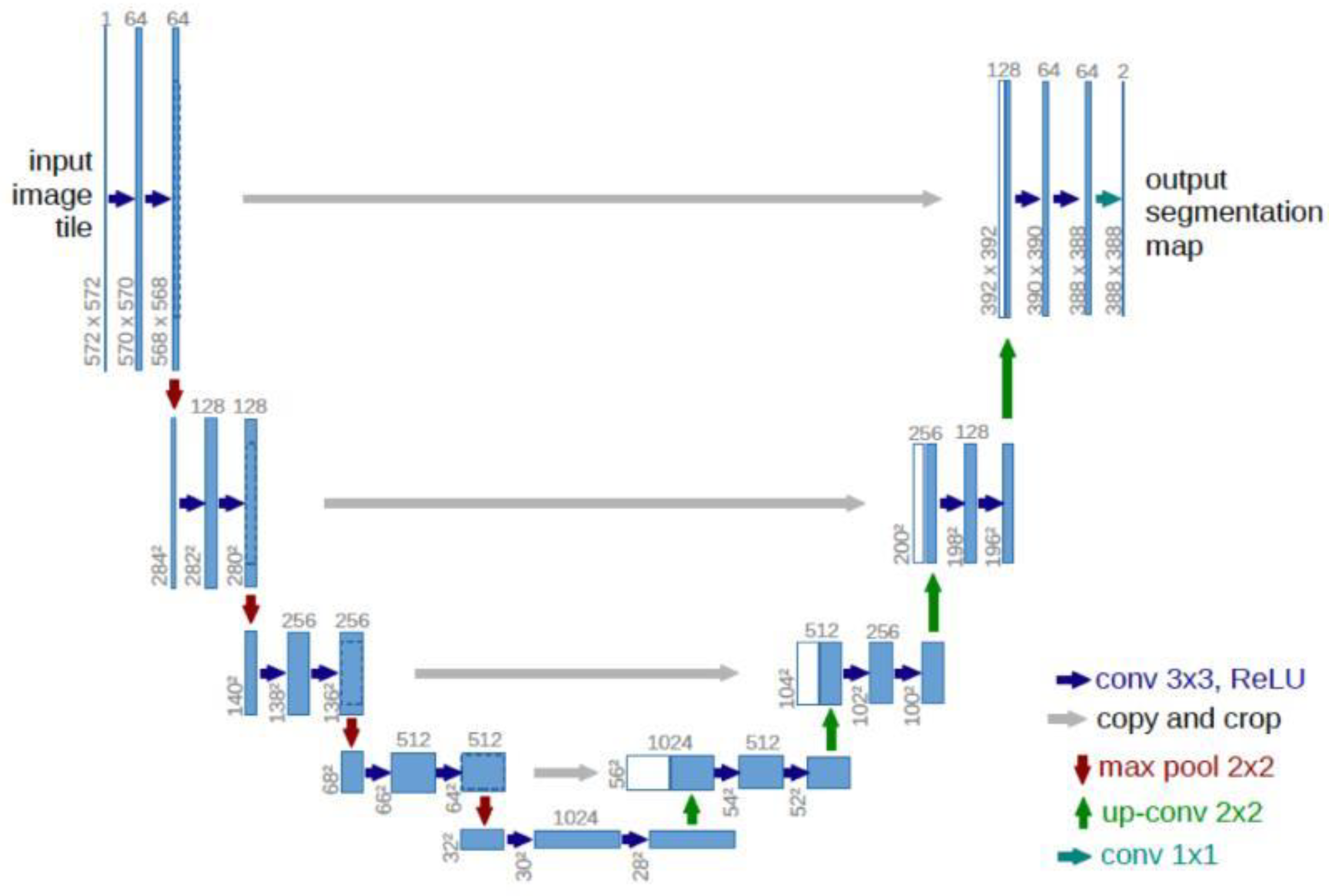

2.4.2. U-Net Algorithm

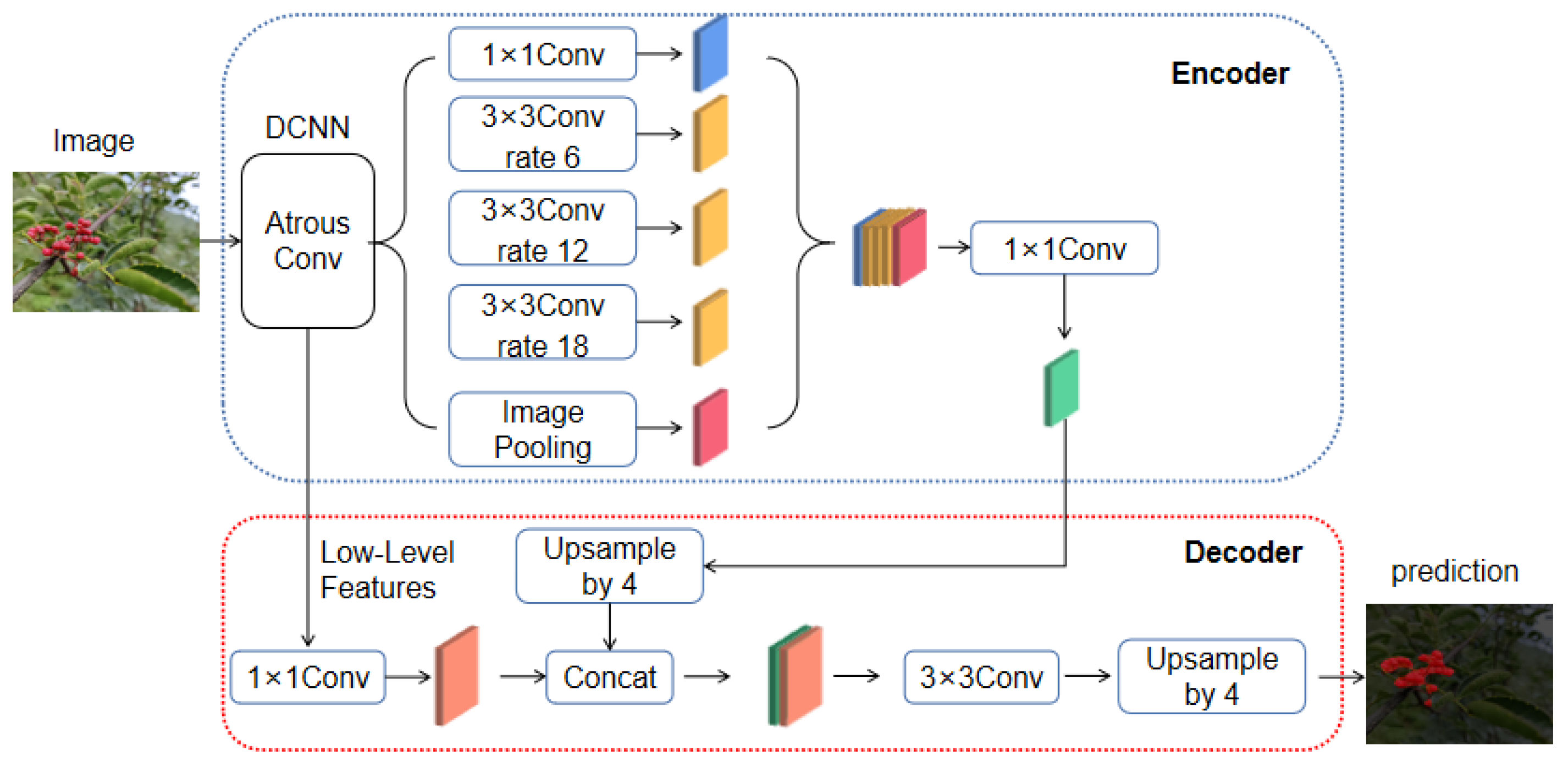

2.4.3. DeepLabV3+ Algorithm

2.5. Partition Accuracy and Evaluation Criteria

3. Results

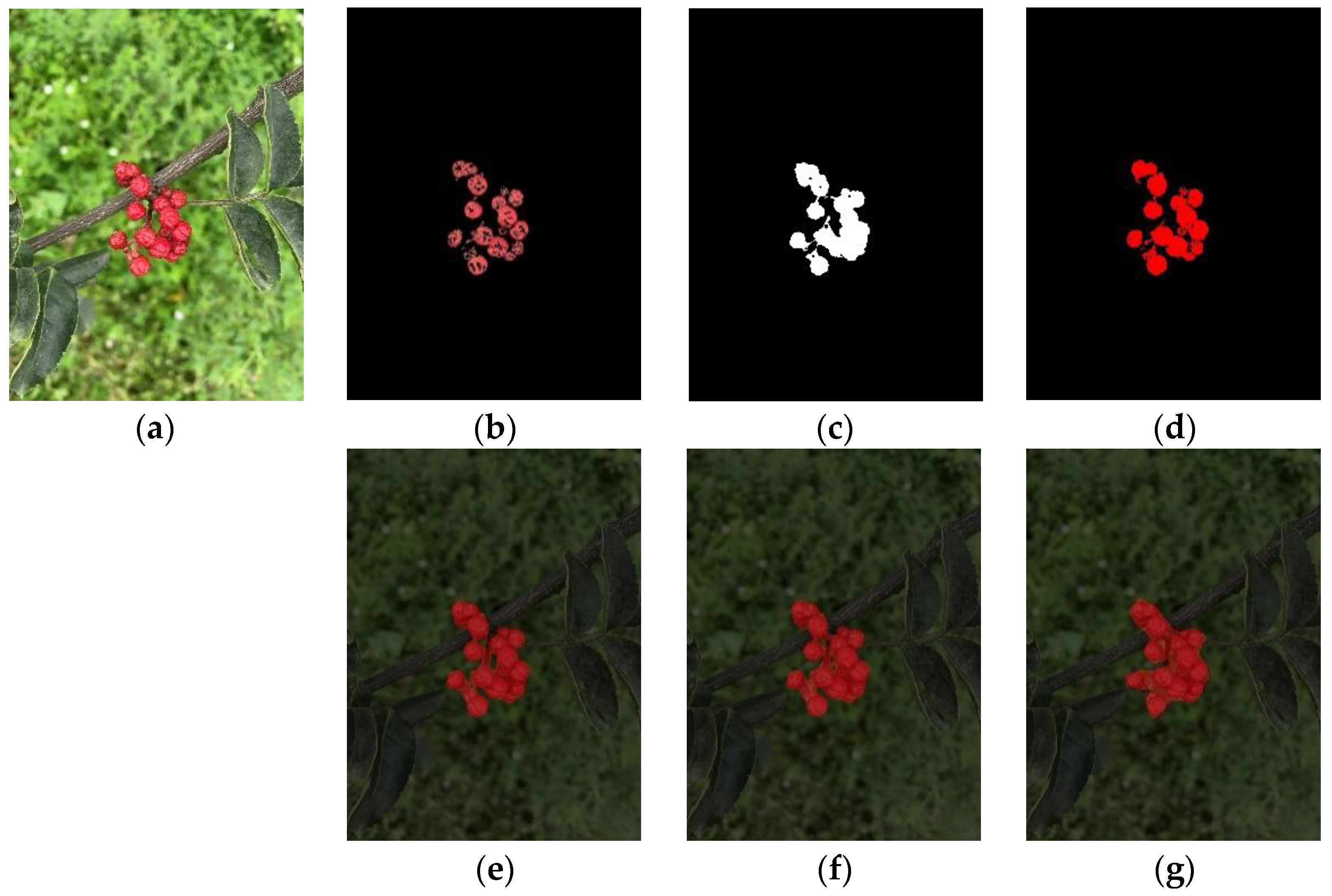

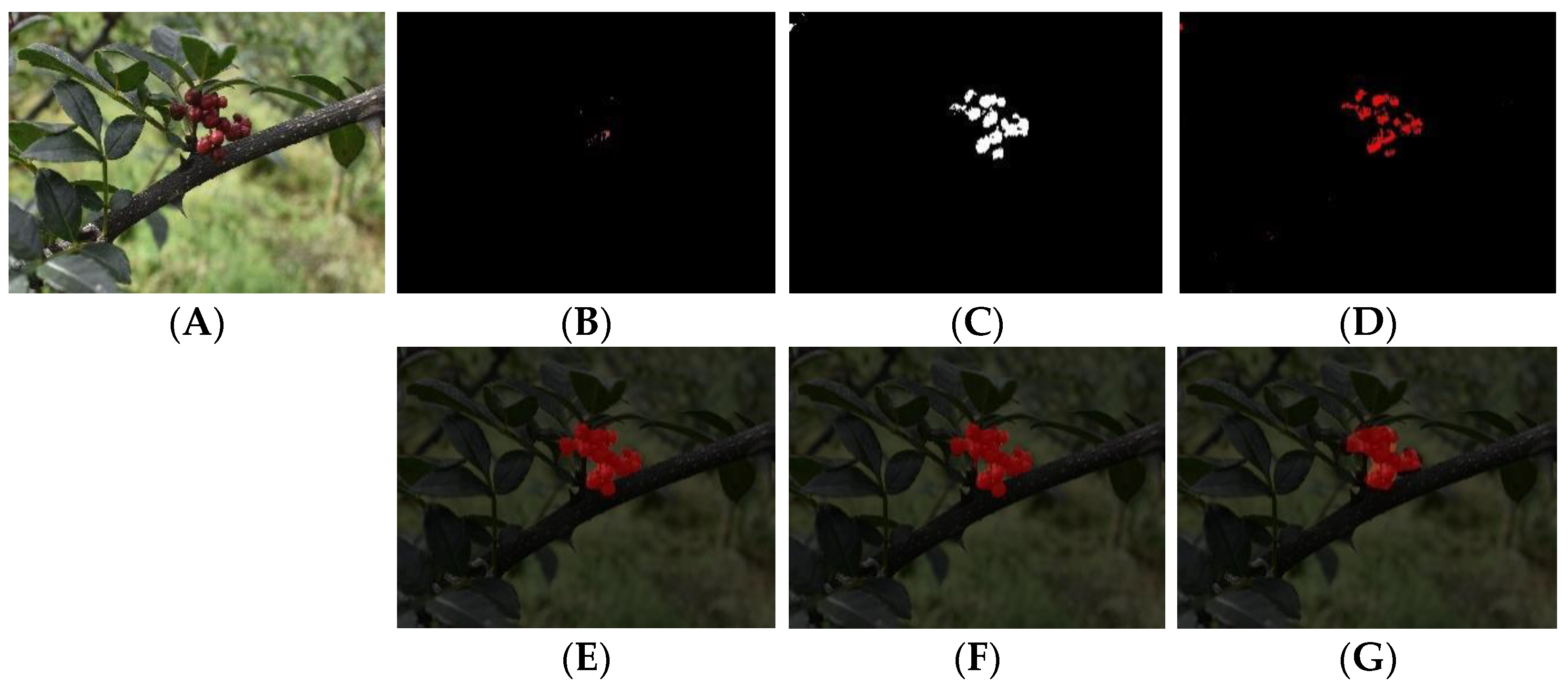

3.1. Sichuan Pepper Segmentation with Varying Light Intensity

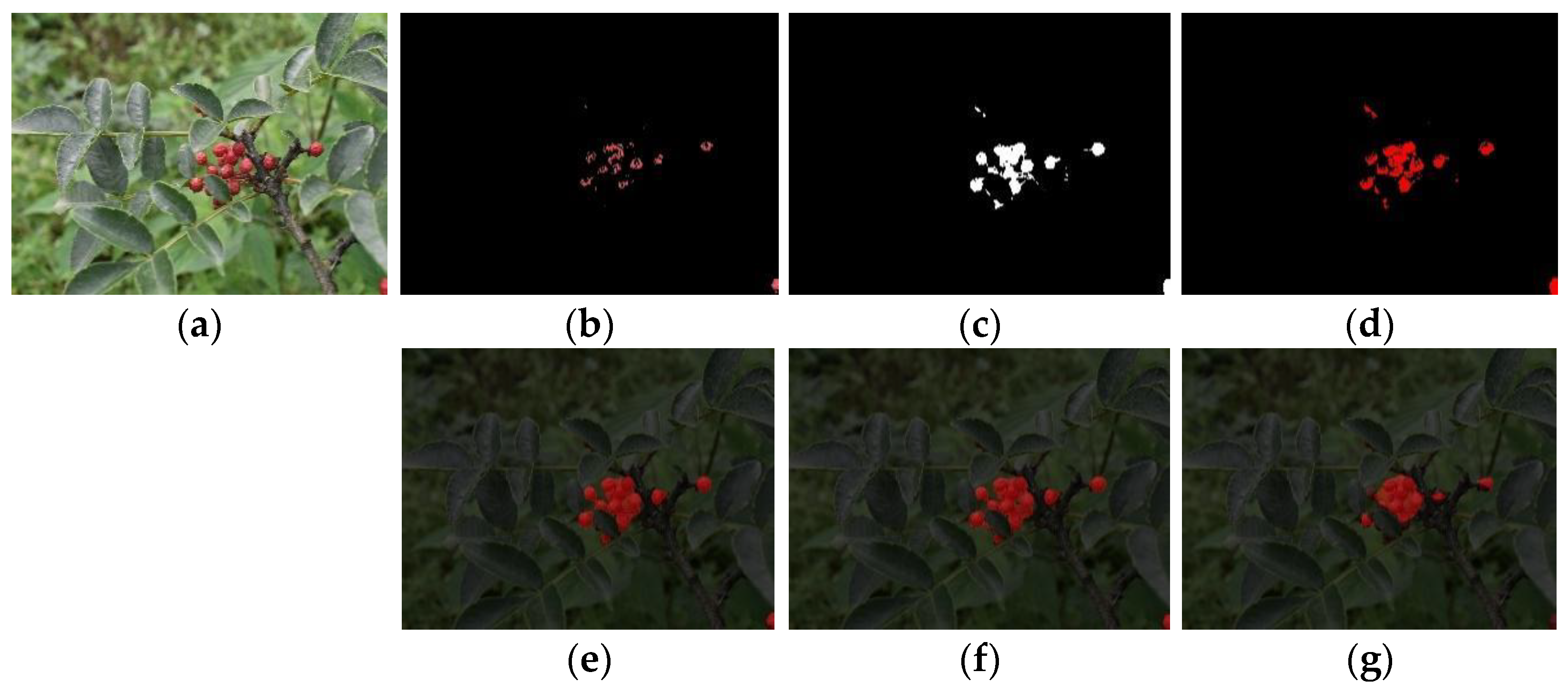

3.2. Sichuan Pepper Segmentation with Occlusion

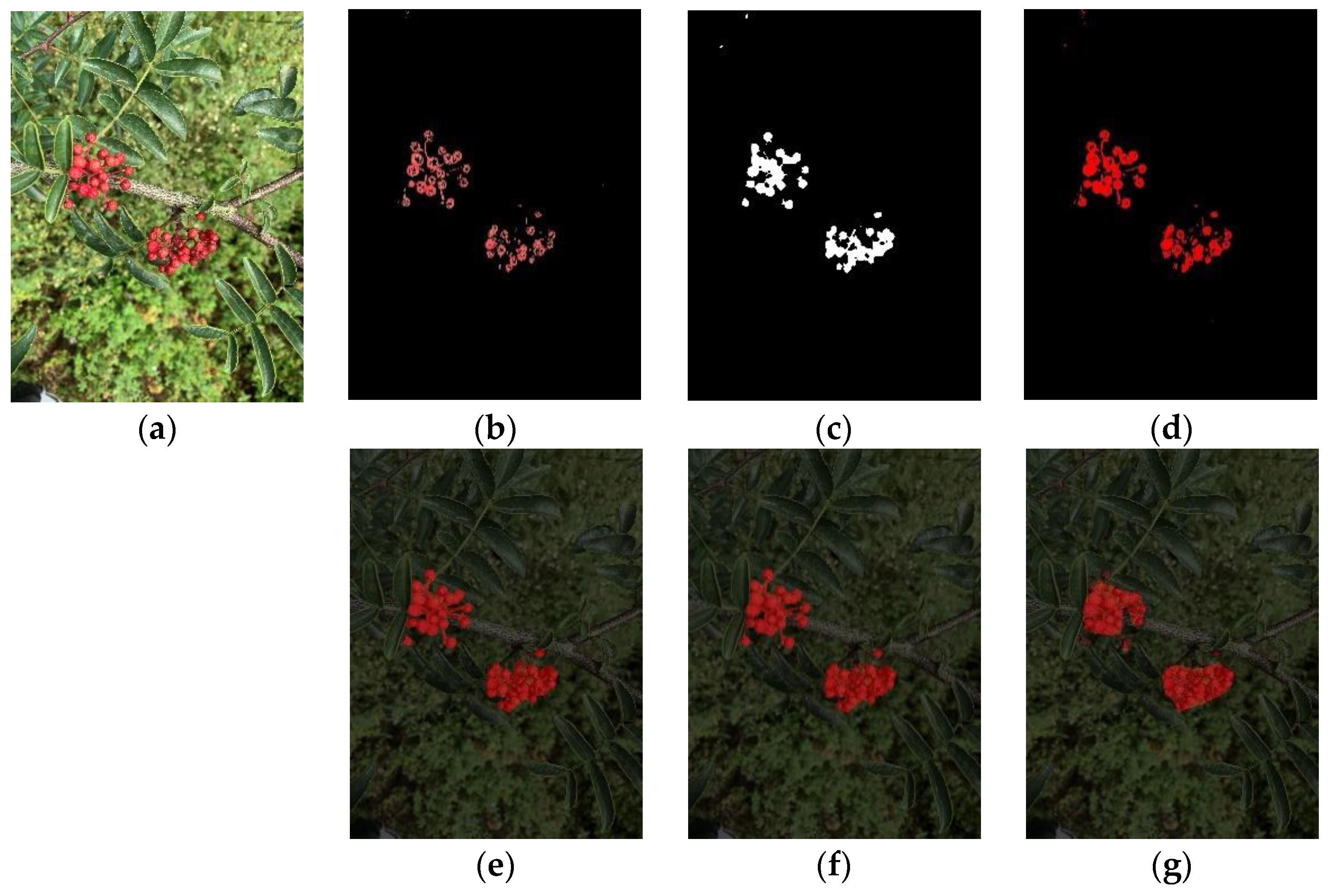

3.3. Segmentation with Different Numbers of Sichuan Pepper Clusters

3.4. Test Results of the Algorithm

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Gong, Y.; Sun, W.-H.; Xu, T.-T.; Zhang, L.; Huang, X.-Y.; Tan, Z.-H.; Di, D.-L. Chemical constituents from the pericarps of Zanthoxylum bungeanum and their chemotaxonomic significance. Biochem. Syst. Ecol. 2021, 95, 104213.

2. Zhang, D.; Sun, X.; Battino, M.; Wei, X.; Shi, J.; Zhao, L.; Liu, S.; Xiao, J.; Shi, B.; Zou, X. A comparative overview on chili pepper (capsicum genus) and sichuan pepper (zanthoxylum genus): From pungent spices to pharma-foods. Trends Food Sci. Technol. 2021, 117, 148–162.

3. Zheng, T.; Zhang, Q.; Su, K.X.; Liu, S.M. Transcriptome and metabolome analyses reveal the regulation of peel coloration in green, red Chinese prickly ash (Zanthoxylum L.). Food Chem. Mol. Sci. 2020, 1, 100004.

4. Lv, J.; Wang, F.; Xu, L.; Ma, Z.; Yang, B. A segmentation method of bagged green apple image. Sci. Hortic. 2019, 246, 411–417.

5. Yogesh; Dubey, A.K.; Agarwal, A.; Sarkar, A.; Arora, R. Adaptive thresholding based segmentation of infected portion of pome fruit. J. Stat. Manag. Syst. 2017, 20, 575–584.

6. Septiarini, A.; Hamdani, H.; Hatta, H.R.; Anwar, K. Automatic image segmentation of oil palm fruits by applying the contour-based approach. Sci. Hortic. 2020, 261, 108939.

7. Lv, J.; Xu, L. Method to acquire regions of fruit, branch and leaf from image of red apple in orchard. Mod. Phys. Lett. B 2017, 31, 19–21.

8. Tassis, L.M.; Tozzi de Souza, J.E.; Krohling, R.A. A deep learning approach combining instance and semantic segmentation to identify diseases and pests of coffee leaves from in-field images. Comput. Electron. Agric. 2021, 186, 106191.

9. Li, Q.; Jia, W.; Sun, M.; Hou, S.; Zheng, Y. A novel green apple segmentation algorithm based on ensemble U-Net under complex orchard environment. Comput. Electron. Agric. 2021, 180, 105900.

10. Jia, W.; Zhang, Z.; Shao, W.; Hou, S.; Ji, Z.; Liu, G.; Yin, X. FoveaMask: A fast and accurate deep learning model for green fruit instance segmentation. Comput. Electron. Agric. 2021, 191, 106488.

11. Hameed, K.; Chai, D.; Rassau, A. Score-based mask edge improvement of Mask-RCNN for segmentation of fruit and vegetables. Expert Syst. Appl. 2022, 190, 116205.

12. Danish, M.; Akhtar, M.N.; Hashim, R.; Saleh, J.M.; Bakar, E.A. Analysis using image segmentation for the elemental composition of activated carbon. MethodsX 2020, 7, 100983.

13. Li, Z.; Yu, Z.; Liu, W.; Xu, Y.; Zhang, D.; Cheng, Y. Tongue image segmentation via color decomposition and thresholding. Concurr. Comput. Pract. Exp. 2018, 31, 4662.

14. Steinley, D. K-means clustering: A half-century synthesis. Br. J. Math. Stat. Psychol. 2006, 59, 1–34.

15. Kumar, K.V.; Jayasankar, T. An identification of crop disease using image segmentation. Int. J. Pharm. Sci. Res. 2019, 10, 1054–1064.

16. Shaaban, A.M.; Salem, N.M.; Al-atabany, W.I. A Semantic-based Scene segmentation using convolutional neural networks. AEU—Int. J. Electron. Commun. 2020, 125, 153364.

17. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR) 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

18. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Volume 9351, pp. 234–241.

19. Yue, J.; Zhu, L.; Li, F.; Li, Z. Vegetable Recognition and Classification Based on Improved VGG Deep Learning Network Model. Int. J. Comput. Intell. Syst. 2020, 13, 559–564.

20. Zhang, D.; Lv, J.; Cheng, Z. An Approach Focusing on the Convolutional Layer Characteristics of the VGG Network for Vehicle Tracking. IEEE Access 2020, 8, 112827–112839.

21. Peng, H.; Xue, C.; Shao, Y.; Chen, K.; Xiong, J.; Xie, Z.; Zhang, L. Semantic Segmentation of Litchi Branches Using DeepLabV3+ Model. IEEE Access 2020, 8, 164546–164555.

22. Zhang, K.; Liu, X.; Chen, Y. Research on Semantic Segmentation of Portraits Based on Improved Deeplabv3+. IOP Conf. Ser. Mater. Sci. Eng. 2020, 806, 012057.

23. Font, D.; Tresanchez, M.; Martinez, D.; Moreno, J.; Clotet, E.; Palacin, J. Vineyard yield estimation based on the analysis of high resolution images obtained with artificial illumination at night. Sensors 2015, 15, 8284–8301.

24. Wang, C.; Tang, Y.; Zou, X.; SiTu, W.; Feng, W. A robust fruit image segmentation algorithm against varying illumination for vision system of fruit harvesting robot. Optik 2017, 131, 626–631.

25. Lv, J.; Wang, Y.; Xu, L.; Gu, Y.; Zou, L.; Yang, B.; Ma, Z. A method to obtain the near-large fruit from apple image in orchard for single-arm apple harvesting robot. Sci. Hortic. 2019, 257, 108758.

26. Zhang, C.; Zou, K.; Pan, Y. A Method of Apple Image Segmentation Based on Color-Texture Fusion Feature and Machine Learning. Agronomy 2020, 10, 972.

27. Peng, Y.; Wang, A.; Liu, J.; Faheem, M. A Comparative Study of Semantic Segmentation Models for Identification of Grape with Different Varieties. Agriculture 2021, 11, 997.

28. Qi, X.; Dong, J.; Lan, Y.; Zhu, H. Method for Identifying Litchi Picking Position Based on YOLOv5 and PSPNet. Remote Sens. 2022, 14, 2004.

29. Chen, S.; Song, Y.; Su, J.; Fang, Y.; Shen, L.; Mi, Z.; Su, B. Segmentation of field grape bunches via an improved pyramid scene parsing network. Int. J. Agric. Biol. Eng. 2021, 14, 185–194.

30. Roy, K.; Chaudhuri, S.S.; Pramanik, S. Deep learning based real-time Industrial framework for rotten and fresh fruit detection using semantic segmentation. Microsyst. Technol. 2020, 27, 3365–3375.

| Traditional Algorithm, Intersection over Union (IOU, %) | Single Front-Lit Clusters without Occlusion | Multiple Front-Lit Clusters without Occlusion | Single Back-Lit Clusters without Occlusion | Single Front-Lit Clusters with Occlusion |
|---|---|---|---|---|
| RGB color space | 71.89 | 68.01 | 10.7 | 47.68 |
| HSV color space | 84.99 | 80.92 | 70.57 | 82.84 |
| k-means | 75.30 | 78.29 | 70.58 | 73.32 |

| Deep Learning Algorithm, IOU (%) | Single Front-Lit Clusters without Occlusion | Multiple Front-Lit Clusters without Occlusion | Single Back-Lit Clusters without Occlusion | Single Front-Lit Clusters with Occlusion |
|---|---|---|---|---|
| PSPnet | 73.28 | 57.54 | 67.32 | 68.73 |
| U-Net | 87.23 | 76.52 | 83.47 | 84.71 |
| DeepLabV3+ | 86.01 | 75.39 | 82.13 | 81.25 |

| Deep Learning Algorithm, Mean Pixel Accuracy (MPA, %) | Single Front-Lit Clusters without Occlusion | Multiple Front-Lit Clusters without Occlusion | Single Back-Lit Clusters without Occlusion | Single Front-Lit Clusters with Occlusion |
|---|---|---|---|---|
| PSPnet | 89.63 | 83.57 | 86.78 | 87.48 |
| U-Net | 95.95 | 94.33 | 93.85 | 94.11 |
| DeepLabV3+ | 95.10 | 92.48 | 92.97 | 92.95 |
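
The IOU and MPA values in the tables above compare a predicted mask with a ground-truth mask. The sketch below shows one common way to compute both for a binary (pepper versus background) segmentation. It is an assumption that the study used these exact definitions; the function names `iou` and `mean_pixel_accuracy` are illustrative, and here IOU is taken for the foreground class only while MPA averages the per-class pixel accuracy over the two classes.

```python
import numpy as np

def iou(pred, truth):
    """Foreground intersection over union of two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    union = np.logical_or(pred, truth).sum()
    if union == 0:
        return 1.0  # both masks empty: treated as a perfect match (a convention)
    return float(np.logical_and(pred, truth).sum() / union)

def mean_pixel_accuracy(pred, truth):
    """Average, over the classes present in truth, of the fraction of
    that class's pixels that were predicted correctly."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    per_class = []
    for cls in (False, True):          # background, foreground
        members = truth == cls
        if members.any():
            per_class.append((pred[members] == cls).mean())
    return float(np.mean(per_class))
```

Multiplying either result by 100 gives a percentage on the same scale as the tables. Studies sometimes report the mean IOU over all classes instead of the foreground IOU, so the two conventions should not be mixed when comparing numbers.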

| No. | Reference | Task | Dataset | Methods | Pros and Cons |
|---|---|---|---|---|---|
| 1 | Font et al. (2015) [23] | Estimating vineyard yield at night | Images from a grape orchard | RGB and HSV color spaces | Manual control of light reduced the impact of illumination and improved the segmentation effect |
| 2 | Wang et al. (2017) [24] | Applying a robust fruit segmentation algorithm against varying illumination | 300 images under outdoor conditions captured in three orchards | k-means, Retinex-based image enhancement algorithm | The k-means segmentation effect was better when using an illumination normalization algorithm and image enhancement |
| 3 | Lv et al. (2019) [25] | Obtaining near-large fruit from apple image in orchard | Images from the apple planting demonstration area | RGB color space | Algorithm took less time |
| 4 | Zhang et al. (2020) [26] | Applying an apple segmentation algorithm in an orchard | 105 images from a Science and Technology Park | k-means, R–G color difference method | Reduced the computational resource burden to the greatest extent |
| 5 | Peng et al. (2021) [27] | Segmentation of grape clusters with different varieties | 300 images from a grape orchard | Fully convolutional networks (FCN), U-Net, DeepLabv3+ | The IOU of the three networks was no greater than 85%, but all of them were better than traditional networks |
| 6 | Qi et al. (2022) [28] | Detecting accurate picking locations on the main stems | Lychee images from the Internet | Pyramid Scene Parsing Network (PSPnet), DeepLabv3+, U-Net | When there were multiple clusters of lychees in the image, the IOU values of the three models were lower than 60% |
| 7 | Chen et al. (2021) [29] | Segmenting various kinds of grapes in a field environment | 1856 images from wine grape production demonstration | PSPnet, DeepLabv3+, U-Net | When the bunches in the grape images were relatively discrete, the model could not accurately and completely segment the berry regions |
| 8 | Roy et al. (2020) [30] | Detection of rotten or fresh apple | 4035 images from Kaggle | U-Net, Enhanced U-Net (EN-U-Net) | U-Net achieved training and validation accuracies of 93.19% and 95.36%, respectively |
| 9 | This study | Optimal segmentation algorithm for Sichuan pepper in complex environment | 953 images from the Hanyuan Sichuan pepper base in Ya'an | RGB, HSV color space, k-means, PSPnet, U-Net, DeepLabv3+ | The traditional segmentation algorithms were affected by illumination and segmented poorly; the U-Net segmentation algorithm performed best |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lu, J.; Xiang, J.; Liu, T.; Gao, Z.; Liao, M. Sichuan Pepper Recognition in Complex Environments: A Comparison Study of Traditional Segmentation versus Deep Learning Methods. Agriculture 2022, 12, 1631. https://doi.org/10.3390/agriculture12101631
