Multi-Feature Patch-Based Segmentation Technique in the Gray-Centered RGB Color Space for Improved Apple Target Recognition

Abstract

1. Introduction

2. Materials and Methods

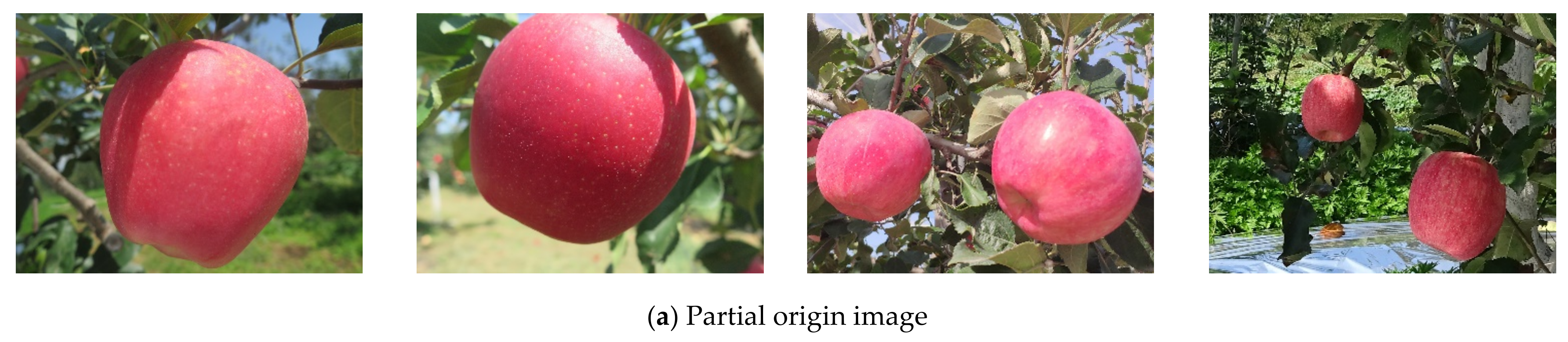

2.1. Apple Image Acquisition

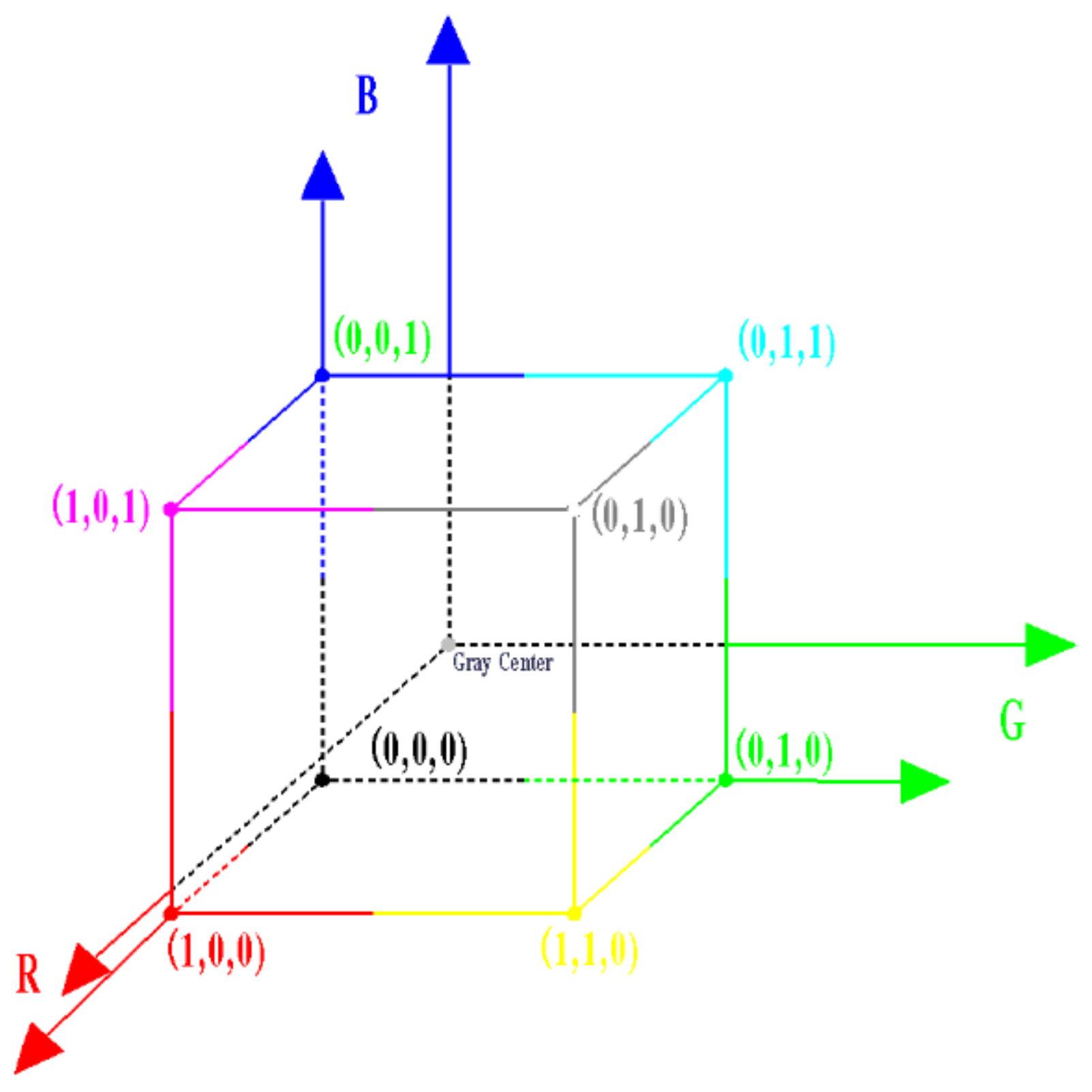

2.2. Vector Decomposition in Gray-Centered RGB Color Space

2.2.1. Gray-Centered RGB Color Space

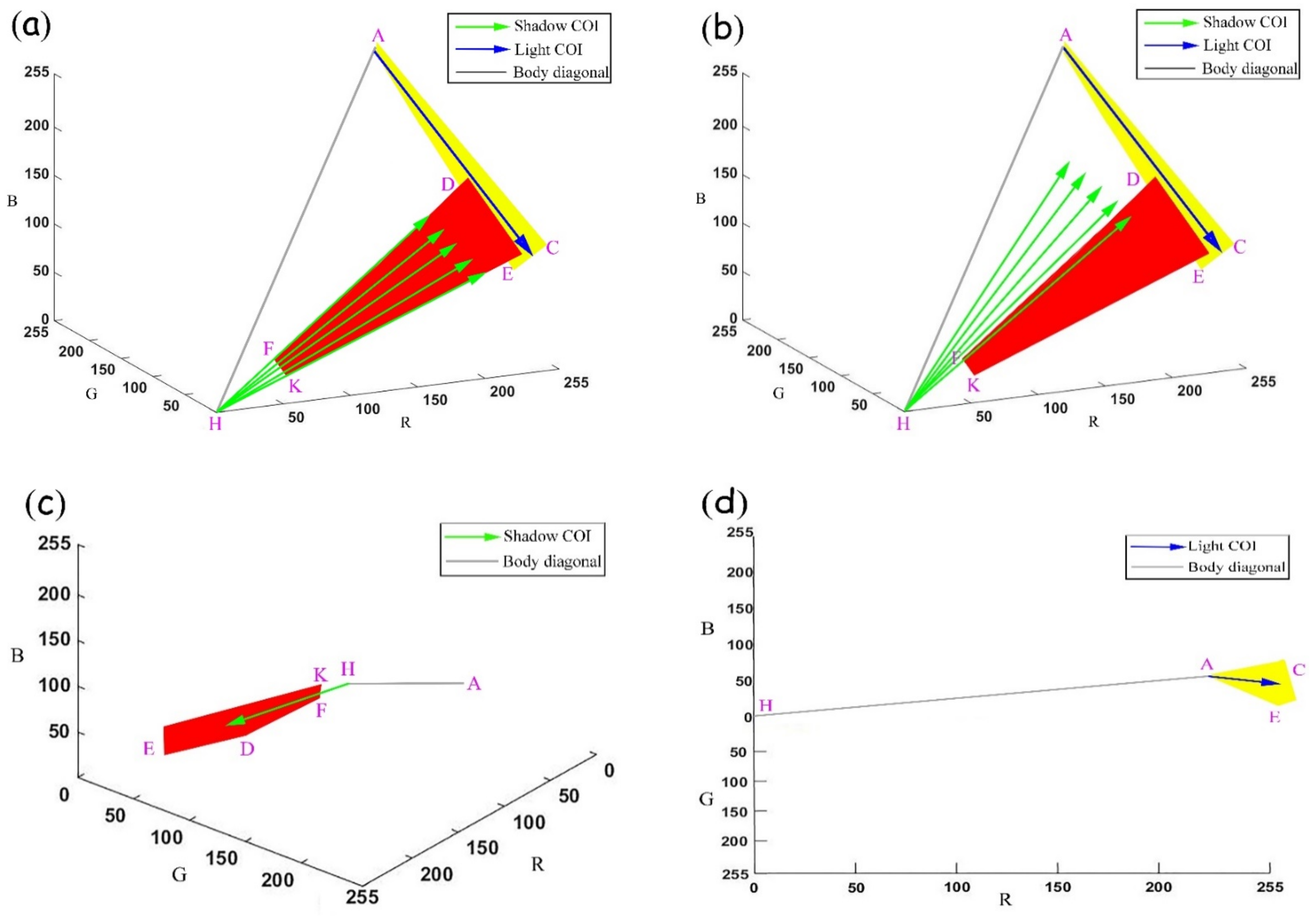

2.2.2. Vector Decomposition

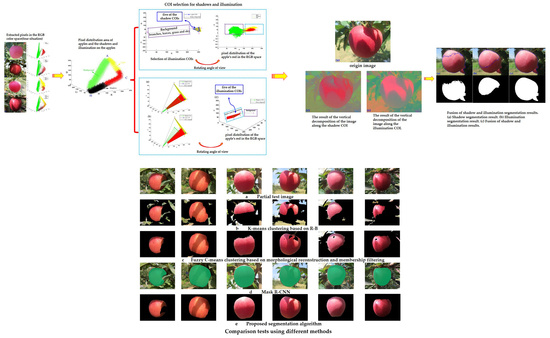
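The body text of Section 2.2 is not reproduced in this excerpt. The Python sketch below is only a rough illustration of the construction the section titles name, in its common generic form. The 8-bit RGB cube is translated so that mid-gray sits at the origin. Each color vector is then split into a component parallel to a reference direction and a component perpendicular to it. Three things here are assumptions and are not the authors' stated parameters: the centering value of 127.5, the choice of the gray axis (1, 1, 1) as the example reference, and the function names `to_gray_centered` and `decompose`.

```python
import numpy as np

# Assumption: mid-point of the 8-bit range; some authors centre on 128 instead.
GRAY_CENTER = 127.5


def to_gray_centered(rgb: np.ndarray) -> np.ndarray:
    """Shift 8-bit RGB values so that mid-gray maps to the origin."""
    return rgb.astype(np.float64) - GRAY_CENTER


def decompose(vectors: np.ndarray, reference: np.ndarray):
    """Split color vectors into parts parallel and perpendicular to `reference`.

    vectors:   array of shape (..., 3) holding gray-centered colors.
    reference: array of shape (3,) giving a direction (need not be unit length).
    Returns (parallel, perpendicular), each with the same shape as `vectors`.
    """
    u = reference / np.linalg.norm(reference)   # unit reference direction
    along = np.asarray(vectors @ u)             # signed projection length per pixel
    parallel = along[..., None] * u             # component along the reference
    perpendicular = vectors - parallel          # remainder, orthogonal to the reference
    return parallel, perpendicular


if __name__ == "__main__":
    pixel = to_gray_centered(np.array([200, 100, 50]))
    par, perp = decompose(pixel, np.array([1.0, 1.0, 1.0]))
    print("parallel:", par)
    print("perpendicular:", perp)
```

With the gray axis as reference, the parallel part measures how light or dark a pixel is, and the perpendicular part measures its chroma. For example, a pure white pixel lies entirely on the gray axis, so its perpendicular component is zero.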

2.3. Multiple Shadow and Halation Feature Extraction and Fusion

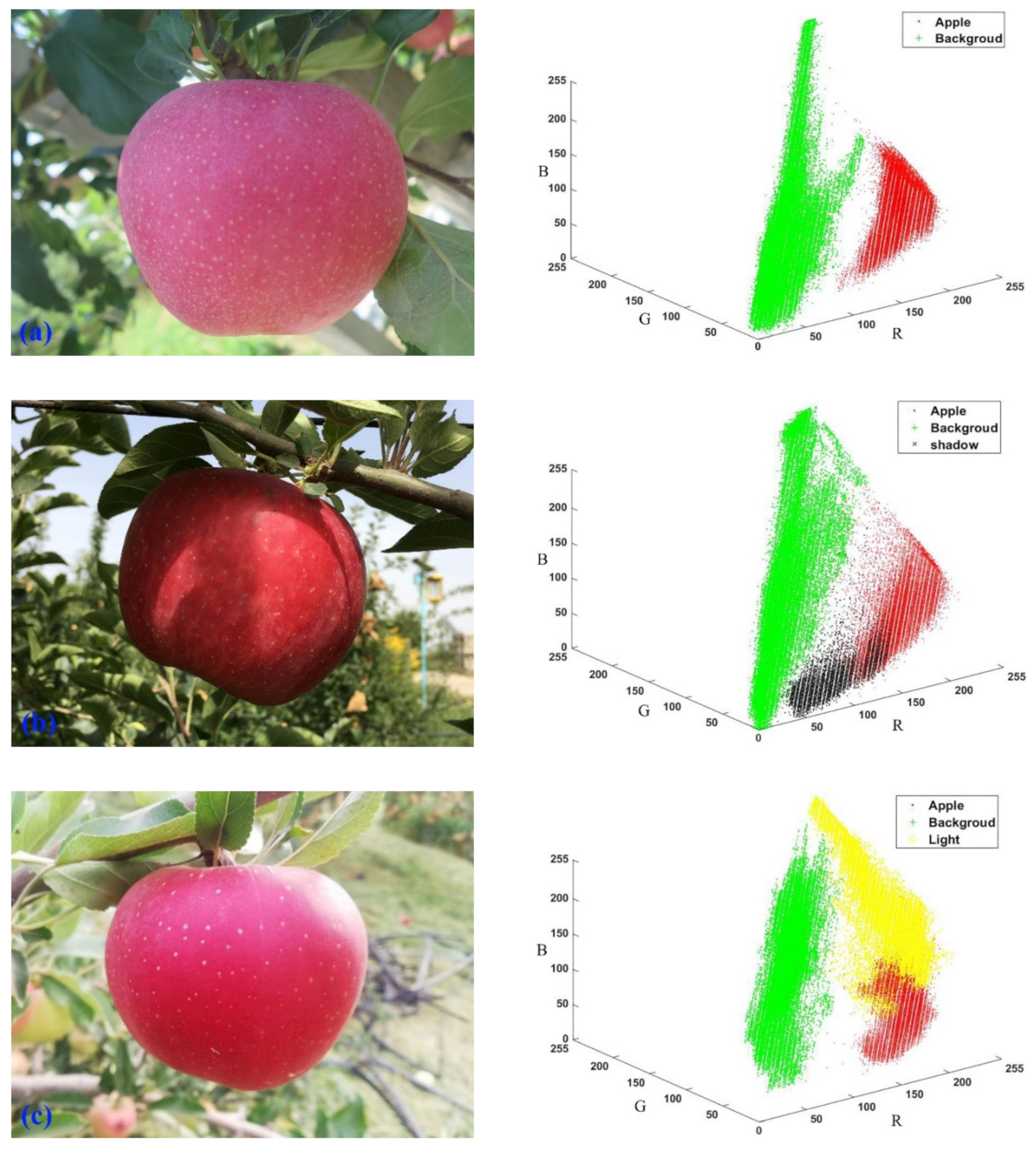

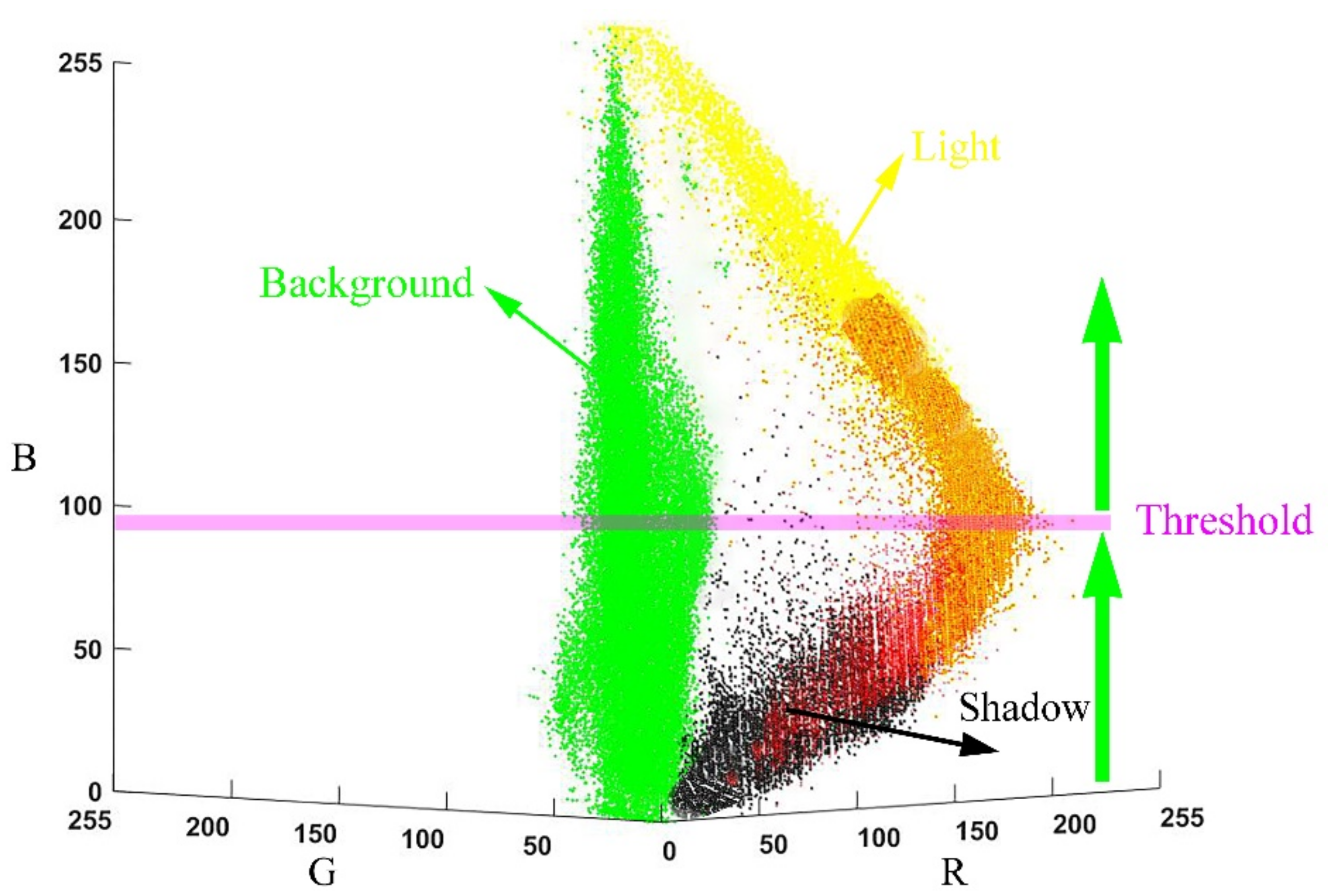

2.3.1. Pixel Distribution of Apple Image in the RGB Color Space

2.3.2. COI Selection for Shadows and Halation

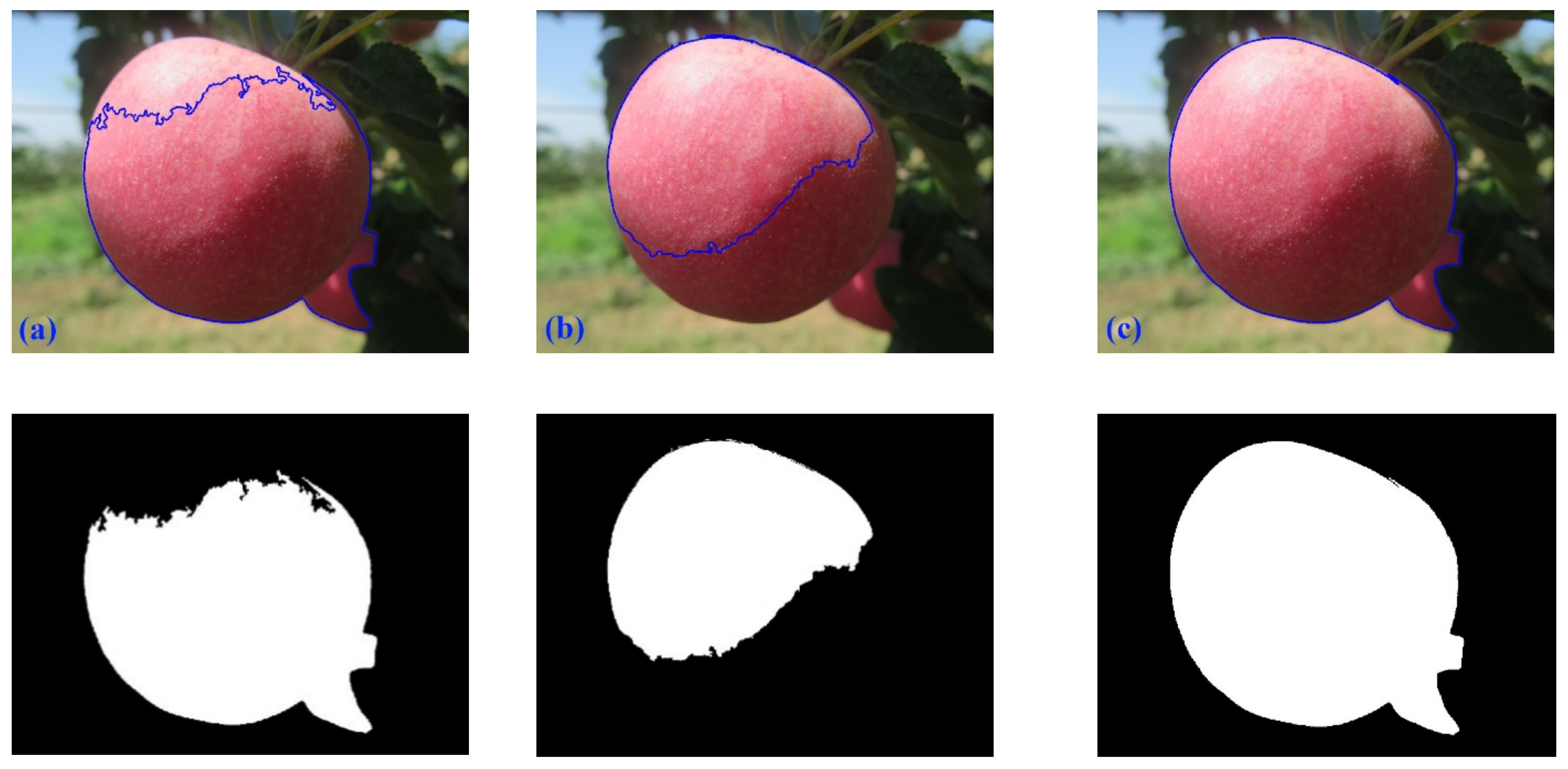

2.4. Apple Image Segmentation

2.4.1. Patch-Based Multi-Feature Segmentation Algorithm

2.4.2. Principal Component Analysis (PCA) Dimensionality Reduction
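Section 2.4.2 has no body text in this excerpt. Below is a minimal PCA sketch based on the singular value decomposition. It assumes that each row of the input matrix is a feature vector describing one image patch. This excerpt does not say which features are stacked or how many components are kept, so the input matrix and `n_components` are placeholders. `pca_reduce` is a hypothetical name.

```python
import numpy as np


def pca_reduce(features: np.ndarray, n_components: int):
    """Project row-wise feature vectors onto their leading principal components.

    features: array of shape (n_samples, n_features), e.g. one row per patch.
    Returns (scores, components, explained_ratio):
      scores          (n_samples, n_components) coordinates in the reduced space
      components      (n_components, n_features) principal axes (unit vectors)
      explained_ratio (n_components,) share of total variance per kept axis
    """
    centered = features - features.mean(axis=0)   # PCA needs zero-mean columns
    # Economy SVD: rows of vt are the principal axes, ordered by decreasing singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    scores = centered @ components.T               # project onto the kept axes
    explained = (s ** 2) / np.sum(s ** 2)          # variance fraction of every axis
    return scores, components, explained[:n_components]


if __name__ == "__main__":
    X = np.outer(np.arange(5.0), [1.0, 2.0, 3.0])  # toy rank-1 feature matrix
    scores, comps, ratio = pca_reduce(X, 1)
    print(scores.ravel(), comps, ratio)
```

Taking the SVD of the centered data avoids forming the covariance matrix explicitly. It also returns the axes already sorted by explained variance.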

2.4.3. Halation and Shadow Image Fusion

3. Experiments and Analysis

4. Discussion

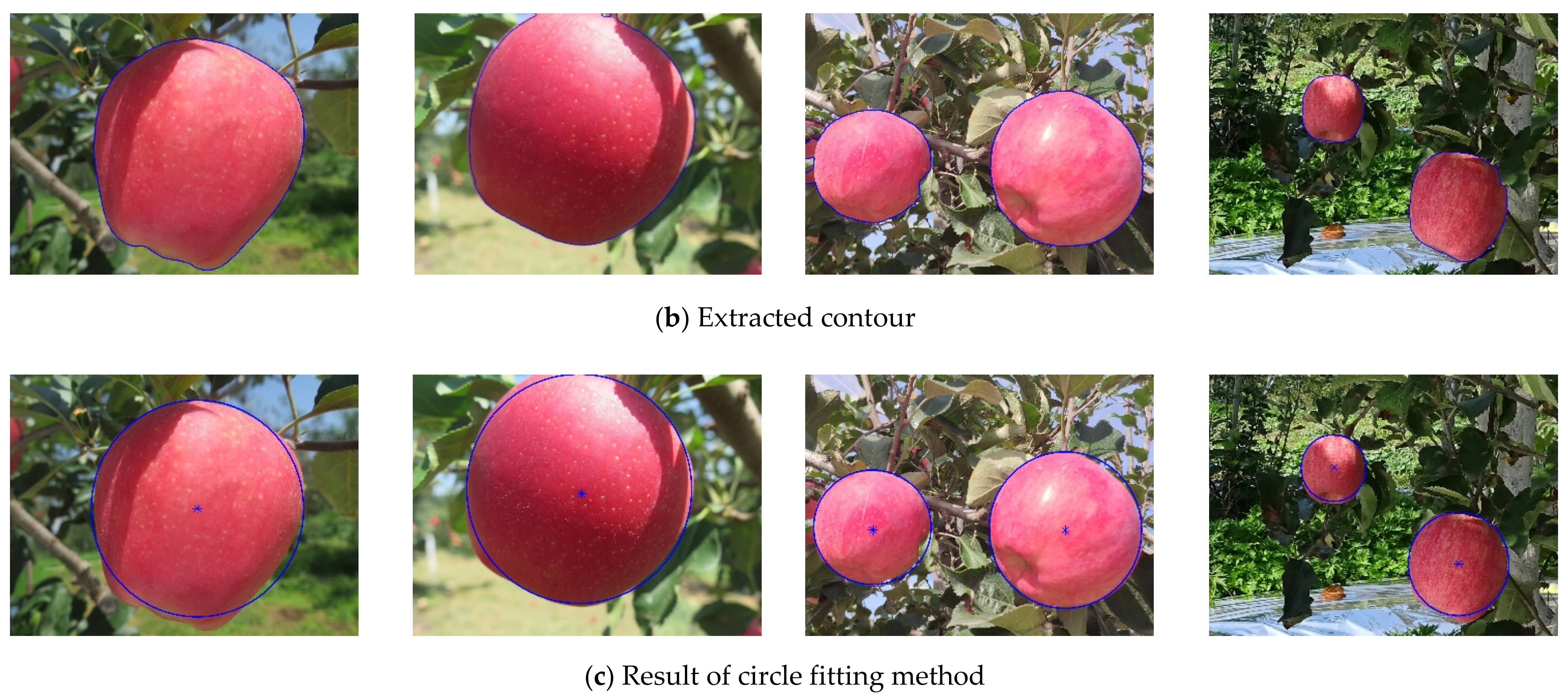

4.1. Location of Apple Targets

4.2. Further Research Perspectives

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- He, B. Intelligent Robotics for Smart Agriculture. Adv. Robot. Mech. Eng. 2018, 1, 1–3.

- Vasconez, J.P.; Kantor, G.A.; Cheein, F.A.A. Human-robot interaction in agriculture: A survey and current challenges. Biosyst. Eng. 2019, 179, 35–48.

- Ropelewska, E. The Application of Machine Learning for Cultivar Discrimination of Sweet Cherry Endocarp. Agriculture 2020, 11, 6.

- Zhuang, J.; Luo, S.; Hou, C.; Tang, Y.; He, Y.; Xue, X. Detection of orchard citrus fruits using a monocular machine vision-based method for automatic fruit picking applications. Comput. Electron. Agric. 2018, 152, 64–73.

- Gu, Y.; Shi, G.; Liu, X.; Zhao, D. Optimization spectral clustering algorithm of apple image segmentation with noise based on space feature. Trans. Chin. Soc. Agric. Eng. 2016, 32, 159–167.

- Silwal, A.; Davidson, J.R.; Karkee, M.; Mo, C.; Zhang, Q.; Lewis, K. Design, integration, and field evaluation of a robotic apple harvester. J. Field Robot. 2017, 34, 1140–1159.

- Zhao, Y.; Gong, L.; Huang, Y.; Liu, C. A review of key techniques of vision-based control for harvesting robot. Comput. Electron. Agric. 2016, 127, 311–323.

- Ostovar, A.; Ringdahl, O.; Hellström, T. Adaptive Image Thresholding of Yellow Peppers for a Harvesting Robot. Robotics 2018, 7, 11.

- Sabzi, S.; Abbaspour-Gilandeh, Y.; Hernandez-Hernandez, J.L.; Azadshahraki, F.; Karimzadeh, R. The Use of the Combination of Texture, Color and Intensity Transformation Features for Segmentation in the Outdoors with Emphasis on Video Processing. Agriculture 2019, 9, 104.

- Yuan, T.; Lv, L.; Zhang, F.; Fu, J.; Gao, J.; Zhang, J.; Li, W.; Zhang, C.; Zhang, W. Robust Cherry Tomatoes Detection Algorithm in Greenhouse Scene Based on SSD. Agriculture 2020, 10, 160.

- Kang, H.; Zhou, H.; Wang, X.; Chen, C. Real-Time Fruit Recognition and Grasping Estimation for Robotic Apple Harvesting. Sensors 2020, 20, 5670.

- Arad, B.; Kurtser, P.; Barnea, E.; Harel, B.; Edan, Y.; Ben-Shahar, O. Controlled Lighting and Illumination-Independent Target Detection for Real-Time Cost-Efficient Applications. The Case Study of Sweet Pepper Robotic Harvesting. Sensors 2019, 19, 1390.

- Song, H.; Qu, W.; Wang, D.; Yu, X.; He, D. Shadow removal method of apples based on illumination invariant image. Trans. Chin. Soc. Agric. Eng. 2014, 30, 168–176.

- Huang, L.; He, D. Apple Recognition in Natural Tree Canopy based on Fuzzy 2-partition Entropy. Int. J. Digit. Content Technol. Appl. 2013, 7, 107–115.

- Song, H.; Zhang, W.; Zhang, X.; Zou, R. Shadow removal method of apples based on fuzzy set theory. Trans. Chin. Soc. Agric. Eng. 2014, 30, 135–141.

- Lü, J.; Zhao, D.; Ji, W. Fast tracing recognition method of target fruit for apple harvesting robot. Nongye Jixie Xuebao/Trans. Chin. Soc. Agric. Mach. 2014, 45, 65–72.

- Lv, J.; Wang, F.; Xu, L.; Ma, Z.; Yang, B. A segmentation method of bagged green apple image. Sci. Hortic. 2019, 246, 411–417.

- Wu, G.; Li, B.; Zhu, Q.; Huang, M.; Guo, Y. Using color and 3D geometry features to segment fruit point cloud and improve fruit recognition accuracy. Comput. Electron. Agric. 2020, 174, 105475.

- Sun, S.; Wu, Q.; Jiao, L.; Long, Y.; He, D.; Song, H. Recognition of green apples based on fuzzy set theory and manifold ranking algorithm. Optik 2018, 165, 395–407.

- Suh, H.K.; Hofstee, J.W.; Van Henten, E.J. Improved vegetation segmentation with ground shadow removal using an HDR camera. Precis. Agric. 2018, 19, 218–237.

- Liu, X.; Zhao, D.; Jia, W.; Ji, W.; Sun, Y. A Detection Method for Apple Fruits Based on Color and Shape Features. IEEE Access 2019, 7, 67923–67933.

- Xu, W.; Chen, H.; Su, Q.; Ji, C.; Xu, W.; Memon, M.S.; Zhou, J. Shadow detection and removal in apple image segmentation under natural light conditions using an ultrametric contour map. Biosyst. Eng. 2019, 184, 142–154.

- Xie, M.; Ji, Z.; Zhang, G.; Wang, T.; Sun, Q. Mutually exclusive-KSVD: Learning a discriminative dictionary for hyperspectral image classification. Neurocomputing 2018, 315, 177–189.

- Wang, P.; Zhang, Y.; Jiang, B.; Hou, J. An maize leaf segmentation algorithm based on image repairing technology. Comput. Electron. Agric. 2020, 172, 105349.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Kang, H.; Chen, C. Fruit detection, segmentation and 3D visualisation of environments in apple orchards. Comput. Electron. Agric. 2020, 171, 105302.

- Weiss, M.; Baret, F. Using 3D Point Clouds Derived from UAV RGB Imagery to Describe Vineyard 3D Macro-Structure. Remote Sens. 2017, 9, 111.

- Lai, C.-W.; Lo, Y.-L.; Yur, J.-P.; Chuang, C.-H. Application of Fiber Bragg Grating Level Sensor and Fabry-Pérot Pressure Sensor to Simultaneous Measurement of Liquid Level and Specific Gravity. IEEE Sens. J. 2012, 12, 827–831.

- Liu, X.; Chen, Y.; Peng, Z.; Wu, J. Infrared Image Super-Resolution Reconstruction Based on Quaternion and High-Order Overlapping Group Sparse Total Variation. Sensors 2019, 19, 5139.

- Jia, Z.; Ng, M.K.; Song, G. Robust quaternion matrix completion with applications to image inpainting. Numer. Linear Algebra Appl. 2019, 26, 2245.

- Evans, C.J.; Sangwine, S.J.; Ell, T.A. Hypercomplex color-sensitive smoothing filters. In Proceedings of the 2000 International Conference on Image Processing (Cat. No.00CH37101), Vancouver, BC, Canada, 10–13 September 2000; pp. 541–544.

- Ell, T.A.; Sangwine, S.J. Hypercomplex Fourier Transforms of Color Images. IEEE Trans. Image Process. 2007, 16, 22–35.

- Shi, L.; Funt, B. Quaternion color texture segmentation. Comput. Vis. Image Underst. 2007, 107, 88–96.

- Zhang, X.; Yang, M. Color image knowledge model construction based on ontology. Color Res. Appl. 2019, 44, 651–662.

- Kazakeviciute-Januskeviciene, G.; Janusonis, E.; Bausys, R.; Limba, T.; Kiskis, M. Assessment of the Segmentation of RGB Remote Sensing Images: A Subjective Approach. Remote Sens. 2020, 12, 4152.

- Sural, S.; Qian, G.; Pramanik, S. Segmentation and histogram generation using the HSV color space for image retrieval. In Proceedings of the International Conference on Image Processing, Rochester, NY, USA, 22–25 September 2002; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2003.

- Wang, W.; Chen, Z.; Yuan, X.; Wu, X. Adaptive image enhancement method for correcting low-illumination images. Inf. Sci. 2019, 496, 25–41.

- Abdalla, A.; Cen, H.; Abdel-Rahman, E.; Wan, L.; He, Y. Color Calibration of Proximal Sensing RGB Images of Oilseed Rape Canopy via Deep Learning Combined with K-Means Algorithm. Remote Sens. 2019, 11, 3001.

- Wang, J.; Zhi, X.; Huang, J.; Meng, C.; Hu, Y.; Zhang, D. Hierarchical Characteristics Analysis of Forest Landscape Pattern Based on GIS and PCA Dimension Reduction Method. Nongye Jixie Xuebao/Trans. Chin. Soc. Agric. Mach. 2019, 50, 195–201.

- Gao, L.; Lin, X. A method for accurately segmenting images of medicinal plant leaves with complex backgrounds. Comput. Electron. Agric. 2018, 155, 426–445.

- Piramanayagam, S.; Saber, E.; Schwartzkopf, W.; Koehler, F.W. Supervised Classification of Multisensor Remotely Sensed Images Using a Deep Learning Framework. Remote Sens. 2018, 10, 1429.

- Lei, T.; Jia, X.; Zhang, Y.; Liu, S.; Meng, H.; Nandi, A.K. Superpixel-Based Fast Fuzzy C-Means Clustering for Color Image Segmentation. IEEE Trans. Fuzzy Syst. 2018, 27, 1753–1766.

- Feng, J.; Wang, S.; Liu, G.; Zeng, L. A Separating Method of Adjacent Apples Based on Machine Vision and Chain Code Information. In Proceedings of the International Conference on Computer and Computing Technologies, Beijing, China, 29–31 October 2011; Volume 368, pp. 258–267.

- Changyi, X.; Lihua, Z.; Minzan, L.; Yuan, C.; Chunyan, M. Apple detection from apple tree image based on BP neural network and Hough transform. Int. J. Agric. Biol. Eng. 2015, 8, 46–53.

- Niu, L.; Zhou, W.; Wang, D.; He, D.; Zhang, H.; Song, H. Extracting the symmetry axes of partially occluded single apples in natural scene using convex hull theory and shape context algorithm. Multimed. Tools Appl. 2017, 76, 14075–14089.

- Hammam, A.A.; Soliman, M.M.; Hassanien, A.E. Real-time multiple spatiotemporal action localization and prediction approach using deep learning. Neural Netw. 2020, 128, 331–344.

- Jia, W.; Tian, Y.; Luo, R.; Zhang, Z.; Lian, J.; Zheng, Y. Detection and segmentation of overlapped fruits based on optimized mask R-CNN application in apple harvesting robot. Comput. Electron. Agric. 2020, 172, 105380.

- Wu, M.; Yin, X.; Li, Q.; Zhang, J.; Feng, X.; Cao, Q.; Shen, H. Learning deep networks with crowdsourcing for relevance evaluation. EURASIP J. Wirel. Commun. Netw. 2020, 2020, 1–11.

- Haque, I.R.; Neubert, J. Deep learning approaches to biomedical image segmentation. Inform. Med. Unlocked 2020, 18, 100297.

- Amanullah, M.A.; Habeeb, R.A.A.; Nasaruddin, F.H.; Gani, A.; Ahmed, E.; Nainar, A.S.M.; Akim, N.M.; Imran, M. Deep learning and big data technologies for IoT security. Comput. Commun. 2020, 151, 495–517.

- Lin, Z.; Zhang, Z.; Chen, L.-Z.; Cheng, M.-M.; Lu, S.-P. Interactive Image Segmentation with First Click Attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 13336–13345.

- Karabağ, C.; Verhoeven, J.; Miller, N.; Reyes-Aldasoro, C. Texture Segmentation: An Objective Comparison between Traditional and Deep-Learning Methodologies. Preprints 2019.

- Huang, J.; Li, J.; Liu, L.; Luo, K.; Chen, X.; Liang, F. PCB Image Registration Based on a Priori Threshold SURF Algorithm. In Proceedings of the International Conference on Smart Vehicular Technology, Transportation, Communication and Applications, Mount Emei, China, 25–28 October 2018; pp. 440–447.

- Li, W.; Li, F.; Du, J. A level set image segmentation method based on a cloud model as the priori contour. Signal Image Video Process. 2018, 13, 103–110.

| Method | Method Source | Recall | Precision | F-Measure | FPR | FNR |
|---|---|---|---|---|---|---|
| K-means | K-means based on R-B (Jidong Lv et al., 2019) | 74.15% | 65.31% | 69.45% | 21.07% | 24.93% |
| Fuzzy C-means | Fast and robust fuzzy C-means (Tao Lei et al., 2017) | 93.25% | 96.82% | 95.00% | 1.51% | 6.68% |
| Deep learning | Mask R-CNN (Kaiming He et al., 2018) | 97.69% | 97.92% | 97.80% | 0.33% | 2.25% |
| Proposed algorithm | This study | 98.79% | 99.91% | 99.35% | 0.04% | 1.18% |
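The table reports pixel-level Recall, Precision, F-Measure, FPR and FNR, but the excerpt does not include the authors' formulas. The sketch below assumes the standard confusion-matrix definitions. The helper names `segmentation_metrics` and `f_measure` are hypothetical. The F-Measure column is consistent with the harmonic mean of Recall and Precision: for the proposed algorithm, 2 × 98.79 × 99.91 / (98.79 + 99.91) ≈ 99.35. Recall and FNR, however, do not sum to exactly 100% (98.79% + 1.18% = 99.97%). This suggests the paper computes or averages FNR differently from the textbook FN / (FN + TP) used here.

```python
import numpy as np


def segmentation_metrics(pred: np.ndarray, truth: np.ndarray) -> dict:
    """Pixel-level metrics for a binary mask (True = apple, False = background).

    Uses the common confusion-matrix definitions; whether the paper pools
    pixels over all images or averages per image is an assumption left open.
    """
    pred = pred.astype(bool)
    truth = truth.astype(bool)
    tp = np.count_nonzero(pred & truth)     # apple pixels labelled apple
    fp = np.count_nonzero(pred & ~truth)    # background labelled apple
    fn = np.count_nonzero(~pred & truth)    # apple labelled background
    tn = np.count_nonzero(~pred & ~truth)   # background labelled background
    recall = tp / (tp + fn)
    precision = tp / (tp + fp)
    return {
        "recall": recall,
        "precision": precision,
        "f_measure": f_measure(recall, precision),
        "fpr": fp / (fp + tn),   # false positive rate
        "fnr": fn / (fn + tp),   # false negative rate
    }


def f_measure(recall: float, precision: float) -> float:
    """Harmonic mean of recall and precision (works on fractions or percentages)."""
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    pred = np.array([[1, 1, 0], [0, 1, 0]])
    truth = np.array([[1, 0, 0], [0, 1, 1]])
    print(segmentation_metrics(pred, truth))
    print(round(f_measure(98.79, 99.91), 2))
```

For example, `f_measure(74.15, 65.31)` rounds to 69.45, which matches the K-means row.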

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Fan, P.; Lang, G.; Guo, P.; Liu, Z.; Yang, F.; Yan, B.; Lei, X. Multi-Feature Patch-Based Segmentation Technique in the Gray-Centered RGB Color Space for Improved Apple Target Recognition. Agriculture 2021, 11, 273. https://doi.org/10.3390/agriculture11030273
