Measuring Canopy Geometric Structure Using Optical Sensors Mounted on Terrestrial Vehicles: A Case Study in Vineyards

Abstract

1. Introduction

- public dataset of sensor data;

- portable and standalone data acquisition hardware module;

- benchmark and assessment of open-source and commercial software tools for performing Structure from Motion and Multi-View Stereo tasks;

- data processing pipeline capable of transforming raw data (monocular images and laser scans) into detailed 3D models of the crop, and interpretation of their geometric properties.

2. Materials and Methods

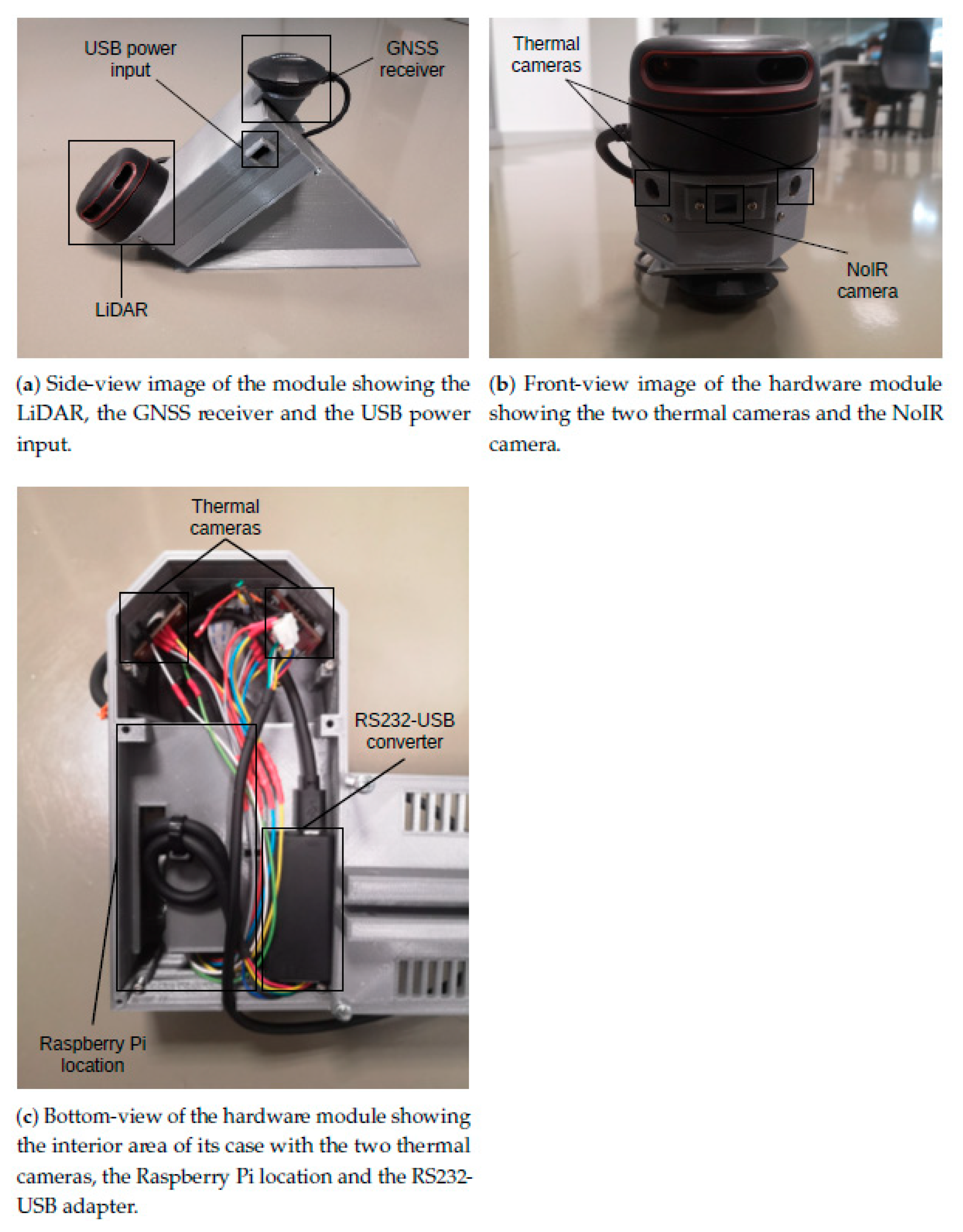

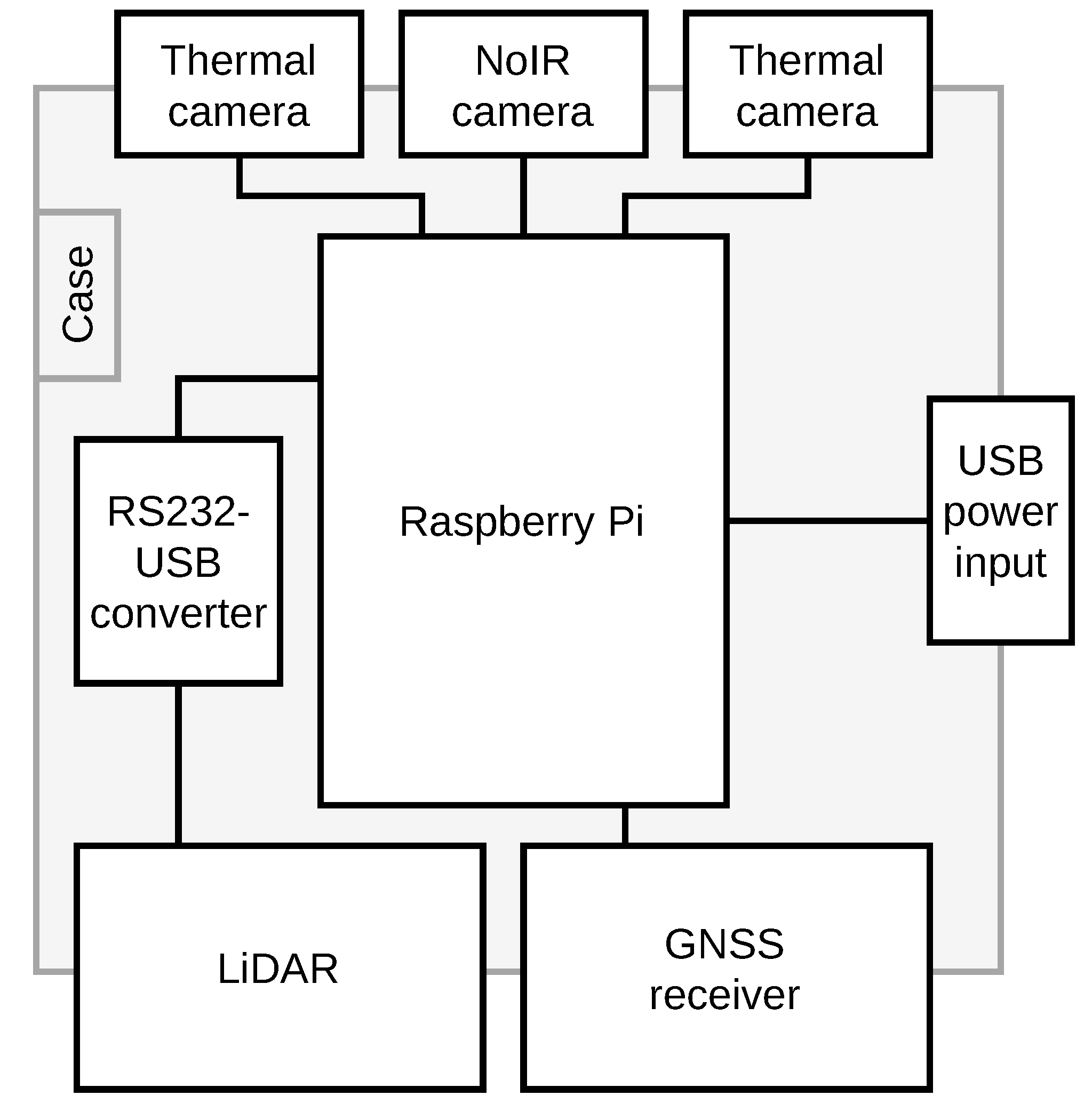

2.1. Data Acquisition Hardware Module

- 1× No Infrared filter (NoIR) camera (Pi NoIR Camera (https://www.raspberrypi.org/products/pi-noir-camera-v2/ accessed on 3 March 2021));

- 2× thermal cameras (MLX90640 (https://bit.ly/3p63a5y accessed on 3 March 2021));

- 1× planar LiDAR with a mechanically rotating scanning head (Slamtec’s RPLIDAR A2 (https://www.slamtec.com/en/Lidar/A2 accessed on 3 March 2021));

- 1× Recommended Standard 232 (RS232) to Universal Serial Bus (USB) adapter (https://bit.ly/3ixhrWM accessed on 3 March 2021);

- 1× Global Navigation Satellite System (GNSS) receiver (GP-808G (https://www.sparkfun.com/products/14198 accessed on 3 March 2021));

- 1× Raspberry Pi 3B+ (https://www.raspberrypi.org/products/raspberry-pi-3-model-b-plus/ accessed on 3 March 2021);

- 1× case, 3D-printed with Polylactic Acid filament, that houses all of the above components.
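The module pairs a GNSS receiver with an RS232-to-USB adapter. Assuming the receiver streams standard NMEA 0183 GGA sentences over a serial link (an assumption; the output configuration is not described here), the sketch below shows how one such sentence could be validated and converted to decimal degrees. `GgaFix` and `parse_gga` are hypothetical names; in a ROS setup a driver node typically performs this step before publishing `sensor_msgs/NavSatFix` messages.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Optional


@dataclass
class GgaFix:
    latitude: float       # decimal degrees, negative = south
    longitude: float      # decimal degrees, negative = west
    fix_quality: int      # 0 = invalid, 1 = standard fix, 2 = differential (e.g. DGPS or SBAS)
    num_satellites: int
    altitude_m: float     # antenna altitude above mean sea level


def nmea_checksum_ok(sentence: str) -> bool:
    """XOR every character between '$' and '*' and compare with the hex checksum."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        return False
    body, _, given = sentence[1:].partition("*")
    computed = reduce(lambda acc, ch: acc ^ ord(ch), body, 0)
    return given.upper() == f"{computed:02X}"


def _to_degrees(value: str, hemisphere: str, deg_digits: int) -> float:
    """Convert NMEA (d)ddmm.mmmm plus a hemisphere letter into signed decimal degrees."""
    result = int(value[:deg_digits]) + float(value[deg_digits:]) / 60.0
    return -result if hemisphere in ("S", "W") else result


def parse_gga(sentence: str) -> Optional[GgaFix]:
    """Return a GgaFix for a valid GGA sentence carrying a position, otherwise None."""
    if not nmea_checksum_ok(sentence):
        return None
    fields = sentence.strip()[1:].split("*")[0].split(",")
    # The talker ID varies (GP, GN, ...); an empty latitude field means no fix yet.
    if len(fields) < 10 or not fields[0].endswith("GGA") or not fields[2]:
        return None
    return GgaFix(
        latitude=_to_degrees(fields[2], fields[3], 2),
        longitude=_to_degrees(fields[4], fields[5], 3),
        fix_quality=int(fields[6]),
        num_satellites=int(fields[7]),
        altitude_m=float(fields[9]),
    )
```

For example, the sentence `$GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,*47` parses to a latitude of about 48.1173° N and a longitude of about 11.5167° E, while the same sentence with a corrupted checksum is rejected.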

2.2. Study Area and Path

2.3. Dataset Description

2.4. On-Site Manual Measurements

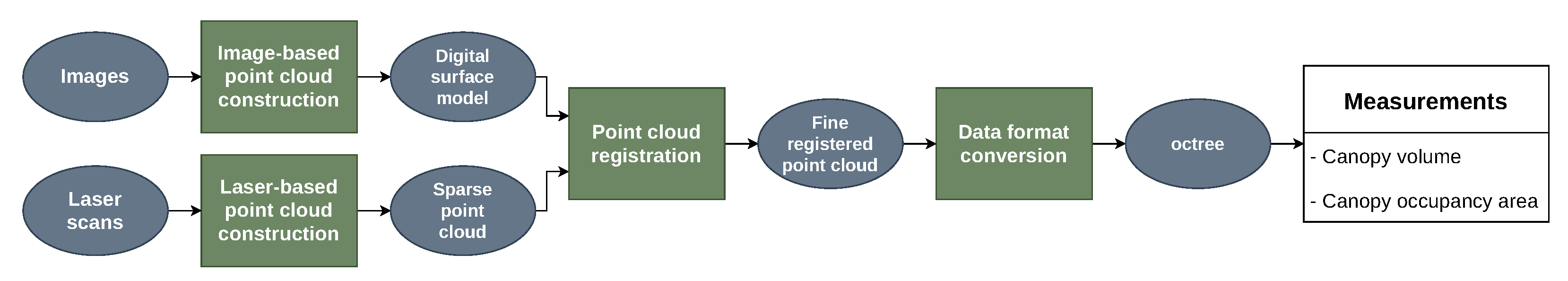

2.5. Data Processing Pipeline

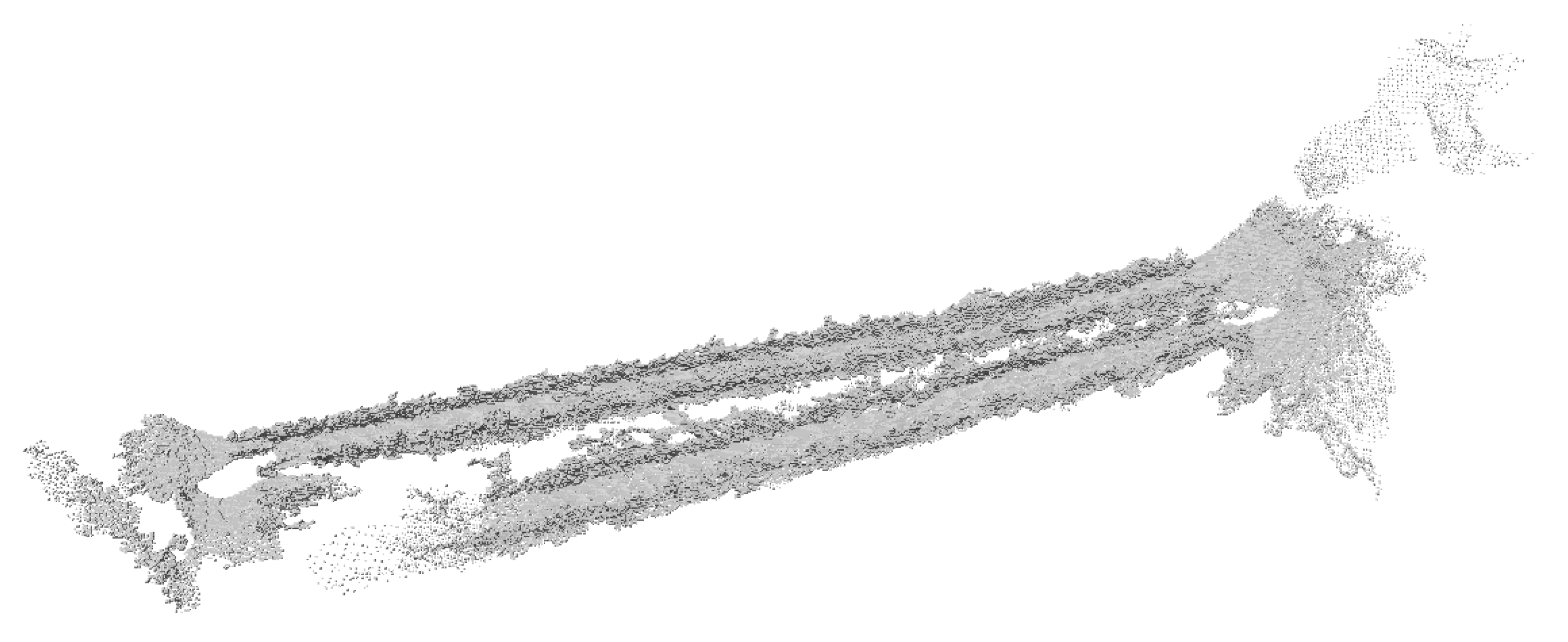

2.5.1. Point Cloud Construction

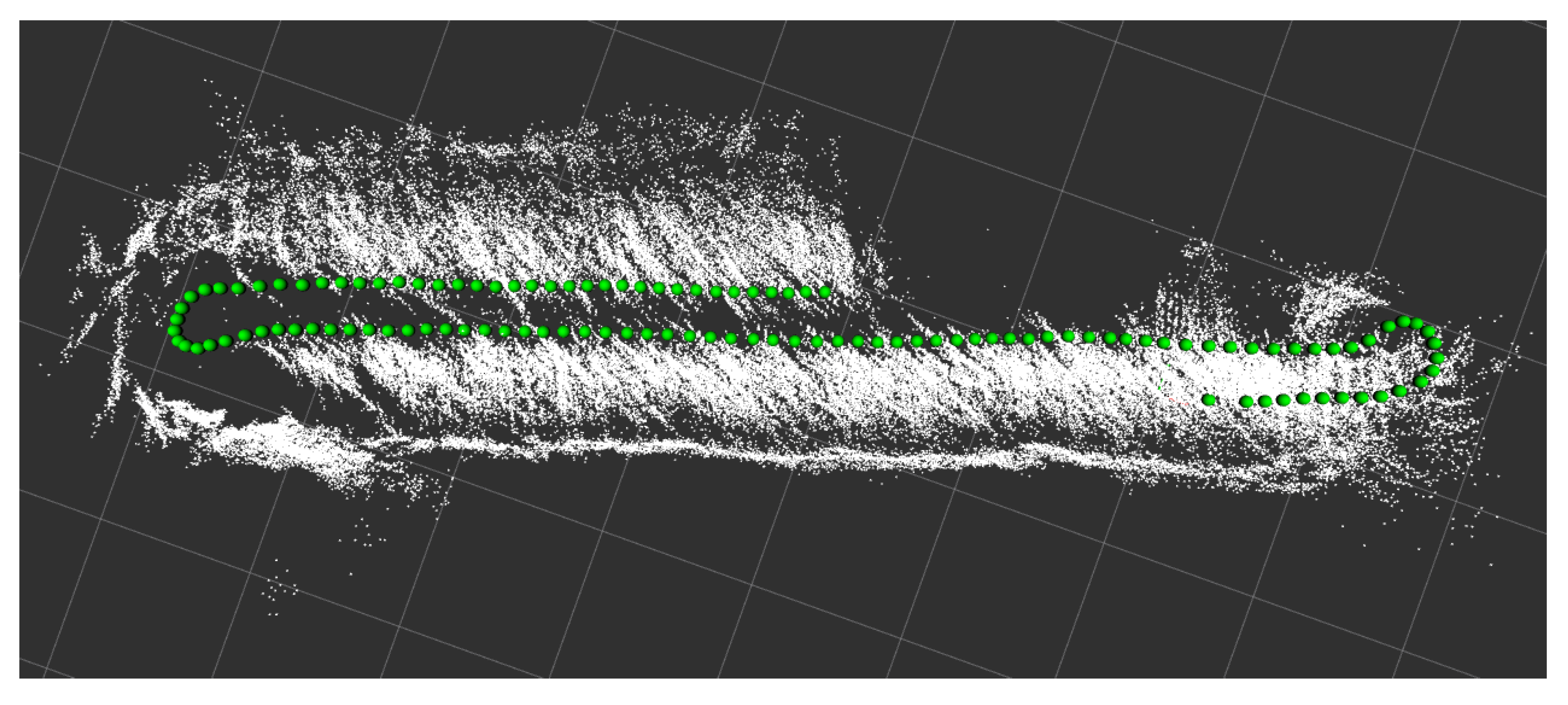
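For the laser-based point cloud, each planar scan (recorded as `sensor_msgs/LaserScan` messages on the `/scan` topic) stores one range per beam together with the angle of the first beam and the angular step between beams. A minimal sketch of the polar-to-Cartesian step is shown below; the `LaserScan` dataclass is a hypothetical plain-Python stand-in that mirrors the message's field names, and stacking scans into a 3D cloud would additionally require the sensor pose at each scan time, which is not shown.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class LaserScan:
    """Hypothetical stand-in mirroring the relevant fields of sensor_msgs/LaserScan."""
    angle_min: float        # angle of the first beam [rad]
    angle_increment: float  # angular step between consecutive beams [rad]
    range_min: float        # shortest valid range [m]
    range_max: float        # longest valid range [m]
    ranges: List[float] = field(default_factory=list)  # one range per beam [m]


def scan_to_points(scan: LaserScan) -> List[Tuple[float, float]]:
    """Convert valid returns to (x, y) in the sensor frame, dropping invalid readings."""
    points = []
    for i, r in enumerate(scan.ranges):
        if not math.isfinite(r) or r < scan.range_min or r > scan.range_max:
            continue  # inf, NaN or out-of-range reading
        angle = scan.angle_min + i * scan.angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points
```

Invalid returns are dropped rather than clamped, so the output can contain fewer points than `ranges`.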

2.5.2. Point Cloud Registration
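ICP appears in the abbreviations as a registration technique. As a generic illustration of Besl and McKay's point-to-point ICP, and not a reproduction of the authors' registration settings, the sketch below alternates nearest-neighbour matching with a closed-form least-squares rigid fit. It is restricted to 2D for brevity; in 3D the single rotation angle is replaced by an SVD-based rotation estimate and the brute-force search by a k-d tree. All names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Rigid = Tuple[float, float, float]  # (theta [rad], tx, ty)


def transform(rigid: Rigid, cloud: List[Point]) -> List[Point]:
    """Apply a 2D rotation by theta followed by a translation (tx, ty)."""
    theta, tx, ty = rigid
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in cloud]


def nearest(p: Point, cloud: List[Point]) -> Point:
    """Brute-force nearest neighbour; a k-d tree would replace this on real data."""
    return min(cloud, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)


def best_rigid(src: List[Point], dst: List[Point]) -> Rigid:
    """Closed-form least-squares rigid transform mapping src[i] onto dst[i]."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    cross = dot = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay, bx, by = px - sx, py - sy, qx - dx, qy - dy
        cross += ax * by - ay * bx
        dot += ax * bx + ay * by
    theta = math.atan2(cross, dot)  # angle maximising the sum of dst . R(src)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def icp_2d(source: List[Point], target: List[Point],
           max_iter: int = 50, tol: float = 1e-12) -> Tuple[List[Point], Rigid, float]:
    """Point-to-point ICP. Returns (aligned source, accumulated transform, final MSE)."""
    current = list(source)
    total: Rigid = (0.0, 0.0, 0.0)
    prev_err = err = math.inf
    for _ in range(max_iter):
        matches = [nearest(p, target) for p in current]  # 1. correspondences
        step = best_rigid(current, matches)                # 2. best rigid motion
        current = transform(step, current)                 # 3. move the source
        # Compose step after total: angles add, previous translation is rotated then shifted.
        c, s = math.cos(step[0]), math.sin(step[0])
        total = (total[0] + step[0],
                 c * total[1] - s * total[2] + step[1],
                 s * total[1] + c * total[2] + step[2])
        err = sum((x - q[0]) ** 2 + (y - q[1]) ** 2
                  for (x, y), q in zip(current, matches)) / len(current)
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return current, total, err
```

Like any ICP variant, this converges only to a local minimum, so the two clouds must already be roughly aligned (for example from GNSS positions) before refinement.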

2.5.3. Point Cloud to Octree Format

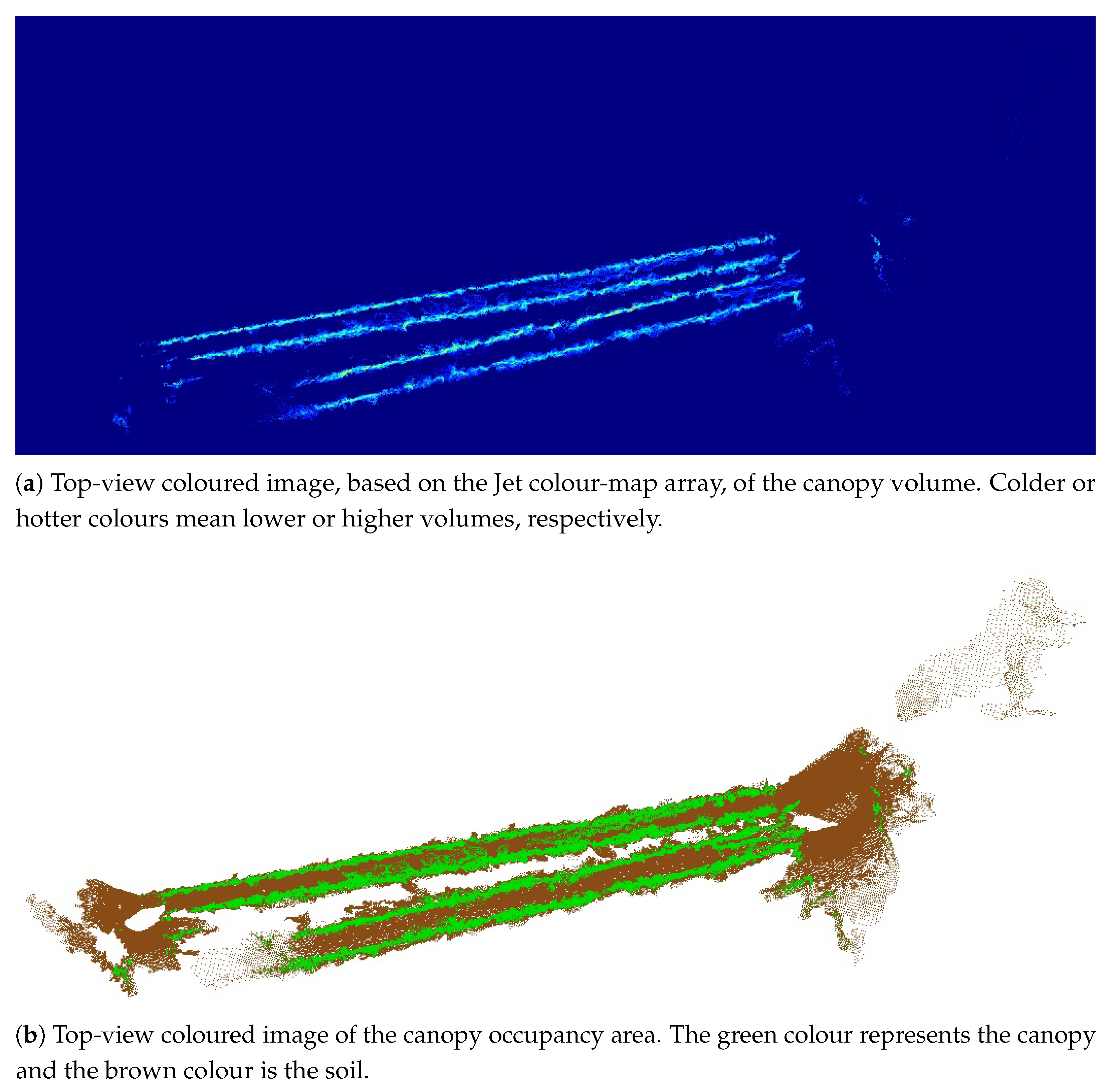

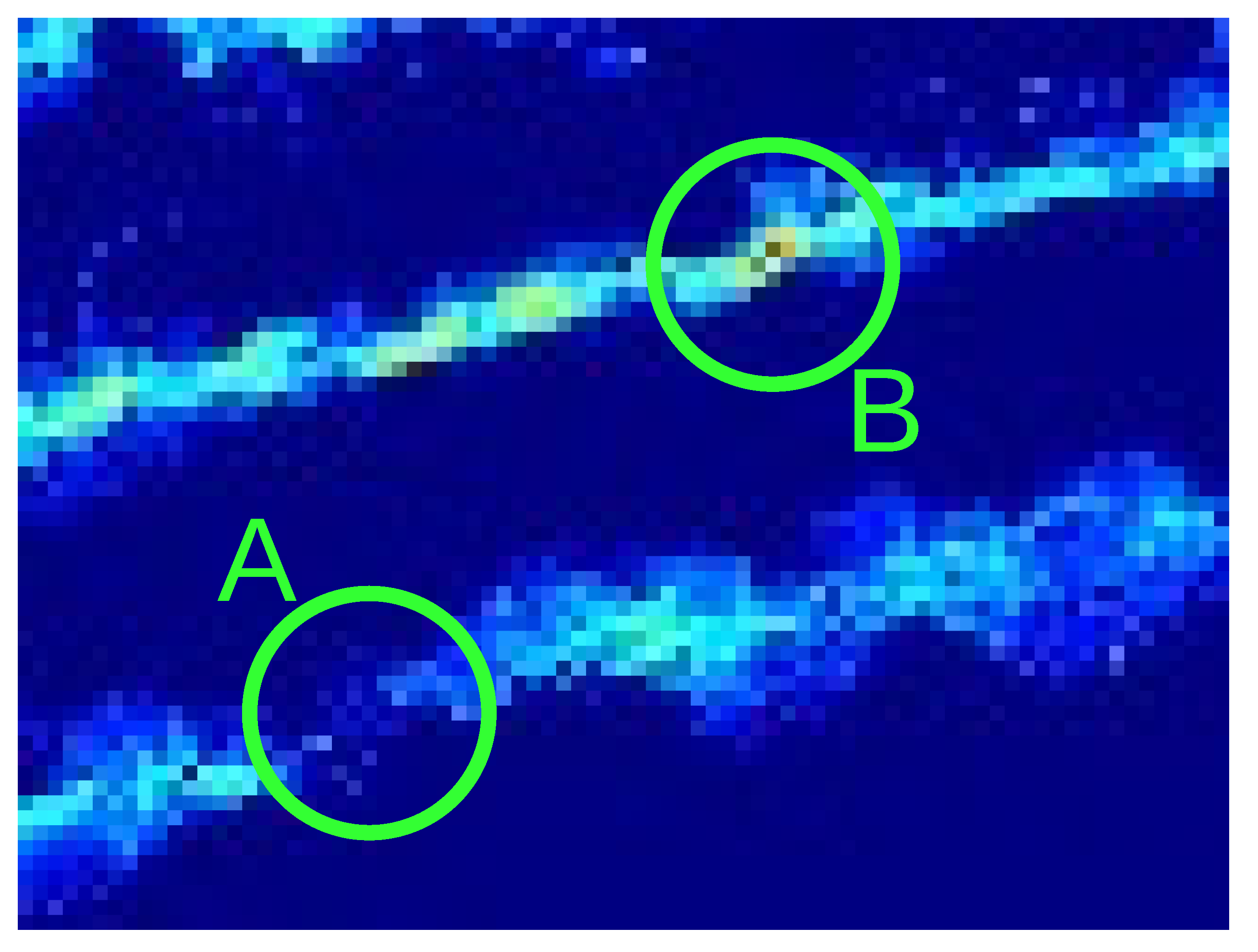
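The leaves of an octree at its finest depth are cubic cells of a fixed edge length, so occupancy can be turned into a volume and a ground footprint area by counting cells. The sketch below makes that idea concrete with a flat set of cells at a single resolution instead of a real octree library (such as OctoMap); it is an assumed, simplified measure rather than the authors' computation, and `res` and the function names are hypothetical.

```python
import math
from typing import Iterable, Set, Tuple

Point3 = Tuple[float, float, float]
Cell = Tuple[int, int, int]


def voxel_key(p: Point3, res: float) -> Cell:
    """Integer index of the cubic cell of edge `res` (metres) that contains p."""
    return (math.floor(p[0] / res), math.floor(p[1] / res), math.floor(p[2] / res))


def occupancy_volume_and_area(points: Iterable[Point3], res: float) -> Tuple[float, float]:
    """Volume = occupied cells * res^3; footprint area = occupied (x, y) columns * res^2."""
    occupied: Set[Cell] = {voxel_key(p, res) for p in points}  # duplicates collapse
    columns = {(i, j) for i, j, _ in occupied}                 # project cells onto the ground
    return len(occupied) * res ** 3, len(columns) * res ** 2
```

Both quantities depend strongly on `res`: coarse cells overestimate thin canopy, while fine cells leave gaps where the cloud is sparse.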

2.5.4. Geometric Measurements

3. Results and Discussion

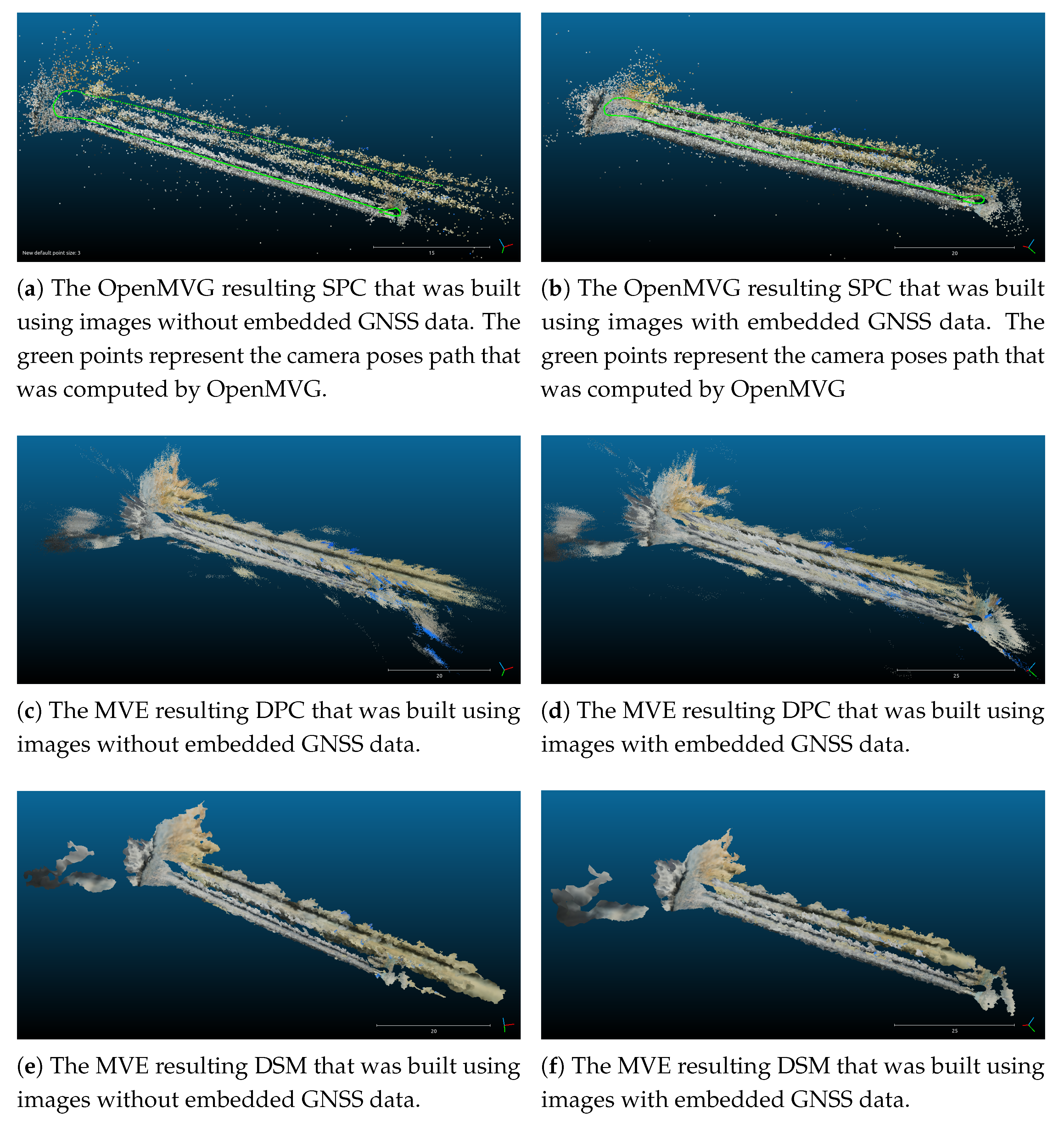

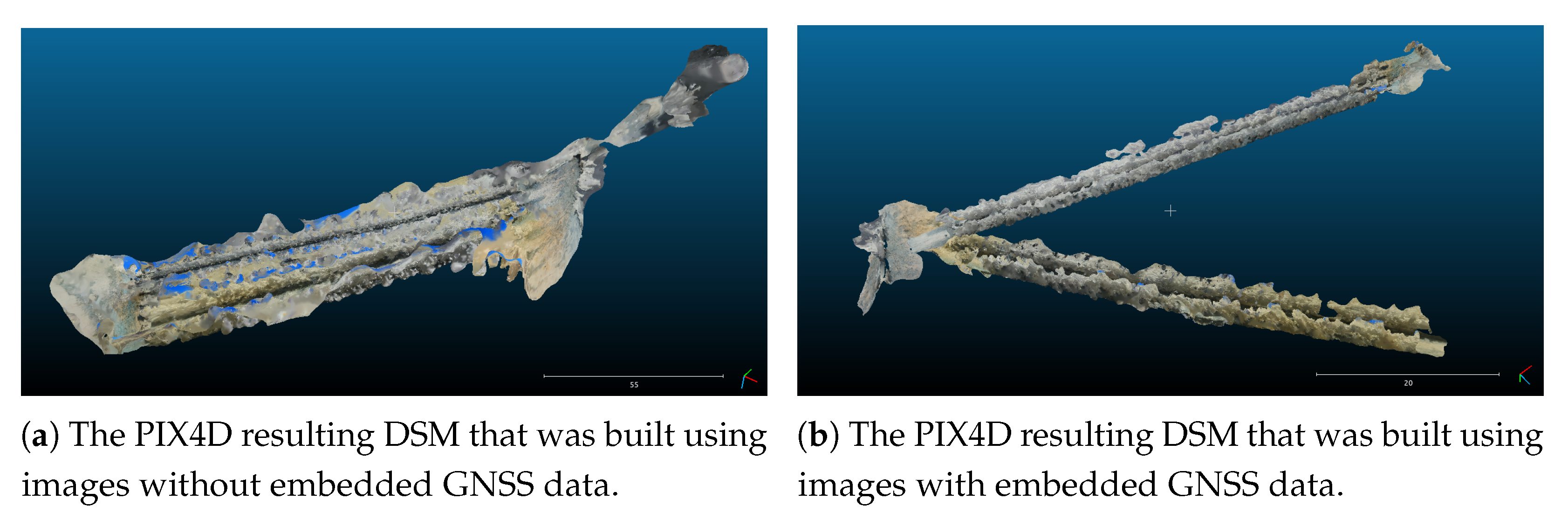

3.1. Results of the Point Cloud Construction

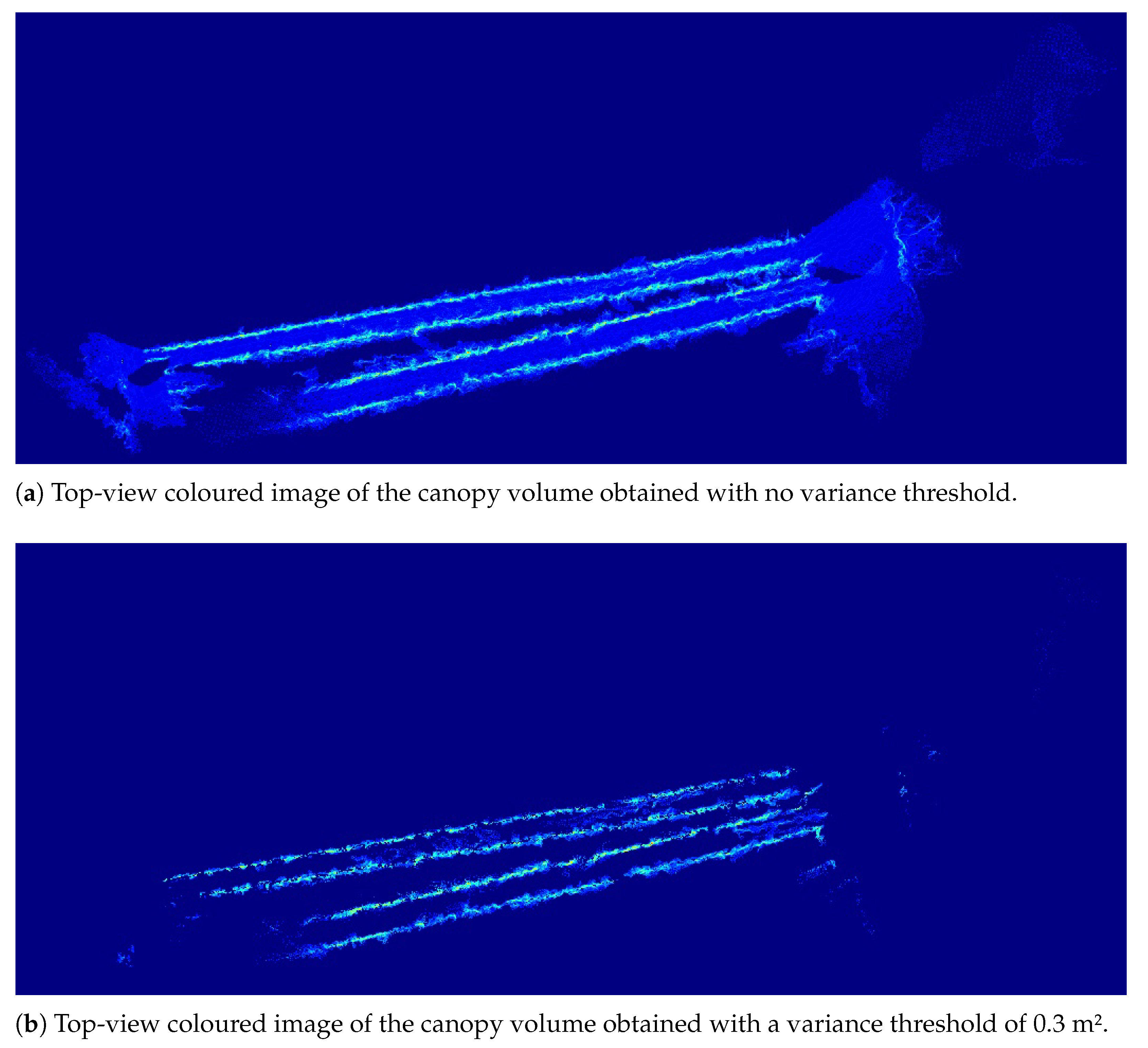

3.2. Results of the Point Cloud Registration

3.3. Results of the Point Cloud to Octree Conversion

3.4. Results of the Geometric Measurements

4. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ALS | Aerial Laser Scanning |
| DPC | Dense Point Cloud |
| DSM | Digital Surface Model |
| DTM | Digital Terrain Model |
| EGNOS | European Geostationary Navigation Overlay Service |
| Exif | Exchangeable image file format |
| GNSS | Global Navigation Satellite System |
| ICP | Iterative Closest Point |
| LAI | Leaf Area Index |
| LiDAR | Light Detection And Ranging |
| MVE | Multi-View Reconstruction Environment |
| MVS | Multi-View Stereo |
| NDVI | Normalised Difference Vegetation Index |
| NIR | Near-Infrared |
| NoIR | No Infrared filter |
| OpenMVG | Open Multiple View Geometry |
| PCL | Point Cloud Library |
| ROS | Robot Operating System |
| RS232 | Recommended Standard 232 |
| SfM | Structure from Motion |
| SPC | Sparse Point Cloud |
| TLS | Terrestrial Laser Scanning |
| TRV | Tree Row Volume |
| UAV | Unmanned Aerial Vehicle |
| USB | Universal Serial Bus |


| Topic | Type | Description |
|---|---|---|
| /fix | sensor_msgs/NavSatFix | GNSS localisation data |
| /scan | sensor_msgs/LaserScan | Scan data from the planar LiDAR |
| /fisheye_cam/camera_info | sensor_msgs/CameraInfo | Calibration information of the NoIR camera |
| /fisheye_cam/image/compressed | sensor_msgs/CompressedImage | Compressed images from the NoIR camera |

| Measurement | Value (m) |
|---|---|
| Inter-row width | 2.75 |
| Row width | 1.10 |
| Row length | 61.20 |
| Row height | 1.50 |
| Distance between two vine trees | 0.90 |
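The volumes and areas tabulated per number of rows (100.98 m³ and 67.32 m² for one row; 252.45 m³ and 168.30 m² for 2.5 rows) match a model in which each row is a rectangular box with the measured length, width and height. The worked check below reproduces those figures from this table. As an added illustration (an assumption, not a quantity reported here), it also evaluates the widely used Tree Row Volume formula, TRV = H × W × 10,000 / D in m³/ha, where D is the inter-row width. Function names are hypothetical.

```python
# On-site manual measurements (metres), copied from the table above.
INTER_ROW_WIDTH = 2.75
ROW_WIDTH = 1.10
ROW_LENGTH = 61.20
ROW_HEIGHT = 1.50


def box_volume_and_area(n_rows: float) -> tuple:
    """Each row approximated as a box: V = L * W * H per row, footprint A = L * W per row."""
    area = n_rows * ROW_LENGTH * ROW_WIDTH
    return area * ROW_HEIGHT, area


def tree_row_volume(height: float, width: float, row_spacing: float) -> float:
    """Standard TRV formula: canopy volume per hectare in m^3/ha."""
    return height * width * 10_000 / row_spacing


for n in (1, 2.5):
    v, a = box_volume_and_area(n)
    print(f"{n} row(s): {v:.2f} m^3, {a:.2f} m^2")
print(f"TRV: {tree_row_volume(ROW_HEIGHT, ROW_WIDTH, INTER_ROW_WIDTH):.0f} m^3/ha")
```

With these measurements the TRV formula gives 6,000 m³/ha.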

| Method | Software | Number of points (SPC) | Number of points (DPC) | Number of points (DSM) |
|---|---|---|---|---|
| Image (without GNSS data) | OpenMVG + MVE | 41,853 | 8,213,802 | 510,049 |
| Image (without GNSS data) | PIX4D | - | - | 252,182 |
| Image (with GNSS data) | OpenMVG + MVE | 74,351 | 8,357,579 | 501,756 |
| Image (with GNSS data) | PIX4D | - | - | 317,889 |
| Laser | - | 91,554 | - | - |
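The table separates image sets processed with and without GNSS data. One common way to attach GNSS fixes to images before SfM is to write them into each image's Exif GPS tags, which store latitude and longitude as three rationals (degrees, minutes, seconds) plus an N/S or E/W reference; whether the authors geotagged their images exactly this way is an assumption. The sketch converts the signed decimal degrees carried by `sensor_msgs/NavSatFix` into that form; `to_exif_gps` is a hypothetical name, and writing the tags into a file is left to an Exif library.

```python
from fractions import Fraction
from typing import Tuple

DMS = Tuple[Fraction, Fraction, Fraction]
SECOND_STEPS = 10_000  # keep seconds with 1e-4 s resolution


def to_exif_gps(decimal_deg: float, is_latitude: bool) -> Tuple[DMS, str]:
    """Split signed decimal degrees into Exif-style (deg, min, sec) rationals and a ref letter."""
    if is_latitude:
        ref = "N" if decimal_deg >= 0 else "S"
    else:
        ref = "E" if decimal_deg >= 0 else "W"
    # Work in integer units of 1e-4 s so rounding carries cleanly into minutes and degrees.
    total = round(abs(decimal_deg) * 3600 * SECOND_STEPS)
    degrees, rest = divmod(total, 3600 * SECOND_STEPS)
    minutes, sec_units = divmod(rest, 60 * SECOND_STEPS)
    return (Fraction(degrees), Fraction(minutes), Fraction(sec_units, SECOND_STEPS)), ref
```

For example, a latitude of 48.1173 becomes 48°, 7′ and 2.28″ with reference N.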

| σ² threshold in the z-axis (m²) | Volume (m³) | Area (m²) |
|---|---|---|
| 0.10 | 105.19 | 189.12 |
| 0.15 | 100.44 | 179.32 |
| 0.20 | 95.19 | 169.22 |

| Number of rows | Volume (m³) | Area (m²) |
|---|---|---|
| 1 | 100.98 | 67.32 |
| 2.5 | 252.45 | 168.30 |

| σ² threshold in the z-axis (m²) | Volume difference (%) | Area difference (%) |
|---|---|---|
| 0.10 | +139.99 | −11.01 |
| 0.15 | +151.34 | −6.15 |
| 0.20 | +165.21 | −0.54 |
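The percentages in the last table can be reproduced from the two preceding tables as the difference between the manual value for 2.5 rows and each thresholded estimate, taken relative to that estimate. The snippet below is a worked check of that arithmetic; the sign convention is inferred from the tabulated values rather than stated in the text, and the names are hypothetical.

```python
MANUAL_VOLUME_M3 = 252.45  # 2.5 rows, manual measurements
MANUAL_AREA_M2 = 168.30

# sigma^2 threshold in the z-axis (m^2) -> (volume m^3, area m^2) from the thresholded estimate
ESTIMATES = {0.10: (105.19, 189.12), 0.15: (100.44, 179.32), 0.20: (95.19, 169.22)}


def relative_difference(manual: float, estimated: float) -> float:
    """Percentage difference of the manual value relative to the estimate."""
    return (manual - estimated) / estimated * 100.0


for thr, (vol, area) in ESTIMATES.items():
    print(f"{thr:.2f}: volume {relative_difference(MANUAL_VOLUME_M3, vol):+.2f} %, "
          f"area {relative_difference(MANUAL_AREA_M2, area):+.2f} %")
```

The volume differences grow as the threshold rises, while the area differences shrink toward zero, which is the same trend visible in the table.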


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


da Silva, D.Q.; Aguiar, A.S.; dos Santos, F.N.; Sousa, A.J.; Rabino, D.; Biddoccu, M.; Bagagiolo, G.; Delmastro, M. Measuring Canopy Geometric Structure Using Optical Sensors Mounted on Terrestrial Vehicles: A Case Study in Vineyards. Agriculture 2021, 11, 208. https://doi.org/10.3390/agriculture11030208
