Transfer Learning via Deep Neural Networks for Implant Fixture System Classification Using Periapical Radiographs

Abstract

1. Introduction

2. Materials and Methods

2.1. Ethics Statement

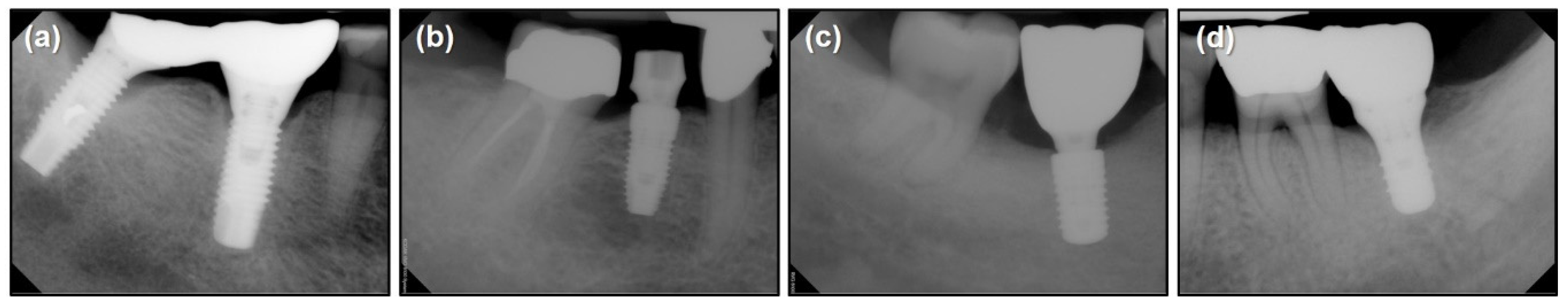

2.2. Materials

2.3. Methods

2.3.1. Preprocessing

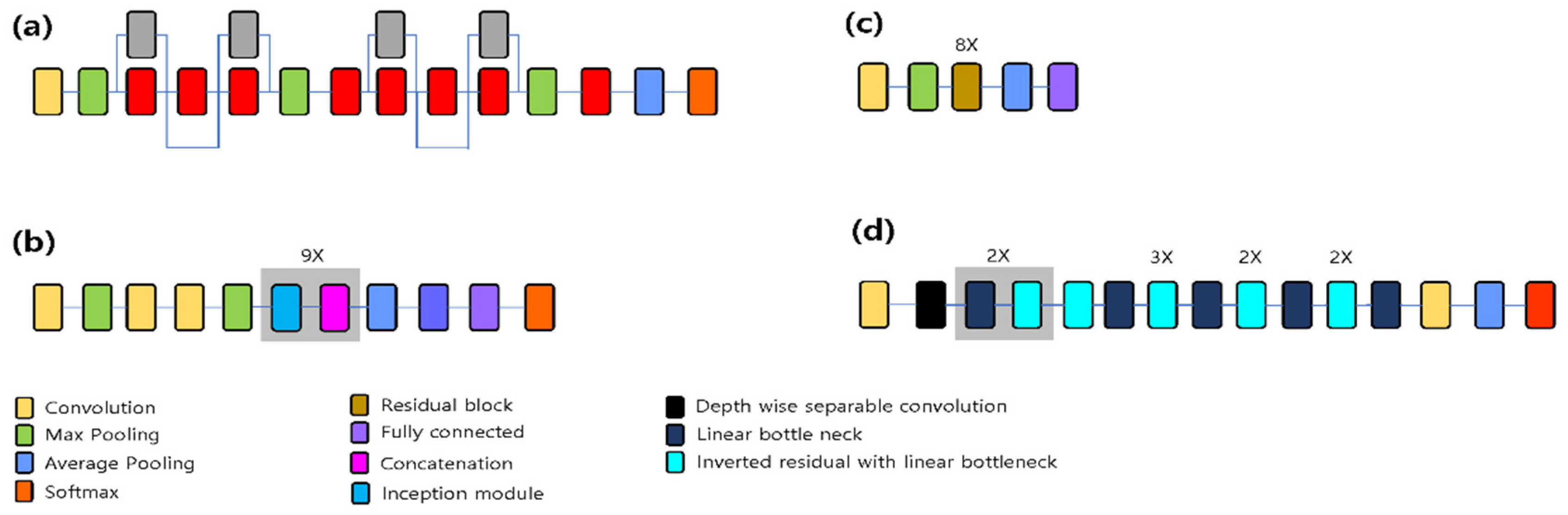

2.3.2. Network Pre-training

2.3.3. Data Augmentation

2.3.4. Training Options

2.3.5. Metrics for Accuracy Comparison

3. Results

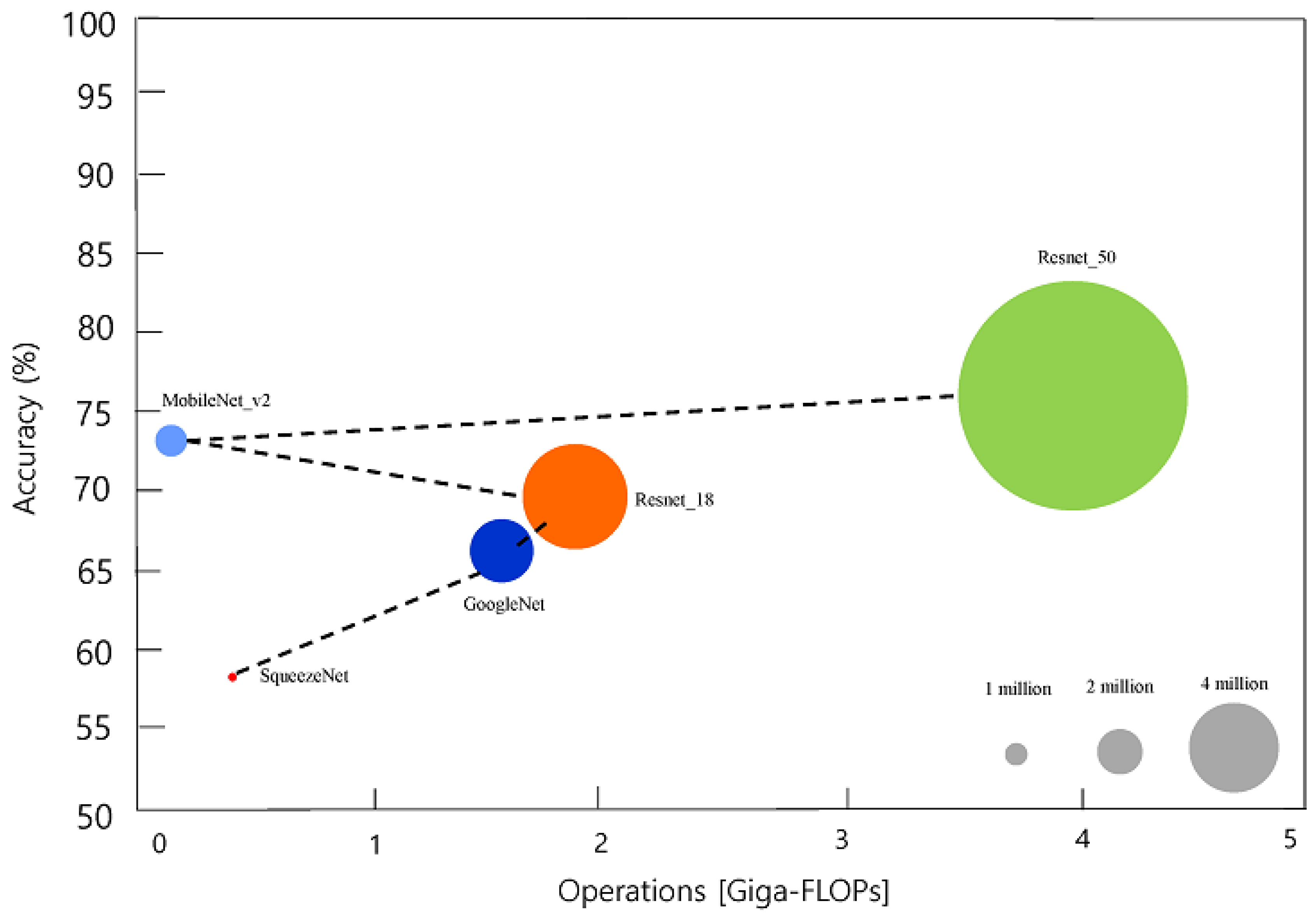

3.1. Classification Performance

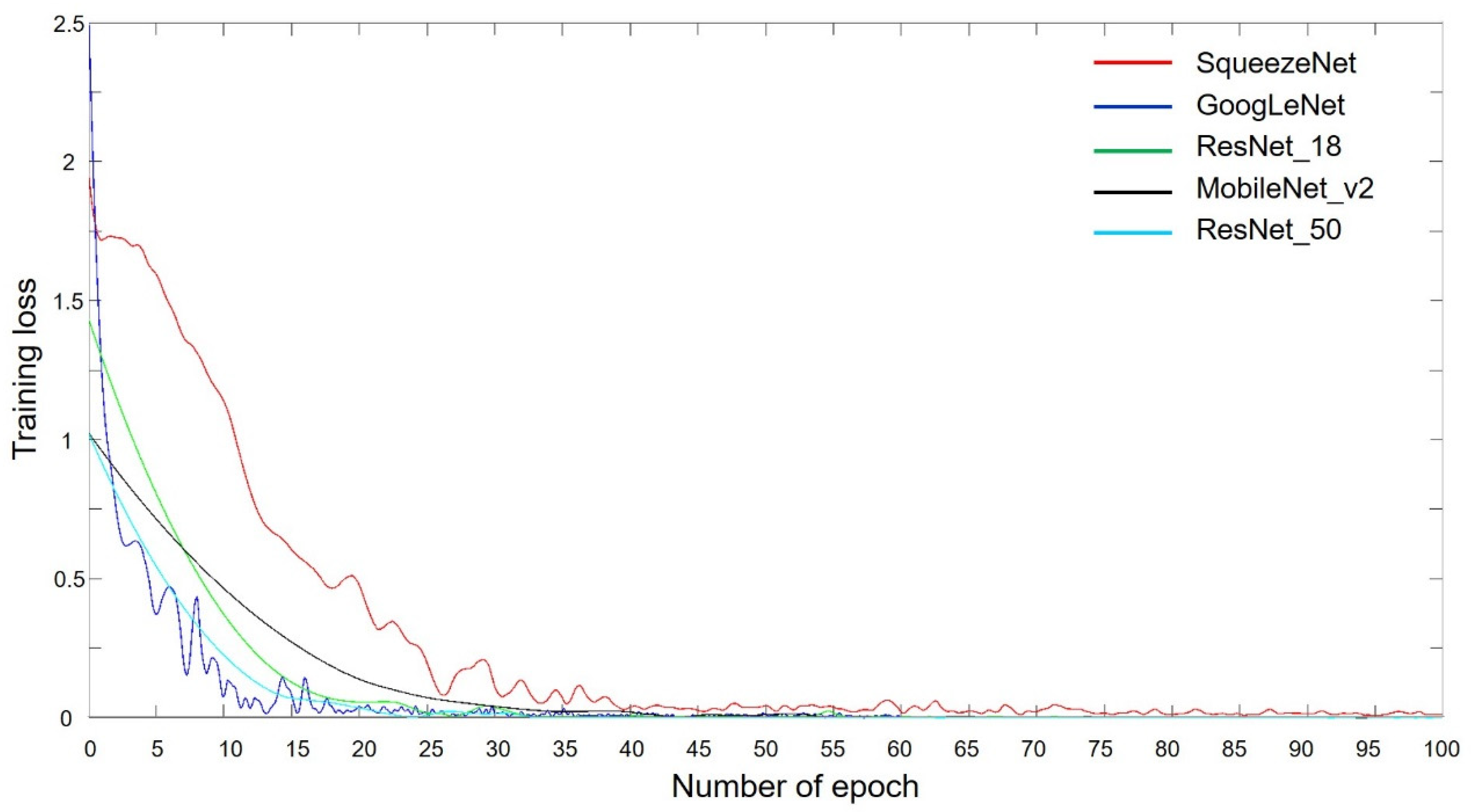

3.2. Training Progress

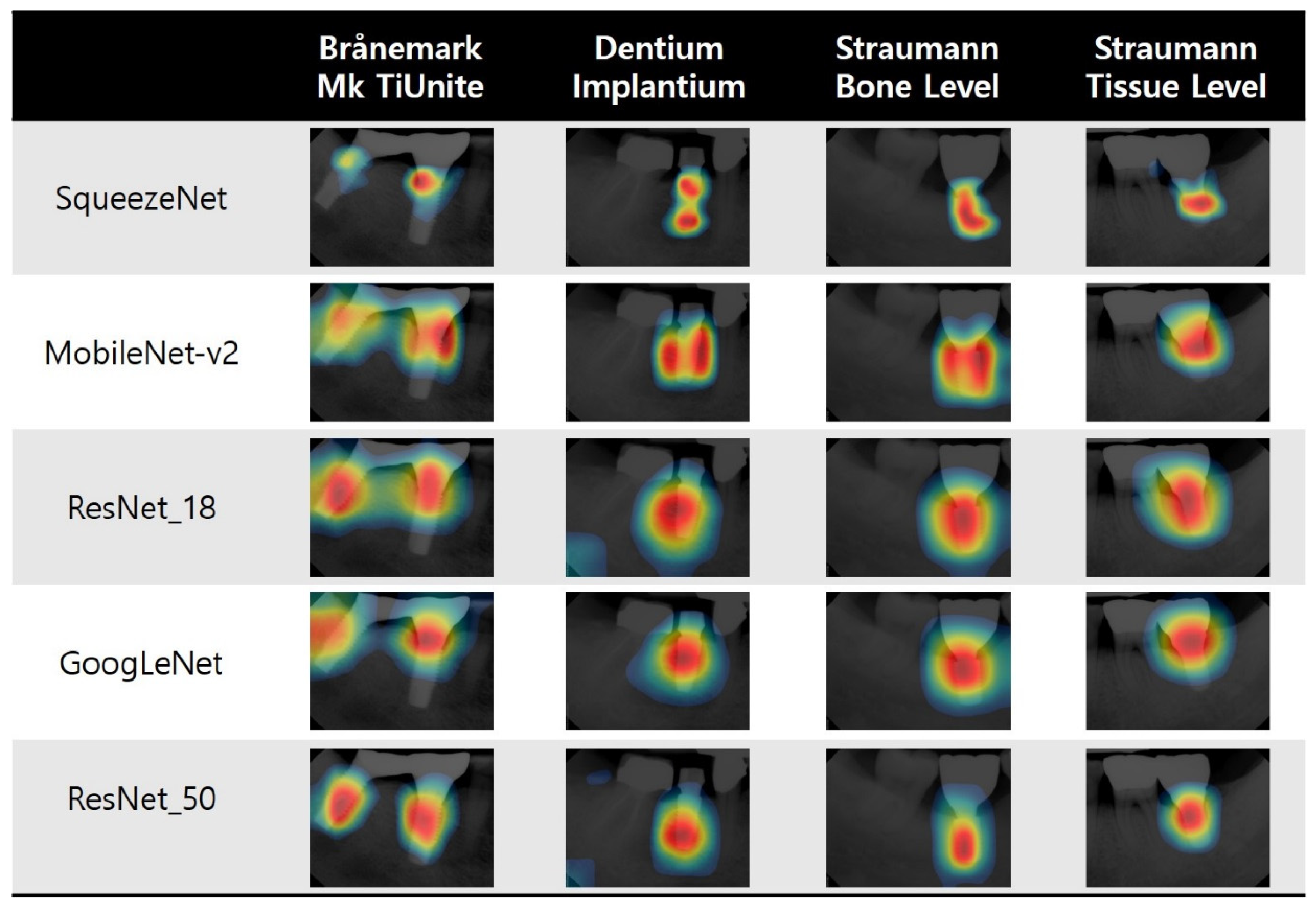

3.3. Visualization of Model Classification

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest


| Implant System | Brånemark Mk TiUnite Implant | Dentium Implantium Implant | Straumann Bone Level Implant | Straumann Tissue Level Implant |
|---|---|---|---|---|
| Number of images | 197 | 193 | 203 | 208 |
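The class counts in the table above can be checked, and a class-stratified hold-out split sketched, in a few lines of Python. This is a minimal sketch, not the authors' pipeline: the `stratified_split` helper, the 20% test fraction, and the fixed seed are hypothetical choices for illustration, because the split ratio is not given here. Images are stood in for by `(class_name, index)` pairs.

```python
import random

# Image counts per implant system, taken from the table above.
COUNTS = {
    "Brånemark Mk TiUnite": 197,
    "Dentium Implantium": 193,
    "Straumann Bone Level": 203,
    "Straumann Tissue Level": 208,
}

def class_shares(counts):
    """Return each class's fraction of the whole dataset."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}

def stratified_split(counts, test_fraction=0.2, seed=0):
    """Hypothetical hold-out split that keeps class proportions.

    The test fraction and seed are illustrative assumptions,
    not values reported for this study.
    """
    rng = random.Random(seed)
    train, test = [], []
    for name, n in counts.items():
        items = [(name, i) for i in range(n)]
        rng.shuffle(items)                 # shuffle within each class only
        n_test = round(n * test_fraction)  # per-class test size
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test

if __name__ == "__main__":
    print("total images:", sum(COUNTS.values()))
    for name, share in class_shares(COUNTS).items():
        print(f"{name}: {share:.3f}")
    train, test = stratified_split(COUNTS)
    print("train/test sizes:", len(train), len(test))
```

Splitting within each class keeps the four implant systems in roughly the same proportions in both subsets, which matters less here than usual because the classes are already close to balanced (about 24% to 26% each).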

| Network | Depth | Size (MB) | Parameters (Millions) | Image Input Size |
|---|---|---|---|---|
| SqueezeNet | 18 | 4.6 | 1.24 | 227 × 227 |
| GoogLeNet | 22 | 27 | 7 | 224 × 224 |
| ResNet-18 | 18 | 44 | 11.7 | 224 × 224 |
| MobileNet-v2 | 54 | 13 | 3.5 | 224 × 224 |
| ResNet-50 | 50 | 96 | 25.6 | 224 × 224 |
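Transfer learning from ImageNet-trained networks such as these commonly replaces the final 1000-class classification layer with a new layer sized to the four implant systems while reusing the pre-trained feature extractor. The arithmetic below sketches how much of each network that head accounts for. It assumes the head is a single fully connected layer on the pooled features of each standard architecture (1024 for GoogLeNet, 512 for ResNet-18, 1280 for MobileNet-v2, 2048 for ResNet-50); which layers were actually replaced and retrained is an assumption here, and the helper names are hypothetical. SqueezeNet is omitted because its classifier is a convolution rather than a fully connected layer.

```python
# Pooled feature width feeding the final classifier (standard architectures; assumed).
FEATURE_DIMS = {
    "GoogLeNet": 1024,
    "ResNet-18": 512,
    "MobileNet-v2": 1280,
    "ResNet-50": 2048,
}

# Total parameters in millions, from the table above (with 1000-class ImageNet heads).
TOTAL_PARAMS_M = {
    "GoogLeNet": 7.0,
    "ResNet-18": 11.7,
    "MobileNet-v2": 3.5,
    "ResNet-50": 25.6,
}

def dense_params(in_features, out_features):
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + out_features

def head_swap(network, num_classes=4, imagenet_classes=1000):
    """Return (old head, new head) parameter counts when the ImageNet head is replaced."""
    d = FEATURE_DIMS[network]
    return dense_params(d, imagenet_classes), dense_params(d, num_classes)

if __name__ == "__main__":
    for net in FEATURE_DIMS:
        old, new = head_swap(net)
        share = old / (TOTAL_PARAMS_M[net] * 1e6)
        print(f"{net}: old head {old:,}, new head {new:,}, "
              f"old head is {share:.1%} of all parameters")
```

Under these assumptions the original ResNet-18 head is only about 4% of its parameters, while MobileNet-v2's is over a third, since its 1280-wide classifier sits on a network with only 3.5 million parameters in total.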

| Pre-Trained Network | Test Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| SqueezeNet | 0.96 | 0.96 | 0.96 | 0.96 |
| GoogLeNet | 0.93 | 0.92 | 0.94 | 0.93 |
| ResNet-18 | 0.98 | 0.98 | 0.98 | 0.98 |
| MobileNet-v2 | 0.97 | 0.96 | 0.96 | 0.96 |
| ResNet-50 | 0.98 | 0.98 | 0.98 | 0.98 |
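With four implant classes, precision, recall, and F1 score are computed per class and then averaged. The sketch below derives them from a confusion matrix. The averaging convention (unweighted macro mean, with F1 taken as the mean of per-class F1 scores) is an assumption, and `EXAMPLE_CM` is a made-up matrix for illustration, not data from this study.

```python
# Hypothetical 4-class confusion matrix for illustration only (not study data).
EXAMPLE_CM = [
    [39, 1, 0, 0],
    [1, 38, 1, 0],
    [0, 0, 40, 0],
    [0, 0, 1, 39],
]

def per_class_metrics(cm):
    """Return (precision, recall, F1) per class.

    cm[i][j] counts images whose true class is i and predicted class is j.
    """
    k = len(cm)
    results = []
    for c in range(k):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(k)) - tp  # predicted c, actually another class
        fn = sum(cm[c]) - tp                       # actually c, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results.append((precision, recall, f1))
    return results

def macro_average(cm):
    """Overall accuracy plus unweighted (macro) means of per-class scores."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(k)) / total
    per_class = per_class_metrics(cm)
    precision = sum(p for p, _, _ in per_class) / k
    recall = sum(r for _, r, _ in per_class) / k
    f1 = sum(f for _, _, f in per_class) / k
    return accuracy, precision, recall, f1

if __name__ == "__main__":
    acc, p, r, f1 = macro_average(EXAMPLE_CM)
    print(f"accuracy={acc:.3f} precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
```

Macro averaging counts every implant system equally regardless of its size; a support-weighted average would instead weight each class by its number of test images. With four nearly balanced classes the two conventions should give close values.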

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kim, J.-E.; Nam, N.-E.; Shim, J.-S.; Jung, Y.-H.; Cho, B.-H.; Hwang, J.J. Transfer Learning via Deep Neural Networks for Implant Fixture System Classification Using Periapical Radiographs. J. Clin. Med. 2020, 9, 1117. https://doi.org/10.3390/jcm9041117
