Enhancing the Usability of Patient Monitoring Devices in Intensive Care Units: Usability Engineering Processes for Early Warning System (EWS) Evaluation and Design

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design

2.2. Participants

2.3. Study Procedures

2.3.1. Formative Evaluation

- Usability Test

- User Preference Survey

2.3.2. Summative Evaluation

2.4. Analysis

3. Results

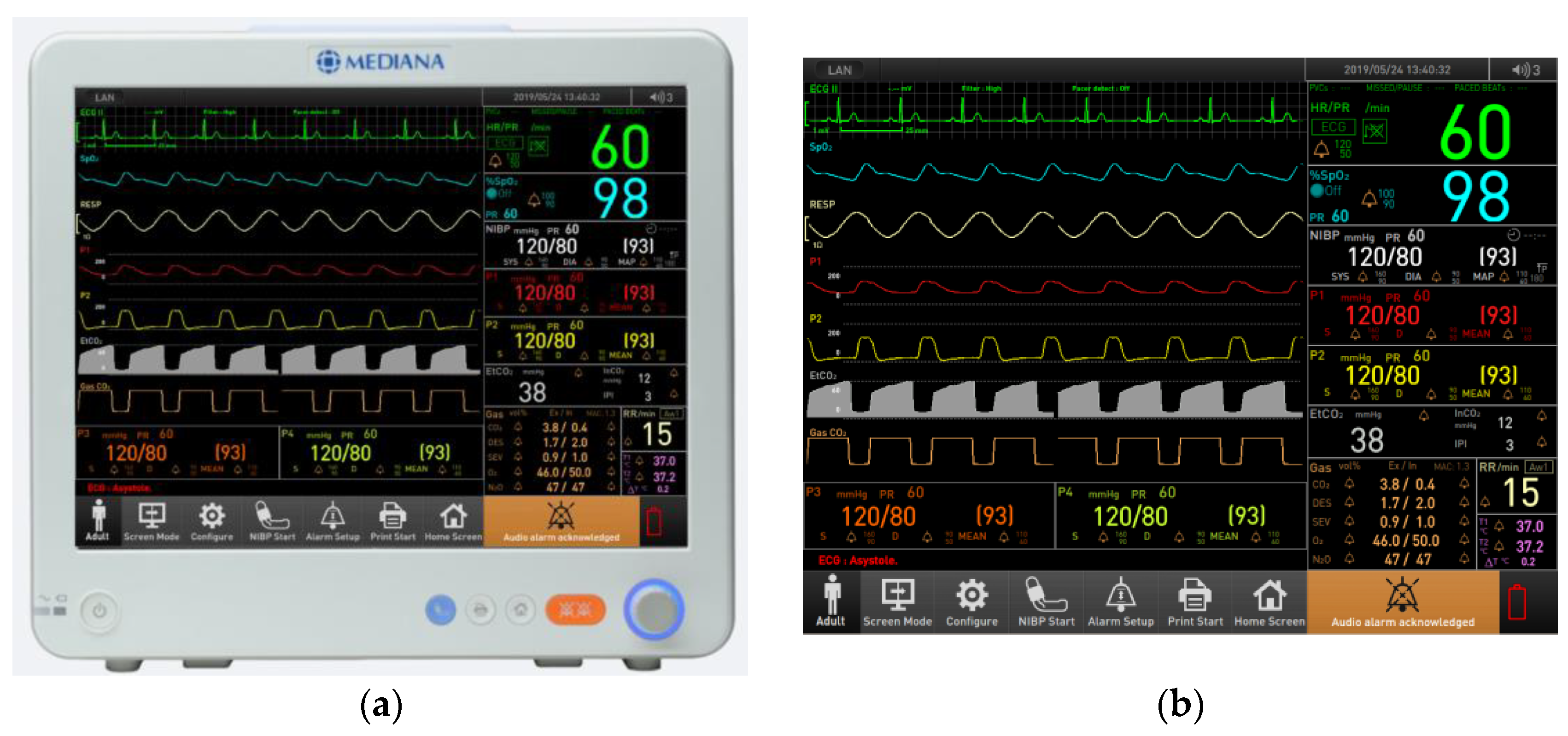

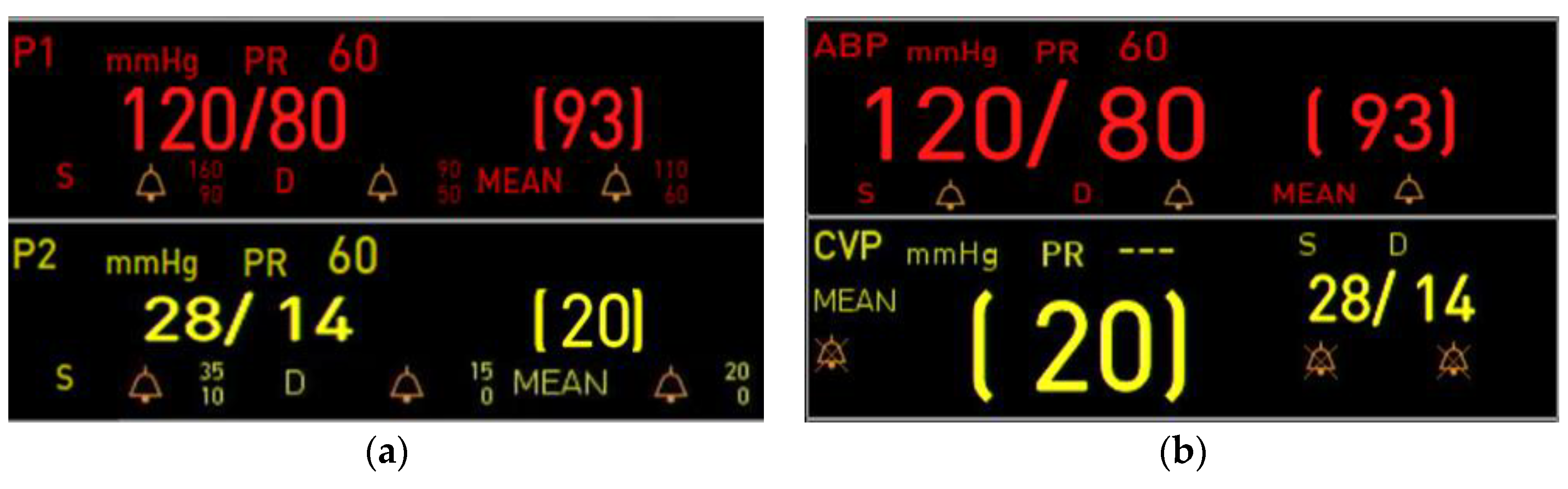

3.1. Execution of the Formative Evaluation

3.1.1. User Statistics of the Formative Evaluation

3.1.2. Task Completion of the Formative Evaluation

3.1.3. User Preference Survey

3.2. Design Implementation

3.3. Execution of the Summative Evaluation

3.3.1. User Statistics of the Summative Evaluation

3.3.2. Task Completion of the Summative Evaluation

3.4. Comparative Usability Analysis Between Formative and Summative Evaluations

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| EWS | Early Warning Score |
| NEWS | National Early Warning Score |
| PEWS | Pediatric Early Warning Score |
| MEWS | Modified Early Warning Score |
| ICU | Intensive Care Unit |
| CCU | Cardiac Intensive Care Unit |
| FDA | Food and Drug Administration |
| UI | User Interface |
| ABP | Arterial Blood Pressure |
| CVP | Central Venous Pressure |


| | NEWS (National Early Warning Score) | MEWS (Modified Early Warning Score) | PEWS (Pediatric Early Warning Score) |
|---|---|---|---|
| Target patients | Adult patient | Adult patient | Pediatric patient |
| Purpose | Standardized early warning and tracking | Early detection of deterioration | Detect deterioration in pediatric patients |
| Standardization | Standardized in NHS, UK | Not standardized | Not standardized |
| Assessment Parameters | | | |
| Predictive Performance and Research Findings | Highly predictive in studies | Relatively low predictive power with variations across hospitals. | Useful for pediatric patients but lacks standardization. |
| Clinical Applications | Integration of emergency department, inpatient ward, and in-hospital patient monitoring with rapid response systems | Evaluation of critically ill patients within the hospital and criteria for ICU transfer. | Early detection of deterioration in pediatric emergency departments and pediatric wards |

| | Formative Evaluation: Usability Test | Formative Evaluation: Survey | Summative Evaluation: Usability Test |
|---|---|---|---|
| Description | | | |
| Number of participants | 5 | 72 | 23 |
| Performance metric | | | |

| Use Scenario | Task No. | Task |
|---|---|---|
| Patient Admit | Task 1 | Admit new patient. |
| | Task 2 | Change the birthdate from 29 July 1970 to 20 July 1970. |
| Waveform/Parameter Setting | Task 3 | Change 4 channel waveform display in the standard mode. |
| | Task 4 | Change the label from PAP to P2. |
| | Task 5 | Change the waveform from Respiration to PAP. |
| | Task 6 | Select ECG sweep speed to proper speed. |
| | Task 7 | Select ECG waveform size menu to proper size. |
| | Task 8 | Set NIBP interval to 1 h. |
| | Task 9 | Change Respiration source randomly. |
| General Setting | Task 10 | By default, the settings window closes after a certain amount of time. Change the settings so that the settings window does not close. |
| | Task 11 | Set not to make a sound when the screen is touched. |
| | Task 12 | Set the sound to be muted according to your heart rate. |
| Alarm | Task 13 | Set the Alarm limit display ON to display established alarm value on the monitor. Check the alarm limit location on the screen and point it out. |
| | Task 14 | Check all areas where the current visual alarm (SpO2) occurs. |
| | Task 15 | Pause the alarm when the alarm occurs. |
| | Task 16 | Adjust the lower threshold for the SpO2 alarm to 85%. |
| | Task 17 | Check all areas where the current visual alarm (P1) occurs. |
| | Task 18 | Change the upper limit of P1 SYS to 170 mmHg. |
| | Task 19 | Check the alarm message in Message List and check the alarm message on the screen. |
| | Task 20 | Set only the diastolic pressure and mean pressure auditory alarms of P1 to be disabled. |
| | Task 21 | Set the arrhythmia alarm ON to display. |
| | Task 22 | Change the alarm condition for ventricular tachycardia to 135 bpm. |
| | Task 23 | Check the alarm of high-risk V-FIB and point to the area where the visual and alarm messages occur. |
| | Task 24 | Pause the alarm when the alarm occurs. |
| | Task 25 | Check the visual and audible alarm for a medium-risk Tachycardia alarm. |
| | Task 26 | Check the visual and audible alarm for the low-risk Bigeminy alarms. |
| | Task 27 | Check that the current ECG waveform is normal and click the message list to check the arrhythmia alarms that have occurred so far. (V-FIB alarm record does not remain) |
| | Task 28 | Check a visual alarm to the current Pair PVCs alarm. |
| | Task 29 | Check ECG waveform and PVCs alarm message list. |
| | Task 30 | Check the visual alarm messages for new Run of PVCs alarms and compare them to ECG waveforms. |
| | Task 31 | Check that the ECG waveform is Multiform PVCs and that the alarm message properly occurred. If the visual alarm message is not appropriate, wait for Multiform PVCs to appear. |
| | Task 32 | The ECG waveform returned to normal. However, visual and audible alarms for Multiform PVCs are still occurring. Turn off the current alarm by pressing the button indicating that you have recognized Multiform PVCs. |
| | Task 33 | Turn off all audible alarms. |
| Display Mode | Task 34 | Display the Big Number mode. |
| | Task 35 | Display the Tabular Trend mode. |
| | Task 36 | Tabular trend list is ordered by descending now. Change the display order from ascending to descending. |
| | Task 37 | Display the Graphical Trend mode. |
| | Task 38 | Change the display interval randomly. |
| | Task 39 | Display the Event review mode. |
| Patient Discharge | Task 40 | Discharge the patient. |

| Use Scenario | Task No. | Task |
|---|---|---|
| EWS (Early Warning Score) | Task 1 | Configure the EWS Trend setting to NEWS2. |
| | Task 2 | Set the AVPU scale in the EWS to PAIN. |
| | Task 3 | Calculate the total EWS score. |
| | Task 4 | Update the AVPU scale in the EWS to Alert. |
| | Task 5 | Recalculate the total EWS score. |
| | Task 6 | Display the total EWS score along with the corresponding message. |
| | Task 7 | Review the trend data, including the total EWS score. |
| | Task 8 | Modify the system settings to hide the EWS pop-up bar, ensuring that EWS is not visible on the main screen. |
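
Tasks 2 to 5 above ask the user to change the AVPU level and recalculate the total score. The sketch below illustrates how a NEWS2-style aggregate responds to that change. It is not the monitor's implementation: the names `Vitals`, `band`, and `news2_total` are hypothetical, and the band thresholds are transcribed from the published NEWS2 chart (SpO2 scale 1) as an assumption that should be checked against the official chart before any use.

```python
from dataclasses import dataclass

INF = float("inf")

# (upper bound, score) bands; assumed NEWS2 thresholds, SpO2 scale 1.
RESP_BANDS = [(8, 3), (11, 1), (20, 0), (24, 2), (INF, 3)]
SPO2_BANDS = [(91, 3), (93, 2), (95, 1), (INF, 0)]
SBP_BANDS = [(90, 3), (100, 2), (110, 1), (219, 0), (INF, 3)]
PULSE_BANDS = [(40, 3), (50, 1), (90, 0), (110, 1), (130, 2), (INF, 3)]
TEMP_BANDS = [(35.0, 3), (36.0, 1), (38.0, 0), (39.0, 1), (INF, 2)]


@dataclass
class Vitals:
    """Hypothetical container for one set of observations."""
    resp_rate: int       # breaths per minute
    spo2: int            # percent
    on_oxygen: bool      # supplemental oxygen in use
    systolic_bp: int     # mmHg
    pulse: int           # beats per minute
    consciousness: str   # "alert", "voice", "pain", or "unresponsive"
    temperature: float   # degrees Celsius


def band(value, bands):
    """Return the score of the first band whose upper bound is >= value."""
    for upper, score in bands:
        if value <= upper:
            return score
    raise ValueError(f"value {value!r} not covered by bands")


def news2_total(v: Vitals) -> int:
    """Sum the seven sub-scores into one aggregate score."""
    total = (
        band(v.resp_rate, RESP_BANDS)
        + band(v.spo2, SPO2_BANDS)
        + band(v.systolic_bp, SBP_BANDS)
        + band(v.pulse, PULSE_BANDS)
        + band(v.temperature, TEMP_BANDS)
    )
    total += 2 if v.on_oxygen else 0
    # Any level other than "alert" scores 3 in this sketch.
    total += 0 if v.consciousness == "alert" else 3
    return total


# Made-up observations mirroring Tasks 2 to 5: score with PAIN, then with Alert.
with_pain = Vitals(18, 97, False, 120, 80, "pain", 37.0)
with_alert = Vitals(18, 97, False, 120, 80, "alert", 37.0)
print(news2_total(with_pain), news2_total(with_alert))
```

With otherwise normal observations, switching the consciousness level from PAIN to Alert lowers the aggregate by exactly the consciousness sub-score, which is the change a participant would expect to see after Task 5.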

| Variable | | Usability Test | Survey |
|---|---|---|---|
| Age | 20–29 years | 0 | 30 |
| | 30–39 years | 2 | 40 |
| | 40–49 years | 3 | 2 |
| Affiliated hospital | Severance Hospital | 5 | 72 |
| Work experience | Less than 3 years | 0 | 1 |
| | More than 3 years, less than 5 years | 0 | 17 |
| | More than 5 years, less than 10 years | 2 | 38 |
| | More than 10 years | 3 | 16 |
| Use experience with similar devices | Less than 3 years | 0 | 1 |
| | More than 3 years, less than 5 years | 1 | 17 |
| | More than 5 years, less than 10 years | 1 | 38 |
| | More than 10 years | 3 | 16 |
| Manufacturer name | Mediana | 2 ¹ | 72 ¹ |
| | Philips | 5 ¹ | 72 ¹ |

| Use Scenario | Success Rate | Comments |
|---|---|---|
| Patient Admit | 80% | (Task 1,2) During the date of birth entry, a period “.” is automatically inserted, causing a duplicate period “..” and resulting in task failure. |
| Waveform/Parameter Setting | 80% | (Task 4) The terminology used in P2 was unfamiliar to the user, leading to task failure. |
| General Setting | 73% | (Task 11) Task failure occurred due to performing the function in service mode. |
| Alarm | 90% | (Task 18) The terminology used in P1 was unfamiliar to the user, leading to task failure. |
| Display Mode | 93% | (Task 42) Task failure occurred due to confusion between the functionalities required in the event review screen and the event review settings screen. |
| Patient Discharge | 100% | - |
| Total | 86% | |
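
The success rates above can be read as successes divided by attempts within each use scenario. The sketch below shows that calculation under stated assumptions: the `success_rates` helper and the attempt records are hypothetical, and the attempt-level definition is not confirmed by the table. The second part uses only the published figures and shows that the Total row (86%) matches the unweighted mean of the six scenario rates.

```python
from collections import defaultdict
from statistics import mean


def success_rates(attempts):
    """Map each scenario to its success rate in percent.

    attempts: iterable of (scenario, succeeded) pairs, one per task attempt.
    """
    counts = defaultdict(lambda: [0, 0])  # scenario -> [successes, attempts]
    for scenario, succeeded in attempts:
        counts[scenario][0] += int(succeeded)
        counts[scenario][1] += 1
    return {s: 100.0 * ok / n for s, (ok, n) in counts.items()}


# Hypothetical records: 5 participants x 2 tasks with 8 successes gives 80%.
example = [("Patient Admit", True)] * 8 + [("Patient Admit", False)] * 2
rates = success_rates(example)

# Scenario rates exactly as published in the table above.
reported = {
    "Patient Admit": 80,
    "Waveform/Parameter Setting": 80,
    "General Setting": 73,
    "Alarm": 90,
    "Display Mode": 93,
    "Patient Discharge": 100,
}
overall = round(mean(reported.values()))
print(rates["Patient Admit"], overall)
```

Because scenarios contain different numbers of tasks, an unweighted mean of scenario rates gives each scenario equal weight regardless of how many tasks it covers.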

| Use Scenario | Mean | SD |
|---|---|---|
| Patient Admit | 5.00 | 1.25 |
| Waveform/Parameter Setting | 5.73 | 0.88 |
| General Setting | 5.20 | 1.20 |
| Alarm | 5.35 | 0.82 |
| Display Mode | 4.96 | 1.28 |
| Patient Discharge | 7.00 | 0.00 |
| Total | 5.49 | 0.88 |

| Variable | | Usability Test |
|---|---|---|
| Age | 20–29 years | 12 |
| | 30–39 years | 10 |
| | 40–49 years | 1 |
| Affiliated hospital | Severance Hospital | 23 |
| Work experience | Less than 3 years | 9 |
| | More than 3 years, less than 5 years | 7 |
| | More than 5 years, less than 10 years | 4 |
| | More than 10 years | 3 |
| Use experience with similar devices | Less than 3 years | 9 |
| | More than 3 years, less than 5 years | 7 |
| | More than 5 years, less than 10 years | 3 |
| | More than 10 years | 4 |
| Manufacturer name (Repetition is acceptable) | Mediana | 7 |
| | Philips | 18 |
| | GE | 1 |

| Use Scenario | Success Rate | Satisfaction Evaluation: Mean | Satisfaction Evaluation: SD |
|---|---|---|---|
| Patient Admit | 98% | 4.68 | 0.63 |
| Waveform/Parameter Setting | 87% | 4.52 | 0.65 |
| General Setting | 87% | 4.48 | 0.67 |
| Alarm | 89% | 4.22 | 0.87 |
| Display Mode | 95% | 4.47 | 0.79 |
| Wave Freeze | 74% | 4.59 | 0.60 |
| EWS | 82% | 3.95 | 1.00 |
| Standby Mode | 100% | 4.91 | 0.28 |
| Patient Discharge | 100% | 5.00 | 1.00 |
| Total | 90% | 4.54 | 0.72 |

| Use Scenario | Mann–Whitney U ¹ | Z | p-Value |
|---|---|---|---|
| Patient Admit | 37 | −2.294 | 0.239 |
| Waveform/Parameter Setting | 27 | −1.887 | 0.071 |
| General Setting | 41 | −1.140 | 0.348 |
| Alarm | 41.5 | −1.009 | 0.348 |
| Display Mode | 50.5 | −0.533 | 0.684 |
| Patient Discharge | 57 | 0.000 | 1 |

| Use Scenario | Mann–Whitney U ¹ | Z | p-Value |
|---|---|---|---|
| Patient Admit | 6 | −3.314 | 0.001 |
| Waveform/Parameter Setting | 21 | −2.204 | 0.027 |
| General Setting | 20 | −2.313 | 0.023 |
| Alarm | 31 | −1.593 | 0.121 |
| Display Mode | 24 | −2.097 | 0.045 |
| Patient Discharge | 57.5 | 0.000 | 1.000 |
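
The U, Z, and p columns above come from Mann–Whitney U tests between two independent groups. The sketch below is a minimal pure-Python version using average ranks for ties and a tie-corrected normal approximation without continuity correction. The function names and sample data are hypothetical, and the p-values it produces need not match the published ones, which may come from an exact method or different software settings. One check that uses only figures from this article: with groups of 5 and 23 participants and identical scores in both, U equals 5 × 23 / 2 = 57.5 and Z is 0, as in the Patient Discharge row.

```python
import math


def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U, z, two-sided p) using the normal approximation."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)  # report the smaller of the two U statistics

    # Tie correction: sum of (t^3 - t) over each group of tied values.
    tie_counts = {}
    for v in pooled:
        tie_counts[v] = tie_counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in tie_counts.values())

    n = n1 + n2
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance <= 0:  # every value tied: no evidence of a difference
        return u, 0.0, 1.0
    z = (u - n1 * n2 / 2) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))
    return u, z, p


# Identical scores in groups of 5 and 23 (made-up data).
print(mann_whitney_u([100] * 5, [100] * 23))
```

For samples as small as five, an exact test is generally preferable to the normal approximation, so this sketch is best treated as a way to understand the columns rather than to reproduce them.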

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Choi, H.; Kim, Y.; Jang, W. Enhancing the Usability of Patient Monitoring Devices in Intensive Care Units: Usability Engineering Processes for Early Warning System (EWS) Evaluation and Design. J. Clin. Med. 2025, 14, 3218. https://doi.org/10.3390/jcm14093218
