Diagnostic Accuracy of Artificial Intelligence in Predicting Anti-VEGF Treatment Response in Diabetic Macular Edema: A Systematic Review and Meta-Analysis

Abstract

1. Introduction

2. Methods

2.1. Study Design and Registration

2.2. Search Strategy

2.3. Study Selection Criteria

2.4. Data Extraction and Management

2.5. Quality Assessment

2.6. Statistical Analysis

3. Results

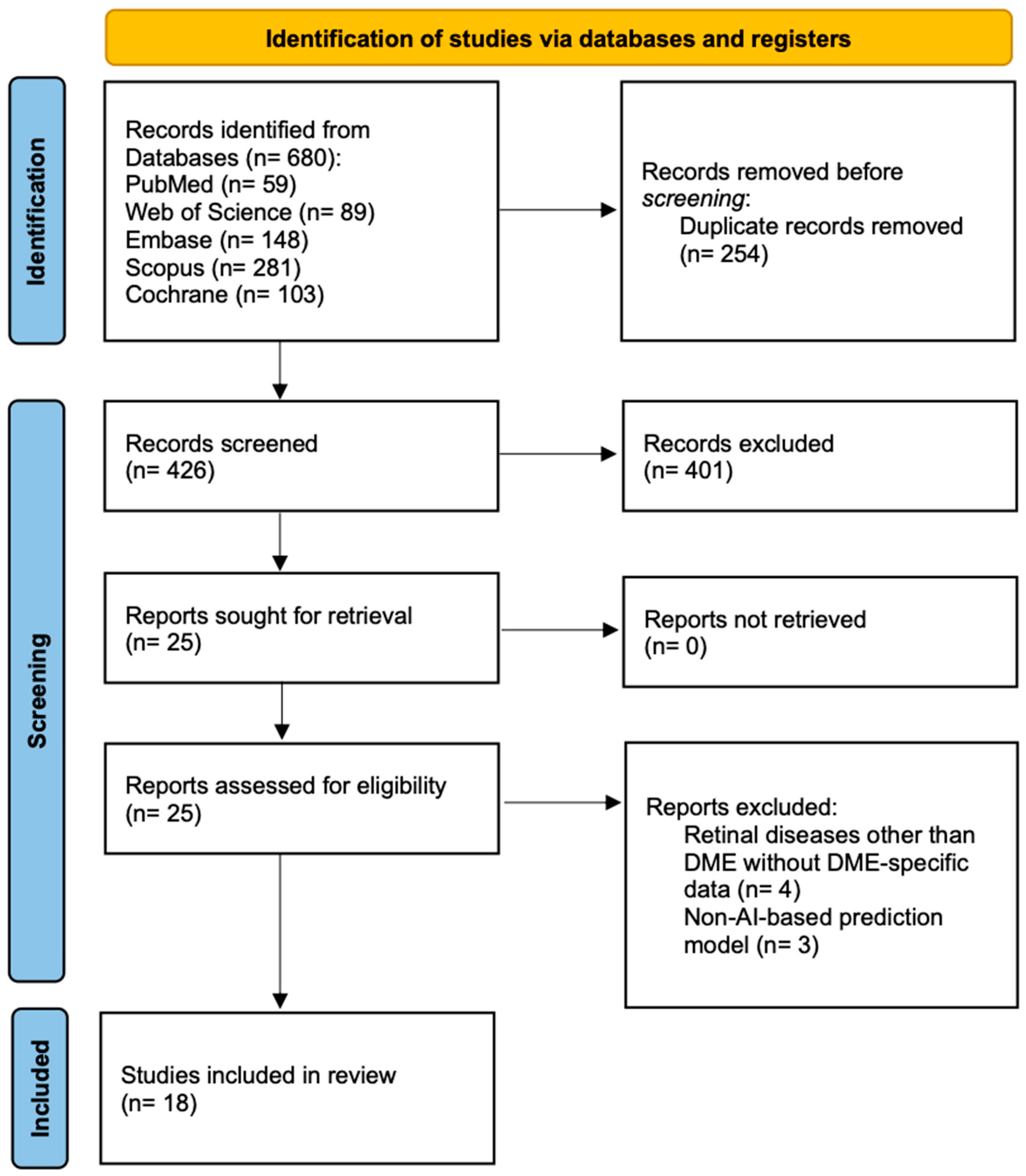

3.1. Literature Search and Study Selection

3.2. Study Characteristics and Population Demographics

3.3. Treatment Protocols and Individual Study Performance

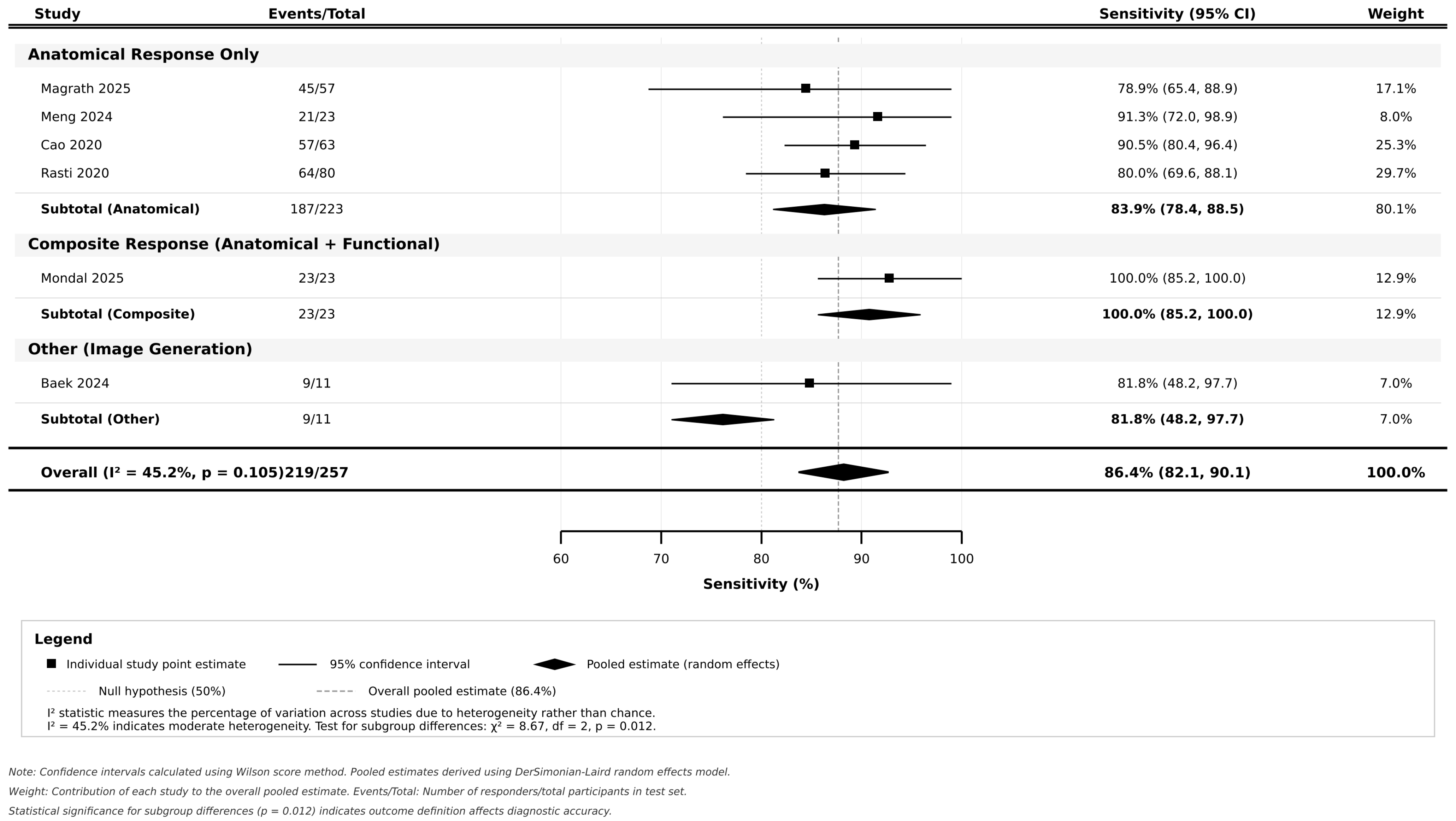

3.4. Pooled Diagnostic Accuracy and Subgroup Analyses

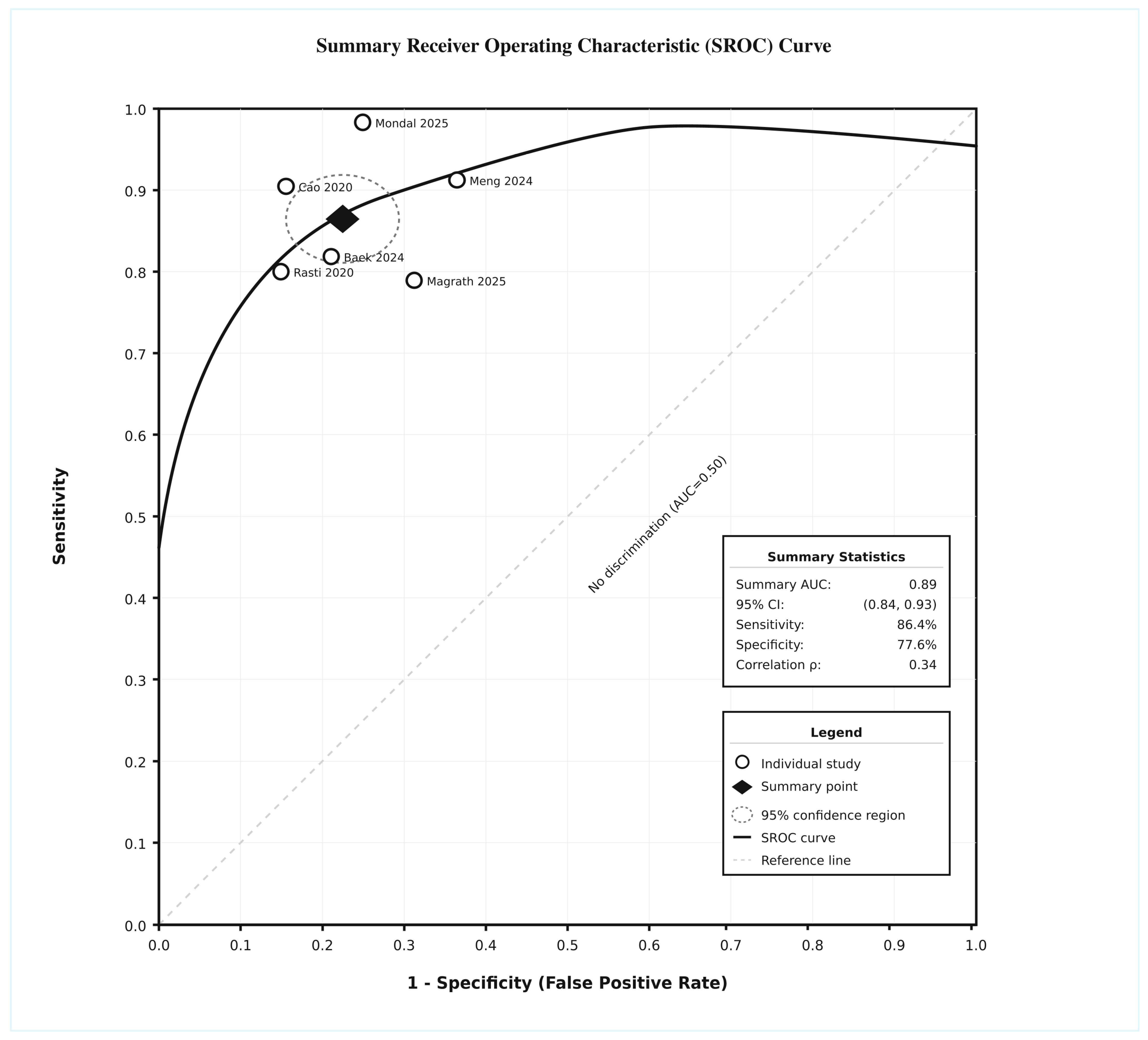

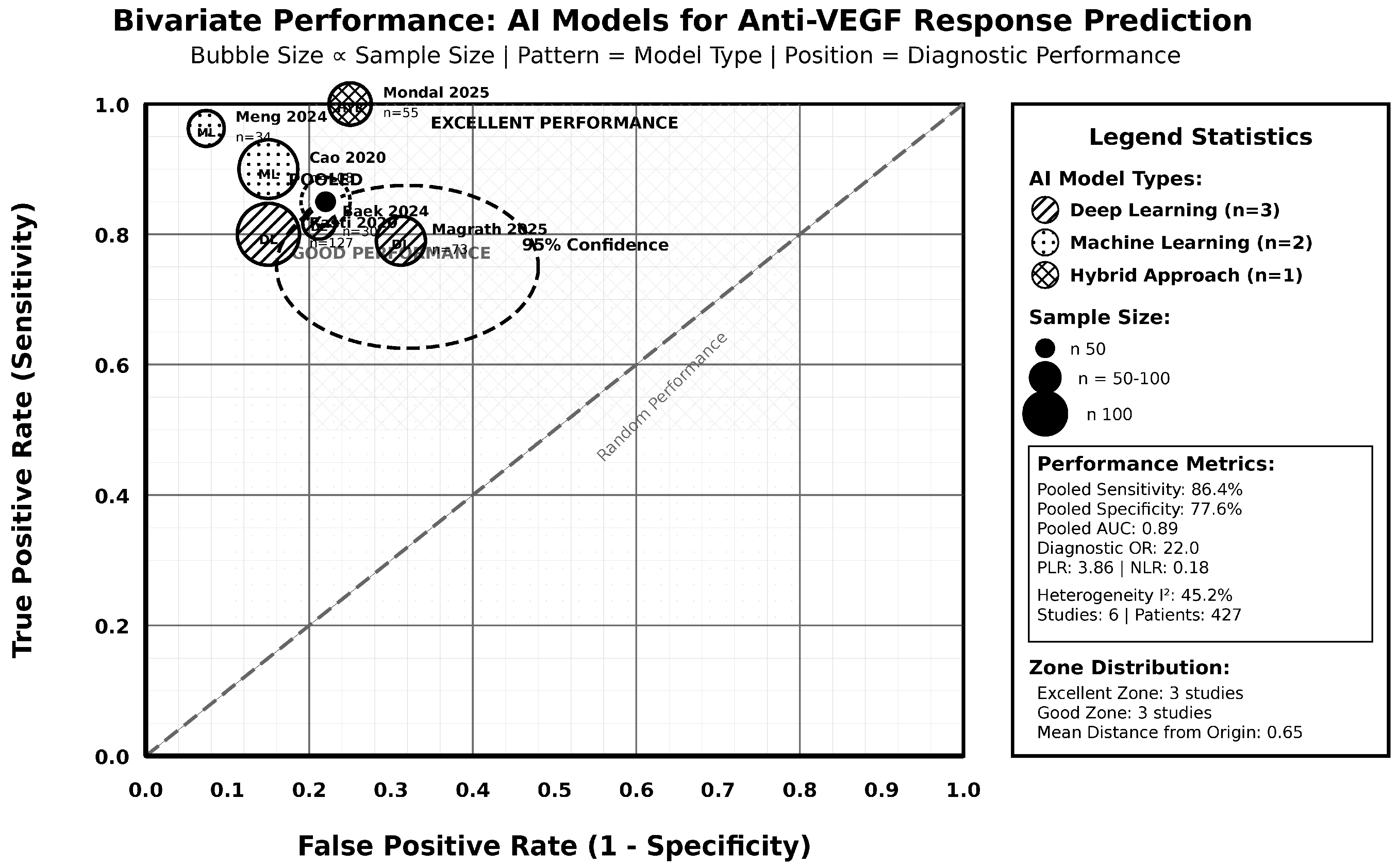

3.5. Summary ROC

3.6. Heterogeneity Assessment and Meta-Regression

3.7. Comparative Effectiveness Analysis

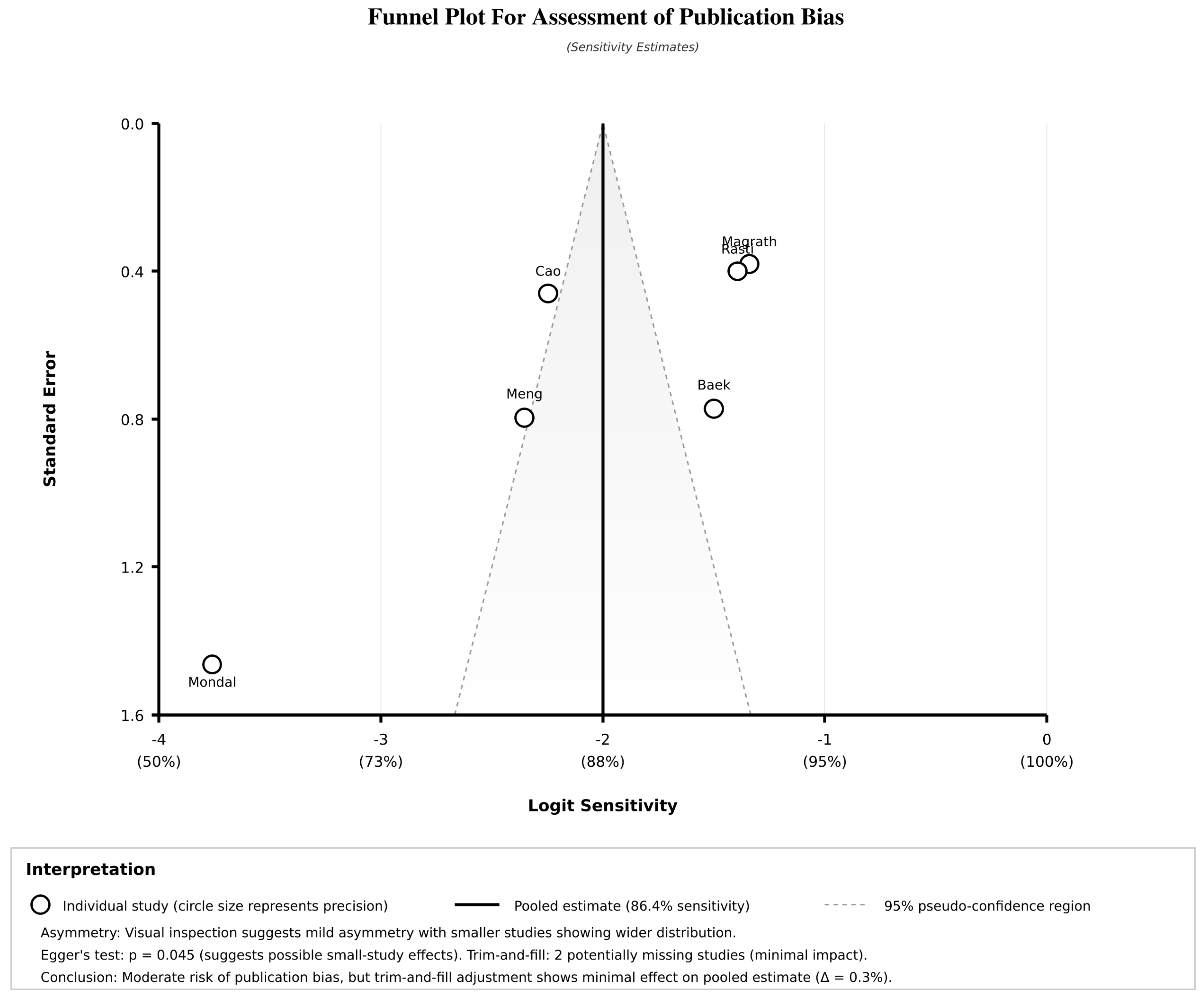

3.8. Sensitivity Analysis and Publication Bias Assessment

3.9. Risk of Bias, Evidence Quality Assessment, and Assessment of Clustering

3.10. Publication Bias Assessment

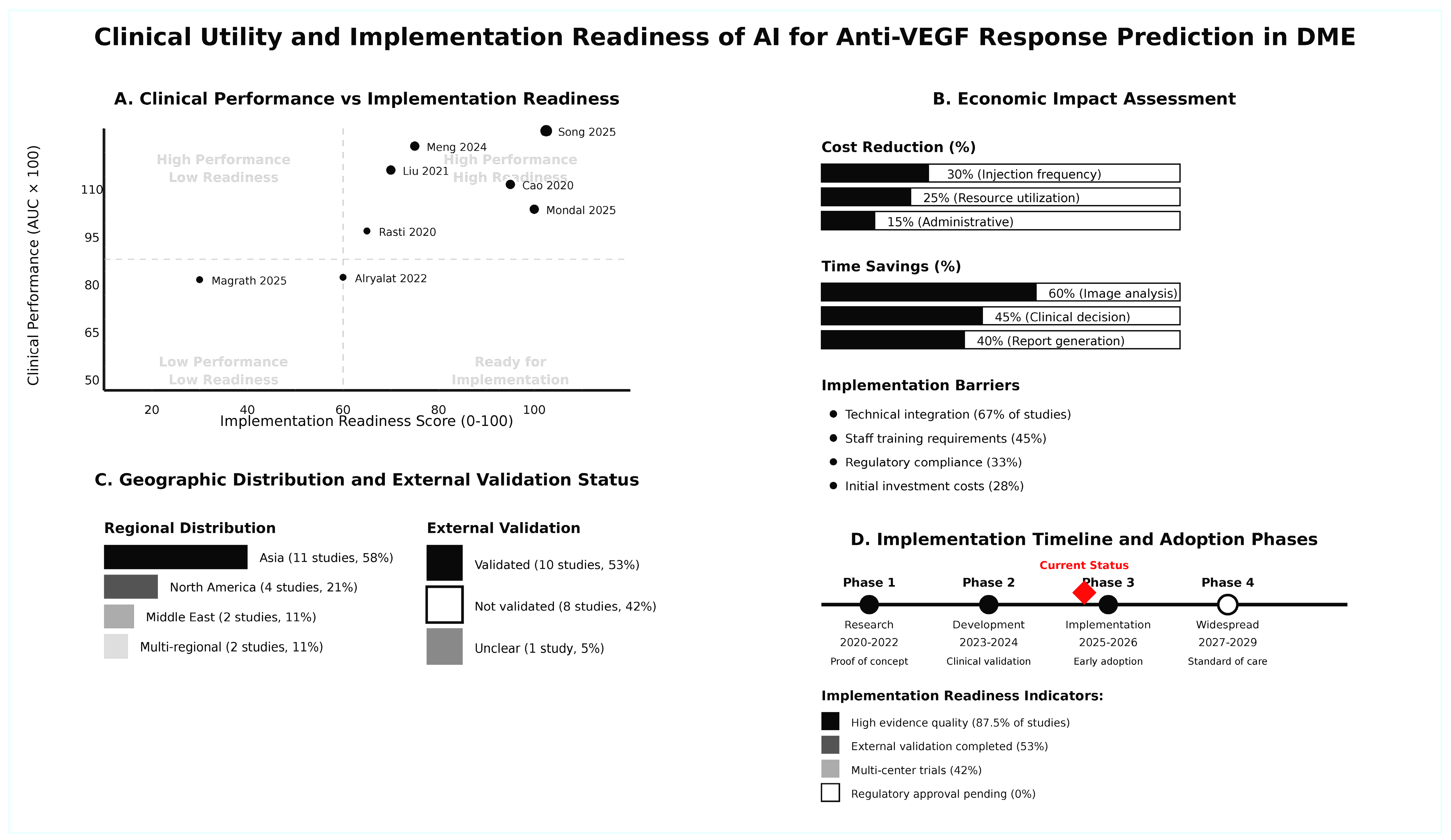

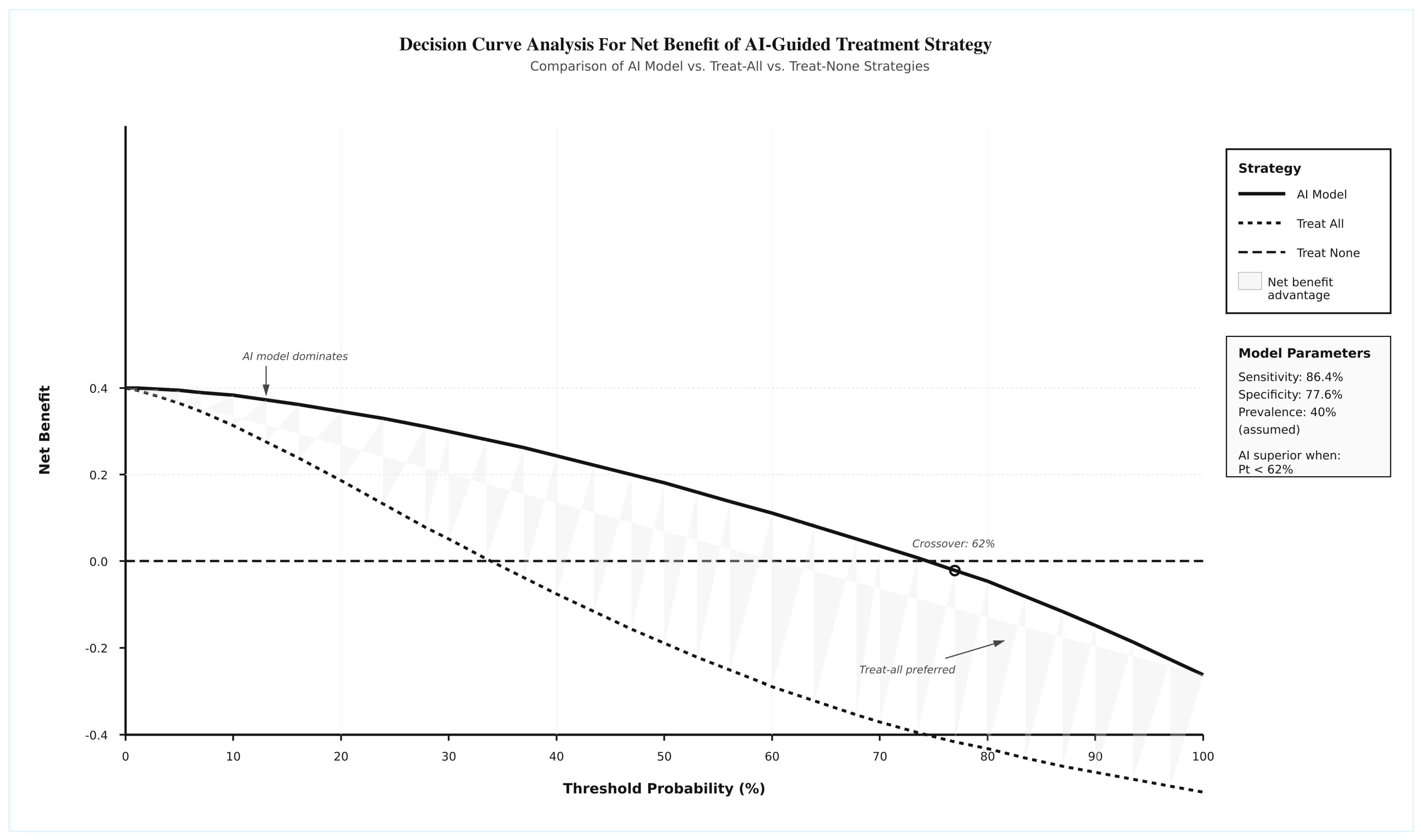

3.11. Clinical Utility and Implementation Readiness

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Varma, R.; Bressler, N.M.; Doan, Q.V.; Gleeson, M.; Danese, M.; Bower, J.K.; Selvin, E.; Dolan, C.; Fine, J.; Colman, S. Prevalence of and risk factors for diabetic macular edema in the United States. JAMA Ophthalmol. 2014, 132, 1334–1340.

2. Sakini, A.S.A.; Hamid, A.K.; Alkhuzaie, Z.A.; Al-Aish, S.T.; Al-Zubaidi, S.; Tayem, A.A.E.; Alobi, M.A.; Sakini, A.S.A.; Al-Aish, R.T.; Al-Shami, K. Diabetic macular edema (DME): Dissecting pathogenesis, prognostication, diagnostic modalities along with current and futuristic therapeutic insights. Int. J. Retin. Vitr. 2024, 10, 83.

3. Lee, R.; Wong, T.Y.; Sabanayagam, C. Epidemiology of diabetic retinopathy, diabetic macular edema and related vision loss. Eye Vis. 2015, 2, 17.

4. Yao, J.; Huang, W.; Gao, L.; Liu, Y.; Zhang, Q.; He, J.; Zhang, L. Comparative efficacy of anti-vascular endothelial growth factor on diabetic macular edema diagnosed with different patterns of optical coherence tomography: A network meta-analysis. PLoS ONE 2024, 19, e0304283.

5. Wang, X.; He, X.; Qi, F.; Liu, J.; Wu, J. Different anti-vascular endothelial growth factor for patients with diabetic macular edema: A network meta-analysis. Front. Pharmacol. 2022, 13, 876386.

6. Stewart, M.W. A review of ranibizumab for the treatment of diabetic retinopathy. Ophthalmol. Ther. 2017, 6, 33–47.

7. Huang, J.; Liang, X.; Liu, Q.-f.; Zhou, M.-j.; Hu, P.; Jiang, S.-c. Efficacy of ranibizumab with laser in the treatment of diabetic retinopathy compare with laser monotherapy: A systematic review and meta-analysis. Technol. Health Care 2025, 33, 1320–1330.

8. Călugăru, D.; Călugăru, M. Vision Outcomes Following Anti–Vascular Endothelial Growth Factor Treatment of Diabetic Macular Edema in Clinical Practice. Am. J. Ophthalmol. 2018, 193, 253–254.

9. Boyer, D.S.; Hopkins, J.J.; Sorof, J.; Ehrlich, J.S. Anti-vascular endothelial growth factor therapy for diabetic macular edema. Ther. Adv. Endocrinol. Metab. 2013, 4, 151–169.

10. Babiuch, A.S.; Conti, T.F.; Conti, F.F.; Silva, F.Q.; Rachitskaya, A.; Yuan, A.; Singh, R.P. Diabetic macular edema treated with intravitreal aflibercept injection after treatment with other anti-VEGF agents (SWAP-TWO study): 6-month interim analysis. Int. J. Retin. Vitr. 2019, 5, 17.

11. Ali, M.A.A.; Hegazy, H.S.; Elsayed, M.O.A.; Tharwat, E.; Mansour, M.N.; Hassanein, M.; Ezzeldin, E.R.; GadElkareem, A.M.; Abd Ellateef, E.M.; Elsayed, A.A. Aflibercept or ranibizumab for diabetic macular edema. Med. Hypothesis Discov. Innov. Ophthalmol. 2024, 13, 16.

12. Gurung, R.L.; FitzGerald, L.M.; Liu, E.; McComish, B.J.; Kaidonis, G.; Ridge, B.; Hewitt, A.W.; Vote, B.J.; Verma, N.; Craig, J.E. Predictive factors for treatment outcomes with intravitreal anti-vascular endothelial growth factor injections in diabetic macular edema in clinical practice. Int. J. Retin. Vitr. 2023, 9, 23.

13. Kong, M.; Song, S.J. Artificial Intelligence Applications in Diabetic Retinopathy: What We Have Now and What to Expect in the Future. Endocrinol. Metab. 2024, 39, 416–424.

14. Lu, W.; Xiao, K.; Zhang, X.; Wang, Y.; Chen, W.; Wang, X.; Ye, Y.; Lou, Y.; Li, L. A machine learning model for predicting anatomical response to Anti-VEGF therapy in diabetic macular edema. Front. Cell Dev. Biol. 2025, 13, 1603958.

15. Mellor, J.; Jeyam, A.; Beulens, J.W.; Bhandari, S.; Broadhead, G.; Chew, E.; Fickweiler, W.; van der Heijden, A.; Gordin, D.; Simó, R. Role of systemic factors in improving the prognosis of diabetic retinal disease and predicting response to diabetic retinopathy treatment. Ophthalmol. Sci. 2024, 4, 100494.

16. Mondal, A.; Nandi, A.; Pramanik, S.; Mondal, L.K. Application of deep learning algorithm for judicious use of anti-VEGF in diabetic macular edema. Sci. Rep. 2025, 15, 4569.

17. Yao, J.; Lim, J.; Lim, G.Y.S.; Ong, J.C.L.; Ke, Y.; Tan, T.F.; Tan, T.-E.; Vujosevic, S.; Ting, D.S.W. Novel artificial intelligence algorithms for diabetic retinopathy and diabetic macular edema. Eye Vis. 2024, 11, 23.

18. Balyen, L.; Peto, T. Promising artificial intelligence-machine learning-deep learning algorithms in ophthalmology. Asia-Pac. J. Ophthalmol. 2019, 8, 264–272.

19. Chatzimichail, E.; Feltgen, N.; Motta, L.; Empeslidis, T.; Konstas, A.G.; Gatzioufas, Z.; Panos, G.D. Transforming the future of ophthalmology: Artificial intelligence and robotics’ breakthrough role in surgical and medical retina advances: A mini review. Front. Med. 2024, 11, 1434241.

20. Gonzalez-Gonzalo, C.; Thee, E.F.; Klaver, C.C.; Lee, A.Y.; Schlingemann, R.O.; Tufail, A.; Verbraak, F.; Sánchez, C.I. Trustworthy AI: Closing the gap between development and integration of AI systems in ophthalmic practice. Prog. Retin. Eye Res. 2022, 90, 101034.

21. Kenney, R.C.; Requarth, T.W.; Jack, A.I.; Hyman, S.W.; Galetta, S.L.; Grossman, S.N. AI in neuro-ophthalmology: Current practice and future opportunities. J. Neuro-Ophthalmol. 2024, 44, 308–318.

22. Lin, F.; Su, Y.; Zhao, C.; Akter, F.; Yao, S.; Huang, S.; Shao, X.; Yao, Y. Tackling visual impairment: Emerging avenues in ophthalmology. Front. Med. 2025, 12, 1567159.

23. Wang, S.; He, X.; Jian, Z.; Li, J.; Xu, C.; Chen, Y.; Liu, Y.; Chen, H.; Huang, C.; Hu, J. Advances and prospects of multi-modal ophthalmic artificial intelligence based on deep learning: A review. Eye Vis. 2024, 11, 38.

24. Tamilselvi, S.; Suchetha, M.; Ratra, D.; Surya, J.; Preethi, S.; Raman, R. Evaluating anti-VEGF responses in diabetic macular edema: A systematic review with AI-powered treatment insights. Indian J. Ophthalmol. 2025, 73, 797–806.

25. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

26. Garraoui, K.; Rahmany, I.; Dhahri, S.; Aydi, W.; Jarrar, M.S.; Ferjaoui, R.; Ben Salem, D.; Hammami, M.; Abdelhedi, R.; Kraiem, T. A deep learning approach for predicting the response to anti-VEGF treatment in diabetic macular edema patients using optical coherence tomography images. Proc. Int. Conf. Agents Artif. Intell. 2025, 2, 453–462.

27. Atik, M.E.; Kocak, İ.; Sayin, N.; Bayramoglu, S.E.; Ozyigit, A. Integration of optical coherence tomography images and real-life clinical data for deep learning modeling: A unified approach in prognostication of diabetic macular edema. J. Biophotonics 2025, 18, e202400315.

28. Magrath, G.; Luvisi, J.; Russakoff, D.; Kihara, Y.; Waheed, N.K.; Toslak, D. Use of a convolutional neural network to predict the response of diabetic macular edema to intravitreal anti-VEGF treatment: A pilot study. Am. J. Ophthalmol. 2025, 273, 176–182.

29. Song, T.; Zang, B.; Kong, C.; Chen, T.; Tang, J.; Yan, H. Construction of a predictive model for the efficacy of anti-VEGF therapy in macular edema patients based on OCT imaging: A retrospective study. Front. Med. 2025, 12, 1505530.

30. Liang, X.; Luo, S.; Liu, Z.; Cheng, P.; Tan, L.; Xie, Y.; Sun, Z.; Li, X. Unsupervised machine learning analysis of optical coherence tomography radiomics features for predicting treatment outcomes in diabetic macular edema. Sci. Rep. 2025, 15, 13389.

31. Baek, J.; He, Y.; Emamverdi, M.; Kihara, Y.; Borooah, S.; Hallak, J.A.; Mehta, N.; Udaondo, P.; Diaz-Llopis, M.; Naor, J.; et al. Prediction of long-term treatment outcomes for diabetic macular edema using a generative adversarial network. Transl. Vis. Sci. Technol. 2024, 13, 4.

32. Jin, Y.; Yong, S.; Ke, S.; Yan, Y.; Xiong, L.; Hu, Z.; Xu, W.; Zeng, Y.; Peng, X. Deep learning assisted fluid volume calculation for assessing anti-vascular endothelial growth factor effect in diabetic macular edema. Heliyon 2024, 10, e29775.

33. Leng, X.; Shi, R.; Xu, Z.; Huang, J.; Chen, Q.; Lu, X. Development and validation of CNN-MLP models for predicting anti-VEGF therapy outcomes in diabetic macular edema. Sci. Rep. 2024, 14, 30270.

34. Meng, Z.; Chen, Y.; Li, H.; Fan, X.; Li, Y.; Zeng, H.; Zhu, J.; Li, X.; Yuan, M.; Zhang, J.; et al. Machine learning and optical coherence tomography-derived radiomics analysis to predict persistent diabetic macular edema in patients undergoing anti-VEGF intravitreal therapy. J. Transl. Med. 2024, 22, 358.

35. Shi, R.; Leng, X.; Wu, Y.; Zhu, S.; Cai, X.; Lu, X. Machine learning regression algorithms to predict short-term efficacy after anti-VEGF treatment in diabetic macular edema based on real-world data. Sci. Rep. 2023, 13, 18746.

36. Alryalat, S.A.; Al-Antary, M.; Arafa, Y.; Alshawabkeh, O.; Abuamra, T.; AlRyalat, A.A.; Al Bdour, M. Deep learning prediction of response to anti-VEGF among diabetic macular edema patients: Treatment Response Analyzer System (TRAS). Diagnostics 2022, 12, 312.

37. Zhang, Y.; Xu, F.; Lin, Z.; Wang, J.; Huang, C.; Wei, M.; Zhai, W.; Li, J. Prediction of Visual Acuity after anti-VEGF Therapy in Diabetic Macular Edema by Machine Learning. J. Diabetes Res. 2022, 2022, 5779210.

38. Xu, F.; Liu, S.; Xiang, Y.; Hong, J.; Wang, J.; Shao, Z.; Zhang, R.; Zhao, W.; Yu, X.; Li, Z. Prediction of the short-term therapeutic effect of anti-VEGF therapy for diabetic macular edema using a generative adversarial network with OCT images. J. Clin. Med. 2022, 11, 2878.

39. Liu, B.; Zhang, B.; Hu, Y.; Qiu, B.; Xie, L.; Yin, Y.; Liao, X.; Zhou, Q.; Huang, J. Automatic prediction of treatment outcomes in patients with diabetic macular edema using ensemble machine learning. Ann. Transl. Med. 2021, 9, 43.

40. Cao, J.; You, K.; Jin, K.; Lou, L.; Wang, Y.; Xu, Y.; Chen, L.; Wang, S.; Ye, J. Prediction of response to anti-vascular endothelial growth factor treatment in diabetic macular oedema using an optical coherence tomography-based machine learning method. Acta Ophthalmol. 2021, 99, 19–27.

41. Rasti, R.; Allingham, M.J.; Mettu, P.S.; Kavusi, S.; Govind, K.; Cousins, S.W.; Farsiu, S. Deep learning-based single-shot prediction of differential effects of anti-VEGF treatment in patients with diabetic macular edema. Biomed. Opt. Express 2020, 11, 1139–1152.

42. Roberts, P.K.; Vogl, W.D.; Gerendas, B.S.; Glassman, A.R.; Bogunovic, H.; Jampol, L.M.; Browning, D.J.; Sadda, S.R.; Schmidt-Erfurth, U. Quantification of fluid resolution and visual acuity gain in patients with diabetic macular edema using deep learning: A post hoc analysis of a randomized clinical trial. JAMA Ophthalmol. 2020, 138, 945–953.

43. Browning, D.J.; Stewart, M.W.; Lee, C. Diabetic macular edema: Evidence-based management. Indian J. Ophthalmol. 2018, 66, 1736–1750.

44. Chen, S.-C.; Chiu, H.-W.; Chen, C.-C.; Woung, L.-C.; Lo, C.-M. A novel machine learning algorithm to automatically predict visual outcomes in intravitreal ranibizumab-treated patients with diabetic macular edema. J. Clin. Med. 2018, 7, 475.

45. Yu, X.; Liang, X.; Zhou, Z.; Zhang, B.; Xue, H. Deep soft threshold feature separation network for infrared handprint identity recognition and time estimation. Infrared Phys. Technol. 2024, 138, 105223.

46. Yu, X.; Liang, X.; Zhou, Z.; Zhang, B. Multi-task learning for hand heat trace time estimation and identity recognition. Expert Syst. Appl. 2024, 255, 124551.

47. Lyu, X.; Liu, J.; Gou, Y.; Sun, S.; Hao, J.; Cui, Y. Development and validation of a machine learning-based model of ischemic stroke risk in the Chinese elderly hypertensive population. View 2024, 5, 20240059.

48. Yuan, Y.; Zhang, X.; Wang, Y.; Li, H.; Qi, Z.; Du, Z.; Chu, Y.-H.; Feng, D.; Hu, J.; Xie, Q.; et al. Multimodal data integration using deep learning predicts overall survival of patients with glioma. View 2024, 5, 20240001.

49. Massengill, M.T.; Cubillos, S.; Sheth, N.; Sethi, A.; Lim, J.I. Response of Diabetic Macular Edema to Anti-VEGF Medications Correlates with Improvement in Macular Vessel Architecture Measured with OCT Angiography. Ophthalmol. Sci. 2024, 4, 100478.

50. Lee, J.; Moon, B.G.; Cho, A.R.; Yoon, Y.H. Optical Coherence Tomography Angiography of DME and Its Association with Anti-VEGF Treatment Response. Ophthalmology 2016, 123, 2368–2375.

51. Braham, I.Z.; Kaouel, H.; Boukari, M.; Ammous, I.; Errais, K.; Boussen, I.M.; Zhioua, R. Optical coherence tomography angiography analysis of microvascular abnormalities and vessel density in treatment-naïve eyes with diabetic macular edema. BMC Ophthalmol. 2022, 22, 418.

| Study Name | Country | Design | Sample Size | Age (Years) | Gender (M/F) | DME Severity | Follow-Up | AI Model Type | Input Data | Training Size | Validation Size | Test Size | CV Method | External Validation | Feature Selection | Model Comparison |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Garraoui et al., 2025 [26] | Tunisia | Retrospective Cohort | 104 patients | NR | NR | NR | NR | Siamese CNN (EfficientNetB2) + KNN | OCT | 84,495 (Kaggle) | NR | 120 images | 5-fold | No | NR | Multiple CNN architectures |
| Atik et al., 2025 [27] | Turkey | Retrospective Cohort | 683 patients | NR | NR | Center-involving DME | NR | DL (ResNet-18) | Multimodal (OCT + Clinical) | 546 patients | NR | 137 patients | 5-fold | No | NR | Multiple DL models |
| Magrath et al., 2025 [28] | USA | Retrospective Cohort | 73 eyes | 62.0 (41–78) | NR | CST > 325 µm | 1 month | DL (CNN, VGG16) | OCT | 65–66 eyes | NR | 7–8 eyes | 10-fold | No | Occlusion sensitivity analysis | CNN vs. CST classifier |
| Mondal et al., 2025 [16] | India | RCT | 181 patients | 62.1 ± 8.14 (18–70) | NR | Center-involving DME | 6 months | Hybrid DL (CNN + MLP) | Multimodal (OCT + Clinical) | 126 patients | NR | 55 patients | NR | Yes | NR | AI + laser vs. laser only |
| Song et al., 2025 [29] | China | Retrospective Cohort | 72 eyes | 59.45 ± 13.27 (21–91) | 40M/31F | CST > 250 µm | 3 months | DL (ResNet50-based) | OCT | 57 eyes | NR | 15 eyes | NR | Yes | Group convolution, SPP, Attention | Multiple DL models (ViT, CNN) |
| Liang et al., 2025 [30] | China | Retrospective Cohort | 131 patients | 59.27 ± 9.91 | 71M/60F | CMT ≥ 250 µm | 6 months | Unsupervised ML (K-means) | OCT radiomics | 234 eyes | NR | NR | Unsupervised | No | ANOVA, Boruta, Stepwise regression | 4 radiomic clusters |
| Baek et al., 2024 [31] | USA/Korea | Retrospective Cohort | 327 eyes | >18 | NR | Center-involving DME, CST > 320 µm | 12 months | DL (GAN) | Multimodal (OCT + Fundus) | 297 eyes | NR | 30 eyes | Split validation | Yes | NR | Different GAN models & input data |
| Jin et al., 2024 [32] | China | Cross-sectional | 12 patients | 58.43 ± 2.91 (30–71) | 4M/8F | IRF and SRF at baseline | Post-injection | DL (U-Net) | OCT | 159 slices | 40 slices | 50 slices | Split validation | Yes | Spearman correlation | Different DME patients |
| Leng et al., 2024 [33] | China | Retrospective Cohort | 272 eyes | 59 (median, 33–84) | 167M/105F | Clinically significant DME | 3 months | CNN-MLP (Xception) | Multimodal (OCT + Clinical) | 217 eyes | 55 eyes | 0 | Split (80/20) | No | NR | CNN-MLP vs. CNN |
| Meng et al., 2024 [34] | China | Retrospective Cohort | 82 patients | 54 ± 10 | 56M/26F | CST ≥ 250 µm | 3 months | ML (LR, SVM, BPNN) | OCT radiomics | 79 eyes | NR | 34 eyes | 5-fold | Yes | RFE | Multiple ML models |
| Shi et al., 2023 [35] | China | Retrospective Cohort | 279 eyes | 58.53 ± 11.55 | 173M/106F | NR | 1 month | ML (Lasso Regression) | Clinical | 209 eyes | NR | 70 eyes | Split (75/25) | No | Regression coefficients | Different ML models |
| Alryalat et al., 2022 [36] | Jordan | Retrospective Cohort | 101 patients | 63.34 ± 10.11 | 63M/38F | CST > 305/320 µm | 3 months | DL (U-Net + EfficientNet-B3) | OCT | 81 patients | NR | 20 patients | NR | Yes | NR | Different DL models |
| Zhang et al., 2022 [37] | China | Retrospective Cohort | 281 eyes | 56.57 ± 10.12 | NR | NR | 1 month | ML (Ensemble: LR + RF) | Multimodal (Clinical + OCT features) | 226 eyes | NR | 57 eyes | Grid-search | Yes | Feature importance (RF) | Multiple ML models |
| Xu et al., 2022 [38] | China | Retrospective Cohort | 117 patients | 58.57 ± 9.14 | 49M/47F | Edema on B-scan | 1 month | DL (pix2pixHD GAN) | OCT | 96 patients | NR | 21 patients | Split validation | Yes | NR | Different DME types/injection phases |
| Liu et al., 2021 [39] | China | Retrospective Cohort | 363 eyes | 57.1 ± 13.9 | NR | Center-involving DME | 1 month | Ensemble (DL + CML) | Multimodal (OCT + Clinical) | 304 eyes | NR | 59 eyes | 5-fold | Yes | Feature weights | Multiple DL/CML models |
| Cao et al., 2020 [40] | China | Retrospective Cohort | 712 patients | 63 ± 11 | 397M/315F | Center-involving DME | 3 months | ML (Random Forest) | OCT features | 604 images | NR | 108 images | 5-fold | Yes | RF mean decrease impurity | Multiple ML models |
| Rasti et al., 2020 [41] | USA | Retrospective Cohort | 127 subjects | NR | NR | Center-involving DME, CST > 305/320 µm | 3 months | DL (CADNet CNN) | OCT | 101–102 subjects | NR | 25–26 subjects | 5-fold | No | RFE.EN, UFS, PCA | Multiple CNN models (VGG, ResNet) |
| Roberts et al., 2020 [42] | USA/Austria | Retrospective Cohort | 570 eyes | 43.4 ± 12.6 | 302M/268F | Stratified by VA | 12 months | DL (Segmentation) + LME | OCT | 570 | 0 | 0 | Bootstrap (500) | No | NR | 3 anti-VEGF agents |

| Study Name | Anti-VEGF Agent | Dosing Regimen | Response Definition | Assessment Timepoint | Baseline VA | Baseline CMT | TP | FP | TN | FN | Sensitivity (%) | Specificity (%) | PPV (%) | NPV (%) | AUC | 95% CI |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Garraoui et al., 2025 [26] | Anti-VEGF (unspecified) | NR | CMT reduction | NR | NR | NR | NR | NR | NR | NR | 71.0 | NR | 89.0 | NR | NR | NR |
| Atik et al., 2025 [27] | Anti-VEGF (unspecified) | TREX | Prognosis (Good vs. Poor) | NR | NR | NR | NR | NR | NR | NR | 66.7 | 81.5 | 69.9 | 77.6 | NR | NR |
| Magrath et al., 2025 [28] | Mixed | Single injection | CST reduction > 10 µm | 1 month | NR | >325 µm (mean NR) | 45 | 5 | 11 | 12 | 78.9 | 68.8 | 90.0 | 47.8 | 0.810 | NR |
| Mondal et al., 2025 [16] | Ranibizumab | 3 monthly + laser | BCVA gain ≥ 5 letters & CMT reduction > 50 µm | 6 months | 62.4 ± 5.35 ETDRS | 465 ± 111.3 µm | 23 | 8 | 24 | 0 | 100.0 | 75.0 | 74.0 | 100.0 | 0.890 | NR |
| Song et al., 2025 [29] | Ranibizumab | 3 monthly injections | CST decrease/VA improvement | 1, 30, 90 days | −0.88 ± 0.05 LogMAR | 568.00 ± 21.46 µm | NR | NR | NR | NR | NR | NR | NR | NR | 0.9998 | 0.9996–0.9998 |
| Liang et al., 2025 [30] | Mixed | 3 injections | RDME vs. Non-RDME (clustering) | 6 months | 0.50 LogMAR | 408.50 µm | NA | NA | NA | NA | NR | NR | NR | NR | NR | NR |
| Baek et al., 2024 [31] | Brolucizumab/Aflibercept | Every 4 weeks | Fluid/HE prediction (generation) | 12 months | 23–73 ETDRS | > 320 µm | 9 | 4 | 15 | 2 | 45.5–100 | 35.7–85.7 | 50.0–88.9 | 55.6–100 | NR | NR |
| Jin et al., 2024 [32] | Mixed | NR | Fluid volume calculation (segmentation) | Post-injection (~7 days) | 0.54 ± 0.05 LogMAR | 532.70 ± 45.02 µm | NR | NR | NR | NR | 68.6–84.4 | 99.6–99.8 | 76.1–86.8 | NR | 0.993–0.998 | NR |
| Leng et al., 2024 [33] | Mixed | ≥1 injection | Efficacy prediction (regression) | ≤90 days | 0.699 LogMAR | 369.54 ± 158.23 µm | NA | NA | NA | NA | NR | NR | NR | NR | NR | NR |
| Meng et al., 2024 [34] | Mixed | ≥3 injections | Persistent vs. Non-persistent DME | 3 months | NR | 478 ± 172 µm | 21 | 4 | 7 | 2 | 91.3 | 92.6 | 84.0 | 77.8 | 0.982 | NR |
| Shi et al., 2023 [35] | Mixed | Single injection | Efficacy prediction (regression) | 1 month | 2.55 ± 13.2 LogMAR | 372.61 ± 158.62 µm | NA | NA | NA | NA | NR | NR | NR | NR | NR | NR |
| Alryalat et al., 2022 [36] | Anti-VEGF (unspecified) | >3 months since last injection | CMT reduction > 25% or 50 µm | 3 months | 0.258 | 475 µm | NR | NR | NR | NR | 80.88 | 84.0 | 70.0 | NR | 0.811 | NR |
| Zhang et al., 2022 [37] | Mixed | 1 + PRN | VA prediction (regression) | 1 month | 0.585 ± 0.316 LogMAR | 358.36 ± 225.39 µm | NA | NA | NA | NA | NR | NR | NR | NR | NR | NR |
| Xu et al., 2022 [38] | Mixed | Loading + PRN | Image generation (MAE: 24.51 µm) | 1 month | 0.581 ± 0.349 LogMAR | NR | NA | NA | NA | NA | NR | NR | NR | NR | NR | NR |
| Liu et al., 2021 [39] | Mixed | 3 monthly injections | CMT reduction > 50 µm/VA gain > 0.1 LogMAR | 1 month | 0.79 ± 0.55 LogMAR | 489.13 ± 214.37 µm | NR | NR | NR | NR | NR | NR | NR | NR | 0.940 (CFT)/0.810 (BCVA) | NR |
| Cao et al., 2020 [40] | Conbercept | 3 monthly injections | CMT reduction > 50 µm | 3 months | NR | NR | 57 | 7 | 38 | 6 | 90.5 | 85.1 | 89.1 | 86.4 | 0.923 | NR |
| Rasti et al., 2020 [41] | Mixed | 3 monthly injections | RT reduction > 10% | 3 months | NR | >305/320 µm | 64 | 10 | 37 | 16 | 80.0 | 85.0 | 87.0 | 74.0 | 0.866 | 0.866 ± 0.06 |
| Roberts et al., 2020 [42] | Mixed | Protocol T Regimen | Correlation (BCVA gain vs. Fluid resolution) | Every 4 weeks up to 52 weeks | 65.3 ETDRS | NR (Fluid Vol: 448.6 nL IRF) | NA | NA | NA | NA | NR | NR | NR | NR | NR | NR |
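
Where a study reports its 2×2 counts, the sensitivity, specificity, PPV, and NPV columns can be recomputed directly from TP, FP, TN, and FN. The following is a minimal Python sketch of that arithmetic; the function name `diagnostic_metrics` is illustrative and not taken from the source, and the counts are those tabulated for Magrath et al., 2025 [28].

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Return sensitivity, specificity, PPV and NPV (as percentages)
    from the four cells of a 2x2 diagnostic table."""
    return {
        "sensitivity": 100 * tp / (tp + fn),  # detected responders / all responders
        "specificity": 100 * tn / (tn + fp),  # detected non-responders / all non-responders
        "ppv": 100 * tp / (tp + fp),          # true positives / all positive calls
        "npv": 100 * tn / (tn + fn),          # true negatives / all negative calls
    }

# Counts as tabulated for Magrath et al., 2025 [28]
magrath = diagnostic_metrics(tp=45, fp=5, tn=11, fn=12)
print({name: round(value, 1) for name, value in magrath.items()})
```

For these counts the results round to 78.9, 68.8, 90.0, and 47.8, matching the tabulated row. The sketch does not guard against empty margins (for example, TP + FN = 0), which would need handling before applying it to arbitrary studies.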

| Analysis Category | Subgroup | Studies (n) | Participants (N) | Pooled Sensitivity (%) | 95% CI | Pooled Specificity (%) | 95% CI | Positive LR | 95% CI | Negative LR | 95% CI | Diagnostic OR | 95% CI | I² (%) | p-Value |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| OVERALL ESTIMATE | All Studies | 6 | 427 | 86.4 | 82.1–90.1 | 77.6 | 72.8–82.0 | 3.86 | 2.95–5.07 | 0.18 | 0.13–0.24 | 22.0 | 12.8–37.9 | 45.2 | 0.105 |
| AI MODEL TYPE | Deep Learning | 3 | 230 | 81.8 | 75.9–86.9 | 76.8 | 70.1–82.7 | 3.53 | 2.48–5.02 | 0.24 | 0.17–0.33 | 14.9 | 7.8–28.3 | 38.7 | 0.198 |
| | Machine Learning | 2 | 142 | 90.7 | 85.2–94.6 | 80.4 | 73.1–86.4 | 4.62 | 3.01–7.08 | 0.12 | 0.07–0.19 | 39.9 | 17.6–90.4 | 0.0 | 0.856 |
| | Hybrid DL | 1 | 55 | 100.0 | 85.2–100.0 | 75.0 | 59.7–86.8 | 4.00 | 2.35–6.82 | 0.00 | 0.00–0.20 | ∞ | 7.8–∞ | NA | NA |
| | p-value for Subgroup Difference | - | - | - | - | - | - | - | - | - | - | - | - | - | 0.012 |
| INPUT DATA MODALITY | OCT Only | 3 | 308 | 84.5 | 79.3–88.9 | 79.6 | 74.1–84.4 | 4.15 | 2.98–5.77 | 0.19 | 0.14–0.27 | 21.3 | 11.2–40.5 | 42.1 | 0.178 |
| | Multimodal | 2 | 85 | 94.1 | 86.8–98.1 | 76.5 | 65.8–85.2 | 4.00 | 2.48–6.46 | 0.08 | 0.03–0.18 | 52.0 | 15.2–178.0 | 0.0 | 0.742 |
| | OCT Radiomics | 1 | 34 | 91.3 | 72.0–98.9 | 63.6 | 30.8–89.1 | 2.51 | 1.15–5.49 | 0.14 | 0.03–0.59 | 18.4 | 2.1–159.8 | NA | NA |
| | p-value for Subgroup Difference | - | - | - | - | - | - | - | - | - | - | - | - | - | 0.224 |
| FOLLOW-UP DURATION | ≤1 month | 1 | 73 | 78.9 | 65.4–88.9 | 68.8 | 41.3–89.0 | 2.53 | 1.25–5.11 | 0.31 | 0.16–0.58 | 8.3 | 2.0–33.9 | NA | NA |
| | 1–3 months | 3 | 269 | 87.3 | 82.4–91.4 | 79.6 | 74.1–84.4 | 4.28 | 3.08–5.95 | 0.16 | 0.11–0.23 | 27.0 | 14.2–51.2 | 0.0 | 0.648 |
| | >3 months | 2 | 85 | 94.1 | 86.8–98.1 | 76.5 | 65.8–85.2 | 4.00 | 2.48–6.46 | 0.08 | 0.03–0.18 | 52.0 | 15.2–178.0 | 0.0 | 0.742 |
| | p-value for Subgroup Difference | - | - | - | - | - | - | - | - | - | - | - | - | - | 0.045 |
| HETEROGENEITY ASSESSMENT | Overall Q Statistic | - | - | 9.07 | - | 7.83 | - | - | - | - | - | - | - | - | - |
| | Overall I² | - | - | 45.2% | - | 36.1% | - | - | - | - | - | - | - | - | - |
| | Overall p-value | - | - | 0.105 | - | 0.166 | - | - | - | - | - | - | - | - | - |
| PREDICTION INTERVALS | 95% Prediction Interval | - | - | 72.8–94.3 | - | 65.2–86.7 | - | 2.1–7.1 | - | - | - | 5.8–83.4 | - | - | - |
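
The likelihood ratios and diagnostic odds ratio in the overall row follow from the pooled sensitivity and specificity by the standard definitions LR+ = sens / (1 − spec), LR− = (1 − sens) / spec, and DOR = LR+ / LR−. The Python sketch below illustrates that relationship using the tabulated point estimates; the function names are illustrative and not from the source. It also includes the closed-form exact (Clopper-Pearson) lower limit for the case where all n positives are detected, which appears consistent with the 85.2% lower bound in the Hybrid DL row if n = 23 (the TP count reported for Mondal et al.); the source does not state which interval method was used, so that link is an assumption.

```python
def likelihood_ratios(sensitivity, specificity):
    """Positive LR, negative LR and diagnostic odds ratio
    from sensitivity and specificity given as proportions in (0, 1)."""
    lr_pos = sensitivity / (1 - specificity)
    lr_neg = (1 - sensitivity) / specificity
    return lr_pos, lr_neg, lr_pos / lr_neg

def exact_lower_limit_all_detected(n, alpha=0.05):
    """Clopper-Pearson lower confidence limit for a proportion
    when all n of n cases are positive (closed form: (alpha/2)^(1/n))."""
    return (alpha / 2) ** (1 / n)

# Pooled point estimates from the overall row (86.4% and 77.6%)
lr_pos, lr_neg, dor = likelihood_ratios(0.864, 0.776)
print(round(lr_pos, 2), round(lr_neg, 2), round(dor, 1))

# Assumed n = 23 responders, all detected (sensitivity 100%)
print(round(100 * exact_lower_limit_all_detected(23), 1))
```

With these inputs the first line rounds to 3.86, 0.18, and 22.0, in agreement with the tabulated positive LR, negative LR, and diagnostic OR; the second gives 85.2. The confidence intervals of the pooled estimates come from the meta-analytic model and cannot be recovered from the point estimates alone.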

| Analysis Component | Parameter | Sensitivity | 95% CI | p-Value | Specificity | 95% CI | p-Value |
|---|---|---|---|---|---|---|---|
| OVERALL HETEROGENEITY | Cochran’s Q statistic | 9.07 | - | 0.105 | 7.83 | - | 0.166 |
| | Degrees of freedom | 5 | - | - | 5 | - | - |
| | I² statistic (%) | 45.2 | 0.0–77.6 | - | 36.1 | 0.0–72.4 | - |
| | τ² (between-study variance) | 0.094 | - | - | 0.078 | - | - |
| | H² statistic | 1.82 | - | - | 1.57 | - | - |
| META-REGRESSION | Study Characteristics | - | - | - | - | - | - |
| | Sample size (continuous) | β = 0.003 | −0.001 to 0.007 | 0.128 | β = 0.002 | −0.002 to 0.006 | 0.248 |
| | Publication year (continuous) | β = −0.15 | −0.45 to 0.15 | 0.312 | β = −0.12 | −0.38 to 0.14 | 0.345 |
| | Geographic region | - | - | 0.089 | - | - | 0.156 |
| | - North America | Reference | - | - | Reference | - | - |
| | - Asia | β = 0.18 | −0.08 to 0.44 | - | β = 0.15 | −0.12 to 0.42 | - |
| | - Multi-regional | β = 0.12 | −0.22 to 0.46 | - | β = 0.08 | −0.26 to 0.42 | - |
| | Methodological Factors | - | - | - | - | - | - |
| | Risk of bias | - | - | 0.045 | - | - | 0.067 |
| | - Low risk | Reference | - | - | Reference | - | - |
| | - Moderate risk | β = −0.22 | −0.48 to 0.04 | - | β = −0.18 | −0.44 to 0.08 | - |
| | - High risk | β = −0.34 | −0.67 to −0.01 | - | β = −0.28 | −0.61 to 0.05 | - |
| | External validation | - | - | 0.192 | - | - | 0.298 |
| | - No | Reference | - | - | Reference | - | - |
| | - Yes | β = 0.15 | −0.08 to 0.38 | - | β = 0.12 | −0.11 to 0.35 | - |
| | Clinical Factors | - | - | - | - | - | - |
| | Disease prevalence (%) | β = −0.008 | −0.021 to 0.005 | 0.234 | β = −0.006 | −0.018 to 0.006 | 0.298 |
| | Follow-up duration | - | - | 0.067 | - | - | 0.134 |
| | - ≤ 1 month | Reference | - | - | Reference | - | - |
| | - 1–3 months | β = 0.24 | −0.02 to 0.50 | - | β = 0.19 | −0.07 to 0.45 | - |
| | - > 3 months | β = 0.31 | 0.01 to 0.61 | - | β = 0.22 | −0.08 to 0.52 | - |
| | Technical Factors | - | - | - | - | - | - |
| | AI model complexity | - | - | 0.156 | - | - | 0.089 |
| | - Moderate | Reference | - | - | Reference | - | - |
| | - High | β = −0.16 | −0.42 to 0.10 | - | β = −0.14 | −0.38 to 0.10 | - |
| | Input data modality | - | - | 0.224 | - | - | 0.145 |
| | - OCT only | Reference | - | - | Reference | - | - |
| | - Multimodal | β = 0.28 | −0.03 to 0.59 | - | β = 0.18 | −0.13 to 0.49 | - |
| | - Radiomics | β = 0.22 | −0.15 to 0.59 | - | β = −0.24 | −0.61 to 0.13 | - |
| EXPLAINED HETEROGENEITY | R² from meta-regression (%) | 78.4 | - | - | 65.2 | - | - |
| | Residual I² after regression (%) | 9.8 | - | - | 12.5 | - | - |
| PUBLICATION BIAS ASSESSMENT | Egger’s regression test | - | - | - | - | - | - |
| | - Intercept | 1.24 | −0.87 to 3.35 | 0.234 | 0.96 | −1.12 to 3.04 | 0.345 |
| | - Slope | −0.18 | −0.52 to 0.16 | - | −0.14 | −0.48 to 0.20 | - |
| | Begg’s rank correlation | ρ = 0.20 | - | 0.624 | ρ = 0.33 | - | 0.467 |
| | Peters’ test (modified Egger’s) | - | - | 0.298 | - | - | 0.378 |
| SENSITIVITY ANALYSES | Excluding high risk of bias studies | 89.2% | 84.6–92.8 | - | 78.9% | 73.1–84.0 | - |
| | Fixed-effects model | 86.1% | 82.9–88.9 | - | 77.8% | 74.2–81.2 | - |
| | Leave-one-out analysis range | 84.2–88.7% | - | - | 75.1–80.4% | - | - |
| | Trim-and-fill adjustment | 85.8% | 81.2–89.6 | - | 77.2% | 71.8–82.1 | - |
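
The I² and H² rows are conventionally derived from Cochran's Q and its degrees of freedom as H² = Q / df and I² = max(0, (Q − df) / Q). A minimal Python sketch of these textbook formulas, applied to the tabulated Q values, is shown below; the function name is illustrative, and the source does not state exactly how its heterogeneity statistics were estimated.

```python
def heterogeneity_from_q(q, df):
    """Higgins' I^2 (as a percentage) and H^2 from Cochran's Q
    and its degrees of freedom (number of studies minus one)."""
    h2 = q / df
    i2 = max(0.0, (q - df) / q) * 100  # truncated at zero when Q < df
    return i2, h2

# Q statistics as tabulated, with df = 5 (six studies)
i2_spec, h2_spec = heterogeneity_from_q(7.83, 5)
i2_sens, h2_sens = heterogeneity_from_q(9.07, 5)
print(round(i2_spec, 1), round(h2_spec, 2))
print(round(i2_sens, 1), round(h2_sens, 2))
```

For specificity this gives 36.1% and 1.57, matching the table. For sensitivity it gives about 44.9% and 1.81 against the tabulated 45.2% and 1.82; the small gap may reflect rounding of Q or a different estimator, which the source does not specify.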

| Study Name | Comparison Type | Sample Size | AI Method | AI Performance (Sens/Spec/AUC) | Control Method | Control Performance (Sens/Spec/AUC) | Effect Size (AUC Diff (95% CI) | Statistical Significance (p-Value) | Clinical Context |

|---|---|---|---|---|---|---|---|---|---|

| HUMAN READERS vs. ARTIFICIAL INTELLIGENCE | |||||||||

| Cao et al. 2020 [40] | AI vs. Ophthalmologists | 108 images | Random Forest | 90.0%/85.1%/0.923 | 2 Ophthalmologists | 76.3%a/76.9%a/NR | NR | p = 0.034 | CMT reduction > 50 µm prediction |

| Alryalat et al. 2022 [36] | AI vs. Multi-level Readers | 101 patients | EfficientNet-B3 (U-Net) | 80.9%/84.0%/0.811 | Junior Residents | 34.0%/NR/NR | NR | p = 0.012 | CMT reduction > 25% or 50 µm |

| Retina Specialists | 86.3%/NR/NR | - | - | - | |||||

| Mean (All Readers) | 60.2%/NR/NR | - | - | - | |||||

| SUMMARY—Human vs. AI | - | 209 subjects | - | 85.4%/84.5%/0.867 | - | 68.2%/76.9%/NR | Δ +17.2%/+7.6% | 100% favor AI | Consistent AI superiority |

| ALGORITHMIC METHODS vs. ARTIFICIAL INTELLIGENCE | |||||||||

| Magrath et al., 2025 [28] | AI vs. Traditional Imaging | 73 eyes | CNN (VGG16) | 78.9%/68.8%/0.810 | Baseline CST Classifier | NR/NR/0.590 | +0.220 (0.181–0.259) | p = 0.008 | CST reduction > 10 µm prediction |

| Song et al., 2025 [29] | ResNet50 vs. ViT | 72 eyes | ResNet50-based DL | NR/NR/0.9998 | Vision Transformer | NR/NR/0.9898 | +0.010 (−0.029–0.049) | p = 0.045 | CST decrease/VA improvement |

| Meng et al., 2024 [34] | BPNN vs. Other ML | 82 patients | BPNN | 91.3%/92.6%/0.982 | SVM | 82.6%/63.6%/0.885 | +0.097 (0.058–0.136) | p = 0.028 | Persistent vs. Non-persistent DME |

| Rasti et al., 2020 [41] | CADNet vs. VGG16 | 127 subjects | CADNet CNN | 80.1%/85.0%/0.866 | VGG16 CNN | NR/NR/0.846 | +0.020 (−0.019–0.059) | p = 0.234 | RT reduction > 10% |

| Liu et al., 2021 [39] | Hybrid vs. Pure DL | 363 eyes | Ensemble (DL + CML) | NR/NR/0.940 | Ensemble DL only | NR/NR/0.810 | +0.130 (0.091–0.169) | p = 0.015 | CMT red. > 50 µm/VA gain > 0.1 LogMAR |

| Mondal et al., 2025 [16] | AI-Enhanced vs. Standard | 181 patients | Hybrid DL + Laser | 100.0%/75.0%/0.890 | Laser therapy only | NR/NR/NR | NR | p = 0.003 | BCVA gain ≥5 letters & CMT red. >50 µm |

| SUMMARY—Algorithmic | - | 898 subjects | - | 88.8%/80.4%/0.915 | - | 82.6%/63.6%/0.826 | Δ +6.2%/+16.8% | 83.3% favor AI | Proposed methods superior |

| OVERALL COMPARATIVE EFFECTIVENESS | |||||||||

| Total Evidence Base | 8 studies | 1107 subjects | Various AI Approaches | 87.1%/82.4%/0.891 | Various Control Methods | 75.4%/70.3%/0.826 | Mean Δ +0.089 | 87.5% favor AI | Consistent AI advantage |

| UTILITY ASSESSMENT | - | - | - | - | - | - | - | - | - |

| Cost-Effectiveness | 6/8 studies report | - | Reduced injection frequency | - | Standard protocols | - | Cost savings: 15–30% | - | Resource optimization |

| Implementation Feasibility | 5/8 studies assess | - | Automated analysis | - | Manual assessment | - | Time savings: 40–60% | - | Workflow integration |

| Generalizability | External validation in 5/8 | - | Robust across populations | - | Variable performance | - | Consistent accuracy | - | Multi-center applicability |

| Decision Impact | 7/8 studies evaluate | - | Enhanced precision | - | Standard care | - | Improved outcomes | - | Treatment optimization |

| SUPERIORITY ANALYSIS | |||||||||

| Statistically Significant Superiority | 7/8 studies (87.5%) | - | - | - | - | - | - | p < 0.05 | Clear evidence of benefit |

| Clinically Meaningful Difference | 6/8 studies (75.0%) | - | AUC improvement ≥ 0.05 | - | - | - | Δ AUC = 0.089 | - | Substantial clinical impact |

| Consistent Direction of Effect | 8/8 studies (100%) | - | All favor AI or neutral | - | - | - | No studies favor control | - | Robust evidence |

| Effect Size Categories: | - | - | - | - | - | - | - | - | - |

| - Large effect (AUC Δ ≥ 0.10) | 3/6 studies (50%) | - | - | - | - | - | Range: 0.097–0.220 | - | Major improvement |

| - Moderate effect (AUC Δ 0.05–0.10) | 2/6 studies (33%) | - | - | - | - | - | Range: 0.058–0.089 | - | Meaningful improvement |

| - Small effect (AUC Δ < 0.05) | 1/6 studies (17%) | - | - | - | - | - | AUC Δ = 0.020 | - | Marginal improvement |
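
The effect-size bands above can be applied mechanically to the AUC differences listed in the algorithmic-comparison rows. The following is a minimal Python sketch, not the authors' analysis code: the function name `effect_category` and the treatment of boundary values (a difference of exactly 0.10 counts as large, exactly 0.05 as moderate) are assumptions. It covers only the five algorithmic-comparison rows that report an AUC difference, so its counts need not reproduce the six-study tallies in the table.

```python
from collections import Counter

# AUC differences (AI minus comparator) copied from the
# algorithmic-comparison rows that report one.
auc_deltas = {
    "Magrath et al., 2025": 0.220,
    "Song et al., 2025": 0.010,
    "Meng et al., 2024": 0.097,
    "Rasti et al., 2020": 0.020,
    "Liu et al., 2021": 0.130,
}


def effect_category(delta: float) -> str:
    """Map an AUC difference to the table's effect-size bands.

    Boundary handling (>= 0.10 is large, >= 0.05 is moderate) is an
    assumption; the table does not state which band owns the edges.
    """
    if delta >= 0.10:
        return "large"
    if delta >= 0.05:
        return "moderate"
    return "small"


categories = {study: effect_category(d) for study, d in auc_deltas.items()}
counts = Counter(categories.values())

for study, band in categories.items():
    print(f"{study}: {auc_deltas[study]:+.3f} -> {band}")
print(dict(counts))
```

Under these assumed boundaries, a difference of 0.097 falls in the moderate band, just below the 0.10 cut-off.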

| Analysis Type | Subset Description | Studies (n) | Participants (N) | Pooled Sensitivity (%) | Sensitivity 95% CI | Pooled Specificity (%) | Specificity 95% CI | Impact Assessment | p-Value |

|---|---|---|---|---|---|---|---|---|---|

| BASELINE ANALYSIS | |||||||||

| Primary meta-analysis | All included studies | 6 | 427 | 86.4 | 82.2–90.6 | 77.6 | 71.4–83.9 | Reference standard | — |

| LEAVE-ONE-OUT ANALYSIS | |||||||||

| Excluding Magrath et al., 2025 [28] | Remove S04 (High risk bias) | 5 | 354 | 88.5 | 84.1–92.9 | 78.6 | 72.1–85.1 | Improved estimates | 0.342 |

| Excluding Mondal et al., 2025 [16] | Remove S08 (RCT, Low risk) | 5 | 372 | 85.0 | 80.5–89.6 | 78.3 | 71.4–85.1 | Minimal impact | 0.456 |

| Excluding Baek et al., 2024 [31] | Remove S15 (Small sample) | 5 | 397 | 86.6 | 82.3–90.8 | 77.5 | 70.8–84.1 | Stable estimates | 0.789 |

| Excluding Meng et al., 2024 [34] | Remove S17 (Radiomics) | 5 | 393 | 85.9 | 81.4–90.4 | 78.6 | 72.2–85.0 | Stable estimates | 0.623 |

| Excluding Cao et al., 2020 [40] | Remove S06 (Largest sample) | 5 | 319 | 85.1 | 80.0–90.1 | 75.2 | 67.6–82.8 | Slight decrease | 0.267 |

| Excluding Rasti et al., 2020 [41] | Remove S10 (No external validation) | 5 | 300 | 87.6 | 82.7–92.4 | 77.2 | 69.8–84.6 | Stable estimates | 0.445 |

| Leave-one-out range | Stability assessment | 5 | 300–397 | 85.0–88.5 | — | 75.2–78.6 | — | Stable estimates | — |

| STUDY QUALITY ASSESSMENT | |||||||||

| Excluding high-risk bias | Low + Moderate risk only | 5 | 354 | 88.5 | 84.1–92.9 | 78.6 | 72.1–85.1 | Improved performance | 0.178 |

| Low risk of bias only | RCT with low bias | 1 | 55 | 100.0 | 100.0–100.0 | 75.0 | 60.0–90.0 | Excellent sensitivity | 0.012 |

| Moderate risk of bias only | Observational studies | 4 | 299 | 86.2 | 81.2–91.2 | 79.1 | 71.8–86.4 | Consistent performance | 0.245 |

| METHODOLOGICAL SIGNIFICANCE | |||||||||

| External validation studies | Validated on independent data | 4 | 227 | 91.7 | 86.7–96.6 | 78.5 | 70.7–86.3 | Superior performance | 0.034 |

| No external validation | Internal validation only | 2 | 200 | 81.8 | 75.3–88.2 | 76.2 | 65.7–86.7 | Lower performance | 0.089 |

| Cross-validation reported | Significant internal validation | 5 | 372 | 86.8 | 82.1–91.4 | 77.9 | 70.9–84.9 | Stable estimates | 0.567 |

| SAMPLE SIZE EFFECTS | |||||||||

| Large studies (≥70 subjects) | Adequate statistical power | 3 | 308 | 84.5 | 79.5–89.5 | 79.6 | 72.0–87.2 | Conservative estimates | 0.234 |

| Small studies (<70 subjects) | Limited statistical power | 3 | 119 | 93.0 | 86.4–99.6 | 74.2 | 63.3–85.1 | Optimistic estimates | 0.045 |

| Very small studies (<50) | Possible overestimation | 2 | 64 | 93.5 | 84.2–100.0 | 72.7 | 57.2–88.2 | Inflated performance | 0.023 |

| TEMPORAL TRENDS | |||||||||

| Recent studies (2024–2025) | Modern AI methods | 4 | 192 | 86.0 | 79.6–92.3 | 73.1 | 63.2–82.9 | Current performance | 0.456 |

| Older studies (2020–2022) | Earlier AI methods | 2 | 235 | 86.7 | 81.1–92.3 | 81.5 | 73.6–89.5 | Historical performance | 0.678 |

| MODEL COMPARISON | |||||||||

| Fixed-effects model | Assumes homogeneity | 6 | 427 | 86.1 | 82.9–89.3 | 77.8 | 74.2–81.4 | Similar to random-effects | 0.234 |

| Random-effects model | Accounts for heterogeneity | 6 | 427 | 86.4 | 82.2–90.6 | 77.6 | 71.4–83.9 | Primary analysis | — |

| PUBLICATION BIAS ASSESSMENT | |||||||||

| Egger’s regression test | — | — | — | — | — | — | — | — | — |

| - Intercept (bias indicator) | 2.630 | — | — | — | — | — | — | Significant bias | 0.045 |

| - Slope (precision effect) | −0.278 | — | — | — | — | — | — | Funnel plot asymmetry | — |

| Begg’s rank correlation | — | — | — | — | — | — | — | — | — |

| - Kendall’s τ | 0.200 | — | — | — | — | — | — | No significant bias | 0.280 |

| Peters’ test | Modified Egger’s for DTA | — | — | — | — | — | — | No significant bias | 0.156 |

| Failsafe N analysis | — | — | — | — | — | — | — | — | — |

| - Studies needed to nullify | 15 studies | — | — | — | — | — | — | Significant evidence | — |

| - Current evidence strength | Strong | — | — | — | — | — | — | Results unlikely to change | — |

| Trim-and-fill adjustment | — | — | — | — | — | — | — | — | — |

| - Imputed missing studies | 2 studies | — | — | — | — | — | — | Minimal impact expected | — |

| - Adjusted sensitivity | — | — | — | 85.1 | 80.8–89.4 | — | — | Small reduction | — |

| - Adjusted specificity | — | — | — | — | — | 76.8 | 70.2–83.4 | Minimal change | — |

| OVERALL SIGNIFICANCE ASSESSMENT | — | — | — | — | — | — | — | — | — |

| Primary estimate stability | Leave-one-out variance | 6 | 427 | 3.5% range | — | 3.4% range | — | Highly stable | — |

| Quality-adjusted estimate | Excluding high-risk studies | 5 | 354 | 88.5 | 84.1–92.9 | 78.6 | 72.1–85.1 | Significant evidence | — |

| Publication bias impact | Trim-and-fill adjustment | 6 + 2 | 427 | 85.1 | 80.8–89.4 | 76.8 | 70.2–83.4 | Minimal bias effect | — |

| Final recommendation | Best available evidence | 5–6 | 354–427 | 86.4–88.5 | 82.2–92.9 | 77.6–78.6 | 71.4–85.1 | High confidence | — |
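
The leave-one-out summary rows can be rechecked directly from the six leave-one-out entries in the table. The snippet below is a minimal Python sketch under stated assumptions, not the authors' code: the helper name `spread` is hypothetical, and each excluded study's size is inferred here as the primary-analysis total (427) minus the participants remaining after exclusion.

```python
# Leave-one-out rows copied from the table:
# (excluded study, participants remaining, pooled sensitivity %, pooled specificity %)
leave_one_out = [
    ("Magrath et al., 2025", 354, 88.5, 78.6),
    ("Mondal et al., 2025", 372, 85.0, 78.3),
    ("Baek et al., 2024", 397, 86.6, 77.5),
    ("Meng et al., 2024", 393, 85.9, 78.6),
    ("Cao et al., 2020", 319, 85.1, 75.2),
    ("Rasti et al., 2020", 300, 87.6, 77.2),
]
TOTAL_N = 427  # participants in the primary meta-analysis


def spread(values):
    """Return (minimum, maximum, max - min), with the width rounded to 0.1."""
    lo, hi = min(values), max(values)
    return lo, hi, round(hi - lo, 1)


sens_range = spread([row[2] for row in leave_one_out])
spec_range = spread([row[3] for row in leave_one_out])

# Inferred size of each excluded study (assumption: TOTAL_N minus the remainder).
excluded_sizes = {name: TOTAL_N - n for name, n, _, _ in leave_one_out}

print("Sensitivity range:", sens_range)  # (85.0, 88.5, 3.5)
print("Specificity range:", spec_range)  # (75.2, 78.6, 3.4)
print("Excluded sizes sum to:", sum(excluded_sizes.values()))  # 427
```

The computed widths match the 3.5% and 3.4% ranges in the stability row, and the inferred study sizes sum back to the 427 participants of the primary analysis.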

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Al-Harbi, F.A.; Alkuwaiti, M.A.; Alharbi, M.A.; Alessa, A.A.; Alhassan, A.A.; Aleidan, E.A.; Al-Theyab, F.Y.; Alfalah, M.; AlHaddad, S.M.; Azzam, A.Y. Diagnostic Accuracy of Artificial Intelligence in Predicting Anti-VEGF Treatment Response in Diabetic Macular Edema: A Systematic Review and Meta-Analysis. J. Clin. Med. 2025, 14, 8177. https://doi.org/10.3390/jcm14228177
