Abstract

Background: Recent advances in Artificial Intelligence (AI) have the potential to address the challenges of mammographic screening programs by enhancing the performance of Computer-Aided Detection (CAD) systems, improving detection accuracy, and reducing false positive and recall rates. These systems have mostly been investigated in controlled trials using cancer-enriched datasets and multiple readers. Objectives: This study aims to evaluate the real-world impact of AI integration on the performance of a breast cancer screening program. Methods: In January 2021, our mammography unit integrated an AI system (iCAD version 2.0) into its mammographic screening protocol. This study evaluates audit data of 31,176 mammograms interpreted between 2017 and 2021, comparing 24,373 mammograms interpreted before AI implementation with 6803 interpreted after it. Logistic regression analysis was used to assess the statistical significance of changes in key screening metrics, with a significance level of p < 0.05. Results: The cancer detection rate increased significantly from 6.2 per 1000 in 2019 to 9.3 per 1000 in 2021, and the proportion of cancers detected on mammography rose to 98%. The proportion of stage 1 cancers reached 100%, and the false negative rate dropped to 0%. The proportion of ductal carcinoma in situ (DCIS) among detected cancers decreased from 36.4% in 2019 to 20% in 2021. Conclusions: The integration of AI into mammographic screening was associated with higher cancer detection rates and lower false negative rates. These findings suggest that AI has the potential to enhance screening efficacy.

1. Introduction

Breast cancer remains the most prevalent malignancy among women globally, accounting for over 500,000 annual deaths worldwide [1]. Mammographic screening programs, widely implemented in developed countries, have demonstrated efficacy in reducing mortality rates and enhancing early cancer detection [2]. However, these programs face significant challenges, with up to 25% of mammographically visible cancers eluding detection during routine screenings [1,3,4].

These programs also impose a substantial workload on breast radiologists [2], a concern exacerbated by the general shortage of breast radiologists [5,6,7].

In response to these challenges, artificial intelligence (AI) has emerged as a promising solution. Recent advancements in AI systems have significantly improved the performance of Computer-Aided Detection (CAD) algorithms used in mammogram analysis [8]. These CAD systems are designed to identify soft tissue lesions and suspicious calcifications, potentially enhancing the accuracy and efficiency of mammographic interpretations [9].

Studies have shown that AI-assisted screening increases cancer detection accuracy [5,8,10] and early cancer detection rates [5], and decreases false positive and recall rates [8], without increasing mammographic reading time [10]. Some studies comparing stand-alone AI with radiologist reading found that the sensitivity of AI was not inferior to that of radiologists [11,12] and that AI could substantially reduce the workload [11]. Furthermore, AI systems show promise in stratifying mammography exams, potentially allowing radiologist review to be omitted in low-risk cases [12].

However, while controlled trials and enriched datasets have provided important insights into the potential benefits of AI, there is a lack of real-world data on its implementation outside a research environment. In clinical practice, the integration of AI into mammography workflows is subject to variability in how radiologists engage with the system. Unlike in research settings—where AI outputs are always reviewed and considered—real-world scenarios may involve cases where the interpreting radiologist does not actively consult the AI findings, perceives them as time-consuming to review, or feels that low specificity undermines their diagnostic confidence. In some situations, the AI’s alerts may even contribute to an increase in BI-RADS 0 assessments due to heightened caution, potentially affecting recall rates. These factors highlight the importance of assessing AI performance not only under optimal study conditions, but also in routine, heterogeneous clinical settings where user adoption, trust, and workflow integration play crucial roles in determining its actual impact.

The purpose of this study was to evaluate real-world mammography screening performance following the implementation of an AI system by reviewing its impact on standardized audit benchmarks (individual AI results were not collected prospectively, as would typically be done in a research environment). In January 2021, our breast imaging unit incorporated an AI system (iCAD version 2.0) into its reading protocol. Since then, most examinations have been interpreted by breast radiologists with AI-assisted decision support. This article presents a preliminary report on the real-world impact observed in our screening program’s audit analysis following the implementation of this AI software. Our findings contribute to the growing body of evidence on the practical applications and outcomes of AI integration in clinical mammography.

2. Materials and Methods

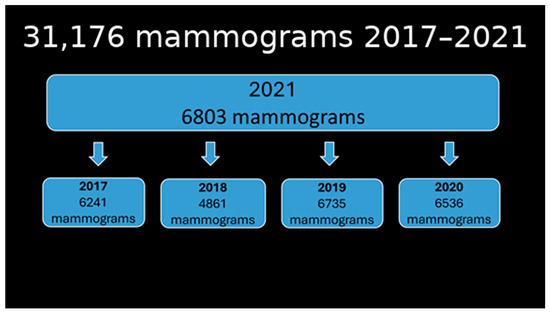

Clalit Health Services is the largest Health Maintenance Organization (HMO) in Israel. Mammography interpretations at Clalit Health Services undergo central auditing by the National Breast Program. According to Ministry of Health policy in Israel, biennial screening mammography is offered to all women aged 50 to 74. This study is based on the audit results of mammography interpretations from the Beilinson mammography unit, covering four years before (2017–2020) and one year after (2021) the implementation of AI software for mammography interpretation and breast cancer risk assessment. Because the AI software was implemented in January 2021, the 2021 cohort audit was compared with the previous annual mammography audits (Figure 1).

Figure 1.

Number of mammograms in 2021 compared to previous years.

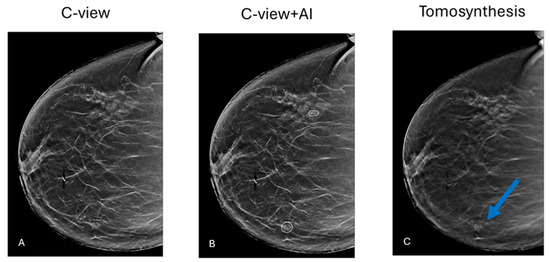

2.1. Software System

The AI system implemented for screening is the commercially available iCAD system (iCAD; M-Vu iCAD®, version 3, Nashua, NH, USA). During assisted reading, the system presents radiologists with lesion findings and an examination score that stratifies screening examinations by breast cancer probability. It assigns each case to one of four risk groups, predicting the likelihood of breast cancer diagnosis or development within the next 1–2 years. This prediction is based on the mammographic images and patient age, relative to the general population risk at the same age (Figure 2).

Figure 2.

(A) Primary mammographic examination with no apparent pathology. (B) iCAD software flagged two suspicious locations: a group of calcifications (lateral) and an architectural distortion (medial). (C) Tomosynthesis clearly shows the distortion flagged by AI. Biopsy confirmed invasive ductal carcinoma.

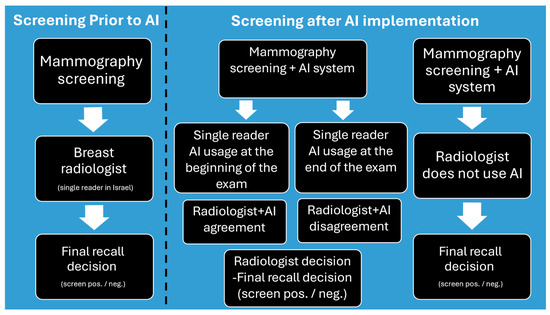

2.2. Reading Protocols

A team of fellowship-trained full-time breast radiologists, with experience ranging from 6 to 16 years, interpreted mammograms in a single-reader format. All radiologists received comprehensive training on the AI system, after which reading protocols were determined individually by each radiologist (Figure 3).

Figure 3.

Screening protocol before and after AI implementation.

Standardized audit reports from the central epidemiology unit of the Clalit Health Services National Breast Program included the number of screening mammograms, age distribution, true and false positives, the cancer detection rate (CDR), and cancer stage. These parameters were compared across the three study periods.
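The audit parameters listed above map onto the usual screening benchmarks. The Python sketch below assumes standard BI-RADS-style audit definitions: a true positive is a positive screen followed by a cancer diagnosis within the follow-up window (typically one year in BI-RADS audits), and a false negative is a negative screen followed by cancer within that window. The National Breast Program's exact definitions may differ. The class and function names are hypothetical, and the counts in the usage line are toy values, not data from this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditCounts:
    """Counts for one annual audit cohort (hypothetical field names)."""
    screening_exams: int   # screening mammograms interpreted in the cohort
    true_positives: int    # positive screens followed by a cancer diagnosis
    false_positives: int   # positive screens with no cancer in follow-up
    false_negatives: int   # negative screens followed by cancer in follow-up


def _ratio(num: int, den: int) -> float:
    """Return num / den, refusing an empty denominator."""
    if den <= 0:
        raise ValueError("denominator must be positive")
    return num / den


def cancer_detection_rate(c: AuditCounts) -> float:
    """Screen-detected cancers per 1000 screening examinations."""
    return 1000 * _ratio(c.true_positives, c.screening_exams)


def sensitivity(c: AuditCounts) -> float:
    """TP / (TP + FN): share of cancers that the screen flagged."""
    return _ratio(c.true_positives, c.true_positives + c.false_negatives)


def false_negative_rate(c: AuditCounts) -> float:
    """FN / (TP + FN), i.e. 1 - sensitivity."""
    return _ratio(c.false_negatives, c.true_positives + c.false_negatives)


def ppv1(c: AuditCounts) -> float:
    """TP / (TP + FP): cancers among positive screens."""
    return _ratio(c.true_positives, c.true_positives + c.false_positives)


# Toy counts for illustration only; they are not this study's audit data.
toy = AuditCounts(screening_exams=2000, true_positives=12,
                  false_positives=100, false_negatives=3)
print(cancer_detection_rate(toy), sensitivity(toy), false_negative_rate(toy))
```

Computing the false negative rate directly as FN / (TP + FN), rather than as 1 minus sensitivity, keeps the two quantities traceable to the raw audit counts.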

2.3. Statistical Analysis

Descriptive statistics were generated for categorical and continuous variables, and group comparisons were conducted as appropriate. Logistic regression was used to test differences in the probability of screening outcomes between cohorts. A p-value < 0.05 was considered to indicate a statistically significant difference. Statistical analysis was performed with SAS software version 9.4 (SAS Institute Inc., Cary, NC, USA).
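When a binary outcome (for example, whether an examination yielded a detected cancer) is compared between two annual cohorts, the logistic model has a single binary covariate. Its maximum-likelihood estimates then have a closed form: the slope is the log odds ratio. The Python sketch below uses this to compute a Wald test with the standard library only. It is an illustration, not the authors' SAS code. The function name and the toy counts are hypothetical, and models with more covariates would need a general-purpose fitting routine.

```python
import math


def logistic_binary_cohort(events_new, n_new, events_ref, n_ref):
    """Fit logit(p) = b0 + b1 * cohort, with cohort 0 = reference, 1 = new.

    With one binary covariate the MLEs are closed-form: b0 is the
    reference-cohort log-odds and b1 is the log odds ratio.
    Returns (b0, b1, se_b1, z, two-sided Wald p-value for b1).
    """
    a, b = events_new, n_new - events_new   # new cohort: events, non-events
    c, d = events_ref, n_ref - events_ref   # reference cohort: events, non-events
    if min(a, b, c, d) <= 0:
        raise ValueError("all four cells must be positive for a finite MLE")
    b0 = math.log(c / d)
    b1 = math.log((a * d) / (b * c))
    se_b1 = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    z = b1 / se_b1
    p = math.erfc(abs(z) / math.sqrt(2))    # equals 2 * (1 - Phi(|z|))
    return b0, b1, se_b1, z, p


# Toy counts (hypothetical): 20 events in 1000 exams vs 10 in 1000.
print(logistic_binary_cohort(20, 1000, 10, 1000))
```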

3. Results

3.1. Mammography Categories

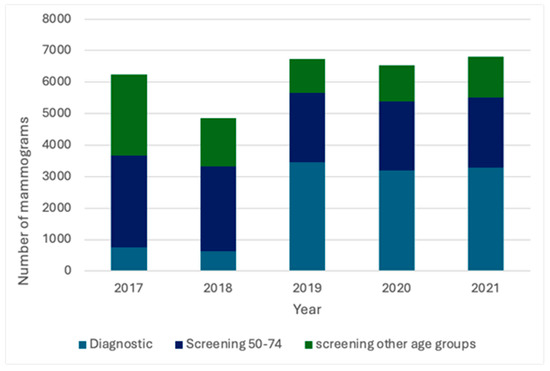

A total of 31,176 mammographic interpretations were included in the study, with 24,373 interpreted before and 6803 after AI implementation. Audit data from 2017 to 2021 indicated that approximately half of the mammograms in 2019–2021 were categorized as screening and half as diagnostic. In contrast, screening accounted for 87–88% of mammograms in 2017 and 2018. The number of mammograms for women aged 50–74 remained similar in 2019–2021 but was significantly higher in 2017 and 2018. The same pattern was noted for women under 50. The number of diagnostic mammograms was consistent in 2019–2021 but significantly lower in 2017–2018 (Figure 4).

Figure 4.

Distribution of screening and diagnostic mammograms in 2017–2021.

3.2. Screening Benchmarks

The overall CDR was higher in 2021 than in 2019 and 2020 (p < 0.0001). The CDR among women of screening age (50–74) was lower in 2021 than in 2020 and 2019; between 4 and 7 invasive malignancies were found annually in this age range. The discrepancy between all detected malignancies and those detected on mammography was lowest in 2021. The false negative rate (FNR) in 2021 was also lower than in 2019 and 2020. The number of malignancies in women aged 50–74 was similar across all years (Table 1).

Table 1.

Results. Cancer detection rate (CDR), False Negative (FN), and Ductal Carcinoma In Situ (DCIS).

3.3. Cancer Stage

The proportion of invasive cancers detected at stage 1 in screening mammograms for women aged 50–74 was highest in 2021, similar to 2020, and significantly higher than in 2019. The proportion of ductal carcinoma in situ (DCIS) among detected cancers was similar in 2020 and 2021 but lower than in 2019.

4. Discussion

Screening programs have long been considered effective in the early detection of breast cancer. However, the sensitivity of digital mammography (DM) is estimated to be around 87% [13]. Many studies have shown promising results for the use of AI in screening programs, with most of the reported benefits demonstrated in controlled trial environments [1,2,3,4,5,9,10,11,12,13,14,15].

Current evidence does not demonstrate an effect of AI-supported screening mammography on breast cancer survival. Existing studies are primarily diagnostic-accuracy and implementation trials, reporting process and early surrogate outcomes—such as CDR, recall and false-positive rates, positive predictive value, and workload—rather than mortality. Therefore, there is no direct evidence to date that AI use in screening mammography improves breast cancer survival. Ongoing trials with longer follow-up are required to determine its effects on interval cancer rates and mortality.

Most published studies are conducted in research settings, where every examination is known to have been reviewed with the AI system, but real-world practice differs substantially. In routine clinical settings, it is often unclear whether, and to what extent, radiologists actively incorporate AI findings into their interpretations [16]. Notably, improvements in standardized audit benchmarks relative to reading without AI have previously been demonstrated even when the extent to which radiologists integrated AI outputs was unknown. Real-world studies of double-reading screening, including tomosynthesis, have likewise reported improvements in audit benchmarks [8,15].

In line with these real-world findings, our study aimed to evaluate the impact of integrating an AI system on the performance of our breast unit in routine daily use. During 2021, the first year after AI implementation, 6803 mammograms were performed, approximately half for screening and half for diagnostic purposes. Audit reports from 2017 to 2021 showed a shift in the distribution between screening and diagnostic mammograms, with a progressive decrease in the proportion of screening examinations and a corresponding increase in diagnostic examinations. This change likely reflects modifications in labeling and data acquisition due to updates in the RIS (Radiology Information System), digitalization of reporting systems in both radiology and pathology, and personnel changes. These factors limit direct comparisons across all years; therefore, we focused on comparing 2021 with 2019–2020, which were more demographically comparable.

Several screening mammography audit benchmarks [17] improved in 2021 compared with 2019–2020. These included a higher CDR, a greater proportion of cancers detected on mammography, a higher percentage of stage 1 cancers, a false negative rate of 0% (although this exceptionally low rate may reflect registration errors), and a lower percentage of DCIS among detected cancers. These improvements may be attributable to the integration of the AI system, consistent with other real-world reports in which CDR was 13–18% higher with AI support [8,15,16]. The higher-than-benchmark CDR may reflect the substantial diagnostic, rather than purely screening, workload of our mammography unit.
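For comparison with the 13–18% relative increases cited above, the relative change implied by the CDRs reported in the Abstract (6.2 per 1000 in 2019 and 9.3 per 1000 in 2021) can be worked out directly. This is simple arithmetic on the reported rates, not an additional analysis, and the cohorts and case mix differ from those of the cited studies.

```latex
\frac{\mathrm{CDR}_{2021}-\mathrm{CDR}_{2019}}{\mathrm{CDR}_{2019}}
  = \frac{9.3-6.2}{6.2}
  = \frac{3.1}{6.2}
  = 0.50
```

This corresponds to an absolute increase of 3.1 cancers per 1000 mammograms and a relative increase of about 50%.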

Some benchmarks were better in 2018, which may be explained by the unusually low proportion of mammograms labeled as diagnostic that year, artificially inflating the detection rate for screening examinations. The lower CDR for women aged 50–74 in 2021 may be due to the relatively small number of screening examinations in this group, limiting statistical comparisons. Notably, the COVID-19 pandemic did not result in a significant change in the overall screening numbers in our cohorts.

This study has several limitations. First, the cohort size does not meet the recommended 5000 annual screening examinations for robust audit comparisons [16]. We addressed this by comparing our performance to prior years within the same unit. Second, the final reports reflect radiologist interpretation, which may not always align with AI recommendations. Studies have shown that only 30% of radiologists regularly use AI in daily practice [17], a figure influenced by concerns over inconsistency, reduced productivity, and cost [16]. In our dataset, we could not determine the proportion of cases in which AI assistance was actively utilized, since there are no objective markers indicating whether AI was used in each case. Third, we analyzed our audits using the fixed threshold implemented by the vendor. We did not perform a detailed evaluation of the effects on NPV and recall rate across different thresholds, as has been conducted in recently published studies [15,18]. Finally, as in other real-world settings, registration, labeling, and reporting processes in radiology and pathology are not strictly standardized, which can lead to errors or inconsistencies in audit data.
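A 95% confidence interval for a proportion illustrates two points made above. A small annual volume limits audit comparisons, and an observed false negative rate of 0% is still compatible with a non-zero true rate. The Python sketch below uses the Wilson score interval, which is our choice for illustration and is not stated to have been used in this study. The function name and the counts in the usage lines are hypothetical.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.959963984540054):
    """Two-sided Wilson score interval for a binomial proportion (default 95%)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p_hat = successes / n
    denom = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    # Clamp to [0, 1] to absorb floating-point round-off at the boundaries.
    return max(0.0, centre - half), min(1.0, centre + half)


# Same point estimate (9 per 1000), different volumes (hypothetical counts):
for cancers, exams in [(9, 1000), (45, 5000)]:
    lo, hi = wilson_interval(cancers, exams)
    print(exams, round(1000 * lo, 1), round(1000 * hi, 1))  # per 1000 exams

# Zero false negatives among 15 cancers still leaves a non-zero upper bound.
print(wilson_interval(0, 15))
```

At the same observed rate, the interval at 5000 examinations is markedly narrower than at 1000, which is the practical reason behind volume recommendations for audits.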

5. Conclusions

Our study presents some of the earliest real-world results from the implementation of an AI system in our breast unit, demonstrating promising improvements in standardized screening audit benchmarks. We anticipate that these gains will persist—or potentially increase—after the adaptation period has passed. However, the clinical significance of these improvements requires further validation.

Author Contributions

Conceptualization, A.G.; Methodology, A.G.; Formal Analysis, T.S.; Data Curation, H.S.R., T.F. and A.G.; Writing—Original Draft Preparation, A.G., H.S.R. and T.F.; Writing—Review and Editing, A.G., H.S.R., T.F., M.D.S., S.T., E.A. and A.M.; Visualization, M.D.S.; Project Administration, A.G., T.F., H.S.R. and M.D.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study due to its retrospective nature and use of anonymized audit data.

Informed Consent Statement

Patient consent was waived due to the retrospective design of the study and the use of anonymized audit data.

Data Availability Statement

Data are contained within the article.

Acknowledgments

We thank the staff of the Beilinson Breast Imaging Unit and the National Breast Program for administrative and technical support. During the preparation of this manuscript, the authors used OpenAI ChatGPT (GPT 4, July 2025) for language editing and minor improvements in figure resolution. The authors reviewed and edited all output and took full responsibility for the content and final images.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

Cancer detection rate (CDR), Ductal Carcinoma In Situ (DCIS), Artificial Intelligence (AI), and digital mammography (DM).

References

- Rodriguez-Ruiz, A.; Lång, K.; Gubern-Merida, A.; Broeders, M.; Gennaro, G.; Clauser, P.; Helbich, T.H.; Chevalier, M.; Tan, T.; Mertelmeier, T.; et al. Stand-Alone Artificial Intelligence for Breast Cancer Detection in Mammography: Comparison with 101 Radiologists. J. Natl. Cancer Inst. 2019, 111, 916–922.

- Pacilè, S.; Lopez, J.; Chone, P.; Bertinotti, T.; Grouin, J.-M.; Fillard, P. Improving Breast Cancer Detection Accuracy of Mammography with the Concurrent Use of an Artificial Intelligence Tool. Radiol. Artif. Intell. 2020, 2, e190208.

- Nyström, L.; Andersson, I.; Bjurstam, N.; Frisell, J.; Nordenskjöld, B.; Rutqvist, L.E. Long-Term Effects of Mammography Screening: Updated Overview of the Swedish Randomised Trials. Lancet 2002, 359, 909–919.

- Lee, C.D.; Abraham, R.F.; Smith, B.L.; Lehman, J.M.; Buist, D.S.; Kerlikowske, K.; Henderson, L.M.; Onega, T.; Tosteson, A.N.A.; Rauscher, G.H.; et al. National Performance Benchmarks for Modern Screening Digital Mammography: Update from the Breast Cancer Surveillance Consortium. Radiology 2017, 283, 49–58.

- Lauritzen, A.D.; Lillholm, M.; Lynge, E.; Nielsen, M.; Karssemeijer, N.; Vejborg, I. Early Indicators of the Impact of Using AI in Mammography Screening for Breast Cancer. Radiology 2024, 311, 783–791.

- Paci, E.; Broeders, M.; Hofvind, S.; Duffy, S.W. Summary of the Evidence of Breast Cancer Screening Outcomes in Europe and First Estimate of the Benefit and Harm Balance Sheet. J. Med. Screen. 2012, 19, 5–13.

- Moss, S.M.; Wale, C.; Smith, R.; Evans, A.; Cuckle, H.; Duffy, S.W. Effect of Mammographic Screening from Age 40 Years on Breast Cancer Mortality in the UK Age Trial at 17 Years’ Follow-Up: A Randomised Controlled Trial. Lancet Oncol. 2015, 16, 1123–1132.

- Elías-Cabot, E.; Romero-Martín, S.; Raya-Povedano, J.L.; Brehl, A.K.; Álvarez-Benito, M. Impact of Real-Life Use of Artificial Intelligence as Support for Human Reading in a Population-Based Breast Cancer Screening Program with Mammography and Tomosynthesis. Eur. Radiol. 2024, 34, 3958–3966.

- Sechopoulos, I.; Teuwen, J.; Mann, R. Artificial Intelligence for Breast Cancer Detection in Mammography and Digital Breast Tomosynthesis: State of the Art. Semin. Cancer Biol. 2021, 72, 214–225.

- Rodríguez-Ruiz, A.; Krupinski, E.; Mordang, J.J.; Schilling, K.; Heywang-Köbrunner, S.H.; Sechopoulos, I.; Mann, R.M. Detection of Breast Cancer with Mammography: Effect of an Artificial Intelligence Support System. Radiology 2019, 290, 305–314.

- Lauritzen, A.D.; Rodríguez-Ruiz, A.; von Euler-Chelpin, M.C.; Lynge, E.; Vejborg, I.; Nielsen, M.; Karssemeijer, N.; Lillholm, M. An Artificial Intelligence–Based Mammography Screening Protocol for Breast Cancer: Outcome and Radiologist Workload. Radiology 2022, 304, 41–49.

- Rodriguez-Ruiz, A.; Lång, K.; Gubern-Merida, A.; Teuwen, J.; Broeders, M.; Gennaro, G.; Clauser, P.; Helbich, T.H.; Chevalier, M.; Mertelmeier, T.; et al. Can We Reduce the Workload of Mammographic Screening by Automatic Identification of Normal Exams with Artificial Intelligence? A Feasibility Study. Eur. Radiol. 2019, 29, 4825–4832.

- Lamb, L.R.; Lehman, C.D.; Gastounioti, A.; Conant, E.F.; Bahl, M. Artificial Intelligence (AI) for Screening Mammography, from the AJR Special Series on AI Applications. Am. J. Roentgenol. 2022, 219, 369–381.

- Ng, A.Y.; Oberije, C.J.G.; Ambrózay, É.; Szabó, E.; Serfőző, O.; Karpati, E.; Fox, G.; Glocker, B.; Morris, E.A.; Forrai, G.; et al. Prospective Implementation of AI-Assisted Screen Reading to Improve Early Detection of Breast Cancer. Nat. Med. 2023, 29, 3044–3049.

- Eisemann, N.; Bunk, S.; Mukama, T.; Baltus, H.; Elsner, S.A.; Gomille, T.; Hecht, G.; Heywang-Köbrunner, S.; Rathmann, R.; Siegmann-Luz, K.; et al. Nationwide Real-World Implementation of AI for Cancer Detection in Population-Based Mammography Screening. Nat. Med. 2025, 31, 917–924.

- Allen, B.; Agarwal, S.; Coombs, L.; Wald, C.; Dreyer, K. 2020 ACR Data Science Institute Artificial Intelligence Survey. J. Am. Coll. Radiol. 2021, 18, 258–267.

- D’Orsi, C.J.; Sickles, E.A.; Mendelson, E.B.; Morris, E.A. ACR BI-RADS® Atlas: Breast Imaging Reporting and Data System; American College of Radiology: Reston, VA, USA, 2013.

- Chen, I.E.; Joines, M.; Capiro, N.; Dawar, R.; Sears, C.; Sayre, J.; Chalfant, J.; Fischer, C.; Hoyt, A.C.; Hsu, W.; et al. Commercial Artificial Intelligence Versus Radiologists: NPV and Recall Rate in Large Population-Based Digital Mammography and Tomosynthesis Screening Mammography Cohorts. Am. J. Roentgenol. 2025, Epub ahead of print.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).