Measuring What Matters in Trial Operations: Development and Validation of the Clinical Trial Site Performance Measure

Abstract

1. Introduction

2. Materials and Methods

2.1. Aims

- (i) Construct a psychometrically robust framework capturing core domains of site-level operational performance relevant to trial validity and reproducibility;

- (ii) Identify a parsimonious core indicator set through Mokken Scale Analysis to enable scalable and transparent monitoring;

- (iii) Establish evidence-based cut-offs to distinguish optimal from suboptimal site performance, thereby supporting bias control and risk-based monitoring; and

- (iv) Design the instrument for seamless integration into a digital application, facilitating real-time benchmarking across multicentre trials and alignment with the principles of Quality by Design (QbD) and modern clinical research governance.

2.2. Setting

2.3. Inclusion Criteria

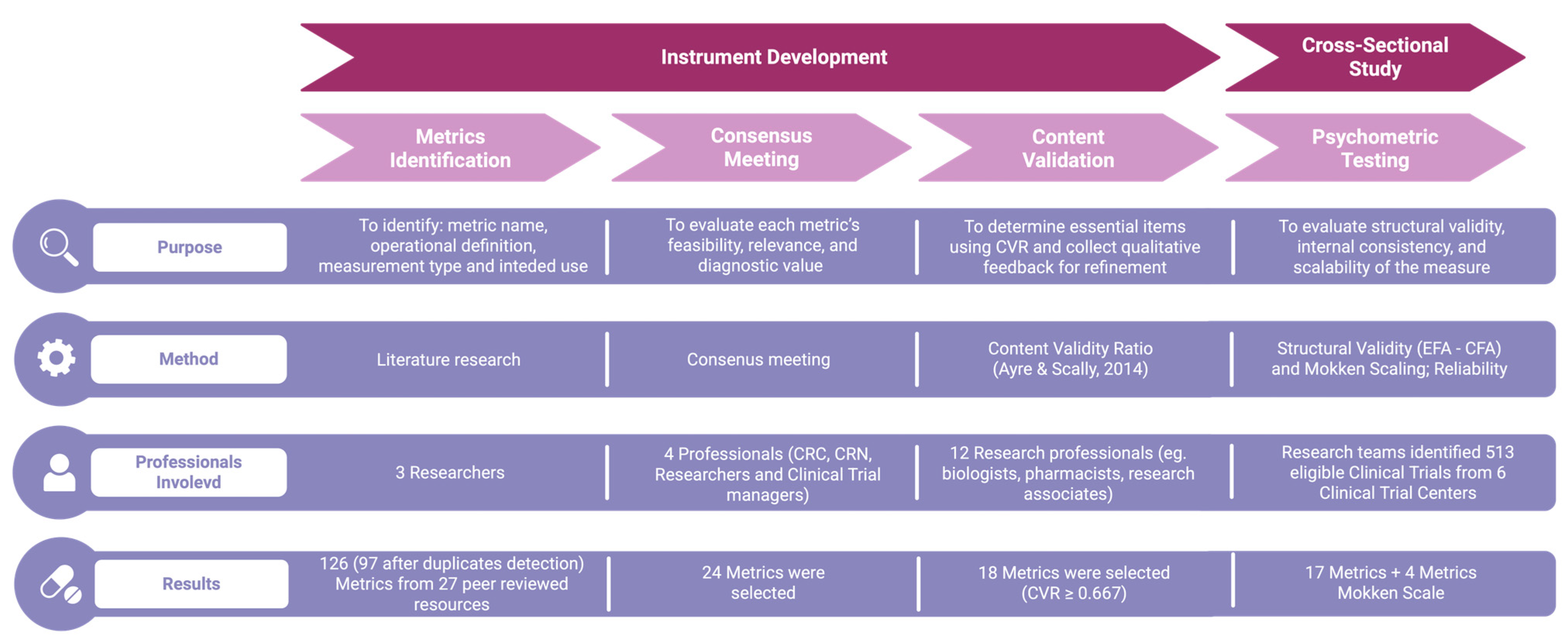

2.4. Instrument Development

2.5. Variables Collected

2.6. Statistical Analyses

2.6.1. Psychometric Testing

2.6.2. Mokken Scaling

2.6.3. Calculation of Standardized Scores

2.6.4. Performance Cut-Off Definition

2.6.5. Sample Size Calculation

2.7. Deployment

3. Results

3.1. Phase 2: Psychometric Testing

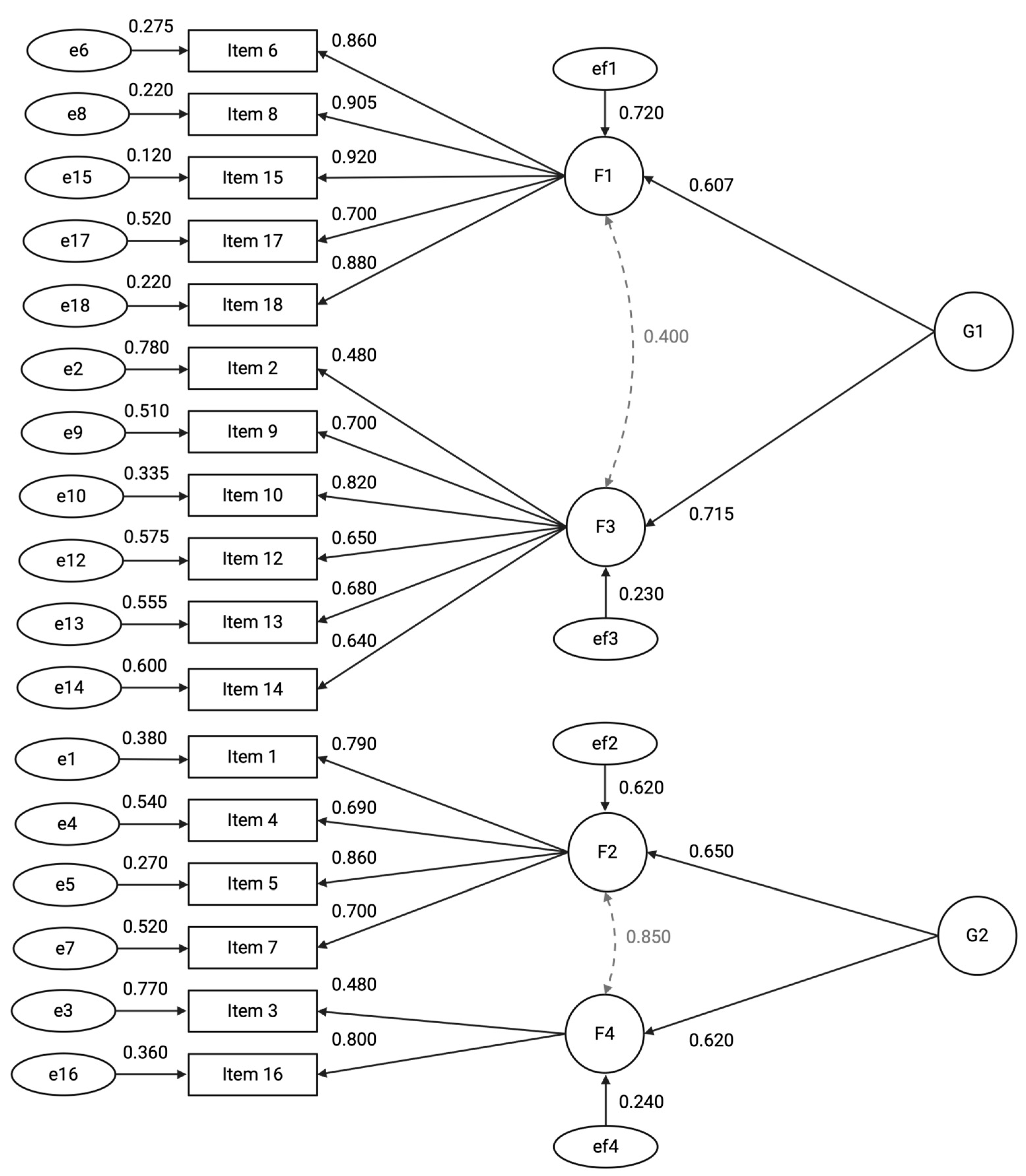

3.1.1. Structural Validity

3.1.2. Reliability

3.2. Phase 3: Mokken Scaling

3.2.1. Automatic Item Selection Procedure

3.2.2. Monotonicity

3.3. Phase 3: Defining Performance Cut-Off

| Factors | Mean (SD) | Cut-off (Youden’s index) | AUC |
|---|---|---|---|
| Participant Retention and Consent (F1) | 61.30 (25.70) | 22.5 | 0.583 |
| Data Completeness and Timeliness (F2) | 71.80 (21.20) | 46.9 | 0.528 |
| Adverse Events Reporting (F3) | 62.50 (10.90) | 64.6 | 0.557 |
| Protocol Compliance (F4) | 82.50 (17.30) | 6.25 | 0.293 |
| Participant Retention and Adverse Event Monitoring (G1) | 61.90 (14.90) | 55.2 | 0.581 |
| Data Quality and Protocol Adherence (G2) | 77.10 (16.70) | 60.9 | 0.562 |
| Short Form (Mokken Scale) | 40.27 (24.33) | 59.38 | 0.628 |
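The cut-offs in the table above are labelled as Youden's index values and are reported alongside an AUC. As a minimal sketch of how such a threshold can be derived (this is not the authors' analysis code), the Python below scans every observed score as a candidate threshold and keeps the one that maximises J = sensitivity + specificity - 1. The function names, the toy scores, and the binary `labels` reference standard are hypothetical; this section does not state which external criterion separated optimal from suboptimal sites.

```python
from typing import List, Sequence, Tuple


def roc_points(scores: Sequence[float], labels: Sequence[int]) -> List[Tuple[float, float, float]]:
    """Return (threshold, sensitivity, specificity) for each observed score.

    A site is classified as "optimal" (1) when its score is >= threshold.
    """
    pos = sum(1 for y in labels if y == 1)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("both classes must be present")
    points = []
    for t in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= t)
        tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < t)
        points.append((t, tp / pos, tn / neg))
    return points


def youden_cutoff(scores: Sequence[float], labels: Sequence[int]) -> Tuple[float, float]:
    """Return (threshold, J) for the threshold maximising Youden's J."""
    candidates = [(t, sens + spec - 1.0) for t, sens, spec in roc_points(scores, labels)]
    return max(candidates, key=lambda pair: pair[1])


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC as P(score of an optimal site > score of a suboptimal site); ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical data: standardized 0-100 scores and a binary reference standard.
scores = [20, 35, 50, 55, 60, 70, 80, 90]
labels = [0, 0, 1, 0, 0, 1, 1, 1]
cutoff, j_stat = youden_cutoff(scores, labels)
area = auc(scores, labels)
```

An AUC near 0.5 means the score barely separates the two groups, so a Youden-optimal threshold in that situation should be read with caution.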

4. Discussion

4.1. Strengths and Limitations

4.2. Implications for Future Research

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Factors | Omega Total (ω) | Omega Hierarchical (ωh) | Explained Common Variance (ECV) |
|---|---|---|---|
| Participant Retention and Adverse Event Monitoring (G1) | 0.73 | 0.59 | 0.42 |
| Data Quality and Protocol Adherence (G2) | 0.68 | 0.53 | 0.40 |
| Participant Retention and Consent (F1) | 0.82 | 0.70 | |
| Data Completeness and Timeliness (F2) | 0.75 | 0.64 | |
| Adverse Events Reporting (F3) | 0.70 | 0.60 | |
| Protocol Compliance (F4) | 0.65 | 0.58 | |
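For readers unfamiliar with the indices in the table above, the following sketch shows the usual definitions of omega total, omega hierarchical, and ECV computed from standardized bifactor loadings. It assumes a model with a single general factor and orthogonal group factors, which may differ from the model fitted in this study; the function name and the example loadings are hypothetical and are not taken from the article.

```python
from typing import Dict, List


def bifactor_indices(general: List[float], groups: Dict[str, Dict[int, float]]) -> Dict[str, float]:
    """Compute omega total, omega hierarchical, and ECV.

    general[i]      : standardized loading of item i on the general factor
    groups[name][i] : standardized loading of item i on group factor `name`
    Factors are assumed orthogonal (an assumption of this sketch).
    """
    # Squared sums of loadings: variance of the total score due to each factor.
    general_part = sum(general) ** 2
    group_part = sum(sum(loads.values()) ** 2 for loads in groups.values())

    # Item communalities, then the summed unique variances (1 - h^2).
    communality = [g ** 2 for g in general]
    for loads in groups.values():
        for item, loading in loads.items():
            communality[item] += loading ** 2
    unique_part = sum(1.0 - h2 for h2 in communality)

    total_variance = general_part + group_part + unique_part

    # ECV uses sums of squared loadings rather than squared sums.
    general_sq = sum(g ** 2 for g in general)
    group_sq = sum(l ** 2 for loads in groups.values() for l in loads.values())

    return {
        "omega_total": (general_part + group_part) / total_variance,
        "omega_hierarchical": general_part / total_variance,
        "ecv": general_sq / (general_sq + group_sq),
    }


# Hypothetical loadings: four items, two group factors with two items each.
example = bifactor_indices(
    general=[0.6, 0.6, 0.6, 0.6],
    groups={"A": {0: 0.4, 1: 0.4}, "B": {2: 0.4, 3: 0.4}},
)
```

Omega hierarchical can never exceed omega total, because it counts only the general-factor share of the reliable variance; the gap between the two reflects the contribution of the group factors.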

| Items | H | #ac | #vi | #vi/#ac | maxvi | sum | sum/#ac | tmax | #tsig | crit | Selection | HT | Rho |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Item9 | 0.63 | 4 | 1 | 0.25 | 0.18 | 0.18 | 0.05 | 1.14 | 0 | 1.96 | 0 | 0.55 | 0.82 |
| Item10 | 0.53 | 5 | 0 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0 | 1.96 | 0 | 0.60 | |
| Item12 | 0.58 | 5 | 1 | 0.20 | 0.44 | 0.62 | 0.12 | 2.75 | 1 | 1.96 | 1 | 0.54 | |
| Item17 | 0.53 | 4 | 2 | 0.50 | 0.57 | 0.70 | 0.18 | 5.05 | 1 | 1.96 | 2 | 0.63 | |
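The H column in the table above appears to report item scalability coefficients from the Mokken analysis. As an illustration only, the sketch below computes Loevinger's H as the ratio of observed inter-item covariance to the maximum covariance attainable given the item marginals, which is obtained by pairing the sorted responses. The function names and the toy response matrix are hypothetical, and this is not the software routine used in the study.

```python
from typing import List, Sequence


def _cov(x: Sequence[float], y: Sequence[float]) -> float:
    """Population covariance of two equally long response vectors."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    return sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n


def _cov_max(x: Sequence[float], y: Sequence[float]) -> float:
    """Largest covariance possible with the same marginals (sorted pairing)."""
    return _cov(sorted(x), sorted(y))


def item_h(data: List[List[int]], i: int) -> float:
    """Scalability of item i: summed covariances with all other items over their maxima.

    Assumes every item has non-zero variance, otherwise the denominator is zero.
    """
    cols = list(zip(*data))
    others = [j for j in range(len(cols)) if j != i]
    observed = sum(_cov(cols[i], cols[j]) for j in others)
    maximum = sum(_cov_max(cols[i], cols[j]) for j in others)
    return observed / maximum


def scale_h(data: List[List[int]]) -> float:
    """Scalability of the whole item set, pooled over all item pairs."""
    cols = list(zip(*data))
    pairs = [(i, j) for i in range(len(cols)) for j in range(i + 1, len(cols))]
    observed = sum(_cov(cols[i], cols[j]) for i, j in pairs)
    maximum = sum(_cov_max(cols[i], cols[j]) for i, j in pairs)
    return observed / maximum


# Hypothetical responses (rows = sites, columns = items).
guttman = [[0, 0, 0], [1, 0, 0], [1, 1, 0], [1, 1, 1]]  # perfect Guttman pattern
with_error = guttman + [[0, 1, 0]]                        # one Guttman error added
h_perfect = scale_h(guttman)
h_error = scale_h(with_error)
```

A perfect Guttman pattern yields H = 1, and each response pattern that violates the item ordering pulls H downward. By the conventional rule of thumb, values of at least 0.5 are read as indicating a strong scale.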

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bozzetti, M.; Lo Cascio, A.; Napolitano, D.; Orgiana, N.; Mora, V.; Fiorini, S.; Petrucci, G.; Resente, F.; Baroni, I.; Caruso, R.; et al. Measuring What Matters in Trial Operations: Development and Validation of the Clinical Trial Site Performance Measure. J. Clin. Med. 2025, 14, 6839. https://doi.org/10.3390/jcm14196839

APA Style: Bozzetti, M., Lo Cascio, A., Napolitano, D., Orgiana, N., Mora, V., Fiorini, S., Petrucci, G., Resente, F., Baroni, I., Caruso, R., & Guberti, M., on behalf of the Performance Working Group. (2025). Measuring What Matters in Trial Operations: Development and Validation of the Clinical Trial Site Performance Measure. Journal of Clinical Medicine, 14(19), 6839. https://doi.org/10.3390/jcm14196839