Multi-Stage Cascaded Deep Learning-Based Model for Acute Aortic Syndrome Detection: A Multisite Validation Study

Abstract

1. Introduction

2. Materials and Methods

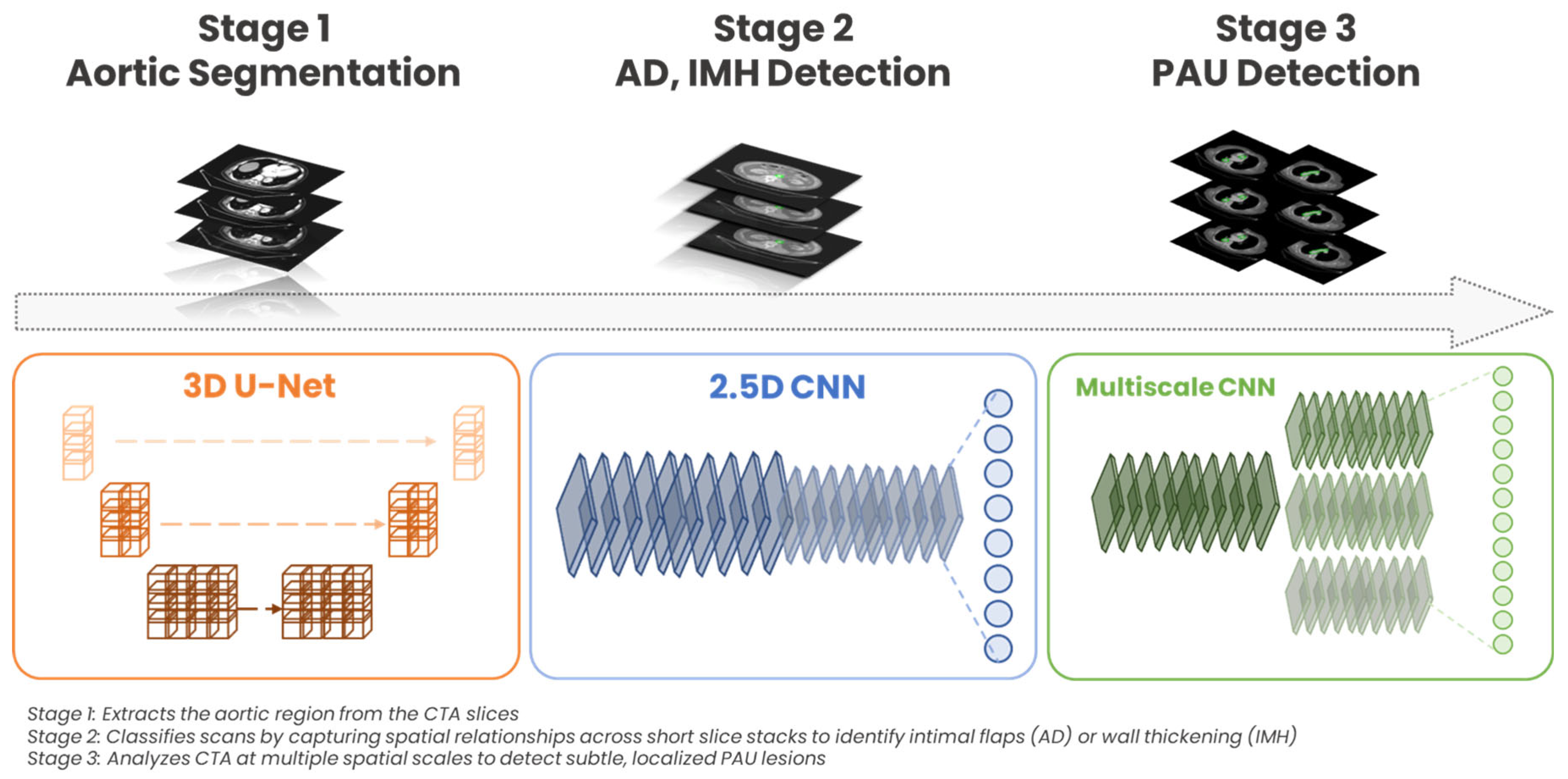

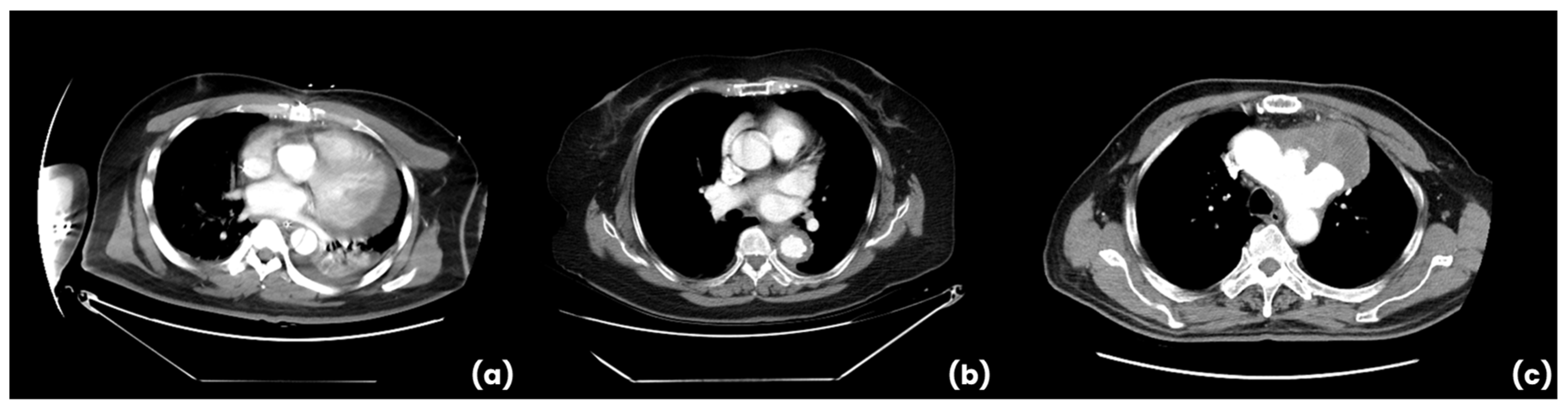

2.1. DL Algorithm: Architecture and Training

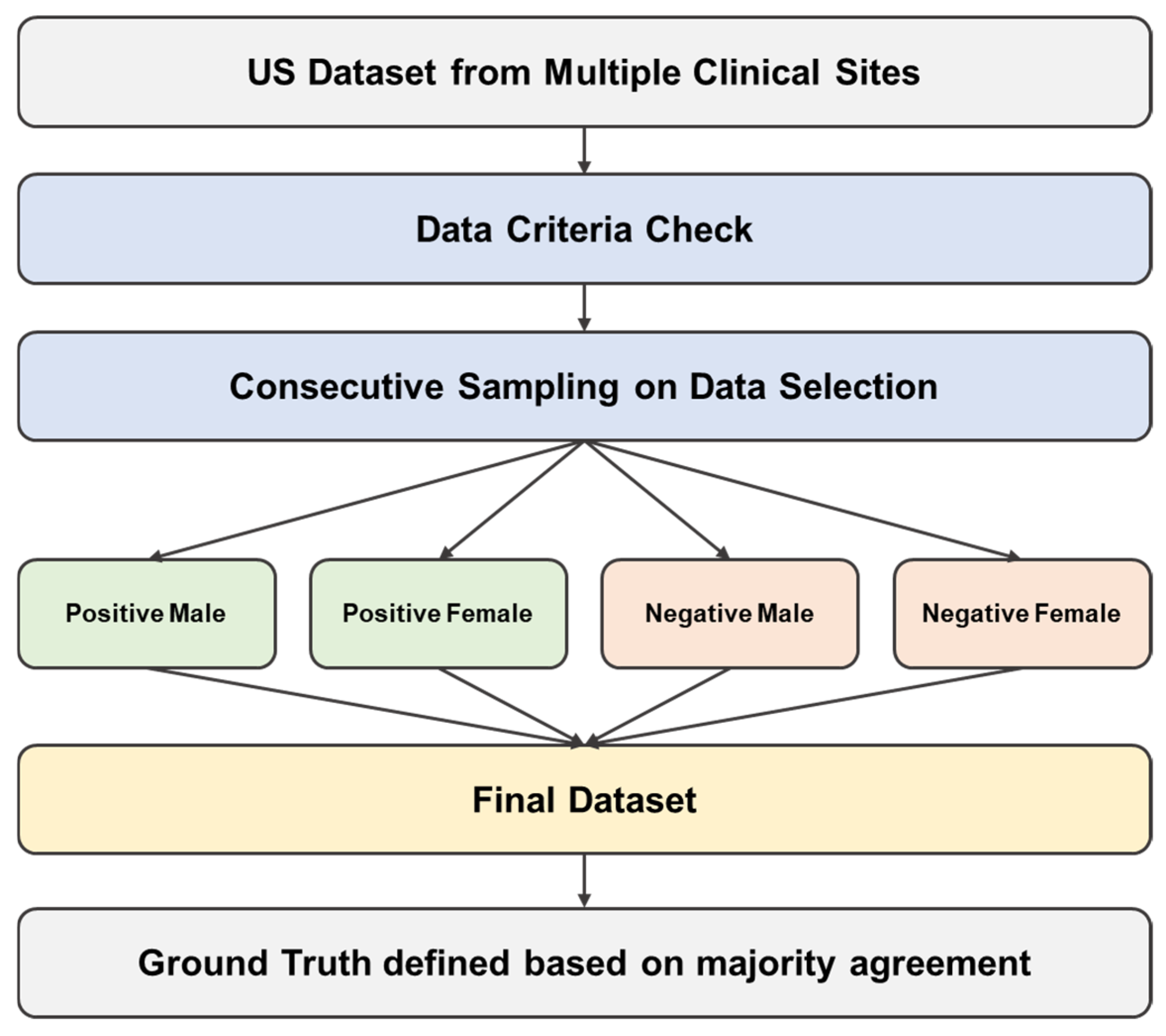

2.2. External Multisite Validation Data Collection

2.3. Ethical Considerations for Data

2.4. Ground Truth Definition

2.5. Statistical Analysis

3. Results

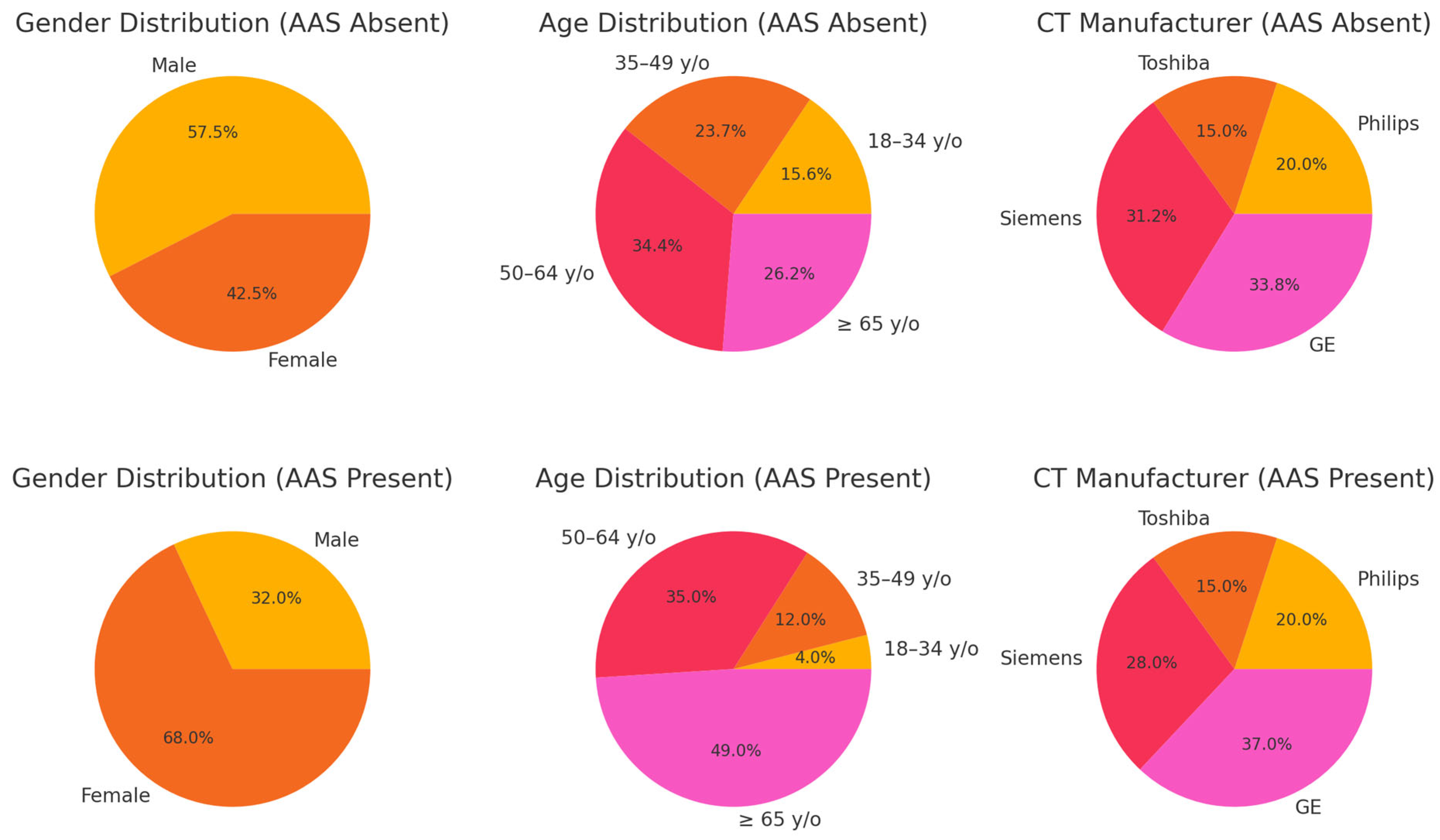

3.1. Patient Characteristics

3.2. Evaluation of AI Performance

3.3. Subgroup Analysis Results

3.4. Comparative Performance with Published AI Studies and FDA-Approved Devices

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AAS | Acute Aortic Syndrome |
| AD | Aortic Dissection |
| IMH | Intramural Hematoma |
| PAU | Penetrating Atherosclerotic Ulcer |
| CNN | Convolutional Neural Network |
| CTA | Computed Tomography Angiography |
| AI | Artificial Intelligence |
| AUC | Area Under the Receiver Operating Characteristic Curve |
| DL | Deep Learning |


| Characteristic | AAS Absent (n = 160) | AAS Present (n = 100) | p-Value |
|---|---|---|---|
| Gender | | | 0.001 |
| Male | 92 | 32 | |
| Female | 68 | 68 | |
| Age | | | <0.0001 |
| 18–34 y/o | 25 | 4 | |
| 35–49 y/o | 38 | 12 | |
| 50–64 y/o | 55 | 35 | |
| ≥65 y/o | 42 | 49 | |
| CT Manufacturer | | | <0.0001 |
| Philips | 32 | 20 | |
| Toshiba | 24 | 15 | |
| Siemens | 50 | 28 | |
| GE | 54 | 37 | |

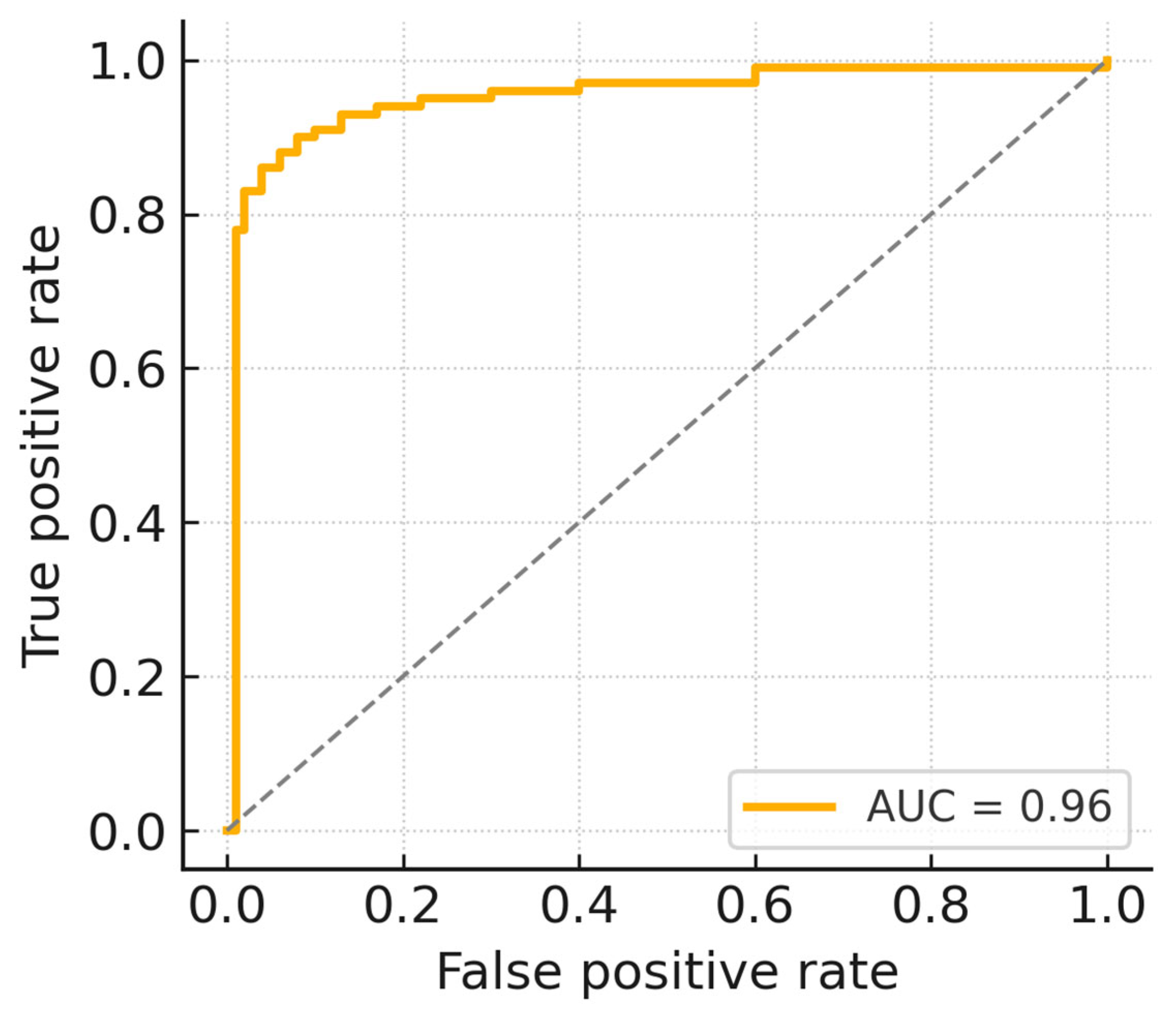

| Metric | Performance | 95% CI |
|---|---|---|
| Sensitivity | 0.94 | 0.88–0.97 |
| Specificity | 0.93 | 0.89–0.97 |
| AUC | 0.96 | 0.94–0.98 |
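The sensitivity and specificity point estimates above are proportions taken from a 2×2 confusion matrix. The following is a minimal sketch of that arithmetic, not the authors' evaluation code. The cell counts are an assumption: they are back-calculated from the reported rates and the cohort sizes (100 AAS-present, 160 AAS-absent) and are not stated in the article. The `ConfusionCounts` name is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Cells of a 2x2 confusion matrix for a binary detector."""
    tp: int  # AAS present, flagged by the model
    fn: int  # AAS present, missed by the model
    tn: int  # AAS absent, not flagged
    fp: int  # AAS absent, flagged

    @property
    def sensitivity(self) -> float:
        # Fraction of AAS-present scans that the model flags.
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        # Fraction of AAS-absent scans that the model leaves unflagged.
        return self.tn / (self.tn + self.fp)


# ASSUMED counts, inferred from the reported rates and cohort sizes only.
overall = ConfusionCounts(tp=94, fn=6, tn=149, fp=11)
print(round(overall.sensitivity, 2), round(overall.specificity, 2))
```

Other count combinations that round to the same two-decimal rates are equally compatible with the table, so these counts should be read as one plausible reconstruction.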

| Characteristic | Sensitivity (Wilson’s CI) | Specificity (Wilson’s CI) | AUC (DeLong’s CI) |
|---|---|---|---|
| Gender | | | |
| Female | 0.94 (0.80–0.98) | 0.93 (0.86–0.97) | 0.95 (0.91–0.98) |
| Male | 0.94 (0.86–0.97) | 0.92 (0.82–0.97) | 0.97 (0.93–0.99) |
| Age | | | |
| 18–34 y/o | 0.75 (0.30–0.95) | 0.92 (0.75–0.98) | 0.93 (0.84–1.00) |
| 35–49 y/o | 0.92 (0.65–0.99) | 0.89 (0.76–0.96) | 0.96 (0.91–0.99) |
| 50–64 y/o | 0.97 (0.85–0.99) | 0.93 (0.83–0.97) | 0.97 (0.94–0.99) |
| ≥65 y/o | 0.94 (0.84–0.98) | 0.92 (0.79–0.97) | 0.96 (0.92–0.99) |
| CT Slice Thickness | | | |
| 0.625–2.0 mm | 0.95 (0.88–0.98) | 0.93 (0.87–0.97) | 0.96 (0.94–0.98) |
| 2.1–3.0 mm | 0.90 (0.72–0.97) | 0.93 (0.80–0.97) | 0.95 (0.90–0.98) |
| CT Manufacturer | | | |
| Philips | 0.95 (0.76–0.99) | 0.94 (0.80–0.98) | 0.96 (0.91–0.99) |
| Toshiba | 0.93 (0.70–0.99) | 0.96 (0.80–0.99) | 0.95 (0.88–0.99) |
| Siemens | 0.93 (0.77–0.98) | 0.94 (0.84–0.98) | 0.97 (0.93–0.99) |
| GE | 0.95 (0.83–0.99) | 0.93 (0.82–0.97) | 0.96 (0.92–0.99) |
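The sensitivity and specificity intervals in this table are labeled as Wilson score intervals. The sketch below shows the standard Wilson formula for a binomial proportion; it is an illustration, not the authors' analysis code. The function name `wilson_interval` is hypothetical, and the counts in the example (3 detected out of 4) are an assumption inferred from the 4 AAS-present patients aged 18–34 in the patient-characteristics table and the reported sensitivity of 0.75 for that group.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, total: int, z: float = 1.959964) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (default z gives 95%)."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= successes <= total:
        raise ValueError("successes must lie between 0 and total")
    p = successes / total
    z2 = z * z
    denom = 1 + z2 / total
    # The interval is centered on a value shrunk toward 0.5, not on p itself.
    center = (p + z2 / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z2 / (4 * total * total)) / denom
    return center - half_width, center + half_width


# ASSUMED counts: 3 of 4 AAS-present patients aged 18-34 detected.
lo, hi = wilson_interval(3, 4)
print(f"{lo:.2f}, {hi:.2f}")  # 0.30, 0.95
```

Under that assumption the result agrees with the reported interval of 0.30 to 0.95 for the 18–34 age group, and the width of the interval reflects how little a four-patient subgroup can say about sensitivity. The Wilson form is a common choice here because, unlike the simple normal approximation, it stays within [0, 1] for small samples and proportions near 1.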

| AAS Subtype / Anatomical Location | Sensitivity (Wilson’s CI) |
|---|---|
| Type | |
| AD | 0.96 (0.89–0.99) |
| IMH | 0.92 (0.73–0.98) |
| PAU | 0.83 (0.59–0.94) |
| Stanford Classification | |
| Type A | 0.93 (0.83–0.97) |
| Type B | 0.93 (0.81–0.97) |
| Location | |
| Ascending aorta | 0.92 (0.80–0.97) |
| Descending aorta | 0.95 (0.83–0.99) |
| Aortic arch | 0.92 (0.73–0.98) |
| Suprarenal abdominal aorta | 0.89 (0.55–0.98) |
| Infrarenal abdominal aorta | 1.00 (0.52–1.00) |

| Device | Sensitivity | Specificity | AUC |
|---|---|---|---|
| Aidoc BriefCase (FDA cleared) | 0.93 | 0.92 | - |
| Avicenna CINA (FDA cleared) | 0.96 | 0.97 | - |
| Viz.ai Aortic (FDA cleared) | 0.94 | 0.97 | - |
| Ours (AD only) | 0.96 | 0.93 | 0.96 |
| Ours (AD + IMH + PAU) | 0.94 | 0.93 | 0.96 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chang, J.; Lee, K.-J.; Wang, T.-H.; Chen, C.-M. Multi-Stage Cascaded Deep Learning-Based Model for Acute Aortic Syndrome Detection: A Multisite Validation Study. J. Clin. Med. 2025, 14, 4797. https://doi.org/10.3390/jcm14134797
