Augmented Reality Integration in Surgery for Craniosynostoses: Advancing Precision in the Management of Craniofacial Deformities

Abstract

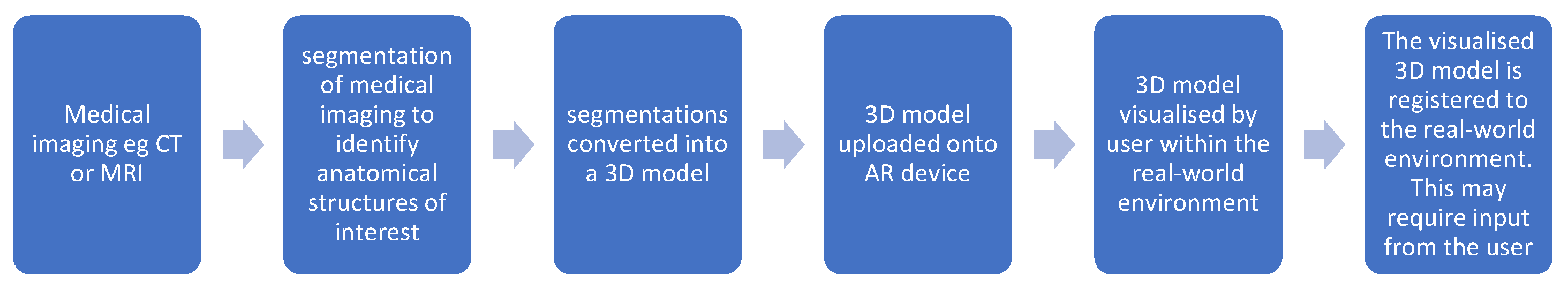

1. Introduction

2. Materials and Methods

3. Clinical Applications in Craniofacial Deformity Surgery

3.1. Preoperative Planning and Simulation

3.2. Intraoperative Navigation

3.3. Education and Telemedicine

3.4. Other Maxillofacial Uses

3.5. Challenges and Limitations

3.6. Future Directions

Advancements in Eyewear and LiDAR

4. Conclusions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AR | augmented reality |
| VSP | virtual surgical planning |
| VOSTARS | Video and Optical See-Through Augmented Reality Surgical System |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sharma, D.; Holden, A.M.; Nezamivand-Chegini, S. Augmented Reality Integration in Surgery for Craniosynostoses: Advancing Precision in the Management of Craniofacial Deformities. J. Clin. Med. 2025, 14, 4359. https://doi.org/10.3390/jcm14124359
