The Misdiagnosis Tracker: Enhancing Diagnostic Reasoning Through Cognitive Bias Awareness and Error Analysis

Abstract

1. Introduction

2. Methods

2.1. Study Design

2.2. Intervention Phases

2.3. Setting

2.4. Participants

2.5. Variables

2.6. Data Sources and Measurement

2.7. Bias Control

2.8. Study Size

2.9. Quantitative Variables

2.10. Statistical Methods

2.11. Ethical Considerations

3. Results

3.1. Participants

3.2. Descriptive Data

3.3. Outcome Data

3.4. Main Results

3.5. Other Analyses

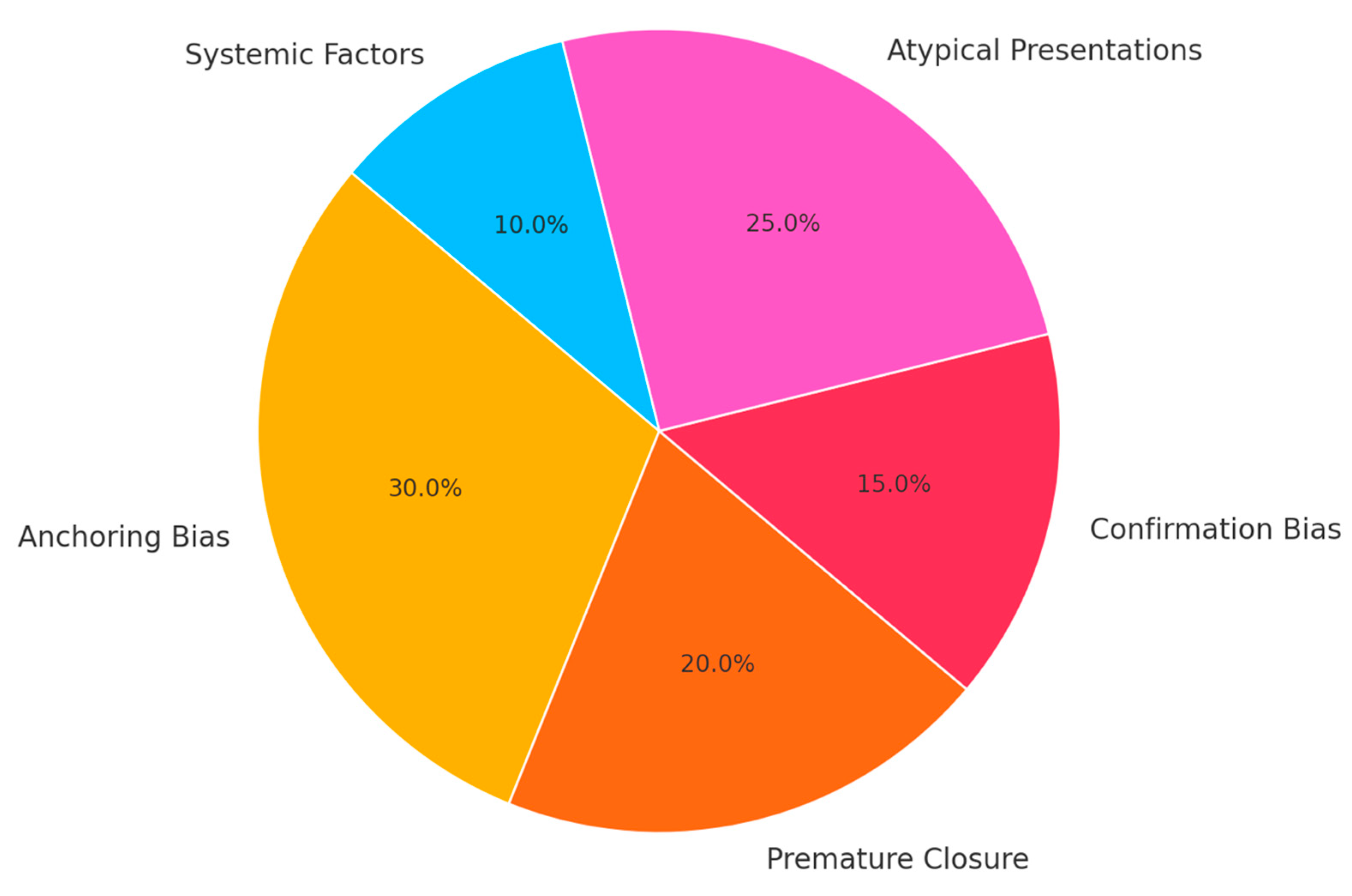

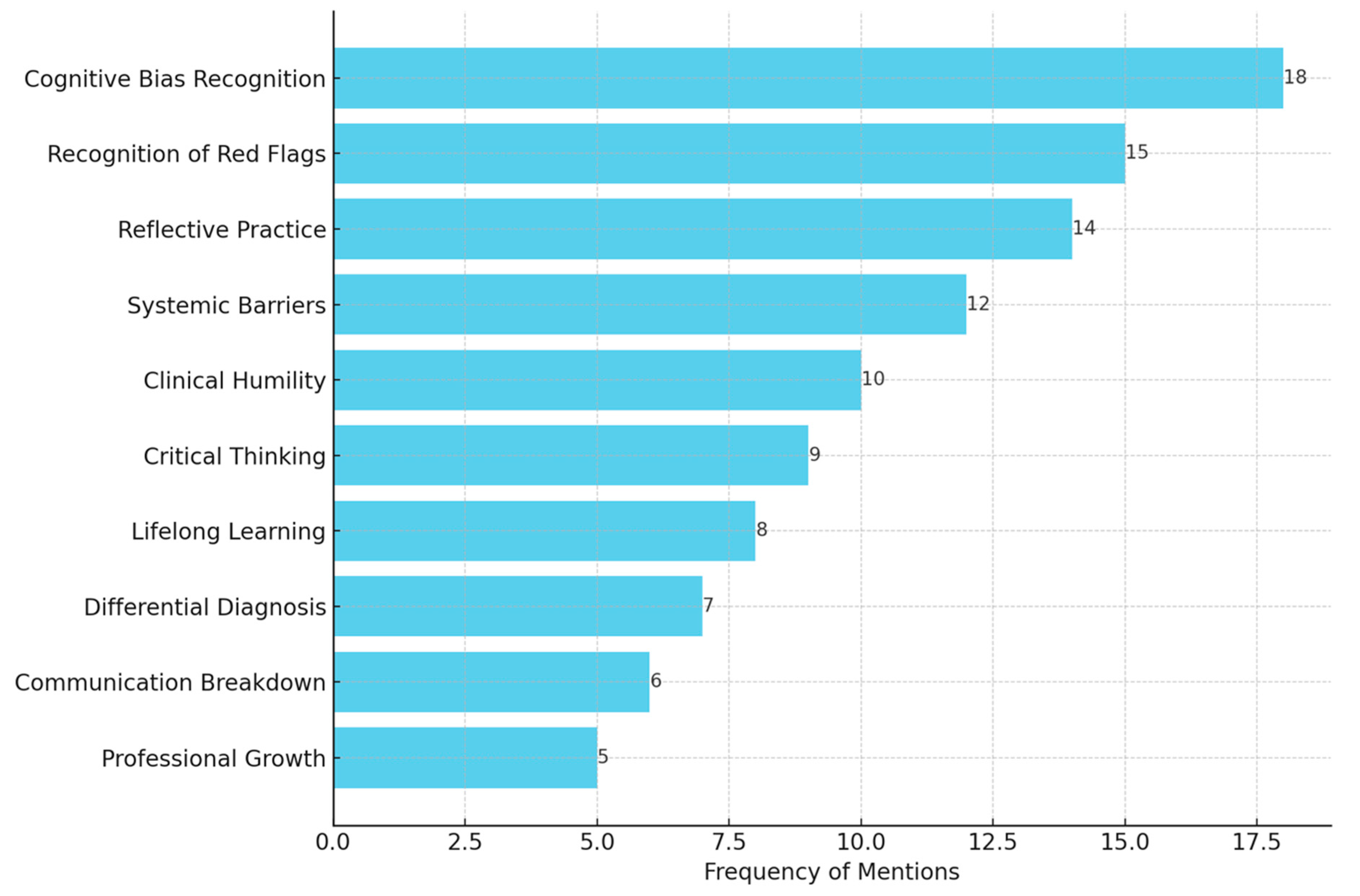

3.6. Common Patterns and Proposed Solutions

4. Discussion

4.1. Key Results

4.2. Interpretation

4.3. Limitations

4.4. Generalisability

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Pre-Study Survey

| Statement |
|---|
| I feel confident in my ability to make accurate clinical diagnoses. |
| I understand the concept of cognitive biases and their impact on diagnostic accuracy. |
| I am familiar with red flags and atypical presentations of common conditions. |
| I feel confident distinguishing between disease progression and adverse drug effects. |
| I feel prepared to analyse diagnostic errors to improve my clinical reasoning skills. |

1. What do you hope to gain from this study on diagnostic reasoning?
2. What do you find most challenging about recognising and addressing diagnostic errors?
3. How do you currently address uncertainty in your diagnostic process?

Appendix A.2. Post-Study Survey

| Statement |
|---|
| I feel more confident in my ability to make accurate clinical diagnoses. |
| I can identify cognitive biases and their impact on diagnostic decision-making. |
| I am better able to recognise red flags and atypical presentations of conditions. |
| I feel more prepared to consider diagnostic errors in my clinical reasoning process. |
| This study has improved my ability to reflect on and learn from diagnostic errors. |

1. What was the most valuable insight you gained from analysing diagnostic errors?
2. How has this study changed your approach to diagnostic reasoning?
3. What strategies will you use to minimise diagnostic errors in your future practice?

Appendix A.3. Reflective Journal Prompts

1. Describe a specific case study that challenged your diagnostic reasoning. What did you learn from it?
2. Reflect on how cognitive biases influenced the diagnostic errors in the cases you analysed.
3. What strategies did you find most effective in identifying and addressing diagnostic errors?
4. How has this program influenced your understanding of atypical presentations and diagnostic red flags?
5. What changes do you plan to make in your clinical practice to minimise diagnostic errors?

Appendix A.4. Group Analysis Report Template

1. Summarise the key diagnostic error(s) identified in the case.
2. Discuss the cognitive bias(es) involved and how they influenced the outcome.
3. Propose specific strategies to prevent similar errors in future clinical practice.
4. Reflect on the group’s learning process: What insights did you gain from working collaboratively?


| Phase | Key Activities | Objectives | Outcomes Measured |
|---|---|---|---|
| Phase 1: Preparation | | | |
| Phase 2: Case study analysis | | | |
| Phase 3: Reflection and evaluation | | | |

| Case | Diagnosis | Misdiagnosis | Bias Identified | Key Learning Point |
|---|---|---|---|---|
| 1 | Acute myocardial infarction | Gastritis | Anchoring | Consider cardiac causes in epigastric pain. |
| 2 | Stroke | Vertigo | Availability | Look for red flags in isolated dizziness. |
| 3 | Sepsis | Viral infection | Premature closure | Do not close the diagnosis early. |
| 4 | Pulmonary embolism (PE) | Anxiety | Attribution | Consider PE in anxious patients with tachycardia. |
| 5 | Appendicitis | Gastroenteritis | Confirmation | Recognise atypical signs of appendicitis. |
| 6 | Ectopic pregnancy | Urinary tract infection | Representativeness | Test for pregnancy in women with abdominal pain. |
| 7 | Meningitis | Migraine | Anchoring | Consider lumbar puncture in suspected meningitis. |
| 8 | Lung cancer | Pneumonia | Overconfidence | Follow up unresolved symptoms. |
| 9 | Diabetic ketoacidosis | Hyperventilation | Attribution | Check for metabolic causes in tachypnoea. |
| 10 | Subarachnoid haemorrhage | Tension headache | Failure to escalate | Image sudden severe headaches. |
| 11 | Ovarian torsion | Dysmenorrhea | Misattribution | Consider torsion in pelvic pain. |
| 12 | Celiac disease | Irritable bowel syndrome | Overgeneralisation | Screen for celiac disease in persistent gastrointestinal (GI) symptoms. |
| 13 | Renal colic | Musculoskeletal back pain | Context | Consider imaging for renal causes in young adults. |
| 14 | Aortic dissection | Acute coronary syndrome | Overlap | Look for signs of dissection in chest pain. |
| 15 | Tuberculosis | Pneumonia | Failure to consider endemic causes | Consider endemic causes in immigrants. |
| 16 | Endocarditis | Viral infection | Anchoring | Consider echocardiography in unexplained fevers. |
| 17 | Multiple sclerosis | Anxiety | Attribution | Consider magnetic resonance imaging for unexplained neurological signs. |
| 18 | Gallbladder perforation | Gastritis | Anchoring | Treat abdominal emergencies with urgency. |
| 19 | Hypercalcemia | Osteoporosis | Representativeness | Run a metabolic screen for chronic symptoms. |
| 20 | Pericarditis | Myocarditis | Overlap | Pursue cardiac testing in chest pain. |
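The "Bias Identified" column of the case table can be tallied to see which biases recur across the 20 cases. The following is a minimal sketch, not part of the study's analysis: the labels are copied verbatim from the table, while the names `CASE_BIASES` and `bias_counts` are hypothetical and introduced only for illustration.

```python
from collections import Counter

# Bias label per case number, copied from the "Bias Identified" column.
# CASE_BIASES and bias_counts are illustrative names, not from the study.
CASE_BIASES = {
    1: "Anchoring",
    2: "Availability",
    3: "Premature closure",
    4: "Attribution",
    5: "Confirmation",
    6: "Representativeness",
    7: "Anchoring",
    8: "Overconfidence",
    9: "Attribution",
    10: "Failure to escalate",
    11: "Misattribution",
    12: "Overgeneralisation",
    13: "Context",
    14: "Overlap",
    15: "Failure to consider endemic causes",
    16: "Anchoring",
    17: "Attribution",
    18: "Anchoring",
    19: "Representativeness",
    20: "Overlap",
}

# Count how many cases carry each label.
bias_counts = Counter(CASE_BIASES.values())

# List the labels from most to least frequent.
for bias, n_cases in bias_counts.most_common():
    print(f"{bias}: {n_cases}")
```

Under this tally, anchoring is the most frequent label (4 of 20 cases), followed by attribution (3), with representativeness and overlap at 2 each. The tally treats each label as a distinct category exactly as written in the table, so closely related labels such as "Attribution" and "Misattribution" are counted separately.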

| Metric | Pre-Study Score (Mean ± SD) | Post-Study Score (Mean ± SD) | Change | Cohen’s d | Key Observations |
|---|---|---|---|---|---|
| Confidence in identifying cognitive biases | 2.9 ± 0.8 | 4.5 ± 0.5 | +1.6 points (p < 0.01) | 2.4 | Students demonstrated a marked improvement in recognising and understanding biases like anchoring, availability, and confirmation bias. |
| Understanding of atypical presentations | 3.1 ± 0.7 | 4.6 ± 0.4 | +1.5 points (p < 0.01) | 2.63 | Increased awareness of non-classical symptoms in conditions like myocardial infarction, stroke, and autoimmune diseases. |
| Awareness of systemic factors | 3.2 ± 0.6 | 4.7 ± 0.5 | +1.5 points (p < 0.01) | 2.72 | Students better recognised the role of communication breakdowns, time constraints, and inadequate follow-ups in diagnostic errors. |
| Confidence in recognising red flags | 2.8 ± 0.9 | 4.4 ± 0.5 | +1.6 points (p < 0.01) | 2.2 | Improved ability to identify critical warning signs that may indicate serious conditions. |
| Overall diagnostic reasoning confidence | 3.0 ± 0.9 | 4.4 ± 0.5 | +1.4 points (p < 0.01) | 1.92 | Students reported increased confidence in approaching complex cases and navigating uncertainty in clinical scenarios. |
| Students confident in managing diagnostic errors (%) | 40% | 89% | +49 percentage points | - | The percentage of students feeling equipped to manage diagnostic challenges more than doubled. |
| Recognition of the importance of learning from errors (%) | 65% | 97% | +32 percentage points | - | Students reported a stronger appreciation for analysing diagnostic errors as a tool for professional growth. |
| Students who recognised patterns of diagnostic errors (%) | 48% | 93% | +45 percentage points | - | The ability to systematically identify patterns, such as cognitive biases and systemic factors, improved markedly. |
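The Cohen's d values in the table above can be checked from the reported means and standard deviations alone. The sketch below is an illustration, not the authors' analysis code. It assumes that d is the mean change divided by a pooled SD taken as the root mean square of the pre-study and post-study SDs. The table does not state which formula was used, but this one reproduces all five reported values to rounding precision. The names `cohens_d` and `ROWS` are hypothetical.

```python
from math import sqrt


def cohens_d(mean_pre, sd_pre, mean_post, sd_post):
    """Cohen's d with the pooled SD taken as the root mean square of both SDs.

    Assumption: the source does not state its formula; this variant is used
    because it matches the reported values.
    """
    pooled_sd = sqrt((sd_pre ** 2 + sd_post ** 2) / 2)
    return (mean_post - mean_pre) / pooled_sd


# (metric, pre mean, pre SD, post mean, post SD, reported d), copied from the table.
ROWS = [
    ("Confidence in identifying cognitive biases", 2.9, 0.8, 4.5, 0.5, 2.4),
    ("Understanding of atypical presentations", 3.1, 0.7, 4.6, 0.4, 2.63),
    ("Awareness of systemic factors", 3.2, 0.6, 4.7, 0.5, 2.72),
    ("Confidence in recognising red flags", 2.8, 0.9, 4.4, 0.5, 2.2),
    ("Overall diagnostic reasoning confidence", 3.0, 0.9, 4.4, 0.5, 1.92),
]

# Print the recomputed effect size next to the reported one for each metric.
for metric, m_pre, s_pre, m_post, s_post, reported in ROWS:
    d = cohens_d(m_pre, s_pre, m_post, s_post)
    print(f"{metric}: computed d = {d:.2f}, reported d = {reported}")
```

Because the same students completed both surveys, a paired-design effect size (based on the SD of individual change scores) would also be defensible, but it cannot be computed from summary statistics without the pre-post correlation, which the table does not report.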

| Theme | Description | Illustrative Student Quotes | Key Implications |
|---|---|---|---|
| Cognitive bias awareness | Students identified biases, such as anchoring, premature closure, and availability, as key contributors to misdiagnoses. | “I realised how often I rely on initial impressions and fail to reconsider the diagnosis when new information arises.” | Emphasises the need for deliberate re-evaluation of differential diagnoses throughout the diagnostic process. |
| Recognition of atypical presentations | Participants acknowledged the impact of non-classical symptoms in obscuring diagnoses and the importance of maintaining a broad differential. | “The patient with GI symptoms and a heart attack completely changed how I think about chest pain presentations.” | Highlights the necessity of training students to recognise red flags even when symptoms deviate from standard patterns. |
| Systemic barriers and oversight | Students identified systemic issues, such as inadequate follow-ups, time constraints, and poor communication between healthcare providers. | “The missed test results in the cancer case really showed how systemic gaps can have devastating consequences.” | Stresses the importance of addressing organisational inefficiencies to improve diagnostic outcomes. |
| Red flags and missed opportunities | Students reported a deeper understanding of the importance of red flags and how their absence or dismissal contributed to misdiagnoses. | “I didn’t realise how often subtle signs are overlooked because they don’t fit the expected pattern.” | Reinforces the need for structured checklists and protocols to ensure red flags are not missed. |
| Emotional and reflective growth | Participants reflected on how the exercise fostered humility, resilience, and a greater awareness of their cognitive tendencies. | “This made me more comfortable with the idea that I will make mistakes, but I can learn from them to improve patient care.” | Encourages medical education programs to normalise discussions of errors as part of professional growth and continuous improvement. |
| Strategies for improvement | Students proposed actionable solutions, such as incorporating regular case reviews, enhancing communication systems, and using diagnostic decision aids. | “Using structured frameworks for case reviews could make a huge difference in preventing similar mistakes.” | Suggests that healthcare systems should adopt targeted interventions to support clinicians in reducing diagnostic errors. |
| Importance of lifelong learning | Students acknowledged the role of ongoing education and reflection in maintaining diagnostic proficiency and adapting to evolving challenges. | “This exercise taught me that learning doesn’t stop after med school; every mistake is a chance to grow.” | Underscores the value of embedding reflective practices and error analysis into continuous professional development initiatives. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mutlak, Z.; Saqer, N.; Chan, S.C.C.; Majeed, A.; Jerjes, W. The Misdiagnosis Tracker: Enhancing Diagnostic Reasoning Through Cognitive Bias Awareness and Error Analysis. J. Clin. Med. 2025, 14, 4139. https://doi.org/10.3390/jcm14124139
