Machine Learning and Artificial Intelligence in Intensive Care Medicine: Critical Recalibrations from Rule-Based Systems to Frontier Models

Abstract

1. Introduction

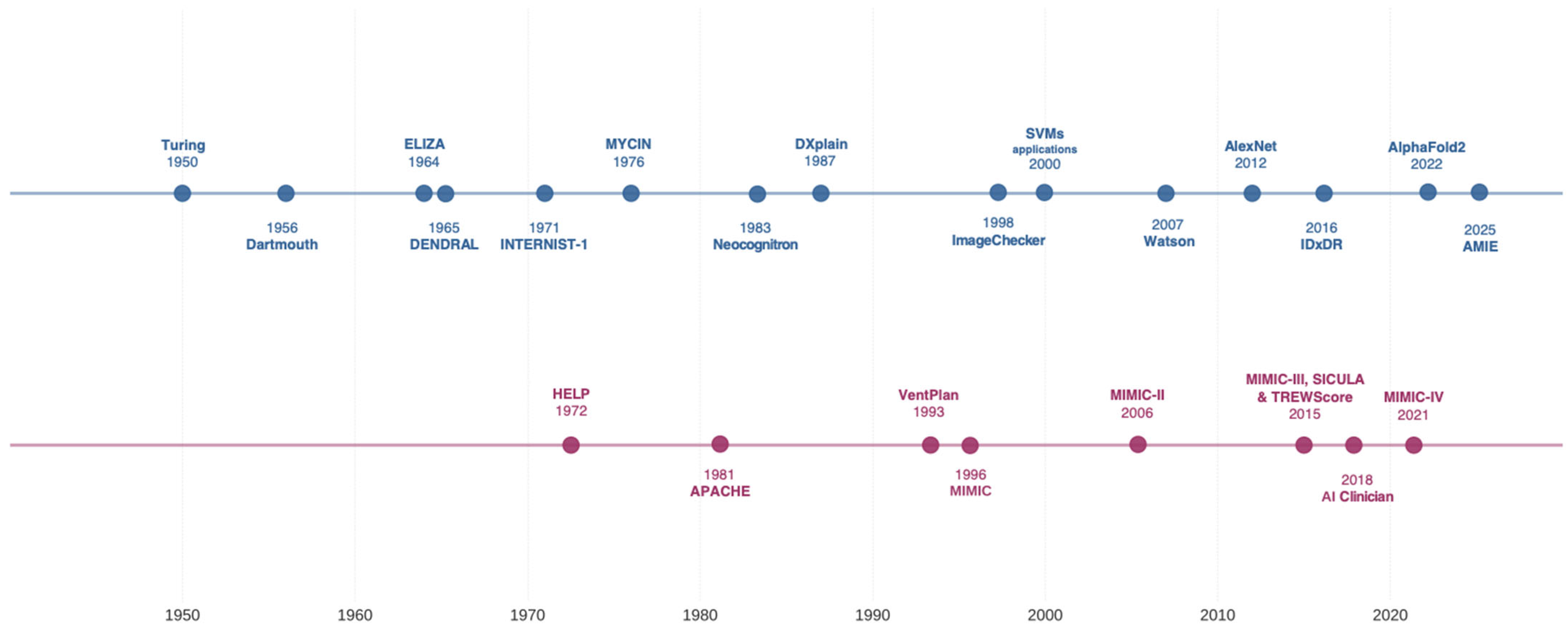

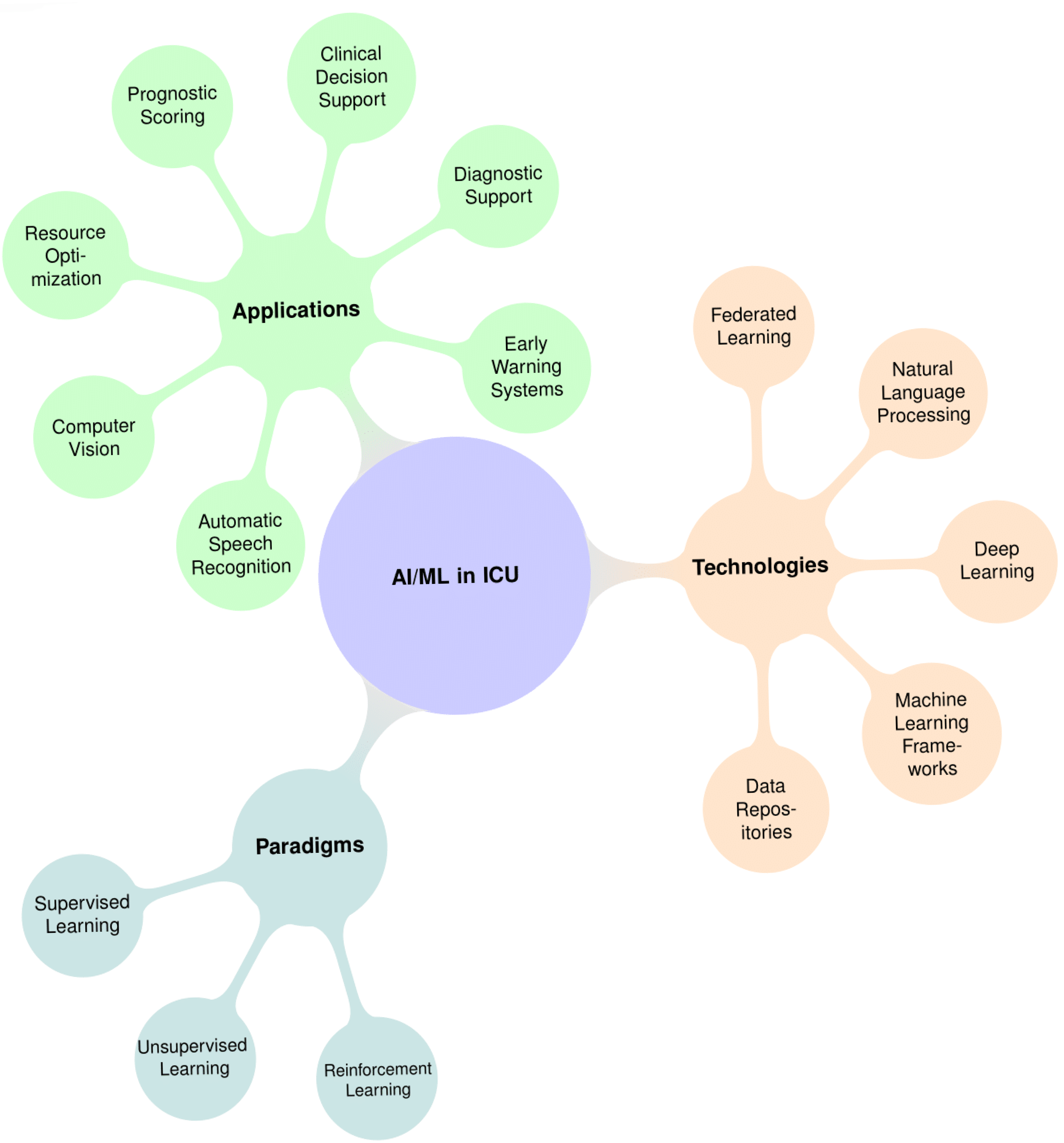

2. Historical Evolution of AI in Critical Care

3. AI/ML Paradigms and Technologies

3.1. Supervised Learning

3.2. Unsupervised Learning

- Clustering algorithms (e.g., k-means, hierarchical clustering): These group patients with similar clinical profiles or characteristics.

- Dimensionality reduction techniques (e.g., principal component analysis (PCA) or deep autoencoders): These compress high-dimensional data (such as hundreds of lab values and vital signs) into a smaller set of informative features, reducing complexity while preserving important information.

- Sepsis Phenotypes: Clustering analyses of sepsis patient data have identified four distinct, previously unrecognized subgroups (the α, β, γ, and δ phenotypes) that differ significantly in clinical characteristics and outcomes [29].

- ARDS Subphenotypes: Unsupervised analyses have identified at least two consistent subphenotypes, often described informally as “hyperinflammatory” and “hypoinflammatory”. Each subgroup has a distinct biomarker pattern, severity of illness, and response to treatment [38].
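The two technique families above can be combined in a short pipeline: standardize the variables, compress them with PCA, then cluster patients in the reduced space. The following is a minimal NumPy sketch on synthetic data, not the method of the cited studies (the ARDS analysis in [38], for instance, used latent class analysis). The function names, the 20 simulated variables, and the two planted subgroups are all illustrative assumptions.

```python
import numpy as np


def standardize(X):
    """Z-score each column so variables on large scales do not dominate."""
    sigma = X.std(axis=0)
    sigma[sigma == 0] = 1.0  # leave constant columns unchanged
    return (X - X.mean(axis=0)) / sigma


def pca(X, n_components):
    """Project data onto its top principal components using the SVD."""
    Xc = X - X.mean(axis=0)
    _, s, vt = np.linalg.svd(Xc, full_matrices=False)
    explained = s**2 / np.sum(s**2)  # fraction of variance per component
    return Xc @ vt[:n_components].T, explained[:n_components]


def assign(X, centroids):
    """Index of the nearest centroid for every row of X."""
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)


def kmeans(X, k, n_init=10, n_iter=100, seed=0):
    """Lloyd's algorithm with random restarts; keeps the lowest-inertia run."""
    rng = np.random.default_rng(seed)
    best = None
    for _restart in range(n_init):
        centroids = X[rng.choice(len(X), size=k, replace=False)]
        for _step in range(n_iter):
            labels = assign(X, centroids)
            updated = np.array([
                X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
                for j in range(k)
            ])
            if np.allclose(updated, centroids):
                break
            centroids = updated
        labels = assign(X, centroids)
        inertia = float(np.sum((X - centroids[labels]) ** 2))
        if best is None or inertia < best[0]:
            best = (inertia, labels, centroids)
    return best[1], best[2]


def run_demo(seed=42):
    """Synthetic cohort: 200 'patients', 20 variables, two planted subgroups."""
    rng = np.random.default_rng(seed)
    X = rng.normal(0.0, 1.0, size=(200, 20))
    true_groups = np.repeat([0, 1], 100)
    X[true_groups == 1, :5] += 4.0  # shift five hypothetical markers in group 1
    Z, explained = pca(standardize(X), n_components=2)
    labels, _ = kmeans(Z, k=2)
    # Cluster ids are arbitrary, so score agreement under either labeling.
    match = np.mean(labels == true_groups)
    agreement = max(match, 1.0 - match)
    return labels, explained, agreement


labels, explained, agreement = run_demo()
print(f"variance explained by 2 components: {explained.sum():.2f}")
print(f"agreement with planted subgroups: {agreement:.2f}")
```

On real ICU data the number of clusters is not known in advance, so cluster stability and clinical plausibility would need to be checked before any data-driven subgroup is interpreted as a phenotype.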

3.3. Reinforcement Learning

3.4. Data Infrastructure and Computational Frameworks

4. AI Applications in ICUs: Implementation and Challenges

4.1. Early Warning Systems

4.2. Sepsis Care

4.3. Ventilation Management

4.4. Diagnostic Support

4.5. Documentation and Workflow

4.6. Clinical Decision Support

4.7. Operational Efficiency

4.8. Frontier Models

4.9. Implementation Challenges

5. Future Directions

5.1. Prospective, Real-World Validation

- Determining appropriate control conditions (e.g., standard care versus non-AI decision support).

- Handling continuous model updates during trial periods.

- Measuring both direct outcomes and secondary effects on workflow and team dynamics.

- Evaluating cost-effectiveness and resource utilization.

5.2. Integrated Multimodal Platforms

5.3. Human–AI Co-Intelligence

5.4. AI Literacy

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kramer, A.A.; Zimmerman, J.E.; Knaus, W.A. Severity of Illness and Predictive Models in Society of Critical Care Medicine’s First 50 Years: A Tale of Concord and Conflict. Crit. Care Med. 2021, 49, 728–740.

- Vincent, J.-L.; Creteur, J. Paradigm Shifts in Critical Care Medicine: The Progress We Have Made. Crit. Care 2015, 19, S10.

- Shortliffe, E.H.; Sepúlveda, M.J. Clinical Decision Support in the Era of Artificial Intelligence. JAMA 2018, 320, 2199.

- Yu, K.-H.; Beam, A.L.; Kohane, I.S. Artificial Intelligence in Healthcare. Nat. Biomed. Eng. 2018, 2, 719–731.

- Gutierrez, G. Artificial Intelligence in the Intensive Care Unit. Crit. Care 2020, 24, 101.

- Keegan, M.T.; Gajic, O.; Afessa, B. Severity of Illness Scoring Systems in the Intensive Care Unit. Crit. Care Med. 2011, 39, 163–169.

- Sekulic, A.D.; Trpkovic, S.V.; Pavlovic, A.P.; Marinkovic, O.M.; Ilic, A.N. Scoring Systems in Assessing Survival of Critically Ill ICU Patients. Med. Sci. Monit. 2015, 21, 2621–2629.

- Lekadir, K.; Feragen, A.; Fofanah, A.J.; Frangi, A.F.; Buyx, A.; Emelie, A.; Lara, A.; Porras, A.R.; Chan, A.-W.; Navarro, A.; et al. FUTURE-AI: International Consensus Guideline for Trustworthy and Deployable Artificial Intelligence in Healthcare. BMJ 2025, 388, e081554.

- Michard, F.; Mulder, M.P.; Gonzalez, F.; Sanfilippo, F. AI for the Hemodynamic Assessment of Critically Ill and Surgical Patients: Focus on Clinical Applications. Ann. Intensive Care 2025, 15, 26.

- Shortliffe, E.H.; Davis, R.; Axline, S.G.; Buchanan, B.G.; Green, C.C.; Cohen, S.N. Computer-Based Consultations in Clinical Therapeutics: Explanation and Rule Acquisition Capabilities of the MYCIN System. Comput. Biomed. Res. 1975, 8, 303–320.

- Miller, R.A. Medical Diagnostic Decision Support Systems--Past, Present, And Future: A Threaded Bibliography and Brief Commentary. J. Am. Med. Inform. Assoc. 1994, 1, 8–27.

- Alabdulkarim, A.; Al-Rodhaan, M.; Tian, Y. Privacy-Preserving Healthcare System for Clinical Decision-Support and Emergency Call Systems. Commun. Netw. 2017, 9, 249–274.

- Durand, C.; Alfandari, S.; Béraud, G.; Tsopra, R.; Lescure, F.-X.; Peiffer-Smadja, N. Clinical Decision Support Systems for Antibiotic Prescribing: An Inventory of Current French Language Tools. Antibiotics 2022, 11, 384.

- Adams, I.D.; Chan, M.; Clifford, P.C.; Cooke, W.M.; Dallos, V.; de Dombal, F.T.; Edwards, M.H.; Hancock, D.M.; Hewett, D.J.; McIntyre, N. Computer Aided Diagnosis of Acute Abdominal Pain: A Multicentre Study. Br. Med. J. Clin. Res. Ed. 1986, 293, 800–804.

- Crichton, N.J.; Fryer, J.G.; Spicer, C.C. Some Points on the Use of ‘Independent Bayes’ to Diagnose Acute Abdominal Pain. Stat. Med. 1987, 6, 945–959.

- Miller, R.A.; Pople, H.E.; Myers, J.D. Internist-I, an Experimental Computer-Based Diagnostic Consultant for General Internal Medicine. N. Engl. J. Med. 1982, 307, 468–476.

- Shwe, M.A.; Middleton, B.; Heckerman, D.E.; Henrion, M.; Horvitz, E.J.; Lehmann, H.P.; Cooper, G.F. Probabilistic Diagnosis Using a Reformulation of the INTERNIST-1/QMR Knowledge Base. I. The Probabilistic Model and Inference Algorithms. Methods Inf. Med. 1991, 30, 241–255.

- Mumtaz, H.; Ejaz, M.K.; Tayyab, M.; Vohra, L.I.; Sapkota, S.; Hasan, M.; Saqib, M. APACHE Scoring as an Indicator of Mortality Rate in ICU Patients: A Cohort Study. Ann. Med. Surg. 2023, 85, 416–421.

- Samwald, M.; Fehre, K.; de Bruin, J.; Adlassnig, K.-P. The Arden Syntax Standard for Clinical Decision Support: Experiences and Directions. J. Biomed. Inform. 2012, 45, 711–718.

- Miller, R.A. A History of the INTERNIST-1 and Quick Medical Reference (QMR) Computer-Assisted Diagnosis Projects, with Lessons Learned. Yearb. Med. Inform. 2010, 19, 121–136.

- Barnett, G.O.; Cimino, J.J.; Hupp, J.A.; Hoffer, E.P. DXplain. An Evolving Diagnostic Decision-Support System. JAMA 1987, 258, 67–74.

- Subbe, C.P.; Kruger, M.; Rutherford, P.; Gemmel, L. Validation of a Modified Early Warning Score in Medical Admissions. QJM Int. J. Med. 2001, 94, 521–526.

- Lee, J.; Scott, D.J.; Villarroel, M.; Clifford, G.D.; Saeed, M.; Mark, R.G. Open-Access MIMIC-II Database for Intensive Care Research. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2011, 2011, 8315–8318.

- Pirracchio, R.; Petersen, M.L.; Carone, M.; Rigon, M.R.; Chevret, S.; van der Laan, M.J. Mortality Prediction in Intensive Care Units with the Super ICU Learner Algorithm (SICULA): A Population-Based Study. Lancet Respir. Med. 2015, 3, 42–52.

- Mayampurath, A.; Hagopian, R.; Venable, L.; Carey, K.; Edelson, D.; Churpek, M. Comparison of Machine Learning Methods for Predicting Outcomes after In-Hospital Cardiac Arrest. Crit. Care Med. 2022, 50, e162–e172.

- Miotto, R.; Li, L.; Kidd, B.A.; Dudley, J.T. Deep Patient: An Unsupervised Representation to Predict the Future of Patients from the Electronic Health Records. Sci. Rep. 2016, 6, 26094.

- Komorowski, M.; Celi, L.A.; Badawi, O.; Gordon, A.C.; Faisal, A.A. The Artificial Intelligence Clinician Learns Optimal Treatment Strategies for Sepsis in Intensive Care. Nat. Med. 2018, 24, 1716–1720.

- Komorowski, M.; Celi, L.A.; Badawi, O.; Gordon, A.C.; Faisal, A.A. Understanding the Artificial Intelligence Clinician and Optimal Treatment Strategies for Sepsis in Intensive Care. arXiv 2019, arXiv:1903.02345.

- Seymour, C.W.; Kennedy, J.N.; Wang, S.; Chang, C.-C.H.; Elliott, C.F.; Xu, Z.; Berry, S.; Clermont, G.; Cooper, G.; Gomez, H.; et al. Derivation, Validation, and Potential Treatment Implications of Novel Clinical Phenotypes for Sepsis. JAMA 2019, 321, 2003.

- Ibrahim, Z.M.; Wu, H.; Hamoud, A.; Stappen, L.; Dobson, R.J.B.; Agarossi, A. On Classifying Sepsis Heterogeneity in the ICU: Insight Using Machine Learning. J. Am. Med. Inform. Assoc. 2020, 27, 437–443.

- Hyland, S.L.; Faltys, M.; Hüser, M.; Lyu, X.; Gumbsch, T.; Esteban, C.; Bock, C.; Horn, M.; Moor, M.; Rieck, B.; et al. Early Prediction of Circulatory Failure in the Intensive Care Unit Using Machine Learning. Nat. Med. 2020, 26, 364–373.

- Adams, R.; Henry, K.E.; Sridharan, A.; Soleimani, H.; Zhan, A.; Rawat, N.; Johnson, L.; Hager, D.N.; Cosgrove, S.E.; Markowski, A.; et al. Prospective, Multi-Site Study of Patient Outcomes after Implementation of the TREWS Machine Learning-Based Early Warning System for Sepsis. Nat. Med. 2022, 28, 1455–1460.

- Singhal, K.; Tu, T.; Gottweis, J.; Sayres, R.; Wulczyn, E.; Hou, L.; Clark, K.; Pfohl, S.; Cole-Lewis, H.; Neal, D.; et al. Towards Expert-Level Medical Question Answering with Large Language Models. arXiv 2023, arXiv:2305.09617.

- Knaus, W.A.; Draper, E.A.; Wagner, D.P.; Zimmerman, J.E. APACHE II: A Severity of Disease Classification System. Crit. Care Med. 1985, 13, 818–829.

- Johnson, A.E.W.; Stone, D.J.; Celi, L.A.; Pollard, T.J. The MIMIC Code Repository: Enabling Reproducibility in Critical Care Research. J. Am. Med. Inform. Assoc. 2018, 25, 32–39.

- Pollard, T.J.; Johnson, A.E.W.; Raffa, J.D.; Celi, L.A.; Mark, R.G.; Badawi, O. The eICU Collaborative Research Database, a Freely Available Multi-Center Database for Critical Care Research. Sci. Data 2018, 5, 180178.

- Kang, M.A.; Churpek, M.M.; Zadravecz, F.J.; Adhikari, R.; Twu, N.M.; Edelson, D.P. Real-Time Risk Prediction on the Wards: A Feasibility Study. Crit. Care Med. 2016, 44, 1468–1473.

- Calfee, C.S.; Delucchi, K.; Parsons, P.E.; Thompson, B.T.; Ware, L.B.; Matthay, M.A. Subphenotypes in Acute Respiratory Distress Syndrome: Latent Class Analysis of Data from Two Randomised Controlled Trials. Lancet Respir. Med. 2014, 2, 611–620.

- Peine, A.; Hallawa, A.; Bickenbach, J.; Dartmann, G.; Fazlic, L.B.; Schmeink, A.; Ascheid, G.; Thiemermann, C.; Schuppert, A.; Kindle, R.; et al. Development and Validation of a Reinforcement Learning Algorithm to Dynamically Optimize Mechanical Ventilation in Critical Care. NPJ Digit. Med. 2021, 4, 32.

- Rajpurkar, P.; Irvin, J.; Ball, R.L.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D.; Bagul, A.; Langlotz, C.P.; et al. Deep Learning for Chest Radiograph Diagnosis: A Retrospective Comparison of the CheXNeXt Algorithm to Practicing Radiologists. PLoS Med. 2018, 15, e1002686.

- Singhal, K.; Azizi, S.; Tu, T.; Mahdavi, S.S.; Wei, J.; Chung, H.W.; Scales, N.; Tanwani, A.; Cole-Lewis, H.; Pfohl, S.; et al. Large Language Models Encode Clinical Knowledge. Nature 2023, 620, 172–180.

- Johnson, A.E.W.; Bulgarelli, L.; Shen, L.; Gayles, A.; Shammout, A.; Horng, S.; Pollard, T.J.; Hao, S.; Moody, B.; Gow, B.; et al. MIMIC-IV, a Freely Accessible Electronic Health Record Dataset. Sci. Data 2023, 10, 1.

- Shillan, D.; Sterne, J.A.C.; Champneys, A.; Gibbison, B. Use of Machine Learning to Analyse Routinely Collected Intensive Care Unit Data: A Systematic Review. Crit. Care 2019, 23, 284.

- Delahanty, R.J.; Alvarez, J.; Flynn, L.M.; Sherwin, R.L.; Jones, S.S. Development and Evaluation of a Machine Learning Model for the Early Identification of Patients at Risk for Sepsis. Ann. Emerg. Med. 2019, 73, 334–344.

- Johnson, A.E.W.; Pollard, T.J.; Shen, L.; Lehman, L.H.; Feng, M.; Ghassemi, M.; Moody, B.; Szolovits, P.; Anthony Celi, L.; Mark, R.G. MIMIC-III, a Freely Accessible Critical Care Database. Sci. Data 2016, 3, 160035.

- Chen, I.Y.; Szolovits, P.; Ghassemi, M. Can AI Help Reduce Disparities in General Medical and Mental Health Care? AMA J. Ethics 2019, 21, E167–E179.

- Jayaraman, P.; Desman, J.; Sabounchi, M.; Nadkarni, G.N.; Sakhuja, A. A Primer on Reinforcement Learning in Medicine for Clinicians. NPJ Digit. Med. 2024, 7, 337.

- Liu, S.; See, K.C.; Ngiam, K.Y.; Celi, L.A.; Sun, X.; Feng, M. Reinforcement Learning for Clinical Decision Support in Critical Care: Comprehensive Review. J. Med. Internet Res. 2020, 22, e18477.

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous Control with Deep Reinforcement Learning. arXiv 2015, arXiv:1509.02971.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal Policy Optimization Algorithms. arXiv 2017, arXiv:1707.06347.

- Washington, P. A Perspective on Crowdsourcing and Human-in-the-Loop Workflows in Precision Health. J. Med. Internet Res. 2024, 26, e51138.

- Holzinger, A. Interactive Machine Learning for Health Informatics: When Do We Need the Human-in-the-Loop? Brain Inf. 2016, 3, 119–131.

- Tonekaboni, S.; Joshi, S.; McCradden, M.D.; Goldenberg, A. What Clinicians Want: Contextualizing Explainable Machine Learning for Clinical End Use. arXiv 2019, arXiv:1905.05134.

- Sadeghi, S.; Hempel, L.; Rodemund, N.; Kirsten, T. Salzburg Intensive Care Database (SICdb): A Detailed Exploration and Comparative Analysis with MIMIC-IV. Sci. Rep. 2024, 14, 11438.

- Akhtar, U.; Khattak, A.M.; Lee, S. Challenges in Managing Real-Time Data in Health Information System (HIS). In Inclusive Smart Cities and Digital Health; Chang, C.K., Chiari, L., Cao, Y., Jin, H., Mokhtari, M., Aloulou, H., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2016; Volume 9677, pp. 305–313. ISBN 978-3-319-39600-2.

- Cho, K.-J.; Kwon, O.; Kwon, J.; Lee, Y.; Park, H.; Jeon, K.-H.; Kim, K.-H.; Park, J.; Oh, B.-H. Detecting Patient Deterioration Using Artificial Intelligence in a Rapid Response System. Crit. Care Med. 2020, 48, e285–e289.

- Churpek, M.M.; Yuen, T.C.; Winslow, C.; Meltzer, D.O.; Kattan, M.W.; Edelson, D.P. Multicenter Comparison of Machine Learning Methods and Conventional Regression for Predicting Clinical Deterioration on the Wards. Crit. Care Med. 2016, 44, 368–374.

- Churpek, M.M.; Yuen, T.C.; Winslow, C.; Robicsek, A.A.; Meltzer, D.O.; Gibbons, R.D.; Edelson, D.P. Multicenter Development and Validation of a Risk Stratification Tool for Ward Patients. Am. J. Respir. Crit. Care Med. 2014, 190, 649–655.

- Wong, A.; Otles, E.; Donnelly, J.P.; Krumm, A.; McCullough, J.; DeTroyer-Cooley, O.; Pestrue, J.; Phillips, M.; Konye, J.; Penoza, C.; et al. External Validation of a Widely Implemented Proprietary Sepsis Prediction Model in Hospitalized Patients. JAMA Intern. Med. 2021, 181, 1065.

- Gholami, B.; Phan, T.S.; Haddad, W.M.; Cason, A.; Mullis, J.; Price, L.; Bailey, J.M. Replicating Human Expertise of Mechanical Ventilation Waveform Analysis in Detecting Patient-Ventilator Cycling Asynchrony Using Machine Learning. Comput. Biol. Med. 2018, 97, 137–144.

- Sottile, P.D.; Albers, D.; Higgins, C.; Mckeehan, J.; Moss, M.M. The Association Between Ventilator Dyssynchrony, Delivered Tidal Volume, and Sedation Using a Novel Automated Ventilator Dyssynchrony Detection Algorithm. Crit. Care Med. 2018, 46, e151–e157.

- McDuff, D.; Schaekermann, M.; Tu, T.; Palepu, A.; Wang, A.; Garrison, J.; Singhal, K.; Sharma, Y.; Azizi, S.; Kulkarni, K.; et al. Towards Accurate Differential Diagnosis with Large Language Models. Nature 2025, 254.

- Alsentzer, E.; Murphy, J.; Boag, W.; Weng, W.-H.; Jindi, D.; Naumann, T.; McDermott, M. Publicly Available Clinical BERT Embeddings. In Proceedings of the 2nd Clinical Natural Language Processing Workshop, Minneapolis, MN, USA, 7 June 2019; Association for Computational Linguistics: New York, NY, USA, 2019; pp. 72–78.

- Huang, Z.; Sun, A.; Bai, H. Automatic Speech Recognition Error Correction on ICU Clinical Narration Dataset; Stanford University: Stanford, CA, USA, 2023.

- Archana, D.; Singh, S.K. Artificial Intelligence in Critical Care: Enhancing Decision-Making and Patient Outcomes. Healthc. Bull. 2023, 5, 128–134.

- Bertsimas, D.; Pauphilet, J.; Stevens, J.; Tandon, M. Predicting Inpatient Flow at a Major Hospital Using Interpretable Analytics. Manuf. Serv. Oper. Manag. 2022, 24, 2809–2824.

- Siegel, S.; Zhang, J.; Bandyopadhyay, S.; Nerella, S.; Silva, B.; Baslanti, T.; Bihorac, A.; Rashidi, P. Leveraging Computer Vision in the Intensive Care Unit (ICU) for Examining Visitation and Mobility. arXiv 2024, arXiv:2403.06322.

- Muralitharan, S.; Nelson, W.; Di, S.; McGillion, M.; Devereaux, P.J.; Barr, N.G.; Petch, J. Machine Learning-Based Early Warning Systems for Clinical Deterioration: Systematic Scoping Review. J. Med. Internet Res. 2021, 23, e25187.

- Lundberg, S.M.; Nair, B.; Vavilala, M.S.; Horibe, M.; Eisses, M.J.; Adams, T.; Liston, D.E.; Low, D.K.-W.; Newman, S.-F.; Kim, J.; et al. Explainable Machine-Learning Predictions for the Prevention of Hypoxaemia during Surgery. Nat. Biomed. Eng. 2018, 2, 749–760.

- Zaidi, S.A.J.; Ghafoor, A.; Kim, J.; Abbas, Z.; Lee, S.W. HeartEnsembleNet: An Innovative Hybrid Ensemble Learning Approach for Cardiovascular Risk Prediction. Healthcare 2025, 13, 507.

- Uppal, M.; Gupta, D.; Juneja, S.; Gadekallu, T.R.; El Bayoumy, I.; Hussain, J.; Lee, S.W. Enhancing Accuracy in Brain Stroke Detection: Multi-Layer Perceptron with Adadelta, RMSProp and AdaMax Optimizers. Front. Bioeng. Biotechnol. 2023, 11, 1257591.

- Fleuren, L.M.; Klausch, T.L.T.; Zwager, C.L.; Schoonmade, L.J.; Guo, T.; Roggeveen, L.F.; Swart, E.L.; Girbes, A.R.J.; Thoral, P.; Ercole, A.; et al. Machine Learning for the Prediction of Sepsis: A Systematic Review and Meta-Analysis of Diagnostic Test Accuracy. Intensive Care Med. 2020, 46, 383–400.

- Boussina, A.; Shashikumar, S.P.; Malhotra, A.; Owens, R.L.; El-Kareh, R.; Longhurst, C.A.; Quintero, K.; Donahue, A.; Chan, T.C.; Nemati, S.; et al. Impact of a Deep Learning Sepsis Prediction Model on Quality of Care and Survival. NPJ Digit. Med. 2024, 7, 14.

- Henry, K.E.; Kornfield, R.; Sridharan, A.; Linton, R.C.; Groh, C.; Wang, T.; Wu, A.; Mutlu, B.; Saria, S. Human–Machine Teaming Is Key to AI Adoption: Clinicians’ Experiences with a Deployed Machine Learning System. NPJ Digit. Med. 2022, 5, 97.

- Henry, K.E.; Adams, R.; Parent, C.; Soleimani, H.; Sridharan, A.; Johnson, L.; Hager, D.N.; Cosgrove, S.E.; Markowski, A.; Klein, E.Y.; et al. Factors Driving Provider Adoption of the TREWS Machine Learning-Based Early Warning System and Its Effects on Sepsis Treatment Timing. Nat. Med. 2022, 28, 1447–1454.

- Parreco, J.; Hidalgo, A.; Parks, J.J.; Kozol, R.; Rattan, R. Using Artificial Intelligence to Predict Prolonged Mechanical Ventilation and Tracheostomy Placement. J. Surg. Res. 2018, 228, 179–187.

- Buiteman-Kruizinga, L.A.; Mkadmi, H.E.; Serpa Neto, A.; Kruizinga, M.D.; Botta, M.; Schultz, M.J.; Paulus, F.; Van Der Heiden, P.L.J. Effect of INTELLiVENT-ASV versus Conventional Ventilation on Ventilation Intensity in Patients with COVID-19 ARDS—An Observational Study. J. Clin. Med. 2021, 10, 5409.

- Ghassemi, M.; Naumann, T.; Schulam, P.; Beam, A.L.; Chen, I.Y.; Ranganath, R. A Review of Challenges and Opportunities in Machine Learning for Health. AMIA Jt. Summits Transl. Sci. Proc. 2020, 2020, 191–200.

- Pinsky, M.R.; Bedoya, A.; Bihorac, A.; Celi, L.; Churpek, M.; Economou-Zavlanos, N.J.; Elbers, P.; Saria, S.; Liu, V.; Lyons, P.G.; et al. Use of Artificial Intelligence in Critical Care: Opportunities and Obstacles. Crit. Care 2024, 28, 113.

- Liu, C.-F.; Hung, C.-M.; Ko, S.-C.; Cheng, K.-C.; Chao, C.-M.; Sung, M.-I.; Hsing, S.-C.; Wang, J.-J.; Chen, C.-J.; Lai, C.-C.; et al. An Artificial Intelligence System to Predict the Optimal Timing for Mechanical Ventilation Weaning for Intensive Care Unit Patients: A Two-Stage Prediction Approach. Front. Med. 2022, 9, 935366.

- Fan, E.; Del Sorbo, L.; Goligher, E.C.; Hodgson, C.L.; Munshi, L.; Walkey, A.J.; Adhikari, N.K.J.; Amato, M.B.P.; Branson, R.; Brower, R.G.; et al. An Official American Thoracic Society/European Society of Intensive Care Medicine/Society of Critical Care Medicine Clinical Practice Guideline: Mechanical Ventilation in Adult Patients with Acute Respiratory Distress Syndrome. Am. J. Respir. Crit. Care Med. 2017, 195, 1253–1263.

- Chen, L.; Teotia, R.; Verdone, A.; Cardall, A.; Tyagi, L.; Shen, Y.; Chopra, S. Fine-Tuning In-House Large Language Models to Infer Differential Diagnosis from Radiology Reports. arXiv 2024, arXiv:2410.09234.

- Nilius, H.; Tsouka, S.; Nagler, M.; Masoodi, M. Machine Learning Applications in Precision Medicine: Overcoming Challenges and Unlocking Potential. TrAC Trends Anal. Chem. 2024, 179, 117872.

- Pyne, Y.; Wong, Y.M.; Fang, H.; Simpson, E. Analysis of ‘One in a Million’ Primary Care Consultation Conversations Using Natural Language Processing. BMJ Health Care Inform. 2023, 30, e100659.

- Namli, T.; Anıl Sınacı, A.; Gönül, S.; Herguido, C.R.; Garcia-Canadilla, P.; Muñoz, A.M.; Esteve, A.V.; Ertürkmen, G.B.L. A Scalable and Transparent Data Pipeline for AI-Enabled Health Data Ecosystems. Front. Med. 2024, 11, 1393123.

- Hollenbeak, C.S.; Henning, D.J.; Geeting, G.K.; Ledeboer, N.A.; Faruqi, I.A.; Pierce, C.G.; Thomas, C.B.; O’Neal, H.R. Costs and Consequences of a Novel Emergency Department Sepsis Diagnostic Test: The IntelliSep Index. Crit. Care Explor. 2023, 5, e0942.

- Lindroth, H.; Nalaie, K.; Raghu, R.; Ayala, I.N.; Busch, C.; Bhattacharyya, A.; Moreno Franco, P.; Diedrich, D.A.; Pickering, B.W.; Herasevich, V. Applied Artificial Intelligence in Healthcare: A Review of Computer Vision Technology Application in Hospital Settings. J. Imaging 2024, 10, 81.

- Prince, S.J.D. Computer Vision: Models, Learning, and Inference; Cambridge University Press: Cambridge, UK, 2012.

- Casey, A.; Davidson, E.; Poon, M.; Dong, H.; Duma, D.; Grivas, A.; Grover, C.; Suárez-Paniagua, V.; Tobin, R.; Whiteley, W.; et al. A Systematic Review of Natural Language Processing Applied to Radiology Reports. BMC Med. Inform. Decis. Mak. 2021, 21, 179.

- Soenksen, L.R.; Ma, Y.; Zeng, C.; Boussioux, L.; Villalobos Carballo, K.; Na, L.; Wiberg, H.M.; Li, M.L.; Fuentes, I.; Bertsimas, D. Integrated Multimodal Artificial Intelligence Framework for Healthcare Applications. NPJ Digit. Med. 2022, 5, 149.

- Brundage, M.; Avin, S.; Wang, J.; Belfield, H.; Krueger, G.; Hadfield, G.; Khlaaf, H.; Yang, J.; Toner, H.; Fong, R.; et al. Toward Trustworthy AI Development: Mechanisms for Supporting Verifiable Claims. arXiv 2020, arXiv:2004.07213.

- Michard, F.; Teboul, J.L. Predictive Analytics: Beyond the Buzz. Ann. Intensive Care 2019, 9, 46.

- Alwi, R.; Prowse, P.; Gaamangwe, T. Proactive Role of Clinical Engineering in the Adoption of ISO/IEC 80001-1 within Healthcare Delivery Organization. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 5623–5626.

- Duda, S.N.; Kennedy, N.; Conway, D.; Cheng, A.C.; Nguyen, V.; Zayas-Cabán, T.; Harris, P.A. HL7 FHIR-Based Tools and Initiatives to Support Clinical Research: A Scoping Review. J. Am. Med. Inform. Assoc. 2022, 29, 1642–1653.

- Wilkinson, M.D.; Dumontier, M.; Aalbersberg, I.J.; Appleton, G.; Axton, M.; Baak, A.; Blomberg, N.; Boiten, J.-W.; Da Silva Santos, L.B.; Bourne, P.E.; et al. The FAIR Guiding Principles for Scientific Data Management and Stewardship. Sci. Data 2016, 3, 160018.

- Van Der Sijs, H.; Aarts, J.; Vulto, A.; Berg, M. Overriding of Drug Safety Alerts in Computerized Physician Order Entry. J. Am. Med. Inform. Assoc. 2006, 13, 138–147.

- Sutton, R.T.; Pincock, D.; Baumgart, D.C.; Sadowski, D.C.; Fedorak, R.N.; Kroeker, K.I. An Overview of Clinical Decision Support Systems: Benefits, Risks, and Strategies for Success. NPJ Digit. Med. 2020, 3, 17.

- Sittig, D.F.; Wright, A.; Osheroff, J.A.; Middleton, B.; Teich, J.M.; Ash, J.S.; Campbell, E.; Bates, D.W. Grand Challenges in Clinical Decision Support. J. Biomed. Inform. 2008, 41, 387–392.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why Should I Trust You?”: Explaining the Predictions of Any Classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; ACM: New York, NY, USA, 2016; pp. 1135–1144.

- Lundberg, S.; Lee, S.-I. A Unified Approach to Interpreting Model Predictions. arXiv 2017, arXiv:1705.07874.

- Rudin, C. Stop Explaining Black Box Machine Learning Models for High Stakes Decisions and Use Interpretable Models Instead. Nat. Mach. Intell. 2019, 1, 206–215.

- Miller, T. Explanation in Artificial Intelligence: Insights from the Social Sciences. Artif. Intell. 2019, 267, 1–38.

- Gao, Y.; Li, R.; Croxford, E.; Caskey, J.; Patterson, B.W.; Churpek, M.; Miller, T.; Dligach, D.; Afshar, M. Leveraging Medical Knowledge Graphs Into Large Language Models for Diagnosis Prediction: Design and Application Study. JMIR AI 2025, 4, e58670.

- Topol, E.J. High-Performance Medicine: The Convergence of Human and Artificial Intelligence. Nat. Med. 2019, 25, 44–56.

- Ahmed, M.I.; Spooner, B.; Isherwood, J.; Lane, M.; Orrock, E.; Dennison, A. A Systematic Review of the Barriers to the Implementation of Artificial Intelligence in Healthcare. Cureus 2023, 15, e46454.

- Windecker, D.; Baj, G.; Shiri, I.; Kazaj, P.M.; Kaesmacher, J.; Gräni, C.; Siontis, G.C.M. Generalizability of FDA-Approved AI-Enabled Medical Devices for Clinical Use. JAMA Netw. Open 2025, 8, e258052.

- Center for Devices and Radiological Health. Artificial Intelligence and Machine Learning in Software as a Medical Device. FDA 2025.

- Price, W.N., II. Medical AI and Contextual Bias. Harv. J. Law Technol. 2019, 33, 65–116.

- Kaissis, G.A.; Makowski, M.R.; Rückert, D.; Braren, R.F. Secure, Privacy-Preserving and Federated Machine Learning in Medical Imaging. Nat. Mach. Intell. 2020, 2, 305–311.

- Riaz, H.; Naqvi, R.A.; Ellahi, M.; Usman, M.A.; Usman, M.R.; Jeong, D.; Lee, S.W. Robust Steganography Technique for Enhancing the Protection of Medical Records in Healthcare Informatics. IEEE J. Biomed. Health Inform. 2025, 1–13.

- Lu, M.Y.; Williamson, D.F.K.; Chen, T.Y.; Chen, R.J.; Barbieri, M.; Mahmood, F. Data-Efficient and Weakly Supervised Computational Pathology on Whole-Slide Images. Nat. Biomed. Eng. 2021, 5, 555–570.

- Paranjape, K.; Schinkel, M.; Nannan Panday, R.; Car, J.; Nanayakkara, P. Introducing Artificial Intelligence Training in Medical Education. JMIR Med. Educ. 2019, 5, e16048.

- Turing, A.M. I.—Computing machinery and intelligence. Mind 1950, LIX, 433–460.

- McCarthy, J.; Minsky, M.L.; Rochester, N.; Shannon, C.E. A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence: 31 August 1955. AI Mag. 2006, 27, 12–14.

- Weizenbaum, J. ELIZA—A Computer Program for the Study of Natural Language Communication between Man and Machine. Commun. ACM 1966, 9, 36–45.

- Lindsay, R.K.; Buchanan, B.G.; Feigenbaum, E.A.; Lederberg, J. DENDRAL: A Case Study of the First Expert System for Scientific Hypothesis Formation. Artif. Intell. 1993, 61, 209–261.

- Computer-Based Medical Consultations: Mycin; Shortliffe, E.H., Ed.; Elsevier: Amsterdam, The Netherlands, 1976; ISBN 978-0-444-00179-5.

- Fukushima, K. Neocognitron: A Self-Organizing Neural Network Model for a Mechanism of Pattern Recognition Unaffected by Shift in Position. Biol. Cybern. 1980, 36, 193–202.

- Freer, T.W.; Ulissey, M.J. Screening Mammography with Computer-Aided Detection: Prospective Study of 12,860 Patients in a Community Breast Center. Radiology 2001, 220, 781–786.

- Brown, M.P.S.; Grundy, W.N.; Lin, D.; Cristianini, N.; Sugnet, C.W.; Furey, T.S.; Ares, M.; Haussler, D. Knowledge-Based Analysis of Microarray Gene Expression Data by Using Support Vector Machines. Proc. Natl. Acad. Sci. USA 2000, 97, 262–267.

- Ferrucci, D.; Brown, E.; Chu-Carroll, J.; Fan, J.; Gondek, D.; Kalyanpur, A.A.; Lally, A.; Murdock, J.W.; Nyberg, E.; Prager, J.; et al. Building Watson: An Overview of the DeepQA Project. AI Mag. 2010, 31, 59–79.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- Abràmoff, M.D.; Lavin, P.T.; Birch, M.; Shah, N.; Folk, J.C. Pivotal Trial of an Autonomous AI-Based Diagnostic System for Detection of Diabetic Retinopathy in Primary Care Offices. NPJ Digit. Med. 2018, 1, 39.

- Jumper, J.; Evans, R.; Pritzel, A.; Green, T.; Figurnov, M.; Ronneberger, O.; Tunyasuvunakool, K.; Bates, R.; Žídek, A.; Potapenko, A.; et al. Highly Accurate Protein Structure Prediction with AlphaFold. Nature 2021, 596, 583–589.

- Tu, T.; Schaekermann, M.; Palepu, A.; Saab, K.; Freyberg, J.; Tanno, R.; Wang, A.; Li, B.; Amin, M.; Cheng, Y.; et al. Towards Conversational Diagnostic Artificial Intelligence. Nature 2025, 1–9.

- Warner, H.R. Computer-Assisted Medical Decision-Making; Academic Press: Cambridge, MA, USA, 1979.

- Knaus, W.A.; Zimmerman, J.E.; Wagner, D.P.; Draper, E.A.; Lawrence, D.E. APACHE—Acute Physiology and Chronic Health Evaluation: A Physiologically Based Classification System. Crit. Care Med. 1981, 9, 591–597.

- Rutledge, G.W.; Thomsen, G.E.; Farr, B.R.; Tovar, M.A.; Polaschek, J.X.; Beinlich, I.A.; Sheiner, L.B.; Fagan, L.M. The Design and Implementation of a Ventilator-Management Advisor. Artif. Intell. Med. 1993, 5, 67–82.

- Moody, G.B.; Mark, R.G. A Database to Support Development and Evaluation of Intelligent Intensive Care Monitoring. In Proceedings of the Computers in Cardiology, Indianapolis, IN, USA, 8–11 September 1996; IEEE: New York, NY, USA, 1996; pp. 657–660.

- Henry, K.E.; Hager, D.N.; Pronovost, P.J.; Saria, S. A Targeted Real-Time Early Warning Score (TREWScore) for Septic Shock. Sci. Transl. Med. 2015, 7, 299ra122.

- Johnson, A.; Bulgarelli, L.; Pollard, T.; Horng, S.; Celi, L.A.; Mark, R. MIMIC-IV, version 3.1; MIT Laboratory for Computational Physiology: Cambridge, MA, USA, 2025.

| Year | Technology | Example | Key Innovation |

|---|---|---|---|

| 1959 | Logical Framework | Ledley and Lusted’s “Reasoning Foundations of Medical Diagnosis” | First formal approach to medical decision-making using symbolic logic and probability [12] |

| 1972 | Rule-based Expert System | MYCIN | First major clinical decision support system for infectious disease diagnosis and antibiotic selection [13] |

| 1976 | Bayesian Network | de Dombal’s Acute Abdominal Pain Diagnosis System | Applied Bayesian probability for differential diagnosis of abdominal pain [14,15] |

| 1981 | Causal-Associational Network | INTERNIST-I/QMR | Comprehensive internal medicine diagnostic system with disease-finding relationships [16,17] |

| 1985 | Severity Scoring System | APACHE II | First widely adopted ICU mortality prediction model using physiologic variables [18] |

| 1993 | Decision Support Framework | Arden Syntax | Standardized representation for sharing medical knowledge and decision rules [19] |

| 1994 | Integrated Clinical System | HELP System | Hospital-wide clinical decision support integrated with electronic records [20] |

| 1997 | Probabilistic Expert System | DXplain | Diagnostic decision support system using probabilistic reasoning [21] |

| 2001 | Real-time Alerting System | Medical Emergency Team Triggers | Early implementation of rule-based deterioration detection [22] |

| 2006 | Clinical Data Repository | MIMIC-II Database | First major open-access critical care database enabling ML research [23] |

| 2010 | Supervised Machine Learning | SuperLearner ICU Mortality Prediction | Ensemble ML methods outperform traditional scoring systems [24] |

| 2014 | Early Warning System | eCART | ML-based deterioration prediction with demonstrated clinical impact [25] |

| 2016 | Deep Learning | DeepPatient | Deep learning for disease prediction using EHR data [26] |

| 2018 | Reinforcement Learning | AI Clinician | RL for sepsis treatment optimization using retrospective ICU data [27,28] |

| 2019 | Unsupervised Learning | Sepsis Phenotyping | ML-identified sepsis subtypes with differential treatment responses [29,30] |

| 2020 | Temporal Deep Learning | Circulatory Failure Early Warning | Long Short-Term Memory networks for predicting hemodynamic instability from continuous data [31] |

| 2022 | Validated AI Implementation | TREWS Sepsis System | First prospectively validated AI system showing mortality reduction [32] |

| 2023 | Foundation Models | Med-PaLM/Clinical LLMs | Large language models demonstrating medical reasoning and knowledge [33] |

| 2024 | Multimodal AI Systems | Integrated Clinical AI Platforms | Systems combining multiple AI modalities for comprehensive decision support [33] |

| ICU Applications | Data Modalities | AI/ML Paradigms | Methods/Architectures | Example Tools/Studies |

|---|---|---|---|---|

| Early warning and deterioration prediction | Time-series, tabular | Supervised learning | Random Forests, RNNs, Transformers | eCART [37], Hyland et al. [31], SICULA [24] |

| Sepsis prediction and triage | Tabular, Text | Supervised learning | XGBoost, ClinicalBERT | TREWS [32], Sepsis NLP Alerts [33] |

| ICU discharge, length of stay (LOS) prediction | Tabular, time-series | Supervised learning | Gradient Boosting, Deep MLPs | Readmission models, ICU LOS tools |

| Phenotyping (e.g., ARDS, sepsis) | Tabular, time-series | Unsupervised learning | K-means, LCA, Autoencoders | Seymour et al. [29], Calfee et al. [38] |

| Mechanical ventilation management | Time-series | Reinforcement learning | Deep Q-Networks, Policy Gradient | AI Clinician, Peine et al. [39] |

| Diagnostic support (imaging) | Imaging | Supervised learning (Deep Learning) | CNNs, Vision Transformers | CheXNeXt, MIMIC-CXR [40] |

| Clinical documentation/NLP | Text | Supervised learning, pretraining | Transformers, BERT, GPT | AutoNote, Med-PaLM |

| Multimodal clinical reasoning | Text + tabular + imaging | Transfer learning/fine-tuning | Foundation Models (GPT-4, Med-PaLM) | Med-PaLM, LLM-driven ICU copilots [41] |
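
The phenotyping row above lists K-means among the unsupervised methods. As a rough illustration of what that algorithm does, the following is a minimal sketch in plain Python. The two-feature data points, the starting centroids, and the function names (`kmeans`, `squared_distance`) are hypothetical and chosen only for illustration; they are not drawn from any of the cited studies, which used far richer clinical variables and more careful model selection.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def squared_distance(a: Point, b: Point) -> float:
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(
    points: Sequence[Point],
    initial_centroids: Sequence[Point],
    max_iter: int = 100,
) -> Tuple[List[int], List[Point]]:
    """Plain K-means: alternate between assigning each point to its nearest
    centroid and moving each centroid to the mean of its assigned points."""
    centroids = list(initial_centroids)
    assignments: List[int] = []
    for _ in range(max_iter):
        new_assignments = [
            min(range(len(centroids)), key=lambda k: squared_distance(p, centroids[k]))
            for p in points
        ]
        if new_assignments == assignments:
            break  # assignments stopped changing, so the algorithm has converged
        assignments = new_assignments
        for k in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == k]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[k] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return assignments, centroids


# Hypothetical standardised values for two clinical features per patient.
patients = [
    (-1.0, -0.8), (-0.9, -1.1), (-1.2, -1.0),
    (1.0, 0.9), (1.1, 1.2), (0.8, 1.0),
]
# Assumption: starting centroids are picked by hand to keep the run deterministic.
cluster_ids, centroids = kmeans(patients, [patients[0], patients[3]])
```

In practice the number of clusters and the initialisation would need to be justified (for example, by stability analyses), and clusters found this way are candidate phenotypes that still require clinical validation.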

| ICU Domain | Human Benchmark ‡ | AI Benchmark (Best Published) | Documented Benefit (Effect Size) | Running Cost † | Key AI Advantages | Limitations and Interaction Challenges | References |

|---|---|---|---|---|---|---|---|

| Early-warning/deterioration | MEWS AUROC ≈ 0.70 (ward vital checks every 4 h) | eCART GBM AUROC ≈ 0.85; RNN model AUROC 0.90 at 6 h horizon | Δ + 0.15–0.20 AUROC; sensitivity ↑ ≈ 250% at matched specificity; median alert 2–6 h earlier | Low → Medium | Continuous streaming; multivariate pattern detection; earlier rescue team activation | High-quality signal feed; alert fatigue; model drift; opacity | Cho et al. [56]; Churpek et al. [57,58]; Hyland et al. [31] |

| Sepsis detection and management | Clinical recognition delay 2–6 h; guideline adherence variable | TREWS RF AUROC 0.87; alerts acted on within 3 h associated with 3.3 pp lower mortality | 4–12 h earlier detection; 18.7% relative mortality ↓ | Medium → High | Standardised, 24/7 screening; complex pattern handling; RL prototypes personalise fluids/pressors | Inter-site performance spread (Epic model: Se 33%, PPV 12%); data bias; causal opacity | Adams et al. [32]; Wong et al. [59]; Komorowski et al. [27] |

| Mechanical ventilation | Manual titration; >20% of ARDS cases have plateau pressure > 30 cmH₂O; dyssynchrony often missed | VentAI RL policy increased protective-vent use by 203%; waveform AI detects cycling asynchrony with > 90% accuracy | Model-guided settings associated with 2–3 pp survival gain (retrospective); ↓ driving pressure | Medium → High | Patient-specific closed-loop optimisation; real-time waveform analytics; workload relief | Limited prospective safety trials; autonomy liability; cross-device generalisability | Peine et al. [39]; Gholami et al. [60]; Sottile et al. [61] |

| Diagnostic support (CXR ± LLM) | 9 radiologists: AUROC 0.832 across 14 findings; variable fatigue | CheXNeXt * AUROC 0.846; read 420 images in 1.5 min; LLM differential top 10 accuracy of 59.1% vs. 33.6% (juniors) | Similar or better accuracy in <1% of the time; broader differential lists | High → Medium | Speed; consistency; 24/7 availability; heat-map explanations | Limited clinical context; rare/atypical cases; automation bias | Rajpurkar et al. [40]; McDuff et al. [62] |

| Documentation and workflow | 30–50% of shift on notes; 50 min after-hours EHR time | NLP/ASR tools cut note time by 15–20%; after-hours EHR ↓ 30%; alert generation ≈ 10% faster | 8% of shifts returned to bedside care; error rate ↓ | Low → Medium | Administrative burden relief; real-time structured data; searchable notes | Privacy; nuance capture; language diversity; dependency on templates | Alsentzer et al. [63]; Huang et al. [64]; Archana et al. [65] |

| Resource optimisation | Experience-based staffing, reactive bed assignment | LOS/discharge ML models improve census forecasting; computer-vision (CV) room-activity analytics predict delirium | ↑ forecast accuracy → better bed and staff utilisation (exact % context-specific) | Medium | Proactive, data-driven planning; multi-variable optimisation | Sudden exogenous shocks; fairness in allocation; staff acceptance | Bertsimas et al. [66]; Siegel et al. [67] |
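
Several rows above compare tools by AUROC and report the difference as a Δ AUROC. As a reminder of what that number measures, the sketch below computes AUROC directly from its rank-based definition: the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as half. The labels and scores are hypothetical toy values, not data from any cited study, and the function name `auroc` is an illustrative choice.

```python
from typing import Sequence


def auroc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Rank-based AUROC: the fraction of (positive, negative) pairs in which
    the positive case has the higher score; tied pairs count as half."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical toy data: 1 = patient deteriorated, 0 = patient did not.
labels = [0, 0, 1, 1]
baseline_scores = [2, 3, 2, 4]               # e.g., a coarse integer warning score
model_scores = [0.10, 0.40, 0.35, 0.80]      # e.g., a model's predicted risk

baseline_auc = auroc(baseline_scores, labels)
model_auc = auroc(model_scores, labels)
delta_auc = model_auc - baseline_auc         # the "Δ AUROC" style of comparison
```

Because AUROC depends only on the ordering of scores, it says nothing about calibration or about the alert burden at a given threshold, which is one reason the table also lists sensitivity, PPV, and timing.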

| Domain | Challenge | Critical Care Relevance | Mitigation Strategies | Relevant Frameworks/Principles |

|---|---|---|---|---|

| Data and Infrastructure | Data fragmentation and heterogeneity | ICU systems (monitors, EHRs, devices) are poorly integrated, leading to incomplete or unsynchronized inputs | Interoperability layers; data harmonization; standard ontologies (e.g., FHIR) | ISO 80001, HL7, FAIR principles [93,94,95] |

| Data and Infrastructure | Data quality and artifact noise | ICU data (e.g., vitals, labs) are prone to gaps, outliers, and artifacts that affect model performance | Robust preprocessing; outlier detection; real-time signal cleaning | Good Clinical Data Management Practice (GCDMP) |

| Model Design | Generalizability across ICUs | Models trained in one setting (e.g., tertiary ICU) often perform poorly in others due to population differences | External validation; domain adaptation; multicenter training datasets | TRIPOD-AI, MINIMAR |

| Model Design | Updating and model drift | Static models degrade over time; adaptive models pose safety and validation risks | Version control; locked models with scheduled retraining; monitoring performance | FDA Predetermined Change Control Plan (PCCP) |

| Clinical Workflow Integration | Poor timing or placement of AI support | Alerts or predictions may come too late or disrupt natural decision points | Workflow-mapped deployment; UI design aligned with ICU care pathways | Human-centered design principles |

| Clinical Workflow Integration | Alert fatigue | ICU teams already face alarm overload; AI tools may add to the burden without benefit | Alert prioritization; threshold tuning; suppressive logic during emergencies | AAMI standards; ISO/IEC usability standards |

| Clinical Workflow Integration | Low clinical adoption | AI-CDSS often bypassed if they are misaligned with team roles or too opaque | Co-design with ICU teams; agile iterations; training and onboarding | Implementation Science best practices |

| Trust and Transparency | Black box models and explainability | Clinicians must understand the rationale to use or defend AI-assisted decisions | SHAP/LIME; counterfactuals; interpretable surrogate models | EU AI Act (Transparency and Explainability clauses) |

| Trust and Transparency | Misalignment with clinical reasoning | AI outputs may not fit into clinicians’ diagnostic frameworks | Knowledge-augmented models; explanation interfaces using clinical logic | ACM Code of Ethics, Human–AI Teaming Guidelines |

| Governance and Regulation | Bias and inequity | AI may reinforce disparities based on race, sex, and comorbidities | Subgroup analysis; fairness audits; demographic-aware training | FDA GMLP; GDPR Art. 22; IEEE P7003 |

| Governance and Regulation | Accountability and liability | Unclear who is responsible when AI recommendations contribute to harm | Maintain clinician-in-the-loop control; document AI influence in decision logs | Malpractice law (jurisdiction-specific); legal gray zones |

| Governance and Regulation | Privacy and data use | ICU data are highly sensitive; secondary use for training raises legal/ethical concerns | De-identification; federated learning; institutional review board (IRB) approval | HIPAA, GDPR, Institutional Ethics Committees |
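
The table above lists subgroup analysis and fairness audits as mitigations for bias. One simple form of such an audit is to compute the same performance metric separately for each subgroup and inspect the gap. The sketch below does this for sensitivity; the record layout, the subgroup labels "A" and "B", and the function name `subgroup_sensitivity` are hypothetical, and a real audit would also examine other metrics (for example, PPV and calibration) with confidence intervals.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record is (subgroup, true_label, predicted_label), with labels 0 or 1.
Record = Tuple[str, int, int]


def subgroup_sensitivity(records: Iterable[Record]) -> Dict[str, float]:
    """Sensitivity (true positives / all actual positives) per subgroup.
    Subgroups with no actual positive cases are omitted from the result."""
    true_pos: Dict[str, int] = defaultdict(int)
    false_neg: Dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    groups = sorted(set(true_pos) | set(false_neg))
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


# Hypothetical toy predictions for two subgroups.
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 1),
]
per_group = subgroup_sensitivity(records)
sensitivity_gap = max(per_group.values()) - min(per_group.values())
```

A large gap between subgroups would not by itself establish unfairness, since it can also reflect small sample sizes or case-mix differences, but it flags where closer review is needed.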

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hadweh, P.; Niset, A.; Salvagno, M.; Al Barajraji, M.; El Hadwe, S.; Taccone, F.S.; Barrit, S. Machine Learning and Artificial Intelligence in Intensive Care Medicine: Critical Recalibrations from Rule-Based Systems to Frontier Models. J. Clin. Med. 2025, 14, 4026. https://doi.org/10.3390/jcm14124026
