An Innovative Deep Learning Approach for Ventilator-Associated Pneumonia (VAP) Prediction in Intensive Care Units—Pneumonia Risk Evaluation and Diagnostic Intelligence via Computational Technology (PREDICT)

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design

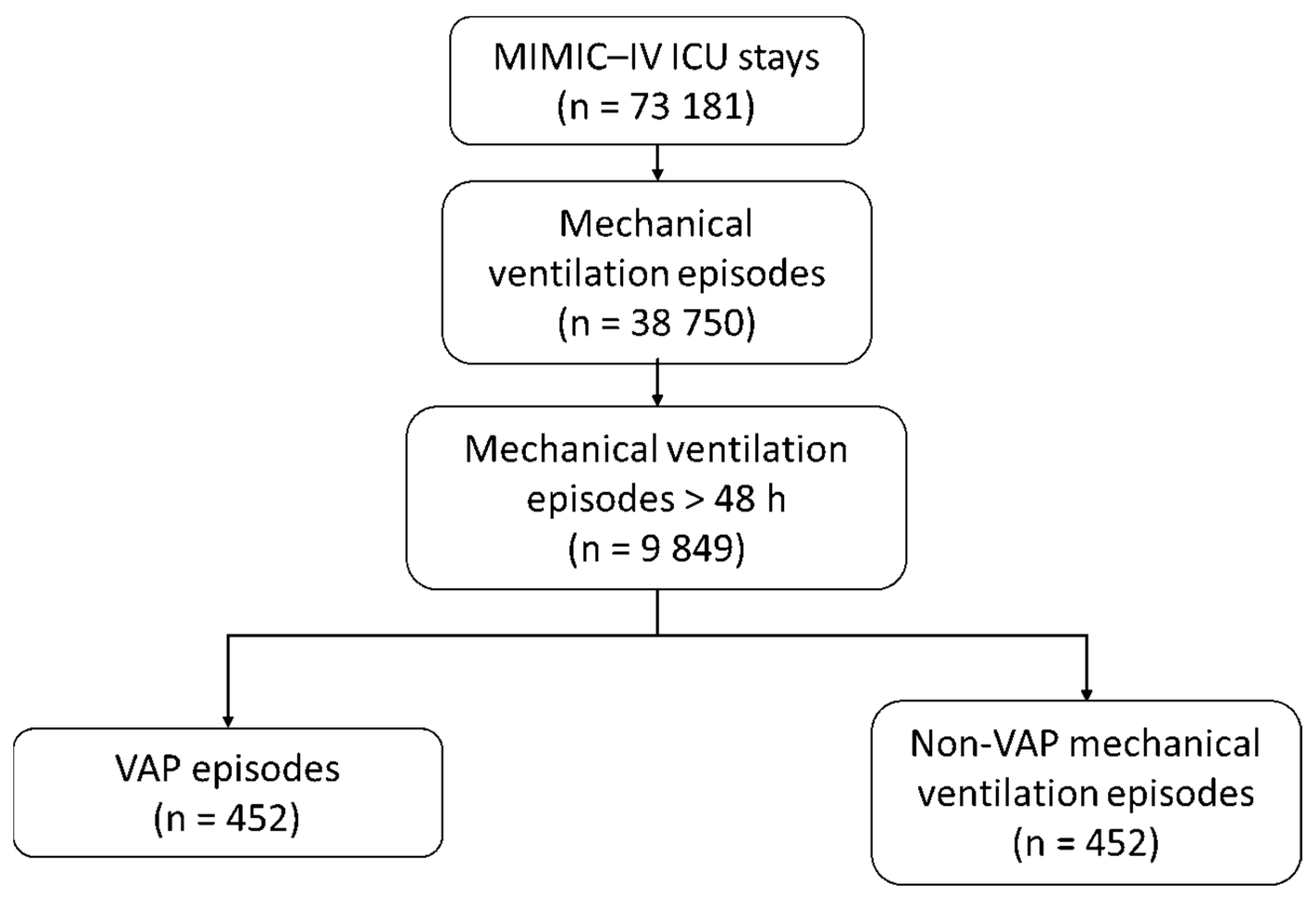

2.2. Patient Population

2.3. Data Collection

2.4. Outcomes

2.5. Annotation of VAP Events

2.6. Selection of Comparator Patients Without VAP

2.7. Data Preprocessing

- (1) Data resampling and cleaning: Vital signs were resampled at an hourly resolution to standardize time intervals and reduce the impact of measurement errors. Missing values were filled by linear interpolation to preserve the continuity and integrity of the time series;

- (2) Normalization: Each vital sign was standardized (z-score) by subtracting its mean and dividing by its standard deviation. This placed all variables on a comparable scale and prevented a variable with a larger numerical range (e.g., mean arterial pressure) from disproportionately influencing the algorithm;

- (3) Temporal window creation: To allow the algorithm to learn time-dependent patterns in the patient data, we divided the continuous stream of vital signs into temporal windows, i.e., fixed-length segments of consecutive recordings. In this study, each temporal window contained 24 h of continuous vital sign recordings;

- (4) Dataset balancing: Because VAP events were rare in this dataset (less than 1% of temporal windows), the synthetic minority oversampling technique (SMOTE) [17] was applied (Figure S3). SMOTE generates synthetic VAP-positive temporal windows by interpolating between existing positive windows and their nearest positive neighbors, so that the new examples stay close to the structure of the original data. This ensured that the model was exposed to enough positive examples during training, with the aim of improving its sensitivity and specificity [18]. Details on the SMOTE technique are available in Supplementary file B.
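The four preprocessing steps above can be sketched with NumPy. This is a minimal illustration under stated assumptions, not the study's pipeline: all function names (`resample_hourly`, `fill_linear`, `standardize`, `make_windows`, `smote`) are hypothetical, hourly resampling is assumed to average all measurements falling in the same hour, the window stride is an assumed parameter (the text states only the 24 h length), and the standardization statistics are assumed to come from training data. The study cites the imbalanced-learn toolbox [17] for SMOTE; the from-scratch `smote` below only illustrates the interpolation idea on flattened windows.

```python
import numpy as np

WINDOW_HOURS = 24  # window length stated in the text


def resample_hourly(t_hours, values, n_hours):
    """Average irregular measurements into hourly bins; empty bins become NaN.

    Assumption: t_hours are hours elapsed since the start of the record.
    """
    t = np.asarray(t_hours, dtype=float)
    v = np.asarray(values, dtype=float)
    bins = np.floor(t).astype(int)
    keep = (bins >= 0) & (bins < n_hours) & ~np.isnan(v)
    sums = np.bincount(bins[keep], weights=v[keep], minlength=n_hours)
    counts = np.bincount(bins[keep], minlength=n_hours)
    out = np.full(n_hours, np.nan)
    has_data = counts > 0
    out[has_data] = sums[has_data] / counts[has_data]
    return out


def fill_linear(x):
    """Fill NaN gaps by linear interpolation between observed hours.

    np.interp holds the first/last observed value for leading/trailing gaps.
    """
    x = np.array(x, dtype=float)
    idx = np.arange(len(x))
    ok = ~np.isnan(x)
    if not ok.any():
        raise ValueError("no observed values to interpolate from")
    x[~ok] = np.interp(idx[~ok], idx[ok], x[ok])
    return x


def standardize(X, mean, std):
    """z-score each column; mean/std are assumed to be computed on training data only."""
    return (np.asarray(X, dtype=float) - mean) / std


def make_windows(X, width=WINDOW_HOURS, stride=1):
    """Cut an (hours, features) array into (n_windows, width, features).

    stride is a hypothetical parameter: the text does not say whether windows overlap.
    """
    X = np.asarray(X, dtype=float)
    starts = range(0, len(X) - width + 1, stride)
    if len(starts) == 0:
        return np.empty((0, width, X.shape[1]))
    return np.stack([X[s:s + width] for s in starts])


def smote(X_min, n_new, k=5, rng=None):
    """SMOTE-style oversampling of minority rows (e.g. flattened positive windows).

    Each synthetic row is x + u * (neighbor - x), with u ~ U[0, 1) and the neighbor
    drawn from the k nearest minority rows. O(n^2) memory: fine for a sketch only.
    """
    rng = np.random.default_rng(rng)
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    if n < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    k = min(k, n - 1)
    d2 = ((X_min[:, None, :] - X_min[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d2, np.inf)  # a row is not its own neighbor
    neighbors = np.argsort(d2, axis=1)[:, :k]
    base = rng.integers(0, n, size=n_new)  # seed row for each synthetic sample
    nn = neighbors[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))
    return X_min[base] + gap * (X_min[nn] - X_min[base])
```

In use, positive windows of shape `(n, 24, n_features)` would be flattened with `reshape(n, -1)` before oversampling and reshaped back afterwards, and oversampling is normally restricted to the training split so that synthetic windows never reach the evaluation data. With imbalanced-learn, the corresponding call is `imblearn.over_sampling.SMOTE(k_neighbors=5).fit_resample(X, y)`.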

2.8. Data Splitting for Algorithm Training and Evaluation

2.9. Algorithm Development

2.10. Comparator Models

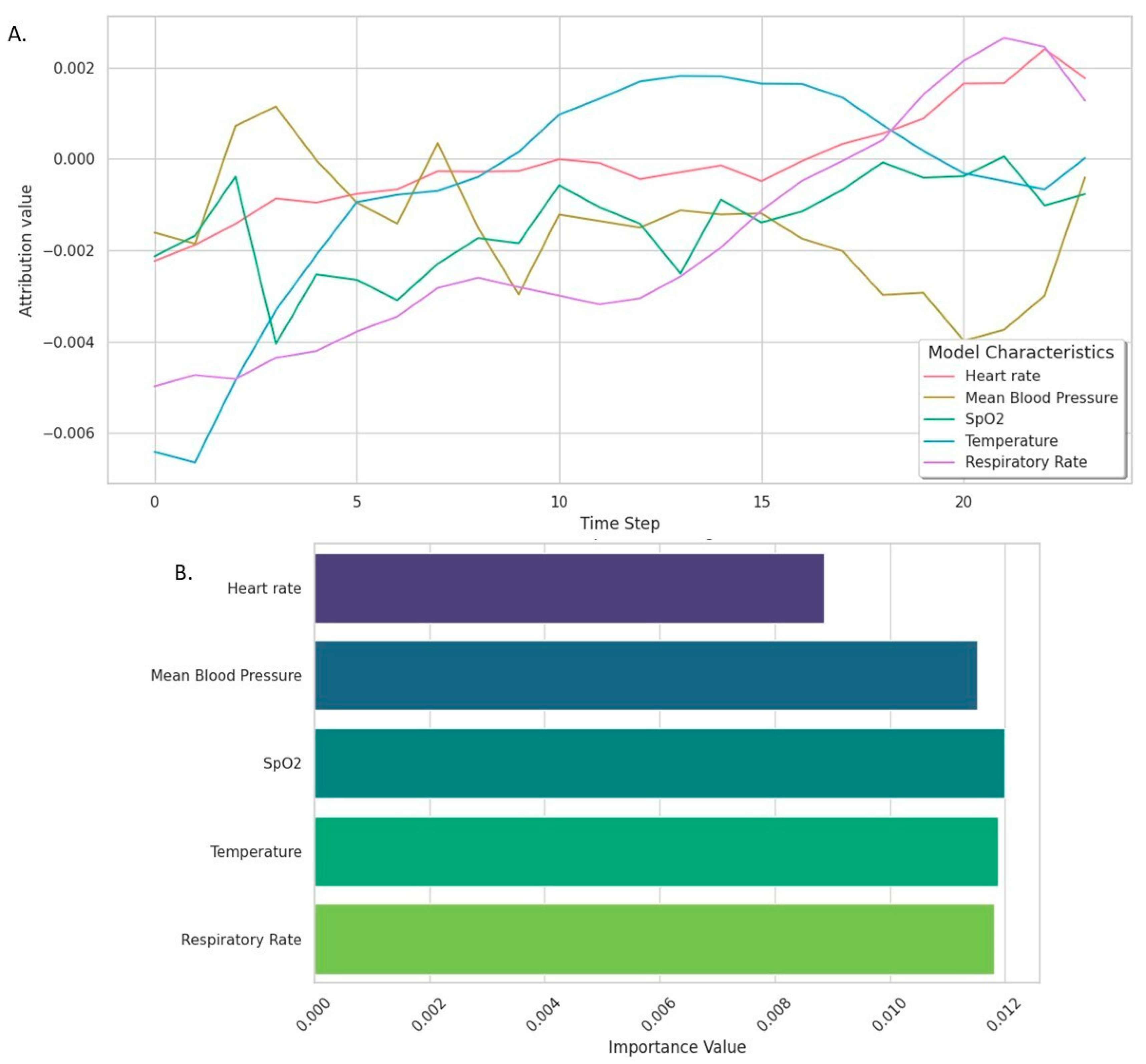

2.11. Model Explainability

2.12. Model Calibration

2.13. Statistical Analysis

3. Results

3.1. VAP Episodes

3.2. Population Characteristics

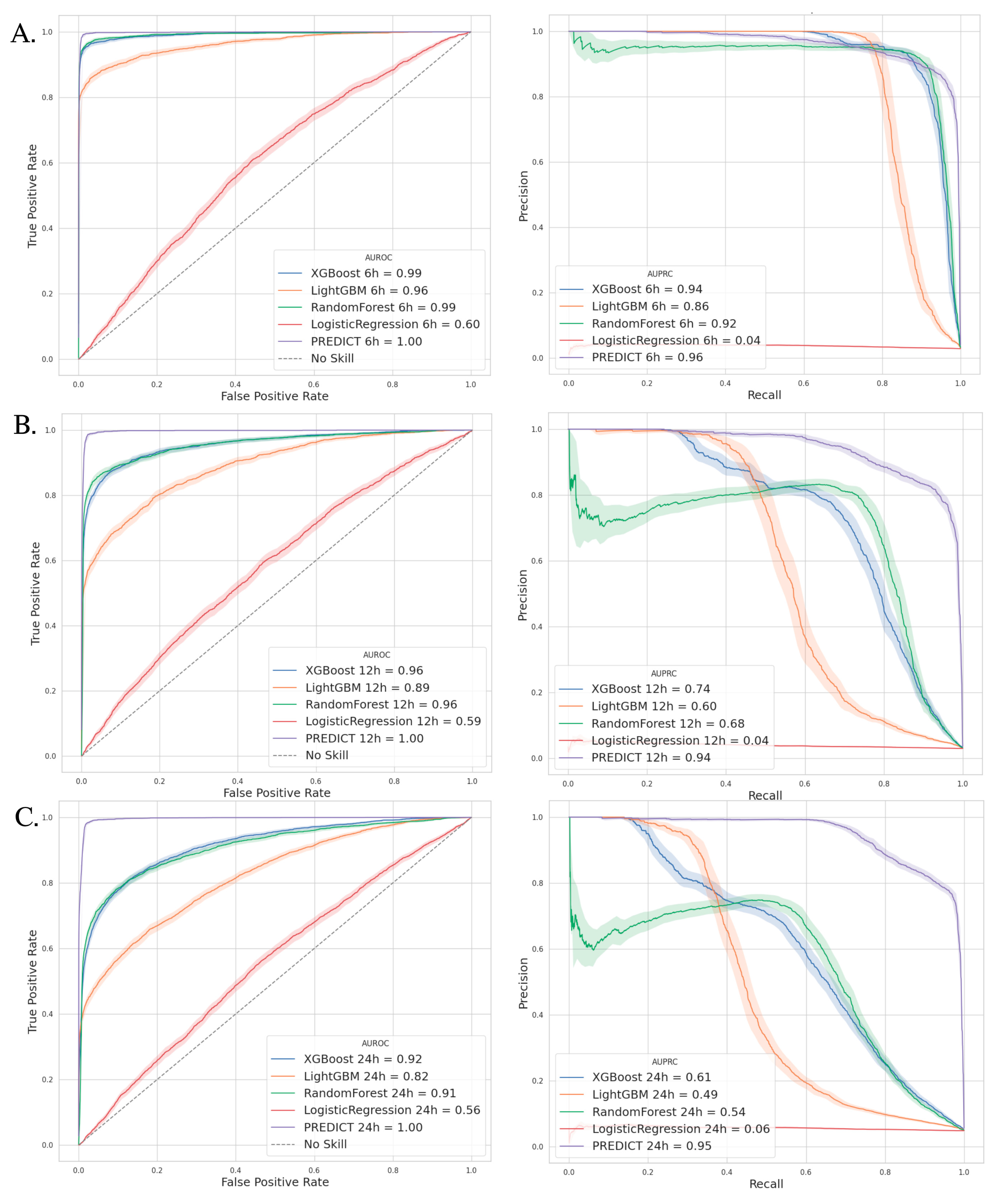

3.3. Outcomes—Model Performance

3.4. Comparator Models

3.5. Calibration Performance

3.6. PREDICT Model Explainability

3.7. Patients’ Prognosis

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ARDS | Acute Respiratory Distress Syndrome |
| AUPRC | Area Under the Precision–Recall Curve |
| AUROC | Area Under the Receiver Operating Characteristic Curve |
| BAL | Bronchoalveolar Lavage |
| CDC | Centers for Disease Control and Prevention |
| COPD | Chronic Obstructive Pulmonary Disease |
| CPIS | Clinical Pulmonary Infection Score |
| HPO | Hyperparameter Optimization |
| ICD-10 | International Classification of Diseases, 10th Revision |
| ICU | Intensive Care Unit |
| IDSA/ATS | Infectious Diseases Society of America/American Thoracic Society |
| IQR | Interquartile Range |
| LSTM | Long Short-Term Memory |
| ML | Machine Learning |
| MIMIC-IV | Medical Information Mart for Intensive Care IV |
| MV | Mechanical Ventilation |
| NPV | Negative Predictive Value |
| PAVM | Pneumonie Associée à la Ventilation Mécanique |
| PREDICT | Pneumonia Risk Evaluation and Diagnostic Intelligence via Computational Technology |
| PPV | Positive Predictive Value |
| ROC | Receiver Operating Characteristic |
| SAPS-II | Simplified Acute Physiology Score II |
| SFAR/SRLF | Société Française d’Anesthésie et de Réanimation/Société de Réanimation de Langue Française |
| SMOTE | Synthetic Minority Oversampling Technique |
| SOFA | Sepsis-related Organ Failure Assessment |
| SQL | Structured Query Language |
| TRIPOD+AI | Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (with AI guidelines) |
| VAP | Ventilator-Associated Pneumonia |
| VFD D28 | Ventilation-Free Days at Day 28 |

References

- Papazian, L.; Klompas, M.; Luyt, C.-E. Ventilator-associated pneumonia in adults: A narrative review. Intensive Care Med. 2020, 46, 888–906.

- CDC. Ventilator-Associated Pneumonia Basics. Ventilator-Associated Pneumonia (VAP). 2024. Available online: https://www.cdc.gov/ventilator-associated-pneumonia/about/index.html (accessed on 10 June 2024).

- Ramírez-Estrada, S.; Lagunes, L.; Peña-López, Y.; Vahedian-Azimi, A.; Nseir, S.; Arvaniti, K.; Bastug, A.; Totorika, I.; Oztoprak, N.; Bouadma, L.; et al. Assessing predictive accuracy for outcomes of ventilator-associated events in an international cohort: The EUVAE study. Intensive Care Med. 2018, 44, 1212–1220.

- Jansson, M.; Ala-Kokko, T.; Ahvenjärvi, L.; Karhu, J.; Ohtonen, P.; Syrjälä, H. What Is the Applicability of a Novel Surveillance Concept of Ventilator-Associated Events? Infect. Control Hosp. Epidemiol. 2017, 38, 983–988.

- Samadani, A.; Wang, T.; van Zon, K.; Celi, L.A. VAP risk index: Early prediction and hospital phenotyping of ventilator-associated pneumonia using machine learning. Artif. Intell. Med. 2023, 146, 102715.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

- Lim, B.; Zohren, S. Time-series forecasting with deep learning: A survey. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2021, 379, 20200209.

- Johnson, A.E.W.; Bulgarelli, L.; Shen, L.; Gayles, A.; Shammout, A.; Horng, S.; Pollard, T.J.; Hao, S.; Moody, B.; Gow, B.; et al. MIMIC-IV, a freely accessible electronic health record dataset. Sci. Data 2023, 10, 1.

- Desautels, T.; Calvert, J.; Hoffman, J.; Jay, M.; Kerem, Y.; Shieh, L.; Shimabukuro, D.; Chettipally, U.; Feldman, M.D.; Barton, C.; et al. Prediction of Sepsis in the Intensive Care Unit With Minimal Electronic Health Record Data: A Machine Learning Approach. JMIR Med. Inf. 2016, 4, e28.

- Calvert, J.S.; Price, D.A.; Chettipally, U.K.; Barton, C.W.; Feldman, M.D.; Hoffman, J.L.; Jay, M.; Das, R. A computational approach to early sepsis detection. Comput. Biol. Med. 2016, 74, 69–73.

- Collins, G.S.; Moons, K.G.M.; Dhiman, P.; Riley, R.D.; Beam, A.L.; Van Calster, B.; Ghassemi, M.; Liu, X.; Reitsma, J.B.; van Smeden, M.; et al. TRIPOD+AI statement: Updated guidance for reporting clinical prediction models that use regression or machine learning methods. BMJ 2024, 385, e078378.

- Richard, J.; Beydon, L.; Cantagrel, S.; Cuvelier, A.; Fauroux, B.; Garo, B.; Holzapfel, L.; Lesieur, O.; Levraut, J.; Maury, E. Sevrage de la ventilation mécanique (à l’exclusion du nouveau-né et du réveil d’anesthésie). Réanimation 2001, 10, 699–705.

- Guidelines for the Management of Adults with Hospital-acquired, Ventilator-associated, and Healthcare-Associated Pneumonia. Am. J. Respir. Crit. Care Med. 2005, 171, 388–416.

- Leone, M.; Bouadma, L.; Bouhemad, B.; Brissaud, O.; Dauger, S.; Gibot, S.; Hraiech, S.; Jung, B.; Kipnis, E.; Launey, Y.; et al. Pneumonies associées aux soins de réanimation. Anesthésie Réanimation 2018, 4, 421–441.

- Seymour, C.W.; Liu, V.X.; Iwashyna, T.J.; Brunkhorst, F.M.; Rea, T.D.; Scherag, A.; Rubenfeld, G.; Kahn, J.M.; Shankar-Hari, M.; Singer, M.; et al. Assessment of Clinical Criteria for Sepsis: For the Third International Consensus Definitions for Sepsis and Septic Shock (Sepsis-3). JAMA 2016, 315, 762–774.

- Lemaître, G.; Nogueira, F. Imbalanced-learn: A Python Toolbox to Tackle the Curse of Imbalanced Datasets in Machine Learning. J. Mach. Learn. Res. 2017, 18, 1–5.

- Belhaouari, S.B.; Islam, A.; Kassoul, K.; Al-Fuqaha, A.; Bouzerdoum, A. Oversampling techniques for imbalanced data in regression. Expert Syst. Appl. 2024, 252, 124118.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Davis, J.; Goadrich, M. The relationship between Precision-Recall and ROC curves. In Proceedings of the 23rd International Conference on Machine Learning, ICML ’06, Pittsburgh, PA, USA, 25–29 June 2006; Association for Computing Machinery: New York, NY, USA, 2006; pp. 233–240.

- Sundararajan, M.; Taly, A.; Yan, Q. Axiomatic Attribution for Deep Networks. arXiv 2017, arXiv:1703.01365.

- Shrikumar, A.; Greenside, P.; Shcherbina, A.; Kundaje, A. Not Just a Black Box: Learning Important Features Through Propagating Activation Differences. arXiv 2017, arXiv:1605.01713.

- Nonparametric Statistical Methods. Wiley Series in Probability and Statistics. Available online: https://onlinelibrary-wiley-com.lama.univ-amu.fr/doi/book/10.1002/9781119196037 (accessed on 4 May 2025).

- Liang, Y.; Zhu, C.; Tian, C.; Lin, Q.; Li, Z.; Li, Z.; Ni, D.; Ma, X. Early prediction of ventilator-associated pneumonia in critical care patients: A machine learning model. BMC Pulm. Med. 2022, 22, 250.

- Gattarello, S.; Lagunes, L.; Vidaur, L.; Solé-Violán, J.; Zaragoza, R.; Vallés, J.; Torres, A.; Sierra, R.; Sebastian, R.; Rello, J. Improvement of antibiotic therapy and ICU survival in severe non-pneumococcal community-acquired pneumonia: A matched case–control study. Crit. Care 2015, 19, 335.

- Kumar, A.; Roberts, D.; Wood, K.E.; Light, B.; Parrillo, J.E.; Sharma, S.; Suppes, R.; Feinstein, D.; Zanotti, S.; Taiberg, L.; et al. Duration of hypotension before initiation of effective antimicrobial therapy is the critical determinant of survival in human septic shock. Crit. Care Med. 2006, 34, 1589–1596.

- Timsit, J.-F.; Bassetti, M.; Cremer, O.; Daikos, G.; De Waele, J.; Kallil, A.; Kipnis, E.; Kollef, M.; Laupland, K.; Paiva, J.-A.; et al. Rationalizing antimicrobial therapy in the ICU: A narrative review. Intensive Care Med. 2019, 45, 172–189.

- Moazemi, S.; Vahdati, S.; Li, J.; Kalkhoff, S.; Castano, L.J.V.; Dewitz, B.; Bibo, R.; Sabouniaghdam, P.; Tootooni, M.S.; Bundschuh, R.A.; et al. Artificial intelligence for clinical decision support for monitoring patients in cardiovascular ICUs: A systematic review. Front. Med. (Lausanne) 2023, 10, 1109411.

- Devnath, L.; Fan, Z.; Luo, S.; Summons, P.; Wang, D. Detection and Visualisation of Pneumoconiosis Using an Ensemble of Multi-Dimensional Deep Features Learned from Chest X-rays. Int. J. Environ. Res. Public Health 2022, 19, 11193.

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning; Springer Series in Statistics; Springer: New York, NY, USA, 2009; Available online: http://link.springer.com/10.1007/978-0-387-84858-7 (accessed on 15 August 2024).

- Dormann, C.F.; Elith, J.; Bacher, S.; Buchmann, C.; Carl, G.; Carré, G.; Marquéz, J.R.G.; Gruber, B.; Lafourcade, B.; Leitão, P.J.; et al. Collinearity: A review of methods to deal with it and a simulation study evaluating their performance. Ecography 2013, 36, 27–46.

- Wolfensberger, A.; Meier, A.H.; Kuster, S.P.; Mehra, T.; Meier, M.-T.; Sax, H. Should International Classification of Diseases codes be used to survey hospital-acquired pneumonia? J. Hosp. Infect. 2018, 99, 81–84.

- Leong, Y.H.; Khoo, Y.L.; Abdullah, H.R.; Ke, Y. Compliance to ventilator care bundles and its association with ventilator-associated pneumonia. Anesthesiol. Perioper. Sci. 2024, 2, 20.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv 2015, arXiv:1502.03167.

- Santurkar, S.; Tsipras, D.; Ilyas, A.; Madry, A. How Does Batch Normalization Help Optimization? arXiv 2019, arXiv:1805.11604.

- Krogh, A.; Hertz, J. A Simple Weight Decay Can Improve Generalization. In Advances in Neural Information Processing Systems; Morgan-Kaufmann: Burlington, MA, USA, 1991; Available online: https://proceedings.neurips.cc/paper/1991/hash/8eefcfdf5990e441f0fb6f3fad709e21-Abstract.html (accessed on 23 August 2024).

- Sutskever, I.; Martens, J.; Dahl, G.; Hinton, G. On the importance of initialization and momentum in deep learning. Proc. Mach. Learn. Res. 2013, 28, 1139–1147.

| Characteristic | Overall ¹ (n = 904) | VAP ¹ (n = 452) | No VAP ¹ (n = 452) | p-Value ² |
|---|---|---|---|---|
| Sex (male) | 573 (63%) | 305 (67%) | 268 (59%) | 0.011 * |
| Age (years) | 64.2 [52.1–75.3] | 63.9 [50.7–74.3] | 65.1 [52.9–76.1] | 0.2 |
| Pre-existing diseases, n (%) | | | | |
| Hypertension | 450 (50%) | 217 (48%) | 233 (52%) | 0.3 |
| Ischemic heart disease | 306 (34%) | 131 (29%) | 175 (39%) | 0.002 * |
| Diabetes mellitus | 216 (24%) | 106 (23%) | 110 (24%) | 0.8 |
| Chronic renal failure | 218 (24%) | 95 (21%) | 123 (27%) | 0.029 * |
| Obstructive sleep apnea | 145 (16%) | 69 (15%) | 76 (17%) | 0.5 |
| Active cancer | 134 (15%) | 56 (12%) | 78 (17%) | 0.039 * |
| COPD | 66 (7.3%) | 43 (9.5%) | 23 (5.1%) | 0.011 * |
| Active hematological malignancy | 31 (3.4%) | 18 (4.0%) | 13 (2.9%) | 0.4 |
| Source of admission to ICU, n (%) | | | | 0.9 |
| Emergency ward | 665 (74%) | 331 (73%) | 334 (74%) | |
| Medical ward | 150 (17%) | 74 (16%) | 76 (17%) | |
| Elective surgery | 89 (9.8%) | 47 (10%) | 42 (9.3%) | |
| SOFA on admission | 2.0 [0.0–4.0] | 1.0 [0.0–4.0] | 2.0 [0.0–4.0] | 0.2 |
| SAPS-II on admission | 42.0 [32.0–54.0] | 42.0 [31.0–53.0] | 43.0 [34.0–55.0] | 0.053 |
| Time from ICU admission to initiation of MV (hours) | 7.2 [1.7–67.3] | 8.2 [1.8–73.0] | 6.0 [1.7–60.4] | 0.4 |
| Reason for ICU admission, n (%) | | | | |
| Sepsis | 179 (20%) | 91 (20%) | 88 (19%) | |
| Trauma | 97 (11%) | 73 (16%) | 24 (5.3%) | |
| Hemorrhagic or ischemic stroke | 75 (8.3%) | 44 (9.7%) | 31 (6.9%) | |
| Acute malignancy | 46 (5.1%) | 17 (3.8%) | 29 (6.4%) | |
| ARDS | 40 (4.4%) | 34 (7.5%) | 6 (1.3%) | |
| Pneumonia | 34 (3.8%) | 20 (4.4%) | 14 (3.1%) | |
| Myocardial infarction | 26 (2.9%) | 8 (1.8%) | 18 (4.0%) | |
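The p-values in this table compare the VAP and No VAP groups, but the test named in footnote 2 is not reproduced in this excerpt. As a hedged sketch, a Pearson chi-squared test without continuity correction on a 2 × 2 table gives values consistent with several binary rows (e.g., sex, 0.011; ischemic heart disease, 0.002). The helper `chi2_2x2` is hypothetical and uses only the Python standard library, relying on the fact that for one degree of freedom the chi-squared survival function equals erfc(√(x/2)).

```python
import math


def chi2_2x2(events_a, n_a, events_b, n_b):
    """Pearson chi-squared test (no continuity correction) for events_a/n_a vs events_b/n_b.

    Returns (statistic, p_value). With 1 degree of freedom, P(X > x) = erfc(sqrt(x / 2)).
    """
    observed = [[events_a, n_a - events_a], [events_b, n_b - events_b]]
    total = n_a + n_b
    row_totals = [n_a, n_b]
    col_totals = [events_a + events_b, total - events_a - events_b]
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed[i][j] - expected) ** 2 / expected
    return stat, math.erfc(math.sqrt(stat / 2))


# Male sex: 305 of 452 patients with VAP vs 268 of 452 without
stat, p = chi2_2x2(305, 452, 268, 452)
print(f"chi2 = {stat:.2f}, p = {p:.3f}")
```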

| Model | VAP prediction horizon | Best threshold | AUPRC (%) | Sensitivity (%) | Specificity (%) | PPV (%) | NPV (%) |
|---|---|---|---|---|---|---|---|
| PREDICT | 6 h | 0.53 | 96.0 | 89.7 | 99.7 | 89.8 | 99.7 |
| | 12 h | 0.52 | 94.1 | 85.9 | 99.6 | 85.6 | 99.6 |
| | 24 h | 0.43 | 94.7 | 85.1 | 99.2 | 85.0 | 99.2 |
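Each row of this table reports one operating point: a window is called VAP-positive when the model output reaches the listed threshold. How the "best" threshold was selected is not described in this part of the article, and the helper name and toy data below are hypothetical; the sketch only shows how the four threshold-dependent metrics follow from the confusion matrix.

```python
def threshold_metrics(y_true, y_prob, threshold):
    """Sensitivity, specificity, PPV and NPV when scores >= threshold are called positive."""
    tp = fp = tn = fn = 0
    for y, p in zip(y_true, y_prob):
        predicted_positive = p >= threshold
        if y == 1 and predicted_positive:
            tp += 1
        elif y == 1:
            fn += 1
        elif predicted_positive:
            fp += 1
        else:
            tn += 1

    def ratio(num, den):
        # Undefined metrics (empty denominator) are reported as NaN
        return num / den if den else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }


# Toy labels and scores (hypothetical), evaluated at the 6 h threshold from the table
metrics = threshold_metrics([1, 1, 0, 0, 0], [0.9, 0.4, 0.6, 0.2, 0.1], threshold=0.53)
print(metrics)
```

Sensitivity and specificity do not depend on how common VAP is in the evaluated set, whereas PPV and NPV do, so the PPV and NPV columns should be read together with the class balance of the test data.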

| Outcome | Overall ¹ (n = 904) | VAP ¹ (n = 452) | No VAP ¹ (n = 452) | p-Value ² |
|---|---|---|---|---|
| Length of stay, hospital (days) | 21.4 [12.9–35.2] | 25.9 [17.1–39.0] | 16.3 [9.7–28.3] | <0.001 * |
| Length of stay, ICU (days) | 14.0 [7.8–22.9] | 18.9 [13.0–30.0] | 8.4 [5.3–15.7] | <0.001 * |
| Time from ICU admission to death (days) | 38.0 [13.5–117.5] | 42.5 [18.3–103.3] | 30.1 [7.7–147.8] | 0.007 * |
| Duration of mechanical ventilation (days) | 8.4 [4.0–15.4] | 13.6 [8.4–21.7] | 4.4 [3.0–8.3] | <0.001 * |
| Ventilation-free days at day 28 (days) | 14.5 [0.0–22.6] | 9.1 [0.0–17.6] | 20.9 [0.0–24.5] | <0.001 * |
| ICU mortality, n (%) | 188 (21%) | 110 (24%) | 78 (17%) | 0.009 * |
| In-hospital mortality, n (%) | 261 (29%) | 139 (31%) | 122 (27%) | 0.2 |
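Ventilation-free days at day 28 (VFD D28) combine survival and ventilation duration into a single outcome. This excerpt does not restate the study's exact definition; the hypothetical helper below assumes one common convention, in which patients who die before day 28 receive 0 and survivors receive 28 minus the days spent on mechanical ventilation, floored at 0.

```python
HORIZON_DAYS = 28


def ventilation_free_days(mv_days, died_before_day28, horizon=HORIZON_DAYS):
    """VFD at day 28 under an assumed common convention.

    mv_days: days on mechanical ventilation within the 28-day horizon.
    The horizon's starting point (e.g. ICU admission or MV initiation) is an assumption.
    """
    if died_before_day28:
        return 0.0  # death before day 28 counts as zero ventilation-free days
    return max(0.0, horizon - mv_days)


print(ventilation_free_days(4.4, died_before_day28=False))
print(ventilation_free_days(4.4, died_before_day28=True))
```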

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Agard, G.; Roman, C.; Guervilly, C.; Forel, J.-M.; Orléans, V.; Barrau, D.; Auquier, P.; Ouladsine, M.; Boyer, L.; Hraiech, S. An Innovative Deep Learning Approach for Ventilator-Associated Pneumonia (VAP) Prediction in Intensive Care Units—Pneumonia Risk Evaluation and Diagnostic Intelligence via Computational Technology (PREDICT). J. Clin. Med. 2025, 14, 3380. https://doi.org/10.3390/jcm14103380
