Large Language Models in Cancer Imaging: Applications and Future Perspectives

Abstract

1. Introduction

2. Large Language Models: Definitions and Different Architectures

2.1. LLM Definition

2.2. Encoder-Only Models

2.3. Decoder-Only Models

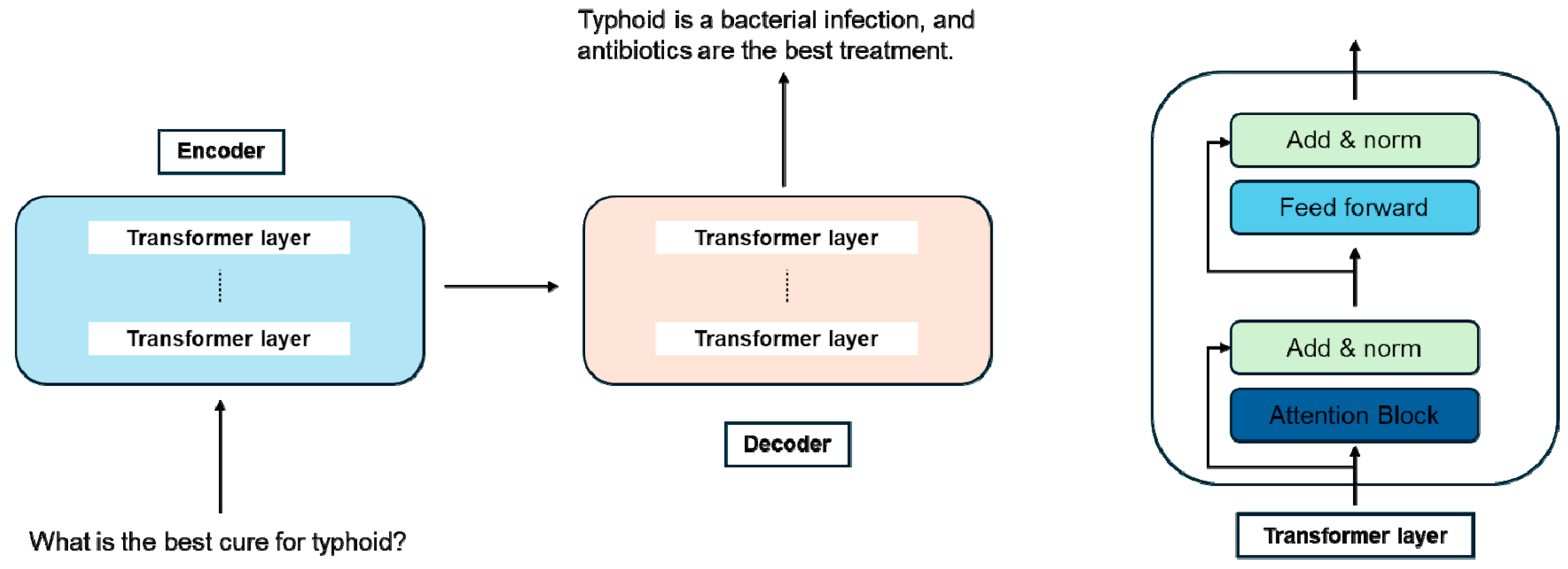

2.4. Encoder–Decoder Models (Figure 1)

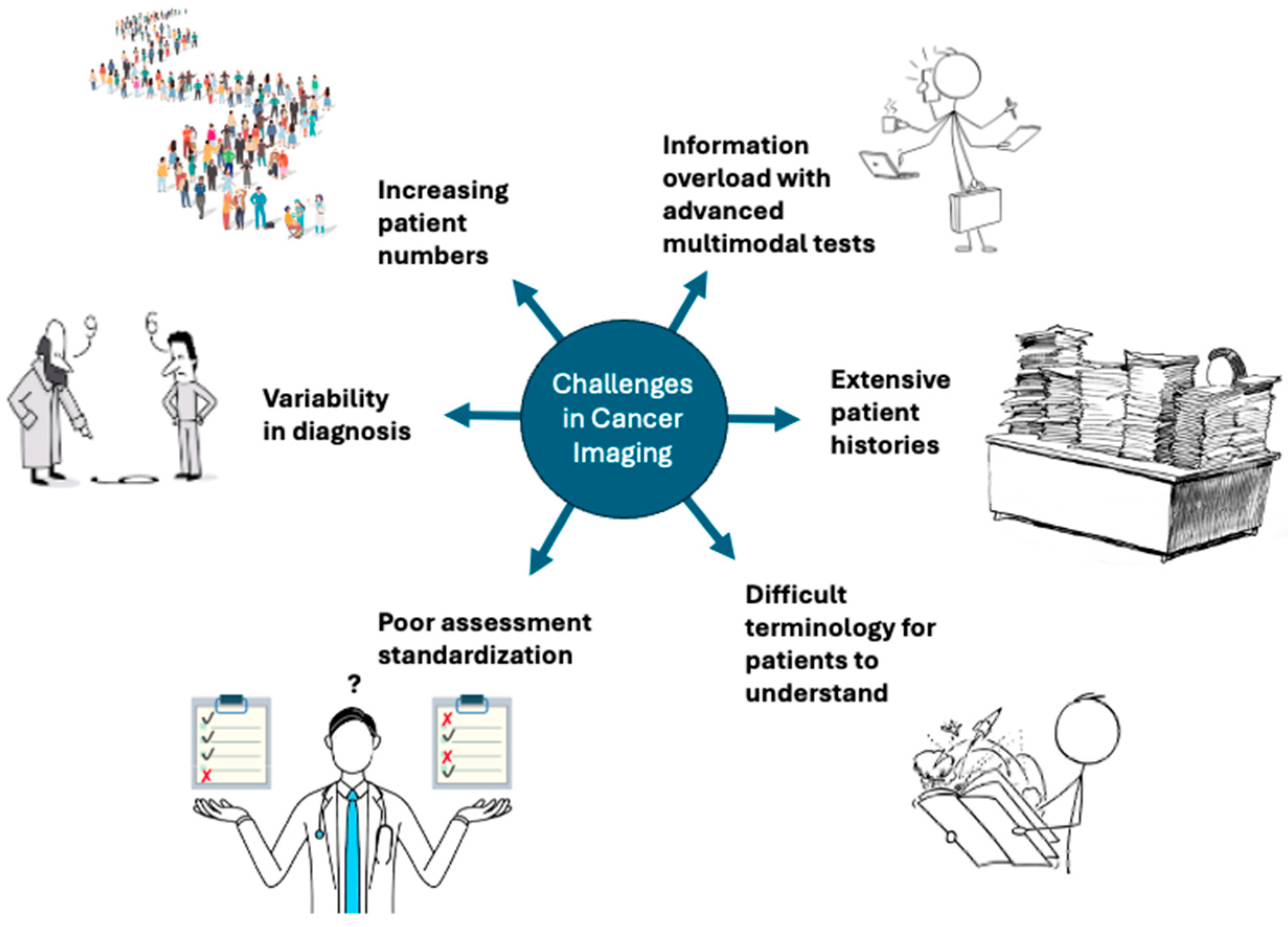

3. Current Challenges in Cancer Imaging

4. LLMs for Radiology Exam Protocol Standardization

5. LLMs for Improving Reporting

6. LLM-Based Cancer Classification and Staging

7. LLM for Individual Prognostication

8. LLMs for Patient Communication

9. Pitfalls and Limitations

10. Future Perspectives

10.1. Tumor Boards

10.2. Preventing Adverse Events

10.3. Drug Development

10.4. Prognostication

10.5. Predictive Value

10.6. Response Assessment

10.7. Treatment Selection

11. Summary

Author Contributions

Funding

Conflicts of Interest

| Model | Type | Availability | Release Year |
|---|---|---|---|
| BERT | Encoder-only | Open-source | 2018 |
| RoBERTa | Encoder-only | Open-source | 2019 |
| ERNIE | Encoder-only | Open-source | 2019 |
| ALBERT | Encoder-only | Open-source | 2019 |
| GPT series | Decoder-only | Closed-source (GPT-3 onward) | 2018–present |
| DeepSeek series | Decoder-only | Open-source | 2023–present |
| LLaMA series | Decoder-only | Open-source | 2023–present |
| T5 | Encoder–Decoder | Open-source | 2019–present |
| BART | Encoder–Decoder | Open-source | 2019 |
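The table groups models into three architecture types, and the practical difference between them is which tokens each self-attention layer is allowed to see. The sketch below is a minimal illustration, assuming the standard transformer formulation: encoder-only models (BERT-style) use full bidirectional attention, decoder-only models (GPT/LLaMA-style) use a causal mask, and encoder-decoder models (T5/BART-style) combine both and add cross-attention from decoder to encoder. The function names (`bidirectional_mask`, `causal_mask`, `cross_attention_mask`, `masks_for`) are hypothetical helpers, not part of any library, and padding masks are deliberately omitted.

```python
"""Attention masks distinguishing the three architecture types in the table.

Illustrative sketch only: mask[i][j] == 1 means query position i may attend
to key position j. Padding masks and all other model details are omitted.
"""

from typing import Dict, List

Mask = List[List[int]]


def bidirectional_mask(n: int) -> Mask:
    """Encoder-only (BERT-style): every token attends to every token."""
    return [[1] * n for _ in range(n)]


def causal_mask(n: int) -> Mask:
    """Decoder-only (GPT/LLaMA-style): token i attends to tokens 0..i only."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def cross_attention_mask(n_target: int, n_source: int) -> Mask:
    """Encoder-decoder (T5/BART-style): each decoder token sees all encoder outputs."""
    return [[1] * n_source for _ in range(n_target)]


def masks_for(architecture: str, n_input: int, n_output: int = 0) -> Dict[str, Mask]:
    """Return the masks one architecture family uses (hypothetical helper).

    n_input is the length of the input sequence; n_output is the length of the
    generated sequence and is only used in the encoder-decoder case.
    """
    if architecture == "encoder-only":
        return {"self": bidirectional_mask(n_input)}
    if architecture == "decoder-only":
        # Prompt and generated text form one sequence under a causal mask.
        return {"self": causal_mask(n_input)}
    if architecture == "encoder-decoder":
        return {
            "encoder_self": bidirectional_mask(n_input),
            "decoder_self": causal_mask(n_output),
            "cross": cross_attention_mask(n_output, n_input),
        }
    raise ValueError(f"unknown architecture: {architecture!r}")


if __name__ == "__main__":
    for row in masks_for("decoder-only", 4)["self"]:
        print(row)
```

Running the script prints the lower-triangular causal mask for a four-token sequence, from `[1, 0, 0, 0]` to `[1, 1, 1, 1]`. That triangle is why decoder-only models generate text one token at a time, whereas encoder-only models see context on both sides of every token and are typically used for classification or information extraction rather than free-text generation.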

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tordjman, M.; Bolger, I.; Yuce, M.; Restrepo, F.; Liu, Z.; Dercle, L.; McGale, J.; Meribout, A.L.; Liu, M.M.; Beddok, A.; et al. Large Language Models in Cancer Imaging: Applications and Future Perspectives. J. Clin. Med. 2025, 14, 3285. https://doi.org/10.3390/jcm14103285

Tordjman M, Bolger I, Yuce M, Restrepo F, Liu Z, Dercle L, McGale J, Meribout AL, Liu MM, Beddok A, et al. Large Language Models in Cancer Imaging: Applications and Future Perspectives. Journal of Clinical Medicine. 2025; 14(10):3285. https://doi.org/10.3390/jcm14103285

Chicago/Turabian Style: Tordjman, Mickael, Ian Bolger, Murat Yuce, Francisco Restrepo, Zelong Liu, Laurent Dercle, Jeremy McGale, Anis L. Meribout, Mira M. Liu, Arnaud Beddok, et al. 2025. "Large Language Models in Cancer Imaging: Applications and Future Perspectives" Journal of Clinical Medicine 14, no. 10: 3285. https://doi.org/10.3390/jcm14103285

APA Style: Tordjman, M., Bolger, I., Yuce, M., Restrepo, F., Liu, Z., Dercle, L., McGale, J., Meribout, A. L., Liu, M. M., Beddok, A., Lee, H.-C., Rohren, S., Yu, R., Mei, X., & Taouli, B. (2025). Large Language Models in Cancer Imaging: Applications and Future Perspectives. Journal of Clinical Medicine, 14(10), 3285. https://doi.org/10.3390/jcm14103285