Development and Validation of an Artificial Intelligence Model for Detecting Rib Fractures on Chest Radiographs

Abstract

1. Introduction

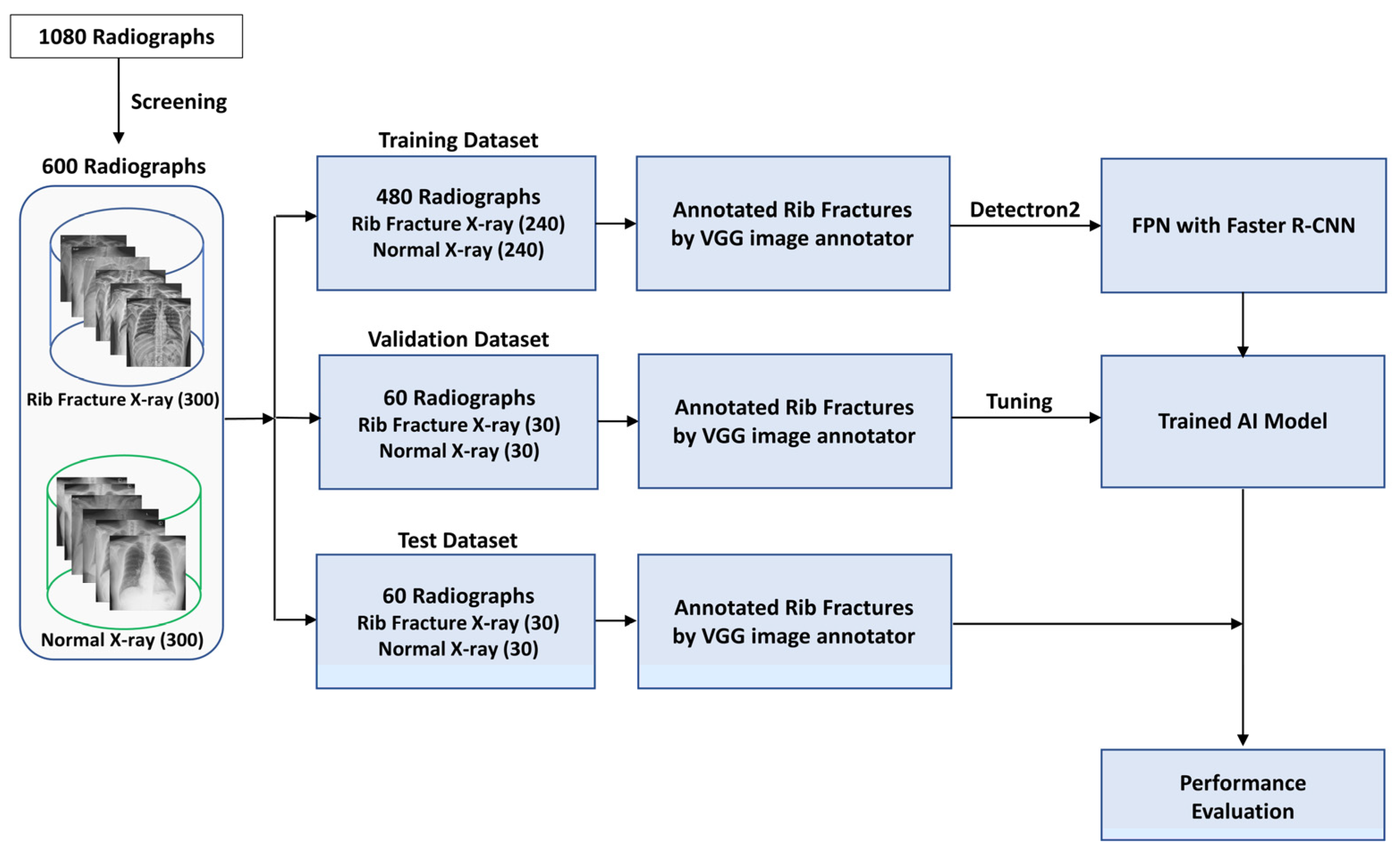

2. Materials and Methods

2.1. Dataset Preparation

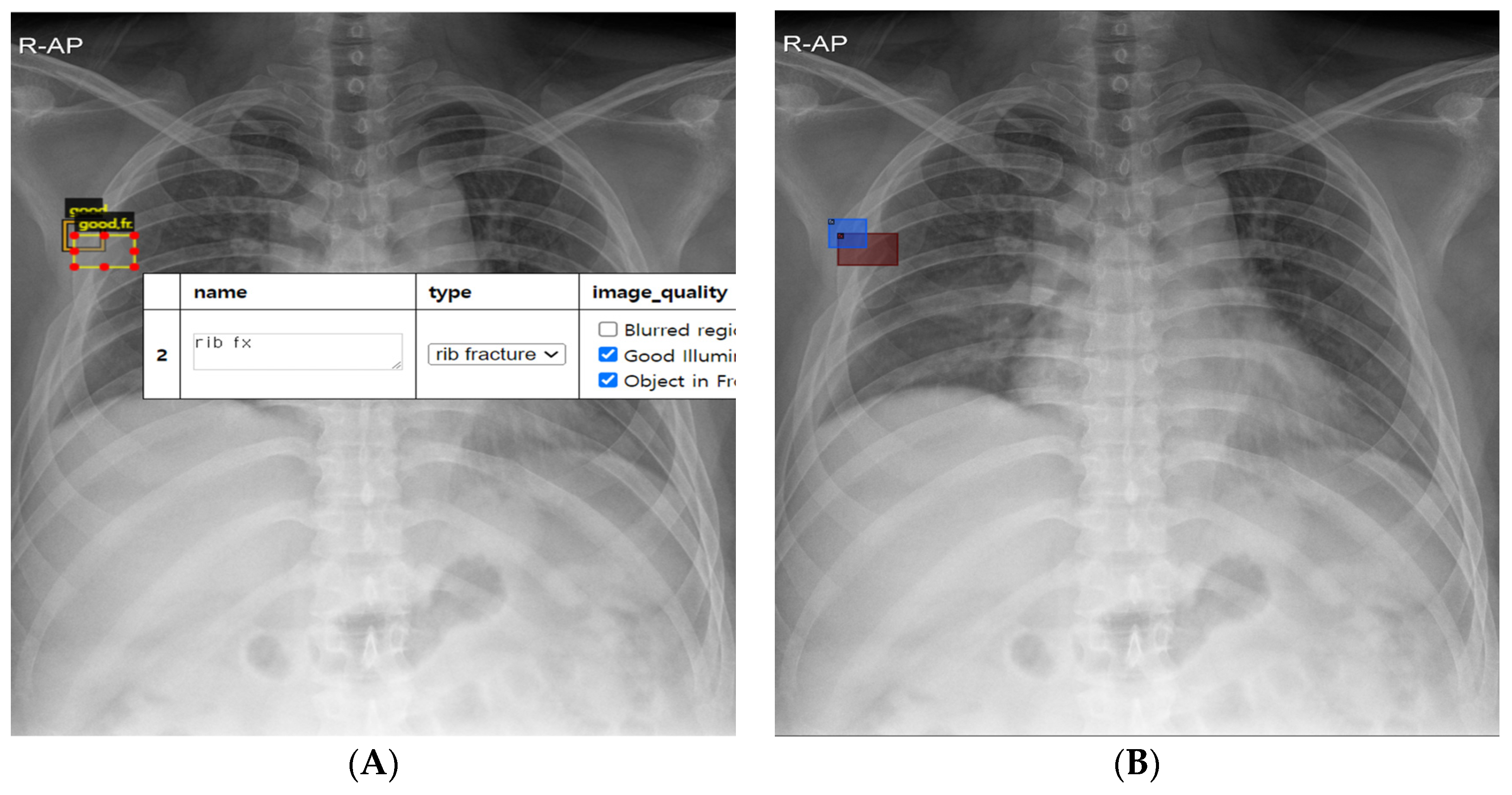

2.2. Rib Fracture Annotation on Chest Radiographs

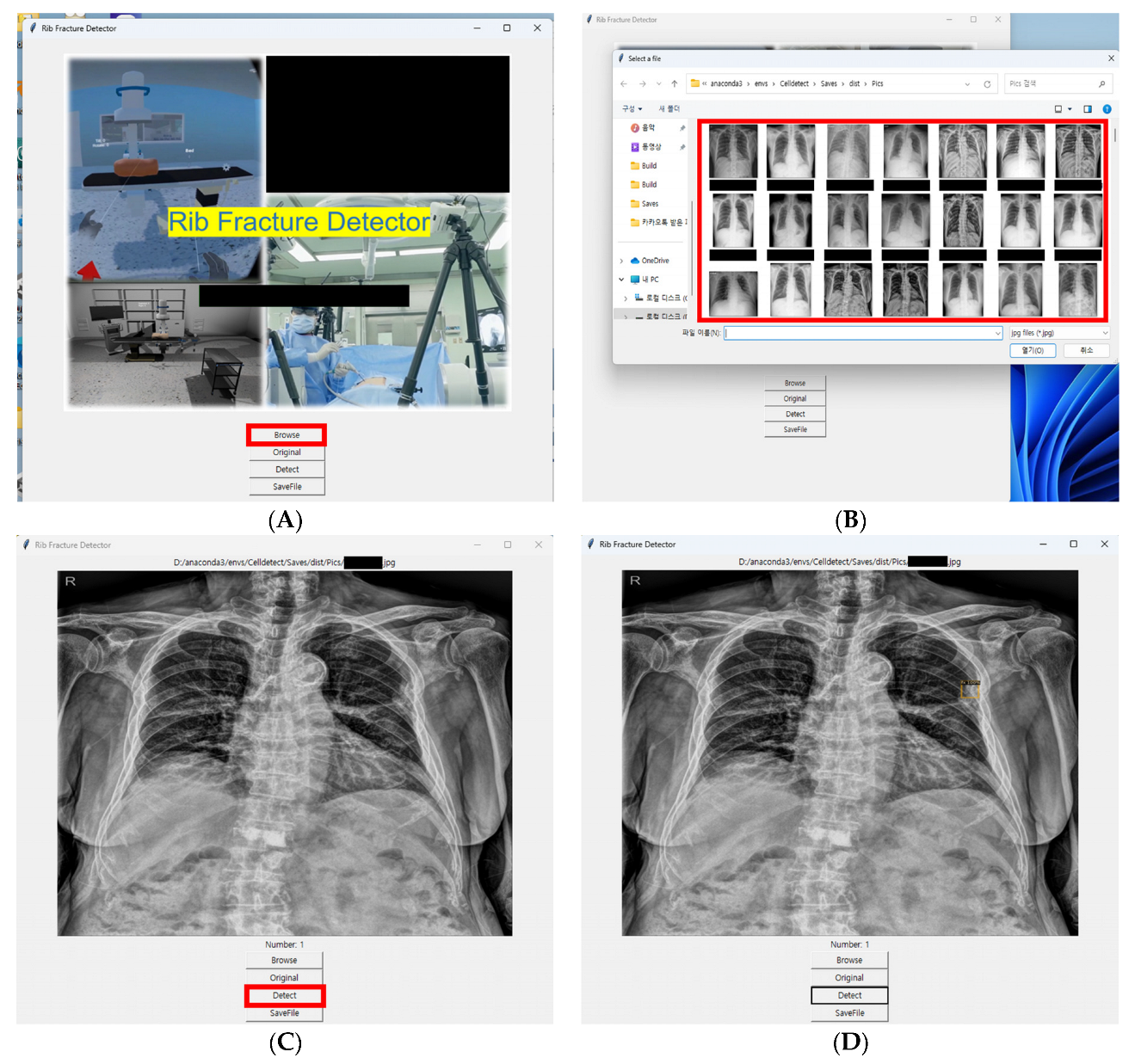

2.3. Development of AI Model

2.4. Evaluation of AI Model

2.5. Statistical Analysis

3. Results

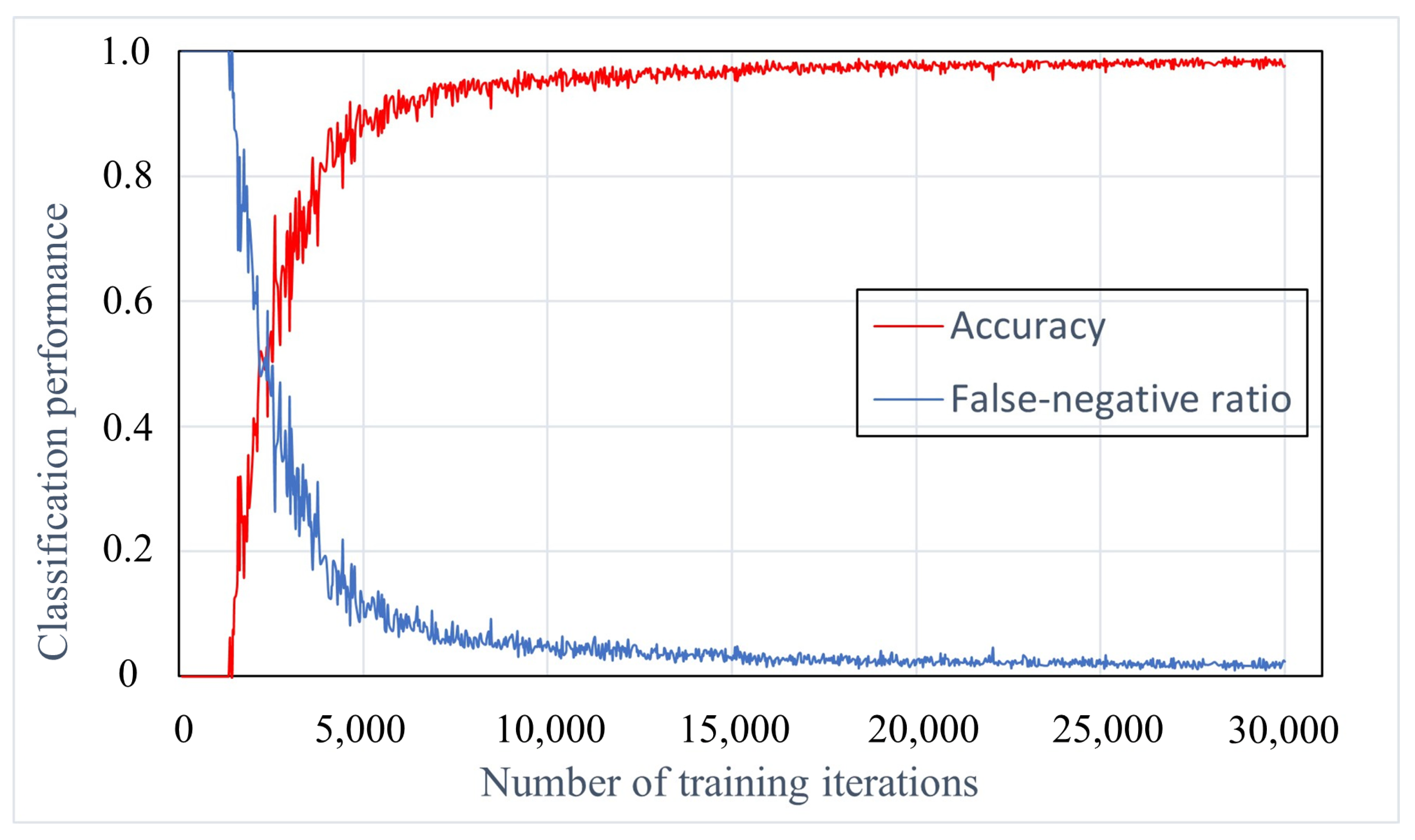

3.1. Training Iterations Experiment

3.2. Classification Performance of AI Model

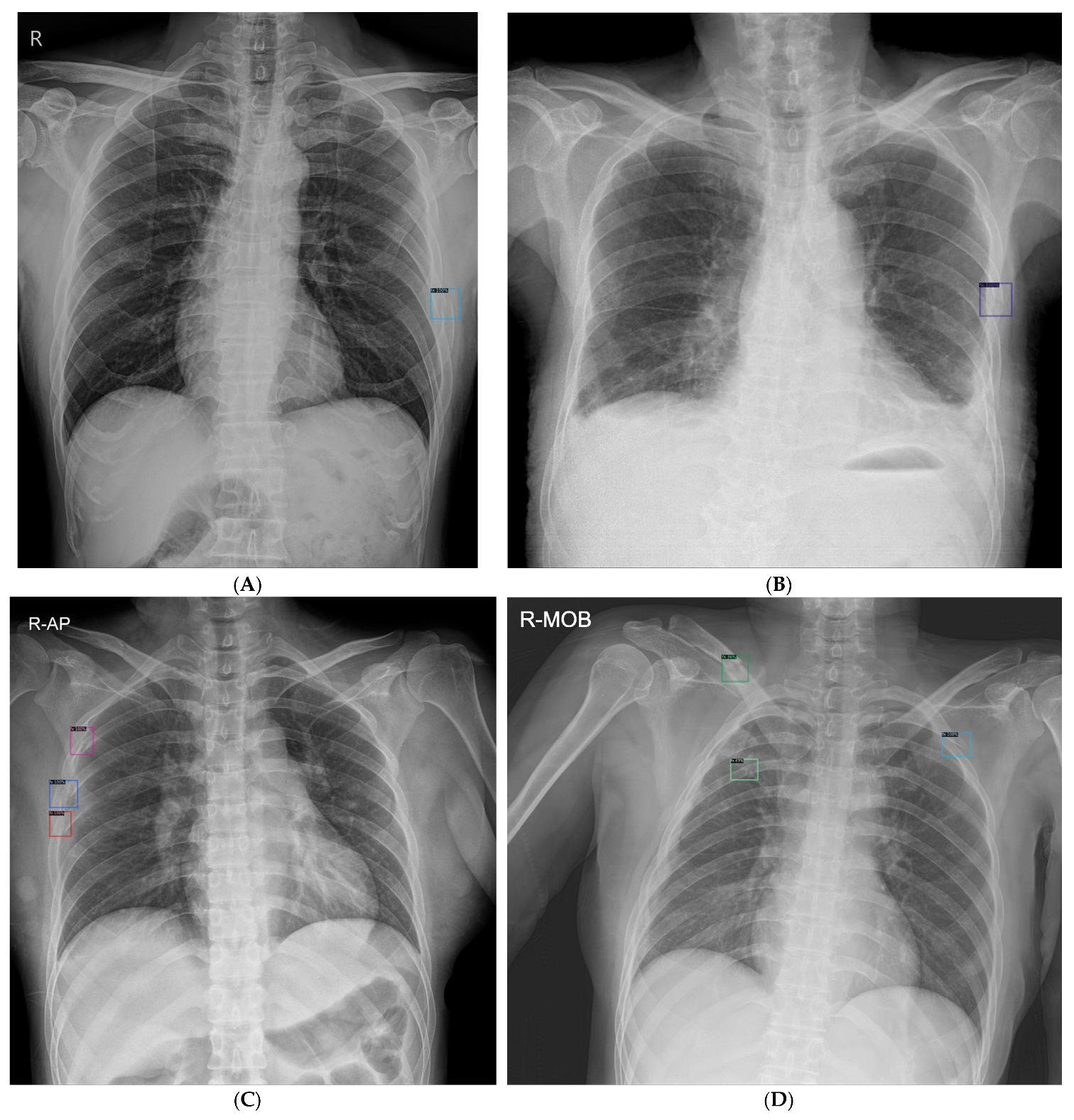

3.3. Rib Fracture Detection Performance of AI Model

3.4. Comparison of Radiograph Classification and Fracture Detection Performance between AI Model and Observers

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model | Radiograph classification: AUROC | Radiograph classification: sensitivity ¹ | Radiograph classification: specificity | Rib fracture detection: JAFROC FOM | Rib fracture detection: sensitivity ² | Rib fracture detection: rate of FP ³ |
|---|---|---|---|---|---|---|
| AI model | 0.89 | 0.87 (26/30) | 0.83 (25/30) | 0.76 | 0.62 (45/73) | 0.30 (18/60) |
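The proportions in the AI model row can be recomputed from the counts given in parentheses. The following is a minimal sketch, not the authors' analysis code; the dictionary keys are hypothetical labels chosen here, and the table's footnotes that define each measure are not available in this text.

```python
# Counts (numerator, denominator) copied from the AI model row of the table above.
# The key names are illustrative labels, not terms defined by the source.
counts = {
    "sensitivity_1": (26, 30),
    "specificity": (25, 30),
    "sensitivity_2": (45, 73),
    "fp_rate_3": (18, 60),
}


def proportion(numerator, denominator):
    """Return numerator / denominator rounded to two decimals, as printed in the table."""
    return round(numerator / denominator, 2)


ai_model = {name: proportion(n, d) for name, (n, d) in counts.items()}

for name, value in ai_model.items():
    print(f"{name}: {value:.2f}")
```

Each recomputed value is a proportion between 0 and 1, which matches the values printed in the table.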

| Observer ¹ | Radiograph classification: sensitivity | Radiograph classification: specificity | Rib fracture detection: sensitivity ² | Rib fracture detection: rate of FP ³ |
|---|---|---|---|---|
| Board-certified anesthesiologists | | | | |
| Observer 1 | 0.97 (29/30) | 0.97 (29/30) | 0.84 (61/73) | 0.12 (7/60) |
| Observer 2 | 0.90 (27/30) | 0.93 (28/30) | 0.74 (54/73) | 0.18 (11/60) |
| Observer 3 | 0.63 (19/30) | 1.00 (30/30) | 0.48 (35/73) | 0.02 (2/60) |
| Observer 4 | 0.90 (27/30) | 0.80 (24/30) | 0.81 (59/73) | 0.57 (34/60) |
| Observer 5 | 0.87 (26/30) | 0.93 (28/30) | 0.59 (43/73) | 0.13 (8/60) |
| Observer 6 | 0.87 (26/30) | 0.93 (28/30) | 0.71 (52/73) | 0.33 (20/60) |
| Anesthesiology residents | | | | |
| Observer 7 | 0.77 (23/30) | 0.93 (28/30) | 0.59 (43/73) | 0.17 (10/60) |
| Observer 8 | 0.90 (27/30) | 0.80 (24/30) | 0.70 (51/73) | 0.35 (21/60) |
| Observer 9 | 0.93 (28/30) | 0.70 (21/30) | 0.58 (42/73) | 0.25 (15/60) |
| Observer 10 | 0.87 (26/30) | 0.77 (23/30) | 0.55 (40/73) | 0.20 (12/60) |
| Observer 11 | 0.87 (26/30) | 0.77 (23/30) | 0.56 (41/73) | 0.25 (15/60) |
| Observer 12 | 0.87 (26/30) | 0.90 (27/30) | 0.60 (44/73) | 0.22 (13/60) |
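One way to summarize the observer rows is to pool the rib fracture detection counts within each reader group. The sketch below is illustrative only: pooling counts across readers is an assumption made here and is not a statistic reported in the source, and the names `detected`, `FRACTURES_PER_READER`, and `pooled_sensitivity` are hypothetical.

```python
# Fractures detected out of 73 by each observer, copied from the table above.
detected = {
    "board_certified": [61, 54, 35, 59, 43, 52],  # Observers 1-6
    "residents": [43, 51, 42, 40, 41, 44],        # Observers 7-12
}
FRACTURES_PER_READER = 73  # denominator shown in the table for every observer


def pooled_sensitivity(hits, total_per_reader):
    """Total detected fractures divided by total reading opportunities in the group."""
    return sum(hits) / (len(hits) * total_per_reader)


pooled = {
    group: pooled_sensitivity(hits, FRACTURES_PER_READER)
    for group, hits in detected.items()
}

for group, value in pooled.items():
    print(f"{group}: {value:.3f}")
```

A pooled proportion weights every reading equally, so it can differ slightly from the mean of the rounded per-observer values.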

| Observer | Test: radiograph classification (AUROC) | Test: rib fracture detection (JAFROC FOM) | AI model versus observer: radiograph classification (p value) | AI model versus observer: rib fracture detection (p value) |
|---|---|---|---|---|
| Board-certified anesthesiologists | | | | |
| Observer 1 | 0.98 | 0.91 | 0.013 | <0.001 |
| Observer 2 | 0.95 | 0.86 | 0.054 | 0.028 |
| Observer 3 | 0.82 | 0.74 | 0.2 | 0.7 |
| Observer 4 | 0.90 | 0.85 | 0.9 | 0.050 |
| Observer 5 | 0.93 | 0.78 | 0.4 | 0.6 |
| Observer 6 | 0.93 | 0.85 | 0.4 | 0.059 |
| Group | | 0.83 | | 0.051 |
| Anesthesiology residents | | | | |
| Observer 7 | 0.87 | 0.73 | 0.7 | 0.6 |
| Observer 8 | 0.90 | 0.84 | 0.8 | 0.093 |
| Observer 9 | 0.88 | 0.68 | 0.9 | 0.079 |
| Observer 10 | 0.88 | 0.70 | 0.9 | 0.2 |
| Observer 11 | 0.88 | 0.71 | 0.8 | 0.3 |
| Observer 12 | 0.92 | 0.78 | 0.4 | 0.6 |
| Group | | 0.74 | | 0.6 |
| AI model | 0.89 | 0.76 | | |
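The Group rows list 0.83 for the board-certified anesthesiologists and 0.74 for the residents. Both values coincide with the arithmetic mean of the individual observers' JAFROC FOMs in the same group, which suggests they are group-level JAFROC FOMs. The sketch below checks that arithmetic; the source does not state how the group values were computed, so the averaging is an assumption, and the variable names are hypothetical.

```python
from statistics import mean

# Individual JAFROC FOMs copied from the table above.
jafroc_fom = {
    "board_certified": [0.91, 0.86, 0.74, 0.85, 0.78, 0.85],  # Observers 1-6
    "residents": [0.73, 0.84, 0.68, 0.70, 0.71, 0.78],        # Observers 7-12
}

# Values printed in the two Group rows of the table.
reported_group_fom = {"board_certified": 0.83, "residents": 0.74}

# Assumption: the group value is the unweighted mean of the observers' FOMs.
group_mean = {group: round(mean(values), 2) for group, values in jafroc_fom.items()}
matches = {group: group_mean[group] == reported_group_fom[group] for group in jafroc_fom}

for group in jafroc_fom:
    print(f"{group}: mean={group_mean[group]:.2f}, matches reported={matches[group]}")
```

The same check applied to the AUROC column does not reproduce 0.83 or 0.74, which is why the Group values are read here as JAFROC FOMs rather than AUROCs.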

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, K.; Lee, S.; Kwak, J.S.; Park, H.; Oh, H.; Koh, J.C. Development and Validation of an Artificial Intelligence Model for Detecting Rib Fractures on Chest Radiographs. J. Clin. Med. 2024, 13, 3850. https://doi.org/10.3390/jcm13133850
