Application and Progress of Artificial Intelligence in Fetal Ultrasound

Abstract

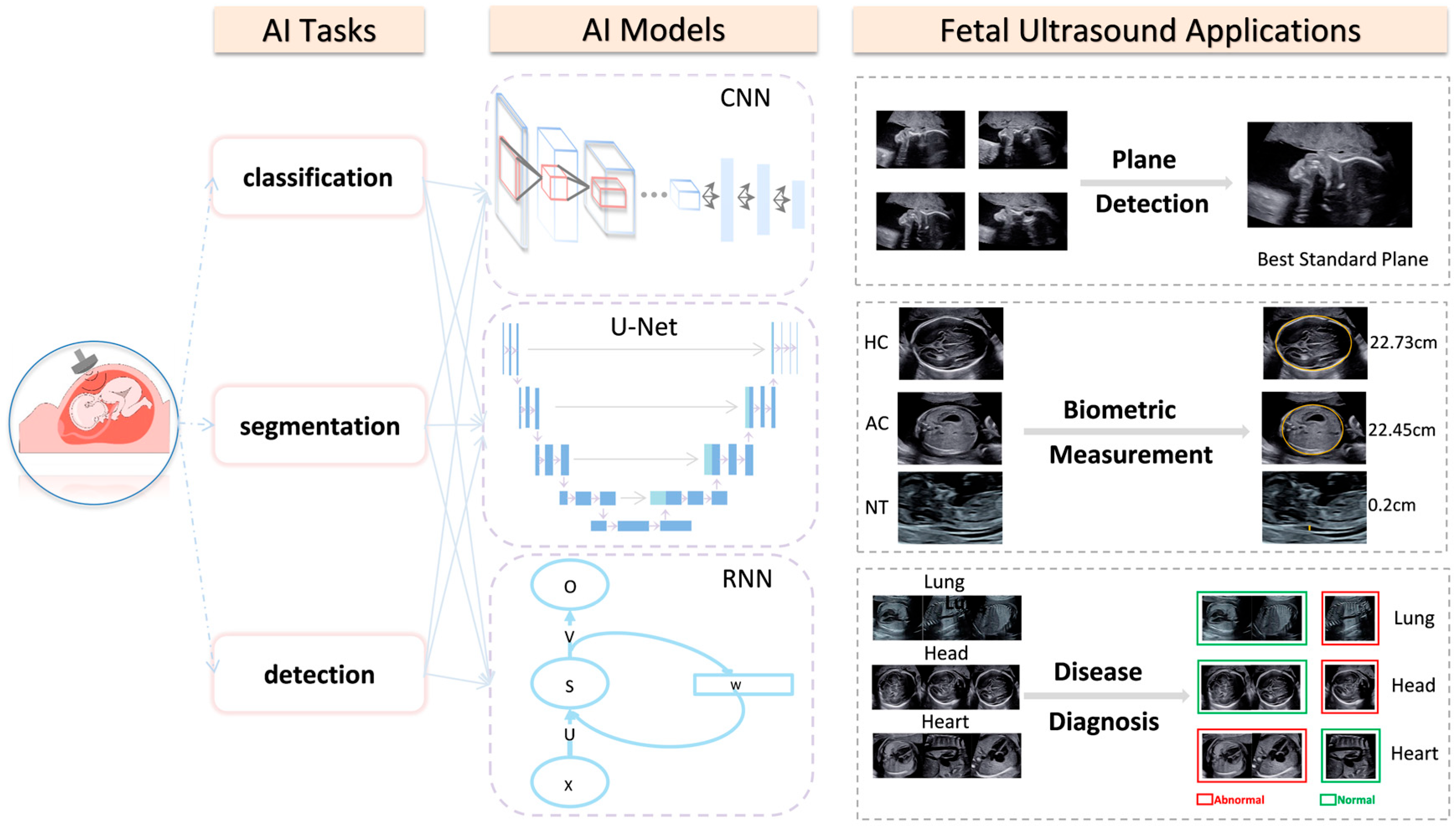

1. Introduction

2. AI Applications in Fetal Ultrasonography

2.1. AI Applications in Intelligent Detection of the Fetal Ultrasonic Standard Plane

2.2. AI Applications in the Measurement of Fetal Ultrasonic Biometry Parameters

2.2.1. Intelligent Measurement of Fetal Head Circumference (HC)

2.2.2. Intelligent Measurement of the Fetal Abdominal Circumference (AC)

2.2.3. Intelligent Measurement of Fetal Nuchal Translucency (NT) Thickness

2.3. AI Applications in Fetal Ultrasonic Disease Diagnosis

2.3.1. AI Applications in Fetal Ultrasound of Neonatal Respiratory Diseases

2.3.2. AI Applications in Fetal Ultrasound of Intracranial Malformations and GA Estimation

- Intracranial malformations

- GA estimation

2.3.3. AI Applications in Fetal Ultrasound of Congenital Heart Diseases

3. Limitations and Future Perspectives

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Yang, X.; Wang, X.; Wang, Y.; Dou, H.; Li, S.; Wen, H.; Lin, Y.; Heng, P.-A.; Ni, D. Hybrid attention for automatic segmentation of whole fetal head in prenatal ultrasound volumes. Comput. Methods Programs Biomed. 2020, 194, 105519.

- Ghelich Oghli, M.; Shabanzadeh, A.; Moradi, S.; Sirjani, N.; Gerami, R.; Ghaderi, P.; Sanei Taheri, M.; Shiri, I.; Arabi, H.; Zaidi, H. Automatic Fetal Biometry Prediction Using a Novel Deep Convolutional Network Architecture. Phys. Med. 2021, 88, 127–137.

- Akkus, Z.; Cai, J.; Boonrod, A.; Zeinoddini, A.; Weston, A.D.; Philbrick, K.A.; Erickson, B.J. A Survey of Deep-Learning Applications in Ultrasound: Artificial Intelligence-Powered Ultrasound for Improving Clinical Workflow. J. Am. Coll. Radiol. 2019, 16, 1318–1328.

- Dawood, Y.; Buijtendijk, M.F.; Shah, H.; Smit, J.A.; Jacobs, K.; Hagoort, J.; Oostra, R.-J.; Bourne, T.; Hoff, M.J.V.D.; de Bakker, B.S. Imaging fetal anatomy. Semin. Cell Dev. Biol. 2022, 131, 78–92.

- Arnaout, R.; Curran, L.; Zhao, Y.; Levine, J.C.; Chinn, E.; Moon-Grady, A.J. An ensemble of neural networks provides expert-level prenatal detection of complex congenital heart disease. Nat. Med. 2021, 27, 882–891.

- Fiorentino, M.C.; Villani, F.P.; Di Cosmo, M.; Frontoni, E.; Moccia, S. A review on deep-learning algorithms for fetal ultrasound-image analysis. Med. Image Anal. 2023, 83, 102629.

- Chen, Z.; Liu, Z.; Du, M.; Wang, Z. Artificial Intelligence in Obstetric Ultrasound: An Update and Future Applications. Front. Med. 2021, 8, 733468.

- He, F.; Wang, Y.; Xiu, Y.; Zhang, Y.; Chen, L. Artificial Intelligence in Prenatal Ultrasound Diagnosis. Front. Med. 2021, 8, 729978.

- Hamet, P.; Tremblay, J. Artificial intelligence in medicine. Metab. Clin. Exp. 2017, 69S, S36–S40.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Drukker, L.; Noble, J.A.; Papageorghiou, A.T. Introduction to artificial intelligence in ultrasound imaging in obstetrics and gynecology. Ultrasound Obstet. Gynecol. 2020, 56, 498–505.

- Garcia-Canadilla, P.; Sanchez-Martinez, S.; Crispi, F.; Bijnens, B. Machine Learning in Fetal Cardiology: What to Expect. Fetal Diagn. Ther. 2020, 47, 363–372.

- Chen, H.; Wu, L.; Dou, Q.; Qin, J.; Li, S.; Cheng, J.-Z.; Ni, D.; Heng, P.-A. Ultrasound Standard Plane Detection Using a Composite Neural Network Framework. IEEE Trans. Cybern. 2017, 47, 1576–1586.

- Yu, Z.; Tan, E.-L.; Ni, D.; Qin, J.; Chen, S.; Li, S.; Lei, B.; Wang, T. A Deep Convolutional Neural Network-Based Framework for Automatic Fetal Facial Standard Plane Recognition. IEEE J. Biomed. Health Inform. 2018, 22, 874–885.

- Chen, H.; Ni, D.; Qin, J.; Li, S.; Yang, X.; Wang, T.; Heng, P.A. Standard Plane Localization in Fetal Ultrasound via Domain Transferred Deep Neural Networks. IEEE J. Biomed. Health Inform. 2015, 19, 1627–1636.

- Lin, Z.; Li, S.; Ni, D.; Liao, Y.; Wen, H.; Du, J.; Chen, S.; Wang, T.; Lei, B. Multi-task learning for quality assessment of fetal head ultrasound images. Med. Image Anal. 2019, 58, 101548.

- Qu, R.; Xu, G.; Ding, C.; Jia, W.; Sun, M. Standard Plane Identification in Fetal Brain Ultrasound Scans Using a Differential Convolutional Neural Network. IEEE Access 2020, 8, 83821–83830.

- Qu, R.; Xu, G.; Ding, C.; Jia, W.; Sun, M. Deep Learning-Based Methodology for Recognition of Fetal Brain Standard Scan Planes in 2D Ultrasound Images. IEEE Access 2020, 8, 44443–44451.

- Stoean, R.; Iliescu, D.; Stoean, C.; Ilie, V.; Patru, C.; Hotoleanu, M.; Nagy, R.; Ruican, D.; Trocan, R.; Marcu, A.; et al. Deep Learning for the Detection of Frames of Interest in Fetal Heart Assessment from First Trimester Ultrasound; Springer: Cham, Switzerland, 2021; pp. 3–14.

- Li, J.; Wang, Y.; Lei, B.; Cheng, J.-Z.; Qin, J.; Wang, T.; Li, S.; Ni, D. Automatic Fetal Head Circumference Measurement in Ultrasound Using Random Forest and Fast Ellipse Fitting. IEEE J. Biomed. Health Inform. 2018, 22, 215–223.

- Sobhaninia, Z.; Rafiei, S.; Emami, A.; Karimi, N.; Najarian, K.; Samavi, S.; Reza Soroushmehr, S.M. Fetal Ultrasound Image Segmentation for Measuring Biometric Parameters Using Multi-Task Deep Learning. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2019, 2019, 6545–6548.

- Yasutomi, S.; Arakaki, T.; Matsuoka, R.; Sakai, A.; Komatsu, R.; Shozu, K.; Dozen, A.; Machino, H.; Asada, K.; Kaneko, S.; et al. Shadow Estimation for Ultrasound Images Using Auto-Encoding Structures and Synthetic Shadows. Appl. Sci. 2021, 11, 1127.

- Foi, A.; Maggioni, M.; Pepe, A.; Rueda, S.; Noble, J.A.; Papageorghiou, A.T.; Tohka, J. Difference of Gaussians revolved along elliptical paths for ultrasound fetal head segmentation. Comput. Med. Imaging Graph. 2014, 38, 774–784.

- Yu, J.; Wang, Y.; Chen, P. Fetal ultrasound image segmentation system and its use in fetal weight estimation. Med. Biol. Eng. Comput. 2008, 46, 1227–1237.

- Carneiro, G.; Georgescu, B.; Good, S.; Comaniciu, D. Detection and measurement of fetal anatomies from ultrasound images using a constrained probabilistic boosting tree. IEEE Trans. Med. Imaging 2008, 27, 1342–1355.

- Fiorentino, M.C.; Moccia, S.; Capparuccini, M.; Giamberini, S.; Frontoni, E. A regression framework to head-circumference delineation from US fetal images. Comput. Methods Programs Biomed. 2021, 198, 105771.

- Yang, C.; Liao, S.; Yang, Z.; Guo, J.; Zhang, Z.; Yang, Y.; Guo, Y.; Yin, S.; Liu, C.; Kang, Y. RDHCformer: Fusing ResDCN and Transformers for Fetal Head Circumference Automatic Measurement in 2D Ultrasound Images. Front. Med. 2022, 9, 848904.

- Pluym, I.D.; Afshar, Y.; Holliman, K.; Kwan, L.; Bolagani, A.; Mok, T.; Silver, B.; Ramirez, E.; Han, C.S.; Platt, L.D. Accuracy of automated three-dimensional ultrasound imaging technique for fetal head biometry. Ultrasound Obstet. Gynecol. 2021, 57, 798–803.

- Chen, X.; He, M.; Dan, T.; Wang, N.; Lin, M.; Zhang, L.; Xian, J.; Cai, H.; Xie, H. Automatic Measurements of Fetal Lateral Ventricles in 2D Ultrasound Images Using Deep Learning. Front. Neurol. 2020, 11, 526.

- Li, P.; Zhao, H.; Liu, P.; Cao, F. Automated measurement network for accurate segmentation and parameter modification in fetal head ultrasound images. Med. Biol. Eng. Comput. 2020, 58, 2879–2892.

- Ambroise Grandjean, G.; Hossu, G.; Bertholdt, C.; Noble, P.; Morel, O.; Grangé, G. Artificial intelligence assistance for fetal head biometry: Assessment of automated measurement software. Diagn. Interv. Imaging 2018, 99, 709–716.

- Jang, J.; Park, Y.; Kim, B.; Lee, S.M.; Kwon, J.-Y.; Seo, J.K. Automatic Estimation of Fetal Abdominal Circumference from Ultrasound Images. IEEE J. Biomed. Health Inform. 2018, 22, 1512–1520.

- Kim, B.; Kim, K.C.; Park, Y.; Kwon, J.-Y.; Jang, J.; Seo, J.K. Machine-learning-based automatic identification of fetal abdominal circumference from ultrasound images. Physiol. Meas. 2018, 39, 105007.

- Espinoza, J.; Good, S.; Russell, E.; Lee, W. Does the use of automated fetal biometry improve clinical work flow efficiency? J. Ultrasound Med. 2013, 32, 847–850.

- Zhang, L.; Dong, D.; Sun, Y.; Hu, C.; Sun, C.; Wu, Q.; Tian, J. Development and Validation of a Deep Learning Model to Screen for Trisomy 21 During the First Trimester from Nuchal Ultrasonographic Images. JAMA Netw. Open 2022, 5, e2217854.

- Kagan, K.O.; Wright, D.; Baker, A.; Sahota, D.; Nicolaides, K.H. Screening for trisomy 21 by maternal age, fetal nuchal translucency thickness, free beta-human chorionic gonadotropin and pregnancy-associated plasma protein-A. Ultrasound Obstet. Gynecol. 2008, 31, 618–624.

- Sciortino, G.; Tegolo, D.; Valenti, C. Automatic detection and measurement of nuchal translucency. Comput. Biol. Med. 2017, 82, 12–20.

- Yasrab, R.; Fu, Z.; Zhao, H.; Lee, L.H.; Sharma, H.; Drukker, L.; Papageorgiou, A.T.; Alison Noble, J. A Machine Learning Method for Automated Description and Workflow Analysis of First Trimester Ultrasound Scans. IEEE Trans. Med. Imaging 2022.

- Moratalla, J.; Pintoffl, K.; Minekawa, R.; Lachmann, R.; Wright, D.; Nicolaides, K.H. Semi-automated system for measurement of nuchal translucency thickness. Ultrasound Obstet. Gynecol. 2010, 36, 412–416.

- Deng, Y.; Wang, Y.; Chen, P.; Yu, J. A hierarchical model for automatic nuchal translucency detection from ultrasound images. Comput. Biol. Med. 2012, 42, 706–713.

- Shen, Y.T.; Chen, L.; Yue, W.W.; Xu, H.X. Artificial intelligence in ultrasound. Eur. J. Radiol. 2021, 139, 109717.

- Cobo, T.; Bonet-Carne, E.; Martínez-Terrón, M.; Perez-Moreno, A.; Elías, N.; Luque, J.; Amat-Roldan, I.; Palacio, M. Feasibility and reproducibility of fetal lung texture analysis by Automatic Quantitative Ultrasound Analysis and correlation with gestational age. Fetal Diagn. Ther. 2012, 31, 230–236.

- Palacio, M.; Cobo, T.; Martínez-Terrón, M.; Rattá, G.A.; Bonet-Carné, E.; Amat-Roldán, I.; Gratacós, E. Performance of an automatic quantitative ultrasound analysis of the fetal lung to predict fetal lung maturity. Am. J. Obstet. Gynecol. 2012, 207, 504.e1–504.e5.

- Bonet-Carne, E.; Palacio, M.; Cobo, T.; Perez-Moreno, A.; Lopez, M.; Piraquive, J.P.; Ramirez, J.C.; Botet, F.; Marques, F.; Gratacos, E. Quantitative ultrasound texture analysis of fetal lungs to predict neonatal respiratory morbidity. Ultrasound Obstet. Gynecol. 2015, 45, 427–433.

- Palacio, M.; Bonet-Carne, E.; Cobo, T.; Perez-Moreno, A.; Sabrià, J.; Richter, J.; Kacerovsky, M.; Jacobsson, B.; García-Posada, R.A.; Bugatto, F.; et al. Prediction of neonatal respiratory morbidity by quantitative ultrasound lung texture analysis: A multicenter study. Am. J. Obstet. Gynecol. 2017, 217, 196.e1–196.e14.

- Moreno-Espinosa, A.L.; Hawkins-Villarreal, A.; Burgos-Artizzu, X.P.; Coronado-Gutierrez, D.; Castelazo, S.; Lip-Sosa, D.L.; Fuenzalida, J.; Gallo, D.M.; Peña-Ramirez, T.; Zuazagoitia, P.; et al. Concordance of the risk of neonatal respiratory morbidity assessed by quantitative ultrasound lung texture analysis in fetuses of twin pregnancies. Sci. Rep. 2022, 12, 9016.

- Xia, T.-H.; Tan, M.; Li, J.-H.; Wang, J.-J.; Wu, Q.-Q.; Kong, D.-X. Establish a normal fetal lung gestational age grading model and explore the potential value of deep learning algorithms in fetal lung maturity evaluation. Chin. Med. J. 2021, 134, 1828–1837.

- Paladini, D.; Malinger, G.; Birnbaum, R.; Monteagudo, A.; Pilu, G.; Salomon, L.J.; Timor-Tritsch, I.E. ISUOG Practice Guidelines (updated): Sonographic examination of the fetal central nervous system. Part 2: Performance of targeted neurosonography. Ultrasound Obstet. Gynecol. 2021, 57, 661–671.

- Van den Veyver, I.B. Prenatally diagnosed developmental abnormalities of the central nervous system and genetic syndromes: A practical review. Prenat. Diagn. 2019, 39, 666–678.

- Xie, B.; Lei, T.; Wang, N.; Cai, H.; Xian, J.; He, M.; Zhang, L.; Xie, H. Computer-aided diagnosis for fetal brain ultrasound images using deep convolutional neural networks. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1303–1312.

- Sonographic examination of the fetal central nervous system: Guidelines for performing the ‘basic examination’ and the ‘fetal neurosonogram’. Ultrasound Obstet. Gynecol. 2007, 29, 109–116.

- Xie, H.N.; Wang, N.; He, M.; Zhang, L.H.; Cai, H.M.; Xian, J.B.; Lin, M.F.; Zheng, J.; Yang, Y.Z. Using deep-learning algorithms to classify fetal brain ultrasound images as normal or abnormal. Ultrasound Obstet. Gynecol. 2020, 56, 579–587.

- Lin, M.; He, X.; Guo, H.; He, M.; Zhang, L.; Xian, J.; Lei, T.; Xu, Q.; Zheng, J.; Feng, J.; et al. Use of real-time artificial intelligence in detection of abnormal image patterns in standard sonographic reference planes in screening for fetal intracranial malformations. Ultrasound Obstet. Gynecol. 2022, 59, 304–316.

- Papageorghiou, A.T.; Kemp, B.; Stones, W.; Ohuma, E.O.; Kennedy, S.H.; Purwar, M.; Salomon, L.J.; Altman, D.G.; Noble, J.A.; Bertino, E.; et al. Ultrasound-based gestational-age estimation in late pregnancy. Ultrasound Obstet. Gynecol. 2016, 48, 719–726.

- Lee, L.H.; Bradburn, E.; Craik, R.; Yaqub, M.; Norris, S.A.; Ismail, L.C.; Ohuma, E.O.; Barros, F.C.; Lambert, A.; Carvalho, M.; et al. Machine learning for accurate estimation of fetal gestational age based on ultrasound images. NPJ Digit. Med. 2023, 6, 36.

- Namburete, A.I.; Stebbing, R.V.; Kemp, B.; Yaqub, M.; Papageorghiou, A.T.; Alison Noble, J. Learning-based prediction of gestational age from ultrasound images of the fetal brain. Med. Image Anal. 2015, 21, 72–86.

- Burgos-Artizzu, X.P.; Coronado-Gutiérrez, D.; Valenzuela-Alcaraz, B.; Vellvé, K.; Eixarch, E.; Crispi, F.; Bonet-Carne, E.; Bennasar, M.; Gratacos, E. Analysis of maturation features in fetal brain ultrasound via artificial intelligence for the estimation of gestational age. Am. J. Obstet. Gynecol. MFM 2021, 3, 100462.

- Liu, Y.; Chen, S.; Zühlke, L.; Black, G.C.; Choy, M.-K.; Li, N.; Keavney, B.D. Global birth prevalence of congenital heart defects 1970–2017: Updated systematic review and meta-analysis of 260 studies. Int. J. Epidemiol. 2019, 48, 455–463.

- Gong, Y.; Zhang, Y.; Zhu, H.; Lv, J.; Cheng, Q.; Zhang, H.; He, Y.; Wang, S. Fetal Congenital Heart Disease Echocardiogram Screening Based on DGACNN: Adversarial One-Class Classification Combined with Video Transfer Learning. IEEE Trans. Med. Imaging 2020, 39, 1206–1222.

- Yeo, L.; Romero, R. Fetal Intelligent Navigation Echocardiography (FINE): A novel method for rapid, simple, and automatic examination of the fetal heart. Ultrasound Obstet. Gynecol. 2013, 42, 268–284.

- Garcia, M.; Yeo, L.; Romero, R.; Haggerty, D.; Giardina, I.; Hassan, S.S.; Chaiworapongsa, T.; Hernandez-Andrade, E. Prospective evaluation of the fetal heart using Fetal Intelligent Navigation Echocardiography (FINE). Ultrasound Obstet. Gynecol. 2016, 47, 450–459.

- Bridge, C.P.; Ioannou, C.; Noble, J.A. Automated annotation and quantitative description of ultrasound videos of the fetal heart. Med. Image Anal. 2017, 36, 147–161.

- Tegnander, E.; Eik-Nes, S.H. The examiner's ultrasound experience has a significant impact on the detection rate of congenital heart defects at the second-trimester fetal examination. Ultrasound Obstet. Gynecol. 2006, 28, 8–14.

- Crispi, F.; Gratacós, E. Fetal cardiac function: Technical considerations and potential research and clinical applications. Fetal Diagn. Ther. 2012, 32, 47–64.

- Akkus, Z.; Aly, Y.H.; Attia, I.Z.; Lopez-Jimenez, F.; Arruda-Olson, A.M.; Pellikka, P.A.; Pislaru, S.V.; Kane, G.C.; Friedman, P.A.; Oh, J.K. Artificial Intelligence (AI)-Empowered Echocardiography Interpretation: A State-of-the-Art Review. J. Clin. Med. 2021, 10, 1391.

- Ma, M.; Li, Y.; Chen, R.; Huang, C.; Mao, Y.; Zhao, B. Diagnostic performance of fetal intelligent navigation echocardiography (FINE) in fetuses with double-outlet right ventricle (DORV). Int. J. Cardiovasc. Imaging 2020, 36, 2165–2172.

- Huang, C.; Zhao, B.W.; Chen, R.; Pang, H.S.; Pan, M.; Peng, X.H.; Wang, B. Is Fetal Intelligent Navigation Echocardiography Helpful in Screening for d-Transposition of the Great Arteries? J. Ultrasound Med. 2020, 39, 775–784.

- Yeo, L.; Romero, R. New and advanced features of fetal intelligent navigation echocardiography (FINE) or 5D heart. J. Matern. Fetal Neonatal Med. 2022, 35, 1498–1516.

- Yeo, L.; Romero, R. Color and power Doppler combined with Fetal Intelligent Navigation Echocardiography (FINE) to evaluate the fetal heart. Ultrasound Obstet. Gynecol. 2017, 50, 476–491.

- Anda, U.; Andreea-Sorina, M.; Laurentiu, P.C.; Dan, R.; Rodica, N.; Ruxandra, S.; Catalin, S.; Gabriel, I.D. Learning deep architectures for the interpretation of first-trimester fetal echocardiography (LIFE)—A study protocol for developing an automated intelligent decision support system for early fetal echocardiography. BMC Pregnancy Childbirth 2023, 23, 20.

- Gembicki, M.; Hartge, D.R.; Dracopoulos, C.; Weichert, J. Semiautomatic Fetal Intelligent Navigation Echocardiography Has the Potential to Aid Cardiac Evaluations Even in Less Experienced Hands. J. Ultrasound Med. 2020, 39, 301–309.

- Papageorghiou, A.T.; Ohuma, E.O.; Altman, D.G.; Todros, T.; Ismail, L.C.; Lambert, A.; Jaffer, Y.A.; Bertino, E.; Gravett, M.G.; Purwar, M.; et al. International standards for fetal growth based on serial ultrasound measurements: The Fetal Growth Longitudinal Study of the INTERGROWTH-21st Project. Lancet 2014, 384, 869–879.

- Villar, J.; Gunier, R.B.; Tshivuila-Matala, C.O.O.; Rauch, S.A.; Nosten, F.; Ochieng, R.; Restrepo-Méndez, M.C.; McGready, R.; Barros, F.C.; Fernandes, M.; et al. Fetal cranial growth trajectories are associated with growth and neurodevelopment at 2 years of age: INTERBIO-21st Fetal Study. Nat. Med. 2021, 27, 647–652.

- Kulkarni, U.; Meena, S.M.; Gurlahosur, S.V.; Bhogar, G. Quantization Friendly MobileNet (QF-MobileNet) Architecture for Vision Based Applications on Embedded Platforms. Neural Netw. 2021, 136, 28–39.

- Pu, B.; Lu, Y.; Chen, J.; Li, S.; Zhu, N.; Wei, W.; Li, K. MobileUNet-FPN: A Semantic Segmentation Model for Fetal Ultrasound Four-Chamber Segmentation in Edge Computing Environments. IEEE J. Biomed. Health Inform. 2022, 26, 5540–5550.

| Paper | Plane | AI Task | Technology | GA | Dataset | Performance Metric |
|---|---|---|---|---|---|---|
| Chen, H. et al. [13] | FASP; FFASP; FFVSP | detection | CNN; RNN; LSTM; multi-task learning; 2D US | 18–40 w | Training Dataset: FASP (11,942); FFASP (13,091); FFVSP (12,343); Test Dataset: FASP (8718); FFASP (2278); FFVSP (2252) | Accuracy: 0.941; 0.717; 0.846; Precision: 0.945; 0.737; 0.898; Recall: 0.995; 0.955; 0.936; F1 score: 0.969; 0.832; 0.917 (FASP; FFASP; FFVSP) |
| Yu, Z. et al. [14] | FFSP | detection; classification | DCNN; transfer learning; 2D US | 20–36 w | Training Dataset: 4849; Test Dataset: 2418 | Accuracy: 0.9653; Precision: 0.9698; Recall: 0.9700; F1 score: 0.9699; AUC: 0.99 |
| Chen, H. et al. [15] | FASP | detection; classification | CNN; transfer learning; Barnes-Hut-SNE; 2D US | 18–40 w | Training Dataset: 11,942; Test Dataset: 8718 | Accuracy: 0.896; Precision: 0.714; Recall: 0.710; F1 score: 0.712 |
| Lin, Z. et al. [16] | FHSP | detection; classification | MF R-CNN; transfer learning; 2D US | 14–28 w | Training Dataset: 1451; Test Dataset: 320 | Accuracy: 0.9625; Precision: 0.9776; F1 score: 0.9568; AUC: 0.9889 (from Group B1) |
| Qu, R. et al. [17] | FBSP | detection | differential-CNN; 2D US | 16–34 w | Training Dataset 1: 18,000; Training Dataset 2: 720; Test Dataset 1: 6000; Test Dataset 2: 240 | Accuracy: 0.910; 0.891; Precision: 0.855; 0.853; Recall: 0.901; 0.864; F1 score: 0.900; 0.901 (Dataset 1; Dataset 2) |
| Qu, R. et al. [18] | FBSP | detection; classification | DCNN; transfer learning; 2D US | 16–34 w | Training Dataset: 18,000; Test Dataset: 6000 | Accuracy: 0.9311; Precision: 0.9262; Recall: 0.9239; F1 score: 0.9353; AUC: 0.937 |
| Stoean, R. et al. [19] | FECG | detection; classification | CNN; DenseNet-201; Inception-V4; ResNet-152; ResNet-18; ResNet-50; Xception; 2D US | 12–14 w | Training Dataset: 4260; Validation Dataset: 1495; Test Dataset: 1496 | Accuracy: 95%; F1 score: 0.9091–0.9958 |
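Most rows in the table above report accuracy, precision, recall, and F1 score, which for a given plane class all derive from the same confusion-matrix counts. The minimal standard-library Python sketch below spells out these definitions; the `Confusion` type, the function names, and the counts are hypothetical illustrations, not code or data from any of the cited studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Counts for one plane class treated as 'positive' versus all other frames."""
    tp: int  # frames correctly detected as the standard plane
    fp: int  # other frames wrongly detected as the standard plane
    fn: int  # standard-plane frames that were missed
    tn: int  # other frames correctly rejected


def precision(c: Confusion) -> float:
    return c.tp / (c.tp + c.fp)


def recall(c: Confusion) -> float:
    # Recall is the same quantity as sensitivity.
    return c.tp / (c.tp + c.fn)


def accuracy(c: Confusion) -> float:
    return (c.tp + c.tn) / (c.tp + c.fp + c.fn + c.tn)


def f1_from_pr(p: float, r: float) -> float:
    """F1 score: the harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


def f1(c: Confusion) -> float:
    return f1_from_pr(precision(c), recall(c))


if __name__ == "__main__":
    c = Confusion(tp=90, fp=10, fn=5, tn=95)  # hypothetical counts
    print(precision(c), recall(c), accuracy(c), f1(c))
    # Reported FASP precision and recall from the Chen, H. et al. [13] row.
    print(round(f1_from_pr(0.945, 0.995), 3))  # 0.969
```

The last line checks the FASP column of the Chen, H. et al. [13] row: the reported precision (0.945) and recall (0.995) give an F1 score of about 0.969, matching the table. Rows that average metrics over several plane classes need not satisfy this relation exactly, because the mean of per-class F1 scores generally differs from the harmonic mean of mean precision and mean recall.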

| Paper | Biometry | AI Tasks | Technology | GA | Samples | Performance Metrics |
|---|---|---|---|---|---|---|
| Li, J. et al. [20] | HC | detection; classification | ElliFit; random forest; prior knowledge; 2D US | 18–33 w | training: 524 images; testing: 145 images | DSC (%): 96.66 ± 3.15; MAE (mm): 1.7; MSD (mm): 1.78 ± 1.58; RMSD (mm): 1.77 ± 1.37; Precision (%): 96.84 ± 2.99 |
| Sobhaninia, Z. et al. [21] | HC | segmentation | multi-task deep CNN; 2D US | 12–35 w | 8823 images | DSC (%): 96.84; ADF (mm): 2.12; HD (mm): 1.72 |
| Foi, A. et al. [23] | HC; BPD; OFD | segmentation | DoGEll; multistart Nelder–Mead; 2D US | 21, 28 and 33 w | 90 images (90 fetuses) | DSC (%): 97.73; RMSE (mm): 4.39 (HC) |
| Fiorentino, M. C. et al. [26] | HC | segmentation | region-proposal CNN; 2D US | 12–35 w | HC18 dataset training: 999 images; testing: 335 images | DSC (%): 97.75 ± 1.32; MAD (mm): 1.90 ± 1.76 |
| Yang, C. et al. [27] | HC | detection; classification | ResDCN; transformer; SSR; rotating ellipse; KLD loss; 2D US | 12–35 w | HC18 dataset training: 999 images; testing: 335 images | AP (%): 84.45; MAE ± std (mm): 1.97 ± 1.89; ME ± std (mm): 0.11 ± 2.71 |
| Pluym, I. D. et al. [28] | BPD; HC; TCD; CM; Vp | detection | SonoCNS; computer-aided analysis; 3D US | 18–22.6 w | 143 subjects | ICC showed moderate reliability (>0.68) for BPD and HC and poor reliability (<0.31) for TCD, CM, and Vp |
| Chen, X. et al. [29] | LV | detection; classification; segmentation | Mask R-CNN; FPN; RPN; clinical prior knowledge; 2D US | Not reported | training: 2400 images; testing: 500 images | MAE (mm): 1.8; SD (mm): 3.4; RMSE (mm): 2.38 |
| Li, P. et al. [30] | HC; BPD; OFD | segmentation; prediction | FCNN; feature pyramid; ROI pooling; 2D US | 12–35 w | HC18 dataset training: 999 images; testing: 335 images | DSC (%): 97.94 ± 1.34; ADF (mm): 1.81 ± 1.69; HD (mm): 1.22 ± 0.77 |
| Ambroise Grandjean, G. et al. [31] | HC; BPD | detection | Smartplanes; 3D US | 17–29 w | 30 subjects | Intra- and interobserver reproducibility were high (ICC > 0.98) |
| Yang, X. et al. [1] | fetal head volume | segmentation | HAS; U-net; 3D US | 20–31 w | training: 50 volumes; testing: 50 volumes | DSC (%): 96.05; MSD (ml): 11.524 |
| Jang, J. et al. [32] | AC | classification; segmentation | CNN; Hough transform; 2D US | 20–34 w | training: 56 subjects; testing: 32 subjects | DSC (%): 85.28; Accuracy: 0.809 (expert 1); 0.771 (expert 2); 0.905 (between the two experts) |
| Kim, B. et al. [33] | AC | detection; classification; segmentation | CNN; U-Net; 2D US | Not reported | training: 112 images; testing: 77 subjects | DSC (%): 92.55 ± 0.83; Accuracy (%): 87.10 |
| Ghelich Oghli, M. et al. [2] | BPD; HC; AC; FL | segmentation | CNN; MFP-Unet; AG; 2D US | 14–26 w | HC18 dataset: training: 999 images; testing: 335 images; local dataset: 473 images | DSC (%): 98; HD (mm): 1.14; Conformity: 0.95; APD (mm): 0.2 |
| Moratalla, J. et al. [39] | NT | detection; segmentation | semi-automated method; 2D US | 11–13 w | 48 images (12 subjects) | Within-operator SD (mm): 0.05 (semi-automated method); 0.126 (manual method); ICC: 0.98 (semi-automated method); 0.85 (manual method) |
| Deng, Y. et al. [40] | NT | detection; segmentation | hierarchical model; Gaussian pyramids; 2D US | 11–13.6 w | 690 images (training: 345 images; testing: 345 images) | Adding the spatial model improved NT detection performance by about 5.68% on average compared with a single SVM classifier |
| Sciortino, G. et al. [37] | NT | detection; segmentation | wavelet analysis; multi-resolution analysis; 2D US | 11–13 w | 382 images (12 subjects) | True positive rate (%): 99.95 |
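Two quantities recur in the biometry table: the Dice similarity coefficient (DSC) between a predicted and a reference mask, and a circumference computed from an ellipse fitted to the fetal skull contour, as in the ElliFit-based method of Li, J. et al. [20]. An ellipse perimeter has no elementary closed form, so the sketch below assumes Ramanujan's first approximation, P ≈ π[3(a + b) − √((3a + b)(a + 3b))], for semi-axes a and b. The function names, the `mm_per_px` pixel-spacing parameter, and the set-of-pixels mask representation are illustrative assumptions, not the pipeline of any cited method.

```python
import math
from typing import Sequence, Set, Tuple

Pixel = Tuple[int, int]


def ellipse_circumference(a: float, b: float) -> float:
    """Ramanujan's first approximation to the perimeter of an ellipse with semi-axes a and b."""
    return math.pi * (3 * (a + b) - math.sqrt((3 * a + b) * (a + 3 * b)))


def head_circumference_mm(major_axis_px: float, minor_axis_px: float, mm_per_px: float) -> float:
    """HC from the full axis lengths (in pixels) of an ellipse fitted to the skull contour."""
    a = 0.5 * major_axis_px * mm_per_px  # semi-major axis in mm
    b = 0.5 * minor_axis_px * mm_per_px  # semi-minor axis in mm
    return ellipse_circumference(a, b)


def dice(pred: Set[Pixel], ref: Set[Pixel]) -> float:
    """Dice similarity coefficient of two binary masks given as sets of foreground pixels."""
    if not pred and not ref:
        return 1.0  # both masks empty: treat as perfect agreement
    return 2 * len(pred & ref) / (len(pred) + len(ref))


def mean_absolute_error(pred: Sequence[float], ref: Sequence[float]) -> float:
    """MAE between paired predicted and reference measurements (e.g. HC in mm)."""
    if len(pred) != len(ref) or not ref:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(p - r) for p, r in zip(pred, ref)) / len(ref)


if __name__ == "__main__":
    # Hypothetical fitted ellipse: 400 x 320 px at 0.25 mm/px (semi-axes 50 mm and 40 mm).
    print(round(head_circumference_mm(400, 320, 0.25), 1))
    pred = {(0, 0), (0, 1), (1, 0), (1, 1)}
    ref = {(0, 1), (1, 1), (2, 1)}
    print(dice(pred, ref))  # 2 * 2 / (4 + 3)
```

For a circle (a = b = r) the approximation reduces exactly to 2πr, a convenient sanity check; for ellipses of moderate eccentricity its relative error is very small, far below the millimetre-level errors reported in the table. The DSC (%) values above correspond to 100 × DSC.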

| Paper | Organ | Samples | GA | Task | Technology | Performance Metrics |
|---|---|---|---|---|---|---|
| Palacio et al. [43] | Lung | 103 subjects | 24–41 w | classification; prediction | AQUA; genetic algorithm; SVM; 2D US | Accuracy: 90.3%; Sensitivity: 95.1%; Specificity: 85.7% |
| Bonet-Carne et al. [44] | Lung | >13,000 non-clinical images; 957 fetal lung images | 28–39 w | classification; prediction | quantusFLM; regression model; classification tree; neural network; 2D US | Sensitivity: 86.2%; Specificity: 87% |
| Palacio et al. [45] | Lung | 730 images | 25–38.6 w | classification; prediction | quantusFLM; regression model; classification tree; neural network; 2D US | Accuracy: 86.5%; Sensitivity: 74.3%; Specificity: 88.6% |
| Moreno-Espinosa et al. [46] | Lung | 262 images; 131 pairs of twins | 26–38.6 w | classification; prediction | quantusFLM; regression model; classification tree; neural network; 2D US | Concordance in the risk of neonatal respiratory morbidity (NRM): 97.4%; 73.5%; 88.4% (Group 1; Group 2; Group 3) |
| Xia et al. [47] | Lung | 7013 images; 1023 subjects | 20–41.6 w | classification; prediction | CNN; DenseNet; AlexNet; 2D US | Accuracy: 83.8%; Sensitivity: 91.7%; 69.8%; 86.4%; Specificity: 76.8%; 90%; 83.1%; AUC: 0.982; 0.907; 0.960 (class I; class II; class III) |
| Xie, B. et al. [50] | Brain | Segmentation dataset: 13,350 images; classification dataset: 11,645 images | 18–32 w | segmentation; classification; prediction | DCNN; U-Net; VGG-net; ImageNet; Grad-CAM; 2D US | DSC: 0.942; F1 score: 0.96 |
| Xie, H.N. et al. [52] | Brain | Normal: 15,372 images, 10,251 subjects; abnormal: 14,047 images, 2529 subjects | Mean: 22.4 w (normal); 26.3 w (abnormal) | classification; prediction | CNN; Keras; 2D US | Accuracy: 96.3%; Sensitivity: 96.9%; Specificity: 95.9%; AUC: 0.989 |
| Lin, M. et al. [53] | Brain | 43,890 images, 16,463 subjects | 18–40 w | segmentation; classification; prediction | PAICS; CNN; YOLOv3; 2D US | Internal dataset: mean accuracy 0.992; external dataset: macro-average accuracy 0.963; micro-average accuracy 0.963 |
| Lee, L.H. et al. [55] | Brain | INTERGROWTH-21st dataset [72]; INTERBIO-21st dataset [73] | / | prediction | ML; CNN; ResNet-50; 2D US | MAE: 3.0 days (2nd trimester); MAE: 4.3 days (3rd trimester) (best-performing model) |
| Namburete, A.I. et al. [56] | Brain | INTERGROWTH-21st dataset [72]; INTERBIO-21st dataset [73] | / | prediction | 3D cranial parametrization; regression forest; 3D US | RMSE: 5.18 days (2nd trimester); RMSE: 7.77 days (3rd trimester) |
| Burgos-Artizzu, X.P. et al. [57] | Brain | 1394 subjects | / | prediction | DL; supervised learning; 2D US | Avg error: 3.03 days (2nd trimester); Avg error: 7.06 days (3rd trimester) |
| Yeo, L. & Romero, R. [60] | Heart | training: 918 images; 51 STIC volumes; testing: 900 images; 50 STIC volumes | training: 19.5–39.3 w; testing: 18.6–37.2 w | detection; classification; prediction | FINE; VIS-Assistance; STICLoop; 2D US; 4D US | FINE generated nine fetal echocardiography views in 76–100% of cases using diagnostic planes, 98–100% using VIS-Assistance, and 98–100% using a combination of diagnostic planes and/or VIS-Assistance |
| Garcia, M. et al. [61] | Heart | 2700 images; 150 STIC volumes; 150 subjects | 19–30 w | detection; classification; prediction | FINE; VIS-Assistance; STICLoop; 2D US; 4D US | The success rate of obtaining the four-chamber view, left ventricular outflow tract view, short-axis view of the great vessels/right ventricular outflow tract, and abdomen view was 95% (n = 143) using diagnostic planes and 100% (n = 150) using VIS-Assistance |
| Arnaout, R. et al. [5] | Heart | 107,823 images; 1326 subjects | 18–24 w | segmentation; classification; prediction | DL; Grad-CAM; saliency mapping; 2D US | AUC: 0.99; Sensitivity: 95%; Specificity: 96%; Negative predictive value: 100% |
| Ma, M. et al. [66] | Heart | 25 STIC volumes; 25 subjects | 15–35 w | detection; classification; prediction | FINE; VIS-Assistance; 4D US | Display rates (3VT, LVOT, RVOT): 84%, 76%, 84% |
| Huang, C. et al. [67] | Heart | 28 STIC volumes; 28 subjects | 22–37 w | detection; classification; prediction | FINE; VIS-Assistance; 4D US | FINE successfully showed an abnormal 3VT view in 85.7% (n = 25) of d-TGA cases, 75% (n = 21) for LVOT, and 89.2% for RVOT; interobserver ICCs were greater than 0.81 |
| Yeo, L. & Romero, R. [69] | Heart | 1418 images; 60 STIC volumes | 21–27.5 w | detection; classification; prediction | FINE; VIS-Assistance; STICLoop; S-flow Doppler; 2D US; 4D US | Color Doppler FINE generated nine fetal echocardiography views (grayscale) using (1) diagnostic planes in 73–100% of cases, (2) VIS-Assistance in 100% of cases, and (3) a combination of diagnostic planes and/or VIS-Assistance in 100% of cases |
| Gong et al. [59] | Heart | 3596 images | 18–39 w | recognition; classification | DANomaly; GACNN (WGAN-GP and CNN); generative adversarial network; transfer learning; 2D US | Accuracy: 0.850; AUC: 0.881 |
| Anda, U. et al. [70] | Heart | ≥6000 images | 12–13.6 w | detection; classification | CNN | The intelligent system (IS) is intended to assist and train early-career sonographers in accurately detecting the four first-trimester cardiac key planes (four-chamber view, left and right ventricular outflow tracts, and three-vessel and trachea view) |
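The diagnosis table mixes threshold-dependent metrics (sensitivity, specificity, negative predictive value) with the threshold-free AUC. The minimal standard-library sketch below computes AUC as the Mann–Whitney probability that a randomly chosen positive case scores higher than a randomly chosen negative case (ties count one half), which equals the area under the empirical ROC curve. The function names, scores, and counts are hypothetical, and the pairwise loop is chosen for clarity rather than speed.

```python
from typing import Sequence, Tuple


def auc_rank(pos_scores: Sequence[float], neg_scores: Sequence[float]) -> float:
    """Empirical ROC AUC via the Mann-Whitney statistic (ties count as one half)."""
    if not pos_scores or not neg_scores:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


def sens_spec_npv(tp: int, fp: int, fn: int, tn: int) -> Tuple[float, float, float]:
    """Sensitivity, specificity and negative predictive value at one fixed threshold."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    npv = tn / (tn + fn)
    return sensitivity, specificity, npv


if __name__ == "__main__":
    # Hypothetical classifier scores (higher = more likely abnormal).
    print(auc_rank([0.9, 0.3], [0.5, 0.1]))  # 3 of 4 pairs ranked correctly -> 0.75
    # Hypothetical screening population with a rare condition (20 cases in 10,000).
    sens, spec, npv = sens_spec_npv(tp=19, fp=400, fn=1, tn=9580)
    print(f"sensitivity={sens:.1%} specificity={spec:.1%} NPV={npv:.1%}")
```

With these hypothetical counts the script reports sensitivity 95.0%, specificity 96.0%, and an NPV that rounds to 100.0% despite one missed case. Unlike sensitivity and specificity, NPV depends on prevalence, so it is best read together with the case mix of each study in the table.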

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xiao, S.; Zhang, J.; Zhu, Y.; Zhang, Z.; Cao, H.; Xie, M.; Zhang, L. Application and Progress of Artificial Intelligence in Fetal Ultrasound. J. Clin. Med. 2023, 12, 3298. https://doi.org/10.3390/jcm12093298
