Significant Differences and Experimental Designs Do Not Necessarily Imply Clinical Relevance: Effect Sizes and Causality Claims in Antidepressant Treatments

Abstract

1. Introduction

1.1. Background

1.2. Experimental Design in Clinical Trials and Its Role in Internal and External Validity

1.3. Null Hypothesis Significance Testing, ES, and Clinical Significance

1.4. Objective and Hypotheses

- (a) Examine causality claims about treatment efficacy, that is, whether they refer only to the particular trial and its specific clinical conditions or extend to effectiveness in the everyday life of the population affected by depression. Based on the literature, we anticipate that some studies will not acknowledge the limited generalizability of their experimental findings to the population.

- (b) Determine the proportion of studies that used effect size (ES) estimators, and the proportion that interpreted the magnitude of the statistical effects they found (whether through the reported ES estimators or otherwise). We hypothesize that, as in other areas of scientific research, approximately 50% of studies examining antidepressant treatments will not report ES indices, and a similar proportion will not interpret the magnitude of their significant results.
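The following minimal Python sketch makes the distinction in objective (b) concrete. It is an illustration, not the authors' procedure. It computes a standardized ES (Cohen's d with a pooled standard deviation) from summary statistics for two trial arms and labels it with Cohen's conventional benchmarks [39]. The arm means, SDs, and sample sizes are hypothetical. The helper names are illustrative, and the p-value uses a large-sample normal approximation to the two-sample t-test; both are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class ArmSummary:
    """Hypothetical end-of-treatment summary for one trial arm (e.g., HAMD-17 totals)."""
    mean: float
    sd: float
    n: int


def pooled_sd(a: ArmSummary, b: ArmSummary) -> float:
    """Pooled standard deviation, the denominator of Cohen's d."""
    num = (a.n - 1) * a.sd ** 2 + (b.n - 1) * b.sd ** 2
    return math.sqrt(num / (a.n + b.n - 2))


def cohens_d(a: ArmSummary, b: ArmSummary) -> float:
    """Standardized mean difference (a minus b) in pooled-SD units."""
    return (a.mean - b.mean) / pooled_sd(a, b)


def approx_p_value(a: ArmSummary, b: ArmSummary) -> float:
    """Two-sided p-value from a large-sample z approximation to the
    pooled-variance two-sample t-test (reasonable only for large arms)."""
    se = pooled_sd(a, b) * math.sqrt(1 / a.n + 1 / b.n)
    z = (a.mean - b.mean) / se
    return math.erfc(abs(z) / math.sqrt(2))


def cohen_label(d: float) -> str:
    """Context-free rule-of-thumb labels based on Cohen's benchmarks."""
    size = abs(d)
    if size < 0.2:
        return "negligible"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "medium"
    return "large"


if __name__ == "__main__":
    # Hypothetical placebo vs. drug arms; lower scores mean fewer symptoms.
    for n in (40, 400):
        placebo = ArmSummary(mean=14.0, sd=8.0, n=n)
        drug = ArmSummary(mean=12.0, sd=8.0, n=n)
        d = cohens_d(placebo, drug)
        p = approx_p_value(placebo, drug)
        print(f"n per arm = {n}: d = {d:.2f} ({cohen_label(d)}), approx. p = {p:.4f}")
```

Both hypothetical trials have the same 2-point difference and the same d (0.25, "small"). The approximate p-value, however, is well above 0.05 with 40 patients per arm and far below it with 400. Significance tracks sample size, whereas d, the quantity objective (b) asks studies to report and interpret, does not. Labelling |d| < 0.2 as "negligible" is a common convention rather than part of Cohen's original scheme.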

2. Methods

2.1. Eligibility Criteria

2.2. Information Sources

2.3. Search Strategy

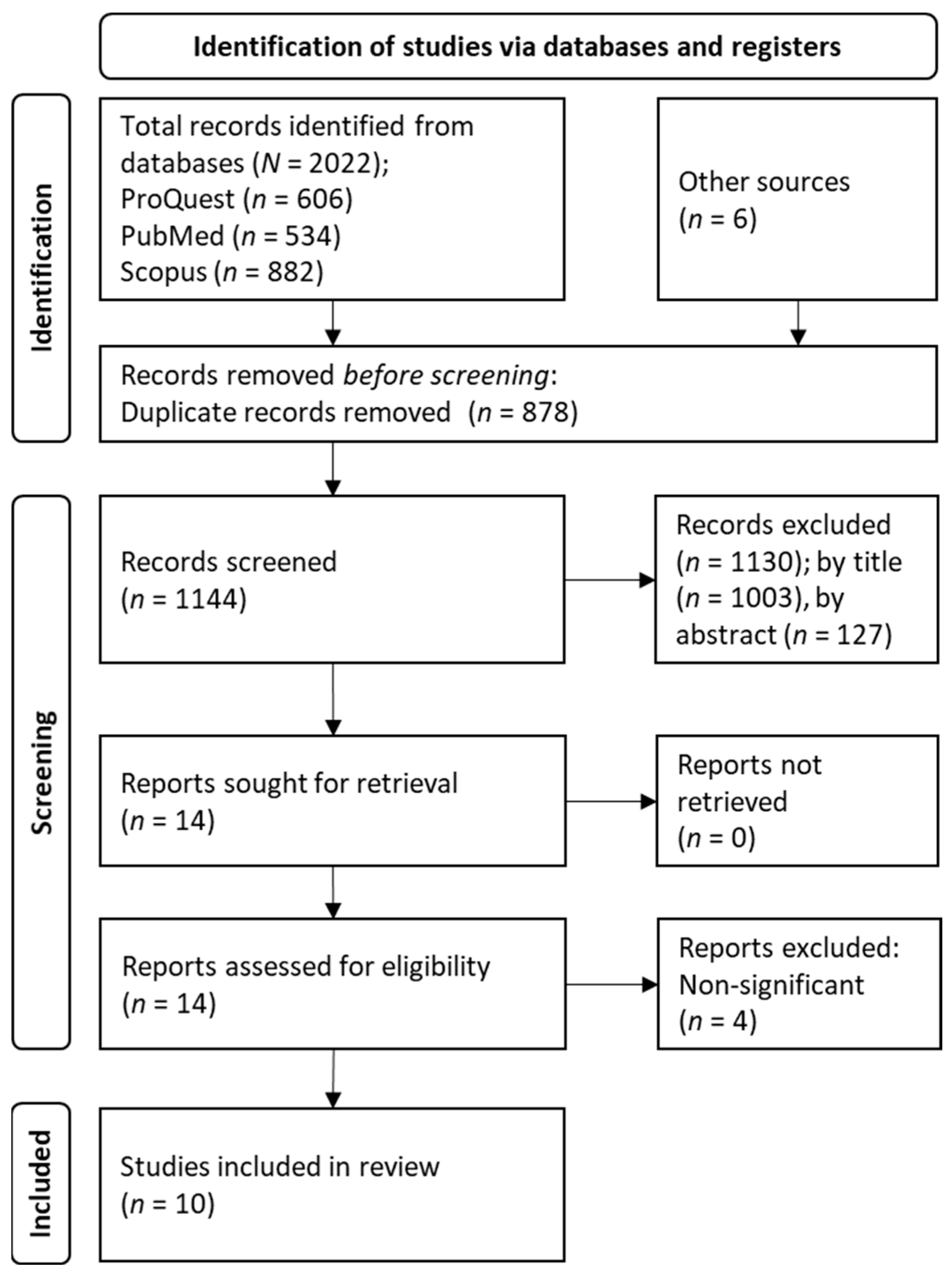

2.4. Selection Process

2.5. Data Collection Process

2.6. Data Items

3. Results

3.1. Study Selection

3.2. Characteristics of Studies and Patients

- Exceeding a certain weight [64];

- Intolerance to certain excipients such as lactose [71];

- Contraindication for certain drugs such as aspirin [67];

- Developmental disorder [63];

- Mental retardation [63];

- Personality disorder [68];

- Risk of poor compliance [69];

- Recent hospitalization [68];

- Hospitalization due to severe depression [69].

3.3. Claims about Efficacy and Effectiveness

3.4. Effect Size Estimators and Their Interpretation

4. Discussion

4.1. Balancing Internal and Ecological Validity in Clinical Trials: Implications for Practice

4.2. The Need for Encouraging ES Estimators in Scientific Reports

4.3. Limitations

4.4. Future Research and Implications for Practice

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. World Health Organization (WHO). Available online: https://www.who.int (accessed on 10 February 2023).

2. Kessler, R.C.; Petukhova, M.; Sampson, N.A.; Zaslavsky, A.M.; Wittchen, H.-U. Twelve-Month and Lifetime Prevalence and Lifetime Morbid Risk of Anxiety and Mood Disorders in the United States: Anxiety and Mood Disorders in the United States. Int. J. Methods Psychiatr. Res. 2012, 21, 169–184.

3. American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders (DSM-5®), 5th ed.; American Psychiatric Publishing: Washington, DC, USA, 2013.

4. Moreno-Agostino, D.; Wu, Y.-T.; Daskalopoulou, C.; Hasan, M.T.; Huisman, M.; Prina, M. Global Trends in the Prevalence and Incidence of Depression: A Systematic Review and Meta-Analysis. J. Affect. Disord. 2021, 281, 235–243.

5. Moras, K. Twenty-Five Years of Psychological Treatment Research on Unipolar Depression in Adult Outpatients: Introduction to the Special Section. Psychother. Res. 2006, 16, 519–525.

6. Kendrick, T. Strategies to Reduce Use of Antidepressants. Br. J. Clin. Pharmacol. 2021, 87, 23–33.

7. Dodd, S.; Bauer, M.; Carvalho, A.F.; Eyre, H.; Fava, M.; Kasper, S.; Kennedy, S.H.; Khoo, J.-P.; Lopez Jaramillo, C.; Malhi, G.S.; et al. A Clinical Approach to Treatment Resistance in Depressed Patients: What to Do When the Usual Treatments Don’t Work Well Enough? World J. Biol. Psychiatry 2021, 22, 483–494.

8. Masand, P.S. Tolerability and Adherence Issues in Antidepressant Therapy. Clin. Ther. 2003, 25, 2289–2304.

9. Gaynes, B.N.; Lux, L.; Gartlehner, G.; Asher, G.; Forman-Hoffman, V.; Green, J.; Boland, E.; Weber, R.P.; Randolph, C.; Bann, C.; et al. Defining Treatment-resistant Depression. Depress. Anxiety 2020, 37, 134–145.

10. Sackeim, H.A.; Aaronson, S.T.; Bunker, M.T.; Conway, C.R.; Demitrack, M.A.; George, M.S.; Prudic, J.; Thase, M.E.; Rush, A.J. The Assessment of Resistance to Antidepressant Treatment: Rationale for the Antidepressant Treatment History Form: Short Form (ATHF-SF). J. Psychiatr. Res. 2019, 113, 125–136.

11. Crowe, M.; Inder, M.; McCall, C. Experience of Antidepressant Use and Discontinuation: A Qualitative Synthesis of the Evidence. J. Psychiatr. Ment. Health Nurs. 2023, 30, 21–34.

12. Zarin, D.A.; Young, J.L.; West, J.C. Challenges to Evidence-Based Medicine: A Comparison of Patients and Treatments in Randomized Controlled Trials with Patients and Treatments in a Practice Research Network. Soc. Psychiatry Psychiatr. Epidemiol. 2005, 40, 27–35.

13. Keezhupalat, S.M.; Naik, A.; Gupta, S.; Srivatsan, R.; Saberwal, G. An Analysis of Sponsors/Collaborators of 69,160 Drug Trials Registered with ClinicalTrials.Gov. PLoS ONE 2016, 11, e0149416.

14. Lundh, A.; Lexchin, J.; Mintzes, B.; Schroll, J.B.; Bero, L. Industry Sponsorship and Research Outcome: Systematic Review with Meta-Analysis. Intensive Care Med. 2018, 44, 1603–1612.

15. Gluud, L.L. Bias in Clinical Intervention Research. Am. J. Epidemiol. 2006, 163, 493–501.

16. Cipriani, A.; Furukawa, T.A.; Salanti, G.; Chaimani, A.; Atkinson, L.Z.; Ogawa, Y.; Leucht, S.; Ruhe, H.G.; Turner, E.H.; Higgins, J.P.T.; et al. Comparative Efficacy and Acceptability of 21 Antidepressant Drugs for the Acute Treatment of Adults with Major Depressive Disorder: A Systematic Review and Network Meta-Analysis. Lancet 2018, 391, 1357–1366.

17. Hengartner, M.P.; Plöderl, M. Statistically Significant Antidepressant-Placebo Differences on Subjective Symptom-Rating Scales Do Not Prove That the Drugs Work: Effect Size and Method Bias Matter! Front. Psychiatry 2018, 9, 517.

18. Kirsch, I. Antidepressants and the Placebo Effect. Z. Psychol. 2014, 222, 128–134.

19. Kirsch, I.; Moncrieff, J. Clinical Trials and the Response Rate Illusion. Contemp. Clin. Trials 2007, 28, 348–351.

20. Moncrieff, J.; Kirsch, I. Efficacy of Antidepressants in Adults. BMJ 2005, 331, 155–157.

21. Campbell, D.T.; Stanley, J.C. Experimental and Quasi-Experimental Designs for Research; Wadsworth: Belmont, CA, USA, 2011; ISBN 978-0-395-30787-8.

22. Cook, T.D.; Campbell, D.T. Quasi-Experimentation: Design & Analysis Issues for Field Settings; Houghton Mifflin: Boston, MA, USA, 1979; ISBN 978-0-395-30790-8.

23. Cook, T.D.; Campbell, D.T. The Causal Assumptions of Quasi-Experimental Practice: The Origins of Quasi-Experimental Practice. Synthese 1986, 68, 141–180.

24. Shadish, W.R.; Cook, T.D.; Campbell, D.T. Experimental and Quasi-Experimental Designs for Generalized Causal Inference; Houghton Mifflin: Boston, MA, USA, 2001; ISBN 978-0-395-61556-0.

25. Stefanos, R.; Graziella, D.A.; Giovanni, T. Methodological Aspects of Superiority, Equivalence, and Non-Inferiority Trials. Intern. Emerg. Med. 2020, 15, 1085–1091.

26. Rief, W.; Hofmann, S.G. Some Problems with Non-Inferiority Tests in Psychotherapy Research: Psychodynamic Therapies as an Example. Psychol. Med. 2018, 48, 1392–1394.

27. Rief, W.; Hofmann, S.G. The Limitations of Equivalence and Non-Inferiority Trials. Psychol. Med. 2019, 49, 349–350.

28. Garattini, S.; Bertele’, V. Non-Inferiority Trials Are Unethical Because They Disregard Patients’ Interests. Lancet 2007, 370, 1875–1877.

29. Hunter, C.P.; Frelick, R.W.; Feldman, A.R.; Bavier, A.R.; Dunlap, W.H.; Ford, L.; Henson, D.; Macfarlane, D.; Smart, C.R.; Yancik, R. Selection Factors in Clinical Trials: Results from the Community Clinical Oncology Program Physician’s Patient Log. Cancer Treat. Rep. 1987, 71, 559–565.

30. You, K.H.; Lwin, Z.; Ahern, E.; Wyld, D.; Roberts, N. Factors That Influence Clinical Trial Participation by Patients with Cancer in Australia: A Scoping Review Protocol. BMJ Open 2022, 12, e057675.

31. Calin-Jageman, R.J.; Cumming, G. The New Statistics for Better Science: Ask How Much, How Uncertain, and What Else Is Known. Am. Stat. 2019, 73, 271–280.

32. Gill, J. The Insignificance of Null Hypothesis Significance Testing. Polit. Res. Q. 1999, 52, 647–674.

33. Schneider, J.W. Null Hypothesis Significance Tests. A Mix-up of Two Different Theories: The Basis for Widespread Confusion and Numerous Misinterpretations. Scientometrics 2015, 102, 411–432.

34. García-Pérez, M.A. Thou Shalt Not Bear False Witness Against Null Hypothesis Significance Testing. Educ. Psychol. Meas. 2017, 77, 631–662.

35. Mayo, D.G.; Hand, D. Statistical Significance and Its Critics: Practicing Damaging Science, or Damaging Scientific Practice? Synthese 2022, 200, 220.

36. Nickerson, R.S. Null Hypothesis Significance Testing: A Review of an Old and Continuing Controversy. Psychol. Methods 2000, 5, 241–301.

37. Biskin, B.H. Comment on Significance Testing. Meas. Eval. Couns. Dev. 1998, 31, 58–62.

38. Kirk, R.E. Practical Significance: A Concept Whose Time Has Come. Educ. Psychol. Meas. 1996, 56, 746–759.

39. Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; L. Erlbaum Associates: Hillsdale, NJ, USA, 1988; ISBN 978-0-8058-0283-2.

40. American Psychological Association. Publication Manual of the American Psychological Association, 7th ed.; American Psychological Association: Washington, DC, USA, 2020; ISBN 978-1-4338-3215-4.

41. Hahn, E.D.; Ang, S.H. From the Editors: New Directions in the Reporting of Statistical Results in the Journal of World Business. J. World Bus. 2017, 52, 125–126.

42. Jones, P.M.; Bryson, G.L.; Backman, S.B.; Mehta, S.; Grocott, H.P. Statistical Reporting and Table Construction Guidelines for Publication in the Canadian Journal of Anesthesia. Can. J. Anesth. 2018, 65, 152–161.

43. Althouse, A.D.; Below, J.E.; Claggett, B.L.; Cox, N.J.; de Lemos, J.A.; Deo, R.C.; Duval, S.; Hachamovitch, R.; Kaul, S.; Keith, S.W.; et al. Recommendations for Statistical Reporting in Cardiovascular Medicine: A Special Report from the American Heart Association. Circulation 2021, 144, e70–e91.

44. Ou, F.-S.; Le-Rademacher, J.G.; Ballman, K.V.; Adjei, A.A.; Mandrekar, S.J. Guidelines for Statistical Reporting in Medical Journals. J. Thorac. Oncol. 2020, 15, 1722–1726.

45. Lang, T.A.; Altman, D.G. Basic Statistical Reporting for Articles Published in Biomedical Journals: The “Statistical Analyses and Methods in the Published Literature” or the SAMPL Guidelines. Int. J. Nurs. Stud. 2015, 52, 5–9.

46. Indrayan, A. Reporting of Basic Statistical Methods in Biomedical Journals: Improved SAMPL Guidelines. Indian Pediatr. 2020, 57, 43–48.

47. Indrayan, A. Revised SAMPL Guidelines for Reporting of Statistical Methods in Biomedical Journals. Acad. Lett. 2021.

48. Charan, J.; Saxena, D. Suggested Statistical Reporting Guidelines for Clinical Trials Data. Indian J. Psychol. Med. 2012, 34, 25–29.

49. Harrington, D.; D’Agostino, R.B.; Gatsonis, C.; Hogan, J.W.; Hunter, D.J.; Normand, S.-L.T.; Drazen, J.M.; Hamel, M.B. New Guidelines for Statistical Reporting in the Journal. N. Engl. J. Med. 2019, 381, 285–286.

50. Fidler, F.; Burgman, M.A.; Cumming, G.; Buttrose, R.; Thomason, N. Impact of Criticism of Null-Hypothesis Significance Testing on Statistical Reporting Practices in Conservation Biology. Conserv. Biol. 2006, 20, 1539–1544.

51. Sun, S.; Pan, W.; Wang, L.L. A Comprehensive Review of Effect Size Reporting and Interpreting Practices in Academic Journals in Education and Psychology. J. Educ. Psychol. 2010, 102, 989–1004.

52. Sánchez-Iglesias, I.; Saiz, J.; Molina, A.J.; Goldsby, T.L. Reporting and Interpreting Effect Sizes in Applied Health-Related Settings: The Case of Spirituality and Substance Abuse. Healthcare 2022, 11, 133.

53. Elvira-Flores, G.; Sánchez-Iglesias, I. Methodological rigor in the interpretation of the effect size in physical exercise on depressive symptoms: The importance of effect size. In Proceedings of the Libro de Resúmenes del V Congreso Nacional de Psicología, Online, 9–11 July 2021; p. 795.

54. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, n71.

55. Vacha-Haase, T.; Thompson, B. How to Estimate and Interpret Various Effect Sizes. J. Couns. Psychol. 2004, 51, 473–481.

56. Tomczak, M.; Tomczak, E. The Need to Report Effect Size Estimates Revisited. An Overview of Some Recommended Measures of Effect Size. Trends Sport Sci. 2014, 21, 1–7.

57. Bielski, R.J.; Ventura, D.; Chang, C.-C. A Double-Blind Comparison of Escitalopram and Venlafaxine Extended Release in the Treatment of Major Depressive Disorder. J. Clin. Psychiatry 2004, 65, 1190–1196.

58. Montgomery, S.A.; Huusom, A.K.T.; Bothmer, J. A Randomised Study Comparing Escitalopram with Venlafaxine XR in Primary Care Patients with Major Depressive Disorder. Neuropsychobiology 2004, 50, 57–64.

59. Taner, E.; Demir, E.Y.; Cosar, B. Comparison of the Effectiveness of Reboxetine versus Fluoxetine in Patients with Atypical Depression: A Single-Blind, Randomized Clinical Trial. Adv. Ther. 2006, 23, 974–987.

60. Waintraub, L.; Septien, L.; Azoulay, P. Efficacy and Safety of Tianeptine in Major Depression: Evidence from a 3-Month Controlled Clinical Trial versus Paroxetine. CNS Drugs 2002, 16, 65–75.

61. Daly, E.J.; Singh, J.B.; Fedgchin, M.; Cooper, K.; Lim, P.; Shelton, R.C.; Thase, M.E.; Winokur, A.; Van Nueten, L.; Manji, H.; et al. Efficacy and Safety of Intranasal Esketamine Adjunctive to Oral Antidepressant Therapy in Treatment-Resistant Depression: A Randomized Clinical Trial. JAMA Psychiatry 2018, 75, 139.

62. Detke, M.J.; Wiltse, C.G.; Mallinckrodt, C.H.; McNamara, R.K.; Demitrack, M.A.; Bitter, I. Duloxetine in the Acute and Long-Term Treatment of Major Depressive Disorder: A Placebo- and Paroxetine-Controlled Trial. Eur. Neuropsychopharmacol. 2004, 14, 457–470.

63. Khan, A.; Bose, A.; Alexopoulos, G.S.; Gommoll, C.; Li, D.; Gandhi, C. Double-Blind Comparison of Escitalopram and Duloxetine in the Acute Treatment of Major Depressive Disorder. Clin. Drug Investig. 2007, 27, 481–492.

64. Furey, M.L.; Drevets, W.C. Antidepressant Efficacy of the Antimuscarinic Drug Scopolamine: A Randomized, Placebo-Controlled Clinical Trial. Arch. Gen. Psychiatry 2006, 63, 1121.

65. Perahia, D.G.S.; Wang, F.; Mallinckrodt, C.H.; Walker, D.J.; Detke, M.J. Duloxetine in the Treatment of Major Depressive Disorder: A Placebo- and Paroxetine-Controlled Trial. Eur. Psychiatry 2006, 21, 367–378.

66. Abolfazli, R.; Hosseini, M.; Ghanizadeh, A.; Ghaleiha, A.; Tabrizi, M.; Raznahan, M.; Golalizadeh, M.; Akhondzadeh, S. Double-Blind Randomized Parallel-Group Clinical Trial of Efficacy of the Combination Fluoxetine plus Modafinil versus Fluoxetine plus Placebo in the Treatment of Major Depression. Depress. Anxiety 2011, 28, 297–302.

67. Sepehrmanesh, Z.; Fahimi, H.; Akasheh, G.; Davoudi, M.; Gilasi, H.; Ghaderi, A. The Effects of Combined Sertraline and Aspirin Therapy on Depression Severity among Patients with Major Depressive Disorder: A Randomized Clinical Trial. Electron. Physician 2017, 9, 5770–5777.

68. Han, C.; Wang, S.-M.; Bahk, W.-M.; Lee, S.-J.; Patkar, A.A.; Masand, P.S.; Mandelli, L.; Pae, C.-U.; Serretti, A. A Pharmacogenomic-Based Antidepressant Treatment for Patients with Major Depressive Disorder: Results from an 8-Week, Randomized, Single-Blinded Clinical Trial. Clin. Psychopharmacol. Neurosci. 2018, 16, 469–480.

69. Januel, D. Multicenter Double-Blind Randomized Parallel-Group Clinical Trial of Efficacy of the Combination Clomipramine (150 Mg/Day) plus Lithium Carbonate (750 Mg/Day) versus Clomipramine (150 Mg/Day) plus Placebo in the Treatment of Unipolar Major Depression. J. Affect. Disord. 2003, 76, 191–200.

70. Khan, A.; Khan, S.R.; Shankles, E.B.; Polissar, N.L. Relative Sensitivity of the Montgomery-Asberg Depression Rating Scale, the Hamilton Depression Rating Scale and the Clinical Global Impressions Rating Scale in Antidepressant Clinical Trials. Int. Clin. Psychopharmacol. 2002, 17, 281–285.

71. Wade, A.; Gembert, K.; Florea, I. A Comparative Study of the Efficacy of Acute and Continuation Treatment with Escitalopram versus Duloxetine in Patients with Major Depressive Disorder. Curr. Med. Res. Opin. 2007, 23, 1605–1614.

72. Smith, G.C.S. Parachute Use to Prevent Death and Major Trauma Related to Gravitational Challenge: Systematic Review of Randomised Controlled Trials. BMJ 2003, 327, 1459–1461.

73. Yeh, R.W.; Valsdottir, L.R.; Yeh, M.W.; Shen, C.; Kramer, D.B.; Strom, J.B.; Secemsky, E.A.; Healy, J.L.; Domeier, R.M.; Kazi, D.S.; et al. Parachute Use to Prevent Death and Major Trauma When Jumping from Aircraft: Randomized Controlled Trial. BMJ 2018, 363, k5094.

74. Dexter, F.; Shafer, S.L. Narrative Review of Statistical Reporting Checklists, Mandatory Statistical Editing, and Rectifying Common Problems in the Reporting of Scientific Articles. Anesth. Analg. 2017, 124, 943–947.

| Citation | Efficacy Measure | Groups | Duration (Weeks) | Scale | Statistical Test | ES | ES Interpretation |
|---|---|---|---|---|---|---|---|
| Abolfazli et al., 2011 [66] (Iran) | Total/Response/Remission | Fluoxetine-modafinil vs. Fluoxetine-placebo | 6 | HAMD-17 | t-test/ANOVA | No | - |
| Daly et al., 2017 [61] (USA) | Total/Response/Remission | Esketamine (28 mg) vs. Esketamine (56 mg) vs. Esketamine (84 mg) vs. placebo | 76 | MADRS | ANCOVA | No | Context |
| Detke et al., 2004 [62] (USA) | Total/Response/Remission | Duloxetine (80 mg) vs. Duloxetine (120 mg) vs. Paroxetine vs. Placebo | 9 + 24 | HAMD-17/MADRS | ANCOVA/MMRM/FET | No | Context |
| Han et al., 2018 [68] (Korea) | Total/Response/Remission | Neuropharmagen PGAT vs. treatment as usual | 8 | HAMD-17 | ANCOVA/FET | No | Context |
| Januel et al., 2003 [69] (France) | % Pre-post reduction/Remission/Response | Clomipramine-lithium vs. Clomipramine-placebo | 6 | MADRS | t-test/ANOVA/FET | No | - |
| Khan et al., 2007 [63] (USA) | Total/Response/Remission | Escitalopram vs. Duloxetine | 8 | MADRS/HAMD-24 | ANCOVA/MMRM | No | - |
| Furey and Drevets, 2006 [64] (USA) | Total/Response/Remission | Placebo vs. Scopolamine | 4 | MADRS | Mixed ANOVA | d | Context |
| Perahia et al., 2006 [65] (USA) | Total/Response/Remission | Placebo vs. Duloxetine (80 mg) vs. Duloxetine (120 mg) vs. Paroxetine | 8 + 24 | HAMD-17/MADRS | ANCOVA/MMRM | No | - |
| Sepehrmanesh et al., 2017 [67] (Iran) | Total | Sertraline-Aspirin vs. Sertraline-placebo | 8 | BDI | t-test | No | - |
| Wade et al., 2007 [71] (UK) | Total/Response/Remission | Escitalopram vs. Duloxetine | 24 | MADRS | ANCOVA | Mean change | No |
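The proportions targeted by objective (b) can be tallied directly from the table above. The sketch below is a hypothetical Python helper that does this. It is a reading of the table, not a figure reported by the authors. It assumes that any ES entry other than "No" counts as reporting an ES estimator. It also assumes that only "Context" in the interpretation column counts as interpreting the magnitude of the effect, so that both "No" and "-" count as no interpretation.

```python
# Rows transcribed from the table above: (study, ES column, ES interpretation column).
TABLE_ROWS = [
    ("Abolfazli et al., 2011", "No", "-"),
    ("Daly et al., 2017", "No", "Context"),
    ("Detke et al., 2004", "No", "Context"),
    ("Han et al., 2018", "No", "Context"),
    ("Januel et al., 2003", "No", "-"),
    ("Khan et al., 2007", "No", "-"),
    ("Furey and Drevets, 2006", "d", "Context"),
    ("Perahia et al., 2006", "No", "-"),
    ("Sepehrmanesh et al., 2017", "No", "-"),
    ("Wade et al., 2007", "Mean change", "No"),
]


def reporting_summary(rows):
    """Return (number of studies, number reporting an ES, number interpreting magnitude).

    Assumed coding: an ES entry other than "No" means an ES was reported;
    only "Context" in the interpretation column means magnitude was interpreted.
    """
    n = len(rows)
    reported = sum(1 for _, es, _ in rows if es != "No")
    interpreted = sum(1 for _, _, inter in rows if inter == "Context")
    return n, reported, interpreted


if __name__ == "__main__":
    n, reported, interpreted = reporting_summary(TABLE_ROWS)
    print(f"ES reported: {reported}/{n} ({reported / n:.0%})")
    print(f"Magnitude interpreted: {interpreted}/{n} ({interpreted / n:.0%})")
```

Keeping the coding rule inside one function means a different reading of the "-" entries changes only that function.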

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sánchez-Iglesias, I.; Martín-Aguilar, C. Significant Differences and Experimental Designs Do Not Necessarily Imply Clinical Relevance: Effect Sizes and Causality Claims in Antidepressant Treatments. J. Clin. Med. 2023, 12, 3181. https://doi.org/10.3390/jcm12093181
