Speech Discrimination in Infancy Predicts Language Outcomes at 30 Months for Both Children with Normal Hearing and Those with Hearing Differences

Abstract

1. Introduction

- Is there a difference in speech discrimination abilities between children who are hard of hearing (CHH) and children with normal hearing (CNH) on /a-i/ and /ba-da/ discrimination?

- How do early speech discrimination abilities relate to later spoken receptive and expressive language abilities in CHH?

- At 30 months of age, do scores from a parent questionnaire on the child’s spoken language inventory correlate significantly with the child’s assessed early receptive and expressive spoken language ability?

- Among CHH, what effect do audibility and hearing aid use have on early speech discrimination and spoken language abilities?

2. Materials and Methods

2.1. Participants

Participant Hearing Aids

2.2. Procedures and Materials

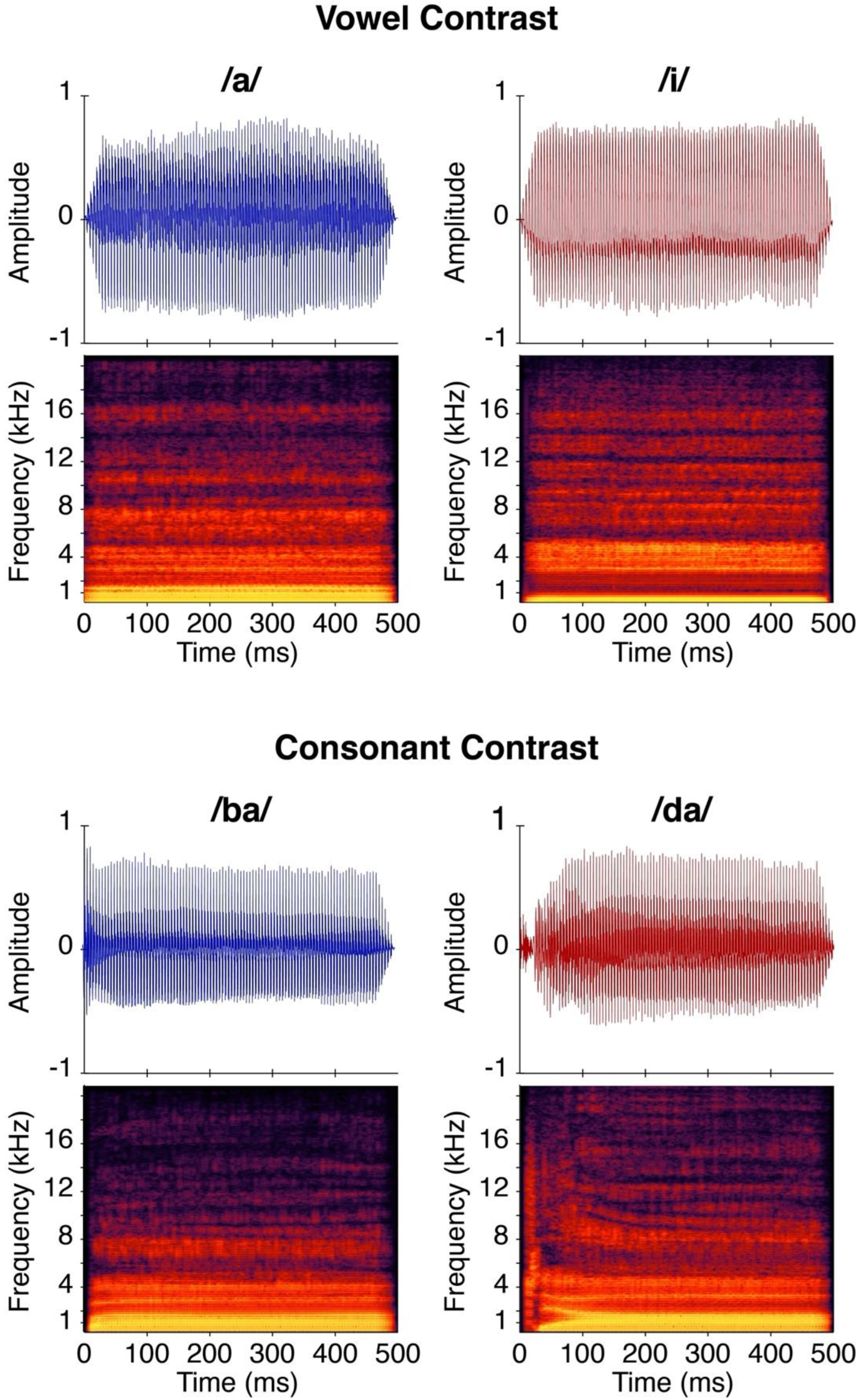

2.2.1. Stimuli

2.2.2. Speech Discrimination

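$\Phi^{-1}\left(\dfrac{\text{hits}}{\text{hits} + \text{misses}}\right)$, where $\Phi^{-1}$ is the inverse normal transform (the inverse of the standard normal cumulative distribution function).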
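As a minimal sketch of the transform above, the hit rate can be passed through the inverse standard normal CDF using Python's standard library. The function name `z_hit_rate` and the half-trial clamp for rates of exactly 0 or 1 are illustrative assumptions; the source does not state how extreme proportions were handled.

```python
from statistics import NormalDist


def z_hit_rate(hits: int, misses: int) -> float:
    """Inverse-normal (z) transform of the hit rate hits / (hits + misses)."""
    total = hits + misses
    if hits < 0 or misses < 0 or total == 0:
        raise ValueError("need non-negative counts and at least one trial")
    rate = hits / total
    # Assumption (not from the source): move rates of exactly 0 or 1 inward
    # by half a trial so the transform stays finite.
    half_trial = 1.0 / (2 * total)
    rate = min(max(rate, half_trial), 1.0 - half_trial)
    return NormalDist().inv_cdf(rate)


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    print(round(z_hit_rate(8, 2), 3))
```

A hit rate of 0.5 maps to z = 0, and higher hit rates map to larger positive z values. Without some correction, a perfect or zero hit rate would make the transform undefined, which is why the sketch clamps those cases.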

2.2.3. Receptive and Expressive Spoken Language Assessment

2.2.4. Early Language Inventory

2.3. Statistical Analysis

3. Results

3.1. CHT Performance

- Question 1: Is there a difference in speech discrimination abilities between CHH and CNH on /a-i/ and /ba-da/ discrimination? Does maternal level of education impact speech discrimination?

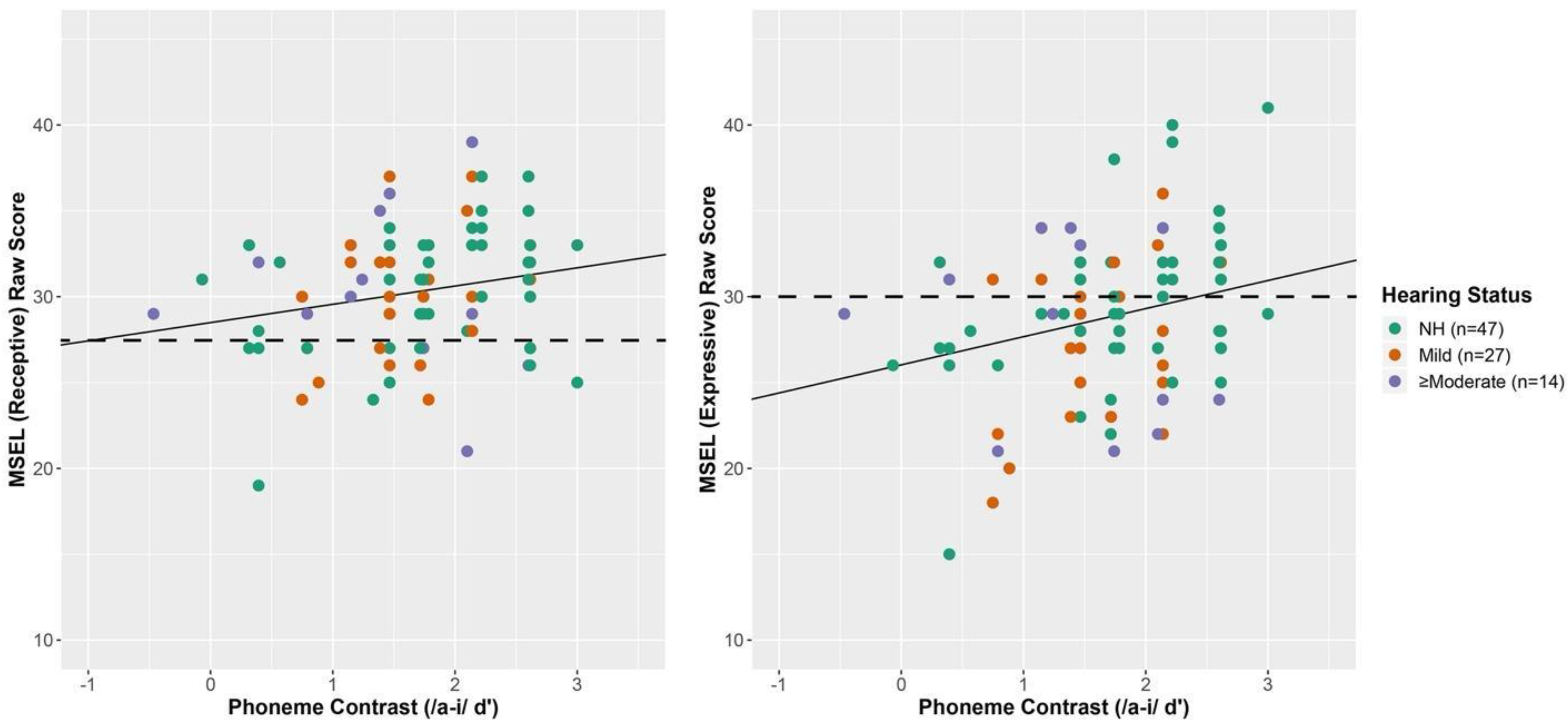

- Question 2: How do early speech discrimination abilities relate to later receptive and expressive spoken language abilities?

3.2. MSEL Receptive Spoken Language

3.3. MSEL Expressive Spoken Language

- Question 3: At 30 months of age, do scores from a parent questionnaire on the child’s spoken language inventory correlate significantly with the child’s assessed early receptive and expressive spoken language ability?

3.4. MBCDI Words Produced

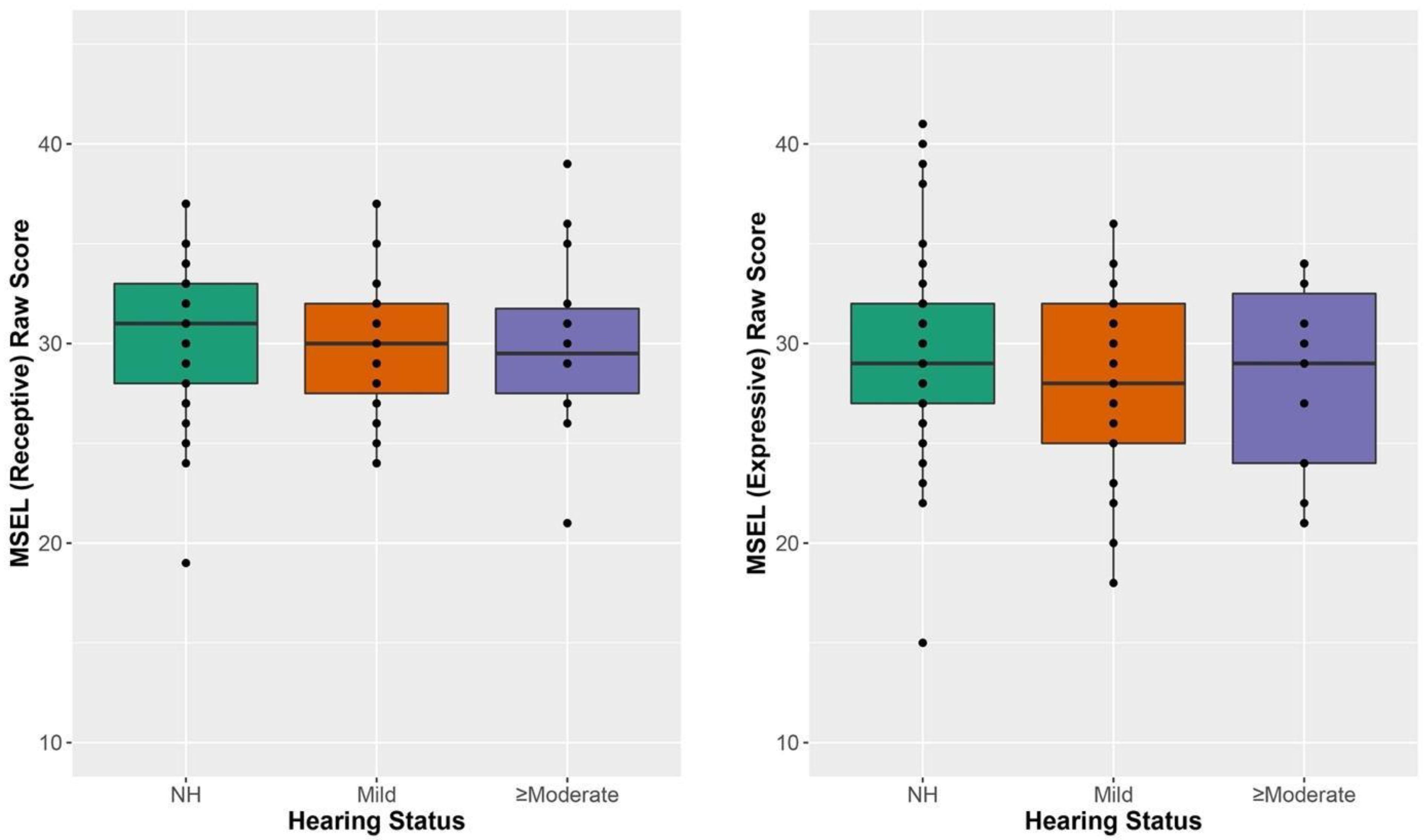

- Question 4: How do receptive and expressive language abilities in CHH compare to CNH?

- Question 5: Among CHH, what effect do audibility and hearing aid use have on early speech discrimination and language abilities?

3.5. Speech Intelligibility Index and Data Logging among CHH

4. Discussion

4.1. Speech Discrimination

4.2. Speech Discrimination and Language Abilities

4.3. Are the MBCDI and the MSEL Related?

4.4. Comparison of Receptive and Expressive Language

4.5. Is There an Effect of Audibility and Hearing Aid Use among CHH?

5. Potential Clinical Implications

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Demographic | CNH | CHH | Statistical Test | p-Value |
|---|---|---|---|---|
| Male | 23 (46.9%) | 21 (51.2%) | | |
| Age at CHT (months) | M = 8.99 (SD = 1.79) | M = 9.91 (SD = 2.21) | t(88) = 2.18 | p = 0.03 |
| Age at MSEL (months) | M = 30.61 (SD = 0.71) | M = 30.79 (SD = 0.93) | t(88) = 1.06 | p = 0.29 |
| Age at MBCDI (months) | M = 30.48 (SD = 0.49) | M = 30.66 (SD = 1.22) | t(82) = 0.89 | p = 0.38 |
| Race and Ethnicity | | | | |
| White | 45 (91.8%) | 36 (87.8%) | | |
| Black | 2 (4.1%) | 0 (0.0%) | | |
| Asian | 1 (2.0%) | 0 (0.0%) | | |
| More than One Race | 1 (2.0%) | 5 (12.2%) | | |
| Hispanic/Latino | 12 (24.5%) | 5 (12.2%) | | |
| Maternal Level of Education | | | | |
| High School Diploma or lower | 8 (16.3%) | 10 (24.3%) | | |
| Post-secondary attendance | 4 (8.2%) | 7 (17.1%) | | |
| College Graduate | 20 (40.8%) | 13 (31.7%) | | |
| Post-Graduate Degree | 17 (34.7%) | 11 (26.9%) | | |

| | Mean (SD) | Range (Min, Max) |
|---|---|---|
| Age at Hearing Aid Fit (months) | 2.79 (0.88) | 1.28, 6.17 |
| Datalogging at CHT (average hours/day) | 6.39 (4.16) | 0.0, 16.0 |
| Missing | 4 (9.7%) | |
| SII at 50 dB at CHT | 0.74 (0.19) | 0.23, 0.97 |
| SII at 60 dB at CHT | 0.83 (0.14) | 0.35, 0.96 |
| SII at 70 dB at CHT | 0.82 (0.12) | 0.44, 0.94 |
| Missing (50 and 70) | 2 (4.9%) | |
| Missing (60) | 3 (7.3%) | |
| Datalogging at MSEL (average hours/day) | 8.26 (4.70) | 0.10, 19.70 |
| Missing | 11 (27%) | |
| SII at 50 dB at MSEL | 0.72 (0.18) | 0.12, 0.92 |
| SII at 60 dB at MSEL | 0.82 (0.13) | 0.36, 0.96 |
| SII at 70 dB at MSEL | 0.84 (0.09) | 0.53, 0.94 |
| Missing at any level | 11 (26.8%) * | |

| /a-i/ Contrast | CNH | CHH | Chi-Square Test | p-Value |
|---|---|---|---|---|
| 60 dB | 18 (38%) | 7 (17%) | χ²(1) = 2.754 | 0.388 |
| 50 dB | 14 (30%) | 15 (37%) | χ²(1) = 0.230 | 1.000 |
| 70 dB | 6 (13%) | 9 (22%) | χ²(1) = 0.922 | 1.000 |
| Did not reach criterion | 9 (19%) | 10 (24%) | χ²(1) = 0.229 | 1.000 |
| /ba-da/ Contrast | | | | |
| 60 dB | 8 (19%) | 9 (22%) | χ²(1) = 0.127 | 1.000 |
| 50 dB | 8 (19%) | 5 (12%) | χ²(1) = 0.427 | 1.000 |
| 70 dB | 4 (14%) | 9 (22%) | χ²(1) = 1.991 | 0.633 |
| Did not reach criterion | 21 (49%) | 17 (42%) | χ²(1) = 0.125 | 1.000 |

| Factor | F | df | Error df | p-Value | Cohen’s f² |
|---|---|---|---|---|---|
| /a-i/ Contrast | | | | | |
| Presentation Level | 7.572 | 2 | 213 | 0.0007 | 0.07 |
| Hearing Status | 0.597 | 2 | 81 | 0.553 | 0.01 |
| /ba-da/ Contrast | | | | | |
| Presentation Level | 4.225 | 2 | 213 | 0.016 | 0.04 |
| Hearing Status | 0.485 | 2 | 81 | 0.618 | 0.01 |

| /a-i/ Contrast | t | df | p-Value |
|---|---|---|---|
| 50 vs. 60 dB | −2.025 | 213 | 0.332 |
| 50 vs. 70 dB | −3.890 | 213 | 0.002 |
| 60 vs. 70 dB | −1.866 | 213 | 0.426 |
| /ba-da/ Contrast | | | |
| 50 vs. 60 dB | −2.138 | 213 | 0.272 |
| 50 vs. 70 dB | −2.775 | 213 | 0.066 |
| 60 vs. 70 dB | −0.637 | 213 | 0.988 |

| Factor | Estimate (Standard Error) | 95% Confidence Interval | t-Statistic | p-Value | Cohen’s f² |
|---|---|---|---|---|---|
| Receptive Vocabulary | | | | | |
| Intercept | 26.2 (1.38) | [23.5, 28.9] | 19.03 | <0.0001 | -- |
| Discrimination of /a-i/ Contrast | 1.06 (0.53) | [0.0, 2.1] | 2.00 | 0.049 | 0.07 |
| Maternal Level of Education | 0.48 (0.24) | [0.0, 1.0] | 1.98 | 0.051 | 0.05 |
| Expressive Vocabulary | | | | | |
| Intercept | 21.67 (1.63) | [18.5, 24.9] | 13.32 | <0.0001 | -- |
| Discrimination of /a-i/ Contrast | 1.64 (0.63) | [0.4, 2.9] | 2.61 | 0.011 | 0.12 |
| Maternal Level of Education | 0.93 (0.29) | [0.4, 1.5] | 3.24 | 0.002 | 0.12 |

| Factor | F | df | Error df | p-Value | Cohen’s f² |
|---|---|---|---|---|---|
| Receptive Vocabulary | | | | | |
| Time | 3.615 | 2 | 143 | 0.029 | 0.05 |
| Mullen Scales of Early Learning Score | 26.885 | 1 | 81 | <0.0001 | 0.33 |
| Hearing Status | 1.987 | 2 | 81 | 0.144 | 0.05 |
| Time × MSEL Score | 5.121 | 2 | 143 | 0.0071 | 0.07 |
| Expressive Vocabulary | | | | | |
| Time | 4.841 | 2 | 140 | 0.009 | 0.07 |
| MSEL Score | 15.038 | 1 | 81 | 0.0002 | 0.19 |
| Hearing Status | 1.601 | 2 | 81 | 0.208 | 0.04 |
| Time × MSEL Score | 7.095 | 2 | 140 | 0.001 | 0.10 |

| Time | t | df | p-Value |
|---|---|---|---|
| Model Including Receptive Vocabulary | | | |
| 16 vs. 24 months | −3.312 | 141 | 0.003 |
| 16 vs. 30 months | −4.106 | 143 | 0.0002 |
| 24 vs. 30 months | −0.652 | 143 | 0.792 |
| Model Including Expressive Vocabulary | | | |
| 16 vs. 24 months | −3.295 | 141 | 0.004 |
| 16 vs. 30 months | −4.157 | 142 | 0.0002 |
| 24 vs. 30 months | −0.718 | 142 | 0.753 |

| Hearing Status | MSEL Receptive Raw Score Mean (Standard Deviation) | MSEL Expressive Raw Score Mean (Standard Deviation) | MBCDI Percentile at 30 Months Mean (Standard Deviation) |
|---|---|---|---|
| CNH | 30.65 (3.65) | 29.47 (4.54) | 51.59 (29.24) |
| Mild CHH | 29.85 (3.50) | 28.00 (4.57) | 50.92 (32.66) |
| ≥Moderate CHH | 30.14 (4.52) | 28.07 (4.92) | 31.50 (23.01) |

| Variables | Pearson r | df | p-Value |
|---|---|---|---|
| Aided SII (50 dB) vs. /a-i/ discrimination | −0.016 | 36 | 0.923 |
| Aided SII (60 dB) vs. /a-i/ discrimination | 0.334 | 21 | 0.120 |
| Aided SII (70 dB) vs. /a-i/ discrimination | 0.513 | 23 | 0.009 |
| Aided SII (50 dB) vs. /ba-da/ discrimination | 0.153 | 32 | 0.365 |
| Aided SII (60 dB) vs. /ba-da/ discrimination | 0.065 | 35 | 0.716 |
| Aided SII (70 dB) vs. /ba-da/ discrimination | 0.278 | 32 | 0.096 |
| Aided SII (50 dB at 9 mos) vs. MSEL receptive vocabulary | −0.097 | 37 | 0.556 |
| Aided SII (60 dB at 9 mos) vs. MSEL receptive vocabulary | −0.114 | 36 | 0.496 |
| Aided SII (70 dB at 9 mos) vs. MSEL receptive vocabulary | −0.094 | 37 | 0.569 |
| Aided SII (50 dB at 9 mos) vs. MSEL expressive vocabulary | −0.091 | 37 | 0.582 |
| Aided SII (60 dB at 9 mos) vs. MSEL expressive vocabulary | −0.057 | 36 | 0.732 |
| Aided SII (70 dB at 9 mos) vs. MSEL expressive vocabulary | −0.094 | 37 | 0.551 |
| Aided SII (50 dB at 30 mos) vs. MSEL receptive vocabulary | −0.090 | 28 | 0.638 |
| Aided SII (60 dB at 30 mos) vs. MSEL receptive vocabulary | −0.070 | 28 | 0.711 |
| Aided SII (70 dB at 30 mos) vs. MSEL receptive vocabulary | −0.048 | 28 | 0.799 |
| Aided SII (50 dB at 30 mos) vs. MSEL expressive vocabulary | 0.030 | 28 | 0.876 |
| Aided SII (60 dB at 30 mos) vs. MSEL expressive vocabulary | 0.086 | 28 | 0.652 |
| Aided SII (70 dB at 30 mos) vs. MSEL expressive vocabulary | 0.115 | 28 | 0.545 |
| Datalogging (9 mos) vs. MSEL receptive vocabulary | −0.259 | 35 | 0.122 |
| Datalogging (9 mos) vs. MSEL expressive vocabulary | −0.240 | 35 | 0.153 |
| Datalogging (9 mos) vs. maternal level of education | 0.074 | 35 | 0.662 |
| Datalogging (30 mos) vs. MSEL receptive vocabulary | −0.072 | 28 | 0.704 |
| Datalogging (30 mos) vs. MSEL expressive vocabulary | 0.020 | 28 | 0.916 |
| Datalogging (30 mos) vs. maternal level of education | 0.466 | 28 | 0.009 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Uhler, K.M.; Anderson, S.R.; Yoshinaga-Itano, C.; Walker, K.A.; Hunter, S. Speech Discrimination in Infancy Predicts Language Outcomes at 30 Months for Both Children with Normal Hearing and Those with Hearing Differences. J. Clin. Med. 2022, 11, 5821. https://doi.org/10.3390/jcm11195821