Abstract

Background: Heart rate variability (HRV) and electrocardiogram (ECG)-derived respiration (EDR) have been used to detect sleep apnea (SA) for decades. The present study proposes an SA-detection algorithm using a machine-learning framework and bag-of-features (BoF) derived from an ECG spectrogram. Methods: The algorithm was verified using overnight ECG recordings from 83 subjects with an average apnea–hypopnea index (AHI) of 29.63/h, drawn from the Physionet Apnea-ECG and National Cheng Kung University Hospital Sleep Center databases. The pipeline comprised signal preprocessing to filter noise and artifacts, ECG time–frequency transformation using continuous wavelet transform (CWT), BoF feature generation, machine-learning classification using support vector machine (SVM), ensemble learning (EL), and k-nearest neighbor (KNN) classifiers, and cross-validation. The spectrogram time length was set to 10 and 60 s to examine the minimum window length required to achieve satisfactory accuracy. Specific frequency bands of 0.1–50, 8–50, 0.8–10, and 0–0.8 Hz were also extracted to generate the BoF and determine the frequency band best suited for SA detection. Results: The five-fold cross-validation accuracies using the BoF derived from the ECG spectrogram with 10 and 60 s time windows were 90.5% and 91.4% for the 0.1–50 Hz and 8–50 Hz frequency bands, respectively. Conclusion: An SA-detection algorithm utilizing BoF and a machine-learning framework was successfully developed in this study with satisfactory classification accuracy and high temporal resolution.

1. Introduction

Sleep apnea (SA) is a sleep disorder with high prevalence, particularly among middle-aged and elderly subjects. SA prevalence in the overall population ranges from 9% to 38% [1]. Frost and Sullivan (2016) estimated the annual medical cost of undiagnosed SA among U.S. adults to be nearly USD 149.6 billion [2]. To reduce the number of undiagnosed SA patients, sleep examinations with fewer channels of physiological signals, such as type III home sleep testing (HST), have received increasing attention in recent years. Facco et al. demonstrated that HST has relatively high intraclass correlation and unconditional agreement with in-lab polysomnography (PSG) testing in SA diagnosis [3]. Dalewski et al. evaluated the usefulness of employing modified Mallampati scores (MMP) and the upper airway volume (UAV) to diagnose obstructive SA among patients with breathing-related sleep disorders compared to the more expensive and time-consuming PSG [4]. Philip et al. showed that self-reported sleepiness at the wheel is a better predictor than the apnea–hypopnea index (AHI) for sleepiness-related accidents among obstructive SA patients [5]. Furthermore, Kukwa et al. determined that there was no significant difference in the percentage of supine sleep between in-lab PSG and HST. However, women presented more supine sleep with HST than with PSG [6]. Thus, the development of sleep technologies such as type III or type IV monitors may benefit sleep medicine because such monitors are more convenient than PSG.

SA episodes are generally accompanied by abnormal breathing [7] and relative sympathetic nervous system hyperactivity [8]. Several studies have attempted to use the electrocardiogram (ECG) to develop automatic SA-detection algorithms, since the ECG can yield both electrocardiogram-derived respiration (EDR), representing breathing activity [9], and heart rate variability (HRV), representing autonomic nervous function indexes [10]. Most existing automatic SA-detection algorithms using ECG can be categorized into EDR-, HRV-, and cardiopulmonary coupling (CPC)-related approaches.

Many studies have shown that EDR is correlated with respiratory variation [11,12,13,14]. EDR is used to estimate respiration based on changes in the morphology of the ECG. Varon et al. proposed a method to detect SA by using an EDR signal derived from a single-lead ECG [12]. In that study, the ECG was transformed into EDR signals in three different ways; moreover, two novel feature sets were proposed (principal components for QRS complexes and orthogonal subspace projections between respiration and heart rate) and compared with the two most popular features in heart rate variability analysis. The accuracy was 85% for the discrimination of apneas and hypopneas together. An algorithm based on deep learning approaches for automatically extracting features and detecting SA events in an EDR signal was proposed by Steenkiste et al. [14]. The authors employed a balanced bootstrapping scheme to extract efficient respiratory information and trained long short-term memory (LSTM) networks to produce a robust and accurate model.

Several studies have investigated the association between HRV and SA, and many SA-detection algorithms have been developed using HRV parameters [15,16,17,18,19,20]. HRV assesses the variability in the periods between consecutive heartbeats, which change under the control of the autonomic nervous system. Quiceno-Manrique et al. [16] transformed an HRV signal into the time–frequency domain using short-time Fourier transform (STFT) and extracted indices including spectral centroids, spectral centroid energy, and cepstral coefficients to detect SA with 92.67% accuracy. However, this approach required the collection of at least 3 min of ECG signals to include low-frequency components. Martin-Gonzalez et al. [19] developed a detection algorithm using machine-learning methods to characterize and classify SA based on an HRV feature selection process, focusing on the underlying process from a cardiac-rate point of view. The authors generated linear and nonlinear variables such as cepstrum coefficients (CCs), filterbanks (Fbank), and detrended fluctuation analysis (DFA). This algorithm achieved 84.76% accuracy, 81.45% sensitivity, 86.82% specificity, and an area under the receiver operating characteristic (ROC) curve (AUC) of 0.87. Singh et al. [20] implemented a convolutional neural network (CNN) using a pre-trained AlexNet model to improve the detection performance of obstructive SA based on a single-lead ECG scalogram, with an accuracy of 86.22%, sensitivity of 90%, specificity of 83.8%, and an AUC value of 0.8810.

One of the classic analysis tools for SA disorders is electrocardiogram-derived CPC sleep spectrograms using RR interval and EDR coupling characteristics. Thomas et al. [21] used CPC to evaluate ECG-based cardiopulmonary interactions against standard sleep staging among 35 PSG test subjects (including 15 healthy subjects). Spectrogram features included normal-to-normal sinus inter-beat interval series and corresponding EDR signals. However, using the kappa statistic, agreement with standard sleep staging was poor, with 62.7% for the training set and 43.9% for the testing set. Meanwhile, the cyclic alternating pattern scoring was higher, with 74% for the training set and 77.3% for the testing set. Guo et al. [22] found that CPC high frequency coupling (HFC) proportionally reduced sleep disorder behavior and that HFC durations were negatively correlated with the nasal-flow-derived respiratory disturbance index. Liu et al. [23] developed a CPC method based on the Hilbert–Huang transform (HHT) and found that HHT-CPC spectra provided better temporal and frequency resolution (8 s and 0.001 Hz, respectively) compared to the original CPC (8.5 min and 0.004 Hz, respectively).

Previous studies showed that HRV, EDR, and CPC features can be used to develop automated SA-classification algorithms. However, the RR interval and EDR require relatively long recordings; hence, the temporal resolution is poor for algorithms based on HRV or EDR features. Sleep CPC is a good visualization tool for RR interval and EDR coupling since it can indicate sleep quality or sleep-based breathing disorders. However, CPC’s temporal resolution for sleep is also poor, and breathing-disorder patterns present large variance, making automated classification difficult. Thus, developing an automated SA algorithm with high temporal resolution was taken as the main research aim of the present study. In this work, we used ECG spectrogram features to develop an algorithm that employs bag-of-features (BoF) techniques and machine-learning classifiers to identify various patterns in SA episodes from ECG spectrograms. Penzel et al. [24] first showed that normal and SA episodes exhibit different patterns on the ECG time–frequency spectrogram. However, the authors did not develop automatic classification for SA episodes. Thus, the aim of this study was to develop automatic SA classification based on ECG time–frequency spectrograms.

2. Materials and Methods

2.1. Sleep Apnea ECG Database

Two datasets, the National Cheng Kung University Hospital Sleep Center Apnea Database (NCKUHSCAD) and Physionet Apnea-ECG Database [25] (PAED), were used in this study. NCKUHSCAD was used to observe differences in the apnea and normal periods of the ECG spectrogram. PAED was used to validate the proposed algorithm, as PAED is a public database, which enabled us to compare our results with the existing literature. NCKUHSCAD included information collected from patients who underwent overnight PSG in the Sleep Center of NCKU Hospital (Taiwan) between December 2016 and August 2018. Patients with the following conditions were excluded: PSG recordings for continuous positive airway pressure ventilation titration, the use of hypnotic medicine during the test, and missing data. The study protocol was approved by the Institutional Review Board of NCKUH (protocol number: B-ER-108-426). The database included 50 recordings sampled at 200 Hz, with annotations provided for 10 and 60 s as either normal breathing, hypopnea, or apnea disordered breathing. Hypopnea was defined as a ≥30% reduction in baseline airflow for at least 10 seconds combined with either arousal in an electroencephalogram for ≥3 seconds or oxygen desaturation ≥ 3%. Apnea was defined as a ≥90% decrease in airflow over a 10-second period with concomitant respiratory-related chest wall movement for obstructive apnea [26]. In this study, ECG recordings with hypopnea and apnea annotations were merged together as the apnea group.

The 50 recordings from NCKUHSCAD were rearranged into three groups (APEG-A, APEG-B, and APEG-C) (APEG is the abbreviation of APnEa Group) to fulfill different purposes during algorithm performance evaluation.

- NCKUHSCAD-APEG-A included 11 participants who provided severe SA recordings (30 < AHI ≤ 45), with an average ± standard deviation AHI of 39.25 ± 5.78/h.

- NCKUHSCAD-APEG-B included 35 participants who suffered from SA (AHI ≥ 10), with an average ± standard deviation AHI of 39.83 ± 23.08/h.

- NCKUHSCAD-APEG-C included the whole database, with an average ± standard deviation AHI of 29.02 ± 25.49/h.

PAED [25] was adopted to develop and verify the proposed algorithm’s performance. The ECG recordings of PAED were sampled at 100 Hz, and sleep recording durations ranged between 7 and 10 h depending on the participant. The database included 35 recordings, with SA annotations provided on a minute-by-minute basis—i.e., each minute of ECG recording was annotated as either N (normal breathing) or A (disordered breathing including the occurrence of an apnea episode).

PAED included three participant groups: A: apnea, B: borderline, and C: healthy (the control), with 20, 5, and 10 participants, respectively. Subjects in Group A definitely suffered from obstructive SA and had total apnea durations > 100 min for each recording. The age range of subjects in this group was 38–63, and their AHI ranged between 21 and 83. Subjects in Group B were borderline and had total apnea episode durations of 10–96 min. The age range within this group was 42–53, and the AHI ranged between 0 and 25. Subjects in Group C had no obstructive SA or very low levels of disease and total apnea durations between 0 and 3 min [27]. Recordings b05 from Group B and c05 from Group C were excluded because recording b05 contained a grinding noise, and c05 was identical to c06. Therefore, only 33 recordings were ultimately included in this study [28].

The remaining 33 recordings from the PAED [28] were also regrouped into three categories:

- PAED-APEG-A included 8 participants with severe SA recordings from group A (30 < AHI ≤ 45, average ± standard deviation AHI: 39.14 ± 3.60/h, age 51.38 ± 6.43 years, and weight 87.88 ± 9.42 kg).

- PAED-APEG-B included participants who suffered from SA—i.e., all of group A (21 < AHI < 83) and group B (0 < AHI < 25, except b05). Thus, APEG-B included 1 female and 23 males, with an average ± standard deviation AHI of 41.55 ± 23.45/h, an age of 51.42 ± 6.50 years, and a weight of 93.04 ± 16.67 kg.

- PAED-APEG-C included the whole database (excluding b05 and c05). Thus, APEG-C included 4 females and 29 males, with an average ± standard deviation AHI of 30.23 ± 27.35/h, an age of 46.85 ± 9.80 years, and a weight of 86.67 ± 18.23 kg. This group featured the same arrangement of participants used in [12,16,17,18,20,29,30].

Table 1 presents the ECG spectrogram counts for the different groups after dividing the nocturnal ECG signals into 1 min time windows. The ECG signals were divided into 1 min time windows because the sleep expert labelled each ECG signal event (normal vs. apnea) on a 1 min basis, and some previous studies that employed this PhysioNet database also used 1 min time windows to separate ECG signal events [12,17,18,19]. Contaminated ECG spectrogram windows in PAED (but not in NCKUHSCAD) were removed, as was also done in [12,14,16], since raw ECG signals can contain a wide range of noise caused by the patient’s movement, poor patch contact, electrical interference, measurement noise, or other disturbances.

Table 1.

General apnea group subject patterns for the proposed frequency bands.

2.2. Sleep Apnea Detection Algorithm Using a Machine-Learning Framework and Bag-of-Features Derived from ECG Spectrograms

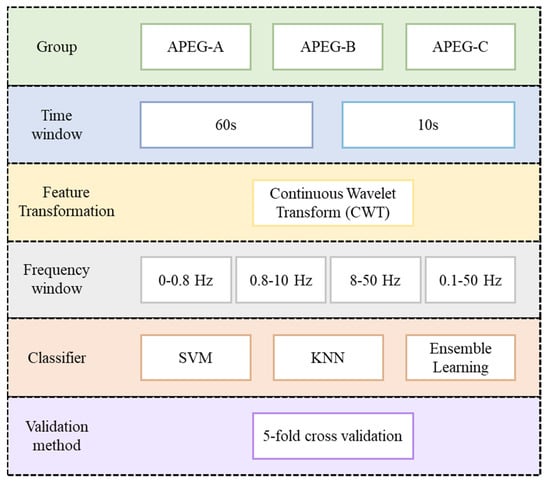

This study proposes an SA-detection algorithm using a machine-learning framework and BoF derived from ECG spectral intensity differences between SA and normal breathing. Figure 1 shows the proposed algorithm flowchart. Single-lead ECG data were input and then divided into consecutive 60 s ECG windows. The time–domain ECG for each window was transformed into the time–frequency spectrogram to obtain ECG spectrograms as the main feature. The BoF technique was then used to obtain features to discriminate ECG spectrograms for SA and normal breathing. Finally, machine-learning classifiers, including support vector machine (SVM), ensemble learning (EL), k-nearest neighbor (KNN), and cross-validation were used to obtain the classification results.

Figure 1.

Proposed sleep apnea detection algorithm using machine learning framework and bag-of-features derived from ECG spectrograms (SVM: Support Vector Machine; KNN: k-nearest neighbor).

2.3. Data Preprocessing

In this study, data preprocessing consisted of zero-mean computation and windowing. The mean was subtracted from the ECG signal to eliminate trend-variation effects, and the nocturnal ECG recording was then segmented into consecutive 60 s windows (to match the database annotation); windows with large noise were excluded from algorithm development. Both PAED [25,28] and NCKUHSCAD label every 60 s window as containing an apnea episode or not.
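The two preprocessing steps described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the implementation used in this study: the function names and the choice to drop a trailing partial window are assumptions, and the noise-exclusion criterion is omitted because it is not specified in the text.

```python
from statistics import fmean


def zero_mean(signal):
    """Subtract the mean so the recording is centred on zero."""
    mu = fmean(signal)
    return [x - mu for x in signal]


def split_windows(signal, fs, window_s=60):
    """Cut a recording into consecutive, non-overlapping windows.

    fs is the sampling rate in Hz and window_s the window length in seconds.
    A trailing partial window is dropped (an assumption, not from the paper).
    """
    n = int(fs * window_s)
    return [signal[i:i + n] for i in range(0, len(signal) - n + 1, n)]


def preprocess(signal, fs, window_s=60):
    """Zero-mean the whole recording, then window it."""
    return split_windows(zero_mean(signal), fs, window_s)
```

For a PAED recording one would call something like `preprocess(ecg, fs=100)`, and for NCKUHSCAD `preprocess(ecg, fs=200)`, since the two databases are sampled at 100 Hz and 200 Hz, respectively.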

2.4. Time–Frequency Transformation of ECG

The time–domain ECG for each window after data preprocessing was transformed into the time–frequency domain to facilitate better SA-episode classification. Continuous wavelet transform (CWT) [31] was used due to its high-resolution time–frequency components. CWT uses different time lengths to adaptively optimize the resolution in different frequency ranges. A CWT wavelet is a small wave compared to a sinusoidal wave—i.e., a brief oscillation that can be dilated or shifted according to the input signal. Common wavelet types include Meyer, Morlet, and Mexican hat. We selected the Morlet wavelet regime for this study to specify the extraction of several prominent frequency bands. Thus, the CWT regime can be expressed as

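$$W(a, b) = \frac{1}{\sqrt{a}} \int_{-\infty}^{\infty} x(t)\, \psi^{*}\!\left(\frac{t - b}{a}\right) dt$$

where $x(t)$ is a time series function, $\psi(t)$ is the wavelet function (with $\psi^{*}$ denoting its complex conjugate), $a$ is a scaling or dilation factor, and $b$ is the translation (time-shift) parameter.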
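To make the transform concrete, the following Python sketch evaluates a discretized Morlet CWT, $W(a,b) \approx \frac{1}{\sqrt{a}} \sum_n x[n]\, \psi^{*}\big((t_n - b)/a\big)\, \Delta t$, by direct summation. It is a slow reference sketch rather than the routine used in this study: the centre frequency `OMEGA0 = 6`, the truncation of the wavelet at four scale lengths, and the mapping from frequency to scale are all assumptions chosen for illustration.

```python
import cmath
import math

OMEGA0 = 6.0  # assumed Morlet centre frequency (rad); not given in the paper


def morlet(t):
    """Complex Morlet mother wavelet (small admissibility correction omitted)."""
    return math.pi ** -0.25 * cmath.exp(1j * OMEGA0 * t) * math.exp(-0.5 * t * t)


def cwt_morlet(x, fs, freqs):
    """Return |W(a, b)|: one row per frequency in freqs, one column per sample."""
    dt = 1.0 / fs
    rows = []
    for f in freqs:
        a = OMEGA0 / (2.0 * math.pi * f)       # scale that oscillates at about f Hz
        half = int(math.ceil(4.0 * a * fs))     # envelope is very small beyond 4 scales
        kernel = [morlet(k * dt / a).conjugate() for k in range(-half, half + 1)]
        row = []
        for b in range(len(x)):
            acc = 0j
            for j, w in enumerate(kernel):
                n = b + j - half                # sample index t_n = b + k * dt
                if 0 <= n < len(x):
                    acc += x[n] * w
            row.append(abs(acc) * dt / math.sqrt(a))
        rows.append(row)
    return rows
```

Restricting `freqs` to a range such as 8–50 Hz yields the band-limited spectrogram image for one window; in practice an FFT-based implementation would be preferred for speed.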

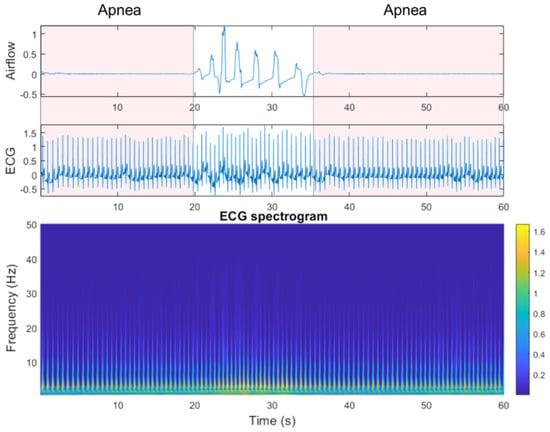

To observe the differences between SA episodes and normal breathing (i.e., periods with no SA episode), we used clinical data from the National Cheng Kung University Hospital sleep center for ECG spectrogram observation. Figure 2 shows example benchmark data that include an SA episode, followed by a return to normal breathing and then a subsequent SA episode. ECG spectrogram differences between apnea onset and normal breathing, derived by continuous wavelet transform (CWT), were significant. The power spectrum intensity for normal breathing was much stronger than that for SA episodes in the 5–10 Hz band.

Figure 2.

Airflow, electrocardiogram (ECG), and ECG spectrograms from apnea to non-apnea, followed by apnea again.

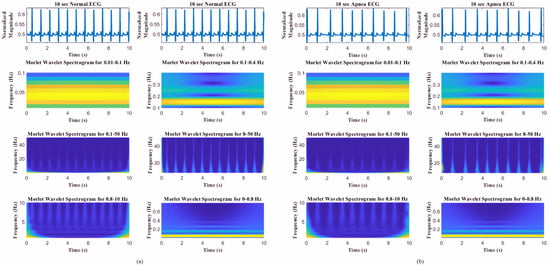

To achieve high-temporal-resolution pattern visualization, different spectrogram frequency bands were extracted to classify SA based on other authors’ observations and findings [21,22,31]: (1) overall frequency 0.1–50 Hz, (2) high frequency 8–50 Hz, (3) middle frequency 0.8–10 Hz, and (4) low frequency 0–0.8 Hz. Thomas et al. [21,32] and Guo et al. [22] verified that certain frequency bands were associated with periodic respiration during sleep-disordered breathing (0.01–0.1 Hz), as well as physiologic respiratory sinus arrhythmia and deep sleep (0.1–0.4 Hz). As shown in Figure 3, the frequency range definitions of Thomas et al. and Guo et al. do not offer good pattern visualization features for distinguishing normal ECG from apnea ECG events.

Figure 3.

Spectrograms of different frequency bands (0.01–0.1, 0.1–0.4, 0.5–50, 8–50, 0.8–10, and 0–0.8 Hz) [17,18,25] for (a) normal ECG and (b) apnea ECG events.

2.5. Feature Extraction Using Bag-of-Features

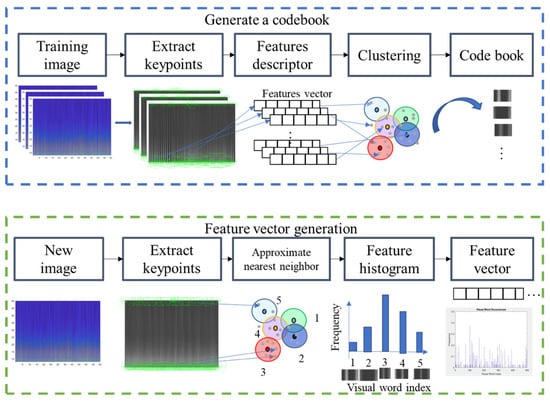

To extract features that best discriminate the spectrogram with apnea onset and normal breathing, we used the bag-of-features (BoF) or bag-of-visual-words [33], a visual classification approach commonly employed for image classification. The BoF corresponds to the frequency histogram of a particular image pattern occurrence in a given image and was also successfully used for text classification. Figure 4 shows the BoF flowchart used in this study.

Figure 4.

Codebook and feature vector generation for the proposed bag-of-features method.

The two main steps in this process were codebook generation and BoF feature-vector extraction. Codebook generation extracted representative features (visual words) to describe an image. ECG spectrograms were regarded as images, with visual words being generated as follows. Key points (points of interest) were extracted from training images using a speeded-up robust features (SURF) detector [34], and 64-dimensional descriptors were used to describe the key points. The descriptors were then grouped by k-means clustering, which places descriptors with similar characteristics in the same compact cluster and separates dissimilar ones. Each cluster center represented a visual word (analogous to vocabulary), thereby producing a codebook (analogous to a dictionary) [33]. The codebook was then used to obtain the BoF for each ECG spectrogram image: image key points were extracted, image descriptors were obtained, each descriptor was assigned to its closest visual word, and visual word occurrences in the image were counted to produce a histogram representing the image. As shown in Figure 4, these histogram feature vectors were then input directly to the machine-learning classifiers for SA classification.
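The codebook and histogram steps can be illustrated with a short Python sketch. It assumes the key-point descriptors have already been extracted (SURF extraction is not reproduced here), uses a plain Lloyd's k-means with a naive initialization, and normalizes the histogram by the number of descriptors; these choices and all function names are illustrative assumptions rather than details taken from this study.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two descriptors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def nearest_word(descriptor, codebook):
    """Index of the visual word (cluster centre) closest to the descriptor."""
    return min(range(len(codebook)),
               key=lambda k: squared_distance(descriptor, codebook[k]))


def kmeans(descriptors, k, iterations=20):
    """Plain Lloyd's algorithm; centres start at the first k descriptors (assumed)."""
    centres = [list(d) for d in descriptors[:k]]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for d in descriptors:
            groups[nearest_word(d, centres)].append(d)
        for i, g in enumerate(groups):
            if g:  # keep the previous centre if a cluster becomes empty
                centres[i] = [sum(col) / len(g) for col in zip(*g)]
    return centres


def bof_histogram(descriptors, codebook, normalize=True):
    """Count how often each visual word is the nearest word to a descriptor."""
    counts = [0] * len(codebook)
    for d in descriptors:
        counts[nearest_word(d, codebook)] += 1
    if normalize and descriptors:
        return [c / len(descriptors) for c in counts]
    return counts
```

Here `kmeans` would be run once on descriptors pooled from the training spectrograms to build the codebook, and `bof_histogram` once per spectrogram to obtain its feature vector. The descriptors in this study are 64-dimensional, but the sketch works for any dimension.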

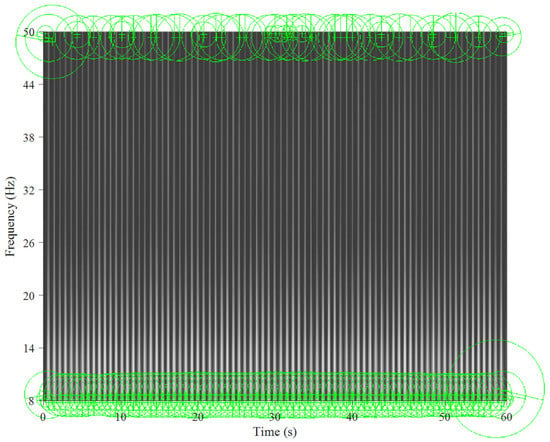

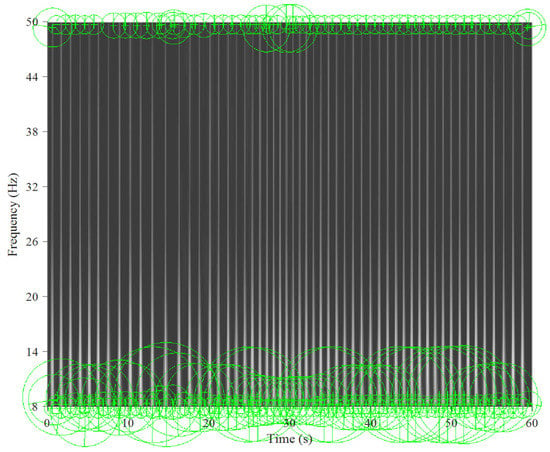

Figure 5 and Figure 6 show the key points automatically extracted by the BoF in the ECG spectrogram algorithm for normal breathing and SA, respectively. Each green circle represents a key point able to best discriminate breathing patterns for each ECG spectrogram. Key points were similar for normal breathing but varied significantly for SA spectrograms.

Figure 5.

Key points extracted from an ECG spectrogram of normal breathing for a 60 s window and 8–50 Hz band.

Figure 6.

Key points extracted from the sleep apnea ECG spectrogram for a 60 s window and 8–50 Hz band.

2.6. Machine-Learning Classifiers

Support vector machine (SVM) is a supervised learning model for classification and regression. The core concept of SVM is to find the hyperplane that best separates data into two classes. In binary classification, p-dimensional data can generally be separated by a (p-1)-dimensional hyperplane. The best hyperplane is defined as the one with the largest margin between the two classes, and the training points closest to the hyperplane, which lie on the margin boundaries, are the support vectors [35].
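The largest-margin criterion can be written as an optimization problem. The formulation below is the standard hard-margin linear SVM, given here only as a sketch under the assumption that the two classes are linearly separable; the kernel and soft-margin settings actually used in this study are not stated in the text. The symbols $\mathbf{w}$ (normal vector), $b$ (offset), $\mathbf{x}_i$ (feature vector), and $y_i \in \{-1, +1\}$ (class label) are introduced for illustration.

$$\min_{\mathbf{w},\, b}\ \frac{1}{2}\lVert \mathbf{w} \rVert^{2} \quad \text{subject to} \quad y_i\left(\mathbf{w}^{\top}\mathbf{x}_i + b\right) \ge 1, \qquad i = 1, \dots, n$$

The margin between the two classes is $2 / \lVert \mathbf{w} \rVert$, so minimizing $\lVert \mathbf{w} \rVert$ maximizes the margin, and the support vectors are the training points for which the constraint holds with equality.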

The k-nearest neighbor (KNN) classifier is a simple supervised learning method that assigns a new sample to a class according to the training samples nearest to it. During the training phase, the labeled training data are stored in the feature space, which is thereby implicitly partitioned into regions belonging to each class. An unlabeled test sample is then assigned to the class that is most common among its k nearest training samples. The distance metric is an important factor for the model and can be, e.g., the Euclidean, Mahalanobis, or cosine distance [36,37].
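A minimal Python sketch of the KNN decision rule is given below. The Euclidean metric, the default `k = 3`, and the function names are assumptions for illustration; the settings used in this study are not reproduced here.

```python
import math
from collections import Counter


def euclidean(u, v):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def knn_predict(train_x, train_y, query, k=3, distance=euclidean):
    """Label a query by majority vote among its k nearest training samples."""
    ranked = sorted(zip(train_x, train_y),
                    key=lambda pair: distance(pair[0], query))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]
```

Passing a different `distance` function (for instance a cosine distance) changes the metric without altering the voting rule.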

Ensemble learning (EL) combines multiple learning algorithms and weight sets to construct a better classifier model. Prediction with an ensemble requires more computation than prediction with a single model; in effect, ensembles compensate for weak learning algorithms by performing extra computation. EL methods generally use fast base algorithms, although slower algorithms can also benefit from ensemble techniques. Many different ensemble models have been proposed, including the Bayes optimal classifier, boosting, and bootstrap aggregating (bagging) [38]. EL bagged trees and subspace KNN were employed in this study. The bagged trees method builds a set of decision tree models, each trained on a randomly selected portion of the data, and merges their predictions by averaging to obtain the final prediction [38]. Subspace KNN uses the random subspace ensemble method with 30 nearest-neighbor learners, whose outputs are combined by the majority vote rule [39,40].
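The random subspace idea can be sketched as follows in Python: each learner sees only a random subset of the features, and the ensemble output is the majority vote. The 30 learners follow the text, whereas the 1-nearest-neighbor base learner, the subspace size, the fixed random seed, and the function names are assumptions made for illustration only.

```python
import random
from collections import Counter


def nearest_label(train_x, train_y, query, dims):
    """1-nearest-neighbour label using only the feature indices in dims."""
    def dist(u):
        return sum((u[d] - query[d]) ** 2 for d in dims)
    best = min(range(len(train_x)), key=lambda i: dist(train_x[i]))
    return train_y[best]


def subspace_knn_predict(train_x, train_y, query,
                         n_learners=30, subspace_size=2, seed=0):
    """Majority vote over learners that each use a random feature subset."""
    rng = random.Random(seed)          # fixed seed for reproducibility (assumed)
    n_features = len(train_x[0])
    votes = Counter()
    for _ in range(n_learners):
        dims = rng.sample(range(n_features), subspace_size)
        votes[nearest_label(train_x, train_y, query, dims)] += 1
    return votes.most_common(1)[0][0]
```

Bagging follows the same voting pattern, except that each learner is trained on a random resample of the training samples rather than on a random subset of the features.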

2.7. k-Fold Cross-Validation

k-fold cross-validation is a well-developed validation technique [41]. The first step is to divide the data samples into k subgroups. Subsequently, each subgroup can be selected as the testing set with the remaining (k-1) subgroups as the training set. In this way, k-fold cross-validation repeats training and testing k times, and the final accuracy is the average of the k accuracy values for each iteration. In this study, the k-fold cross-validation with k = 5 was performed at the spectrogram-level instead of the participant-level, as some studies have done [16,42,43,44]. As evaluation criteria, the accuracy, sensitivity, and specificity parameters were computed for classification performance metrics. The definitions of these metrics can be found in Table 2 [45].
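The splitting and averaging procedure described above can be sketched in Python as follows. The shuffling seed, the way samples are dealt into folds, and the `evaluate` callback (which stands in for training and testing a classifier) are hypothetical choices for illustration.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Shuffle sample indices and deal them into k folds of near-equal size."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cross_validate(n_samples, evaluate, k=5, seed=0):
    """Average evaluate(train_idx, test_idx) over the k folds.

    evaluate is a user-supplied function (hypothetical here) that trains on
    train_idx, tests on test_idx, and returns a score such as accuracy.
    """
    folds = k_fold_indices(n_samples, k, seed)
    scores = []
    for i, test_idx in enumerate(folds):
        train_idx = [j for m, fold in enumerate(folds) if m != i for j in fold]
        scores.append(evaluate(train_idx, test_idx))
    return sum(scores) / k
```

Because the indices refer to individual spectrograms, windows from the same participant can fall into both the training and testing folds, which is the spectrogram-level setting used here.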

Table 2.

Confusion matrix and evaluation parameter equations.
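For reference, the three evaluation parameters can be computed from the confusion matrix as in the Python sketch below. It assumes the usual definitions (accuracy = (TP + TN)/(TP + FP + TN + FN), sensitivity = TP/(TP + FN), specificity = TN/(TN + FP)) with apnea, labeled "A", as the positive class; the function names are illustrative.

```python
def confusion_counts(y_true, y_pred, positive="A"):
    """Return (TP, FP, TN, FN) with `positive` as the positive (apnea) class."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        else:
            if truth == positive:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn


def metrics(tp, fp, tn, fn):
    """Accuracy, sensitivity, and specificity from confusion-matrix counts.

    Assumes at least one positive and one negative sample, so that the
    denominators are non-zero.
    """
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }
```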

3. Experimental Results

The experiments were executed using MATLAB R2020a on several computers with 24 GB of installed RAM, an Intel® Core™ i5-8400 CPU @ 2.80 GHz, and an NVIDIA GeForce GTX 1060 6 GB graphics card. The Classification Learner toolbox from MATLAB was utilized to perform the machine-learning classification. Hyperparameter optimization was performed using nested five-fold cross-validation and grid search with a 60 s time window, the best frequency range (8–50 Hz), and the SVM model (see Table 3). Table 4 and Table 5 compare the selected APEGs from NCKUHSCAD and PAED for the frequency bands (overall frequency, 0.1–50 Hz; high frequency, 8–50 Hz; middle frequency, 0.8–10 Hz; and low frequency, 0–0.8 Hz) along with SVM, KNN, and EL classifiers using a time-window length of 60 s and five-fold cross-validation. The best classification accuracy for NCKUHSCAD-APEG-A, NCKUHSCAD-APEG-B, and NCKUHSCAD-APEG-C was 84.4%, 80.8%, and 83.8%, respectively, in each case at 8–50 Hz, using SVM, EL, and SVM. For PAED, the best classification accuracy for PAED-APEG-A, PAED-APEG-B, and PAED-APEG-C was 88.2%, 88.3%, and 91.4%, again in each case at 8–50 Hz, using SVM, EL, and EL, respectively. The PAED-APEG-C classification accuracy for the 0.8–10 Hz band using EL was 89.1%, which was only 2.3 percentage points below the best accuracy (91.4%). Hence, we selected the 8–50 Hz band and EL classifier to classify all SA episodes in the remaining work.

Table 3.

Nested five-fold cross-validation training and validation performance using a 60 s time window based on the 8–50 Hz frequency range and SVM classifier for PAED and NCKUHSCAD.

Table 4.

Five-fold cross-validation classification performance using a 60 s time window for NCKUHSCAD.

Table 5.

Five-fold cross-validation classification performance using a 60 s time window for PAED.

SA detection in a short time window was an important consideration for this study. Therefore, we also investigated a shorter window length (10 s). For example, eleven subjects from NCKUHSCAD-APEG-A were selected, and different frequency bands were compared via five-fold cross-validation. The time window length was too short to provide variation in the lower frequency band; hence, that band was not considered in this comparison. Table 6 shows that the 10 s time window provided greater accuracy than the 60 s time window, with the best accuracy reaching 95% for the 8–50 Hz band using SVM.

Table 6.

Five-fold cross-validation classification performance using a 10 s time window for NCKUHSCAD.

4. Discussion

To the best of our knowledge, our study is the first to use an ECG spectrogram for SA detection. This proposed algorithm used high-temporal resolution feature visualization of the ECG spectrogram in differentiating normal and SA breathing. Using this high-temporal resolution pattern visualization, the proposed algorithm was able to achieve high accuracy, sensitivity, and specificity in SA detection.

4.1. ECG Variation during Rapid Eye Movement (REM) and Non-REM Sleep Stages

Sleep is a dynamic state of consciousness characterized by rapid changes in autonomic activity, which regulates coronary artery tone, systemic blood pressure, and heart rate. In analyses of 24-hour heart rate variability (HRV), a nocturnal increase in the standard deviation of mean RR intervals commonly occurs. Zemaityte et al. [46] and Raetz et al. [47] observed that, compared to wakefulness, the non-REM sleep stage was associated with lower overall HRV, whereas the REM sleep stage showed the opposite phenomenon, an increase in overall HRV. In contrast, some studies reported that nocturnal activity was highest during REM sleep due to peripheral sympathetic nerve activity, with no difference in heart rate between the sleep stages [48,49,50].

In this study, we partitioned the PAED 60 s windows into REM and non-REM stages and classified SA using the best frequency range (8–50 Hz) and machine-learning model (SVM). The results indicated that ECG variation (HRV) across sleep stages did not significantly affect the SA classification performance. Both the REM and non-REM partitions of the imbalanced and balanced datasets generated for the PAED-APEG-A, PAED-APEG-B, and PAED-APEG-C groups presented good classification performance, with an average accuracy of up to 81.42% for the REM stage and 79.2% for the non-REM stage (see Table 7). Random balancing was applied because partitioning the datasets into REM and non-REM made the apnea and normal events more imbalanced.

Table 7.

The REM and non-REM classification performance using a PAED 60 s time window based on the 8–50 Hz frequency range and SVM classifier.

4.2. Per Subject Classification (Leave-One-Subject-Out Cross-Validation)

Per-subject classification, in which the model must classify a new, unseen subject, is a more realistic scenario for medical applications. Leave-one-subject-out cross-validation (LOSOCV) was used in this study to perform the per-subject classification. In LOSOCV, one subject is set aside for evaluation (testing) and the model is trained on the remaining subjects. The process is repeated, each time with a different subject held out for evaluation, and the results are averaged over all folds (subjects).
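The LOSOCV splitting rule can be sketched in Python as follows, assuming each 60 s window carries the identifier of the subject it came from. The function name and the example identifiers are illustrative.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_idx, test_idx), holding out each subject once.

    subject_ids[i] is the subject identifier of window i.
    """
    for subject in sorted(set(subject_ids)):
        test_idx = [i for i, s in enumerate(subject_ids) if s == subject]
        train_idx = [i for i, s in enumerate(subject_ids) if s != subject]
        yield subject, train_idx, test_idx
```

Unlike spectrogram-level k-fold splitting, this guarantees that no window from the held-out subject is seen during training.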

Under k-fold cross-validation with the PAED 60 s time window, 8–50 Hz frequency range, and SVM classifier, the model detected apnea with a high level of accuracy (89.13%) when the training and testing sets were not separated by subject, so that data from the same subject appeared in both sets. With all other experimental settings unchanged (except that some Group C subjects were excluded from PAED-APEG-C because they did not experience an apnea event), the accuracy decreased significantly to 70% when LOSOCV was applied (Table 8, Table 9 and Table 10). This probably means that, under k-fold cross-validation where a subject’s data are in both the training and testing sets, the algorithm learns the subject rather than the disease condition.

Table 8.

Leave-one-subject-out cross-validation classification performance using a PAED-APEG-A 60 s time window based on the 8–50 Hz frequency range and SVM classifier.

Table 9.

Leave-one-subject-out cross-validation classification performance using a PAED-APEG-B 60 s time window based on the 8–50 Hz frequency range and SVM classifier.

Table 10.

Leave-one-subject-out cross-validation classification performance using a PAED-APEG-C 60 s time window based on the 8–50 Hz frequency range and SVM classifier.

4.3. Performance Comparison with the Existing Literature

Table 11 compares the proposed algorithm with various current best-practice algorithms from the literature using PAED. Quiceno-Manrique et al. [16], Nguyen et al. [17], Sannino et al. [18], and Hassan [29] used HRV-extracted features in the time domain and frequency domain along with non-linear methods, such as features from spectral centroids, spectral centroid energy, recurrence statistics, and wavelet transform, while Varon et al. [12] used EDR as the feature (84.74% accuracy, 84.71% sensitivity, and 84.69% specificity). Singh et al. [20] proposed a CNN-based deep learning approach using the time–frequency scalogram transformation of an ECG signal (86.22% accuracy, 90% sensitivity, and 83.8% specificity). Surrel et al. [30] used RR intervals and RS amplitude series (85.70% accuracy, 81.40% sensitivity, and 88.40% specificity). The proposed method achieved significantly better performance in accuracy, sensitivity, and specificity compared to all other considered methods for 1 min time windows and also achieved accuracy (90.5%) comparable to the compared methods for the 10 s time windows, indicating that the proposed method offers higher temporal resolution.

Table 11.

Proposed algorithm comparison with current best-practice algorithms.

Quiceno-Manrique et al. (89.02% accuracy) [16], Nguyen et al. (85.26% accuracy, 86.37% sensitivity, and 83.47% specificity) [17], Sannino et al. (85.76% accuracy, 65.82% sensitivity, and 66.03% specificity) [18], Hassan (87.33% accuracy, 81.99% sensitivity, and 90.72% specificity) [29], and Surrel et al. [30] used HRV features to analyze ECG signals, which had two major disadvantages. First, the HRV frequency band was low, meaning that the time windows had to be extended to ensure that signal variation was properly expressed (e.g., Quiceno-Manrique et al. used 3 min time windows, and HRV features required 5 min windows [16]). OSA onsets and offsets could occur several times within windows of these lengths, significantly limiting the practical applications of these approaches. Second, HRV features use simplified information from the original ECG (QRS complexes), so considerable physiological information, such as ECG signal morphology, could be lost. The same situation occurs for EDR [12], as EDR was derived from the R-wave amplitude, which also used simplified ECG information. Conversely, the proposed algorithm directly used ECG spectrograms to classify SA and normal breathing. Hence, the main advantage of the proposed method is the identification of significant variations in the occurrence of apnea episodes.

Similar to the proposed method, Singh et al. [20] also employed time–frequency transformation using CWT to obtain the scalogram of an ECG signal. The deep learning layers of the pre-trained AlexNet CNN were used for feature extraction. At the final layer, the decision fusion of several machine-learning approaches (SVM, KNN, EL, and Linear Discriminant Analysis (LDA)) was utilized for classification. However, the main difference and advantage of the algorithm in this study is the application of a more sophisticated time–frequency transformation using the Morlet wavelet to observe significant variations between several frequency ranges and obtain high temporal resolution. Moreover, the use of bag-of-features in the proposed method enables robust features, called SURFs, to be generated, and the proposed method outperformed that of Singh et al. with 91.4% accuracy and 92.4% specificity.
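For readers unfamiliar with the transformation, the following is a minimal sketch of a Morlet-wavelet CWT magnitude map (scalogram) computed by direct correlation. It is not the implementation used in this study: the function name `morlet_scalogram`, the wavelet parameter `w0 = 6`, the truncation at four scale widths, the 100 Hz sampling rate, and the synthetic 5 Hz test tone are all assumptions chosen for illustration, and the constant normalization factor of the wavelet is omitted.

```python
import cmath
import math
from typing import List, Sequence


def morlet_scalogram(signal: Sequence[float], fs: float,
                     freqs: Sequence[float], w0: float = 6.0) -> List[List[float]]:
    """Return |CWT| with one row per analysis frequency and one column per sample."""
    n = len(signal)
    rows = []
    for f in freqs:
        scale = w0 / (2.0 * math.pi * f)          # scale (s) whose centre frequency is f
        half = int(math.ceil(4.0 * scale * fs))   # truncate the Gaussian envelope at +/-4 scales
        # Conjugated, scale-normalised complex Morlet wavelet sampled at rate fs.
        kernel = []
        for k in range(-half, half + 1):
            u = k / (fs * scale)
            kernel.append(cmath.exp(-1j * w0 * u) * math.exp(-0.5 * u * u) / math.sqrt(scale))
        row = []
        for i in range(n):
            acc = 0j
            for k in range(-half, half + 1):
                j = i + k
                if 0 <= j < n:                    # samples outside the record count as zero
                    acc += signal[j] * kernel[k + half]
            row.append(abs(acc) / fs)             # 1/fs approximates dt in the integral
        rows.append(row)
    return rows


# Synthetic test tone (assumption): 3 s of a 5 Hz sinusoid sampled at 100 Hz.
FS_HZ = 100.0
tone = [math.sin(2.0 * math.pi * 5.0 * i / FS_HZ) for i in range(300)]
analysis_freqs = [2.0, 5.0, 12.0]
scalogram = morlet_scalogram(tone, FS_HZ, analysis_freqs)

# The row whose analysis frequency matches the tone should dominate mid-record.
mid = len(tone) // 2
peak_row = max(range(len(analysis_freqs)), key=lambda r: scalogram[r][mid])
print("dominant analysis frequency:", analysis_freqs[peak_row], "Hz")
```

In a pipeline of the kind described here, each fixed-length window of such a magnitude map would be rendered as an image before feature extraction; the direct double loop is written for readability, and an FFT-based or library implementation would be the practical choice for overnight recordings.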

4.4. Limitations and Future Developments

Although the proposed algorithm exhibited excellent performance, this study had several limitations. First, a limited sample size from PAED was used to validate the proposed algorithm. Second, insufficient physiological data and subject disease history were available for further analysis because PAED does not provide such information. Mass data collection from the NCKUH Sleep Center could address these two drawbacks. Third, the proposed algorithm could not be applied to patients with cardiovascular disease complications since their ECG spectrograms tended to be somewhat irregular and were not affected by SA alone.

Future work could identify physiological meanings for the OSA features automatically extracted by the AI algorithm, test a large-scale group of participants from the sleep center database, and develop an algorithm to discriminate between SA and cardiovascular disease using ECG data.

5. Conclusions

This paper proposed a new algorithm to classify SA patterns from ECG spectrograms. Four different frequency bands were considered along with three classifiers. High accuracy was obtained when applying time–frequency spectrograms to SA classification, and the features extracted by visual classification revealed previously unknown physiological significance, making SA detection feasible.

The algorithm provided superior accuracy to current common-practice approaches while using generally shorter time windows (60 s) than those used in earlier models. Acceptable accuracy was also obtained for very short time windows (10 s), highlighting the considerable flexibility in potential applications for this algorithm.

Author Contributions

Conceptualization, C.-Y.L. and C.-W.L.; methodology, C.-Y.L., C.-W.L., Y.-W.W. and F.S.; software, Y.-W.W., F.S. and N.T.H.T.; validation, Y.-W.W., F.S. and N.T.H.T.; investigation, C.-Y.L., C.-W.L., Y.-W.W. and F.S.; resources, C.-W.L.; writing—original draft preparation, Y.-W.W. and F.S.; writing—review and editing, C.-Y.L. and C.-W.L.; supervision, C.-Y.L. and C.-W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by grants from National Cheng Kung University Hospital (grant numbers NCKUH-10802018 and NCKUH-10904006).

Institutional Review Board Statement

The study protocol was approved by the Institutional Review Board of NCKUH (protocol number: B-ER-108-426).

Informed Consent Statement

Not applicable.

Data Availability Statement

The Physionet Apnea-ECG Database (PAED) is openly available at Physionet at https://doi.org/10.13026/C23W2R (accessed on 15 October 2021). However, the National Cheng Kung University Hospital Sleep Center Apnea Database (NCKUHSCAD) is not publicly available due to privacy and ethical issues.

Acknowledgments

The authors thank Wen-Kuei Lin, Li-Zhen Lin, Yen-Su Lin, Fu-Hsin Liao, Shin-Ru Hou, and Yi-Chun Lin (the staff of the sleep medicine center at National Cheng Kung University Hospital).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Senaratna, C.V.; Perret, J.L.; Lodge, C.J.; Lowe, A.J.; Campbell, B.E.; Matheson, M.C.; Hamilton, G.S.; Dharmage, S.C. Prevalence of obstructive sleep apnea in the general population: A systematic review. Sleep Med. Rev. 2017, 34, 70–81.

- American Academy of Sleep Medicine. Hidden health crisis costing America billions. In Underdiagnosing and Undertreating Obstructive Sleep Apnea Draining Healthcare System; Frost & Sullivan: Mountain View, CA, USA, 2016.

- Facco, F.L.; Lopata, V.; Wolsk, J.M.; Patel, S.; Wisniewski, S.R. Can We Use Home Sleep Testing for the Evaluation of Sleep Apnea in Obese Pregnant Women? Sleep Disord. 2019, 2019, 3827579.

- Dalewski, B.; Kamińska, A.; Syrico, A.; Kałdunska, A.; Pałka, Ł.; Sobolewska, E. The Usefulness of Modified Mallampati Score and CT Upper Airway Volume Measurements in Diagnosing OSA among Patients with Breathing-Related Sleep Disorders. Appl. Sci. 2021, 11, 3764.

- Philip, P.; Bailly, S.; Benmerad, M.; Micoulaud-Franchi, J.; Grillet, Y.; Sapène, M.; Jullian-Desayes, I.; Joyeux-Faure, M.; Tamisier, R.; Pépin, J. Self-reported sleepiness and not the apnoea hypopnoea index is the best predictor of sleepiness-related accidents in obstructive sleep apnoea. Sci. Rep. 2020, 10, 16267.

- Kukwa, W.; Migacz, E.; Lis, T.; Ishman, S.L. The effect of in-lab polysomnography and home sleep polygraphy on sleep position. Sleep Breath. Schlaf Atm. 2021, 25, 251.

- Young, T.; Palta, M.; Dempsey, J.; Skatrud, J.; Weber, S.; Badr, S. The occurrence of sleep-disordered breathing among middle-aged adults. N. Engl. J. Med. 1993, 328, 1230–1235.

- Guilleminault, C.; Poyares, D.; Rosa, A.; Huang, Y.-S. Heart rate variability, sympathetic and vagal balance and EEG arousals in upper airway resistance and mild obstructive sleep apnea syndromes. Sleep Med. 2005, 6, 451–457.

- Babaeizadeh, S.; Zhou, S.H.; Pittman, S.D.; White, D.P. Electrocardiogram-derived respiration in screening of sleep-disordered breathing. J. Electrocardiol. 2011, 44, 700–706.

- Berntson, G.G.; Thomas Bigger, J., Jr.; Eckberg, D.L.; Grossman, P.; Kaufmann, P.G.; Malik, M.; Nagaraja, H.N.; Porges, S.W.; Saul, J.P.; Stone, P.H. Heart rate variability: Origins, methods, and interpretive caveats. Psychophysiology 1997, 34, 623–648.

- Langley, P.; Bowers, E.J.; Murray, A. Principal component analysis as a tool for analyzing beat-to-beat changes in ECG features: Application to ECG-derived respiration. IEEE Trans. Biomed. Eng. 2009, 57, 821–829.

- Varon, C.; Caicedo, A.; Testelmans, D.; Buyse, B.; Van Huffel, S. A novel algorithm for the automatic detection of sleep apnea from single-lead ECG. IEEE Trans. Biomed. Eng. 2015, 62, 2269–2278.

- Hwang, S.H.; Lee, Y.J.; Jeong, D.-U.; Park, K.S. Apnea–hypopnea index prediction using electrocardiogram acquired during the sleep-onset period. IEEE Trans. Biomed. Eng. 2016, 64, 295–301.

- Van Steenkiste, T.; Groenendaal, W.; Deschrijver, D.; Dhaene, T. Automated sleep apnea detection in raw respiratory signals using long short-term memory neural networks. IEEE J. Biomed. Health Inform. 2018, 23, 2354–2364.

- Penzel, T.; Kantelhardt, J.W.; Grote, L.; Peter, J.-H.; Bunde, A. Comparison of detrended fluctuation analysis and spectral analysis for heart rate variability in sleep and sleep apnea. IEEE Trans. Biomed. Eng. 2003, 50, 1143–1151.

- Quiceno-Manrique, A.; Alonso-Hernandez, J.; Travieso-Gonzalez, C.; Ferrer-Ballester, M.; Castellanos-Dominguez, G. Detection of obstructive sleep apnea in ECG recordings using time-frequency distributions and dynamic features. In Proceedings of the 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis, MN, USA, 3–6 September 2009; IEEE: Manhattan, NY, USA, 2009; pp. 5559–5562.

- Nguyen, H.D.; Wilkins, B.A.; Cheng, Q.; Benjamin, B.A. An online sleep apnea detection method based on recurrence quantification analysis. IEEE J. Biomed. Health Inform. 2013, 18, 1285–1293.

- Sannino, G.; De Falco, I.; De Pietro, G. Monitoring obstructive sleep apnea by means of a real-time mobile system based on the automatic extraction of sets of rules through differential evolution. J. Biomed. Inform. 2014, 49, 84–100.

- Martín-González, S.; Navarro-Mesa, J.L.; Juliá-Serdá, G.; Kraemer, J.F.; Wessel, N.; Ravelo-García, A.G. Heart rate variability feature selection in the presence of sleep apnea: An expert system for the characterization and detection of the disorder. Comput. Biol. Med. 2017, 91, 47–58.

- Singh, S.A.; Majumder, S. A novel approach osa detection using single-lead ECG scalogram based on deep neural network. J. Mech. Med. Biol. 2019, 19, 1950026.

- Thomas, R.J.; Mietus, J.E.; Peng, C.-K.; Goldberger, A.L. An electrocardiogram-based technique to assess cardiopulmonary coupling during sleep. Sleep 2005, 28, 1151–1161.

- Guo, D.; Peng, C.-K.; Wu, H.-L.; Mietus, J.E.; Liu, Y.; Sun, R.-S.; Thomas, R.J. ECG-derived cardiopulmonary analysis of pediatric sleep-disordered breathing. Sleep Med. 2011, 12, 384–389.

- Liu, D.; Yang, X.; Wang, G.; Ma, J.; Liu, Y.; Peng, C.-K.; Zhang, J.; Fang, J. HHT based cardiopulmonary coupling analysis for sleep apnea detection. Sleep Med. 2012, 13, 503–509.

- Penzel, T.; McNames, J.; De Chazal, P.; Raymond, B.; Murray, A.; Moody, G. Systematic comparison of different algorithms for apnoea detection based on electrocardiogram recordings. Med. Biol. Eng. Comput. 2002, 40, 402–407.

- Goldberger, A.L.; Amaral, L.A.N.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.-K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a New Research Resource for Complex Physiologic Signals. Circulation 2003, 101, e215–e220.

- American Academy of Sleep Medicine (AASM). AASM Clarifies Hypopnea Scoring Criteria. 2013. Available online: https://aasm.org/aasm-clarifies-hypopnea-scoring-criteria/ (accessed on 2 December 2021).

- Ruehland, W.R.; Rochford, P.D.; O’Donoghue, F.J.; Pierce, R.J.; Singh, P.; Thornton, A.T. The new AASM criteria for scoring hypopneas: Impact on the apnea hypopnea index. Sleep 2009, 32, 150–157.

- Penzel, T.; Moody, G.B.; Mark, R.G.; Goldberger, A.L.; Peter, J.H. The apnea-ECG database. In Proceedings of the Computers in Cardiology 2000. Vol. 27 (Cat. 00CH37163), Cambridge, MA, USA, 24–27 September 2000; IEEE: Manhattan, NY, USA, 2000; pp. 255–258.

- Hassan, A.R. Computer-aided obstructive sleep apnea detection using normal inverse Gaussian parameters and adaptive boosting. Biomed. Signal Process. Control 2016, 29, 22–30.

- Surrel, G.; Aminifar, A.; Rincón, F.; Murali, S.; Atienza, D. Online obstructive sleep apnea detection on medical wearable sensors. IEEE Trans. Biomed. Circuits Syst. 2018, 12, 762–773.

- Rioul, O.; Duhamel, P. Fast algorithms for discrete and continuous wavelet transforms. IEEE Trans. Inf. Theory 1992, 38, 569–586.

- Thomas, R.J.; Mietus, J.E.; Peng, C.-K.; Gilmartin, G.; Daly, R.W.; Goldberger, A.L.; Gottlieb, D.J. Differentiating obstructive from central and complex sleep apnea using an automated electrocardiogram-based method. Sleep 2007, 30, 1756–1769.

- Csurka, G.; Dance, C.; Fan, L.; Willamowski, J.; Bray, C. Visual categorization with bags of keypoints. In Proceedings of the Workshop on Statistical Learning in Computer Vision, ECCV, Prague, Czech Republic, 11–14 May 2004; pp. 1–2.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Hearst, M.A.; Dumais, S.T.; Osuna, E.; Platt, J.; Scholkopf, B. Support vector machines. IEEE Intell. Syst. Appl. 1998, 13, 18–28.

- Peterson, L.E. K-nearest neighbor. Scholarpedia 2009, 4, 1883.

- Dasarathy, B.V. Nearest Neighbor (NN) Norms: NN Pattern Classification Technique; IEEE Computer Society Tutorial: Ann Arbor, MI, USA, 1991.

- Dietterich, T.G. Ensemble methods in machine learning. In Proceedings of the International Workshop on Multiple Classifier Systems; Springer: Berlin/Heidelberg, Germany, 2000; pp. 1–15.

- Gul, A.; Perperoglou, A.; Khan, Z.; Mahmoud, O.; Miftahuddin, M.; Adler, W.; Lausen, B. Ensemble of a subset of k NN classifiers. Adv. Data Anal. Classif. 2018, 12, 827–840.

- Ho, T.K. The random subspace method for constructing decision forests. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 832–844.

- Bengio, Y.; Grandvalet, Y. No unbiased estimator of the variance of k-fold cross-validation. J. Mach. Learn. Res. 2004, 5, 1089–1105.

- Sadek, I.; Heng, T.T.S.; Seet, E.; Abdulrazak, B. A new approach for detecting sleep apnea using a contactless bed sensor: Comparison study. J. Med. Internet Res. 2020, 22, e18297.

- Singh, H.; Tripathy, R.K.; Pachori, R.B. Detection of sleep apnea from heart beat interval and ECG derived respiration signals using sliding mode singular spectrum analysis. Digit. Signal Process. 2020, 104, 102796.

- Niroshana, S.I.; Zhu, X.; Nakamura, K.; Chen, W. A fused-image-based approach to detect obstructive sleep apnea using a single-lead ECG and a 2D convolutional neural network. PLoS ONE 2021, 16, e0250618.

- Fawcett, T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

- Žemaitytė, D.; Varoneckas, G.; Sokolov, E. Heart rhythm control during sleep. Psychophysiology 1984, 21, 279–289.

- Raetz, S.L.; Richard, C.A.; Garfinkel, A.; Harper, R.M. Dynamic characteristics of cardiac RR intervals during sleep and waking states. Sleep 1991, 14, 526–533.

- Hornyak, M.; Cejnar, M.; Elam, M.; Matousek, M.; Wallin, B.G. Sympathetic muscle nerve activity during sleep in man. Brain 1991, 114, 1281–1295.

- Somers, V.K.; Dyken, M.E.; Mark, A.L.; Abboud, F.M. Sympathetic-nerve activity during sleep in normal subjects. N. Engl. J. Med. 1993, 328, 303–307.

- Valoni, E.; Adamson, P.; Piann, G. A comparison of healthy subjects with patients after myocardial infarction. Circulation 1995, 91, 1918–1922.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).