Validity and Reproducibility of the Peer Assessment Rating Index Scored on Digital Models Using a Software Compared with Traditional Manual Scoring

Abstract

1. Introduction

2. Materials and Methods

2.1. Sample Size Calculation

2.2. Setting

2.3. Sample Collection

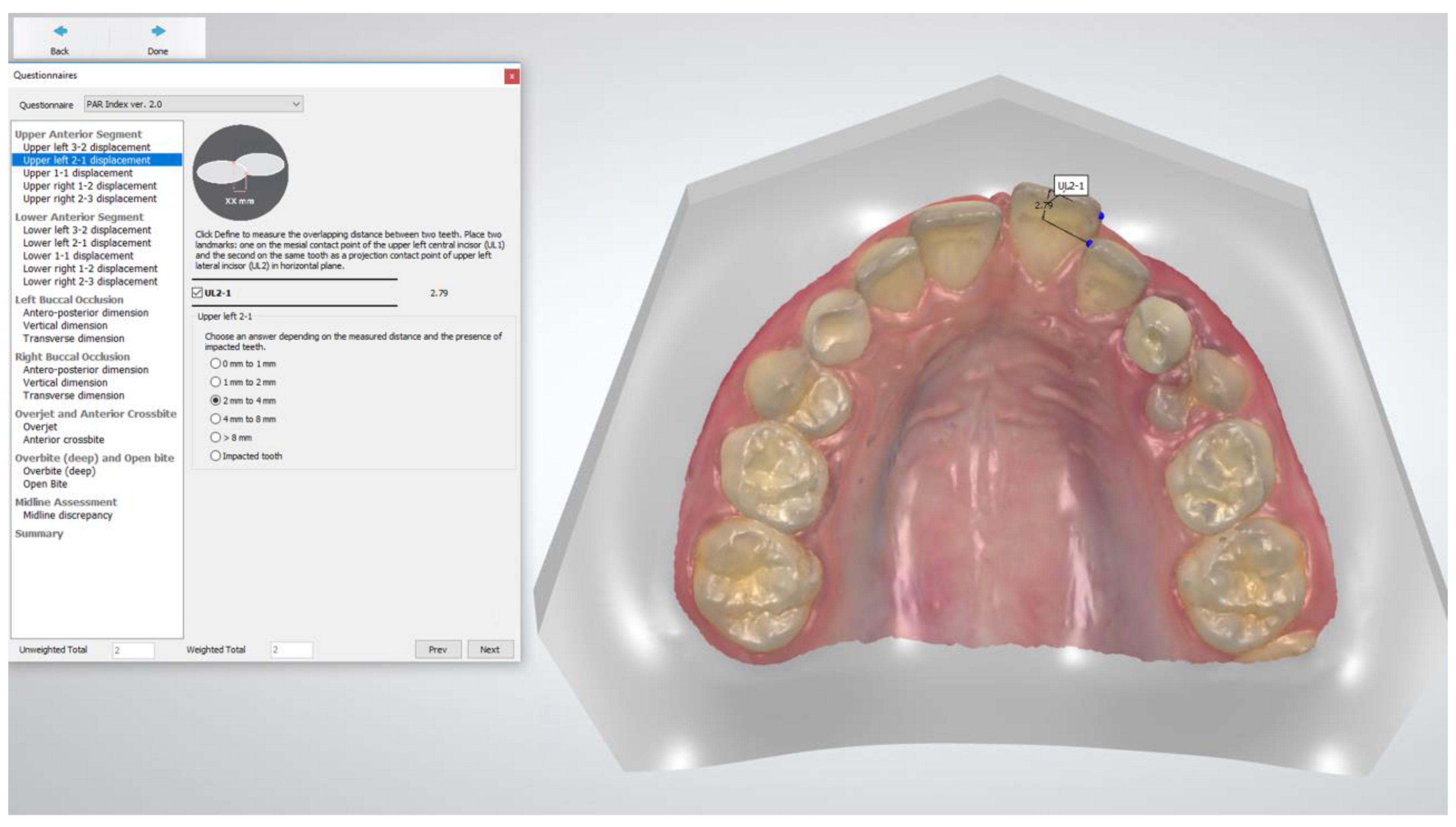

2.4. Measurements

2.5. Statistical Analyses

3. Results

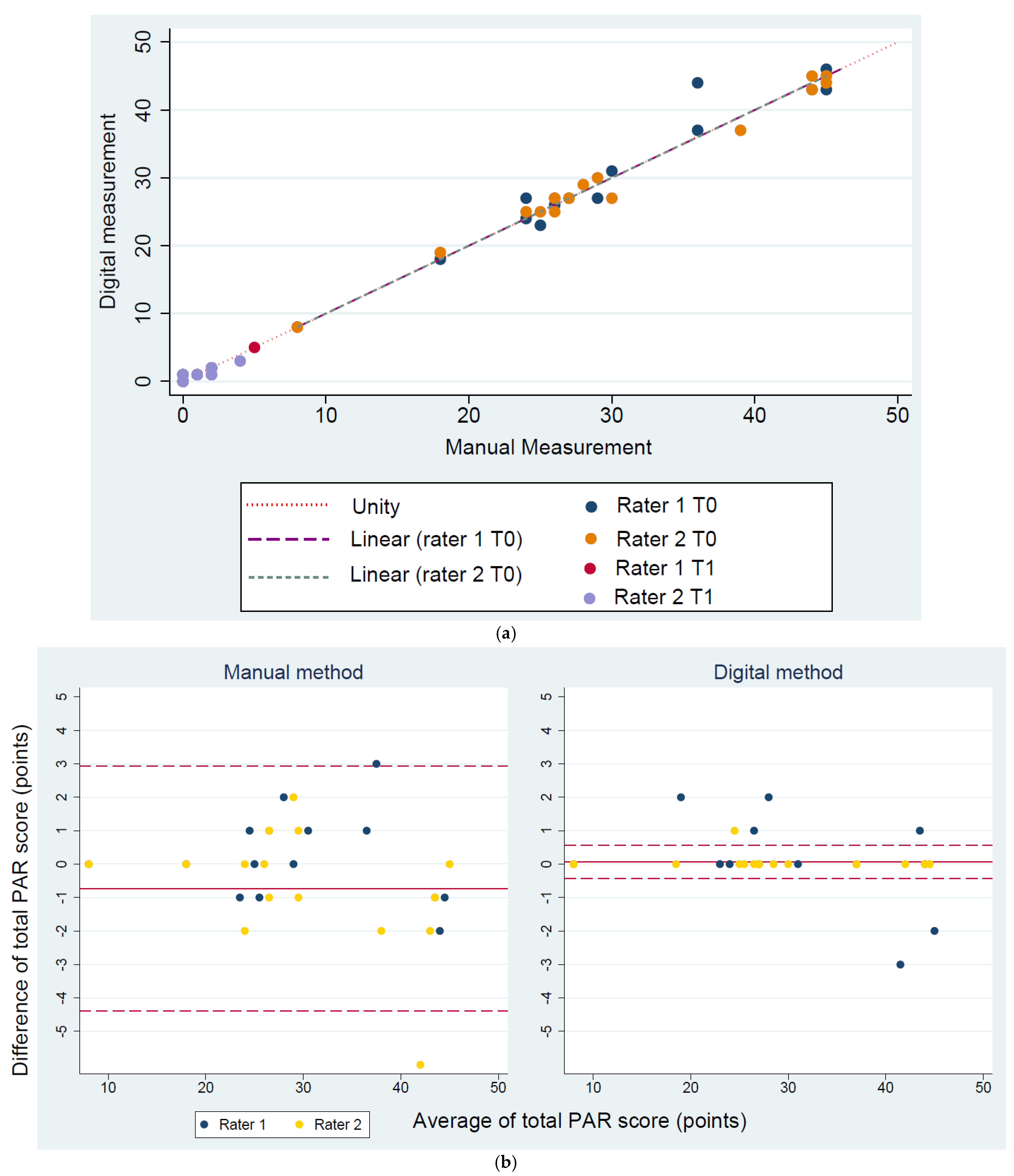

3.1. Validity

3.2. Reproducibility

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| No. | PAR Component | Assessment | Scoring | Weighting |
|---|---|---|---|---|
| 1 | Anterior † | Contact point displacement | 0–4 | × 1 |
| | | Impacted incisors/canines | 5 | |
| 2 | Posterior ‡ | Sagittal occlusion | 0–2 | × 1 |
| | | Vertical occlusion | 0–1 | |
| | | Transverse occlusion | 0–4 | |
| 3 | Overjet § | Overjet | 0–4 | × 6 |
| | | Anterior crossbite | 0–4 | |
| 4 | Overbite ¶ | Overbite | 0–3 | × 2 |
| | | Open bite | 0–4 | |
| 5 | Centerline | Deviation from dental midline | 0–2 | × 4 |
| Total | | | Unweighted PAR score | Weighted PAR score |
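The weighted total in the table above is each component's unweighted sum multiplied by its weighting, added across the five components. The sketch below assumes the component sums have already been scored; the dictionary keys and the function name `weighted_par` are hypothetical, and the weights are copied from the table rather than from any scoring software.

```python
from __future__ import annotations

# Weightings copied from the PAR component table:
# anterior x1, posterior x1, overjet x6, overbite x2, centerline x4.
PAR_WEIGHTS = {
    "anterior": 1,
    "posterior": 1,
    "overjet": 6,
    "overbite": 2,
    "centerline": 4,
}


def weighted_par(unweighted: dict[str, int]) -> int:
    """Return the weighted PAR total from unweighted component sums.

    `unweighted` maps each (hypothetical) component key to its summed raw
    score, e.g. all contact point displacements for "anterior".
    """
    missing = PAR_WEIGHTS.keys() - unweighted.keys()
    if missing:
        raise ValueError(f"missing components: {sorted(missing)}")
    # Multiply each component by its weighting and add them up.
    return sum(PAR_WEIGHTS[name] * unweighted[name] for name in PAR_WEIGHTS)


# Hypothetical patient, not study data.
example = {"anterior": 6, "posterior": 1, "overjet": 2, "overbite": 1, "centerline": 1}
print(sum(example.values()), weighted_par(example))  # 11 25
```

Here the unweighted total is 11, while the weighted total is 25, because the overjet score counts six times.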

| PAR Index Scoring | Timepoint | Scoring Method | Rater I Mean (SD) ᵃ | Rater I Err. Method *,ᵃ | Rater I MSD ᵃ | Rater I ICC | Rater I [95% CI] | Rater I p-Value (Paired t-Test) | Rater II Mean (SD) ᵃ | Rater II Err. Method *,ᵃ | Rater II MSD ᵃ | Rater II ICC | Rater II [95% CI] | Rater II p-Value (Paired t-Test) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Total PAR | T0 | Manual | 29.5 (10.1) | 0.9 | 10.1 | 0.99 | 0.99–1.00 | 0.16 | 30.5 (10.7) | 1.4 | 10.1 | 0.99 | 0.98–1.00 | 0.74 |
| | | Digital | 30.2 (10.5) | 0.9 | 10.1 | 1.00 | 0.99–1.00 | | 30.4 (10.4) | 0.6 | 10.3 | 1.00 | 0.99–1.00 | |
| | T1 | Manual | 1.1 (1.4) | 0.2 | 1.4 | 0.99 | 0.99–1.00 | 0.72 | 1.2 (1.3) | 0.4 | 1.2 | 0.95 | 0.84–0.98 | 0.49 |
| | | Digital | 1.2 (1.3) | 0.2 | 1.1 | 0.98 | 0.94–0.99 | | 1.0 (0.9) | 0.2 | 1.0 | 0.98 | 0.95–0.99 | |
| Lower anterior | T0 | Manual | 4.1 (4.0) | 0.5 | 4.0 | 0.99 | 0.99–1.00 | 0.12 | 4.4 (4.2) | 0.6 | 4.1 | 0.99 | 0.97–1.00 | 0.60 |
| | | Digital | 4.4 (3.6) | 0.2 | 3.5 | 1.00 | 0.99–1.00 | | 4.5 (3.7) | 0.4 | 3.7 | 0.99 | 0.98–1.00 | |
| | T1 | Manual | 0.3 (0.6) | 0.0 | 0.6 | 1.00 | - | 0.16 | 0.3 (0.6) | 0.0 | 0.6 | 1.00 | - | 0.16 |
| | | Digital | 0.1 (0.3) | 0.0 | 0.4 | 1.00 | - | | 0.1 (0.3) | 0.0 | 0.4 | 1.00 | - | |
| Upper anterior | T0 | Manual | 6.1 (2.8) | 0.6 | 2.6 | 0.97 | 0.92–0.99 | 0.45 | 6.3 (2.8) | 0.7 | 2.6 | 0.98 | 0.93–0.99 | 0.50 |
| | | Digital | 6.3 (2.8) | 0.7 | 2.6 | 0.96 | 0.89–0.99 | | 6.4 (2.7) | 0.6 | 2.6 | 0.98 | 0.95–0.99 | |
| | T1 | Manual | 0.2 (0.4) | 0.0 | 0.4 | 1.00 | - | 0.19 | 0.2 (0.4) | 0.0 | 0.4 | 1.00 | - | 0.19 |
| | | Digital | 0.3 (0.5) | 0.1 | 0.5 | 1.00 | - | | 0.3 (0.5) | 0.1 | 0.5 | 1.00 | - | |
| Posterior | T0 | Manual | 1.3 (1.5) | 0.4 | 1.4 | 0.97 | 0.91–0.99 | 0.16 | 1.4 (1.5) | 0.0 | 1.5 | 1.00 | - | 0.33 |
| | | Digital | 1.5 (1.6) | 0.0 | 1.6 | 1.00 | - | | 1.5 (1.6) | 0.0 | 1.6 | 1.00 | - | |
| | T1 | Manual | 0.2 (0.6) | 0.2 | 0.6 | 0.95 | 0.84–0.98 | 0.67 | 0.2 (0.6) | 0.0 | 0.6 | 1.00 | - | - |
| | | Digital | 0.3 (0.6) | 0.0 | 1.5 | 1.00 | - | | 0.3 (0.6) | 0.0 | 0.6 | 1.00 | - | |
| Overjet | T0 | Manual | 12.0 (7.5) | 0.0 | 7.5 | 1.00 | - | - | 12.0 (7.5) | 0.0 | 7.5 | 1.00 | - | - |
| | | Digital | 12.0 (7.5) | 0.0 | 7.5 | 1.00 | - | | 12.0 (7.5) | 0.0 | 7.5 | 1.00 | - | |
| | T1 | Manual | 0.0 (0.0) | 0.0 | 0.0 | ** - | - | - | 0.0 (0.0) | 0.0 | 0.0 | - | - | - |
| | | Digital | 0.0 (0.0) | 0.0 | 0.0 | ** - | - | | 0.0 (0.0) | 0.0 | 0.0 | - | - | |
| Overbite | T0 | Manual | 3.1 (1.8) | 0.0 | 1.8 | 1.00 | - | 0.33 | 2.9 (1.9) | 0.5 | 1.8 | 0.96 | 0.89–0.99 | 1.00 |
| | | Digital | 3.0 (1.8) | 0.4 | 1.8 | 0.98 | 0.94–0.99 | | 2.9 (1.8) | 0.0 | 1.8 | 1.00 | - | |
| | T1 | Manual | 0.3 (0.7) | 0.5 | 0.7 | 1.00 | - | - | 0.3 (0.7) | 0.0 | 0.7 | 1.00 | - | - |
| | | Digital | 0.3 (0.7) | 0.5 | 0.7 | 1.00 | - | | 0.3 (0.7) | 0.4 | 0.5 | 1.00 | - | |
| Centerline | T0 | Manual | 2.1 (3.0) | 0.0 | 3.0 | 1.00 | 1.00–1.00 | 0.33 | 2.1 (3.0) | 0.0 | 3.0 | 1.00 | 1.00–1.00 | 0.33 |
| | | Digital | 2.0 (2.7) | 0.8 | 2.6 | 0.96 | 0.89–0.99 | | 1.9 (2.6) | 0.0 | 2.6 | 1.00 | - | |
| | T1 | Manual | 0.0 (0.0) | 0.0 | 0.0 | ** - | - | - | 0.0 (0.0) | 0.0 | 0.0 | - | - | - |
| | | Digital | 0.0 (0.0) | 0.0 | 0.0 | ** - | - | | 0.0 (0.0) | 0.0 | 0.0 | - | - | |
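The results tables report a method error ("Err. Method") and paired t-test p-values for repeated scorings, but their formulas are not given here. Assuming "Err. Method" is Dahlberg's method error, sqrt(Σd²/2n) over the differences d between two scorings of the same models (a common choice for duplicate orthodontic measurements, not confirmed by this section), both it and the paired t statistic can be sketched as follows. The function names and the example scores are hypothetical.

```python
import math
import statistics
from typing import Optional, Sequence


def dahlberg_error(first: Sequence[float], second: Sequence[float]) -> float:
    """Dahlberg's method error, sqrt(sum(d_i^2) / (2n)), for paired repeats.

    Assumption: this is what the tables label "Err. Method".
    """
    if len(first) != len(second) or not first:
        raise ValueError("need two non-empty series of equal length")
    diffs = [a - b for a, b in zip(first, second)]
    return math.sqrt(sum(d * d for d in diffs) / (2 * len(diffs)))


def paired_t_statistic(first: Sequence[float], second: Sequence[float]) -> Optional[float]:
    """Paired t statistic, mean(d) / (sd(d) / sqrt(n)).

    Returns None when every difference is identical (sd(d) == 0),
    because t cannot then be computed.
    """
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two series of equal length >= 2")
    diffs = [a - b for a, b in zip(first, second)]
    sd = statistics.stdev(diffs)  # sample SD, n - 1 denominator
    if sd == 0:
        return None
    return statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))


# Hypothetical repeated total PAR scores for four models (not study data).
first_scoring = [30, 25, 12, 40]
second_scoring = [31, 25, 12, 38]
print(round(dahlberg_error(first_scoring, second_scoring), 3))  # 0.791
```

The p-value additionally needs the t distribution with n - 1 degrees of freedom, which the standard library lacks; `scipy.stats.ttest_rel(first, second)` returns both the statistic and the two-sided p-value.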

| PAR Index Scoring | Timepoint | Scoring Method | Rater I Mean (SD) ᵃ | Rater II Mean (SD) ᵃ | Rater I vs. II Err. Method ᵃ,* | Rater I vs. II MSD ᵃ | Rater I vs. II ICC | Rater I vs. II [95% CI] | Rater I vs. II p-Value (Paired t-Test) |
|---|---|---|---|---|---|---|---|---|---|
| Total PAR | T0 | Manual | 29.6 (10.0) | 30.3 (9.72) | 1.8 | 10.3 | 0.99 | 0.99–1.00 | 0.20 |
| | | Digital | 30.2 (10.2) | 30.4 (10.2) | 0.8 | 10.6 | 1.00 | 0.99–1.00 | 0.55 |
| | T1 | Manual | 1.1 (1.4) | 1.1 (1.4) | 0.2 | 1.2 | 0.99 | 0.96–0.99 | 1.00 |
| | | Digital | 1.1 (1.2) | 1.0 (1.0) | 0.4 | 1.0 | 0.98 | 0.95–0.99 | 0.26 |
| Lower anterior | T0 | Manual | 4.1 (4.0) | 4.5 (4.4) | 0.6 | 4.0 | 0.99 | 0.98–1.00 | 0.06 |
| | | Digital | 4.4 (3.6) | 4.5 (3.7) | 0.7 | 3.5 | 0.99 | 0.96–0.99 | 0.64 |
| | T1 | Manual | 0.3 (0.6) | 0.3 (0.4) | 0.0 | 0.6 | 1.00 | - | - |
| | | Digital | 0.0 (0.1) | 0.1 (0.2) | 0.0 | 0.3 | 1.00 | - | - |
| Upper anterior | T0 | Manual | 6.1 (2.8) | 6.3 (2.8) | 0.5 | 2.8 | 0.99 | 0.97–1.00 | 0.33 |
| | | Digital | 6.4 (2.8) | 6.4 (2.7) | 0.6 | 2.7 | 0.99 | 0.98–1.00 | 0.38 |
| | T1 | Manual | 0.1 (0.3) | 0.1 (0.2) | 0.3 | 0.3 | 1.00 | 0.49–0.94 | - |
| | | Digital | 0.4 (0.7) | 0.1 (0.3) | 0.6 | 0.3 | 1.00 | 0.64–0.96 | - |
| Posterior | T0 | Manual | 1.3 (1.4) | 1.4 (1.5) | 0.4 | 1.4 | 0.98 | 0.93–0.99 | 0.58 |
| | | Digital | 1.5 (1.6) | 1.5 (1.6) | 0.0 | 1.6 | 1.00 | - | - |
| | T1 | Manual | 0.2 (0.6) | 0.2 (0.6) | 0.0 | 0.6 | 0.99 | 0.96–0.99 | 0.33 |
| | | Digital | 0.3 (0.6) | 0.2 (0.6) | 0.2 | 0.6 | 0.95 | 0.84–0.98 | 0.33 |
| Overjet | T0 | Manual | 12.0 (7.5) | 12.0 (7.5) | 0.0 | 7.5 | 1.00 | - | - |
| | | Digital | 12.0 (7.5) | 12.0 (7.5) | 0.0 | 7.5 | 1.00 | - | - |
| | T1 | Manual | 0.0 (0.0) | 0.0 (0.0) | 0.0 | 0.0 | ** - | - | - |
| | | Digital | 0.0 (0.0) | 0.0 (0.0) | 0.0 | 0.0 | ** - | - | - |
| Overbite | T0 | Manual | 3.1 (1.8) | 3.1 (1.8) | 0.4 | 1.8 | 0.99 | 0.97–1.00 | 0.16 |
| | | Digital | 3.1 (1.8) | 2.9 (1.8) | 0.4 | 1.8 | 0.97 | 0.92–0.99 | 0.67 |
| | T1 | Manual | 0.3 (0.7) | 0.3 (0.7) | 0.0 | 0.5 | 1.00 | - | - |
| | | Digital | 0.3 (0.7) | 0.3 (0.7) | 0.0 | 0.5 | 1.00 | - | - |
| Centerline | T0 | Manual | 2.1 (3.0) | 2.1 (3.0) | 0.0 | 3.0 | 1.00 | - | - |
| | | Digital | 2.1 (3.0) | 1.8 (2.5) | 0.8 | 2.6 | 0.99 | 0.97–1.00 | 0.33 |
| | T1 | Manual | 0.0 (0.0) | 0.0 (0.0) | 0.0 | 0.0 | ** - | - | - |
| | | Digital | 0.0 (0.0) | 0.0 (0.0) | 0.0 | 0.0 | ** - | - | - |
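Both results tables summarise agreement with an intraclass correlation coefficient (ICC), but the ICC model is not stated in this section. As an assumption, the sketch below computes the two-way random-effects, absolute-agreement, single-rater form, ICC(2,1) in Shrout and Fleiss's notation, from a matrix with one row per model and one column per rater (or per scoring session). The function name `icc_2_1` is hypothetical, and the 95% confidence interval, which needs the F distribution, is left out.

```python
from __future__ import annotations

from typing import Optional


def icc_2_1(ratings: list[list[float]]) -> Optional[float]:
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings[i][j] is the score given to subject i by rater j.
    Returns None when the denominator is zero (no variance at all).
    """
    n = len(ratings)
    k = len(ratings[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need >= 2 subjects, >= 2 raters, rectangular data")

    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA sums of squares: subjects (rows), raters (columns), residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denom = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denom == 0:
        return None
    return (ms_rows - ms_error) / denom
```

Because this form penalises systematic offsets between raters (the `ms_cols` term), it is stricter than a consistency ICC; if the study used a different model, the values will not match. Packages such as pingouin (`intraclass_corr`) report several ICC forms together with their confidence intervals.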

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gera, A.; Gera, S.; Dalstra, M.; Cattaneo, P.M.; Cornelis, M.A. Validity and Reproducibility of the Peer Assessment Rating Index Scored on Digital Models Using a Software Compared with Traditional Manual Scoring. J. Clin. Med. 2021, 10, 1646. https://doi.org/10.3390/jcm10081646
