Abstract

Brain–Computer Interfaces (BCIs) offer a non-invasive pathway for restoring motor function, particularly for individuals with limb loss. This review explores the effectiveness of Electroencephalography (EEG) and functional Near-Infrared Spectroscopy (fNIRS) in decoding Motor Imagery (MI) movements for both offline and online BCI systems. EEG has been the dominant non-invasive neuroimaging modality due to its high temporal resolution and accessibility; however, it is highly susceptible to electrical noise and motion artifacts, particularly in real-world settings. fNIRS offers improved robustness to electrical and motion noise, making it increasingly viable for prosthetic control tasks; however, it is subject to an inherent physiological delay. The review categorizes experimental approaches by modality, paradigm, and study type, highlighting the methods used for signal acquisition, feature extraction, and classification. Results show that while offline studies achieve higher classification accuracy because they face fewer time constraints and allow richer data processing, recent advancements in machine learning, particularly deep learning, have improved the feasibility of online MI decoding. Hybrid EEG–fNIRS systems further enhance performance by combining the temporal precision of EEG with the spatial specificity of fNIRS. Overall, the review finds that predicting imagined movement online is feasible, though still less reliable than motor execution, and that continued improvements in neuroimaging integration and classification methods are essential for real-world BCI applications. Broader dissemination of recent advancements in MI-based BCI research is expected to stimulate further interdisciplinary collaboration among roboticists, neuroscientists, and clinicians, accelerating progress toward practical and transformative neuroprosthetic technologies.

1. Introduction

With advancements in neuroimaging techniques, BCIs are becoming more accessible and affordable for a wider range of individuals [1,2]. BCIs allow external devices to be controlled using brain signals, enabling individuals to interact with their environment in ways that were previously impossible. This technology has the potential to significantly improve the quality of life of people with mobility impairments or limb loss. One of the main challenges for BCI-based applications is the need for accurate and reliable signal processing techniques. The quality of brain signals can be affected by various factors, including noise, artifacts, and individual differences in brain activity. Developing robust signal processing methods is therefore crucial for improving the performance of BCI systems [3].

Two modalities were chosen for this review: Electroencephalography (EEG) and functional Near-Infrared Spectroscopy (fNIRS). They were chosen for their non-invasive nature, portability, and ability to provide real-time feedback, which make them suitable for practical applications in BCI-based prosthetic control [1,2]. Both modalities are also widely used in BCI research and have shown promise in decoding motor intentions from brain signals [4]. EEG measures electrical activity in the brain through electrodes placed on the scalp, while fNIRS measures changes in blood oxygenation in the brain using near-infrared light. Both modalities have their own advantages and limitations, which are discussed in detail later in this review.

BCIs are controlled using cognitive or motor tasks, such as imagining a movement or performing mental calculations. These tasks generate specific patterns of brain activity that the BCI system can detect and interpret. Whilst motor imagery and motor execution tasks share similar components in the motor pathways [5], subjects often struggle with motor-imagery-based approaches; for example, motor imagery tasks are often found to take longer to complete than motor execution tasks [6]. For BCI-based control of a prosthetic limb, the user will typically perform a motor imagery task, because the loss of the limb makes it impossible to execute the movement physically. This presents a unique challenge for BCI systems, which must accurately decode the user’s intentions from imagined rather than actual physical movements [7].

Prostheses are used by people who have lost a limb through amputation due to illness, accident, or trauma, or who were born without a limb; amputation causes a loss of motor control and sensory feedback, which can significantly impact quality of life [8,9,10]. The integration of BCIs with prosthetic limbs has been a significant focus of research, as it allows for more intuitive control and better functionality. By decoding brain signals, BCIs can translate the user’s intentions into actions performed by the prosthetic limb, providing a more natural and efficient way to interact with the world [11,12,13]. Upper-limb prostheses are typically controlled through myoelectric signals, the electrical signals generated by muscle contractions. However, these control systems can limit a user's control and dexterity. To improve the functionality of prosthetic limbs, researchers have been exploring the use of BCIs to provide more direct and intuitive control.

To operate a BCI system, a user must learn a new skill. BCI skills are similar to naturally learned skills in that they require practice and adaptation to achieve proficiency. Even the simplest skill can take many hours to learn, and the speed of learning depends on the individual [4,14]. With training and practice, users can improve their dexterity and control of a BCI device. Preliminary studies have classified discrete movement classes but noted a lack of continuous kinematic reconstruction [15,16].

Within BCI research, experiments are commonly classified as either offline or online. Offline experiments involve collecting brain signal data and analyzing it after the session has ended, allowing researchers to develop and validate signal processing and classification algorithms without the constraints of real-time processing. In contrast, online experiments require the system to process brain signals and provide feedback or control external devices in real time, closely simulating practical, real-world applications. While offline experiments are essential for algorithm development and benchmarking, online experiments are crucial for evaluating the usability, robustness, and responsiveness of BCI systems in dynamic environments. Both approaches play a vital role in advancing BCI technology for prosthetic control.

This review aims to explore the current state of EEG and fNIRS-based BCI technology, focusing on how well the modalities can be used to predict imagined movement for offline and online applications. The review will also discuss the challenges and limitations of these modalities, as well as the potential for future research and development in this area.

2. Methods

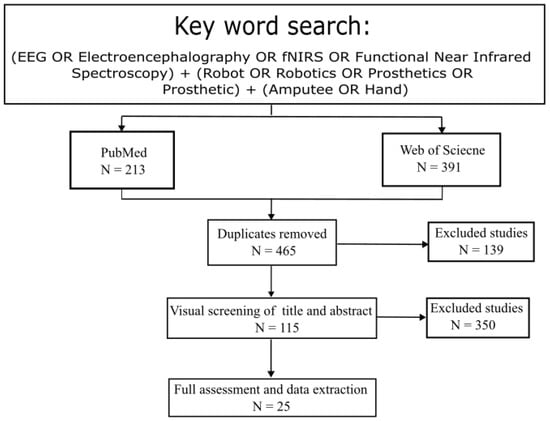

In this study, a two-stage literature search was performed to identify the relevant investigations. The first stage was an online search of two popular databases, PubMed and Web of Science, for peer-reviewed articles published between January 2017 and September 2024 using the following keywords: (EEG OR Electroencephalography OR fNIRS OR Functional Near Infrared Spectroscopy) + (Robot OR Robotics OR Prosthetics OR Prosthetic) + (Amputee OR Hand). In the second stage, additional literature was identified through the reference lists of selected studies and relevant review papers. The aim of the two-stage search was to make the set of identified studies as comprehensive as possible. As shown in Figure 1, the search returned 604 studies, 213 from PubMed and 391 from Web of Science. After removing 139 duplicates and 350 studies that were not related to this review (i.e., review studies, stroke rehabilitation, autonomous navigation, or studies lacking fNIRS or EEG), 105 articles remained.

Figure 1.

Overview of literature search for review.

Figure 2 presents the distribution of studies by neuroimaging modality: EEG, fNIRS, or a combination of both. EEG has remained the dominant technique across all years, while fNIRS-only studies have consistently been in the minority. Hybrid EEG–fNIRS studies, although limited in number, highlight a growing interest in leveraging the complementary strengths of both modalities. The continued preference for EEG may reflect its maturity and availability, but the increasing proportion of studies using fNIRS suggests a rising recognition of its value, particularly in hybrid systems aimed at enhancing classification performance and reducing signal noise.

Figure 2.

Papers published, grouped by neuroimaging modality.

3. Background

For this review, the applications considered are those that use non-invasive technology to acquire brain activity for a BCI device. BCI devices allow an external device to be controlled by interpreting signals extracted from the central nervous system [17,18,19], and fall into two categories: invasive and non-invasive. One of the challenges of non-invasive neuroimaging techniques is that deep neural activity in the brain cannot be measured reliably; as Section 3.3 will discuss, it is difficult to control BCI devices through imagined movements without many hours of BCI training. This difficulty can be compounded in BCI applications for amputees, as limb amputation causes immense emotional trauma and stress, and the loss of a limb rewires the neural pathways in the brain [20,21].

3.1. EEG

EEG is a widely used neuroimaging technique that measures electrical activity in the brain through electrodes placed on the scalp [22,23,24,25]. EEG records the electrical potentials generated by activation of the cerebral cortex, which can reflect either spontaneous or evoked brain activity [26]. The recorded signals form a time series acquired through non-invasive electrodes (often referred to as channels) placed on the subject's scalp [27,28,29]. For accurate, reproducible recordings, electrode positioning follows standard placement systems such as the 10–20 or 10–10 International System. These systems define the distances between electrodes relative to anatomical landmarks, such as the distance from the nasion to the inion and the distance between the left and right preauricular points [28,30]. Electrodes are thus placed according to the head's anatomy; however, neural activations are not always recorded uniformly, which can lead to imprecise recordings [31].
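
In the 10–20 system, the "10" and "20" refer to adjacent electrodes being 10% or 20% of the total front-to-back or left-to-right landmark distance apart. The minimal sketch below applies this to the midline electrodes only (Fpz, Fz, Cz, Pz, Oz); the function name and the 36 cm example head are hypothetical, and a real montage also needs the lateral chains.

```python
# Fractions of the nasion-inion arc at which the standard 10-20 midline
# electrodes sit: 10% from the nasion, then 20% steps, leaving 10% before the inion.
MIDLINE_FRACTIONS = {
    "Fpz": 0.10,
    "Fz": 0.30,
    "Cz": 0.50,
    "Pz": 0.70,
    "Oz": 0.90,
}


def midline_positions(nasion_inion_cm: float) -> dict[str, float]:
    """Return each midline electrode's distance (cm) from the nasion,
    measured along the scalp arc, for a given nasion-inion arc length."""
    if nasion_inion_cm <= 0:
        raise ValueError("nasion-inion distance must be positive")
    return {
        name: round(frac * nasion_inion_cm, 2)
        for name, frac in MIDLINE_FRACTIONS.items()
    }


if __name__ == "__main__":
    # Hypothetical head with a 36 cm nasion-inion arc.
    for name, dist in midline_positions(36.0).items():
        print(f"{name}: {dist} cm from nasion")
```

Because positions scale with the measured arc, Cz always lands at the midpoint (the vertex), which is what makes recordings comparable across head sizes.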

EEG is not a one-size-fits-all solution for recording brain activity, as it has several limitations. EEG signals are easily affected by noise and therefore usually have a low signal-to-noise ratio [32,33]. This is a problem for real-world applications, where it is not possible to block interference from sources such as Bluetooth, Wi-Fi, and cellular data [34]. Furthermore, signal quality in EEG recordings is highly dependent on the electrode-scalp interface. To optimise conductivity and reduce impedance, it is often necessary to cleanse the scalp and apply conductive materials such as NeuroPrep gel or Ten20 paste [27]. While this preparation enhances signal fidelity, it can leave residual substances on the scalp and hair, necessitating post-session cleaning.

3.2. fNIRS

fNIRS is a non-invasive neuroimaging technique that uses near-infrared light to measure changes in oxygenated and deoxygenated hemoglobin (HbO/HbR) levels in a targeted brain region, which arise as a consequence of neurovascular coupling. When a specific brain region becomes active due to cognitive or motor processes, its metabolic demand for oxygen and glucose increases [4,35]. Initially, this results in a transient increase in local oxygen consumption, leading to a slight elevation in HbR concentration. To compensate for this increased metabolic demand, the body then initiates a rapid, localized increase in cerebral blood flow, and the resulting hemoglobin changes are measured by the fNIRS optodes [9,36]. A source emits near-infrared light into the scalp, while detectors positioned a few centimeters away pick up the light returning after it has passed through the cortical tissue. As the light travels through biological tissue, it is scattered, repeatedly changing direction. Oxygenated and deoxygenated blood absorb the light differently, so the amount of light absorbed enables the system to track blood oxygenation. This provides an indirect measure of brain activity through the changes in blood flow and oxygenation that occur in response to neural demand.
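
The conversion from absorbed light to hemoglobin changes is commonly done with the modified Beer–Lambert law (the method Ortega & Faisal [80] report using), which for each wavelength λ states ΔOD(λ) = [ε_HbO(λ)·Δ[HbO] + ε_HbR(λ)·Δ[HbR]] · d · DPF(λ), where d is the source-detector distance and DPF the differential pathlength factor. With two wavelengths this is a 2×2 linear system. The minimal sketch below solves it by Cramer's rule; the extinction coefficients, the 760/850 nm wavelengths, and the DPF values are placeholders chosen only to reflect that HbR absorbs more near 760 nm and HbO more near 850 nm, and must be replaced with tabulated values for a real device.

```python
import math

# Placeholder extinction coefficients in 1/(mM*cm). Illustrative only;
# substitute tabulated values for the device's actual wavelengths.
EXTINCTION = {
    760: {"HbO": 1.5, "HbR": 3.8},
    850: {"HbO": 2.5, "HbR": 1.8},
}


def delta_od(intensity: float, baseline: float) -> float:
    """Change in optical density relative to a baseline intensity."""
    return -math.log10(intensity / baseline)


def mbll(d_od: dict[int, float], distance_cm: float,
         dpf: dict[int, float]) -> tuple[float, float]:
    """Solve the two-wavelength modified Beer-Lambert system for
    (delta HbO, delta HbR) in mM.

    For each wavelength: dOD = (eps_HbO * dHbO + eps_HbR * dHbR) * distance * DPF
    """
    if len(d_od) != 2:
        raise ValueError("exactly two wavelengths are required")
    w1, w2 = sorted(d_od)
    # Divide out the effective path length so each row reads eps . [dHbO, dHbR].
    b1 = d_od[w1] / (distance_cm * dpf[w1])
    b2 = d_od[w2] / (distance_cm * dpf[w2])
    a, b = EXTINCTION[w1]["HbO"], EXTINCTION[w1]["HbR"]
    c, d = EXTINCTION[w2]["HbO"], EXTINCTION[w2]["HbR"]
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("extinction matrix is singular")
    # Cramer's rule for the 2x2 system.
    d_hbo = (b1 * d - b * b2) / det
    d_hbr = (a * b2 - b1 * c) / det
    return d_hbo, d_hbr
```

In use, `delta_od` is applied to each detector's raw intensity at both wavelengths, and the two ΔOD values are passed to `mbll` together with the source-detector distance (for example, a hypothetical 3 cm) and a DPF per wavelength.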

Because fNIRS measures the hemodynamic response rather than electrical activity, it is less susceptible to the movement artifacts, such as eye blinks, that are prominent in other neuroimaging techniques. Nevertheless, fNIRS data are commonly inspected visually so that any identified artifacts can be corrected [37]. fNIRS is also better suited to real-world environments [38]: electrical signals do not affect the data because the returning light is carried by flexible fiber-optic cables, which allow neuroimaging experiments to be conducted while participants perform tasks such as standing or walking [9,39].

In fNIRS, the light does not penetrate deeply into the brain. fNIRS can only image cortical tissue, with a maximum depth of 2–3 cm and a typical depth of around 1.5 cm [40,41].

3.3. Motor Imagery

Motor Imagery (MI) is the mental simulation of body movements: the user consciously accesses the motor representation of a movement and intends to make it, without physically executing it [35,42]. Numerous studies have implemented MI as a means of controlling external BCI prosthetics, with common movement classes of hand opening, hand closing, wrist flexion, and wrist extension [16,43,44,45].

MI-based BCIs are a good non-invasive alternative for applications such as prosthetics because current myoelectric methods rely on residual muscles in the forearm, which can make it difficult to identify the precise action intended by the user, especially after an amputation in which nerves have been damaged and repaired [7,10]. For fine motor control of wrist and finger movements, MI is more challenging to identify and reproduce, as the corresponding region of interest is a much smaller area of activation in the sensorimotor cortex. This is further compounded by the lower spatial resolution of non-invasive neuroimaging techniques [45].

EEG signals from the primary sensorimotor cortex contain oscillations known as sensorimotor rhythms, which are predominantly observed in the μ and β frequency bands. These rhythms undergo event-related amplitude modulations classified as event-related desynchronization (ERD) and event-related synchronization (ERS). ERD refers to a decrease in rhythmic amplitude and is commonly associated with cortical activation, whereas ERS represents an increase in rhythmic amplitude, generally corresponding to cortical idling or inhibition [14]. Here, the event is any cognitive, motor, or sensory signal strong enough to register as a neural response. Sensorimotor rhythms are not the only EEG features used in BCIs: evoked potentials are characterized by stereotyped, time-locked positive and negative deflections in EEG voltage. Some EEG paradigms, such as P300 and steady-state visual evoked potentials (SSVEPs), do not rely on spontaneous modulation of sensorimotor rhythms; SSVEPs, for example, are stable voltage oscillations elicited by rapid repetitive stimulation such as a strobe light or flashing LED [46].
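
ERD and ERS are usually quantified as the percentage change in band power during the event relative to a reference (baseline) period, (A - R)/R × 100. The minimal sketch below assumes each segment has already been band-pass filtered to the band of interest (for example, the μ band) and uses the mean squared amplitude as band power; the function names, sampling rate, and synthetic 10 Hz signal are hypothetical, and sign conventions differ between papers.

```python
import math


def band_power(segment: list[float]) -> float:
    """Mean squared amplitude of a segment that is assumed to have
    already been band-pass filtered to the band of interest."""
    if not segment:
        raise ValueError("empty segment")
    return sum(s * s for s in segment) / len(segment)


def relative_power_change(baseline: list[float], task: list[float]) -> float:
    """Percentage band-power change of the task window relative to the
    baseline (reference) window: (A - R) / R * 100.

    Negative values indicate a power decrease (ERD); positive values an
    increase (ERS). Some papers use the opposite sign convention.
    """
    r = band_power(baseline)
    if r == 0:
        raise ValueError("baseline power is zero")
    a = band_power(task)
    return (a - r) / r * 100.0


if __name__ == "__main__":
    fs = 250  # hypothetical sampling rate in Hz
    t = [n / fs for n in range(fs)]  # one second of samples
    # Synthetic 10 Hz (mu-band) rhythm whose amplitude halves during imagery.
    rest = [2.0 * math.sin(2 * math.pi * 10 * x) for x in t]
    imagery = [1.0 * math.sin(2 * math.pi * 10 * x) for x in t]
    print(f"{relative_power_change(rest, imagery):.1f} %")  # prints -75.0 %
```

Halving the amplitude quarters the power, so the imagery window shows a 75% power decrease, i.e. an ERD over the simulated sensorimotor channel.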

MI-based BCIs can be slow and suffer from low accuracy, and they require a significant amount of training by the user. MI ability also varies considerably between individuals; this variability is evident across MI sessions and affects the amount of training each individual needs [47]. MI is often used in offline experiments because classifying the imagined movements requires substantial computational power [24,48].

3.4. Motor Execution

Although MI is used for BCI-based prosthetics, healthy individuals are often recruited when BCIs are in the initial phase of experimentation or are being used for offline experiments, as discussed in Section 3.5 [49,50]. This establishes a baseline for subsequent studies of BCI applications. In prosthetic limb control, for example, motor execution cannot be used for online control, but it is used to evaluate the success of offline machine learning and deep learning techniques [22,51]. Motor execution is frequently used as the performance benchmark in review studies evaluating the accuracy of MI-based BCIs, as it provides a reliable platform for assessing the effectiveness of machine learning and deep learning techniques [32,52,53].

Motor execution-based BCIs outperform MI-based BCIs because physical movement produces clearer motor cortex activation; this results in higher classification accuracy, and participants find it easier to reproduce an executed movement than an imagined one, which they can struggle to repeat reliably [32,34,35].

3.5. Offline/Online Experiments

Data-driven BCI research is divided into two types of experiment: online and offline [3]. Online experiments are conducted in real time, with user behaviour continuously monitored and observed. Numerous studies have found that classification methods perform worse in online experiments [3,24,43,48]. Achieving both high classification accuracy and low latency is difficult in these experiments, but it is nonetheless possible to predict motions [9,16,38,54].

Offline experiments can accommodate more computationally expensive processing and often yield more reliable and accurate classifications [45,55,56]. Their advantage is that machine learning algorithms can be applied to the gathered data to improve the classification accuracy of predicted movements [45,52,56,57].

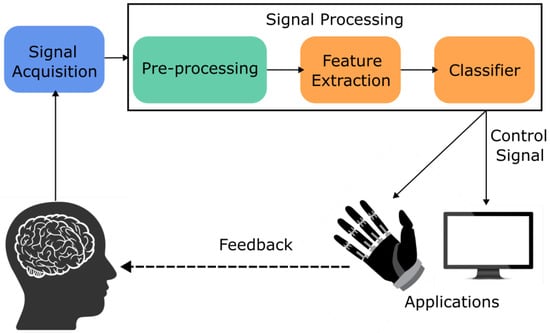

Effective BCI development typically proceeds in two phases [33]. The initial phase is offline experimentation on healthy individuals, in which non-invasive neuroimaging techniques are used to gather data and then classify movements [28,39,58]. The offline phase can also be used to fine-tune the deep learning model that will be utilised in the second phase [33]. The offline phase is generally followed by an online phase involving healthy individuals or individuals with disabilities who fit the criteria for the BCI application, such as amputees for prosthetic control [9,59,60]. Figure 3 and Figure 4 show an overview of the online and offline pipelines used for experiments.

Figure 3.

BCI pipeline for EEG-based online experiment.

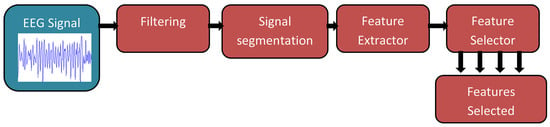

Figure 4.

BCI pipeline for EEG-based offline experiment [61].

Offline and online experiments complement each other. Whilst offline experiments provide accuracy and robustness [28,55], online experiments ensure real-time applicability [53]. Recognising their respective trade-offs is essential when designing effective BCI systems.

3.6. Summary of Modalities and Motor Imagery Viability

This section established the foundational concepts and challenges involved in using non-invasive neuroimaging techniques—particularly EEG and fNIRS—for brain–computer interface (BCI) systems. It discussed the physiological and technical constraints inherent in interpreting brain signals, especially from individuals with amputations, where neural plasticity and emotional trauma can rewire cortical pathways. While EEG offers high temporal resolution and is sensitive to changes in sensorimotor rhythms during Motor Imagery (MI), it is highly susceptible to noise. Conversely, fNIRS offers better resilience to movement artifacts and environmental interference but suffers from limited depth and delayed hemodynamic response. MI, which is critical for amputees who cannot physically execute movement, poses particular challenges due to individual variability, low spatial resolution, and the significant training required. Nevertheless, both modalities show potential for decoding MI, especially in offline contexts where computational demands can be met. Overall, while MI prediction is viable, it remains less accurate and slower than motor execution tasks due to these physiological and technical limitations.

4. Overview of BCI Experiments

This section reviews recent experiments using EEG, fNIRS, and hybrid EEG–fNIRS modalities, with a primary focus on MI and motor execution and on how well imagined movement can be predicted. It examines differences in how raw signal data are processed for feature extraction and classification, covering both offline and online experiments and both traditional methods and newer machine learning and deep learning approaches for predicting user intention.

4.1. EEG-Based BCI Experiments

EEG has shown promise as a modality for use in BCI-based applications [23,24,54,62]. However, the main issue associated with EEG signals in BCI-based applications is the induced movement artifacts that are observed [50,57,63]. These can be intentional movements or spontaneous movements such as eye blinks or muscle twitches that impact the quality of the EEG signals [64,65,66]. Unintentional responses or artifacts can be removed through visual inspection and, in some cases, a channel or trial will be removed entirely from the data [67].

Table 1 presents an overview of EEG–BCI studies investigating how well imagined movement can be predicted. The brain region most commonly used in offline studies was the sensorimotor cortex, which includes the primary motor cortex and the somatosensory cortical area [32,55,68]. Studies that use commercial equipment such as the Emotiv EPOC [11,53,69] lack the flexibility to choose which 10–20 International System channels they use; they are confined to 14 fixed channels, which give limited coverage of the brain.

Whether a study is online or offline, EEG signals can be analyzed with the same techniques; the most common are movement-related cortical potentials (MRCPs), Independent Component Analysis (ICA), and Linear Discriminant Analysis (LDA) [28,32,36,70]. ICA separates signals into additive subcomponents [11,71], transforming EEG signals into independent components that can be analyzed separately. Other processes such as LDA can be applied to reduce the dimensionality of the signals. LDA is a linear classifier that projects the data onto a lower-dimensional space, which helps identify the features that contribute most to a targeted movement [11,72]. LDA therefore reduces computational complexity, making it more suitable for online applications [23]. Offline studies are generally used to improve classification accuracy and often compare multiple classification methods to find the optimum method for a future study [28,55,70,73]. They are crucial for validating the effectiveness of different feature extraction techniques and selecting appropriate classifiers for experimentation. Support vector machines (SVMs) are a preferred choice for multi-class classification in offline experiments, where processing time is not a constraint, because they are computationally expensive [32,45,73].
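
Comparing several classifiers offline is usually done with k-fold cross-validation, where each fold of trials is held out once for testing. The minimal sketch below is a generic k-fold loop in plain Python; the two trainers are hypothetical stand-ins (a nearest-centroid classifier and a majority-class baseline that marks chance level), not the LDA, SVM, or kNN implementations used in the reviewed studies, which would be plugged in through the same `train(X, y) -> predict` interface.

```python
import random
from typing import Callable, Sequence

Vector = Sequence[float]
Predictor = Callable[[Vector], int]
Trainer = Callable[[list[Vector], list[int]], Predictor]


def k_fold_indices(n: int, k: int, seed: int = 0) -> list[list[int]]:
    """Shuffle trial indices once, then deal them into k folds."""
    if not 2 <= k <= n:
        raise ValueError("need 2 <= k <= number of trials")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cross_val_accuracy(train: Trainer, X: list[Vector], y: list[int],
                       k: int = 5, seed: int = 0) -> float:
    """Mean held-out accuracy over k folds."""
    scores = []
    for test_idx in k_fold_indices(len(X), k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        predict = train([X[i] for i in train_idx], [y[i] for i in train_idx])
        correct = sum(predict(X[i]) == y[i] for i in test_idx)
        scores.append(correct / len(test_idx))
    return sum(scores) / len(scores)


def train_majority(X, y):
    """Chance-level baseline: always predict the most common training label."""
    most_common = max(set(y), key=y.count)
    return lambda x: most_common


def train_nearest_centroid(X, y):
    """Assign each trial to the class whose mean feature vector is closest."""
    centroids = {}
    for label in set(y):
        rows = [x for x, lab in zip(X, y) if lab == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]

    def predict(x):
        return min(centroids,
                   key=lambda lab: sum((a - b) ** 2 for a, b in zip(x, centroids[lab])))

    return predict
```

Calling `cross_val_accuracy` once per trainer on the same feature matrix and seed gives directly comparable scores, which is the kind of side-by-side comparison the offline studies in Table 1 report.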

Online BCI studies validate model performance under real-time constraints such as those of prosthetic limb control. These systems must process raw EEG signals, extract features, and classify movements with minimal latency and with high tolerance and robustness [55,59,65]. LDA is a common classifier for online experiments because of its low computational complexity, as both classifier accuracy and speed are vital [23]. However, it is possible to combine a dimensionality-reduction method such as principal component analysis (PCA) with an SVM to achieve similar results [53].

Table 1.

EEG–BCI studies for motor imagery and motor execution movement prediction.

| Study | Paradigm | Brain Area | Features | Classifier | Type of Study |
|---|---|---|---|---|---|
| Kansal et al. [55] | Motor Execution | Sensorimotor Cortex | Time-domain, Min–Max scaled | Genetic Algorithm optimized Long Short-Term Memory | Offline |
| Mikson et al. [69] | Motor Execution + Facial | Frontal/Temporal | FFT, ICA | Thresholding | Online |
| Staffa et al. [52] | Motor Imagery | FC, C, Cz channels | Wavelet-based decomposition | WiSARD (Weightless Neural Network) | Offline training; Online execution |
| Carnio-Escobar et al. [23] | Motor Imagery | F3-P4 (16 channels) | CSP | LDA | Online |
| Xu et al. [28] | Motor Execution | Frontal and Parietal | MRCP | LDA | Offline |
| Xu et al. [70] | Motor Execution | Frontal and Parietal | MRCP | LDA | Offline |
| Cho et al. [32] | Motor Execution + Motor Imagery | Sensorimotor Cortex | LDA | SVM | Offline |
| Faiz & Al-Hamadani [53] | Motor Execution + Motor Imagery | Frontal, Parietal, and Temporal lobes | Autoregressive + CSP | PCA + SVM | Online |
| Gayathri et al. [11] | Motor Imagery | Frontal, Parietal, and Temporal lobes | ICA | LDA | Offline |
| Shantala & Rashmi [73] | Motor Imagery | Frontal, Parietal, and Temporal lobes | Wavelet Transform | LDA, SVM, kNN | Offline |
| Alazrai et al. [45] | Motor Imagery | Sensorimotor Cortex | Convolutional Neural Network | Convolutional Neural Network | Offline |

Advancements in machine learning and deep learning algorithms have reduced the amount of manual feature extraction and classifier design required. MI-based studies are a prime focus for deep learning, particularly offline, where the algorithms can produce accurate and reliable classifications [51,54,63,66]. Commonly implemented methods are convolutional neural networks and long short-term memory (LSTM) networks for feature extraction and classification [45,55,74,75]. Alazrai et al. [45] found that a custom neural network improved the accuracy for able-bodied participants and amputees by and , respectively. This improvement was obtained with 18 able-bodied participants and 4 amputees. By comparison, the optimized model of Kansal et al. [55] improved classification accuracy on average by . Although both models were trained in offline experiments, they can be applied directly to real-time situations, as both took up only approximately of the sliding window used in EEG classification [45,55]. Advancements have also been made in processing multiple EEG frequency bands with recurrent neural networks [75]: LSTM models trained on segregated frequency channels were able to learn temporal correlations across rhythms, which can reduce noise sensitivity. LSTM-based recurrent neural networks can also be trained to anticipate upcoming human motor movements [76], and such anticipation can improve the safety of BCI devices.

Transitioning from offline to online BCI systems requires consideration of computational latency, classifier accuracy, and robustness to real-world noise [34,77]. The classifiers used must perform reliably on signals recorded in non-clinical environments and remain reliable for the same subject over time [22,63]. Several studies have demonstrated the effective integration of offline-trained models into robust online BCI systems [23,52]. These models are trained on offline datasets [78] or experiments [48], and the learned parameters are then uploaded to the BCI for real-time decision-making. Online BCI applications require adaptable classifiers, as they can be exposed to unexpected data such as unintentional movements. SVM is well suited to this, but because of its computational load, lightweight classifiers such as LDA are used instead; weightless neural networks also show promise, as they can find relationships in high-dimensional spaces without first processing the signals through ICA or LDA [52,62,63].
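
Online systems typically classify a sliding window of the most recent samples, and each decision must finish before the next update is due. The minimal sketch below buffers a stream in a fixed-length deque, calls a classifier every `step` samples once the window is full, and reports the time each decision took so it can be checked against the update interval. The window and step sizes, the stand-in signal, and the threshold classifier are all hypothetical; in practice `classify` would wrap a model trained offline, such as LDA.

```python
import time
from collections import deque
from typing import Callable, Iterable, Iterator


def sliding_decisions(
    samples: Iterable[float],
    window: int,
    step: int,
    classify: Callable[[list[float]], int],
) -> Iterator[tuple[int, int, float]]:
    """Yield (sample_index, label, seconds_spent) every `step` samples
    once a full window of `window` samples is buffered."""
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    buf = deque(maxlen=window)  # oldest samples drop off automatically
    since_last = 0
    for i, s in enumerate(samples):
        buf.append(s)
        since_last += 1
        if len(buf) == window and since_last >= step:
            since_last = 0
            t0 = time.perf_counter()
            label = classify(list(buf))
            yield i, label, time.perf_counter() - t0


if __name__ == "__main__":
    fs, window, step = 250, 250, 25  # hypothetical: 1 s window, 100 ms updates
    budget_s = step / fs  # each decision must finish before the next update
    signal = [float(n % 10) for n in range(2 * fs)]  # stand-in for streamed EEG

    def threshold_classifier(w: list[float]) -> int:
        # Hypothetical stand-in for a trained LDA model.
        return int(sum(w) / len(w) > 4.0)

    for idx, label, dt in sliding_decisions(signal, window, step, threshold_classifier):
        status = "ok" if dt < budget_s else "over budget"
        print(f"sample {idx}: class {label}, {dt * 1e3:.3f} ms ({status})")
```

The ratio of decision time to update interval is the practical measure of whether an offline-trained model fits real-time use; heavier classifiers such as SVMs or deep networks raise it, which is the trade-off described above.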

4.2. fNIRS-Based BCI Experiments

As shown in Table 2, fNIRS research employs both MI and motor execution paradigms, with motor execution studies generally outnumbering MI. This trend aligns with the stronger and more consistent hemodynamic responses elicited during actual movements, facilitating signal decoding [79,80]. However, MI paradigms remain critical for BCI applications targeting users unable to perform physical movements, such as amputees or patients with severe motor impairments [4,9,81].

Table 2.

fNIRS-BCI studies for motor imagery and motor execution movement prediction.

fNIRS has shown promise in recent years as a modality for portable BCI applications [9,35,79]. However, fNIRS has an inherent physiological delay of approximately 2 s, which poses challenges for real-time control [79]. fNIRS can be used for both online and offline experiments [4,79,81], and online fNIRS experiments are commonly integrated into hybrid BCI research [17,82,83], as discussed in Section 4.3.

Offline fNIRS studies free researchers from the limits imposed by the physiological delay in measuring changes in blood HbO and HbR levels. They also allow the pipeline, notably the classifiers, to be analyzed under controlled conditions [35,81]. The most common fNIRS features are the signal mean, slope, variance, skewness, kurtosis, and peak [4,35,37]. Nazeer et al. [81] proposed an alternative, z-score-based method for identifying the active channels in MI data used for classification; it improved the accuracy of the LDA classifier by 10% on average compared with standard methods that identify active brain regions by the Δhemodynamic response. Four daily-life arm movements (lifting, putting down, pulling back, and pushing forward) can also be identified using fNIRS with high offline multi-class accuracy, with Random Forest reaching 94.4% and SVM achieving 84.4% [84].
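
The six statistical features listed above are straightforward to compute per channel and per trial window. The minimal sketch below uses only the Python standard library; the function name is hypothetical, the slope is a least-squares fit against time in seconds, skewness and kurtosis use population moments (kurtosis is not excess-corrected), and "peak" is taken as the maximum value, which is an assumption that suits HbO better than HbR.

```python
import math
import statistics


def fnirs_features(x: list[float], fs: float) -> dict[str, float]:
    """Statistical features of one HbO or HbR channel within one trial window.

    x  : concentration-change samples for the window
    fs : sampling rate in Hz (so that the slope is per second)
    """
    n = len(x)
    if n < 2:
        raise ValueError("need at least two samples")
    mean = statistics.fmean(x)
    var = statistics.pvariance(x, mu=mean)
    sd = math.sqrt(var)

    # Least-squares slope of the signal against time in seconds.
    t = [i / fs for i in range(n)]
    t_mean = statistics.fmean(t)
    num = sum((ti - t_mean) * (xi - mean) for ti, xi in zip(t, x))
    den = sum((ti - t_mean) ** 2 for ti in t)
    slope = num / den

    if sd > 0:
        skew = sum((xi - mean) ** 3 for xi in x) / (n * sd ** 3)
        kurt = sum((xi - mean) ** 4 for xi in x) / (n * var ** 2)
    else:
        # Convention chosen here for a perfectly flat window.
        skew, kurt = 0.0, 0.0

    return {
        "mean": mean,
        "slope": slope,
        "variance": var,
        "skewness": skew,
        "kurtosis": kurt,
        # Maximum value; for HbR, which typically decreases during
        # activation, the minimum may be the more informative peak.
        "peak": max(x),
    }
```

Concatenating these six values across channels and chromophores gives the per-trial feature vector that a classifier such as LDA, SVM, or Random Forest is then trained on.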

Online BCI systems using fNIRS often struggle with the slow hemodynamic response but benefit from its robustness to motion artifacts [4,9,38]. As with EEG, LDA is a popular classifier for online studies because SVM requires more resources and introduces more latency [79].

fNIRS has also been applied to motion prediction: when predicting the arrival time of moving objects under equal time structure conditions, stronger activation was observed in the inferior and superior parietal lobes [85].

fNIRS-based systems typically use HbO and HbR signals as inputs to machine learning models. Ortega & Faisal [80] explored the application of deep learning to offline fNIRS studies, focusing on decoding bimanual grip force from multimodal fNIRS and EEG signals. The study introduced a novel deep learning architecture that incorporates residual layers and self-attention mechanisms to enhance the fusion of EEG and fNIRS signals, with the fNIRS data processed using the modified Beer–Lambert law. The deep learning models outperformed traditional linear methods in reconstructing force trajectories, detecting force generation, and disentangling hand-specific activity. This highlights the advantages of deep learning in capturing non-linear modulations of brain signals and in fusing multimodal data, offering significant potential for advancing offline fNIRS studies in motor control and brain–machine interface applications [80,86].

4.3. Hybrid BCI-Based EEG & fNIRS

Notably, a majority of recent EEG-fNIRS hybrid BCI studies have employed motor execution paradigms rather than purely MI tasks, as reflected in Table 3. This predominance is likely due to motor execution providing clearer neurophysiological signals and more robust hemodynamic responses, facilitating initial algorithm development and system validation [17,80]. However, motor execution requires actual movement, which is not feasible for many amputees or severely impaired users.

Table 3.

EEG/fNIRS-BCI studies used for movement prediction.

In contrast, MI-based paradigms, though less represented in the current literature, remain critical for applications where voluntary movement is limited or absent. MI poses greater challenges due to weaker and more variable cortical activations, resulting in lower classification accuracies and increased training demands [89]. Therefore, ongoing research aims to improve MI decoding through advanced signal processing and multimodal fusion with fNIRS to compensate for its inherent signal limitations [22,87]. Bridging this gap is essential for developing clinically viable hybrid BCIs tailored to users with motor disabilities.

Hybrid BCIs utilizing EEG and fNIRS modalities have emerged as a promising approach in the development of neuroprosthetics and assistive technologies for rehabilitation [17,36,80]. Among the various signal combinations, EEG and fNIRS are particularly effective for decoding MI, which allows users to mentally simulate movements—such as grasping or reaching—without physical execution. This capability is crucial for individuals with upper-limb amputations, especially where muscle signals are not viable due to tissue damage or limb absence [7,90,91,92].

EEG provides high temporal resolution, capturing fast neural activity during MI, while fNIRS measures hemodynamic responses related to cortical activation. This complementary relationship enhances the reliability and accuracy of movement prediction. However, practical implementation of hybrid systems remains complex due to increased computational demands and sensor setup requirements [17,93,94].
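Feature-level fusion is one simple way to exploit this complementarity. Features from each modality are standardized separately, using statistics from the training set only, and then concatenated into a single vector for one classifier. The sketch below illustrates this idea under stated assumptions. The function names are hypothetical, and the cited studies use a range of fusion schemes, including learned deep-learning fusion [80], rather than this specific one.

```python
import numpy as np


def fit_standardizer(train_features):
    """Per-feature mean and std from training data (zero std replaced by 1)."""
    x = np.asarray(train_features, dtype=float)
    mu = x.mean(axis=0)
    sd = x.std(axis=0)
    return mu, np.where(sd > 0, sd, 1.0)


def fuse_features(eeg_features, fnirs_features, eeg_stats, fnirs_stats):
    """Z-score each modality with its own training statistics, then concatenate.

    eeg_features: (n_trials, n_eeg_features), e.g. band powers per channel.
    fnirs_features: (n_trials, n_fnirs_features), e.g. HbO/HbR mean and slope.
    Standardizing separately stops the modality with larger raw units from
    dominating scale-sensitive classifiers such as SVMs or neural networks.
    """
    eeg_z = (np.asarray(eeg_features, dtype=float) - eeg_stats[0]) / eeg_stats[1]
    fnirs_z = (np.asarray(fnirs_features, dtype=float) - fnirs_stats[0]) / fnirs_stats[1]
    return np.concatenate([eeg_z, fnirs_z], axis=-1)
```

The hemodynamic response lags the electrical activity by several seconds, so the fNIRS window for a trial is often placed later than the EEG window before the two feature sets are combined.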

To address these challenges, channel selection techniques such as Sequential Backward Floating Search have been employed to reduce EEG data dimensionality while retaining MI-relevant features [22]. While traditional MI-based EEG systems may struggle with low accuracy and extensive training requirements [89], the integration of fNIRS can compensate by providing spatially localized information that reinforces EEG signals during MI tasks [4,89].
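Sequential Backward Floating Search starts from the full channel set and repeatedly removes the channel whose removal hurts a chosen criterion least. After each removal, it conditionally re-adds a previously removed channel if doing so yields a better subset than any already seen at that size. The sketch below is a generic Python version of this procedure. The function name `sbfs` and the `score` callback (for example, cross-validated MI classification accuracy on the candidate channels) are assumptions, and this is not the implementation used in [22].

```python
from typing import Callable, Dict, FrozenSet, Tuple

Subset = FrozenSet[int]


def sbfs(n_channels: int, k: int, score: Callable[[Subset], float]) -> Subset:
    """Sequential Backward Floating Search over channel indices 0..n_channels-1.

    score: hypothetical criterion to maximise, e.g. cross-validated accuracy
    of an MI classifier trained on the given channels.
    Returns the best subset of exactly k channels visited by the search.
    """
    if not 1 <= k <= n_channels:
        raise ValueError("k must satisfy 1 <= k <= n_channels")
    all_channels = frozenset(range(n_channels))
    current: Subset = all_channels
    best: Dict[int, Tuple[float, Subset]] = {n_channels: (score(current), current)}

    while len(current) > k:
        # Exclusion step: drop the channel whose removal leaves the highest score.
        current = max((current - {c} for c in current), key=score)
        s = score(current)
        if len(current) not in best or s > best[len(current)][0]:
            best[len(current)] = (s, current)

        # Conditional inclusion ("floating") step: re-add a removed channel only
        # if the result beats the best subset already recorded at that size.
        while len(current) < n_channels:
            candidate = max((current | {c} for c in all_channels - current), key=score)
            s = score(candidate)
            if s > best[len(candidate)][0]:
                current = candidate
                best[len(current)] = (s, current)
            else:
                break

    return best[k][1]
```

With an additive toy criterion, such as a per-channel relevance weight summed over the subset, the search simply keeps the highest-weighted channels. The floating re-inclusion step matters when channels interact, as they do when a real classifier is scored.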

Several studies have demonstrated that hybrid BCI devices enhance classification performance in multi-degree-of-freedom prosthetic control [36,80,94], enabling imagined reaching, grasping, or lifting actions to be differentiated. Furthermore, strategic electrode placement, such as embedding EEG electrodes between fNIRS optodes, can improve cortical coverage without signal interference [80].

Recent deep learning approaches, including convolutional neural networks, have been applied to extract event-related synchronization/desynchronization (ERS/ERD) features from EEG-fNIRS data, offering improved classification of MI-related tasks [87]. These hybrid techniques have been reported to improve long-term stability and accuracy, supporting their use in real-world rehabilitation and assistive technologies [26,88,95,96].
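Event-related desynchronization and synchronization are conventionally quantified as the percentage change in band power during a task relative to a reference (rest) interval: ERD/ERS% = (P_task − P_ref) / P_ref × 100, where negative values indicate ERD. The sketch below computes this classical hand-crafted feature in the mu band (8–12 Hz) using a NumPy periodogram. It is not the CNN feature extractor of [87], and the function names, band limits, and windowing are illustrative assumptions.

```python
import numpy as np


def band_power(x, fs, f_lo, f_hi):
    """Mean periodogram power of x (last axis = time) between f_lo and f_hi Hz."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean(axis=-1, keepdims=True)  # remove DC offset
    spectrum = np.abs(np.fft.rfft(x, axis=-1)) ** 2 / x.shape[-1]
    freqs = np.fft.rfftfreq(x.shape[-1], d=1.0 / fs)
    mask = (freqs >= f_lo) & (freqs <= f_hi)
    return spectrum[..., mask].mean(axis=-1)


def erd_ers_percent(task, reference, fs, f_lo=8.0, f_hi=12.0):
    """Percentage band-power change of task relative to reference.

    Negative values indicate desynchronization (ERD), positive values ERS.
    """
    p_task = band_power(task, fs, f_lo, f_hi)
    p_ref = band_power(reference, fs, f_lo, f_hi)
    return (p_task - p_ref) / p_ref * 100.0
```

Halving the amplitude of a 10 Hz rhythm between rest and imagery, for example, quarters its power and corresponds to an ERD of −75%.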

4.4. Summary of Experimental Findings and Predictive Performance

This section reviewed numerous BCI experiments that used EEG, fNIRS, and hybrid EEG-fNIRS systems to predict motor imagery and motor execution tasks. Offline EEG studies demonstrate strong performance, particularly with advanced machine learning models such as CNNs and LSTMs, and several studies report classification improvements of up to 20%. Although these models are trained offline, their efficient feature extraction and classification allow them to be adapted for online use. Online EEG experiments are more challenging because of real-time constraints and artifacts, but they show growing reliability, especially when lightweight classifiers such as LDA are employed. fNIRS-based studies also confirm that MI can be predicted, though generally with lower temporal resolution and a physiological delay. However, fNIRS offers greater robustness to motion artifacts and remains promising, particularly in hybrid systems. Hybrid EEG-fNIRS systems leverage the strengths of both modalities, improving MI classification accuracy by combining EEG’s fast response with fNIRS’s spatial precision. In conclusion, online prediction of imagined movement is possible and improving, but its accuracy and speed still lag behind those of motor execution. Continued integration of modalities and algorithmic advances are narrowing this gap.

5. Conclusions

This systematic review has explored the comparative strengths and limitations of EEG and fNIRS in BCI systems for prosthetic limb control. While EEG has long been the cornerstone of non-invasive neural interfaces due to its high temporal resolution and affordability, it remains limited by its low spatial resolution, susceptibility to motion artifacts, and signal noise in real-world environments. In contrast, fNIRS offers a compelling alternative, with robustness to motion artifacts, better spatial resolution, and greater usability in mobile and less controlled settings.

Despite a lower temporal resolution due to the hemodynamic delay, fNIRS demonstrates significant potential in decoding motor intention, particularly in tasks involving gross and fine motor control. Recent advances in real-time processing, lightweight hardware, and hybrid systems further demonstrate its viability. Experimental results consistently show that fNIRS-based systems can achieve reliable classification accuracies for both motor execution and imagery tasks, especially when combined with efficient signal processing and machine learning techniques.

fNIRS is increasingly proving its value not only as a stand-alone modality but especially as a core component of hybrid BCI systems. When combined with EEG, fNIRS compensates for EEG’s shortcomings, particularly its low spatial resolution and vulnerability to noise, and its hemodynamic measurements can enhance signal robustness and classification accuracy. Hybrid systems that leverage both EEG and fNIRS benefit from the high temporal resolution of EEG and the spatial precision and motion-artifact resilience of fNIRS, creating a more comprehensive and adaptive neural interface. This integration improves the detection of complex motor intentions, reduces cognitive load, and enables more precise control of prosthetic devices. Therefore, fNIRS is not merely an alternative to EEG but a well-suited complement to it in hybrid BCIs, offering a more dependable and scalable path forward for neuroprosthetic applications and assistive technologies.

Notably, analysis of publication trends between 2017 and 2024 reveals a peak in research output around 2021, with a marked decline in subsequent years. Although this peak may not coincide directly with the peak of the COVID-19 pandemic, the lag in publication timelines suggests that restrictions on in-person experimentation during the pandemic likely delayed data collection and disrupted research involving human participants. This disruption is reflected in the drop in published studies after 2021, particularly those involving novel data acquisition or user trials, which are key aspects of BCI research.

Future research should focus on advancing hybrid BCI frameworks that make the best use of the complementary strengths of EEG and fNIRS. It should also address the challenges of portability, long-term usability, and real-time performance. Expanding research into clinical trials with diverse participant groups, not just healthy individuals, will be critical to validating these systems in real-world environments and accelerating their translation into practical neuroprosthetic applications.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kocejko, T.; Weglerski, R.; Zubowicz, T.; Ruminski, J.; Wtorek, J.; Arminski, K. Design aspects of a low-cost prosthetic arm for people with severe movement disabilities. In Proceedings of the 2020 13th International Conference on Human System Interaction (HSI), Tokyo, Japan, 6–8 June 2020.

- Suma, D.; Meng, J.; Edelman, B.J.; He, B. Spatial-temporal aspects of continuous EEG-based neurorobotic control. J. Neural Eng. 2020, 17, 066006.

- Li, G.; Jiang, S.; Xu, Y.; Wu, Z.; Chen, L.; Zhang, D. A preliminary study towards prosthetic hand control using human stereo-electroencephalography (SEEG) signals. In Proceedings of the 2017 8th International IEEE/EMBS Conference on Neural Engineering (NER), Shanghai, China, 25–28 May 2017; pp. 375–378.

- Batula, A.M.; Kim, Y.E.; Ayaz, H. Virtual and Actual Humanoid Robot Control with Four-Class Motor-Imagery-Based Optical Brain-Computer Interface. BioMed Res. Int. 2017, 2017, 1463512.

- Wang, Z.; Wang, S.; Shi, F.Y.; Guan, Y.; Wu, Y.; Zhang, L.L.; Shen, C.; Zeng, Y.W.; Wang, D.H.; Zhang, J. The effect of motor imagery with specific implement in expert badminton player. Neuroscience 2014, 275, 102–112.

- Meng, H.J.; Pi, Y.L.; Liu, K.; Cao, N.; Wang, Y.Q.; Wu, Y.; Zhang, J. Differences between motor execution and motor imagery of grasping movements in the motor cortical excitatory circuit. PeerJ 2018, 6, e5588.

- Ruhunage, I.; Perera, C.J.; Nisal, K.; Subodha, J.; Lalitharatne, T.D. EMG signal controlled transhumerai prosthetic with EEG-SSVEP based approch for hand open/close. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Cambridge, MA, USA, 14–17 November 2017; pp. 3169–3174.

- Sattar, N.Y.; Kausar, Z.; Usama, S.A.; Naseer, N.; Farooq, U.; Abdullah, A.; Hussain, S.Z.; Khan, U.S.; Khan, H.; Mirtaheri, P. Enhancing Classification Accuracy of Transhumeral Prosthesis: A Hybrid sEMG and fNIRS Approach. IEEE Access 2021, 9, 113246–113257.

- Sattar, N.Y.; Kausar, Z.; Usama, S.A.; Farooq, U.; Shah, M.F.; Muhammad, S.; Khan, R.; Badran, M. fNIRS-Based Upper Limb Motion Intention Recognition Using an Artificial Neural Network for Transhumeral Amputees. Sensors 2022, 22, 726.

- Kumarasinghe, K.; Owen, M.; Taylor, D.; Kasabov, N.; Kit, C. FaNeuRobot: A Framework for Robot and Prosthetics Control Using the NeuCube Spiking Neural Network Architecture and Finite Automata Theory. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 4465–4472.

- Gayathri, G.; Udupa, G.; Nair, G.J. Control of bionic arm using ICA-EEG. In Proceedings of the 2017 International Conference on Intelligent Computing, Instrumentation and Control Technologies (ICICICT), Kannur, India, 6–7 July 2017; pp. 1254–1259.

- Parr, J.V.V.; Vine, S.J.; Wilson, M.R.; Harrison, N.R.; Wood, G. Visual attention, EEG alpha power and T7-Fz connectivity are implicated in prosthetic hand control and can be optimized through gaze training. J. Neuroeng. Rehabil. 2019, 16, 52.

- Kasim, M.A.A.; Low, C.Y.; Ayub, M.A.; Zakaria, N.A.C.; Salleh, M.H.M.; Johar, K.; Hamli, H. User-Friendly LabVIEW GUI for Prosthetic Hand Control Using Emotiv EEG Headset. In Proceedings of the 2016 IEEE International Symposium on Robotics and Intelligent Sensors, IRIS 2016, Tokyo, Japan, 17–20 December 2016; Volume 105, pp. 276–281.

- Cho, J.-H.; Jeong, J.-H.; Shim, K.-H.; Kim, D.-J.; Lee, S.-W. Classification of Hand Motions within EEG Signals for Non-Invasive BCI-Based Robot Hand Control. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 515–518.

- Xu, B.; Li, W.; He, X.; Wei, Z.; Zhang, D.; Wu, C.; Song, A. Motor Imagery Based Continuous Teleoperation Robot Control with Tactile Feedback. Electronics 2020, 9, 174.

- Yun, Y.-D.; Jeong, J.-H.; Cho, J.-H.; Kim, D.-J.; Lee, S.-W. Reconstructing Degree of Forearm Rotation from Imagined movements for BCI-based Robot Hand Control. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 3014–3017.

- Zhu, G.; Li, R.; Zhang, T.; Lou, D.; Wang, R.; Zhang, Y. A simplified hybrid EEG-fNIRS Brain-Computer Interface for motor task classification. In Proceedings of the 2017 8th International IEEE/EMBS Conference on Neural Engineering (NER), Shanghai, China, 25–28 May 2017; pp. 134–137.

- Sosnik, R.; Ben Zur, O. Reconstruction of hand, elbow and shoulder actual and imagined trajectories in 3D space using EEG slow cortical potentials. J. Neural Eng. 2020, 17, 016065.

- Sreeja, S.R.; Himanshu; Samanta, D. Distance-based weighted sparse representation to classify motor imagery EEG signals for BCI applications. Multimed. Tools Appl. 2020, 79, 13775–13793.

- Murphy, D.P.; Bai, O.; Gorgey, A.S.; Fox, J.; Lovegreen, W.T.; Burkhardt, B.W.; Atri, R.; Marquez, J.S.; Li, Q.; Fei, D.Y. Electroencephalogram-Based Brain-Computer Interface and Lower-Limb Prosthesis Control: A Case Study. Front. Neurol. 2017, 8, 696.

- Ali, H.; Popescu, D.; Hadar, A.; Vasilateanu, A.; Popa, R.; Goga, N.; Hussam, H. EEG-based Brain Computer Interface Prosthetic Hand using Raspberry Pi 4. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 44–49.

- Tang, C.; Gao, T.; Li, Y.; Chen, B. EEG channel selection based on sequential backward floating search for motor imagery classification. Front. Neurosci. 2022, 16, 1045851.

- Carino-Escobar, R.I.; Rodríguez-García, M.E.; Carrillo-Mora, P.; Valdés-Cristerna, R.; Cantillo-Negrete, J. Continuous versus discrete robotic feedback for brain-computer interfaces aimed for neurorehabilitation. Front. Neurorobotics 2023, 17, 1015464.

- Yu, G.; Wang, J.; Chen, W.; Zhang, J. EEG-based brain-controlled lower extremity exoskeleton rehabilitation robot. In Proceedings of the 2017 IEEE International Conference on Cybernetics and Intelligent Systems (CIS) and IEEE Conference on Robotics, Automation and Mechatronics (RAM), Ningbo, China, 19–21 November 2017; pp. 763–767.

- Naveed, K.; Iqbal, J.; ur Rehman, H. Brain controlled human robot interface. In Proceedings of the 2012 International Conference of Robotics and Artificial Intelligence, Islamabad, Pakistan, 22–23 October 2012; pp. 55–60.

- Tayeb, Z.; Waniek, N.; Fedjaev, J.; Ghaboosi, N.; Rychly, L.; Widderich, C.; Richter, C.; Braun, J.; Saveriano, M.; Cheng, G.; et al. Gumpy: A Python toolbox suitable for hybrid brain-computer interfaces. J. Neural Eng. 2018, 15, 065003.

- Sh, T.; Pirouzi, S.; Zamani, A.; Motealleh, A.; Bagheri, Z. Does Muscle Fatigue Alter EEG Bands of Brain Hemispheres? J. Biomed. Phys. Eng. 2020, 10, 187–196.

- Xu, B.; Wang, Y.; Deng, L.; Wu, C.; Zhang, W.; Li, H.; Song, A. Decoding Hand Movement Types and Kinematic Information From Electroencephalogram. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 1744–1755.

- Kim, H.; Kim, Y.; Miyakoshi, M.; Stapornchaisit, S.; Yoshimura, N.; Koike, Y. Brain Activity Reflects Subjective Response to Delayed Input When Using an Electromyography-Controlled Robot. Front. Syst. Neurosci. 2021, 15, 767477.

- Jasper, H.H. Report of the committee on methods of clinical examination in electroencephalography. Electroencephalogr. Clin. Neurophysiol. 1958, 10, 370–375.

- Xygonakis, I.; Athanasiou, A.; Pandria, N.; Kugiumtzis, D.; Bamidis, P.D. Decoding Motor Imagery through Common Spatial Pattern Filters at the EEG Source Space. Comput. Intell. Neurosci. 2018, 2018, 7957408.

- Cho, J.H.; Kwon, B.H.; Lee, B.H. Decoding Multi-class Motor-related Intentions with User-optimized and Robust BCI System Based on Multimodal Dataset. In Proceedings of the 2023 11th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Republic of Korea, 20–22 February 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5.

- Ding, Y.; Udompanyawit, C.; Zhang, Y.; He, B. EEG-based brain-computer interface enables real-time robotic hand control at individual finger level. Nat. Commun. 2025, 16, 5401.

- Akmal, M.; Zubair, S.; Alquhayz, H. Classification Analysis of Tensor-Based Recovered Missing EEG Data. IEEE Access 2021, 9, 41745–41756.

- Asgher, U.; Khan, M.J.; Asif Nizami, M.H.; Khalil, K.; Ahmad, R.; Ayaz, Y.; Naseer, N. Motor Training Using Mental Workload (MWL) With an Assistive Soft Exoskeleton System: A Functional Near-Infrared Spectroscopy (fNIRS) Study for Brain–Machine Interface (BMI). Front. Neurorobotics 2021, 15, 605751.

- Bandara, D.S.V.; Arata, J.; Kiguchi, K. Towards Control of a Transhumeral Prosthesis with EEG Signals. Bioengineering 2018, 5, 26.

- Helmich, I.; Holle, H.; Rein, R.; Lausberg, H. Brain oxygenation patterns during the execution of tool use demonstration, tool use pantomime, and body-part-as-object tool use. Int. J. Psychophysiol. 2015, 96, 1–7.

- Mizuochi, C.; Yabuki, Y.; Mouri, Y.; Togo, S.; Morishita, S.; Jiang, Y.; Kato, R.; Yokoi, H. Real-time cortical adaptation monitoring system for prosthetic rehabilitation based on functional near-infrared spectroscopy. In Proceedings of the 2017 IEEE International Conference on Cyborg and Bionic Systems (CBS), Beijing, China, 17–19 October 2017; pp. 130–135.

- Paleari, M.; Luciani, R.; Ariano, P. Towards NIRS-based hand movement recognition. IEEE Int. Conf. Rehabil. Robot. 2017, 2017, 1506–1511.

- Patil, A.V.; Safaie, J.; Moghaddam, H.A.; Wallois, F.; Grebe, R. Experimental investigation of NIRS spatial sensitivity. Biomed. Opt. Express 2011, 2, 1478–1493.

- Pinti, P.; Tachtsidis, I.; Hamilton, A.; Hirsch, J.; Aichelburg, C.; Gilbert, S.; Burgess, P.W. The present and future use of functional near-infrared spectroscopy (fNIRS) for cognitive neuroscience. Ann. N. Y. Acad. Sci. 2020, 1464, 5–29.

- Scherer, R.; Vidaurre, C. Chapter 8—Motor imagery based brain–computer interfaces. In Smart Wheelchairs and Brain-Computer Interfaces; Diez, P., Ed.; Academic Press: Cambridge, MA, USA, 2018; pp. 171–195.

- Abiri, R.; Borhani, S.; Zhao, X.; Jiang, Y. Real-Time Brain Machine Interaction via Social Robot Gesture Control. In Proceedings of the DSCC2017, Volume 1: Aerospace Applications; Advances in Control Design Methods; Bio Engineering Applications; Advances in Non-Linear Control; Adaptive and Intelligent Systems Control; Advances in Wind Energy Systems; Advances in Robotics; Assistive and Rehabilitation Robotics; Biomedical and Neural Systems Modeling, Diagnostics, and Control; Bio-Mechatronics and Physical Human Robot; Advanced Driver Assistance Systems and Autonomous Vehicles; Automotive Systems, 2017. V001T37A002. Available online: https://asmedigitalcollection.asme.org/DSCC/DSCC2017/volume/58271 (accessed on 16 September 2024).

- Khan, M.J.; Zafar, A.; Hong, K.-S. Comparison of brain areas for executed and imagined movements after motor training: An fNIRS study. In Proceedings of the 2017 10th International Conference on Human System Interactions (HSI), Ulsan, Republic of Korea, 17–19 July 2017; pp. 125–130.

- Alazrai, R.; Abuhijleh, M.; Alwanni, H.; Daoud, M.I. A Deep Learning Framework for Decoding Motor Imagery Tasks of the Same Hand Using EEG Signals. IEEE Access 2019, 7, 109612–109627.

- Wolpaw, J.R.; Wolpaw, E.W. Brain-Computer Interfaces: Principles and Practice, 1st ed.; Oxford University Press: New York, NY, USA, 2012.

- Li, C.; Yang, H.; Wu, X.; Zhang, Y. Improving EEG-Based Motor Imagery Classification Using Hybrid Neural Network. In Proceedings of the 2021 IEEE 9th International Conference on Information, Communication and Networks (ICICN), Xi’an, China, 25–28 November 2021; pp. 486–489.

- Reust, A.; Desai, J.; Gomez, L. Extracting Motor Imagery Features to Control Two Robotic Hands. In Proceedings of the 2018 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), Louisville, KY, USA, 6–8 December 2018; pp. 118–122.

- Xi, X.; Pi, S.; Zhao, Y.B.; Wang, H.; Luo, Z. Effect of muscle fatigue on the cortical-muscle network: A combined electroencephalogram and electromyogram study. Brain Res. 2021, 1752, 147221.

- Guggenberger, R.; Heringhaus, M.; Gharabaghi, A. Brain-Machine Neurofeedback: Robotics or Electrical Stimulation? Front. Bioeng. Biotechnol. 2020, 8, 639.

- Idowu, O.P.; Fang, P.; Li, X.; Xia, Z.; Xiong, J.; Li, G. Towards Control of EEG-Based Robotic Arm Using Deep Learning via Stacked Sparse Autoencoder. In Proceedings of the 2018 IEEE International Conference on Robotics and Biomimetics (ROBIO), Kuala Lumpur, Malaysia, 12–15 December 2018; pp. 1053–1057.

- Staffa, M.; Giordano, M.; Ficuciello, F. A WiSARD Network Approach for a BCI-Based Robotic Prosthetic Control. Int. J. Soc. Robot. 2020, 12, 749–764.

- Faiz, M.Z.A.; Al-Hamadani, A.A. Online Brain Computer Interface Based Five Classes EEG To Control Humanoid Robotic Hand. In Proceedings of the 2019 42nd International Conference on Telecommunications and Signal Processing (TSP), Budapest, Hungary, 1–3 July 2019; pp. 406–410.

- Huang, Q.; Zhang, Z.; Yu, T.; He, S.; Li, Y. An EEG-/EOG-Based Hybrid Brain-Computer Interface: Application on Controlling an Integrated Wheelchair Robotic Arm System. Front. Neurosci. 2019, 13, 1243.

- Kansal, S.; Garg, D.; Upadhyay, A.; Mittal, S.; Talwar, G.S. A novel deep learning approach to predict subject arm movements from EEG-based signals. Neural Comput. Appl. 2023, 35, 11669–11679.

- Yavuz, E.; Eyupoglu, C. A cepstrum analysis-based classification method for hand movement surface EMG signals. Med. Biol. Eng. Comput. 2019, 57, 2179–2201.

- Kansal, S.; Garg, D.; Upadhyay, A.; Mittal, S.; Talwar, G.S. DL-AMPUT-EEG: Design and development of the low-cost prosthesis for rehabilitation of upper limb amputees using deep-learning-based techniques. Eng. Appl. Artif. Intell. 2023, 126, 106990.

- Copeland, C.; Mukherjee, M.; Wang, Y.; Fraser, K.; Zuniga, J.M. Changes in Sensorimotor Cortical Activation in Children Using Prostheses and Prosthetic Simulators. Brain Sci. 2021, 11, 991.

- Araujo, R.S.; Silva, C.R.; Netto, S.P.N.; Morya, E.; Brasil, F.L. Development of a Low-Cost EEG-Controlled Hand Exoskeleton 3D Printed on Textiles. Front. Neurosci. 2021, 15, 661569.

- Norman, S.L.; McFarland, D.J.; Miner, A.; Cramer, S.C.; Wolbrecht, E.T.; Wolpaw, J.R.; Reinkensmeyer, D.J. Controlling pre-movement sensorimotor rhythm can improve finger extension after stroke. J. Neural Eng. 2018, 15, 056026.

- Pawan; Dhiman, R. Machine learning techniques for electroencephalogram based brain-computer interface: A systematic literature review. Meas. Sens. 2023, 28, 100823.

- Park, J.; Berman, J.; Dodson, A.; Liu, Y.; Armstrong, M.; Huang, H.; Kaber, D.; Ruiz, J.; Zahabi, M. Assessing workload in using electromyography (EMG)-based prostheses. Ergonomics 2024, 67, 257–273.

- Wang, X.; Lu, H.; Shen, X.; Ma, L.; Wang, Y. Prosthetic control system based on motor imagery. Comput. Methods Biomech. Biomed. Eng. 2022, 25, 764–771.

- Mondini, V.; Kobler, R.J.; Sburlea, A.I.; Müller-Putz, G.R. Continuous low-frequency EEG decoding of arm movement for closed-loop, natural control of a robotic arm. J. Neural Eng. 2020, 17, 046031.

- Shafiul Hasan, S.M.; Siddiquee, M.R.; Atri, R.; Ramon, R.; Marquez, J.S.; Bai, O. Prediction of gait intention from pre-movement EEG signals: A feasibility study. J. Neuroeng. Rehabil. 2020, 17, 50.

- Vadivelan D, S.; Sethuramalingam, P. A hybrid approach for EEG motor imagery classification using adaptive margin disparity and knowledge transfer in convolutional neural networks. Comput. Biol. Med. 2025, 195, 110675.

- Holle, H.; Gunter, T.C. The Role of Iconic Gestures in Speech Disambiguation: ERP Evidence. J. Cogn. Neurosci. 2007, 19, 1175–1192.

- Sessle, B.J. Chapter 1—The Biological Basis of a Functional Occlusion: The Neural Framework. In Functional Occlusion in Restorative Dentistry and Prosthodontics; Klineberg, I., Eckert, S.E., Eds.; Mosby: Maryland, MO, USA, 2016; pp. 3–22.

- Miskon, A.; Djonhari, A.; Azhar, S.; Thanakodi, S.; Mohd Tawil, S.N. Identification of Raw EEG Signal for Prosthetic Hand Application. In Proceedings of the 6th International Conference on Bioinformatics Research and Applications (ICBRA ’19), Seoul, Republic of Korea, 19–21 June 2019; p. 83.

- Xu, B.; Zhang, D.; Wang, Y.; Deng, L.; Wang, X.; Wu, C.; Song, A. Decoding Different Reach-and-Grasp Movements Using Noninvasive Electroencephalogram. Front. Neurosci. 2021, 15, 684547.

- Mohamed, A.K.; Aharonson, V. Four-class BCI discrimination of right and left wrist and finger movements. In Proceedings of the Control Conference Africa CCA 2021, Chengdu, China, 7–8 December 2021; Volume 54, pp. 91–96.

- Zhou, J.; Zhang, Q.; Zeng, S.; Zhang, B.; Fang, L. Latent Linear Discriminant Analysis for feature extraction via Isometric Structural Learning. Pattern Recognit. 2024, 149, 110218.

- Shantala, C.P.; Rashmi, C.R. Mind Controlled Wireless Robotic Arm Using Brain Computer Interface. In Proceedings of the 2017 IEEE International Conference on Computational Intelligence and Computing Research (ICCIC), Coimbatore, India, 14–16 December 2017; pp. 1–8.

- Shafiei, S.B.; Durrani, M.; Jing, Z.; Mostowy, M.; Doherty, P.; Hussein, A.A.; Elsayed, A.S.; Iqbal, U.; Guru, K. Surgical Hand Gesture Recognition Utilizing Electroencephalogram as Input to the Machine Learning and Network Neuroscience Algorithms. Sensors 2021, 21, 1733.

- Chen, W.; Wang, S.; Zhang, X.; Yao, L.; Yue, L.; Qian, B.; Li, X. EEG-based Motion Intention Recognition via Multi-task RNNs. In Proceedings of the 2018 SIAM International Conference on Data Mining (SDM), San Diego, CA, USA, 3–5 May 2018; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2018; pp. 279–287.

- Buerkle, A.; Eaton, W.; Lohse, N.; Bamber, T.; Ferreira, P. EEG based arm movement intention recognition towards enhanced safety in symbiotic Human-Robot Collaboration. Robot. Comput.-Integr. Manuf. 2021, 70, 102137.

- Zhang, J.; Wang, B.; Zhang, C.; Xiao, Y.; Wang, M.Y. An EEG/EMG/EOG-Based Multimodal Human-Machine Interface to Real-Time Control of a Soft Robot Hand. Front. Neurorobotics 2019, 13, 7.

- Ofner, P.; Schwarz, A.; Pereira, J.; Müller-Putz, G.R. Upper limb movements can be decoded from the time-domain of low-frequency EEG. PLoS ONE 2017, 12, e0182578.

- Lee, J.; Mukae, N.; Arata, J.; Iwata, H.; Iramina, K.; Iihara, K.; Hashizume, M. A multichannel-near-infrared-spectroscopy-triggered robotic hand rehabilitation system for stroke patients. IEEE Int. Conf. Rehabil. Robot. 2017, 2017, 158–163.

- Ortega, P.; Faisal, A.A. Deep learning multimodal fNIRS and EEG signals for bimanual grip force decoding. J. Neural Eng. 2021, 18.

- Nazeer, H.; Naseer, N.; Mehboob, A.; Khan, M.J.; Khan, R.A.; Khan, U.S.; Ayaz, Y. Enhancing Classification Performance of fNIRS-BCI by Identifying Cortically Active Channels Using the z-Score Method. Sensors 2020, 20, 6995.

- Rieke, J.D.; Matarasso, A.K.; Yusufali, M.M.; Ravindran, A.; Alcantara, J.; White, K.D.; Daly, J.J. Development of a combined, sequential real-time fMRI and fNIRS neurofeedback system to enhance motor learning after stroke. J. Neurosci. Methods 2020, 341, 108719.

- Syed, A.U.; Sattar, N.Y.; Ganiyu, I.; Sanjay, C.; Alkhatib, S.; Salah, B. Deep learning-based framework for real-time upper limb motion intention classification using combined bio-signals. Front. Neurorobotics 2023, 17, 1174613.

- Li, C.; Xu, Y.; He, L.; Zhu, Y.; Kuang, S.; Sun, L. Research on fNIRS Recognition Method of Upper Limb Movement Intention. Electronics 2021, 10, 1239.

- Qin, K.; Liu, Y.; Liu, S.; Li, Y.; Li, Y.; You, X. Neural mechanisms for integrating time and visual velocity cues in a prediction motion task: An fNIRS study. Psychophysiology 2024, 61, e14425.

- Saadati, M.; Nelson, J.; Ayaz, H. Multimodal fNIRS-EEG Classification Using Deep Learning Algorithms for Brain-Computer Interfaces Purposes. In Proceedings of the Advances in Neuroergonomics and Cognitive Engineering; Ayaz, H., Ed.; Springer: Cham, Switzerland, 2020; pp. 209–220.

- Kim, S.; Shin, D.Y.; Kim, T.; Lee, S.; Hyun, J.K.; Park, S.M. Enhanced Recognition of Amputated Wrist and Hand Movements by Deep Learning Method Using Multimodal Fusion of Electromyography and Electroencephalography. Sensors 2022, 22, 680.

- Mavridis, C.N.; Baras, J.S.; Kyriakopoulos, K.J. A Human-Robot Interface based on Surface Electroencephalographic Sensors. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 10927–10932.

- Shiman, F.; López-Larraz, E.; Sarasola-Sanz, A.; Irastorza-Landa, N.; Spüler, M.; Birbaumer, N.; Ramos-Murguialday, A. Classification of different reaching movements from the same limb using EEG. J. Neural Eng. 2017, 14, 046018.

- Darmakusuma, R.; Prihatmanto, A.; Indrayanto, A.; Mengko, T. Hybrid Brain-Computer Interface: A Novel Method on the Integration of EEG and sEMG Signal for Active Prosthetic Control. Makara J. Technol. 2018, 22, 28–36.

- Radha, H.; Hassan, A.; Al-Timemy, A. Enhancing Upper Limb Prosthetic Control in Amputees Using Non-invasive EEG and EMG Signals with Machine Learning Techniques. Aro-Sci. J. Koya Univ. 2023, 11, 99–108.

- Aly, H.I.; Youssef, S.; Fathy, C. Hybrid Brain Computer Interface for Movement Control of Upper Limb Prostheses. In Proceedings of the 2018 International Conference on Biomedical Engineering and Applications (ICBEA), Funchal, Portugal, 9–12 July 2018; pp. 1–6.

- Keihani, A.; Mohammadi, A.M.; Marzbani, H.; Nafissi, S.; Haidari, M.R.; Jafari, A.H. Sparse representation of brain signals offers effective computation of cortico-muscular coupling value to predict the task-related and non-task sEMG channels: A joint hdEEG-sEMG study. PLoS ONE 2022, 17, e0270757.

- Schreiter, J.; Mielke, T.; Schott, D.; Thormann, M.; Omari, J.; Pech, M.; Hansen, C. A multimodal user interface for touchless control of robotic ultrasound. Int. J. Comput. Assist. Radiol. Surg. 2023, 18, 1429–1436.

- Liu, Y.; Huang, S.; Wang, Z.; Ji, F.; Ming, D. A Novel Modular and Wearable Supernumerary Robotic Finger via EEG-EMG Control with 4-week Training Assessment. In Proceedings of the Intelligent Robotics and Applications, Yantai, China, 22–25 October 2021; Liu, X.J., Nie, Z., Yu, J., Xie, F., Song, R., Eds.; Springer: Cham, Switzerland, 2021; pp. 748–758.

- Soto-Florido, M.; Garcia-Cifuentes, E.; Irragorri, A.M.; Mondragon, I.F.; Mendez, D.; Colorado, J.D.; Alvarado-Rojas, C. Identification of Neuromotor Patterns Associated with Hand Rehabilitation Movements from EMG and EEG Signals. In Proceedings of the 2025 International Conference on Rehabilitation Robotics (ICORR), Chicago, IL, USA, 12–16 May 2025; pp. 1307–1312.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).