3.1. Study Selection and General Characteristics

The study selection process adhered to the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines and is detailed in the corresponding flow diagram. The initial search across six electronic databases (PubMed, Web of Science (WOS), Science, Scopus, Springer, Scholar) and clinical trial registries identified a total of 313 records. After the removal of 160 duplicates, 153 unique records proceeded to the screening phase. During this phase, 139 records were excluded at the title and abstract screening stage because they did not meet the preliminary inclusion criteria.

Full texts of the 14 remaining articles were sought for a more detailed eligibility assessment, but two of these reports could not be retrieved (see Figure 1). The remaining 12 full-text articles were rigorously evaluated for inclusion. At this stage, seven articles were excluded for specific reasons: three were not randomized trials, one was not a clinical trial at all, and three did not report outcomes relevant to the objectives of this review. This selection process resulted in the inclusion of five unique studies, which form the basis of this analysis.
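The record counts reported at each PRISMA stage can be checked for internal consistency with simple subtraction. The sketch below uses only the numbers stated above; the variable names are illustrative and do not come from the review's own materials.

```python
# Record counts reported in the study selection text (Section 3.1).
identified = 313
duplicates = 160
excluded_title_abstract = 139
not_retrieved = 2
full_text_exclusions = {
    "not a randomized trial": 3,
    "not a clinical trial": 1,
    "no relevant outcomes": 3,
}

# Each PRISMA stage removes the records excluded at that stage.
screened = identified - duplicates              # unique records screened
sought = screened - excluded_title_abstract     # full texts sought
assessed = sought - not_retrieved               # full texts assessed for eligibility
included = assessed - sum(full_text_exclusions.values())

print(screened, sought, assessed, included)
```

Each intermediate value matches the count given in the text (153, 14, 12 and 5).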

The five trials included in this systematic review were conducted in high-income Western countries, reflecting the current epicenter of research and development in this field. Three studies were carried out in the United Kingdom (Upton et al., 2024 [16]; Fleming et al., 2021 [17]; Palmer et al., 2019 [18]), and two in North America (Braley et al., 2021 [19], in the US and Canada; Cherney et al., 2021 [20], in the US). The publication period, spanning 2019 to 2024, underscores the contemporary nature and rapid evolution of research on digital therapies for aphasia.

The geographical concentration of these studies in settings with robust healthcare systems, such as the UK’s National Health Service (NHS), and well-funded research ecosystems is noteworthy. The development, validation, and implementation of AI-driven digital health technologies require significant investment in technological infrastructure, academic-clinical collaboration, and funding for large-scale trials, such as the multicenter Big CACTUS study. This reality has direct implications for the generalizability of the findings. The studied platforms, developed and tested primarily in English-speaking populations, may have limited applicability in low- and middle-income countries, where barriers related to access to smart devices, internet connectivity, digital literacy, and healthcare infrastructure could impede their widespread adoption without substantial investment in localization and cultural adaptation.

The designs of the included studies show considerable methodological heterogeneity, reflecting the diversity of approaches to evaluating these new interventions. Included were two-arm RCTs (Braley et al., 2021 [19]; Cherney et al., 2021 [20]), a large-scale, three-arm multicenter RCT (Palmer et al., 2019 [18]), a crossover RCT (Fleming et al., 2021 [17]), and a Phase II within-subject design (Upton et al., 2024 [16]). A summary of the general characteristics of the included studies is presented in Table 2.

3.2. Participant Characteristics

A total of 366 individuals participated in the five included studies. Sample sizes varied considerably, from 27 participants in the within-subject design study by Upton et al. (2024) [16] to 240 participants in the modified intention-to-treat analysis of the large-scale trial by Palmer et al. (2019) [18]. The mean age of participants was consistently around 60 years (range 58.3 to ~64.9 years), which is representative of the stroke population.

A fundamental and unifying methodological feature of all studies was their exclusive focus on individuals with chronic aphasia. The mean time since stroke ranged from 38.1 months (in the control group of Braley et al., 2021 [19]) to 83 months (Upton et al., 2024 [16]). This focus on the chronic phase (>6–12 months post-stroke) is a significant methodological strength: observed improvements in language function are more likely to be attributable to the therapeutic intervention itself than to the spontaneous biological recovery that characterizes the acute and subacute phases post-stroke. The findings of this review are therefore particularly relevant for the long-term management of aphasia, a context in which intensive treatment options are often limited.

The aphasia profiles of the participants showed considerable variability, reflecting the clinical spectrum of the disorder. Some studies focused on specific deficits, such as anomia (word-finding difficulty) in the study by Upton et al. (2024) [16] or auditory comprehension deficits in Fleming et al. (2021) [17]. Others used broader inclusion criteria, such as a Western Aphasia Battery-Revised Aphasia Quotient (WAB-R AQ) score of ≤90 (Braley et al., 2021 [19]), or included a mix of fluent and non-fluent aphasia types (Cherney et al., 2021 [20]). The WAB-R AQ is a standardized and widely used measure to quantify the overall severity of aphasia, where lower scores indicate greater severity. The Big CACTUS trial, the largest in the cohort, stratified participants by the severity of their word-finding difficulty, allowing for a more nuanced analysis.

This clinical heterogeneity, while increasing the applicability of the findings to a real-world patient population, also introduces challenges for direct comparison of results across studies. The effectiveness of a digital intervention may vary depending on the patient’s aphasia profile. For example, a therapy designed to improve word retrieval may have a different impact on an individual with pure anomia compared to one with global aphasia and severe comprehension deficits. Emerging evidence supports this notion; the economic analysis of the Big CACTUS trial suggested that computerized therapy might be more cost-effective for individuals with mild to moderate word-finding difficulties compared to those with severe deficits. This suggests that a “one-size-fits-all” approach to digital therapy may be suboptimal. The future trajectory of this field will likely move towards more personalized medicine, where the type of AI-driven digital therapy is precisely tailored to each patient’s specific neuropsychological and linguistic profile, requiring further research focused on subgroup analyses.

Table 3 details the characteristics of the participants in the reviewed studies.

3.3. Intervention Details and Comparison Groups

The interventions evaluated in this review represent a spectrum of technological sophistication, from highly adaptive AI-driven platforms to more static programs with remote supervision. This variability is key to understanding the mechanisms underlying the observed outcomes.

At the most advanced end of the spectrum are platforms like Constant Therapy, which uses a proprietary “NeuroPerformance Engine” to personalize exercise selection and adjust task difficulty based on the user’s real-time performance. Similarly, iTalkBetter employs a “NUVA Classifier” that performs real-time speech analysis to provide immediate feedback. These technologies embody the principle of adaptive therapy, which aims to keep the patient in a zone of “desirable difficulty.” This concept, rooted in the science of learning and neuroplasticity, posits that for lasting learning and neural reorganization to occur, tasks must be challenging enough to stimulate the brain but not so difficult as to cause frustration and abandonment. Adaptive AI engines are designed to dynamically calibrate this level of challenge, thereby optimizing the quality and effectiveness of each practice session.

Other platforms, such as Listen-In and StepByStep (used in the Big CACTUS trial), employ more specific adaptive algorithms. Listen-In adjusts difficulty in word-picture matching tasks, while StepByStep personalizes and adapts exercises based on user performance, automatically moving users to higher or lower levels according to their progress. In contrast, the Web ORLA® platform is explicitly non-adaptive; the difficulty of the oral reading tasks is adjusted remotely by a clinician. This model more closely resembles traditional telerehabilitation than autonomous AI-driven therapy.
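To make the idea of performance-based level adjustment concrete, the sketch below implements a generic rule of the kind described above: track accuracy over a window of recent trials, move up a level when accuracy is high and down when it is low. This is a hypothetical illustration only; the class name, window size and thresholds are assumptions and are not the algorithms used by StepByStep, Listen-In or Constant Therapy, whose internals are not described in the included studies.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class AdaptiveLevel:
    """Hypothetical performance-based level adjuster (illustrative only).

    Window size and thresholds are assumptions, not values from any
    platform reviewed here.
    """
    level: int = 1
    min_level: int = 1
    max_level: int = 10
    window: int = 10          # number of recent trials considered
    promote_at: float = 0.8   # move up when recent accuracy >= this
    demote_at: float = 0.5    # move down when recent accuracy <= this
    recent: deque = field(default_factory=deque)

    def record(self, correct: bool) -> int:
        """Log one trial outcome and return the (possibly updated) level."""
        self.recent.append(correct)
        if len(self.recent) > self.window:
            self.recent.popleft()
        if len(self.recent) < self.window:
            return self.level  # not enough trials yet to judge performance
        accuracy = sum(self.recent) / len(self.recent)
        if accuracy >= self.promote_at and self.level < self.max_level:
            self.level += 1
            self.recent.clear()  # start a fresh window at the new level
        elif accuracy <= self.demote_at and self.level > self.min_level:
            self.level -= 1
            self.recent.clear()
        return self.level


tracker = AdaptiveLevel()
for _ in range(10):
    tracker.record(True)        # ten consecutive correct responses
after_correct = tracker.level   # promoted from level 1 to level 2
for _ in range(10):
    tracker.record(False)       # ten consecutive errors
after_errors = tracker.level    # demoted back to level 1
print(after_correct, after_errors)
```

The band between the two thresholds is where the level stays put, which is one simple way to keep practice in a "desirable difficulty" range; real systems may instead model item difficulty directly.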

The dose and duration of therapy also varied significantly across studies. Intensity, measured in hours per week, ranged from a moderate-intensity regimen of approximately 2.5 h weekly (Braley et al., 2021 [19]) to high-intensity regimens of 7.5 to 8.5 h weekly (Upton et al., 2024 [16]; Fleming et al., 2021 [17]). The total duration of the intervention varied from 6 weeks (Upton et al., 2024 [16]; Cherney et al., 2021 [20]) to 6 months (Palmer et al., 2019 [18]). A key finding is that, regardless of the schedule, the total therapy dose (total time on task) was substantial in all studies, far exceeding the typical provision of outpatient therapy in many healthcare systems. For example, the study by Fleming et al. (2021) [17] delivered an average of 85 h of therapy in 12 weeks, while the Palmer et al. (2019) [18] trial facilitated an average of 28 h of self-managed practice over 6 months.

These data offer a nuanced perspective on the intensity debate in aphasia therapy. While some systematic reviews suggest that higher weekly intensity (>8 h/week) is associated with better outcomes, the studies reviewed here indicate that both short-term, high-intensity regimens (Fleming et al. [17]) and long-term, moderate-intensity regimens (Palmer et al. [18]; Braley et al. [19]) can produce clinically significant effects. This suggests that the critical factor may be the total cumulative dose of practice. A key advantage of digital therapy is its ability to facilitate a high total dose in a way that is logistically flexible and more economically viable than traditional face-to-face therapy.
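Weekly intensity and cumulative dose are linked by simple division, which makes it easy to compare trials reported in different units. The sketch below converts the two total doses quoted above into average weekly intensities; it assumes a 6-month intervention corresponds to about 26 weeks (52/12 weeks per month), which is an approximation rather than a figure reported by Palmer et al.

```python
# Assumption: an average month is 52/12 weeks, so 6 months is about 26 weeks.
WEEKS_PER_MONTH = 52 / 12


def weekly_intensity(total_hours: float, weeks: float) -> float:
    """Average hours of practice per week implied by a total dose."""
    return total_hours / weeks


fleming = weekly_intensity(85, 12)                  # Listen-In, 12 weeks
palmer = weekly_intensity(28, 6 * WEEKS_PER_MONTH)  # Big CACTUS, ~26 weeks

print(f"Fleming et al. (2021): {fleming:.1f} h/week")
print(f"Palmer et al. (2019): {palmer:.1f} h/week")
```

These are averages implied by the reported totals (about 7.1 and 1.1 h/week), so they describe realized practice across participants rather than each trial's prescribed schedule.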

The control groups used were also diverse, ranging from usual care combined with an attention control (puzzles) (Palmer et al., 2019 [18]), to paper-based exercises (Braley et al., 2021 [19]), a placebo game (Bejeweled 2) (Cherney et al., 2021 [20]), or within-subject designs where participants acted as their own control (Fleming et al., 2021 [17]; Upton et al., 2024 [16]).

Table 4 summarizes the intervention details.

3.4. Synthesis of Efficacy Results

All five included trials reported positive outcomes, indicating that digital therapies can improve various aspects of language function in people with chronic aphasia. However, the magnitude and nature of these effects varied depending on the intervention and the outcome measure used.

The study by Upton et al. (2024) [16], using the iTalkBetter app, showed a 13% improvement in word retrieval accuracy on a naming test. Crucially, it also observed a transfer of these gains to more functional language, with an increase of 4.4 informative words in spontaneous speech. These improvements were maintained 12 weeks after the therapy ended, suggesting durable learning.

Fleming et al. (2021) [17], evaluating the Listen-In app for auditory comprehension, reported an 11% improvement in accuracy on an auditory comprehension task, which translated to a large effect size (d = 1.12), indicating a substantial clinical impact.
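For readers less familiar with Cohen's d, the sketch below shows the conventional two-group definition (difference in means divided by the pooled standard deviation) together with Cohen's usual benchmarks of 0.2, 0.5 and 0.8 for small, medium and large effects. It is not a reproduction of the Fleming et al. analysis: a crossover or within-subject design may standardize by a different variance, and the means and SDs in the example call are made-up illustrative numbers.

```python
import math


def cohens_d(mean_a: float, mean_b: float, sd_a: float, sd_b: float,
             n_a: int, n_b: int) -> float:
    """Cohen's d for two independent groups using the pooled standard deviation."""
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / (n_a + n_b - 2)
    return (mean_a - mean_b) / math.sqrt(pooled_var)


def label(d: float) -> str:
    """Cohen's conventional benchmarks: 0.2 small, 0.5 medium, 0.8 large."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"


# Hypothetical groups: a 10-point mean difference with SD 10 in both groups.
example_d = cohens_d(60, 50, 10, 10, 20, 20)
print(example_d, label(example_d))
print(label(1.12))  # large
```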

The trial by Braley et al. (2021) [19], using the Constant Therapy platform, yielded one of the most notable results in terms of overall impact. The experimental group showed a 6.75-point improvement on the WAB-R AQ, a measure of overall aphasia severity. This gain was substantially greater than that observed in the control group, which improved by only 0.38 points, a difference that is both statistically and clinically significant.

Cherney et al. (2021) [20], with the non-adaptive Web ORLA® platform, found more modest but statistically significant improvements in the WAB Language Quotient (WAB-LQ). Interestingly, the gains continued to increase during the follow-up period, rising from +0.99 points immediately post-therapy to +2.70 points at follow-up, which could indicate a learning consolidation effect.

Finally, the Big CACTUS trial by Palmer et al. (2019) [18], the largest in the review, reported the greatest effect on a specific task. The group using the StepByStep computerized therapy improved by 16.4% in retrieving personally relevant words, compared to minimal changes in the usual care (+1.1%) and attention control (+2.4%) groups.
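A quick way to read the comparisons above is as unadjusted differences in mean change between arms. The sketch below computes them from the change scores quoted in this section; for Big CACTUS the differences are in percentage points. These crude differences are for orientation only and are not the adjusted estimates or confidence intervals reported by the trials.

```python
# Mean change scores quoted in this section (unadjusted).
changes = {
    "Braley 2021 (WAB-R AQ points)": {"intervention": 6.75, "control": 0.38},
    "Palmer 2019 vs usual care (% words)": {"intervention": 16.4, "control": 1.1},
    "Palmer 2019 vs attention control (% words)": {"intervention": 16.4, "control": 2.4},
}


def difference_in_change(intervention: float, control: float) -> float:
    """Between-group difference in mean change (intervention minus control)."""
    return intervention - control


diffs = {name: difference_in_change(**arms) for name, arms in changes.items()}
for name, diff in diffs.items():
    print(f"{name}: {diff:+.2f}")
```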

Despite these positive results, a deeper analysis reveals a critical and recurring pattern: the generalization gap. This term refers to the discrepancy between the significant improvements observed in impairment-level tasks (i.e., the ability to perform specific exercises like naming pictures) and the lack of transfer of these gains to functional communication in daily life.

The Big CACTUS trial is the clearest example of this phenomenon. Despite its clear success in improving word retrieval (its primary impairment-level outcome), the study found no statistically significant improvement in its functional co-primary outcome: communication ability in conversation, rated by blinded assessors using the Therapy Outcome Measures activity scale. Similarly, no significant improvements were observed in quality of life or in participants’ perception of communication. This finding is of utmost importance, as it suggests that while digital therapy is an effective tool for repetitive practice and the automation of specific language skills, these gains do not automatically translate into richer or more effective real-world conversations.

The study by Upton et al. (iTalkBetter) [16] offers a promising counterpoint, as it did demonstrate a transfer of gains in naming to an increase in the informational content of connected speech. This could be due to the specific features of the platform, which focuses on high-frequency words and provides real-time feedback on oral production.

The generalization gap represents a fundamental challenge for the field of aphasia rehabilitation. It indicates that future developments in digital therapy must go beyond the simple practice of isolated words. To achieve a truly functional impact, platforms may need to incorporate modules that explicitly train sentence construction, discourse coherence, and conversational strategies. The integration of therapeutic techniques such as Response Elaboration Training (RET), which focuses on expanding patient productions in a back-and-forth context, could be a promising avenue for closing this gap.

Table 5 summarizes the quantitative results of the studies.

3.5. Methodological Quality and Risk of Bias Assessment

The methodological quality of the included studies was assessed using the Jadad Scale and the Cochrane Risk of Bias tool for randomized trials. The results of these assessments are crucial for determining confidence in the reported findings.

On the Jadad Scale, all five studies scored 3 out of a maximum of 5 (see Table 6). This score, which generally indicates moderate methodological quality, reflects a consistent pattern: all studies were described as randomized (1 point), described an appropriate method of randomization (1 point), and described withdrawals and dropouts (1 point), but none could be described as double-blind, so none received the two points available for blinding (one for being double-blind and one for an appropriately described blinding method).
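The Jadad scoring rules can be written down compactly, which makes it clear why the pattern described above yields exactly 3 points. The sketch below follows the standard scale: one point each for being described as randomized, described as double-blind, and describing withdrawals; one extra point for an appropriate randomization or blinding method, and one point deducted if the described method is inappropriate. The field names are hypothetical labels chosen for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class JadadItems:
    randomized: bool
    randomization_appropriate: Optional[bool]  # None = method not described
    double_blind: bool
    blinding_appropriate: Optional[bool]       # None = method not described
    withdrawals_described: bool


def jadad_score(r: JadadItems) -> int:
    """Score a trial on the 0-5 Jadad scale."""
    score = 0
    if r.randomized:
        score += 1
        if r.randomization_appropriate is True:
            score += 1
        elif r.randomization_appropriate is False:
            score -= 1  # described but inappropriate method
    if r.double_blind:
        score += 1
        if r.blinding_appropriate is True:
            score += 1
        elif r.blinding_appropriate is False:
            score -= 1
    if r.withdrawals_described:
        score += 1
    return score


# Pattern reported for all five studies: randomized with an appropriate
# method, withdrawals described, no double-blinding.
reported_pattern_score = jadad_score(JadadItems(
    randomized=True, randomization_appropriate=True,
    double_blind=False, blinding_appropriate=None,
    withdrawals_described=True,
))
print(reported_pattern_score)  # 3
```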

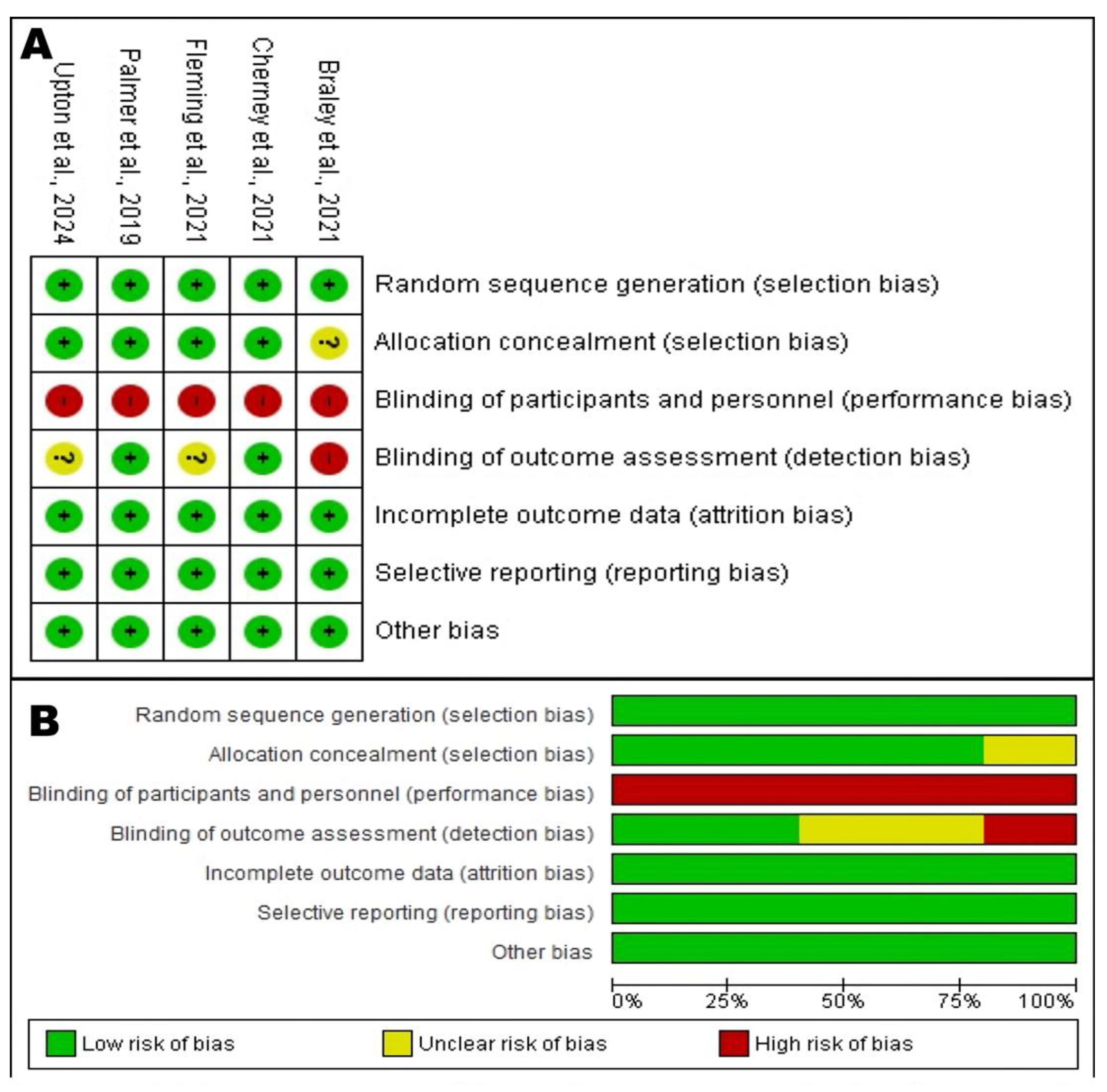

A more detailed analysis, as visualized in Figure 2, reveals a risk of bias profile with clear strengths and weaknesses. In general, the domains of random sequence generation, incomplete outcome data (attrition bias), and selective reporting presented a low risk across all studies. Allocation concealment was also mostly low risk, with only one study rated as unclear. This indicates that the randomization and reporting processes were robust, minimizing the risk of selection and attrition bias.

Figure 2.

Risk of Bias Assessment of the RCTs. (A) Risk of bias summary: judgments about each risk of bias item for each included study. The “+” symbol (green) indicates a low risk of bias, “?” (yellow) indicates an unclear risk, and “-” (red) indicates a high risk. (B) Risk of bias graph: judgments about each risk of bias item presented as percentages across all included studies [16,17,18,19,20].


The most significant and universal methodological limitation in this cohort of studies lies in the blinding of participants and personnel (performance bias). As shown in Figure 2, all five studies were rated at high risk of bias in this domain. This finding should not necessarily be interpreted as a flaw in the study designs, but rather as a challenge inherent to the nature of behavioral interventions. It is practically impossible to blind a participant as to whether they are interacting with a dynamic, gamified, and personalized therapy app, or completing paper-based exercises or playing a simple puzzle game. This knowledge can generate potent expectancy and motivation effects. Participants in the experimental groups may be more engaged, invest more effort, and have a greater belief in the treatment’s efficacy. These non-specific factors alone can lead to better performance, regardless of the app’s therapeutic content. Therefore, the large effect sizes reported should be interpreted with caution, as they likely represent a combination of the intervention’s true therapeutic effect and a non-specific effect driven by differential motivation and engagement between groups.

The blinding of outcome assessment (detection bias) presented a mixed picture. The studies by Cherney et al. (2021) [20] and Palmer et al. (2019) [18] had a low risk of detection bias, as the outcome assessors were blinded to group allocation, which is a major methodological strength that increases confidence in their outcome measurements. Conversely, the study by Braley et al. (2021) [19] was rated at high risk in this domain, which constitutes a notable weakness. An unblinded assessor could, even unconsciously, score the intervention group more favorably, which may have contributed to the large +6.75-point gain on the WAB-R AQ. The studies by Upton et al. (2024) [16] and Fleming et al. (2021) [17] were rated as unclear risk due to a lack of explicit information.

The domain of other bias was consistently rated as low risk, suggesting that the studies were free from other significant issues that could skew the results.
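Panel B of Figure 2 summarizes each domain as the percentage of studies judged at low, unclear, or high risk. The sketch below reconstructs those percentages from the judgments described in this section. The order of entries within each list is arbitrary, and for allocation concealment the text does not say which study was rated unclear, so only the counts are represented.

```python
from collections import Counter

# Judgments per domain across the five studies, as described in Section 3.5.
judgments = {
    "Random sequence generation": ["low"] * 5,
    "Allocation concealment": ["low"] * 4 + ["unclear"],
    "Blinding of participants and personnel": ["high"] * 5,
    # Cherney and Palmer low; Braley high; Upton and Fleming unclear.
    "Blinding of outcome assessment": ["low", "low", "high", "unclear", "unclear"],
    "Incomplete outcome data": ["low"] * 5,
    "Selective reporting": ["low"] * 5,
    "Other bias": ["low"] * 5,
}


def percentages(ratings: list[str]) -> dict[str, float]:
    """Share of studies (in %) at each risk level for one domain."""
    counts = Counter(ratings)
    total = len(ratings)
    return {level: 100 * counts[level] / total for level in ("low", "unclear", "high")}


summary = {domain: percentages(r) for domain, r in judgments.items()}
for domain, pct in summary.items():
    print(f"{domain}: " + ", ".join(f"{k} {v:.0f}%" for k, v in pct.items()))
```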