Elucidating White Matter Contributions to the Cognitive Architecture of Affective Prosody Recognition: Evidence from Right Hemisphere Stroke

Abstract

1. Introduction

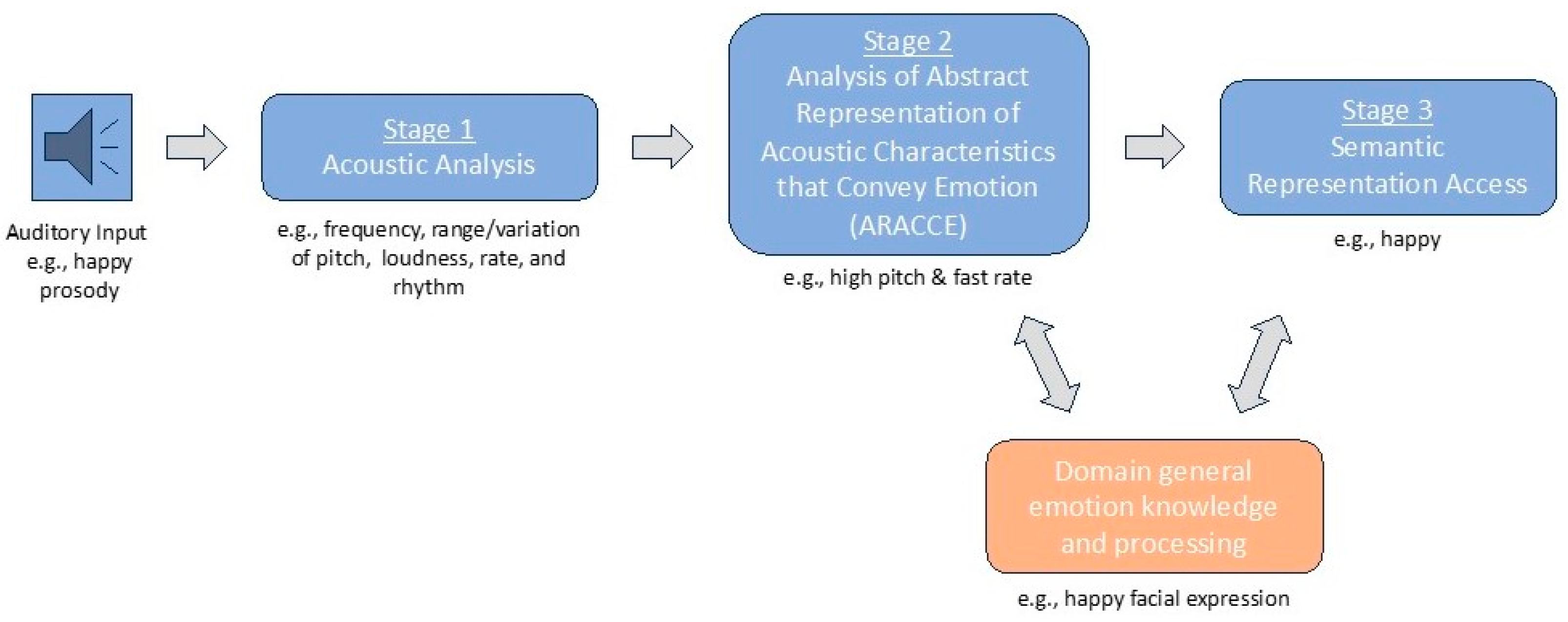

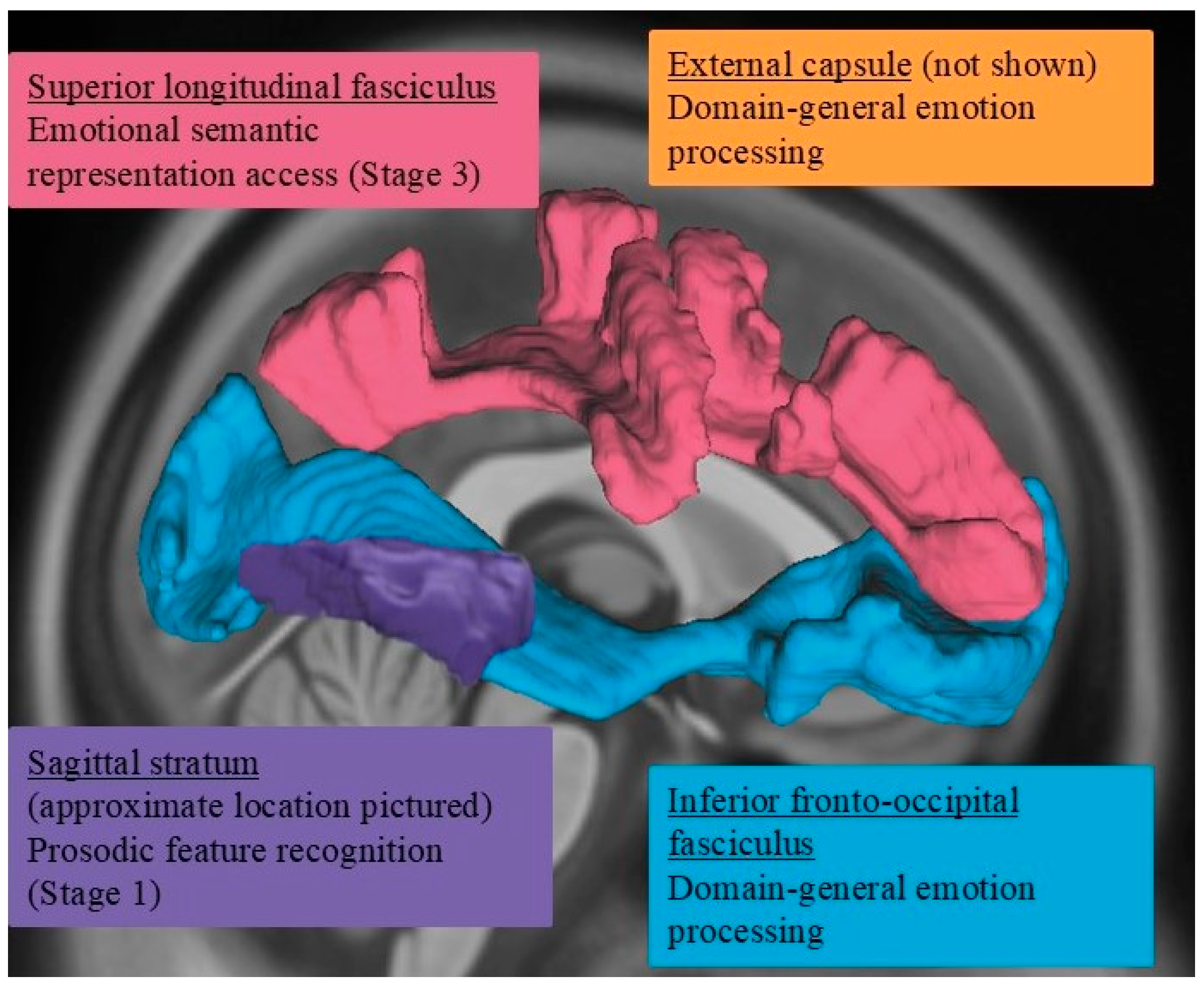

- According to the dual-stream model of prosody processing [36], damage to right hemisphere ventral stream white matter structures, such as the inferior fronto-occipital fasciculus and uncinate fasciculus, is expected to be more frequently associated with impairments in affective prosody recognition. In contrast, damage to more dorsally situated white matter pathways, such as the arcuate fasciculus as captured within the superior longitudinal fasciculus, would not be as commonly associated with affective prosody recognition deficits.

- Damage within specific ventral stream white matter structures is also expected to affect specific stages of prosody recognition. According to Schirmer and Kotz’s model [2], damage to more posterior right hemisphere white matter structures (e.g., sagittal stratum) would be expected to affect earlier stages of prosody recognition, whereas damage to more anterior structures (e.g., uncinate fasciculus) would likely affect later stages.

2. Materials and Methods

2.1. Participants

2.2. Procedures: Behavioral Testing

2.2.1. Affective Prosody Recognition (i.e., Word Prosody Recognition)

2.2.2. Recognition of Prosodic Features in Speech (Stage 1)

2.2.3. Matching Features with Emotions (Stage 2)

2.2.4. Emotion Synonym Task (Stage 3)

2.2.5. Facial Expression Recognition

2.3. Image Acquisition and Processing

2.4. Statistical Analyses

3. Results

3.1. Behavioral Assessment Results: Between-Group Comparisons

3.2. Behavioral Assessment Results: Within-Group (RHS) Comparisons

3.3. Lesion–Symptom Mapping Results

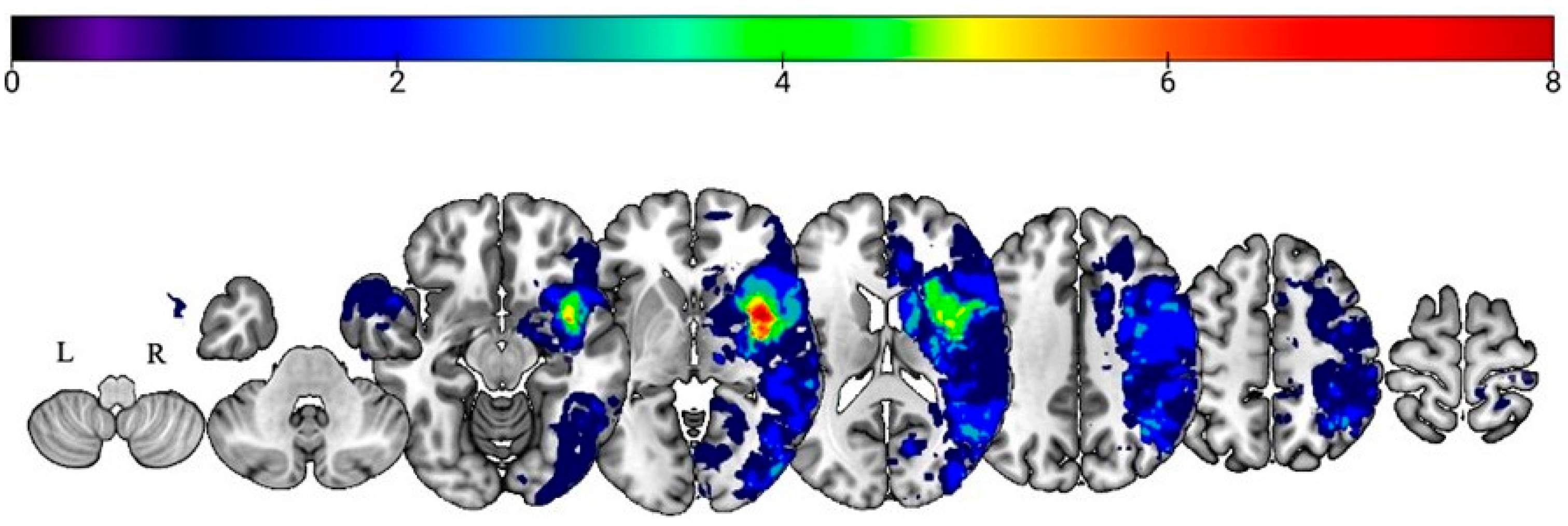

3.3.1. Prosody Recognition

3.3.2. Recognition of Prosodic Features in Speech (Stage 1) Findings

3.3.3. Matching Features with Emotions (Stage 2) Findings

3.3.4. Emotion Synonym Matching (Stage 3) Findings

3.3.5. Facial Expression Recognition (Domain-General Emotion Processing)

3.4. Association of DTI Metrics with Affective Prosody Recognition Across Recovery

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RHS | Right hemisphere stroke |
| ARACCE | Abstract representations of acoustic characteristics that convey emotion |
| MRI | Magnetic resonance imaging |
| DTI | Diffusion tensor imaging |
| DWI | Diffusion weighted imaging |
| MNI | Montreal Neurological Institute |
| SPM | Statistical parametric mapping |
| JHU | Johns Hopkins University |
| ROI | Region of interest |
| FDR | False discovery rate |
| EC | External capsule |
| IFOF | Inferior fronto-occipital fasciculus |
| UF | Uncinate fasciculus |
| SLF | Superior longitudinal fasciculus |
| SS | Sagittal stratum |
| BCC | Body of the corpus callosum |
| GCC | Genu of the corpus callosum |

References

1. Wymer, J.H.; Lindman Linda, S.; Booksh, R.L. A Neuropsychological Perspective of Aprosody: Features, Function, Assessment, and Treatment. Appl. Neuropsychol. 2002, 9, 37–47.

2. Schirmer, A.; Kotz, S.A. Beyond the Right Hemisphere: Brain Mechanisms Mediating Vocal Emotional Processing. Trends Cogn. Sci. 2006, 10, 24–30.

3. Blonder, L.X.; Pettigrew, L.C.; Kryscio, R.J. Emotion Recognition and Marital Satisfaction in Stroke. J. Clin. Exp. Neuropsychol. 2012, 34, 634–642.

4. Hillis, A.E.; Tippett, D.C. Stroke Recovery: Surprising Influences and Residual Consequences. Adv. Med. 2014, 2014, 378263.

5. Martinez, M.; Multani, N.; Anor, C.J.; Misquitta, K.; Tang-Wai, D.F.; Keren, R.; Fox, S.; Lang, A.E.; Marras, C.; Tartaglia, M.C. Emotion Detection Deficits and Decreased Empathy in Patients with Alzheimer’s Disease and Parkinson’s Disease Affect Caregiver Mood and Burden. Front. Aging Neurosci. 2018, 10, 120.

6. O’Connell, K.; Marsh, A.A.; Edwards, D.F.; Dromerick, A.W.; Seydell-Greenwald, A. Emotion Recognition Impairments and Social Well-Being Following Right-Hemisphere Stroke. Neuropsychol. Rehabil. 2022, 32, 1337–1355.

7. Dara, C.; Bang, J.; Gottesman, R.F.; Hillis, A.E. Right Hemisphere Dysfunction Is Better Predicted by Emotional Prosody Impairments as Compared to Neglect. J. Neurol. Transl. Neurosci. 2014, 2, 1037.

8. Sheppard, S.M.; Keator, L.M.; Breining, B.L.; Wright, A.E.; Saxena, S.; Tippett, D.C.; Hillis, A.E. Right Hemisphere Ventral Stream for Emotional Prosody Identification: Evidence from Acute Stroke. Neurology 2020, 94, e1013–e1020.

9. Sheppard, S.M.; Stockbridge, M.D.; Keator, L.M.; Murray, L.L.; Blake, M.L.; Right Hemisphere Damage Working Group, Evidence-Based Clinical Research Committee, Academy of Neurologic Communication Disorders and Sciences. The Company Prosodic Deficits Keep Following Right Hemisphere Stroke: A Systematic Review. J. Int. Neuropsychol. Soc. 2022, 28, 1075–1090.

10. Ukaegbe, O.C.; Holt, B.E.; Keator, L.M.; Brownell, H.; Blake, M.L.; Lundgren, K. Aprosodia Following Focal Brain Damage: What’s Right and What’s Left? Am. J. Speech-Lang. Pathol. 2022, 31, 2313–2328.

11. Coulombe, V.; Joyal, M.; Martel-Sauvageau, V.; Monetta, L. Affective Prosody Disorders in Adults with Neurological Conditions: A Scoping Review. Int. J. Lang. Commun. Disord. 2023, 58, 1939–1954.

12. Hewetson, R.; Cornwell, P.; Shum, D.H.K. Relationship and Social Network Change in People With Impaired Social Cognition Post Right Hemisphere Stroke. Am. J. Speech-Lang. Pathol. 2021, 30, 962–973.

13. Blake, M.L.; Duffy, J.R.; Myers, P.S.; Tompkins, C.A. Prevalence and Patterns of Right Hemisphere Cognitive/Communicative Deficits: Retrospective Data from an Inpatient Rehabilitation Unit. Aphasiology 2002, 16, 537–547.

14. Leigh, R.; Oishi, K.; Hsu, J.; Lindquist, M.; Gottesman, R.F.; Jarso, S.; Crainiceanu, C.; Mori, S.; Hillis, A.E. Acute Lesions That Impair Affective Empathy. Brain 2013, 136, 2539–2549.

15. Ramsey, A.; Blake, M.L. Speech-Language Pathology Practices for Adults With Right Hemisphere Stroke: What Are We Missing? Am. J. Speech-Lang. Pathol. 2020, 29, 741–759.

16. Ethofer, T.; Anders, S.; Erb, M.; Herbert, C.; Wiethoff, S.; Kissler, J.; Grodd, W.; Wildgruber, D. Cerebral Pathways in Processing of Affective Prosody: A Dynamic Causal Modeling Study. NeuroImage 2006, 30, 580–587.

17. Seydell-Greenwald, A.; Chambers, C.E.; Ferrara, K.; Newport, E.L. What You Say versus How You Say It: Comparing Sentence Comprehension and Emotional Prosody Processing Using fMRI. NeuroImage 2020, 209, 116509.

18. Sheppard, S.M.; Meier, E.L.; Zezinka Durfee, A.; Walker, A.; Shea, J.; Hillis, A.E. Characterizing Subtypes and Neural Correlates of Receptive Aprosodia in Acute Right Hemisphere Stroke. Cortex 2021, 141, 36–54.

19. Wright, A.; Saxena, S.; Sheppard, S.M.; Hillis, A.E. Selective Impairments in Components of Affective Prosody in Neurologically Impaired Individuals. Brain Cogn. 2018, 124, 29–36.

20. Bowers, D.; Bauer, R.M.; Heilman, K.M. The Nonverbal Affect Lexicon: Theoretical Perspectives from Neuropsychological Studies of Affect Perception. Neuropsychology 1993, 7, 433–444.

21. Ross, E.D. The Aprosodias. Functional-Anatomic Organization of the Affective Components of Language in the Right Hemisphere. Arch. Neurol. 1981, 38, 561–569.

22. Gorelick, P.B.; Ross, E.D. The Aprosodias: Further Functional-Anatomical Evidence for the Organisation of Affective Language in the Right Hemisphere. J. Neurol. Neurosurg. Psychiatry 1987, 50, 553–560.

23. Durfee, A.Z.; Sheppard, S.M.; Meier, E.L.; Bunker, L.; Cui, E.; Crainiceanu, C.; Hillis, A.E. Explicit Training to Improve Affective Prosody Recognition in Adults with Acute Right Hemisphere Stroke. Brain Sci. 2021, 11, 667.

24. Belyk, M.; Brown, S. Perception of Affective and Linguistic Prosody: An ALE Meta-Analysis of Neuroimaging Studies. Soc. Cogn. Affect. Neurosci. 2014, 9, 1395–1403.

25. Themistocleous, C. Linguistic and Emotional Prosody: A Systematic Review and ALE Meta-Analysis. Neurosci. Biobehav. Rev. 2025, 175, 106210.

26. Mitchell, R.L.C.; Elliott, R.; Barry, M.; Cruttenden, A.; Woodruff, P.W.R. The Neural Response to Emotional Prosody, as Revealed by Functional Magnetic Resonance Imaging. Neuropsychologia 2003, 41, 1410–1421.

27. Wildgruber, D.; Riecker, A.; Hertrich, I.; Erb, M.; Grodd, W.; Ethofer, T.; Ackermann, H. Identification of Emotional Intonation Evaluated by fMRI. NeuroImage 2005, 24, 1233–1241.

28. Grandjean, D. Brain Networks of Emotional Prosody Processing. Emot. Rev. 2021, 13, 34–43.

29. Ross, E.D.; Monnot, M. Neurology of Affective Prosody and Its Functional-Anatomic Organization in Right Hemisphere. Brain Lang. 2008, 104, 51–74.

30. Starkstein, S.E.; Federoff, J.P.; Price, T.R.; Leiguarda, R.C.; Robinson, R.G. Neuropsychological and Neuroradiologic Correlates of Emotional Prosody Comprehension. Neurology 1994, 44, 515–522.

31. Walker, J.P.; Daigle, T.; Buzzard, M. Hemispheric Specialisation in Processing Prosodic Structures: Revisited. Aphasiology 2002, 16, 1155–1172.

32. Obleser, J.; Eisner, F.; Kotz, S.A. Bilateral Speech Comprehension Reflects Differential Sensitivity to Spectral and Temporal Features. J. Neurosci. 2008, 28, 8116–8123.

33. Kotz, S.A.; Meyer, M.; Alter, K.; Besson, M.; von Cramon, D.Y.; Friederici, A.D. On the Lateralization of Emotional Prosody: An Event-Related Functional MR Investigation. Brain Lang. 2003, 86, 366–376.

34. Buchanan, T.W.; Lutz, K.; Mirzazade, S.; Specht, K.; Shah, N.J.; Zilles, K.; Jäncke, L. Recognition of Emotional Prosody and Verbal Components of Spoken Language: An fMRI Study. Brain Res. Cogn. Brain Res. 2000, 9, 227–238.

35. Hickok, G.; Poeppel, D. The Cortical Organization of Speech Processing. Nat. Rev. Neurosci. 2007, 8, 393–402.

36. Durfee, A.Z.; Sheppard, S.M.; Blake, M.L.; Hillis, A.E. Lesion Loci of Impaired Affective Prosody: A Systematic Review of Evidence from Stroke. Brain Cogn. 2021, 152, 105759.

37. Sammler, D.; Grosbras, M.-H.; Anwander, A.; Bestelmeyer, P.E.G.; Belin, P. Dorsal and Ventral Pathways for Prosody. Curr. Biol. 2015, 25, 3079–3085.

38. Sihvonen, A.J.; Sammler, D.; Ripollés, P.; Leo, V.; Rodríguez-Fornells, A.; Soinila, S.; Särkämö, T. Right Ventral Stream Damage Underlies Both Poststroke Aprosodia and Amusia. Eur. J. Neurol. 2022, 29, 873–882.

39. Frühholz, S.; Gschwind, M.; Grandjean, D. Bilateral Dorsal and Ventral Fiber Pathways for the Processing of Affective Prosody Identified by Probabilistic Fiber Tracking. NeuroImage 2015, 109, 27–34.

40. Hickok, G.; Poeppel, D. Dorsal and Ventral Streams: A Framework for Understanding Aspects of the Functional Neuroanatomy of Language. Cognition 2004, 92, 67–99.

41. Patel, S.; Oishi, K.; Wright, A.; Sutherland-Foggio, H.; Saxena, S.; Sheppard, S.M.; Hillis, A.E. Right Hemisphere Regions Critical for Expression of Emotion Through Prosody. Front. Neurol. 2018, 9, 224.

42. Paulmann, S.; Ott, D.V.M.; Kotz, S.A. Emotional Speech Perception Unfolding in Time: The Role of the Basal Ganglia. PLoS ONE 2011, 6, e17694.

43. Kaplan, E.; Goodglass, H.; Weintraub, S. The Boston Naming Test, 2nd ed.; PRO-ED: Austin, TX, USA, 2001.

44. Berube, S.; Nonnemacher, J.; Demsky, C.; Glenn, S.; Saxena, S.; Wright, A.; Tippett, D.C.; Hillis, A.E. Stealing Cookies in the Twenty-First Century: Measures of Spoken Narrative in Healthy Versus Speakers With Aphasia. Am. J. Speech-Lang. Pathol. 2019, 28, 321–329.

45. Goodglass, H.; Kaplan, E.; Barresi, B. Boston Diagnostic Aphasia Examination, 3rd ed.; BDAE-3; Lippincott Williams & Wilkins: Philadelphia, PA, USA, 2001.

46. Ota, H.; Fujii, T.; Suzuki, K.; Fukatsu, R.; Yamadori, A. Dissociation of Body-Centered and Stimulus-Centered Representations in Unilateral Neglect. Neurology 2001, 57, 2064–2069.

47. Banse, R.; Scherer, K.R. Acoustic Profiles in Vocal Emotion Expression. J. Pers. Soc. Psychol. 1996, 70, 614–636.

48. Rorden, C.; Bonilha, L.; Fridriksson, J.; Bender, B.; Karnath, H.-O. Age-Specific CT and MRI Templates for Spatial Normalization. NeuroImage 2012, 61, 957–965.

49. Oishi, K.; Faria, A.; Jiang, H.; Li, X.; Akhter, K.; Zhang, J.; Hsu, J.T.; Miller, M.I.; van Zijl, P.C.M.; Albert, M.; et al. Atlas-Based Whole Brain White Matter Analysis Using Large Deformation Diffeomorphic Metric Mapping: Application to Normal Elderly and Alzheimer’s Disease Participants. NeuroImage 2009, 46, 486–499.

50. Jiang, H.; van Zijl, P.C.M.; Kim, J.; Pearlson, G.D.; Mori, S. DtiStudio: Resource Program for Diffusion Tensor Computation and Fiber Bundle Tracking. Comput. Methods Programs Biomed. 2006, 81, 106–116.

51. Pumphrey, J.D.; Ramani, S.; Islam, T.; Berard, J.A.; Seegobin, M.; Lymer, J.M.; Freedman, M.S.; Wang, J.; Walker, L.A.S. Assessing Multimodal Emotion Recognition in Multiple Sclerosis with a Clinically Accessible Measure. Mult. Scler. Relat. Disord. 2024, 86, 105603.

52. Kraemer, M.; Herold, M.; Uekermann, J.; Kis, B.; Daum, I.; Wiltfang, J.; Berlit, P.; Diehl, R.R.; Abdel-Hamid, M. Perception of Affective Prosody in Patients at an Early Stage of Relapsing-Remitting Multiple Sclerosis. J. Neuropsychol. 2013, 7, 91–106.

53. Thompson, W.F.; Marin, M.M.; Stewart, L. Reduced Sensitivity to Emotional Prosody in Congenital Amusia Rekindles the Musical Protolanguage Hypothesis. Proc. Natl. Acad. Sci. USA 2012, 109, 19027–19032.

54. Catani, M.; Thiebaut de Schotten, M. A Diffusion Tensor Imaging Tractography Atlas for Virtual in Vivo Dissections. Cortex 2008, 44, 1105–1132.

55. Wu, Y.; Sun, D.; Wang, Y.; Wang, Y. Subcomponents and Connectivity of the Inferior Fronto-Occipital Fasciculus Revealed by Diffusion Spectrum Imaging Fiber Tracking. Front. Neuroanat. 2016, 10, 88.

56. Ethofer, T.; Bretscher, J.; Wiethoff, S.; Bisch, J.; Schlipf, S.; Wildgruber, D.; Kreifelts, B. Functional Responses and Structural Connections of Cortical Areas for Processing Faces and Voices in the Superior Temporal Sulcus. NeuroImage 2013, 76, 45–56.

57. Pierce, J.E.; Péron, J. The Basal Ganglia and the Cerebellum in Human Emotion. Soc. Cogn. Affect. Neurosci. 2020, 15, 599–613.

58. Efthymiopoulou, E.; Kasselimis, D.S.; Ghika, A.; Kyrozis, A.; Peppas, C.; Evdokimidis, I.; Petrides, M.; Potagas, C. The Effect of Cortical and Subcortical Lesions on Spontaneous Expression of Memory-Encoded and Emotionally Infused Information: Evidence for a Role of the Ventral Stream. Neuropsychologia 2017, 101, 115–120.

59. Maldonado, I.L.; Destrieux, C.; Ribas, E.C.; Siqueira de Abreu Brito Guimarães, B.; Cruz, P.P.; Duffau, H. Composition and Organization of the Sagittal Stratum in the Human Brain: A Fiber Dissection Study. J. Neurosurg. 2021, 135, 1214–1222.

60. Davis, C.; Oishi, K.; Faria, A.; Hsu, J.; Gomez, Y.; Mori, S.; Hillis, A.E. White Matter Tracts Critical for Recognition of Sarcasm. Neurocase 2016, 22, 22–29.

61. Shahid, H.; Sebastian, R.; Schnur, T.T.; Hanayik, T.; Wright, A.; Tippett, D.C.; Fridriksson, J.; Rorden, C.; Hillis, A.E. Important Considerations in Lesion-Symptom Mapping: Illustrations from Studies of Word Comprehension. Hum. Brain Mapp. 2017, 38, 2990–3000.

62. Rauschecker, J.P.; Scott, S.K. Maps and Streams in the Auditory Cortex: Nonhuman Primates Illuminate Human Speech Processing. Nat. Neurosci. 2009, 12, 718–724.

63. Zündorf, I.C.; Lewald, J.; Karnath, H.-O. Testing the Dual-Pathway Model for Auditory Processing in Human Cortex. NeuroImage 2016, 124, 672–681.

64. Herbet, G.; Lafargue, G.; Bonnetblanc, F.; Moritz-Gasser, S.; Menjot de Champfleur, N.; Duffau, H. Inferring a Dual-Stream Model of Mentalizing from Associative White Matter Fibres Disconnection. Brain 2014, 137, 944–959.

65. Barbey, A.K.; Colom, R.; Grafman, J. Distributed Neural System for Emotional Intelligence Revealed by Lesion Mapping. Soc. Cogn. Affect. Neurosci. 2014, 9, 265–272.

66. Fridriksson, J.; Yourganov, G.; Bonilha, L.; Basilakos, A.; Den Ouden, D.-B.; Rorden, C. Revealing the Dual Streams of Speech Processing. Proc. Natl. Acad. Sci. USA 2016, 113, 15108–15113.

67. Friederici, A.D. The Brain Basis of Language Processing: From Structure to Function. Physiol. Rev. 2011, 91, 1357–1392.

68. Saur, D.; Kreher, B.W.; Schnell, S.; Kümmerer, D.; Kellmeyer, P.; Vry, M.-S.; Umarova, R.; Musso, M.; Glauche, V.; Abel, S.; et al. Ventral and Dorsal Pathways for Language. Proc. Natl. Acad. Sci. USA 2008, 105, 18035–18040.

69. Zhang, J.; Zhong, S.; Zhou, L.; Yu, Y.; Tan, X.; Wu, M.; Sun, P.; Zhang, W.; Li, J.; Cheng, R.; et al. Correlations between Dual-Pathway White Matter Alterations and Language Impairment in Patients with Aphasia: A Systematic Review and Meta-Analysis. Neuropsychol. Rev. 2021, 31, 402–418.

70. Kern, K.C.; Wright, C.B.; Leigh, R. Global Changes in Diffusion Tensor Imaging during Acute Ischemic Stroke and Post-Stroke Cognitive Performance. J. Cereb. Blood Flow Metab. 2022, 42, 1854–1866.

71. Yu, X.; Yin, X.; Hong, H.; Wang, S.; Jiaerken, Y.; Zhang, F.; Pasternak, O.; Zhang, R.; Yang, L.; Lou, M.; et al. Increased Extracellular Fluid Is Associated with White Matter Fiber Degeneration in CADASIL: In Vivo Evidence from Diffusion Magnetic Resonance Imaging. Fluids Barriers CNS 2021, 18, 29.

72. Harnish, S.M.; Schwen Blackett, D.; Zezinka, A.; Lundine, J.P.; Pan, X. Influence of Working Memory on Stimulus Generalization in Anomia Treatment: A Pilot Study. J. Neurolinguist. 2018, 48, 142–156.

73. Harnish, S.M.; Lundine, J.P. Nonverbal Working Memory as a Predictor of Anomia Treatment Success. Am. J. Speech-Lang. Pathol. 2015, 24, S880–S894.

74. Stockbridge, M.D.; Sheppard, S.M.; Keator, L.M.; Murray, L.L.; Blake, M.L.; Right Hemisphere Disorders Working Group, Evidence-Based Clinical Research Committee, Academy of Neurological Communication Disorders and Sciences. Aprosodia Subsequent to Right Hemisphere Brain Damage: A Systematic Review and Meta-Analysis. J. Int. Neuropsychol. Soc. 2021, 28, 709–735.

75. Lehman Blake, M.; Frymark, T.; Venedictov, R. An Evidence-Based Systematic Review on Communication Treatments for Individuals with Right Hemisphere Brain Damage. Am. J. Speech-Lang. Pathol. 2013, 22, 146–160.

| Task | Acute (n = 24) | Subacute (n = 13) | Chronic (n = 23) | Controls (n = 57) |
|---|---|---|---|---|
| Word prosody recognition | 64.58 *† ± 14.90 | 71.43 * ± 12.43 | 71.56 † ± 12.97 | 77.9 ± 8.19 |
| Recognition of prosodic features (Stage 1) | 77.43 * ± 16.62 | 85.86 ± 11.15 | 82.25 ± 16.96 | 87.96 ± 11.24 |
| Matching features with emotions (Stage 2) | 68.84 ± 14.90 | 72.62 ± 15.48 | 75.00 ± 13.76 | 75.69 ± 11.64 |
| Emotion synonym matching (Stage 3) | 90.87 * ± 7.20 | 90.77 * ± 8.98 | 92.61 * ± 5.74 | 96.76 ± 4.47 |
| Emotional facial expression recognition (domain-general) | 83.42 * ± 10.04 | 88.39 ± 9.59 | 85.34 ± 12.13 | 89.79 ± 5.71 |

| Task | Cut-Off Score (%) | Impaired: Acute (%) | Impaired: Subacute (%) | Impaired: Chronic (%) |
|---|---|---|---|---|
| Word prosody recognition | 63.33 | 41.67 | 14.29 | 21.74 |
| Recognition of prosodic features (Stage 1) | 64.58 | 33.33 | 7.14 | 21.74 |
| Matching features with emotions (Stage 2) | 58.33 | 29.17 | 21.43 | 21.74 |
| Emotion synonym matching (Stage 3) | 87.50 | 33.33 | 21.43 | 21.74 |
| Emotional facial expression recognition (domain-general) | 80.00 | 29.17 | 28.57 | 34.78 |

| Model | Independent variable | Estimate | SE | t | p |
|---|---|---|---|---|---|
| Base | (Intercept) | 45.568 | 12.683 | 3.593 | 0.002 |
| | age | −0.460 | 0.192 | −2.390 | 0.027 |
| | education | 2.802 | 0.742 | 3.778 | 0.001 |
| Base + WML:EC | (Intercept) | 38.582 | 16.069 | 2.401 | 0.027 |
| | acute lesion volume | 0.117 | 0.121 | 0.965 | 0.347 |
| | EC | −0.316 | 0.136 | −2.325 | 0.032 |
| | age | −0.452 | 0.270 | −1.676 | 0.111 |
| | education | 3.297 | 0.845 | 3.900 | 0.001 |
| Base + WML:IFOF | (Intercept) | 41.351 | 15.857 | 2.608 | 0.018 |
| | acute lesion volume | 0.039 | 0.075 | 0.526 | 0.606 |
| | IFOF | −0.237 | 0.098 | −2.408 | 0.027 |
| | age | −0.495 | 0.156 | −3.168 | 0.005 |
| | education | 3.254 | 0.980 | 3.322 | 0.004 |
| Base + WML:SLF | (Intercept) | 43.233 | 14.569 | 2.967 | 0.008 |
| | acute lesion volume | −0.097 | 0.079 | −1.229 | 0.235 |
| | SLF | 0.169 | 0.069 | 2.443 | 0.025 |
| | age | −0.428 | 0.271 | −1.575 | 0.133 |
| | education | 2.872 | 0.826 | 3.476 | 0.003 |
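
As a quick consistency check on the regression table above, each reported t value should approximately equal its estimate divided by its standard error, the usual Wald statistic for a regression coefficient. The Python sketch below recomputes that ratio for a few rows copied from the table. The function names (`t_statistic`, `max_discrepancy`) and the 0.05 tolerance are illustrative assumptions, not part of the authors' analysis, and small gaps are expected because the published values are rounded to three decimals.

```python
# Rows copied from the regression table above:
# (model, term, estimate, standard error, reported t)
ROWS = [
    ("Base", "(Intercept)", 45.568, 12.683, 3.593),
    ("Base", "age", -0.460, 0.192, -2.390),
    ("Base", "education", 2.802, 0.742, 3.778),
    ("Base + WML:EC", "EC", -0.316, 0.136, -2.325),
    ("Base + WML:IFOF", "IFOF", -0.237, 0.098, -2.408),
    ("Base + WML:SLF", "SLF", 0.169, 0.069, 2.443),
]

# Assumed allowance for rounding in the published table (not from the paper).
TOLERANCE = 0.05


def t_statistic(estimate: float, se: float) -> float:
    """Wald t statistic: coefficient estimate divided by its standard error."""
    return estimate / se


def max_discrepancy(rows) -> float:
    """Largest absolute gap between recomputed and reported t values."""
    return max(abs(t_statistic(est, se) - t_rep) for _, _, est, se, t_rep in rows)


if __name__ == "__main__":
    for model, term, est, se, t_rep in ROWS:
        t_new = t_statistic(est, se)
        print(f"{model:16s} {term:12s} recomputed t = {t_new:7.3f} (reported {t_rep:7.3f})")
    print("all within tolerance:", max_discrepancy(ROWS) <= TOLERANCE)
```

The same check can be applied to the remaining regression tables by swapping in their rows; a gap much larger than the rounding error would suggest a transcription problem in that row.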

| Model | Independent variable | Estimate | SE | t | p |
|---|---|---|---|---|---|
| Base | (Intercept) | 70.268 | 20.266 | 3.467 | 0.002 |
| | age | −0.217 | 0.236 | −0.918 | 0.370 |
| | education | 1.218 | 1.078 | 1.130 | 0.272 |
| Base + WML:SLF | (Intercept) | 81.446 | 6.214 | 13.107 | <0.001 |
| | acute lesion volume | −0.163 | 0.169 | −0.962 | 0.347 |
| | SLF | −2.068 | 0.973 | −2.127 | 0.046 |
| Base + WML:UF | (Intercept) | 77.607 | 4.547 | 17.067 | <0.001 |
| | acute lesion volume | −0.130 | 0.075 | −1.731 | 0.098 |
| | UF | 0.294 | 0.085 | 3.456 | 0.002 |
| Base + WML:SS | (Intercept) | 83.352 | 6.184 | 13.478 | <0.001 |
| | acute lesion volume | −0.101 | 0.121 | −0.842 | 0.409 |
| | SS | −3.373 | 0.782 | −4.312 | <0.001 |
| Base + WML:BCC | (Intercept) | 76.241 | 4.816 | 15.832 | <0.001 |
| | acute lesion volume | 0.047 | 0.089 | 0.532 | 0.600 |
| | BCC | 0.462 | 0.151 | 3.062 | 0.006 |
| Base + WML:GCC | (Intercept) | 76.226 | 4.813 | 15.836 | <0.001 |
| | acute lesion volume | 0.048 | 0.088 | 0.546 | 0.591 |
| | GCC | 24.178 | 7.582 | 3.189 | 0.004 |

| Model | Independent variable | Estimate | SE | t | p |
|---|---|---|---|---|---|
| Base | (Intercept) | 48.369 | 15.421 | 3.137 | 0.005 |
| | age | −0.315 | 0.118 | −2.682 | 0.015 |
| | education | 2.446 | 0.911 | 2.685 | 0.015 |
| Base + WML:SLF | (Intercept) | 41.152 | 13.440 | 3.062 | 0.007 |
| | acute lesion volume | −0.102 | 0.072 | −1.403 | 0.179 |
| | SLF | 0.328 | 0.078 | 4.211 | 0.001 |
| | age | −0.158 | 0.098 | −1.611 | 0.126 |
| | education | 2.318 | 0.986 | 2.350 | 0.031 |

| Model | Independent variable | Estimate | SE | t | p |
|---|---|---|---|---|---|
| Base | (Intercept) | 67.867 | 5.908 | 11.487 | <0.001 |
| | age | −0.001 | 0.062 | −0.020 | 0.984 |
| | education | 1.469 | 0.314 | 4.685 | <0.001 |
| Base + WML:SLF | (Intercept) | 67.965 | 5.522 | 12.309 | <0.001 |
| | acute lesion volume | 0.027 | 0.021 | 1.316 | 0.207 |
| | SLF | −0.080 | 0.024 | −3.319 | 0.004 |
| | education | 1.468 | 0.314 | 4.679 | <0.001 |
| Base + WML:BCC | (Intercept) | 66.286 | 5.473 | 12.112 | <0.001 |
| | acute lesion volume | −0.013 | 0.017 | −0.774 | 0.450 |
| | BCC | 0.250 | 0.054 | 4.594 | <0.001 |
| | education | 1.558 | 0.316 | 4.933 | <0.001 |
| Base + WML:GCC | (Intercept) | 66.274 | 5.471 | 12.114 | <0.001 |
| | acute lesion volume | −0.013 | 0.017 | −0.757 | 0.460 |
| | GCC | 12.995 | 2.746 | 4.732 | <0.001 |
| | education | 1.558 | 0.316 | 4.935 | <0.001 |

| Model | Independent variable | Estimate | SE | t | p |
|---|---|---|---|---|---|
| Base | (Intercept) | 65.169 | 7.786 | 8.370 | <0.001 |
| | age | −0.062 | 0.133 | −0.464 | 0.649 |
| | education | 1.347 | 0.543 | 2.479 | 0.026 |
| Base + WML:EC | (Intercept) | 59.538 | 8.281 | 7.190 | <0.001 |
| | acute lesion volume | 0.184 | 0.058 | 3.178 | 0.007 |
| | EC | −0.261 | 0.101 | −2.594 | 0.021 |
| | education | 1.414 | 0.494 | 2.859 | 0.013 |
| Base + WML:IFOF | (Intercept) | 60.159 | 7.867 | 7.647 | <0.001 |
| | acute lesion volume | 0.128 | 0.035 | 3.647 | 0.003 |
| | IFOF | −0.213 | 0.068 | −3.132 | 0.007 |
| | education | 1.389 | 0.488 | 2.845 | 0.013 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jackson, M.S.; Uchida, Y.; Sheppard, S.M.; Oishi, K.; Crainiceanu, C.; Hillis, A.E.; Durfee, A.Z. Elucidating White Matter Contributions to the Cognitive Architecture of Affective Prosody Recognition: Evidence from Right Hemisphere Stroke. Brain Sci. 2025, 15, 769. https://doi.org/10.3390/brainsci15070769
