Application of Graph-Theoretic Methods Using ERP Components and Wavelet Coherence on Emotional and Cognitive EEG Data

Abstract

1. Introduction

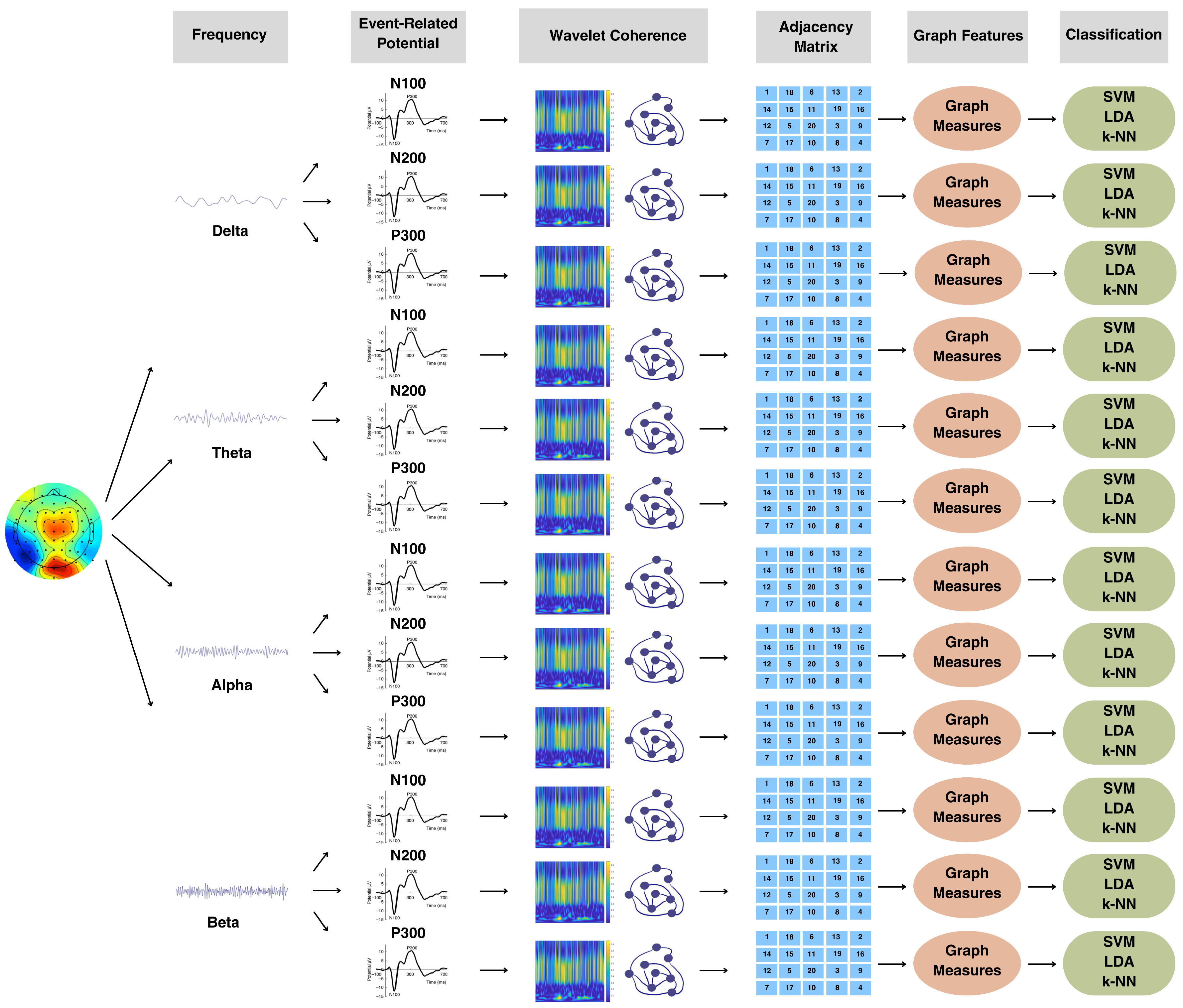

2. Materials and Methods

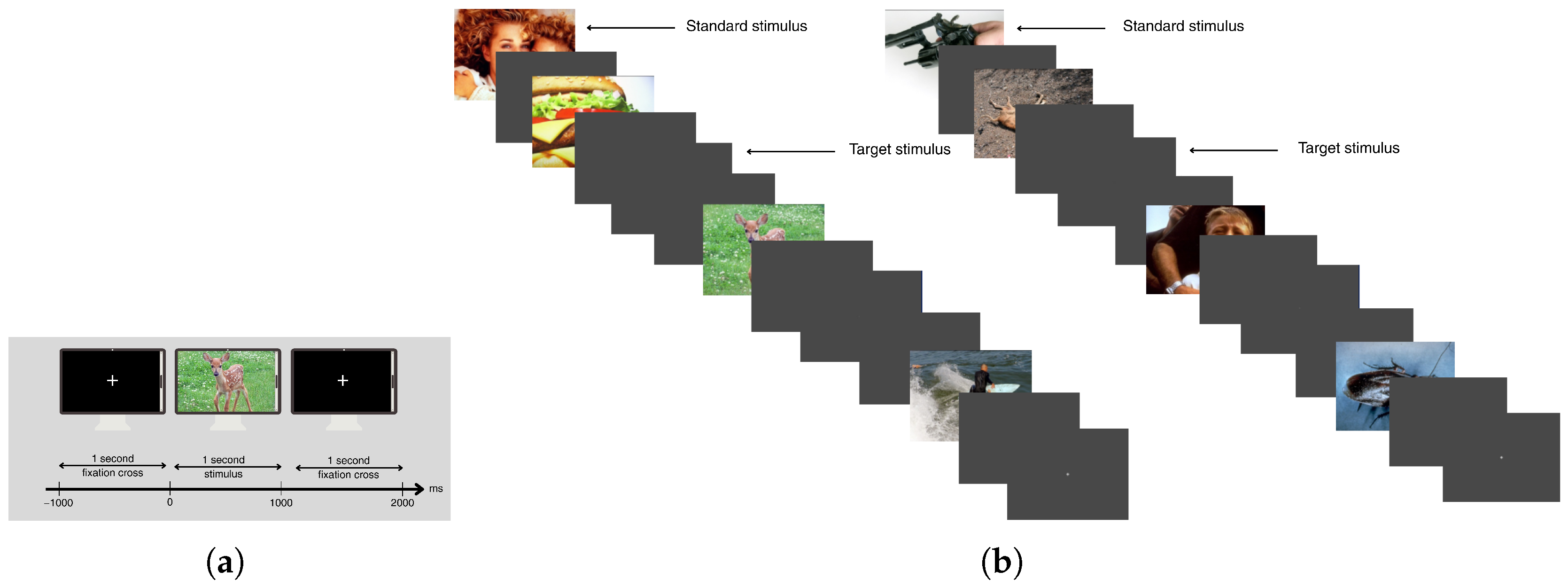

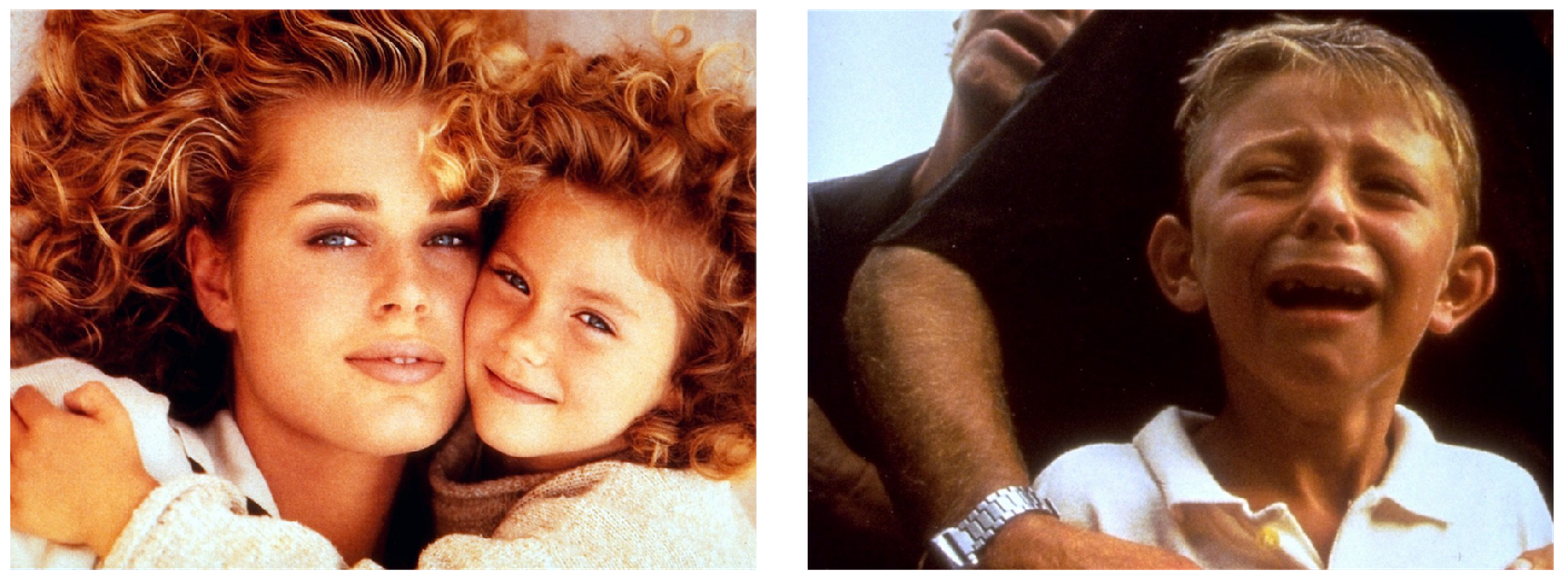

2.1. Emotional Data: Subjects, Data Acquisition, and Experimental Paradigm

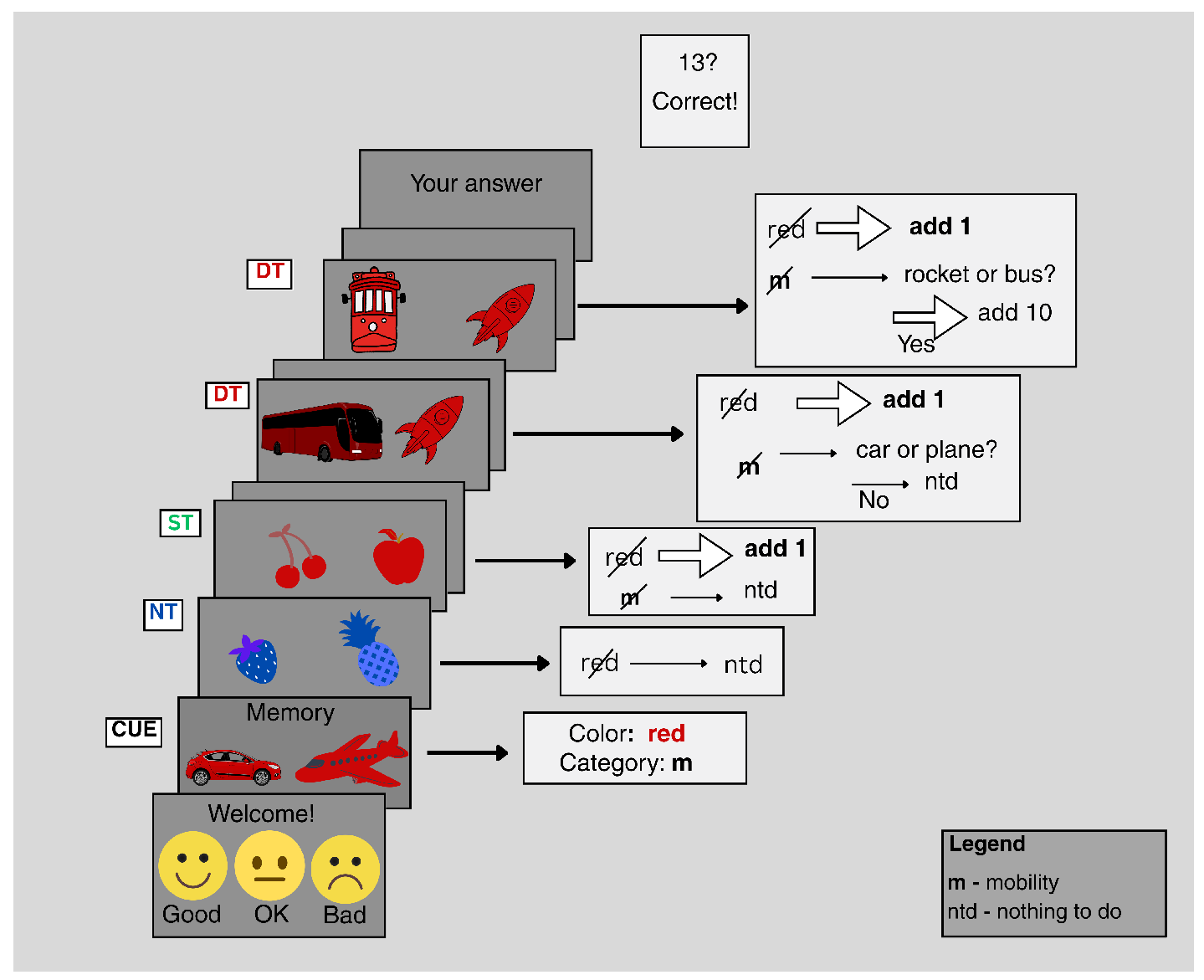

2.2. Cognitive Data: Subjects, Data Acquisition, and Experimental Paradigm

2.3. Preprocessing of Emotional Data

2.4. Preprocessing of Cognitive Data

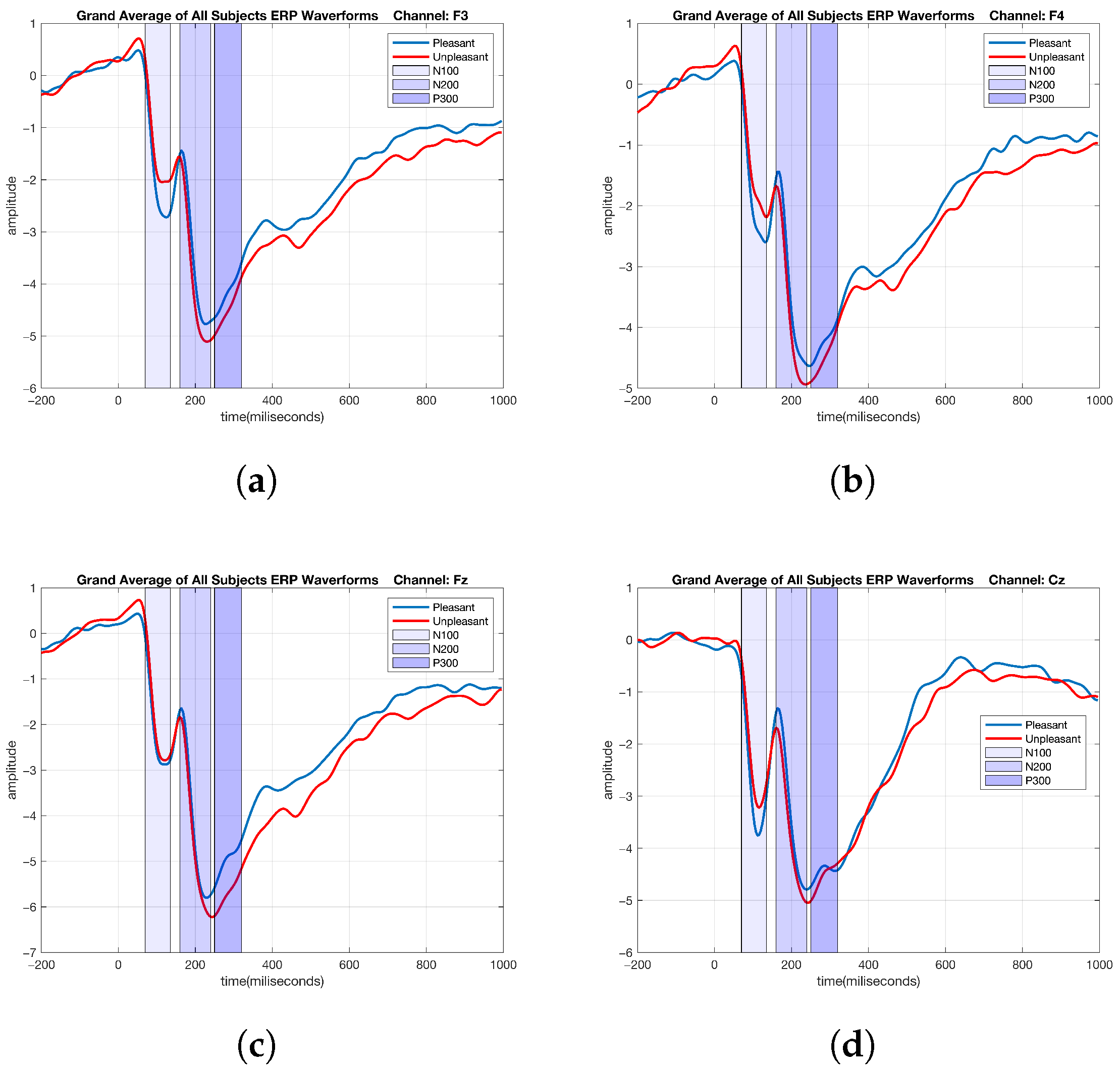

2.5. ERP Analysis of Emotional Data

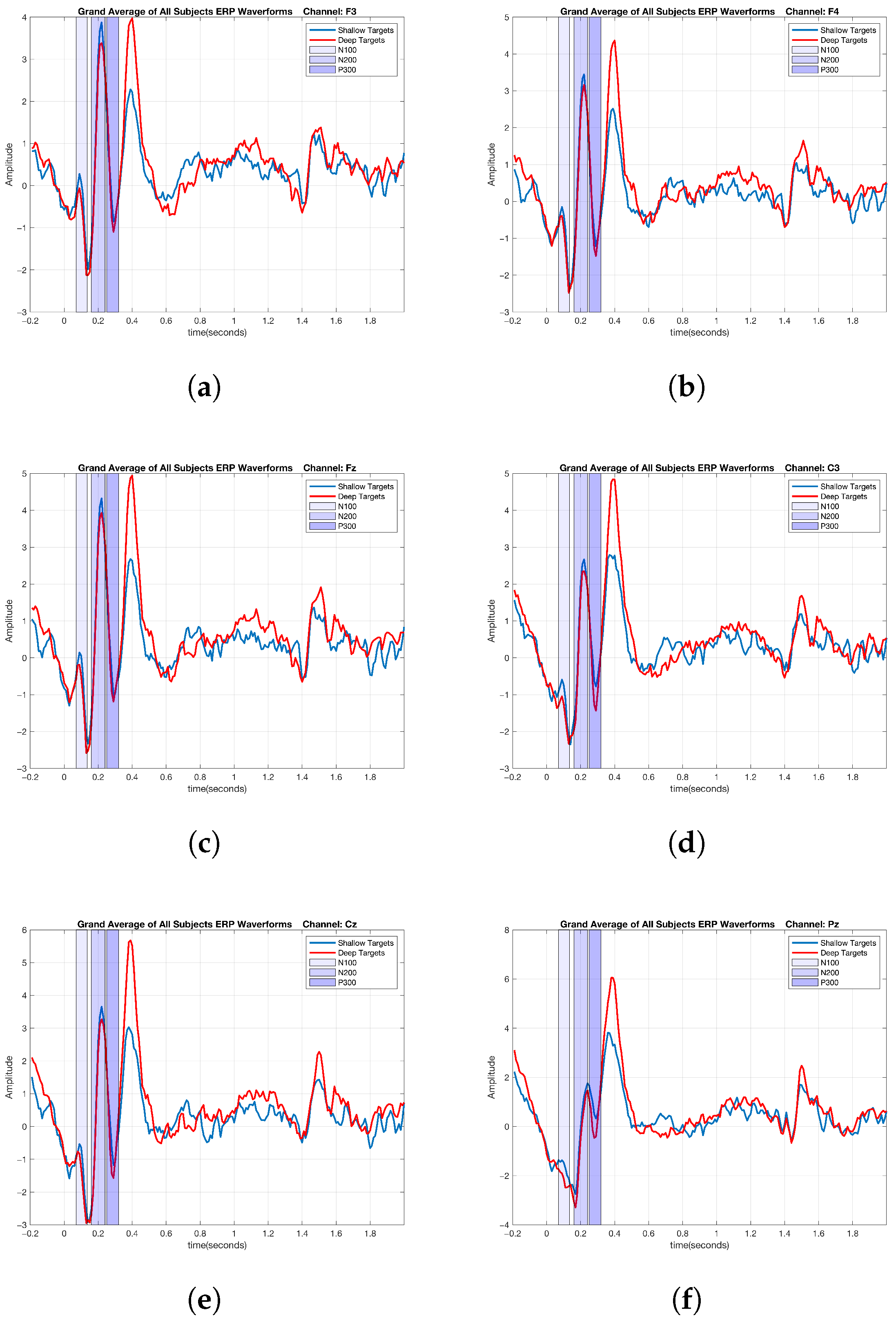

2.6. ERP Analysis of Cognitive Data

2.7. Wavelet Coherence Analysis of Emotional Data

3. Results

3.1. Graph-Theoretic-Based Analysis of Emotional Data

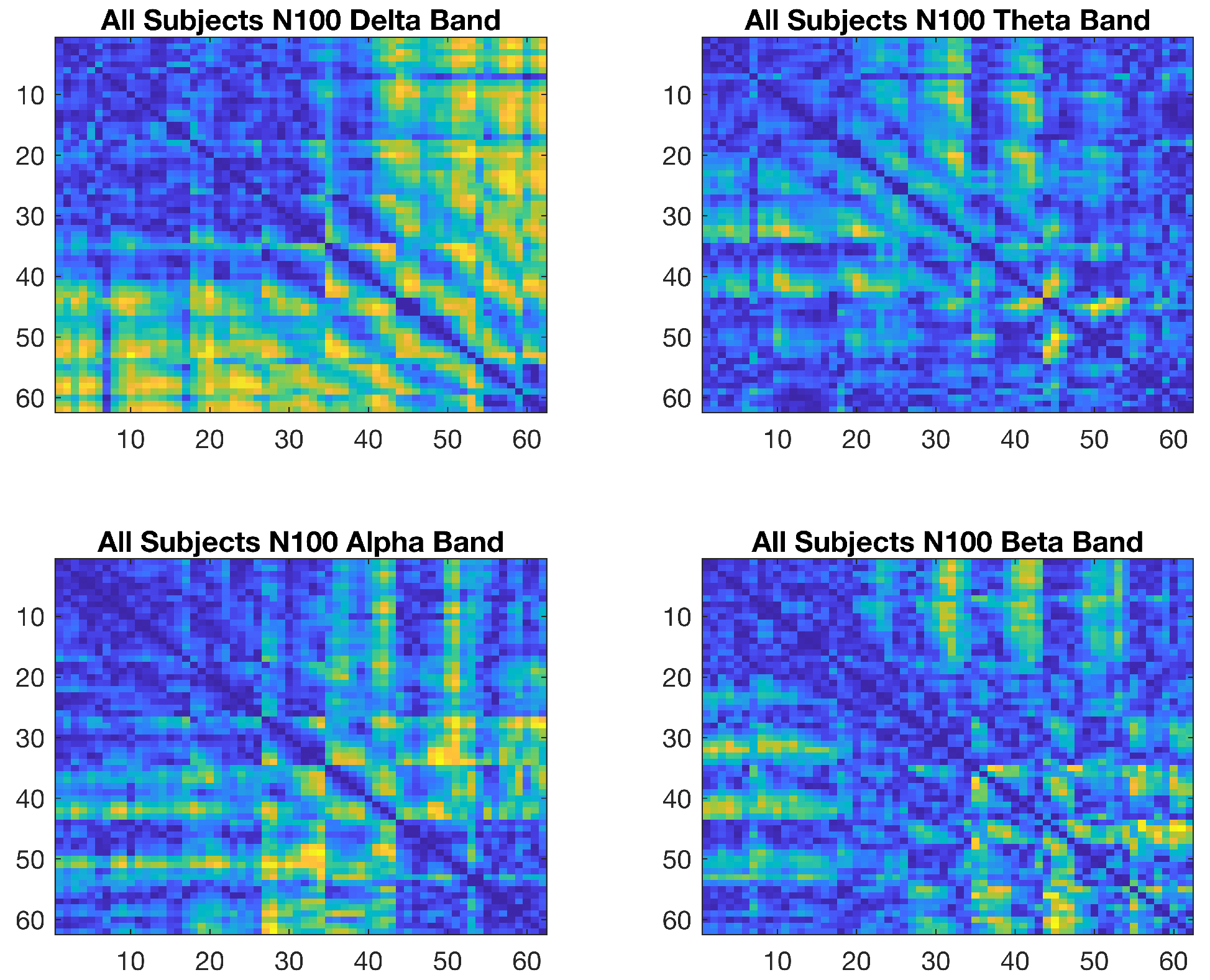

3.2. Graph-Theoretic-Based Analysis of Cognitive Data

4. Discussion

4.1. Comparison with Related Work

4.2. Interpretation of Key Findings

4.3. Methodological Implications

5. Conclusions

5.1. Future Work

5.2. Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AgCl | Silver chloride |
| BCI | Brain–computer interface |
| DEAP | Database for Emotion Analysis using Physiological Signals |
| DT | Deep target |
| ECG | Electrocardiography |
| EEG | Electroencephalography |
| EMG | Electromyography |
| EOG | Electro-oculography |
| ERP | Event-related potential |
| FIR | Finite impulse response |
| fMRI | Functional magnetic resonance imaging |
| ICA | Independent component analysis |
| K-NN | K-nearest neighbor |
| LDA | Linear discriminant analysis |
| MARA | Multiple artifact rejection algorithm |
| MEG | Magnetoencephalography |
| MRMR | Minimum redundancy, maximum relevance |
| NT | Non-target |
| N100 | Negative 100 |
| N200 | Negative 200 |
| PLV | Phase-locking value |
| P300 | Positive 300 |
| RBF | Radial basis function |
| SEED | The SJTU Emotion EEG Dataset (SEED) |
| ST | Shallow target |
| SVM | Support vector machine |

| Subject | t-Test Accuracy (%) | t-Test Sensitivity (%) | t-Test Specificity (%) | t-Test F1 Score (%) | ReliefF Accuracy (%) | ReliefF Sensitivity (%) | ReliefF Specificity (%) | ReliefF F1 Score (%) |
|---|---|---|---|---|---|---|---|---|
| Subject-1 | 87.5 | 89.0 | 86.1 | 87.9 | 91.9 | 92.4 | 90.5 | 91.6 |
| Subject-2 | 87.3 | 89.1 | 85.4 | 87.6 | 90.7 | 90.6 | 88.6 | 89.8 |
| Subject-3 | 92.4 | 95.1 | 89.6 | 92.6 | 93.2 | 94.2 | 91.4 | 92.9 |
| Subject-4 | 84.6 | 85.2 | 84.3 | 84.9 | 89.8 | 91.9 | 88.6 | 90.4 |
| Subject-5 | 96.1 | 96.7 | 95.5 | 96.2 | 96.5 | 97.7 | 94.0 | 96.0 |
| Subject-6 | 91.0 | 92.8 | 89.1 | 91.2 | 91.1 | 89.7 | 90.2 | 90.0 |
| Subject-7 | 90.7 | 92.2 | 89.4 | 90.9 | 93.0 | 93.1 | 91.6 | 92.4 |
| Subject-8 | 88.3 | 83.5 | 93.4 | 87.9 | 90.6 | 88.9 | 93.4 | 91.0 |
| Subject-9 | 91.1 | 91.9 | 90.4 | 91.2 | 91.5 | 92.7 | 90.6 | 91.8 |
| Subject-10 | 91.2 | 90.1 | 92.4 | 91.2 | 89.5 | 88.4 | 90.7 | 89.5 |
| Subject-11 | 84.3 | 81.5 | 86.8 | 83.8 | 90.4 | 89.2 | 92.9 | 90.9 |
| Subject-12 | 93.0 | 91.5 | 94.6 | 92.9 | 95.7 | 94.6 | 96.0 | 95.3 |
| Subject-13 | 91.6 | 95.8 | 87.2 | 91.8 | 92.3 | 95.0 | 90.2 | 92.8 |
| Average | 89.9 | 90.3 | 89.6 | 90.0 | 91.8 | 92.2 | 91.4 | 91.9 |
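
For readers less familiar with the four reported measures, the sketch below shows how accuracy, sensitivity, specificity, and F1 score are conventionally derived from the counts of a binary confusion matrix. It assumes the standard textbook definitions; the function name `binary_metrics` and the example counts are hypothetical and are not taken from the study's data or code.

```python
def binary_metrics(tp: int, fn: int, tn: int, fp: int) -> dict[str, float]:
    """Return accuracy, sensitivity, specificity and F1 score in percent.

    tp/fn: positive-class trials classified correctly/incorrectly.
    tn/fp: negative-class trials classified correctly/incorrectly.
    """
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both classes must contain at least one trial")

    total = tp + fn + tn + fp
    accuracy = (tp + tn) / total        # fraction of all trials classified correctly
    sensitivity = tp / (tp + fn)        # true-positive rate (recall)
    specificity = tn / (tn + fp)        # true-negative rate
    f1 = 2 * tp / (2 * tp + fp + fn)    # harmonic mean of precision and recall

    return {
        "accuracy": 100 * accuracy,
        "sensitivity": 100 * sensitivity,
        "specificity": 100 * specificity,
        "f1": 100 * f1,
    }


# Hypothetical counts, chosen only to illustrate the arithmetic.
example = binary_metrics(tp=45, fn=5, tn=40, fp=10)
for name, value in example.items():
    print(f"{name}: {value:.1f}%")
```

Because F1 depends on precision rather than specificity, it can differ from accuracy even when the two classes are balanced, which is why the tables list it as a separate column.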

| Subject | t-Test Accuracy (%) | t-Test Sensitivity (%) | t-Test Specificity (%) | t-Test F1 Score (%) | ReliefF Accuracy (%) | ReliefF Sensitivity (%) | ReliefF Specificity (%) | ReliefF F1 Score (%) |
|---|---|---|---|---|---|---|---|---|
| Subject-1 | 82.4 | 81.7 | 83.3 | 82.6 | 90.2 | 91.0 | 89.2 | 90.3 |
| Subject-2 | 87.4 | 89.5 | 85.5 | 87.9 | 85.8 | 88.0 | 83.6 | 86.3 |
| Subject-3 | 90.4 | 92.7 | 88.5 | 90.9 | 90.8 | 91.9 | 89.6 | 91.0 |
| Subject-4 | 89.9 | 89.1 | 90.7 | 89.9 | 91.3 | 90.2 | 92.8 | 91.5 |
| Subject-5 | 93.6 | 95.0 | 92.1 | 93.7 | 92.2 | 93.3 | 90.9 | 92.3 |
| Subject-6 | 88.6 | 88.6 | 88.0 | 88.5 | 88.0 | 85.8 | 90.3 | 87.9 |
| Subject-7 | 91.6 | 89.9 | 93.4 | 91.6 | 85.8 | 85.5 | 86.2 | 86.0 |
| Subject-8 | 69.2 | 71.4 | 70.2 | 70.9 | 88.0 | 88.9 | 87.2 | 88.4 |
| Subject-9 | 80.0 | 82.3 | 83.1 | 80.3 | 87.7 | 89.1 | 86.3 | 88.1 |
| Subject-10 | 75.6 | 77.0 | 78.1 | 76.2 | 91.1 | 91.2 | 91.0 | 91.3 |
| Subject-11 | 78.8 | 80.2 | 81.0 | 79.8 | 89.0 | 88.7 | 89.7 | 89.3 |
| Subject-12 | 75.2 | 77.4 | 76.5 | 76.1 | 86.3 | 83.4 | 89.3 | 86.2 |
| Subject-13 | 73.1 | 75.2 | 74.7 | 74.1 | 87.2 | 85.4 | 89.1 | 89.6 |
| Subject-14 | 87.9 | 86.3 | 89.2 | 87.7 | 89.3 | 89.8 | 89.1 | 89.6 |
| Subject-15 | 88.4 | 82.5 | 94.4 | 87.8 | 85.2 | 85.2 | 85.2 | 85.3 |
| Average | 83.5 | 83.9 | 84.6 | 83.9 | 88.5 | 88.5 | 88.6 | 88.7 |
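
As a reading aid, the following sketch recomputes the "Average" entries of the two accuracy columns in the 15-subject table above and counts the subjects for whom ReliefF yields the higher accuracy. The per-subject values are copied from that table; the variable names and the paired per-subject comparison are illustrative assumptions and are not an analysis reported by the authors.

```python
from statistics import mean

# Per-subject accuracies (%) for Subject-1 to Subject-15, copied from the table above.
t_test_acc = [82.4, 87.4, 90.4, 89.9, 93.6, 88.6, 91.6, 69.2,
              80.0, 75.6, 78.8, 75.2, 73.1, 87.9, 88.4]
relieff_acc = [90.2, 85.8, 90.8, 91.3, 92.2, 88.0, 85.8, 88.0,
               87.7, 91.1, 89.0, 86.3, 87.2, 89.3, 85.2]

# Column means rounded to one decimal, as in the "Average" row.
mean_t_test = round(mean(t_test_acc), 1)
mean_relieff = round(mean(relieff_acc), 1)

# Paired per-subject differences (ReliefF minus t-test).
differences = [r - t for t, r in zip(t_test_acc, relieff_acc)]
n_relieff_higher = sum(d > 0 for d in differences)

print(f"Mean accuracy, t-test selection:  {mean_t_test}%")
print(f"Mean accuracy, ReliefF selection: {mean_relieff}%")
print(f"Subjects with higher ReliefF accuracy: {n_relieff_higher} of {len(differences)}")
```

Looking at the paired differences rather than only the column means makes it visible that the average gain is driven largely by the subjects with the lowest t-test accuracies, while several subjects score higher with t-test selection.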

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Deniz, S.M.; Ademoglu, A.; Duru, A.D.; Demiralp, T. Application of Graph-Theoretic Methods Using ERP Components and Wavelet Coherence on Emotional and Cognitive EEG Data. Brain Sci. 2025, 15, 714. https://doi.org/10.3390/brainsci15070714
