Epileptic Seizure Detection Using Machine Learning: A Systematic Review and Meta-Analysis

Abstract

1. Introduction

2. Materials and Methods

2.1. Registration

2.2. Search Strategy

2.3. Eligibility Criteria

2.3.1. Inclusion Criteria

2.3.2. Exclusion Criteria

2.4. Study Screening and Data Extraction

2.5. Quality Assessment

2.6. Data Analysis

3. Results

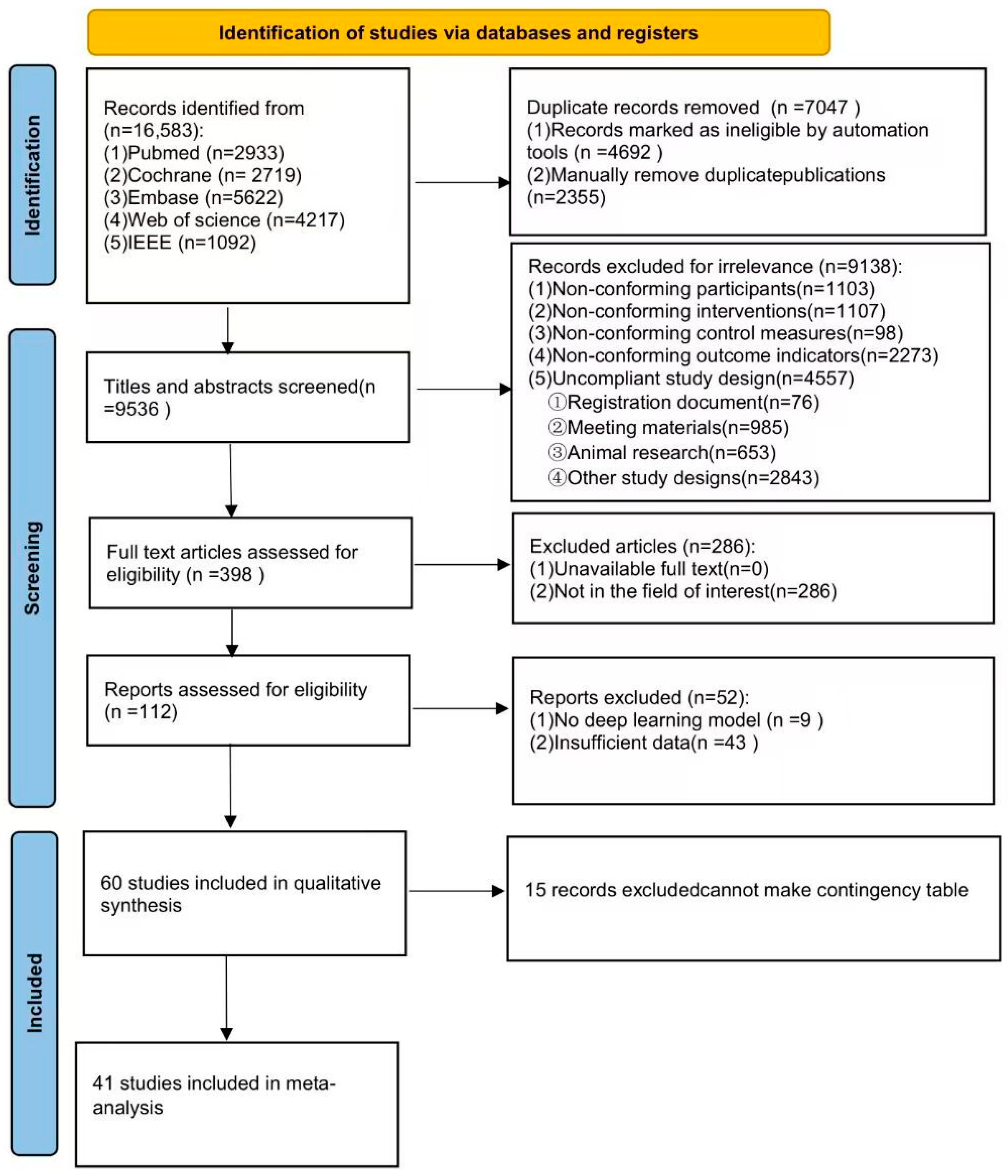

3.1. Search Results

3.2. Study Characteristics

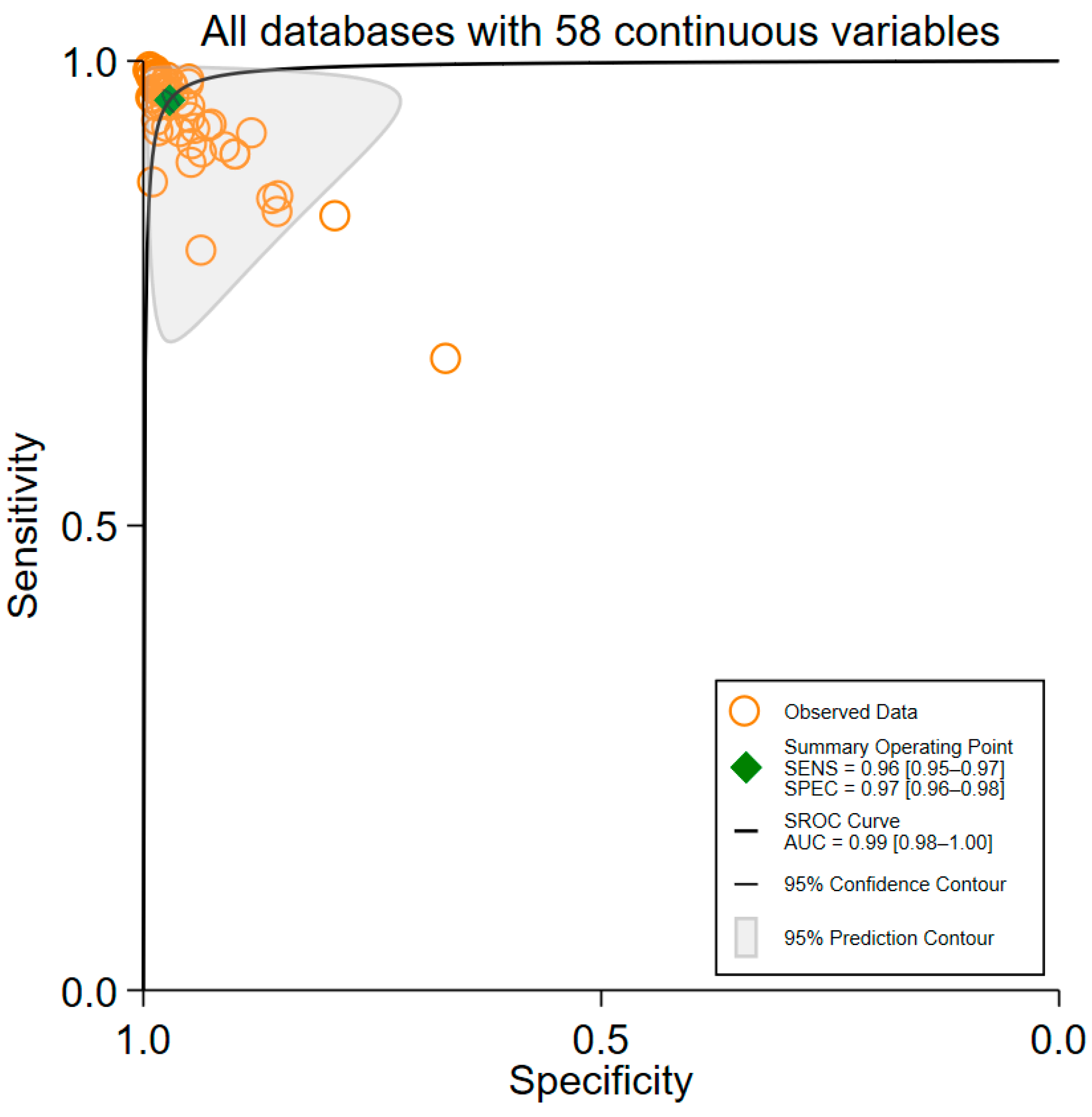

3.3. Overall Performance of ML Algorithms

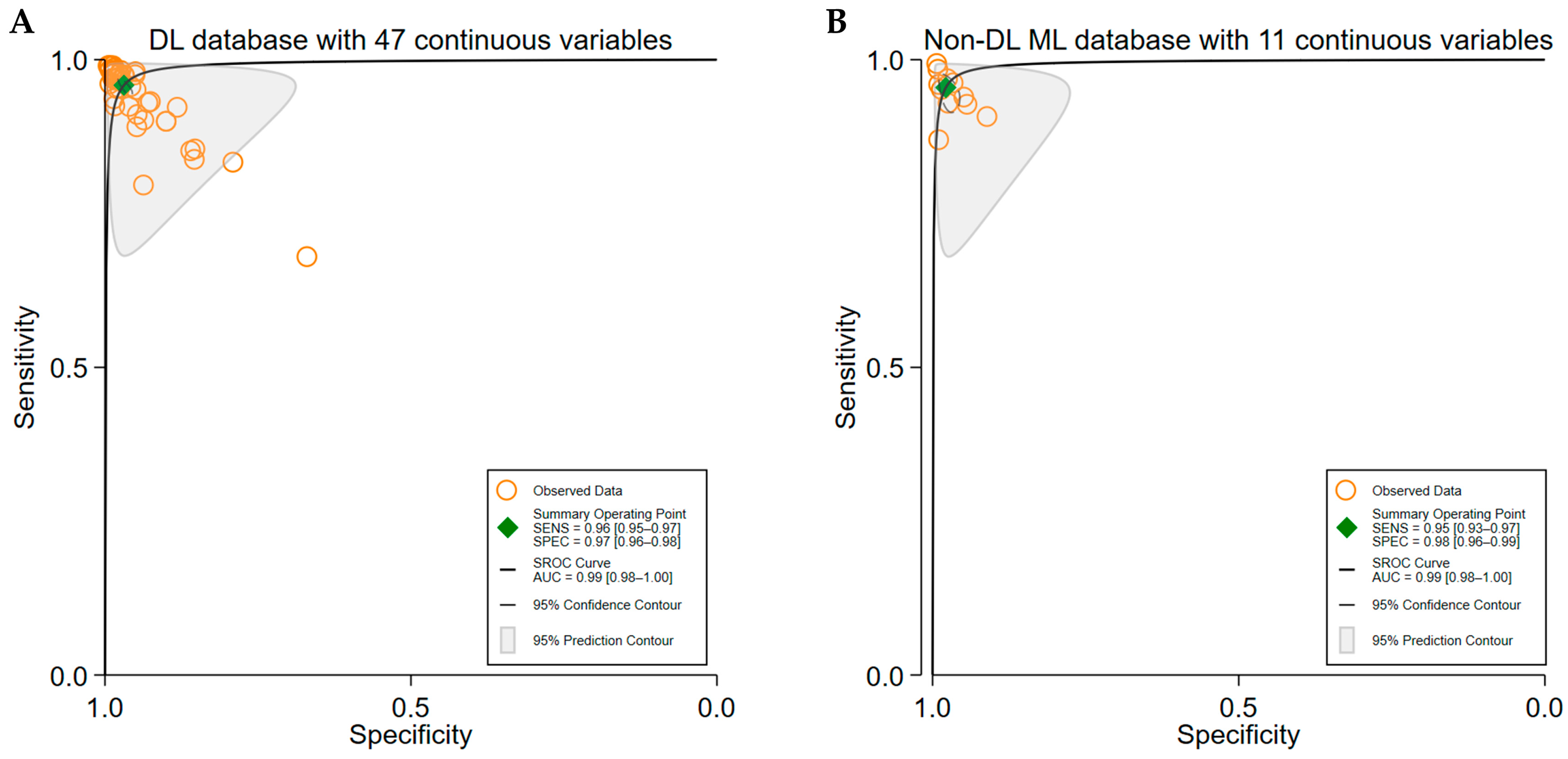

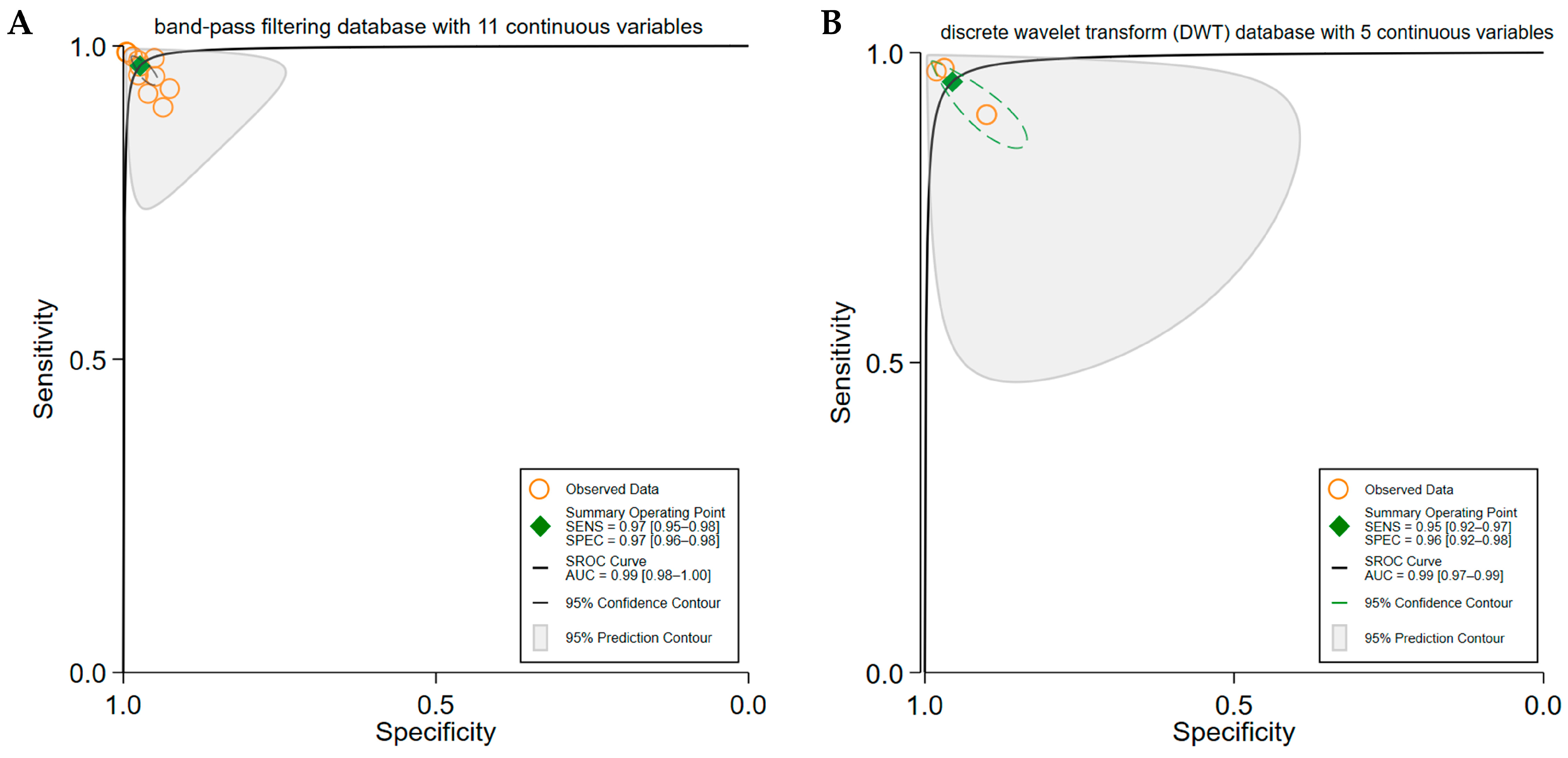

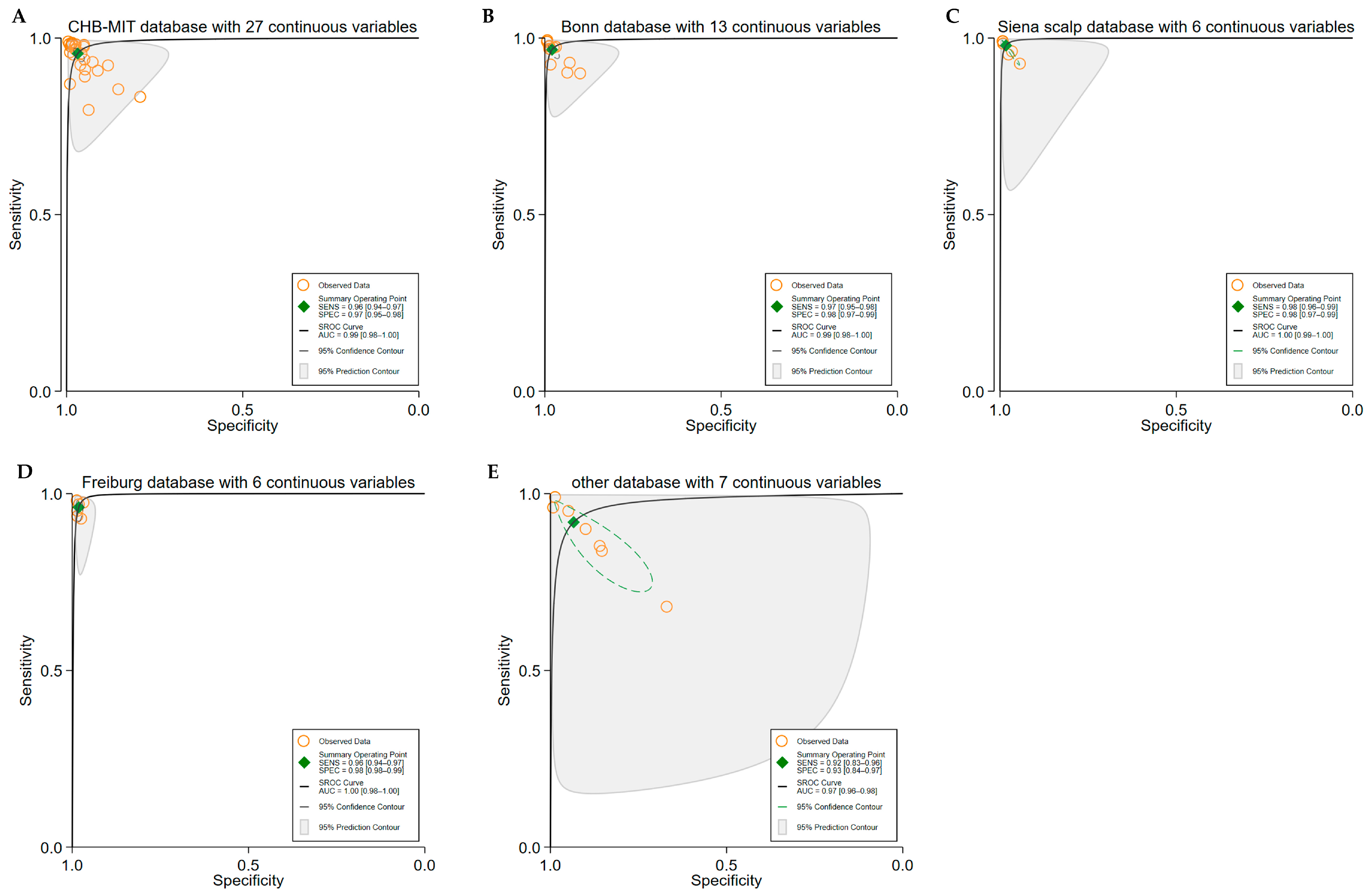

3.4. Subgroup Analyses

3.5. Heterogeneity Analysis

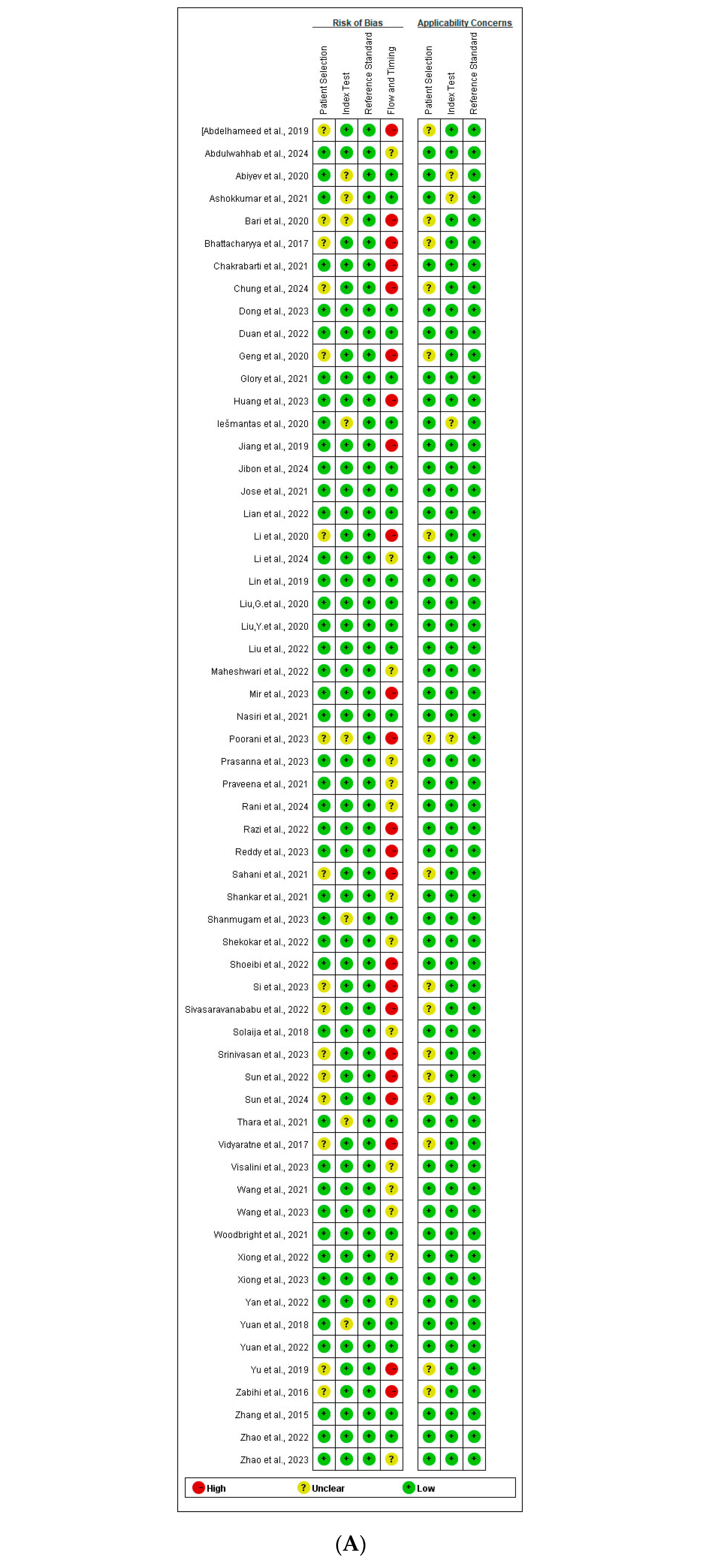

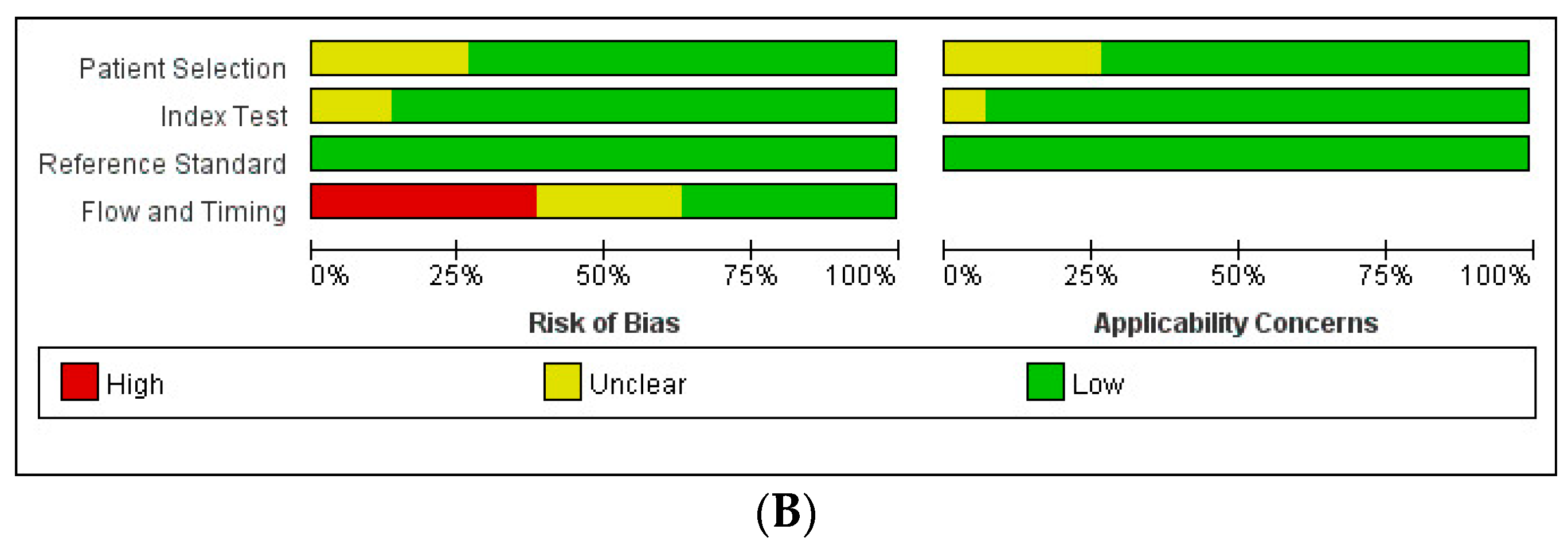

3.6. Quality Assessment

- Index Test: Seven studies [25,28,44,55,57,58,63] were assessed as having an unclear RoB, primarily because they did not explicitly state whether the Index Test was interpreted without knowledge of the Reference Standard results. However, since all studies employed predefined thresholds, the RoB for the Reference Standard was rated as low across all studies.

- Flow and Timing:


- No studies were found to have applicability concerns related to the Reference Standard.
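The domain ratings described above can be illustrated with a minimal sketch. It assumes a simplified QUADAS-2-style rule in which any signalling question answered "no" flags high risk, any "unclear" answer yields an unclear rating, and all "yes" answers yield low risk. The function name `judge_domain`, the study labels, and the answers are hypothetical and are not taken from the review, whose final judgements rested on the reviewers' own assessment.

```python
from collections import Counter

VALID_ANSWERS = {"yes", "no", "unclear"}


def judge_domain(answers):
    """Collapse signalling-question answers into one risk-of-bias rating.

    Simplified rule (an assumption, not the review's exact procedure):
    any "no" -> "high", otherwise any "unclear" -> "unclear", else "low".
    """
    normalized = [a.strip().lower() for a in answers]
    invalid = [a for a in normalized if a not in VALID_ANSWERS]
    if invalid:
        raise ValueError(f"unexpected answers: {invalid}")
    if "no" in normalized:
        return "high"
    if "unclear" in normalized:
        return "unclear"
    return "low"


# Hypothetical Index Test answers: [blinded interpretation?, threshold predefined?]
index_test_answers = {
    "study_A": ["yes", "yes"],
    "study_B": ["unclear", "yes"],  # blinding not reported, threshold predefined
    "study_C": ["no", "yes"],
}

ratings = {study: judge_domain(ans) for study, ans in index_test_answers.items()}
summary = Counter(ratings.values())

for study, rating in ratings.items():
    print(f"{study}: {rating}")
print(dict(summary))
```

Under this rule, a study that does not report whether the Index Test was interpreted blind to the Reference Standard is rated unclear even when its threshold was predefined, which mirrors the pattern reported for the seven studies above.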

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AUC | Area Under the Curve |
| CI | Confidence Interval |
| EEG | Electroencephalogram |
| ML | Machine Learning |
| ROC | Receiver Operating Characteristic |
| RoB | Risk of Bias |
| SROC | Summary Receiver Operating Characteristic |

References

1. Tatum, W.O.; Rubboli, G.; Kaplan, P.W.; Mirsatari, S.M.; Radhakrishnan, K.; Gloss, D.; Caboclo, L.O.; Drislane, F.W.; Koutroumanidis, M.; Schomer, D.L.; et al. Clinical utility of EEG in diagnosing and monitoring epilepsy in adults. Clin. Neurophysiol. 2018, 129, 1056–1082.

2. Bandopadhyay, R.; Singh, T.; Ghoneim, M.M.; Alshehri, S.; Angelopoulou, E.; Paudel, Y.N.; Piperi, C.; Ahmad, J.; Alhakamy, N.A.; Alfaleh, M.A.; et al. Recent Developments in Diagnosis of Epilepsy: Scope of MicroRNA and Technological Advancements. Biology 2021, 10, 1097.

3. Iasemidis, L.D. Epileptic seizure prediction and control. IEEE Trans. Biomed. Eng. 2003, 50, 549–558.

4. Yu, S.; El Atrache, R.; Tang, J.; Jackson, M.; Makarucha, A.; Cantley, S.; Sheehan, T.; Vieluf, S.; Zhang, B.; Rogers, J.L.; et al. Artificial intelligence-enhanced epileptic seizure detection by wearables. Epilepsia 2023, 64, 3213–3226.

5. Khan, G.H.; Khan, N.A.; Bin Altaf, M.A.; Abbasi, Q. A Shallow Autoencoder Framework for Epileptic Seizure Detection in EEG Signals. Sensors 2023, 23, 4112.

6. Yun, S. Advances, challenges, and prospects of electroencephalography-based biomarkers for psychiatric disorders: A narrative review. J. Yeungnam Med. Sci. 2024, 41, 261–268.

7. Djemal, A.; Bouchaala, D.; Fakhfakh, A.; Kanoun, O. Wearable Electromyography Classification of Epileptic Seizures: A Feasibility Study. Bioengineering 2023, 10, 703.

8. Gao, X.; Yan, X.; Gao, P.; Gao, X.; Zhang, S. Automatic detection of epileptic seizure based on approximate entropy, recurrence quantification analysis and convolutional neural networks. Artif. Intell. Med. 2020, 102, 101711.

9. An, S.; Kim, S.; Chikontwe, P.; Park, S.H. Dual Attention Relation Network With Fine-Tuning for Few-Shot EEG Motor Imagery Classification. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 15479–15493.

10. Minaee, S.; Boykov, Y.; Porikli, F.; Plaza, A.; Kehtarnavaz, N.; Terzopoulos, D. Image Segmentation Using Deep Learning: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3523–3542.

11. Zhong, X.; Liu, G.; Dong, X.; Li, C.; Li, H.; Cui, H.; Zhou, W. Automatic Seizure Detection Based on Stockwell Transform and Transformer. Sensors 2023, 24, 77.

12. Xiong, W.; Nurse, E.S.; Lambert, E.; Cook, M.J.; Kameneva, T. Classification of Epileptic and Psychogenic Non-Epileptic Seizures Using Electroencephalography and Electrocardiography. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 2831–2838.

13. Liberati, A.; Altman, D.G.; Tetzlaff, J.; Mulrow, C.; Gøtzsche, P.C.; Ioannidis, J.P.; Clarke, M.; Devereaux, P.J.; Kleijnen, J.; Moher, D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: Explanation and elaboration. BMJ 2009, 339, b2700.

14. Sounderajah, V.; Ashrafian, H.; Rose, S.; Shah, N.H.; Ghassemi, M.; Golub, R.; Kahn, C.E., Jr.; Esteva, A.; Karthikesalingam, A.; Mateen, B.; et al. A quality assessment tool for artificial intelligence-centered diagnostic test accuracy studies: QUADAS-AI. Nat. Med. 2021, 27, 1663–1665.

15. Whiting, P.F.; Rutjes, A.W.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.; Sterne, J.A.; Bossuyt, P.M. QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 2011, 155, 529–536.

16. Yang, B.; Mallett, S.; Takwoingi, Y.; Davenport, C.F.; Hyde, C.J.; Whiting, P.F.; Deeks, J.J.; Leeflang, M.M.G.; Bossuyt, P.M.M.; Brazzelli, M.G.; et al. QUADAS-C: A Tool for Assessing Risk of Bias in Comparative Diagnostic Accuracy Studies. Ann. Intern. Med. 2021, 174, 1592–1599.

17. Abdelhameed, A.M.; Bayoumi, M. Semi-Supervised EEG Signals Classification System for Epileptic Seizure Detection. IEEE Signal Process. Lett. 2019, 26, 1922–1926.

18. Abdulwahhab, A.H.; Abdulaal, A.H.; Al-Ghrairi, A.H.T.; Mohammed, A.A.; Valizadeh, M. Detection of epileptic seizure using EEG signals analysis based on deep learning techniques. Chaos Solitons Fractals 2024, 181, 114700.

19. Abiyev, R.; Arslan, M.; Idoko, J.B.; Sekeroglu, B.; Ilhan, A. Identification of Epileptic EEG Signals Using Convolutional Neural Networks. Appl. Sci. 2020, 10, 4089.

20. Bari, M.F.; Fattah, S.A. Epileptic seizure detection in EEG signals using normalized IMFs in CEEMDAN domain and quadratic discriminant classifier. Biomed. Signal Process. Control 2020, 58, 101833.

21. Bhattacharyya, A.; Pachori, R.B. A Multivariate Approach for Patient-Specific EEG Seizure Detection Using Empirical Wavelet Transform. IEEE Trans. Biomed. Eng. 2017, 64, 2003–2015.

22. Chakrabarti, S.; Swetapadma, A.; Pattnaik, P.K. A channel independent generalized seizure detection method for pediatric epileptic seizures. Comput. Methods Programs Biomed. 2021, 209, 106335.

23. Chung, Y.G.; Cho, A.; Kim, H.; Kim, K.J. Single-channel seizure detection with clinical confirmation of seizure locations using CHB-MIT dataset. Front. Neurol. 2024, 15, 1389731.

24. Dong, F.; Yuan, Z.; Wu, D.; Jiang, L.; Liu, J.; Hu, W. Novel seizure detection algorithm based on multi-dimension feature selection. Biomed. Signal Process. Control 2023, 84, 104747.

25. Duan, L.; Wang, Z.; Qiao, Y.; Wang, Y.; Huang, Z.; Zhang, B. An Automatic Method for Epileptic Seizure Detection Based on Deep Metric Learning. IEEE J. Biomed. Health Inform. 2022, 26, 2147–2157.

26. Geng, M.; Zhou, W.; Liu, G.; Li, C.; Zhang, Y. Epileptic Seizure Detection Based on Stockwell Transform and Bidirectional Long Short-Term Memory. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 573–580.

27. Glory, H.A.; Vigneswaran, C.; Jagtap, S.S.; Shruthi, R.; Hariharan, G.; Sriram, V.S.S. AHW-BGOA-DNN: A novel deep learning model for epileptic seizure detection. Neural Comput. Appl. 2021, 33, 6065–6093.

28. Huang, W.; Xu, H.; Yu, Y. MRP-Net: Seizure detection method based on modified recurrence plot and additive attention convolution neural network. Biomed. Signal Process. Control 2023, 86, 105165.

29. Iesmantas, T.; Alzbutas, R. Convolutional neural network for detection and classification of seizures in clinical data. Med. Biol. Eng. Comput. 2020, 58, 1919–1932.

30. Jiang, X.; Xu, K.; Zhang, R.; Ren, H.; Chen, W. Redundancy Removed Dual-Tree Discrete Wavelet Transform to Construct Compact Representations for Automated Seizure Detection. Appl. Sci. 2019, 9, 5215.

31. Jibon, F.A.; Jamil Chowdhury, A.R.; Miraz, M.H.; Jin, H.H.; Khandaker, M.U.; Sultana, S.; Nur, S.; Siddiqui, F.H.; Kamal, A.H.M.; Salman, M.; et al. Sequential graph convolutional network and DeepRNN based hybrid framework for epileptic seizure detection from EEG signal. Digit. Health 2024, 10, 20552076241249874.

32. Jose, J.P.; Sundaram, M.; Jaffino, G. Adaptive rag-bull rider: A modified self-adaptive optimization algorithm for epileptic seizure detection with deep stacked autoencoder using electroencephalogram. Biomed. Signal Process. Control 2021, 64, 102322.

33. Li, Y.; Liu, Y.; Cui, W.-G.; Guo, Y.-Z.; Huang, H.; Hu, Z.-Y. Epileptic Seizure Detection in EEG Signals Using a Unified Temporal-Spectral Squeeze-and-Excitation Network. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 782–794.

34. Li, Y.; Yang, Y.; Zheng, Q.; Liu, Y.; Wang, H.; Song, S.; Zhao, P. Dynamical graph neural network with attention mechanism for epilepsy detection using single channel EEG. Med. Biol. Eng. Comput. 2024, 62, 307–326.

35. Lian, J.; Xu, F. Spatial Enhanced Pattern Through Graph Convolutional Neural Network for Epileptic EEG Identification. Int. J. Neural Syst. 2022, 32, 2250033.

36. Lin, Q.; Ye, S.; Wu, C.; Gu, W.; Wang, J.; Zhang, H.-L.; Xue, Y. A novel framework based on biclustering for automatic epileptic seizure detection. Int. J. Mach. Learn. Cybern. 2019, 10, 311–323.

37. Liu, G.; Zhou, W.; Geng, M. Automatic Seizure Detection Based on S-Transform and Deep Convolutional Neural Network. Int. J. Neural Syst. 2020, 30, 1950024.

38. Liu, X.; Wang, J.; Shang, J.; Liu, J.; Dai, L.; Yuan, S. Epileptic Seizure Detection Based on Variational Mode Decomposition and Deep Forest Using EEG Signals. Brain Sci. 2022, 12, 1275.

39. Liu, Y.; Lin, Y.; Jia, Z.; Ma, Y.; Wang, J. Representation based on ordinal patterns for seizure detection in EEG signals. Comput. Biol. Med. 2020, 126, 104033.

40. Maheshwari, J.; Joshi, S.D.; Gandhi, T.K. Real-Time Automated Epileptic Seizure Detection by Analyzing Time-Varying High Spatial Frequency Oscillations. IEEE Trans. Instrum. Meas. 2022, 71, 4002608.

41. Mir, W.A.; Anjum, M.; Izharuddin, I.; Shahab, S. Deep-EEG: An Optimized and Robust Framework and Method for EEG-Based Diagnosis of Epileptic Seizure. Diagnostics 2023, 13, 773.

42. Nasiri, S.; Clifford, G.D. Generalizable Seizure Detection Model Using Generating Transferable Adversarial Features. IEEE Signal Process. Lett. 2021, 28, 568–572.

43. Poorani, S.; Balasubramanie, P. Deep learning based epileptic seizure detection with EEG data. Int. J. Syst. Assur. Eng. Manag. 2023, 6, 1–3.

44. Prasanna, C.S.L.; Rahman, M.Z.U.; Bayleyegn, M.D. Brain Epileptic Seizure Detection Using Joint CNN and Exhaustive Feature Selection With RNN-BLSTM Classifier. IEEE Access 2023, 11, 97990–98004.

45. Praveena, H.D.; Subhas, C.; Naidu, K.R. iEEG based Epileptic Seizure Detection using Reconstruction Independent Component Analysis and Long Short Term Memory Network. Int. J. Comput. Commun. Control 2021, 16, 5.

46. Rani, T.J.; Kavitha, D. Effective Epileptic Seizure Detection Using Enhanced Salp Swarm Algorithm-based Long Short-Term Memory Network. IETE J. Res. 2024, 70, 1538–1555.

47. Razi, K.F.; Schmid, A. Epileptic Seizure Detection With Patient-Specific Feature and Channel Selection for Low-power Applications. IEEE Trans. Biomed. Circuits Syst. 2022, 16, 626–635.

48. Sahani, M.; Rout, S.K.; Dash, P.K. FPGA implementation of epileptic seizure detection using semisupervised reduced deep convolutional neural network. Appl. Soft Comput. 2021, 110, 107639.

49. Reddy, C.S.K.; Suchetha, M. A 1-D CNN-FCM model for the classification of epileptic seizure disorders. Neural Comput. Appl. 2023, 35, 17871–17881.

50. Shankar, A.; Khaing, H.K.; Dandapat, S.; Barma, S. Analysis of epileptic seizures based on EEG using recurrence plot images and deep learning. Biomed. Signal Process. Control 2021, 69, 102854.

51. Shanmugam, S.; Dharmar, S. A CNN-LSTM hybrid network for automatic seizure detection in EEG signals. Neural Comput. Appl. 2023, 35, 20605–20617.

52. Shoeibi, A.; Ghassemi, N.; Khodatars, M.; Moridian, P.; Alizadehsani, R.; Zare, A.; Khosravi, A.; Subasi, A.; Acharya, U.R.; Gorriz, J.M. Detection of epileptic seizures on EEG signals using ANFIS classifier, autoencoders and fuzzy entropies. Biomed. Signal Process. Control 2022, 73, 103417.

53. Si, X.; Yang, Z.; Zhang, X.; Sun, Y.; Jin, W.; Wang, L.; Yin, S.; Ming, D. Patient-independent seizure detection based on long-term iEEG and a novel lightweight CNN. J. Neural Eng. 2023, 20, 016037.

54. Sivasaravanababu, S.; Prabhu, V.; Parthasarathy, V.; Mahendran, R.K. An efficient epileptic seizure detection based on tunable Q-wavelet transform and DCVAE-stacked Bi-LSTM model using electroencephalogram. Eur. Phys. J. Spec. Top. 2022, 231, 2425–2437.

55. Solaija, M.S.J.; Saleem, S.; Khurshid, K.; Hassan, S.A.; Kamboh, A.M. Dynamic Mode Decomposition Based Epileptic Seizure Detection from Scalp EEG. IEEE Access 2018, 6, 38683–38692.

56. Srinivasan, S.; Dayalane, S.; Mathivanan, S.K.; Rajadurai, H.; Jayagopal, P.; Dalu, G.T. Detection and classification of adult epilepsy using hybrid deep learning approach. Sci. Rep. 2023, 13, 38683–38692.

57. Sun, Q.; Liu, Y.; Li, S. Automatic Seizure Detection Using Multi-Input Deep Feature Learning Networks for EEG Signals. J. Sens. 2024, 2024, 8835396.

58. Sun, Y.; Jin, W.; Si, X.; Zhang, X.; Cao, J.; Wang, L.; Yin, S.; Ming, D. Continuous Seizure Detection Based on Transformer and Long-Term iEEG. IEEE J. Biomed. Health Inform. 2022, 26, 5418–5427.

59. Thara, D.K.; Premasudha, B.G.; Nayak, R.S.; Murthy, T.V.; Prabhu, G.A.; Hanoon, N. Electroencephalogram for epileptic seizure detection using stacked bidirectional LSTM_GAP neural network. Evol. Intell. 2021, 14, 823–833.

60. Vidyaratne, L.S.; Iftekharuddin, K.M. Real-Time Epileptic Seizure Detection Using EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 2146–2156.

61. Visalini, K.; Alagarsamy, S.; Nagarajan, D. Neonatal seizure detection using deep belief networks from multichannel EEG data. Neural Comput. Appl. 2023, 35, 10637–10647.

62. Wang, X.; Wang, X.; Liu, W.; Chang, Z.; Karkkainen, T.; Cong, F. One dimensional convolutional neural networks for seizure onset detection using long-term scalp and intracranial EEG. Neurocomputing 2021, 459, 212–222.

63. Wang, Z.; Liu, F.; Shi, S.; Xia, S.; Peng, F.; Wang, L.; Ai, S.; Xu, Z. Automatic epileptic seizure detection based on persistent homology. Front. Physiol. 2023, 14, 1227952.

64. Woodbright, M.; Verma, B.; Haidar, A. Autonomous deep feature extraction based method for epileptic EEG brain seizure classification. Neurocomputing 2021, 444, 30–37.

65. Xiong, Y.; Dong, F.; Wu, D.; Jiang, L.; Liu, J.; Li, B. Seizure Detection Based on Improved Genetic Algorithm Optimized Multilayer Network. IEEE Access 2022, 10, 81343–81354.

66. Xiong, Y.; Li, J.; Wu, D.; Dong, F.; Liu, J.; Jiang, L.; Cao, J.; Xu, Y. Seizure detection algorithm based on fusion of spatio-temporal network constructed with dispersion index. Biomed. Signal Process. Control 2023, 79, 104155.

67. Yan, R.; Julius, A.A. Interpretable seizure detection with signal temporal logic neural network. Biomed. Signal Process. Control 2022, 78, 103998.

68. Yu, Z.; Zhou, W.; Zhang, F.; Xu, F.; Yuan, S.; Leng, Y.; Li, Y.; Yuan, Q. Automatic seizure detection based on kernel robust probabilistic collaborative representation. Med. Biol. Eng. Comput. 2019, 57, 205–219.

69. Yuan, S.; Liu, J.; Shang, J.; Kong, X.; Yuan, Q.; Ma, Z. The earth mover’s distance and Bayesian linear discriminant analysis for epileptic seizure detection in scalp EEG. Biomed. Eng. Lett. 2018, 8, 373–382.

70. Yuan, S.; Mu, J.; Zhou, W.; Dai, L.Y.; Liu, J.X.; Wang, J.; Liu, X. Automatic Epileptic Seizure Detection Using Graph-Regularized Non-Negative Matrix Factorization and Kernel-Based Robust Probabilistic Collaborative Representation. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 2641–2650.

71. Zabihi, M.; Kiranyaz, S.; Rad, A.B.; Katsaggelos, A.K.; Gabbouj, M.; Ince, T. Analysis of High-Dimensional Phase Space via Poincare Section for Patient-Specific Seizure Detection. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 24, 386–398.

72. Zhang, Y.; Zhou, W.; Yuan, S. Multifractal Analysis and Relevance Vector Machine-Based Automatic Seizure Detection in Intracranial EEG. Int. J. Neural Syst. 2015, 25, 1550020.

73. Zhao, Y.; Chu, D.; He, J.; Xue, M.; Jia, W.; Xu, F.; Zheng, Y. Interactive local and global feature coupling for EEG-based epileptic seizure detection. Biomed. Signal Process. Control 2023, 81, 104441.

74. Zhao, Y.; Xue, M.; Dong, C.; He, J.; Chu, D.; Zhang, G.; Xu, F.; Ge, X.; Zheng, Y. Automatic Seizure Identification from EEG Signals Based on Brain Connectivity Learning. Int. J. Neural Syst. 2022, 32, 2250050.

75. Qian, L.; Lu, J.; Li, W.; Huan, Y.; Sun, Y.; Zheng, L.; Zou, Z. MCU-Enabled Epileptic Seizure Detection System With Compressed Learning. IEEE Internet Things J. 2024, 11, 8771–8782.

76. Tautan, A.-M.; Andrei, A.-G.; Smeralda, C.L.; Vatti, G.; Rossi, S.; Ionescu, B. Unsupervised learning from EEG data for epilepsy: A systematic literature review. Artif. Intell. Med. 2025, 162, 103095.

77. Nafea, M.S.; Ismail, Z.H. Supervised Machine Learning and Deep Learning Techniques for Epileptic Seizure Recognition Using EEG Signals-A Systematic Literature Review. Bioengineering 2022, 9, 781.

78. Zou, Z.; Chen, B.; Xiao, D.; Tang, F.; Li, X. Accuracy of Machine Learning in Detecting Pediatric Epileptic Seizures: Systematic Review and Meta-Analysis. J. Med. Internet Res. 2024, 26, e55986.

79. Wu, L.; Liu, Z.; Huang, H.; Pan, D.; Fu, C.; Lu, Y.; Zhou, M.; Huang, K.; Huang, T.; Yang, L. Development and validation of an interpretable machine learning model for predicting the risk of hepatocellular carcinoma in patients with chronic hepatitis B: A case-control study. BMC Gastroenterol. 2025, 25, 157.

80. Scheffer, D.; Kloepper, J.; Scheffer, N.; Rose, G.; Fraunholz, T.; Klein, P.; Fricke, K.R.; Herzberg, P.Y. Using Dynamic Item Response Theory and Machine Learning Based on Natural Language Processing to Improve the Reliability of the Operant Motive Test. Motiv. Sci. 2025, 11, 24–38.

81. Zhu, L.; Ooi, Z.J.; Zhang, T.; Brooks, C.S.; Pan, L. Identification of flow regimes in boiling flow with clustering algorithms: An interpretable machine-learning perspective. Appl. Therm. Eng. 2023, 228, 120493.

82. Chan, C.M.J.; Madej, D.; Chung, C.K.J.; Lam, H. Deep Learning-Based Prediction of Decoy Spectra for False Discovery Rate Estimation in Spectral Library Searching. J. Proteome Res. 2025, 24, 2235–2242.

83. Liu, J.; Khan, M.K.H.; Guo, W.; Dong, F.; Ge, W.; Zhang, C.; Gong, P.; Patterson, T.A.; Hong, H. Machine learning and deep learning approaches for enhanced prediction of hERG blockade: A comprehensive QSAR modeling study. Expert Opin. Drug Metab. Toxicol. 2024, 20, 665–684.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bai, L.; Litscher, G.; Li, X. Epileptic Seizure Detection Using Machine Learning: A Systematic Review and Meta-Analysis. Brain Sci. 2025, 15, 634. https://doi.org/10.3390/brainsci15060634
