Voice-Evoked Color Prediction Using Deep Neural Networks in Sound–Color Synesthesia

Abstract

1. Introduction

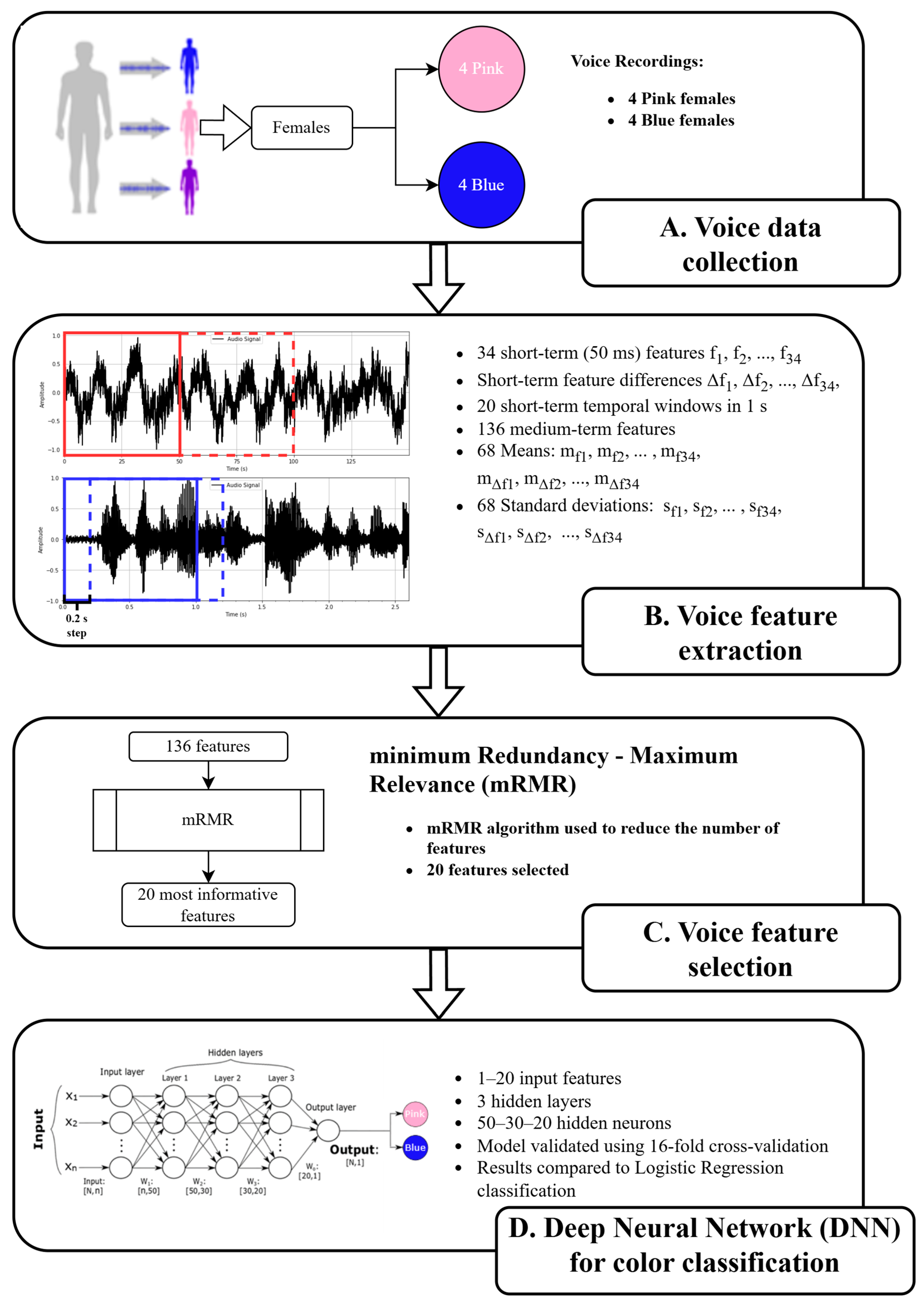

2. Materials and Methods

2.1. The Subject with Sound–Color Synesthesia

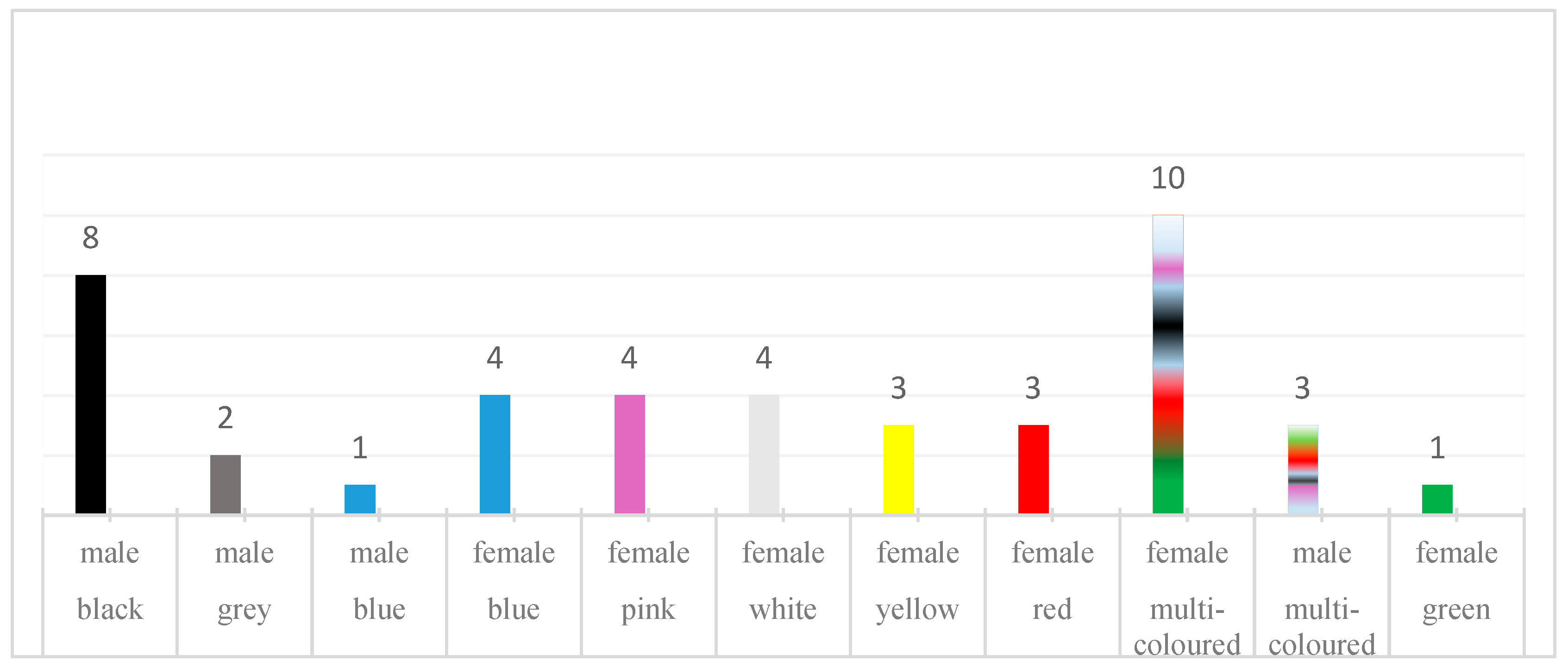

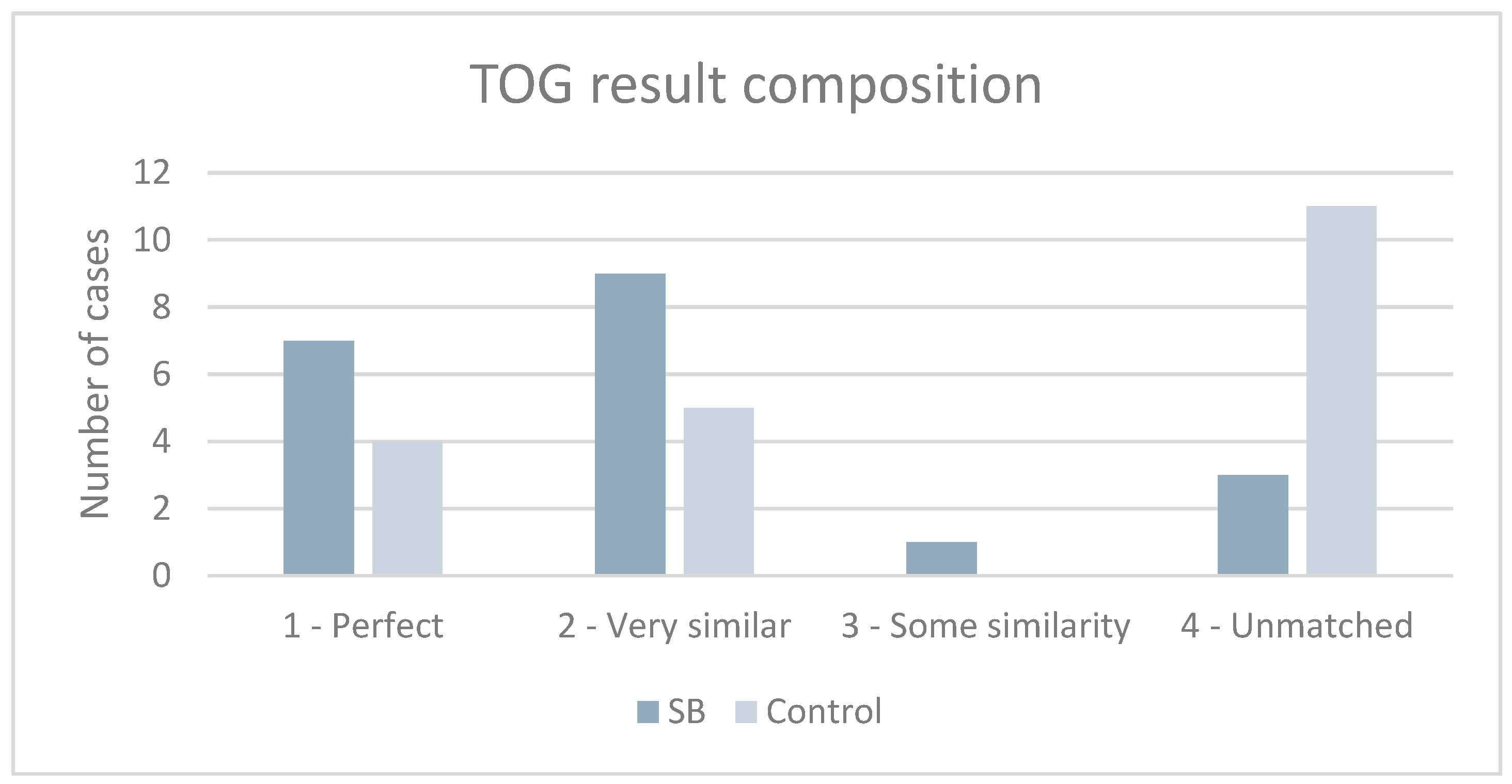

2.2. Voice-Evoked Colors of the Participants and TOG Assessment

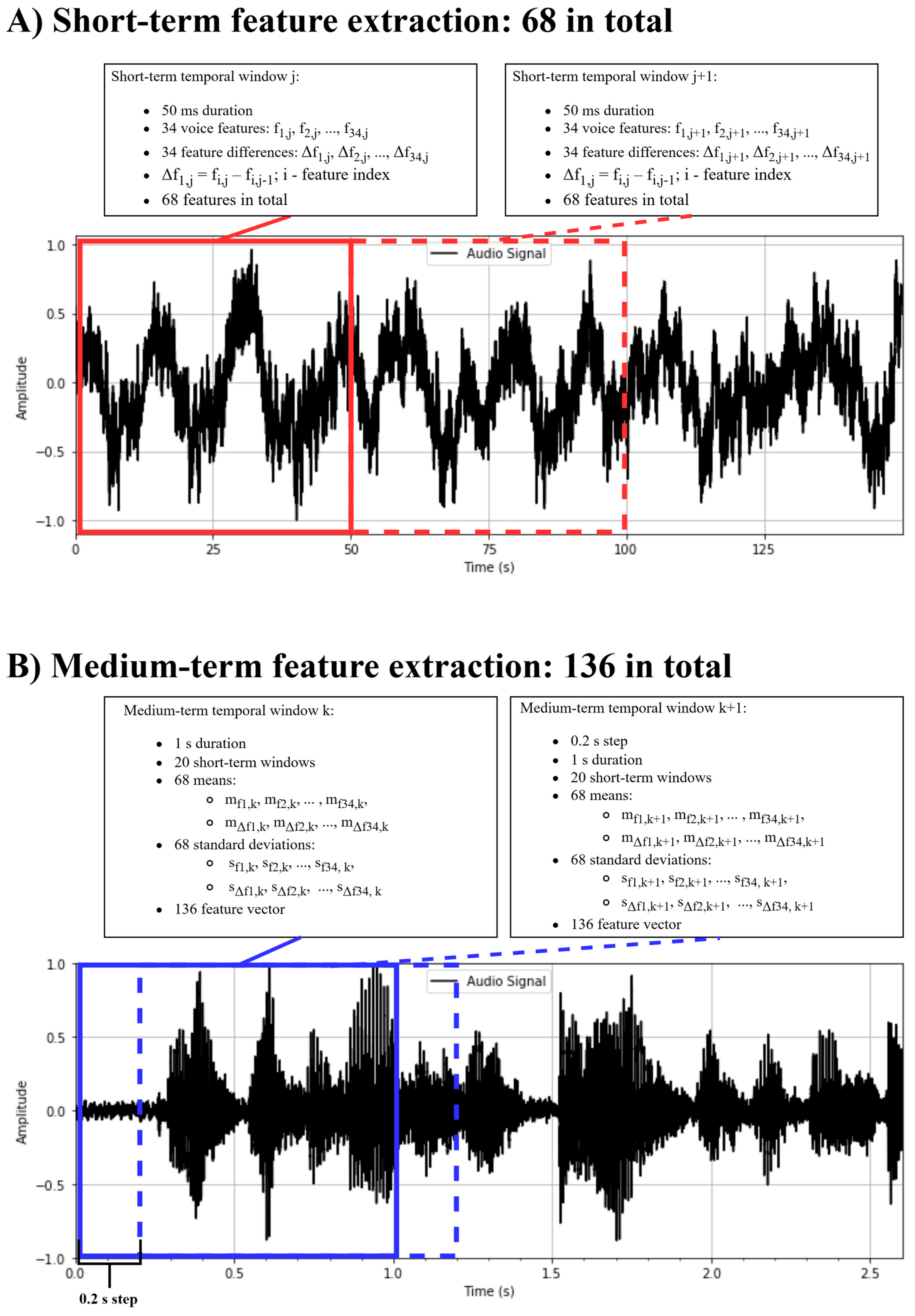

2.3. Voice Data Collection, Voice Feature Extraction, and Selection

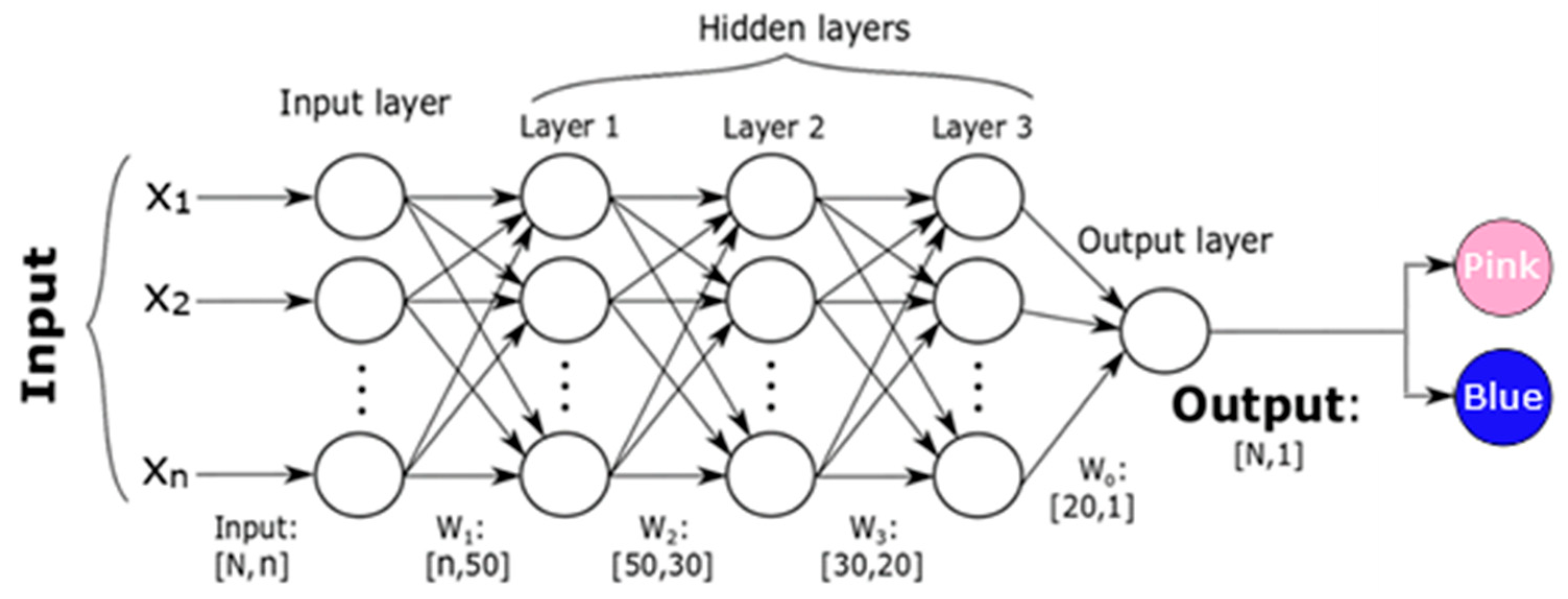

2.4. Machine Learning Algorithms for Voice-Evoked Color Prediction

3. Results

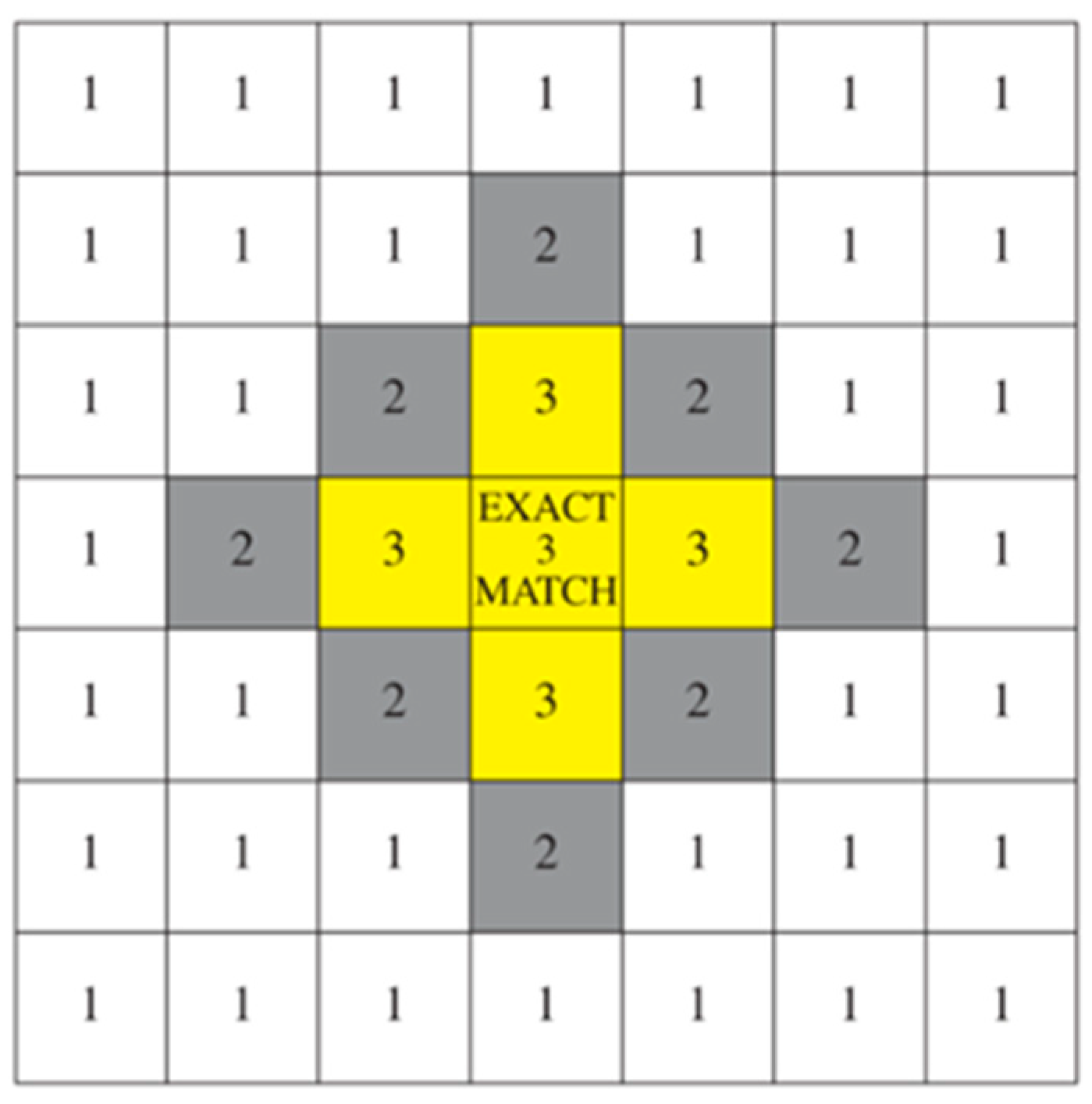

3.1. TOG Scores Based on the Recorded Voice Signals

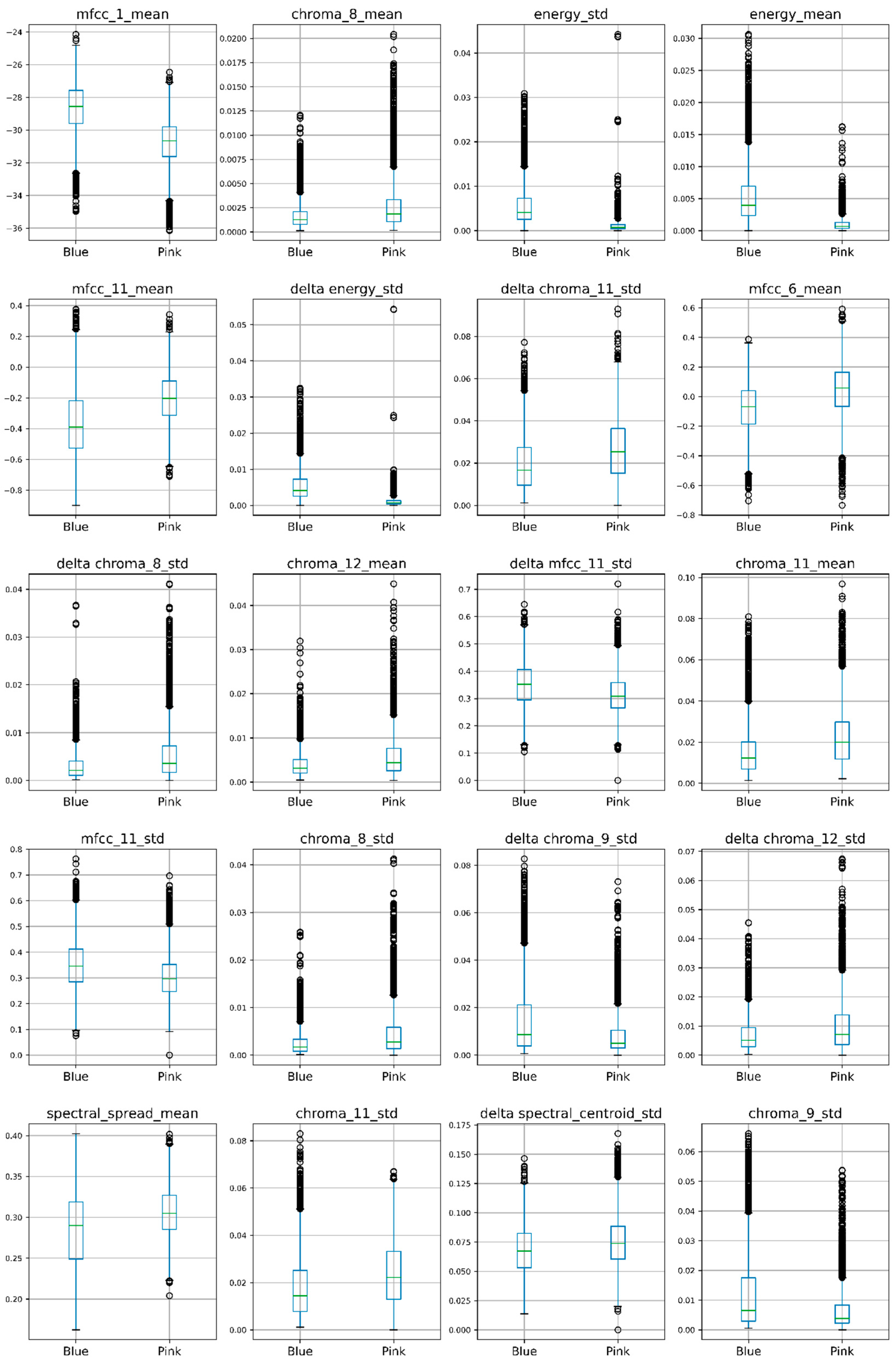

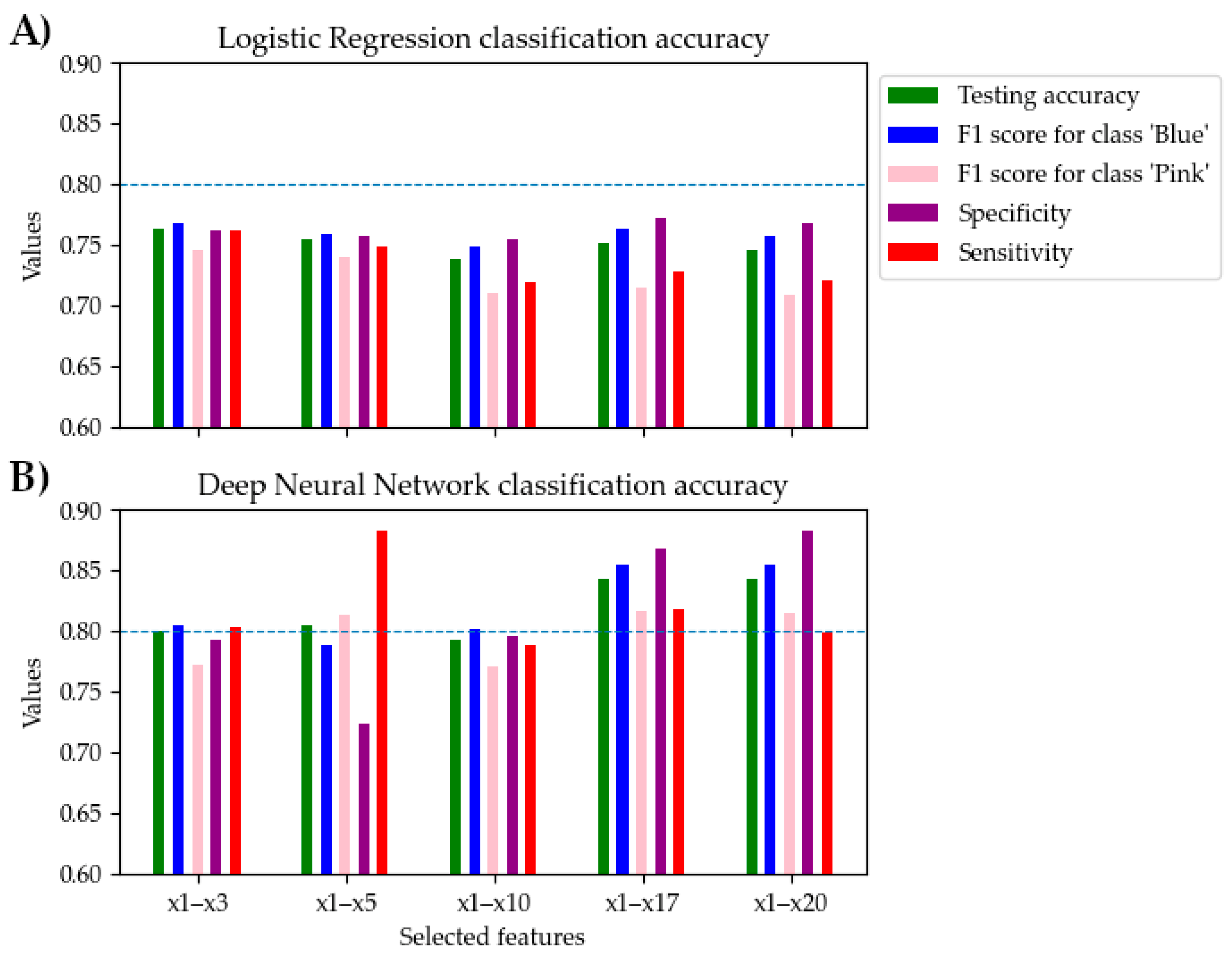

3.2. Selected Voice Features and Voice-Evoked Color Recognition Accuracy

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Category | Subcategory | Description |
|---|---|---|
| Matched | Matched perfectly | The color of the retest matched the color of the first registration completely. |
| | Very similar | The main color was indicated as an undertone. |
| | Very similar | The main color matches, but new undertones were assigned. |
| | Very similar | The main color matches, but an undertone is not indicated. |
| | Very similar | Colors matched, but the voice recording was not observed to be multi-colored. |
| | Very similar | One color matches, but multi-colorism was not observed. |
| Unmatched | Unmatched | The colors did not match at all. |
| | Unmatched but had some similarity | The voice recording was originally not specified as multi-colored; new color tones and new colors were assigned. |

| Feature Code | Description |
|---|---|
| zcr | Zero-crossing rate: the rate of sign changes in the signal within a given frame. |
| energy | The sum of squared signal values, normalized by frame length. |
| energy_entropy | Entropy of the normalized energy across sub-frames; reflects abrupt energy changes. |
| spectral_centroid | The center of gravity of the spectrum. |
| spectral_spread | The second central moment of the spectrum. |
| spectral_entropy | Entropy of normalized spectral energies across sub-frames. |
| spectral_flux | Squared difference between normalized magnitudes of successive spectral frames. |
| spectral_rolloff | Frequency below which 90% of the total spectral energy is contained. |
| mfcc_1 to mfcc_13 | Mel-frequency cepstral coefficients: a representation of the spectral envelope on the Mel scale. |
| chroma_1 to chroma_12 | Chroma vector: a 12-element vector representing energy in each of the 12 pitch classes on the chromatic scale. |
| chroma_dev | Standard deviation of the 12 chroma coefficients. |
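The frame-level definitions above can be made concrete with a short NumPy sketch. It is a simplified illustration, not the study's or pyAudioAnalysis's code: `short_term_features` is a hypothetical helper, and it assumes a one-sided FFT magnitude spectrum, 10 sub-frames (or sub-bands) for the two entropy features, a 90% rolloff threshold, and spread reported as the square root of the second central moment (in Hz). Library implementations may normalize these quantities differently.

```python
import numpy as np


def short_term_features(frame, fs, prev_norm_spectrum=None, n_sub=10, rolloff_frac=0.90):
    """Hypothetical helper: simplified per-frame versions of the features above.

    frame: 1-D array of samples; fs: sampling rate in Hz.
    prev_norm_spectrum: normalized magnitude spectrum of the previous frame,
    needed only for spectral flux (None for the first frame).
    Returns (features, norm_spectrum) so the spectrum can be passed to the next call.
    """
    eps = 1e-12
    frame = np.asarray(frame, dtype=float)
    n = len(frame)

    def block_entropy(x):
        # Split x into n_sub equal blocks, normalize block energies to sum to 1,
        # and return their Shannon entropy in bits.
        size = len(x) // n_sub
        blocks = x[: size * n_sub].reshape(n_sub, size)
        p = np.sum(blocks ** 2, axis=1)
        p = p / (np.sum(p) + eps)
        return float(-np.sum(p * np.log2(p + eps)))

    # Time-domain features.
    signs = np.sign(frame)
    zcr = float(np.mean(signs[1:] != signs[:-1]))    # fraction of sign changes
    energy = float(np.sum(frame ** 2) / n)            # energy normalized by frame length
    energy_entropy = block_entropy(frame)             # entropy of sub-frame energies

    # Frequency-domain features from the one-sided magnitude spectrum.
    mag = np.abs(np.fft.rfft(frame))
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    weights = mag / (np.sum(mag) + eps)               # normalized magnitudes
    centroid = float(np.sum(freqs * weights))         # center of gravity, Hz
    spread = float(np.sqrt(np.sum((freqs - centroid) ** 2 * weights)))
    spectral_entropy = block_entropy(mag)             # entropy of sub-band energies
    flux = 0.0 if prev_norm_spectrum is None else float(
        np.sum((weights - prev_norm_spectrum) ** 2))
    cum_energy = np.cumsum(mag ** 2)
    # First frequency at which cumulative energy reaches rolloff_frac of the total.
    rolloff = float(freqs[np.searchsorted(cum_energy, rolloff_frac * cum_energy[-1])])

    features = {
        "zcr": zcr, "energy": energy, "energy_entropy": energy_entropy,
        "spectral_centroid": centroid, "spectral_spread": spread,
        "spectral_entropy": spectral_entropy, "spectral_flux": flux,
        "spectral_rolloff": rolloff,
    }
    return features, weights


# Usage: a 1 kHz test tone, 512 samples at 16 kHz (exactly 32 cycles).
fs = 16000
t = np.arange(512) / fs
frame = np.sin(2 * np.pi * 1000 * t + 0.3)
feats, spec = short_term_features(frame, fs)
feats2, _ = short_term_features(frame, fs, prev_norm_spectrum=spec)
print(round(feats["spectral_centroid"]), round(feats["spectral_rolloff"]))  # 1000 1000
```

Passing the returned normalized spectrum into the next call is one simple way to make spectral flux depend on successive frames; for identical frames it is zero.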

| Feature | Name |
|---|---|
| x1 | mfcc_1_mean |
| x2 | chroma_8_mean |
| x3 | energy_std |
| x4 | energy_mean |
| x5 | mfcc_11_mean |
| x6 | delta energy_std |
| x7 | delta chroma_11_std |
| x8 | mfcc_6_mean |
| x9 | delta chroma_8_std |
| x10 | chroma_12_mean |
| x11 | delta mfcc_11_std |
| x12 | chroma_11_mean |
| x13 | mfcc_11_std |
| x14 | chroma_8_std |
| x15 | delta chroma_9_std |
| x16 | delta chroma_12_std |
| x17 | spectral_spread_mean |
| x18 | chroma_11_std |
| x19 | delta spectral_centroid_std |
| x20 | chroma_9_std |
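Each name in the table above combines a short-term feature, an optional `delta` prefix, and a `_mean` or `_std` suffix. This appears to follow pyAudioAnalysis's mid-term naming convention: each short-term feature and its frame-to-frame difference is summarized over a recording by its mean and standard deviation. The sketch below illustrates that aggregation; `aggregate_features` is a hypothetical helper, and setting the first frame's delta to zero is an assumption.

```python
import numpy as np


def aggregate_features(short_term, names):
    """Hypothetical helper: summarize a (n_features, n_frames) matrix of
    short-term features as one vector of per-recording statistics.

    Adds first-order deltas (differences between consecutive frames), then takes
    the mean and standard deviation of every row, naming results in the
    "<name>_mean" / "delta <name>_std" style used in the table above.
    """
    short_term = np.asarray(short_term, dtype=float)
    # Delta: frame-to-frame difference; prepending the first column makes the
    # first frame's delta zero and keeps the number of frames unchanged.
    deltas = np.diff(short_term, axis=1, prepend=short_term[:, :1])
    stacked = np.vstack([short_term, deltas])
    stacked_names = list(names) + ["delta " + n for n in names]

    values, out_names = [], []
    for stat, fn in (("mean", np.mean), ("std", np.std)):
        for row, name in zip(stacked, stacked_names):
            values.append(fn(row))
            out_names.append(f"{name}_{stat}")
    return np.array(values), out_names


# Usage: two short-term features observed over three frames.
st = np.array([[1.0, 2.0, 4.0],    # e.g. energy per frame
               [0.1, 0.1, 0.1]])   # e.g. zcr per frame
vec, names = aggregate_features(st, ["energy", "zcr"])
for name, value in zip(names, vec):
    print(f"{name}: {value:.3f}")
```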

DNN Results

| Features | Training Accuracy | Testing Accuracy | Blue F1 Score | Pink F1 Score | Specificity | Sensitivity |
|---|---|---|---|---|---|---|
| x1 | 0.652 (±0.063) | 0.645 (±0.098) | 0.719 (±0.049) | 0.489 (±0.215) | 0.897 (±0.079) | 0.398 (±0.252) |
| x1–x2 | 0.686 (±0.096) | 0.685 (±0.117) | 0.740 (±0.064) | 0.560 (±0.258) | 0.868 (±0.100) | 0.504 (±0.303) |
| x1–x3 | 0.824 (±0.042) | 0.800 (±0.115) | 0.805 (±0.081) | 0.772 (±0.195) | 0.793 (±0.103) | 0.803 (±0.270) |
| x1–x4 | 0.832 (±0.036) | 0.778 (±0.106) | 0.758 (±0.120) | 0.773 (±0.148) | 0.730 (±0.186) | 0.820 (±0.232) |
| x1–x5 | 0.850 (±0.028) | 0.805 (±0.093) | 0.788 (±0.089) | 0.813 (±0.109) | 0.724 (±0.105) | 0.882 (±0.172) |
| x1–x6 | 0.863 (±0.037) | 0.806 (±0.105) | 0.808 (±0.074) | 0.789 (±0.160) | 0.785 (±0.084) | 0.823 (±0.249) |
| x1–x7 | 0.864 (±0.033) | 0.796 (±0.118) | 0.801 (±0.081) | 0.771 (±0.186) | 0.784 (±0.091) | 0.805 (±0.274) |
| x1–x8 | 0.883 (±0.023) | 0.800 (±0.130) | 0.815 (±0.087) | 0.763 (±0.210) | 0.822 (±0.057) | 0.774 (±0.285) |
| x1–x9 | 0.886 (±0.021) | 0.805 (±0.122) | 0.819 (±0.081) | 0.771 (±0.196) | 0.828 (±0.058) | 0.778 (±0.274) |
| x1–x10 | 0.880 (±0.023) | 0.793 (±0.122) | 0.801 (±0.091) | 0.770 (±0.182) | 0.795 (±0.087) | 0.788 (±0.253) |
| x1–x11 | 0.882 (±0.026) | 0.811 (±0.119) | 0.825 (±0.085) | 0.786 (±0.174) | 0.839 (±0.033) | 0.782 (±0.238) |
| x1–x12 | 0.885 (±0.022) | 0.790 (±0.123) | 0.804 (±0.083) | 0.749 (±0.210) | 0.821 (±0.100) | 0.755 (±0.289) |
| x1–x13 | 0.892 (±0.022) | 0.801 (±0.108) | 0.812 (±0.074) | 0.775 (±0.167) | 0.826 (±0.069) | 0.773 (±0.238) |
| x1–x14 | 0.891 (±0.019) | 0.814 (±0.112) | 0.826 (±0.075) | 0.785 (±0.184) | 0.840 (±0.056) | 0.787 (±0.247) |
| x1–x15 | 0.890 (±0.025) | 0.805 (±0.105) | 0.810 (±0.074) | 0.788 (±0.153) | 0.802 (±0.070) | 0.805 (±0.229) |
| x1–x16 | 0.896 (±0.020) | 0.805 (±0.112) | 0.814 (±0.077) | 0.782 (±0.169) | 0.811 (±0.056) | 0.796 (±0.245) |
| x1–x17 | 0.922 (±0.025) | 0.843 (±0.114) | 0.855 (±0.083) | 0.816 (±0.174) | 0.867 (±0.077) | 0.817 (±0.252) |
| x1–x18 | 0.918 (±0.029) | 0.814 (±0.145) | 0.843 (±0.090) | 0.741 (±0.272) | 0.896 (±0.060) | 0.730 (±0.338) |
| x1–x19 | 0.924 (±0.021) | 0.837 (±0.113) | 0.849 (±0.079) | 0.810 (±0.176) | 0.853 (±0.059) | 0.819 (±0.256) |
| x1–x20 | 0.925 (±0.022) | 0.842 (±0.108) | 0.855 (±0.078) | 0.814 (±0.163) | 0.883 (±0.070) | 0.799 (±0.238) |
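The table reports per-class F1 scores alongside sensitivity and specificity. The minimal sketch below shows how these quantities relate for a two-color (blue/pink) task. Which color counts as the positive class is not stated here; the sketch assumes pink, so sensitivity is the recall of pink and specificity is the recall of blue. `binary_metrics` is a hypothetical helper.

```python
import numpy as np


def binary_metrics(y_true, y_pred, positive="pink", negative="blue"):
    """Accuracy, per-class F1, sensitivity and specificity for a two-color task.

    Assumption: "pink" is the positive class, so sensitivity = recall of pink
    and specificity = recall of blue.
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = np.sum((y_true == positive) & (y_pred == positive))
    tn = np.sum((y_true == negative) & (y_pred == negative))
    fp = np.sum((y_true == negative) & (y_pred == positive))
    fn = np.sum((y_true == positive) & (y_pred == negative))

    def f1(t, false_pos, false_neg):
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the denominator is 0.
        denom = 2 * t + false_pos + false_neg
        return 2 * t / denom if denom else 0.0

    return {
        "accuracy": (tp + tn) / len(y_true),
        "pink_f1": f1(tp, fp, fn),
        # With blue as the positive class, TN plays the role of TP and FP/FN swap.
        "blue_f1": f1(tn, fn, fp),
        "sensitivity": tp / (tp + fn) if tp + fn else 0.0,
        "specificity": tn / (tn + fp) if tn + fp else 0.0,
    }


# Usage: five hypothetical recordings.
y_true = ["blue", "blue", "blue", "pink", "pink"]
y_pred = ["blue", "blue", "pink", "pink", "blue"]
m = binary_metrics(y_true, y_pred)
# accuracy 0.6, pink F1 0.5, blue F1 ≈ 0.667, sensitivity 0.5, specificity ≈ 0.667
```

Under cross-validation, values like these would typically be computed per fold and then summarized, for example by their mean and standard deviation.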

LogReg Results

| Features | Training Accuracy | Testing Accuracy | Blue F1 Score | Pink F1 Score | Specificity | Sensitivity |
|---|---|---|---|---|---|---|
| x1 | 0.771 (±0.027) | 0.761 (±0.100) | 0.767 (±0.066) | 0.745 (±0.149) | 0.760 (±0.047) | 0.759 (±0.222) |
| x1–x2 | 0.771 (±0.027) | 0.762 (±0.100) | 0.767 (±0.066) | 0.745 (±0.149) | 0.761 (±0.047) | 0.760 (±0.223) |
| x1–x3 | 0.772 (±0.027) | 0.763 (±0.100) | 0.768 (±0.066) | 0.746 (±0.149) | 0.761 (±0.047) | 0.761 (±0.223) |
| x1–x4 | 0.773 (±0.027) | 0.763 (±0.101) | 0.768 (±0.066) | 0.746 (±0.149) | 0.761 (±0.048) | 0.762 (±0.223) |
| x1–x5 | 0.778 (±0.023) | 0.754 (±0.095) | 0.759 (±0.061) | 0.739 (±0.141) | 0.757 (±0.032) | 0.748 (±0.206) |
| x1–x6 | 0.779 (±0.023) | 0.755 (±0.094) | 0.760 (±0.061) | 0.740 (±0.141) | 0.758 (±0.032) | 0.749 (±0.206) |
| x1–x7 | 0.779 (±0.024) | 0.753 (±0.099) | 0.759 (±0.063) | 0.735 (±0.150) | 0.758 (±0.034) | 0.745 (±0.217) |
| x1–x8 | 0.783 (±0.029) | 0.736 (±0.115) | 0.747 (±0.072) | 0.708 (±0.183) | 0.753 (±0.040) | 0.717 (±0.248) |
| x1–x9 | 0.784 (±0.029) | 0.738 (±0.116) | 0.749 (±0.073) | 0.710 (±0.184) | 0.754 (±0.039) | 0.719 (±0.249) |
| x1–x10 | 0.785 (±0.029) | 0.738 (±0.116) | 0.749 (±0.073) | 0.710 (±0.184) | 0.754 (±0.039) | 0.719 (±0.249) |
| x1–x11 | 0.784 (±0.029) | 0.734 (±0.114) | 0.745 (±0.071) | 0.707 (±0.182) | 0.750 (±0.039) | 0.715 (±0.247) |
| x1–x12 | 0.784 (±0.029) | 0.734 (±0.115) | 0.745 (±0.071) | 0.705 (±0.184) | 0.751 (±0.039) | 0.714 (±0.249) |
| x1–x13 | 0.787 (±0.028) | 0.734 (±0.114) | 0.745 (±0.071) | 0.706 (±0.182) | 0.751 (±0.043) | 0.714 (±0.248) |
| x1–x14 | 0.788 (±0.029) | 0.734 (±0.114) | 0.745 (±0.071) | 0.706 (±0.182) | 0.752 (±0.043) | 0.714 (±0.248) |
| x1–x15 | 0.789 (±0.028) | 0.735 (±0.114) | 0.746 (±0.071) | 0.706 (±0.184) | 0.753 (±0.043) | 0.714 (±0.249) |
| x1–x16 | 0.789 (±0.029) | 0.734 (±0.115) | 0.746 (±0.071) | 0.705 (±0.185) | 0.753 (±0.043) | 0.713 (±0.251) |
| x1–x17 | 0.809 (±0.035) | 0.752 (±0.125) | 0.763 (±0.087) | 0.714 (±0.208) | 0.772 (±0.096) | 0.728 (±0.283) |
| x1–x18 | 0.810 (±0.036) | 0.750 (±0.126) | 0.761 (±0.088) | 0.712 (±0.210) | 0.770 (±0.099) | 0.726 (±0.286) |
| x1–x19 | 0.810 (±0.035) | 0.747 (±0.123) | 0.758 (±0.085) | 0.708 (±0.208) | 0.768 (±0.098) | 0.722 (±0.283) |
| x1–x20 | 0.811 (±0.035) | 0.746 (±0.123) | 0.757 (±0.086) | 0.708 (±0.208) | 0.767 (±0.101) | 0.721 (±0.283) |
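Both results tables grow the feature set one ranked feature at a time (x1, x1–x2, …, x1–x20). A sketch of such a sweep with scikit-learn follows. It assumes the columns of `X_ranked` are already in ranked order, a shuffled stratified 5-fold split, inputs standardized inside each training fold, and that the ± values are standard deviations across folds; none of these settings is confirmed by the tables. `sweep_feature_counts` is a hypothetical helper, and the data in the usage example are synthetic.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def sweep_feature_counts(X_ranked, y, max_k, n_splits=5, seed=0):
    """Cross-validate logistic regression on the first k ranked columns, k = 1..max_k.

    Returns a list of (k, train_mean, train_std, test_mean, test_std) accuracy tuples.
    Assumptions: columns are already ranked; stratified k-fold with shuffling;
    features standardized inside each training fold.
    """
    # A fixed integer random_state gives identical folds for every k.
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    rows = []
    for k in range(1, max_k + 1):
        # The scaler sits in the pipeline so it is fit on training folds only.
        model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
        scores = cross_validate(model, X_ranked[:, :k], y, cv=cv,
                                scoring="accuracy", return_train_score=True)
        rows.append((k,
                     scores["train_score"].mean(), scores["train_score"].std(),
                     scores["test_score"].mean(), scores["test_score"].std()))
    return rows


# Usage with synthetic data: 0 = blue, 1 = pink; only the first column is informative.
rng = np.random.default_rng(0)
y = np.repeat([0, 1], 50)
X = rng.normal(size=(100, 5))
X[:, 0] += 1.5 * y
results = sweep_feature_counts(X, y, max_k=5)
for k, tr_m, tr_s, te_m, te_s in results:
    label = "x1" if k == 1 else f"x1–x{k}"
    print(f"{label}: train {tr_m:.3f} (±{tr_s:.3f}), test {te_m:.3f} (±{te_s:.3f})")
```

Reusing one splitter with a fixed seed keeps the folds identical across feature counts, so differences between rows reflect the added features rather than a new data split.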

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bartulienė, R.; Saudargienė, A.; Reinytė, K.; Davidavičius, G.; Davidavičienė, R.; Ašmantas, Š.; Raškinis, G.; Šatkauskas, S. Voice-Evoked Color Prediction Using Deep Neural Networks in Sound–Color Synesthesia. Brain Sci. 2025, 15, 520. https://doi.org/10.3390/brainsci15050520
