Automatic Change Detection of Human Attractiveness: Comparing Visual and Auditory Perception

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Materials

2.3. Procedure

2.4. EEG Recording and Pre-Processing

2.5. Statistical Analyses

3. Results

3.1. Behavioral Results

3.2. ERP Results

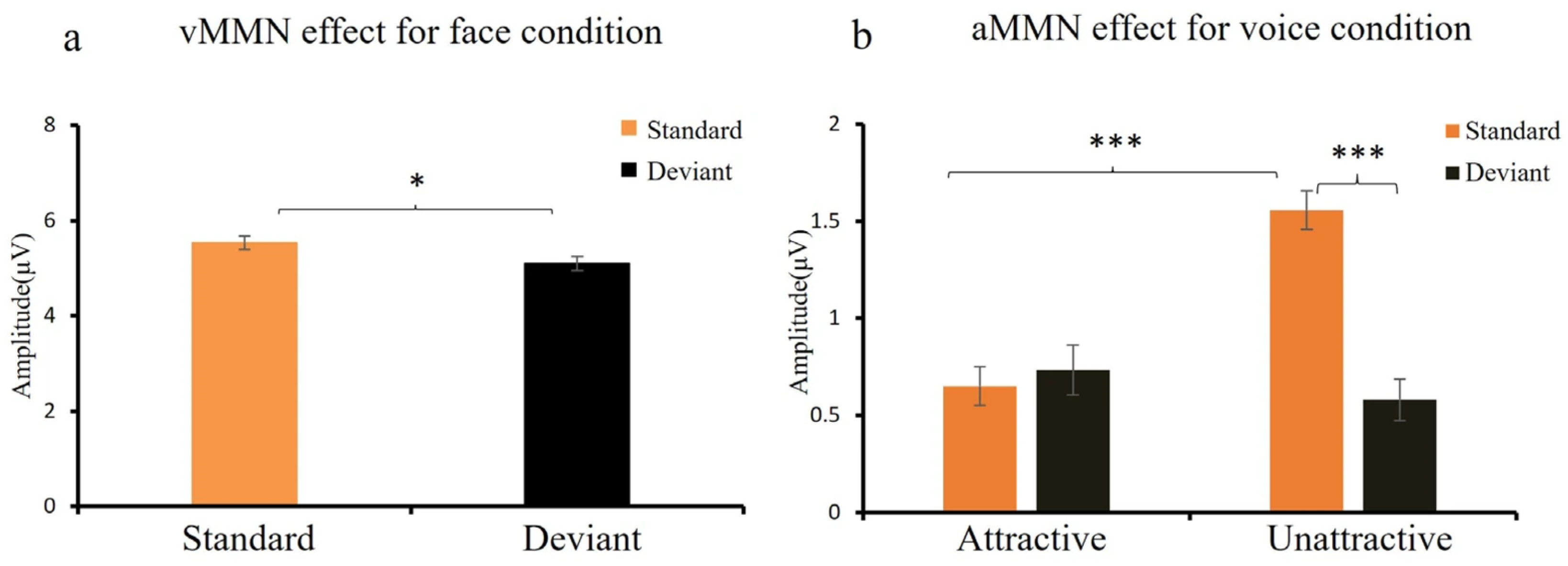

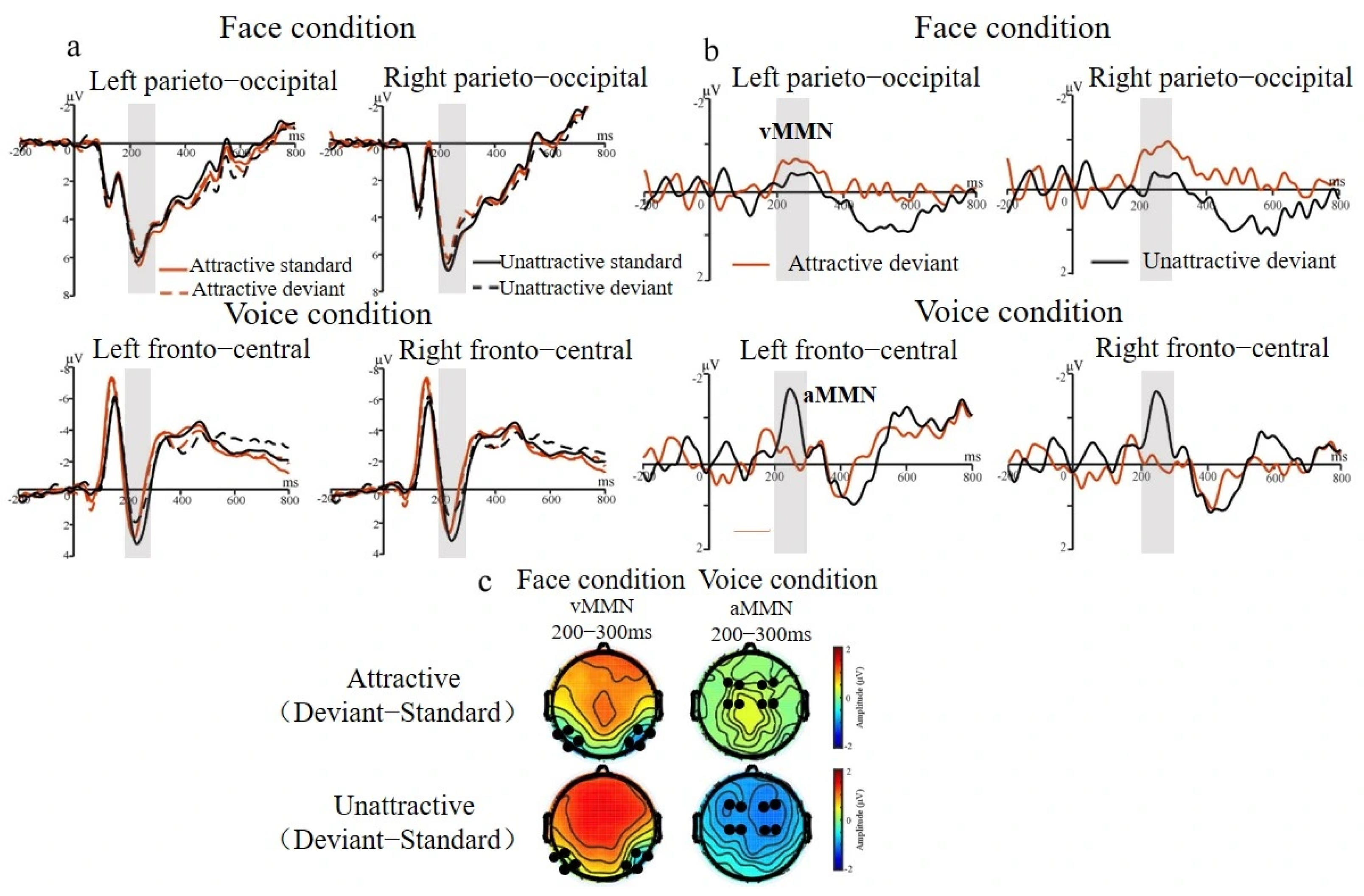

3.2.1. MMN Effect for Face and Voice Conditions

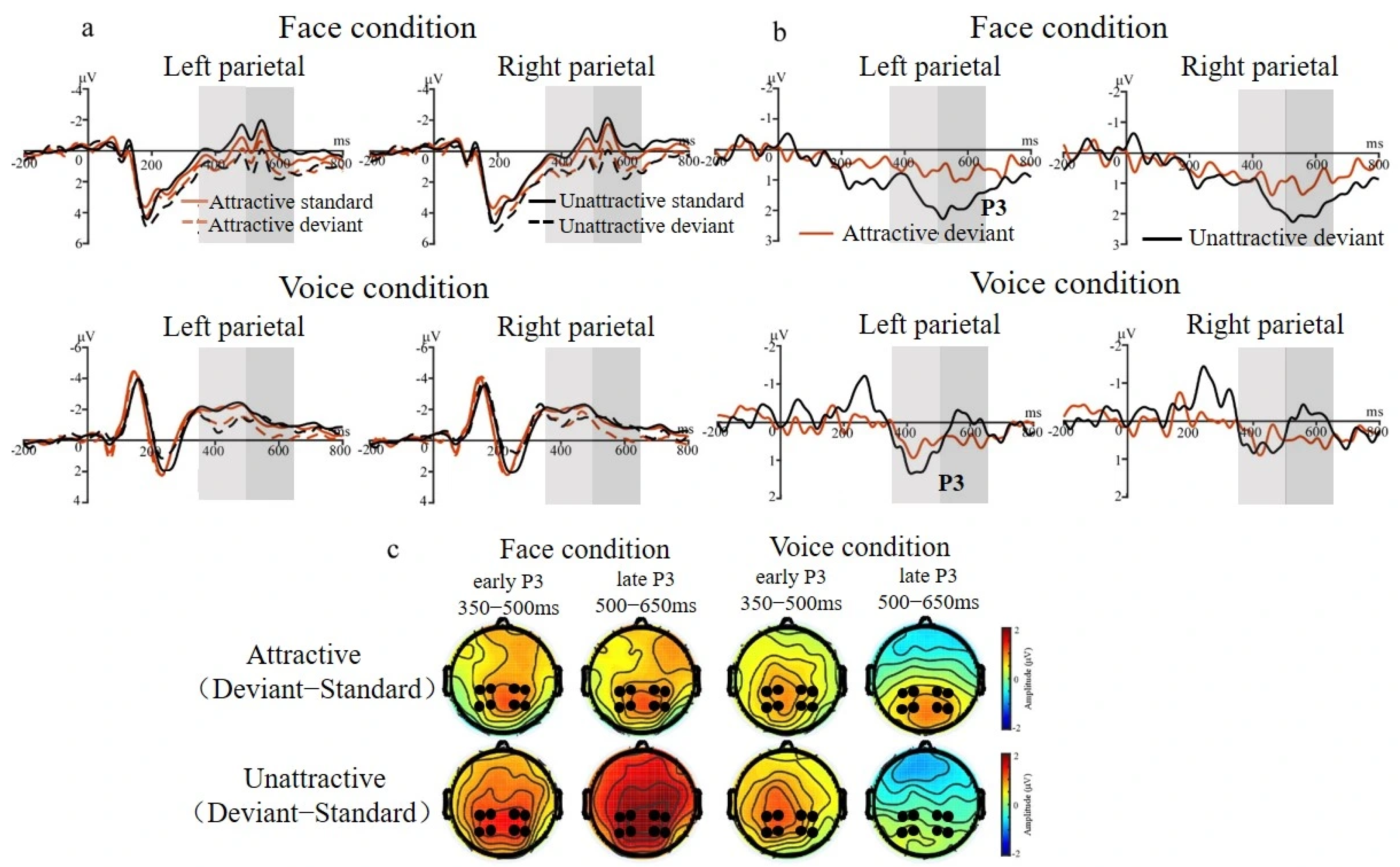

3.2.2. P3 Effect for Face and Voice Conditions

4. Discussion

4.1. The Change Detection of Facial Attractiveness

4.2. The Change Detection of Vocal Attractiveness

4.3. Limitations and Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, M.; Gao, J.; Sommer, W.; Li, W. Automatic Change Detection of Human Attractiveness: Comparing Visual and Auditory Perception. Brain Sci. 2025, 15, 1226. https://doi.org/10.3390/brainsci15111226