Embodied Cognition of Manipulative Actions: Subliminal Grasping Semantics Enhance Using-Action Recognition

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

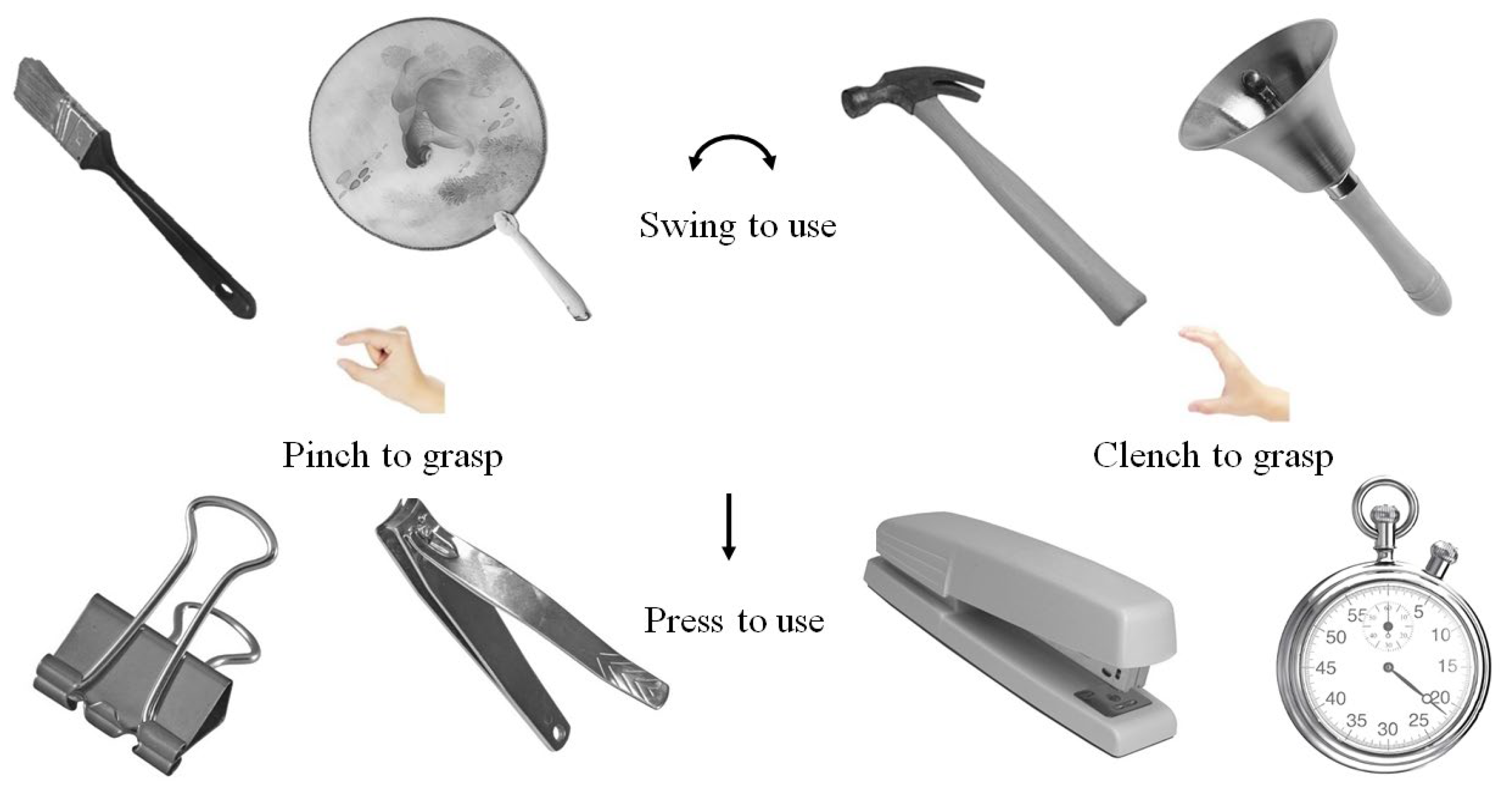

2.2. Stimuli

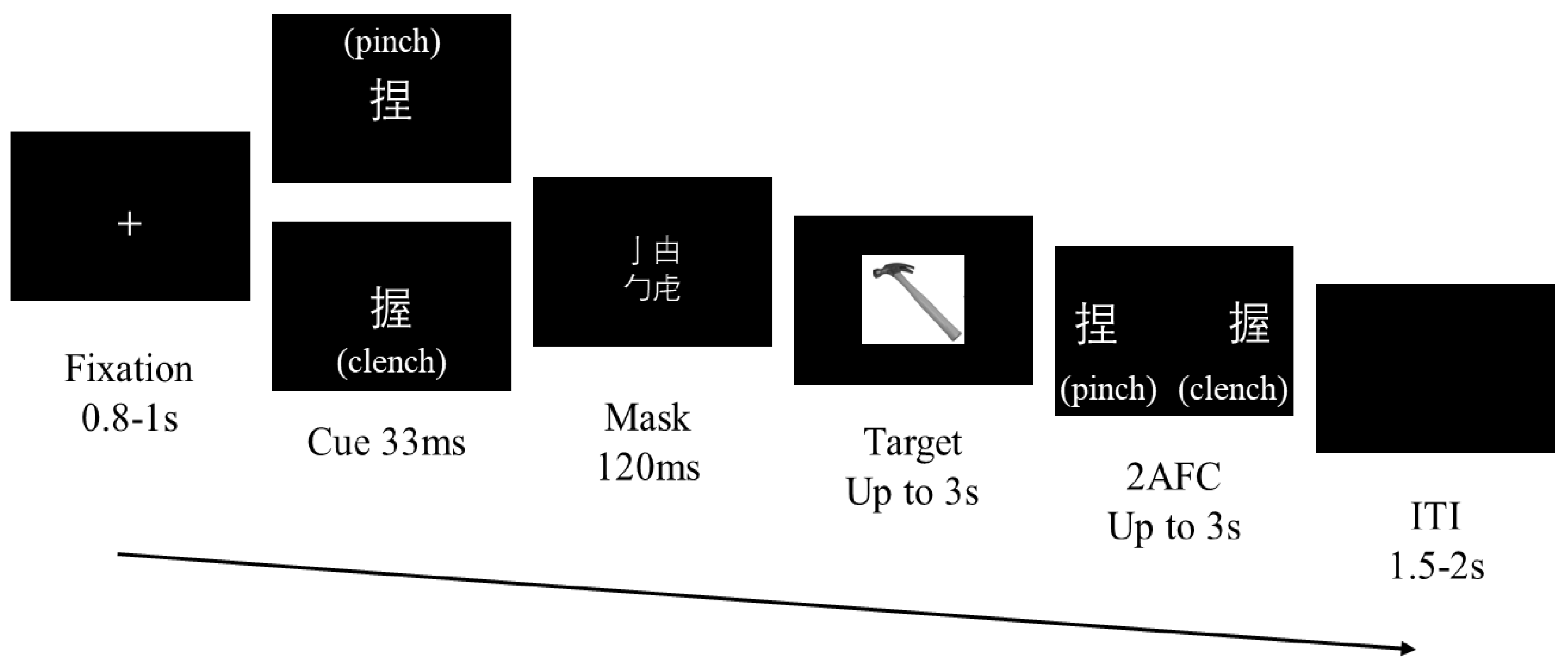

2.3. Task and Procedure

2.4. EEG Data Acquisition

2.5. EEG Data Analysis

2.6. Statistical Methods

3. Results

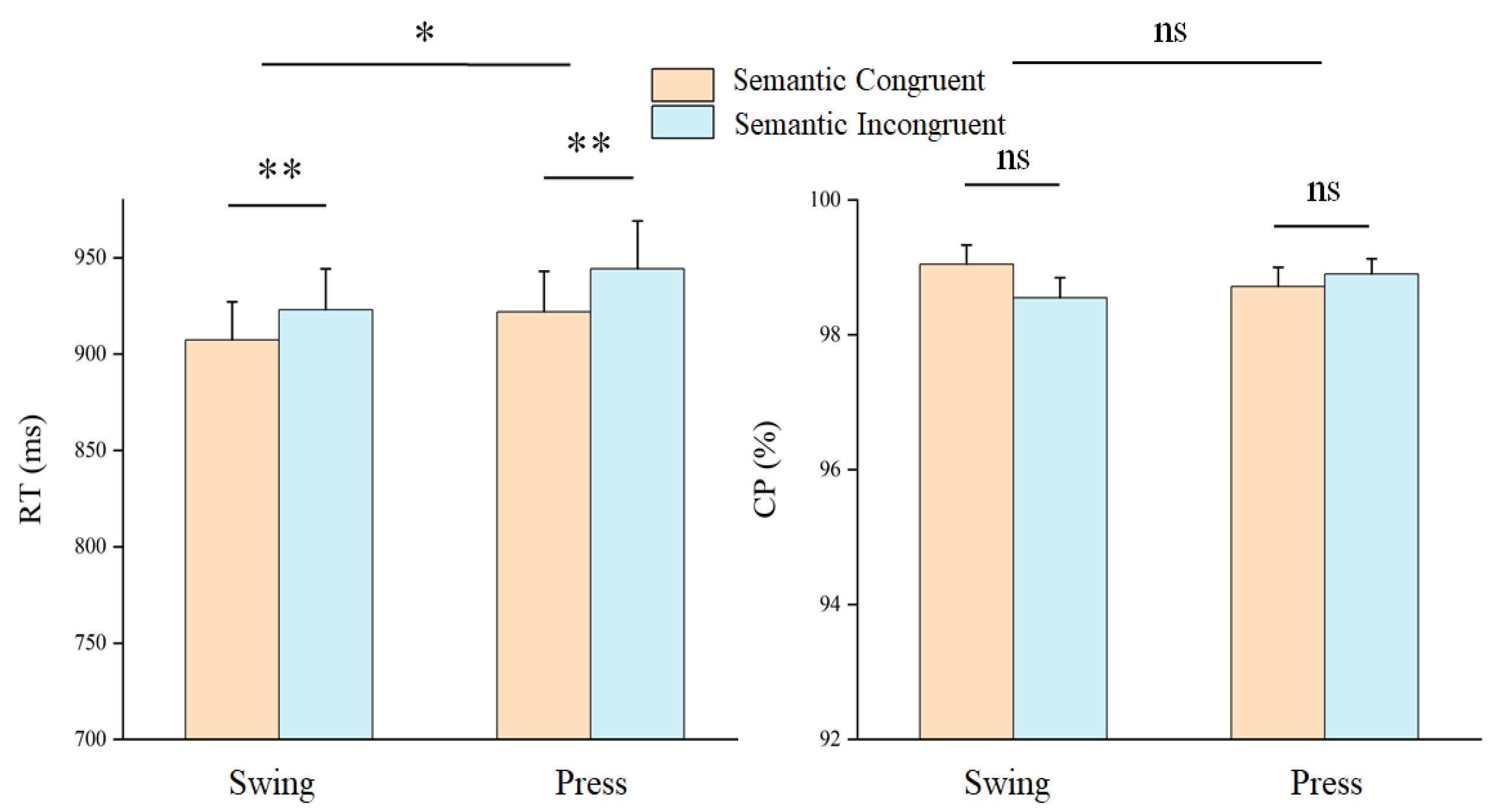

3.1. Behavior

| Measure | Mean | SEM | t(32) | p |
|---|---|---|---|---|
| ACC | 50.29% | 0.37 | 0.251 | 0.840 |
| ER | 49.71% | 0.40 | | |
| Dprime | 0.01 | 0.02 | 0.249 | 0.850 |
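The behavioral table reports a sensitivity index (Dprime) and a t statistic with 32 degrees of freedom. How these were computed is not stated here, so the following is only a minimal sketch under the assumption that the standard signal detection formula, d′ = z(hit rate) − z(false-alarm rate), and a one-sample t-test against a reference value were used. The function names `d_prime` and `one_sample_t`, the log-linear correction, and all counts in the usage lines are illustrative assumptions, not the study's code or data.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Return d' = z(hit rate) - z(false-alarm rate).

    Assumption: a log-linear correction (add 0.5 to each count) is applied
    so that rates of exactly 0 or 1 do not produce infinite z-scores.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    z = NormalDist().inv_cdf  # inverse CDF of the standard normal
    return z(hit_rate) - z(fa_rate)


def one_sample_t(values, mu=0.0):
    """Return the one-sample t statistic of `values` against `mu` (df = n - 1)."""
    n = len(values)
    return (mean(values) - mu) / (stdev(values) / sqrt(n))


# Hypothetical counts for one participant responding at chance.
print(d_prime(hits=50, misses=50, false_alarms=50, correct_rejections=50))

# Hypothetical per-participant d' values tested against zero.
print(one_sample_t([0.05, -0.03, 0.01, 0.02, -0.04], mu=0.0))
```

Under these assumptions, a d′ near zero together with a non-significant t statistic against zero corresponds to performance that is indistinguishable from chance, which matches the pattern in the table (ACC close to 50%, Dprime close to 0).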

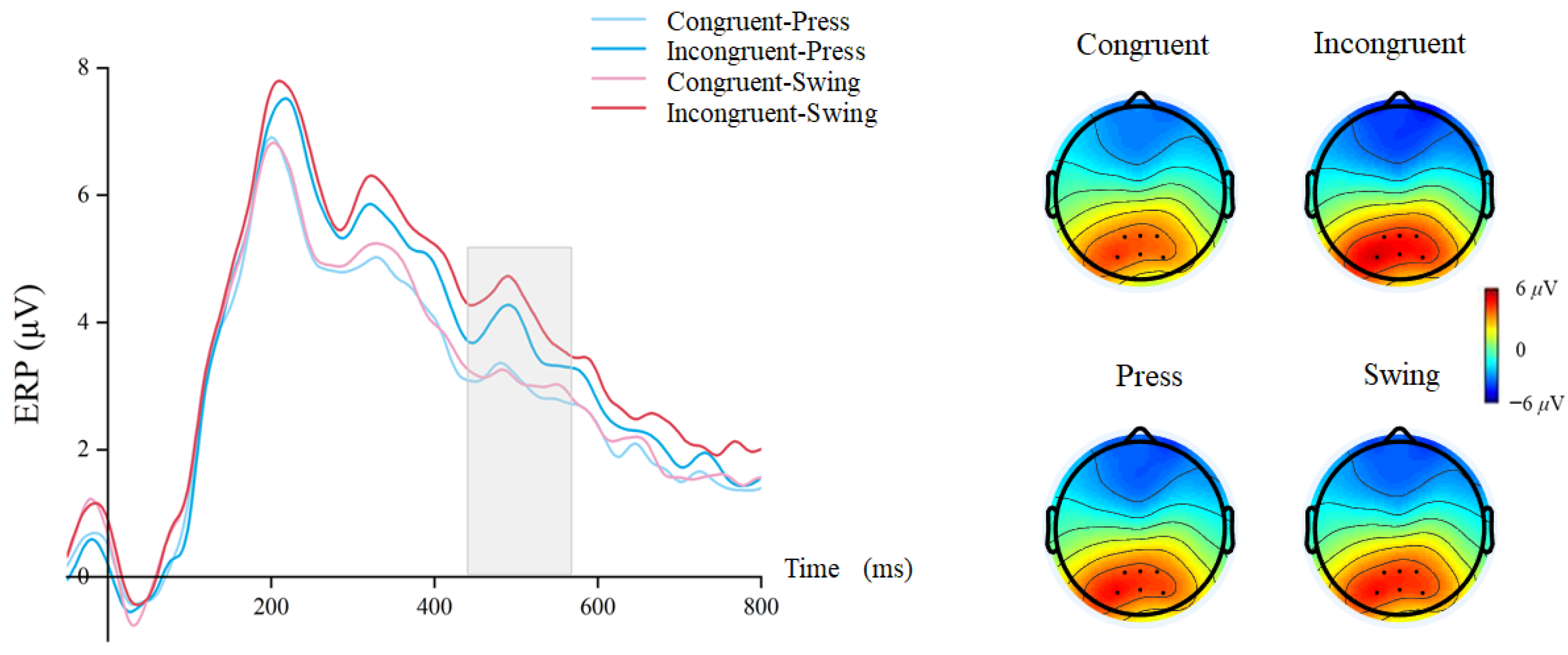

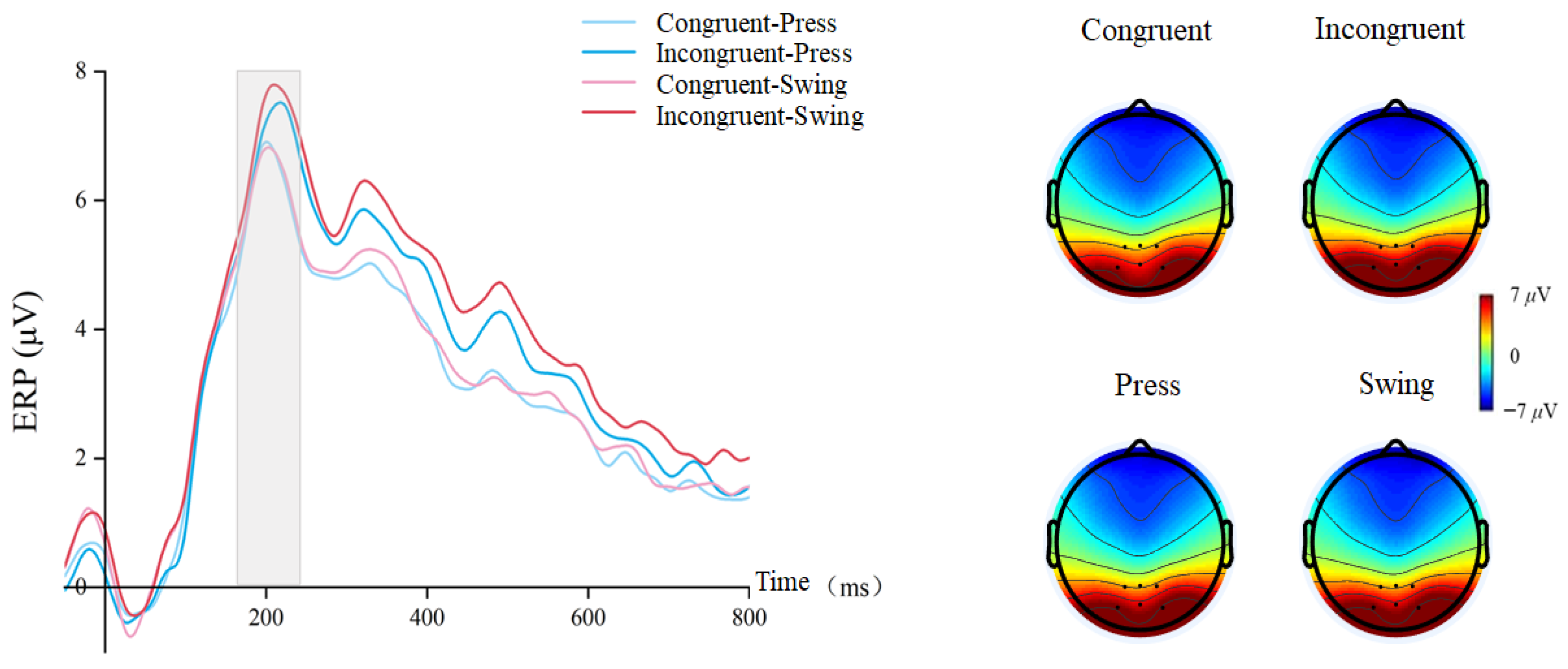

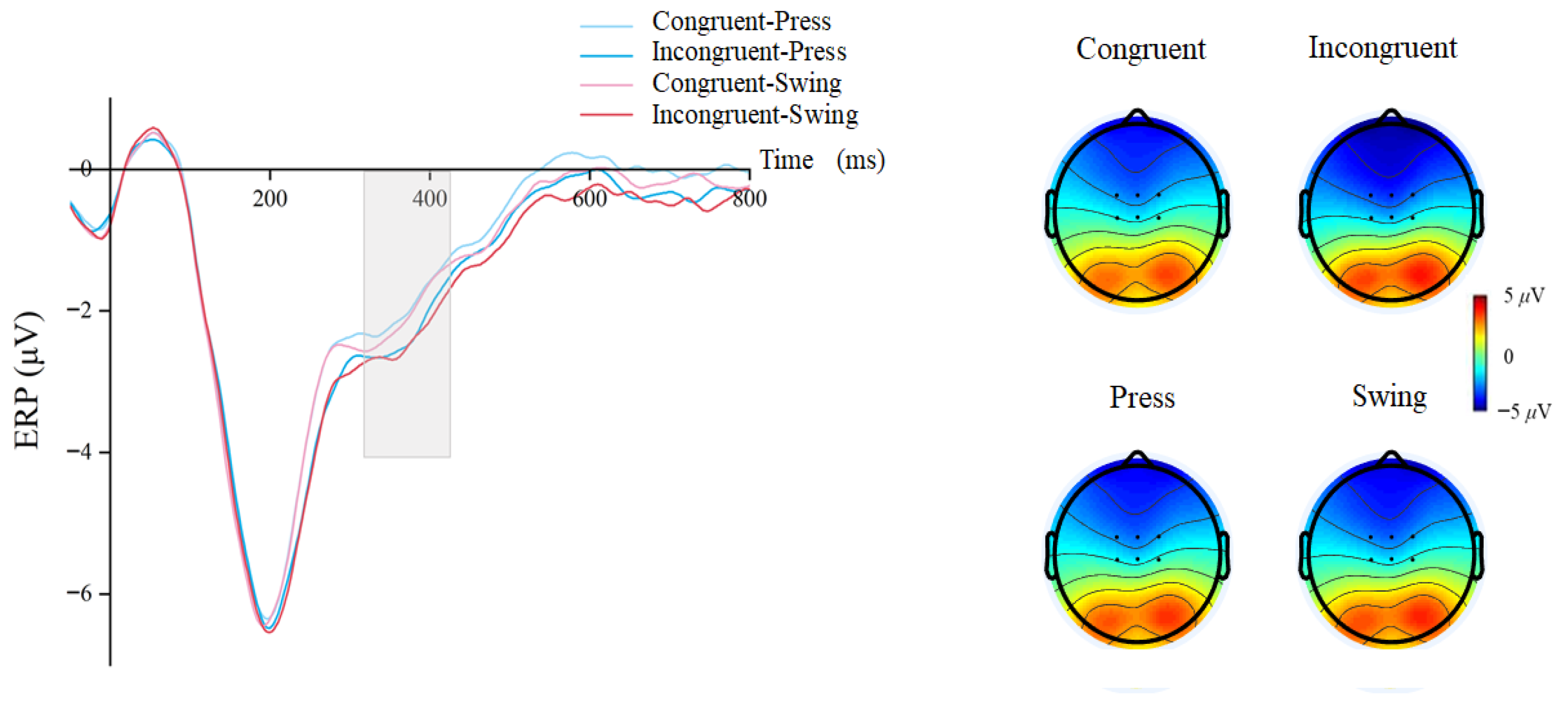

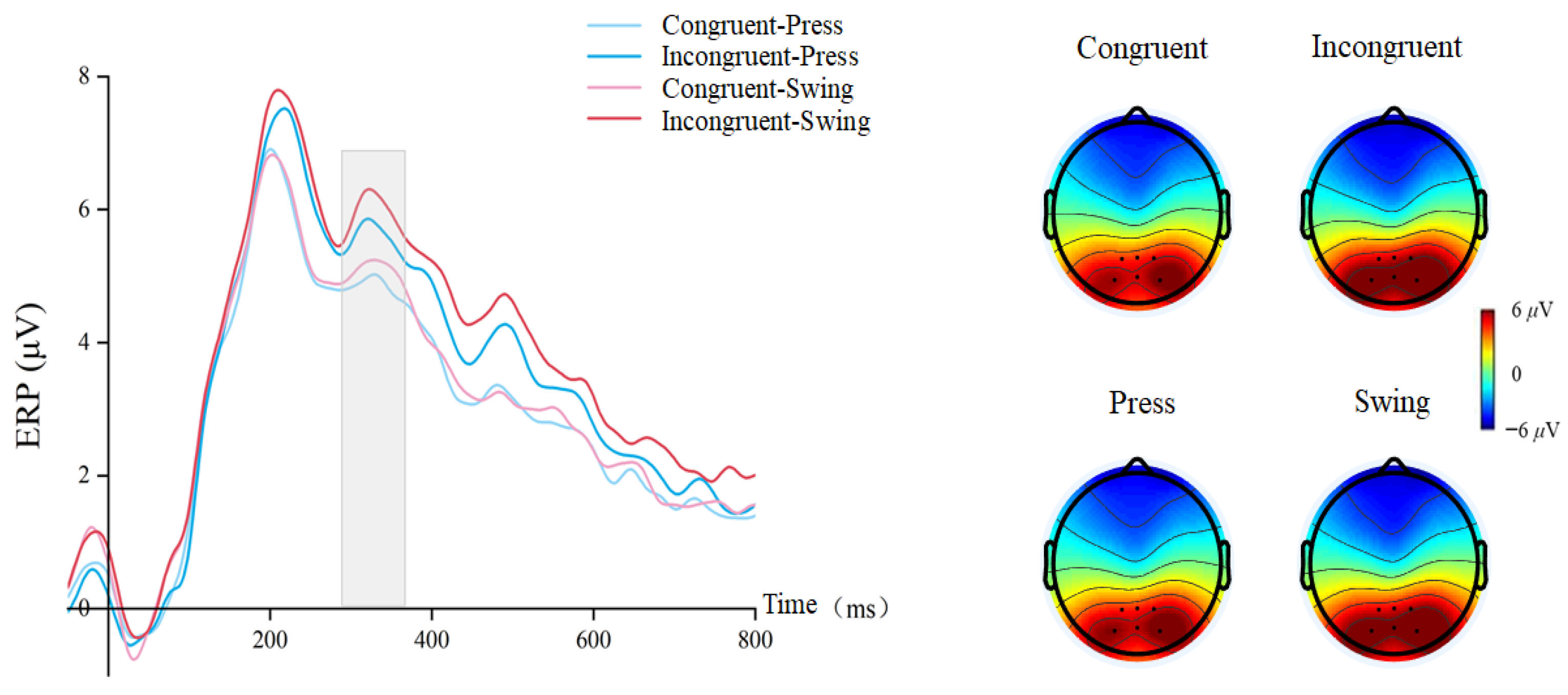

3.2. Electrophysiology Components of the Subliminal Priming Task

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, Y.; Huang, Q.; Gao, S.; Li, A. Embodied Cognition of Manipulative Actions: Subliminal Grasping Semantics Enhance Using-Action Recognition. Brain Sci. 2025, 15, 1206. https://doi.org/10.3390/brainsci15111206
