Overcoming the Challenge of Singing Among Cochlear Implant Users: An Analysis of the Disrupted Feedback Loop and Strategies for Improvement

Abstract

1. Introduction

1.1. Cochlear Implantation: Triumphs and Tribulations

1.2. Structure of the Review

2. Methods

3. The Challenge of Auditory Perception Through an Impoverished Electric Signal

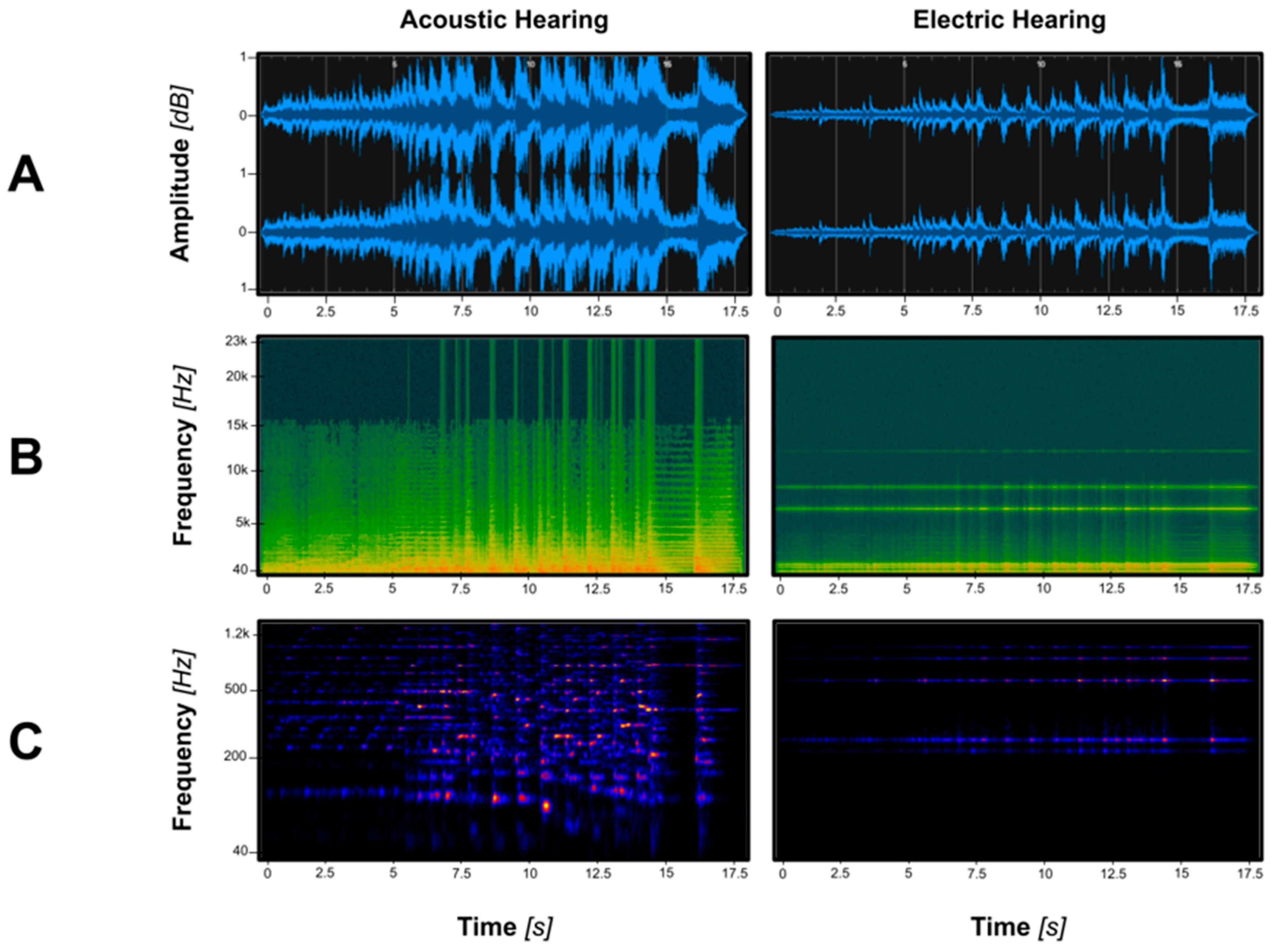

3.1. CI Deficits When Applied to Music Perception

3.2. Music-Related CI Deficits as Applied to Vocal Perception

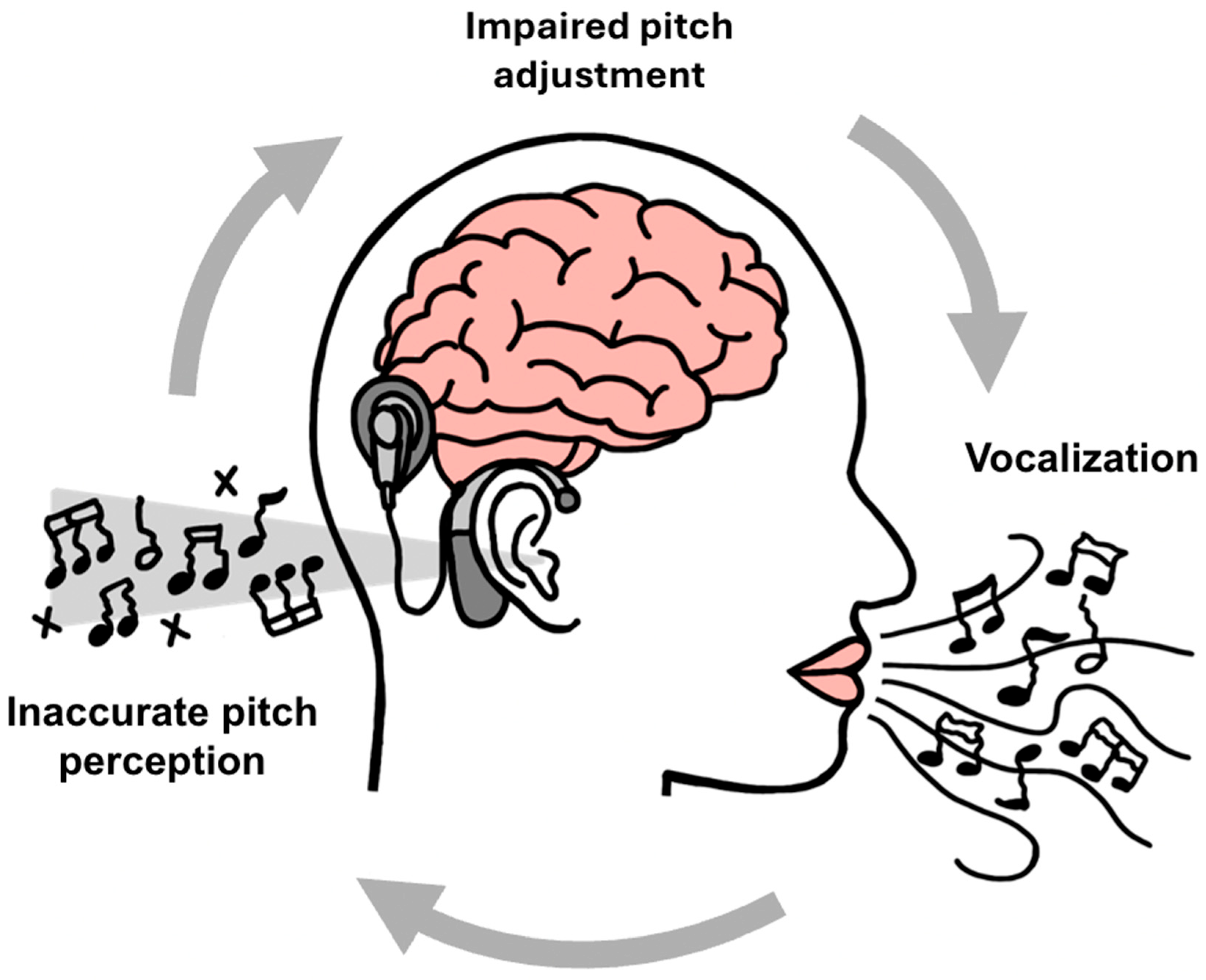

4. The Challenge of Production: The Broken Feedback Loop in Singing

4.1. The Auditory–Motor Feedback Loop: A Foundation for Vocal Control

4.2. The Impact of Degraded Auditory Feedback in Cochlear Implant Users

4.3. Consequences for Vocal Production: An Acoustic Analysis of Singing Deficits

4.3.1. Pervasive Pitch Inaccuracy and Melodic Distortion

4.3.2. Loss of Tonal Center: The Inability to Maintain a Musical Key

4.3.3. Diminished Control over Vocal Quality

5. Pathways to Improvement

5.1. Innovations in Sound-Processing Strategies

5.1.1. Enhancing Spectral Information

5.1.2. Recapturing Temporal Fine Structure (TFS)

5.2. The Role of Targeted Auditory Rehabilitation

5.2.1. From Listener to Participant: The Superiority of Auditory–Motor Training

5.2.2. Curated Listening: Computer-Based Music Training

5.2.3. Finding a Voice: Vocal Therapies and Singing Instruction

5.2.4. The Unique Benefit of Group Singing and Choirs

5.3. Neuroplasticity and Individual Patient Factors

5.3.1. The Sensitive Period for Sound

5.3.2. The Bimodal Advantage

5.3.3. Psychosocial Scaffolding

6. An Integrated Model of Musicality Post-Implantation

6.1. The Relationship Between Perception and Production

6.2. The Disconnect Between Perception and Appraisal

6.3. A Holistic Framework for Auditory Rehabilitation

7. Conclusions and Future Directions

7.1. Summary of Key Findings

7.2. Future Directions

7.3. Challenges of Implementation and Access

7.4. Clinical Implications and Recommendations

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CI | Cochlear Implant |
| CIS | Continuous Interleaved Sampling |
| ACE | Advanced Combination Encoder |
| NH | Normal Hearing |
| TFS | Temporal Fine Structure |
| MBEA | Montreal Battery of Evaluation of Amusia |
| CAMP | Clinical Assessment of Music Perception |
| FSP | Fine Structure Processing |
| CBAT | Computer-Based Music Training |
| CAEP | Cortical Auditory Evoked Potential |
| ICF | International Classification of Functioning |
| NCIQ | Nijmegen Cochlear Implant Questionnaire |
| CIQOL | Cochlear Implant Quality of Life |

References

1. Carlson, M.L. Cochlear implantation in adults. N. Engl. J. Med. 2020, 382, 1531–1542.
2. Boisvert, I.; Reis, M.; Au, A.; Cowan, R.; Dowell, R.C. Cochlear implantation outcomes in adults: A scoping review. PLoS ONE 2020, 15, e0232421.
3. Limb, C.J.; Roy, A.T. Technological, biological, and acoustical constraints to music perception in cochlear implant users. Hear. Res. 2014, 308, 13–26.
4. Gfeller, K.; Turner, C.; Oleson, J.; Kliethermes, S.; Driscoll, V. Accuracy of cochlear implant recipients in speech reception in the presence of background music. Ann. Otol. Rhinol. Laryngol. 2012, 121, 782–791.
5. Viswanathan, V.; Heinz, M.G.; Shinn-Cunningham, B.G. Impact of reduced spectral resolution on temporal-coherence–based source segregation. J. Acoust. Soc. Am. 2024, 156, 3862–3876.
6. Trehub, S.E.; Vongpaisal, T.; Nakata, T. Music in the lives of deaf children with cochlear implants. Ann. N. Y. Acad. Sci. 2009, 1169, 534–542.
7. Wohlbauer, D.M.; Dillier, N. A Hundred Ways to Encode Sound Signals for Cochlear Implants. Annu. Rev. Biomed. Eng. 2025, 27, 335–369.
8. Bleckly, F.; Lo, C.Y.; Rapport, F.; Clay-Williams, R. Music Perception, Appreciation, and Participation in Postlingually Deafened Adults and Cochlear Implant Users: A Systematic Literature Review. Trends Hear. 2024, 28, 23312165241287391.
9. Wright, R.; Uchanski, R.M. Music perception and appraisal: Cochlear implant users and simulated cochlear implant listening. J. Am. Acad. Audiol. 2012, 23, 350–365.
10. Looi, V.; Gfeller, K.; Driscoll, V.D. Music appreciation and training for cochlear implant recipients: A review. In Seminars in Hearing; Thieme Medical Publishers: New York, NY, USA, 2012.
11. Dhanasingh, A.; Nielsen, S.B.; Beal, F.; Schilp, S.; Hessler, R.; Jolly, C.; Hochmair, I. Cochlear implant electrode design for safe and effective treatment. Front. Neurol. 2024, 15, 1348439.
12. Dhanasingh, A.; Jolly, C. An overview of cochlear implant electrode array designs. Hear. Res. 2017, 356, 93–103.
13. Carlyon, R.P.; Deeks, J.M.; Delgutte, B.; Chung, Y.; Vollmer, M.; Ohl, F.W.; Kral, A.; Tillein, J.; Litovsky, R.Y.; Schnupp, J. Limitations on temporal processing by cochlear implant users: A compilation of viewpoints. Trends Hear. 2025, 29, 23312165251317006.
14. Green, T.; Faulkner, A.; Rosen, S.; Macherey, O. Enhancement of temporal periodicity cues in cochlear implants: Effects on prosodic perception and vowel identification. J. Acoust. Soc. Am. 2005, 118, 375–385.
15. Green, T.; Faulkner, A.; Rosen, S. Spectral and temporal cues to pitch in noise-excited vocoder simulations of continuous-interleaved-sampling cochlear implants. J. Acoust. Soc. Am. 2002, 112, 2155–2164.
16. Gransier, R.; Carlyon, R.P.; Wouters, J. Electrophysiological assessment of temporal envelope processing in cochlear implant users. Sci. Rep. 2020, 10, 15406.
17. Hudspeth, A.; Martin, P. The Critical Thing about the Ear’s Sensory Hair Cells. J. Neurosci. 2024, 44, e1583242024.
18. Berg, K.A.; Noble, J.H.; Dawant, B.M.; Sunderhaus, L.W.; DeFreese, A.J.; Labadie, R.F.; Gifford, R.H. Cochlear Implant Electrode Placement and Music Perception. JAMA Otolaryngol.-Head Neck Surg. 2025, 151, 220–227.
19. DeFreese, A.; Berg, K.; Noble, J.; Sunderhaus, L.; Lighterink, M.; Shi, L.; Camarata, S.; Gifford, R. Electrode Placement Factors and Their Impact on Spectral Resolution in Pediatric and Adult Cochlear Implant Users. Otol. Neurotol. 2025. online ahead of print.
20. Fu, Q.-J.; Nogaki, G. Noise susceptibility of cochlear implant users: The role of spectral resolution and smearing. J. Assoc. Res. Otolaryngol. 2005, 6, 19–27.
21. Jiam, N.T.; Caldwell, M.T.; Limb, C.J. What does music sound like for a cochlear implant user? Otol. Neurotol. 2017, 38, e240–e247.
22. Gfeller, K.; Turner, C.; Oleson, J.; Zhang, X.; Gantz, B.; Froman, R.; Olszewski, C. Accuracy of cochlear implant recipients on pitch perception, melody recognition, and speech reception in noise. Ear Hear. 2007, 28, 412–423.
23. Moore, B.C. The role of temporal fine structure processing in pitch perception, masking, and speech perception for normal-hearing and hearing-impaired people. J. Assoc. Res. Otolaryngol. 2008, 9, 399–406.
24. Heng, J.; Cantarero, G.; Elhilali, M.; Limb, C.J. Impaired perception of temporal fine structure and musical timbre in cochlear implant users. Hear. Res. 2011, 280, 192–200.
25. Nie, K.; Barco, A.; Zeng, F.-G. Spectral and temporal cues in cochlear implant speech perception. Ear Hear. 2006, 27, 208–217.
26. Lorenzi, C.; Gilbert, G.; Carn, H.; Garnier, S.; Moore, B.C. Speech perception problems of the hearing impaired reflect inability to use temporal fine structure. Proc. Natl. Acad. Sci. USA 2006, 103, 18866–18869.
27. Cooper, W.B.; Tobey, E.; Loizou, P.C. Music perception by cochlear implant and normal hearing listeners as measured by the Montreal Battery for Evaluation of Amusia. Ear Hear. 2008, 29, 618–626.
28. Kang, R.; Nimmons, G.L.; Drennan, W.; Longnion, J.; Ruffin, C.; Nie, K.; Won, J.H.; Worman, T.; Yueh, B.; Rubinstein, J. Development and validation of the University of Washington Clinical Assessment of Music Perception test. Ear Hear. 2009, 30, 411–418.
29. Zeng, F.-G.; Tang, Q.; Lu, T. Abnormal pitch perception produced by cochlear implant stimulation. PLoS ONE 2014, 9, e88662.
30. Mehta, A.H.; Oxenham, A.J. Vocoder simulations explain complex pitch perception limitations experienced by cochlear implant users. J. Assoc. Res. Otolaryngol. 2017, 18, 789–802.
31. Landsberger, D.M.; Svrakic, M.; Roland, J.T., Jr.; Svirsky, M. The relationship between insertion angles, default frequency allocations, and spiral ganglion place pitch in cochlear implants. Ear Hear. 2015, 36, e207–e213.
32. Dutrieux, N.; Quatre, R.; Péan, V.; Schmerber, S. Correlation between cochlear length, insertion angle, and tonotopic mismatch for MED-EL FLEX28 electrode arrays. Otol. Neurotol. 2022, 43, 48–55.
33. Jiam, N.T.; Gilbert, M.; Cooke, D.; Jiradejvong, P.; Barrett, K.; Caldwell, M.; Limb, C.J. Association between flat-panel computed tomographic imaging–guided place-pitch mapping and speech and pitch perception in cochlear implant users. JAMA Otolaryngol.-Head Neck Surg. 2019, 145, 109–116.
34. Reiss, L.A.; Turner, C.W.; Erenberg, S.R.; Gantz, B.J. Changes in pitch with a cochlear implant over time. J. Assoc. Res. Otolaryngol. 2007, 8, 241–257.
35. Reiss, L.A.; Turner, C.W.; Karsten, S.A.; Gantz, B.J. Plasticity in human pitch perception induced by tonotopically mismatched electro-acoustic stimulation. Neuroscience 2014, 256, 43–52.
36. Tan, C.-T.; Martin, B.; Svirsky, M.A. Pitch matching between electrical stimulation of a cochlear implant and acoustic stimuli presented to a contralateral ear with residual hearing. J. Am. Acad. Audiol. 2017, 28, 187–199.
37. Galvin, J.J., III; Fu, Q.J.; Shannon, R.V. Melodic contour identification and music perception by cochlear implant users. Ann. N. Y. Acad. Sci. 2009, 1169, 518–533.
38. Caldwell, M.T.; Jiradejvong, P.; Limb, C.J. Impaired perception of sensory consonance and dissonance in cochlear implant users. Otol. Neurotol. 2016, 37, 229–234.
39. Spitzer, E.R.; Landsberger, D.M.; Friedmann, D.R.; Galvin, J.J., III. Pleasantness ratings for harmonic intervals with acoustic and electric hearing in unilaterally deaf cochlear implant patients. Front. Neurosci. 2019, 13, 922.
40. Donnelly, P.J.; Guo, B.Z.; Limb, C.J. Perceptual fusion of polyphonic pitch in cochlear implant users. J. Acoust. Soc. Am. 2009, 126, EL128–EL133.
41. Paquette, S.; Ahmed, G.; Goffi-Gomez, M.; Hoshino, A.; Peretz, I.; Lehmann, A. Musical and vocal emotion perception for cochlear implants users. Hear. Res. 2018, 370, 272–282.
42. Alter, I.L.; Chern, A.; Kuhlmey, M.E.; Despotidis, M.A.; Kelly, S.; Denham, M.W.; Leiderman, A.S.; Lalwani, A.K. Emotional Responses to Music Are Diminished in Bilateral Cochlear Implant Users. Audiol. Neurotol. 2025, 30, 381–391.
43. Prentiss, S.M.; Friedland, D.R.; Fullmer, T.; Crane, A.; Stoddard, T.; Runge, C.L. Temporal and spectral contributions to musical instrument identification and discrimination among cochlear implant users. World J. Otorhinolaryngol.-Head Neck Surg. 2016, 2, 148–156.
44. Kim, S.J.; Cho, Y.S.; Kim, E.Y.; Yoo, G.E. Can young adolescents with cochlear implants perceive different timbral cues? Cochlear Implant. Int. 2015, 16, 61–68.
45. Jiam, N.T.; Limb, C.J. Rhythm processing in cochlear implant-mediated music perception. Ann. N. Y. Acad. Sci. 2019, 1453, 22–28.
46. Caldwell, M.; Rankin, S.K.; Jiradejvong, P.; Carver, C.; Limb, C.J. Cochlear implant users rely on tempo rather than on pitch information during perception of musical emotion. Cochlear Implant. Int. 2015, 16, S114–S120.
47. Giannantonio, S.; Polonenko, M.J.; Papsin, B.C.; Paludetti, G.; Gordon, K.A. Experience changes how emotion in music is judged: Evidence from children listening with bilateral cochlear implants, bimodal devices, and normal hearing. PLoS ONE 2015, 10, e0136685.
48. Jiam, N.; Caldwell, M.; Deroche, M.; Chatterjee, M.; Limb, C. Voice emotion perception and production in cochlear implant users. Hear. Res. 2017, 352, 30–39.
49. Kalathottukaren, R.T.; Purdy, S.C.; Ballard, E. Prosody perception and musical pitch discrimination in adults using cochlear implants. Int. J. Audiol. 2015, 54, 444–452.
50. Planalp, S. Varieties of cues to emotion in naturally occurring situations. Cogn. Emot. 1996, 10, 137–154.
51. Everhardt, M.K.; Sarampalis, A.; Coler, M.; Başkent, D.; Lowie, W. Meta-Analysis on the Identification of Linguistic and Emotional Prosody in Cochlear Implant Users and Vocoder Simulations; LWW: Philadelphia, PA, USA, 2020; pp. 1092–1102.
52. Harding, E.E.; Gaudrain, E.; Tillmann, B.; Maat, B.; Harris, R.L.; Free, R.H.; Başkent, D. Vocal and musical emotion perception, voice cue discrimination, and quality of life in cochlear implant users with and without acoustic hearing. Q. J. Exp. Psychol. 2024. online ahead of print.
53. Luo, X.; Fu, Q.-J.; Galvin, J.J., III. Cochlear implants special issue article: Vocal emotion recognition by normal-hearing listeners and cochlear implant users. Trends Amplif. 2007, 11, 301–315.
54. Chatterjee, M.; Zion, D.J.; Deroche, M.L.; Burianek, B.A.; Limb, C.J.; Goren, A.P.; Kulkarni, A.M.; Christensen, J.A. Voice emotion recognition by cochlear-implanted children and their normally-hearing peers. Hear. Res. 2015, 322, 151–162.
55. Barrett, K.C.; Chatterjee, M.; Caldwell, M.T.; Deroche, M.L.; Jiradejvong, P.; Kulkarni, A.M.; Limb, C.J. Perception of child-directed versus adult-directed emotional speech in pediatric cochlear implant users. Ear Hear. 2020, 41, 1372–1382.
56. Zarate, J.M.; Zatorre, R.J. Experience-dependent neural substrates involved in vocal pitch regulation during singing. Neuroimage 2008, 40, 1871–1887.
57. Guenther, F.H. Cortical interactions underlying the production of speech sounds. J. Commun. Disord. 2006, 39, 350–365.
58. Hickok, G.; Houde, J.; Rong, F. Sensorimotor integration in speech processing: Computational basis and neural organization. Neuron 2011, 69, 407–422.
59. Gautam, A.; Brant, J.A.; Ruckenstein, M.J.; Eliades, S.J. Real-time feedback control of voice in cochlear implant recipients. Laryngoscope Investig. Otolaryngol. 2020, 5, 1156–1162.
60. Xu, L.; Yang, J.; Hahn, E.; Uchanski, R.; Davidson, L. Pitch accuracy of vocal singing in deaf children with bimodal hearing and bilateral cochlear implants. Ear Hear. 2022, 43, 1336–1346.
61. Mao, Y.; Zhang, M.; Nutter, H.; Zhang, Y.; Zhou, Q.; Liu, Q.; Wu, W.; Xie, D.; Xu, L. Acoustic properties of vocal singing in prelingually-deafened children with cochlear implants or hearing aids. Int. J. Pediatr. Otorhinolaryngol. 2013, 77, 1833–1840.
62. Holden, L.K.; Firszt, J.B.; Reeder, R.M.; Uchanski, R.M.; Dwyer, N.Y.; Holden, T.A. Factors affecting outcomes in cochlear implant recipients implanted with a perimodiolar electrode array located in scala tympani. Otol. Neurotol. 2016, 37, 1662–1668.
63. Boisvert, I.; McMahon, C.M.; Dowell, R.C.; Lyxell, B. Long-term asymmetric hearing affects cochlear implantation outcomes differently in adults with pre-and postlingual hearing loss. PLoS ONE 2015, 10, e0129167.
64. Kenway, B.; Tam, Y.C.; Vanat, Z.; Harris, F.; Gray, R.; Birchall, J.; Carlyon, R.; Axon, P. Pitch discrimination: An independent factor in cochlear implant performance outcomes. Otol. Neurotol. 2015, 36, 1472–1479.
65. Xu, L.; Zhou, N.; Chen, X.; Li, Y.; Schultz, H.M.; Zhao, X.; Han, D. Vocal singing by prelingually-deafened children with cochlear implants. Hear. Res. 2009, 255, 129–134.
66. Shakrawal, N.; Sonkhya, N.; Agarwal, S.; Grover, M. The effect of age at cochlear implantation on speech and auditory performances in prelingually deaf children. Indian J. Otolaryngol. Head Neck Surg. 2022, 74, 52–61.
67. Aronoff, J.M.; Kirchner, A.; Abbs, E.; Harmon, B. When singing with cochlear implants, are two ears worse than one for perilingually/postlingually deaf individuals? J. Acoust. Soc. Am. 2018, 143, EL503–EL508.
68. Van De Velde, D.J.; Schiller, N.O.; Levelt, C.C.; Van Heuven, V.J.; Beers, M.; Briaire, J.J.; Frijns, J.H. Prosody perception and production by children with cochlear implants. J. Child Lang. 2019, 46, 111–141.
69. Campisi, P.; Low, A.; Papsin, B.; Mount, R.; Cohen-Kerem, R.; Harrison, R. Acoustic analysis of the voice in pediatric cochlear implant recipients: A longitudinal study. Laryngoscope 2005, 115, 1046–1050.
70. Allegro, J.; Papsin, B.; Harrison, R.; Campisi, P. Acoustic analysis of voice in cochlear implant recipients with post-meningitic hearing loss. Cochlear Implant. Int. 2009, 11, 100–116.
71. Baudonck, N.; D’haeseleer, E.; Dhooge, I.; Van Lierde, K. Objective vocal quality in children using cochlear implants: A multiparameter approach. J. Voice 2011, 25, 683–691.
72. Chari, D.A.; Barrett, K.C.; Patel, A.D.; Colgrove, T.R.; Jiradejvong, P.; Jacobs, L.Y.; Limb, C.J. Impact of auditory-motor musical training on melodic pattern recognition in cochlear implant users. Otol. Neurotol. 2020, 41, e422–e431.
73. Sharma, A.; Nash, A.A.; Dorman, M. Cortical development, plasticity and re-organization in children with cochlear implants. J. Commun. Disord. 2009, 42, 272–279.
74. Brown, C.J.; Jeon, E.-K.; Driscoll, V.; Mussoi, B.; Deshpande, S.B.; Gfeller, K.; Abbas, P.J. Effects of long-term musical training on cortical auditory evoked potentials. Ear Hear. 2017, 38, e74–e84.
75. Gfeller, K.; Witt, S.; Adamek, M.; Mehr, M.; Rogers, J.; Stordahl, J.; Ringgenberg, S. Effects of training on timbre recognition and appraisal by postlingually deafened cochlear implant recipients. J. Am. Acad. Audiol. 2002, 13, 132–145.
76. Galvin, J.J., III; Fu, Q.-J.; Nogaki, G. Melodic contour identification by cochlear implant listeners. Ear Hear. 2007, 28, 302–319.
77. Arenberg Bierer, J. Probing the electrode-neuron interface with focused cochlear implant stimulation. Trends Amplif. 2010, 14, 84–95.
78. Firszt, J.B.; Koch, D.B.; Downing, M.; Litvak, L. Current steering creates additional pitch percepts in adult cochlear implant recipients. Otol. Neurotol. 2007, 28, 629–636.
79. Sharma, A.; Dorman, M.F. Central auditory development in children with cochlear implants: Clinical implications. Adv. Oto Rhino Laryngol. 2006, 64, 66–88.
80. Torppa, R.; Huotilainen, M. Why and how music can be used to rehabilitate and develop speech and language skills in hearing-impaired children. Hear. Res. 2019, 380, 108–122.
81. Bartov, T.; Most, T. Song recognition by young children with cochlear implants: Comparison between unilateral, bilateral, and bimodal users. J. Speech Lang. Hear. Res. 2014, 57, 1929–1941.
82. Gagnon, E.B.; Eskridge, H.; Brown, K.D.; Park, L.R. The impact of cumulative cochlear implant wear time on spoken language outcomes at age 3 years. J. Speech Lang. Hear. Res. 2021, 64, 1369–1375.
83. Gfeller, K.; Mallalieu, R. Psychosocial and auditory factors that influence successful music-based auditory training in pediatric cochlear implant recipients. Front. Hum. Neurosci. 2023, 17, 1308712.
84. Lo, C.Y.; McMahon, C.M.; Looi, V.; Thompson, W.F. Melodic contour training and its effect on speech in noise, consonant discrimination, and prosody perception for cochlear implant recipients. Behav. Neurol. 2015, 2015, 352869.
85. Vandali, A.; Sly, D.; Cowan, R.; Van Hoesel, R. Training of cochlear implant users to improve pitch perception in the presence of competing place cues. Ear Hear. 2015, 36, e1–e13.
86. Magele, A.; Wirthner, B.; Schoerg, P.; Sprinzl, G.M. Effects of Musical Training in Music Therapy Following Cochlear Implantation—A Case Report. Audiol. Res. 2024, 14, 217–226.
87. Yang, J.; Liang, Q.; Chen, H.; Liu, Y.; Xu, L. Singing proficiency of members of a choir formed by prelingually deafened children with cochlear implants. J. Speech Lang. Hear. Res. 2019, 62, 1561–1573.
88. Fallon, J.B.; Irvine, D.R.; Shepherd, R.K. Cochlear implants and brain plasticity. Hear. Res. 2008, 238, 110–117.
89. Glennon, E.; Svirsky, M.A.; Froemke, R.C. Auditory cortical plasticity in cochlear implant users. Curr. Opin. Neurobiol. 2020, 60, 108–114.
90. Looi, V.; Torppa, R.; Prvan, T.; Vickers, D. The role of music in families of children with hearing loss and normal hearing in Australia, Finland, and the UK. Front. Neurosci. 2019, 13, 1002.
91. Chatterjee, M.; Feller, A.; Kulkarni, A.M.; Galvin, J.J. Emotional prosody perception and production are linked in prelingually deaf children with cochlear implants. JASA Express Lett. 2023, 3, 127601.
92. Lo, C.Y.; Looi, V.; Thompson, W.F.; McMahon, C.M. Music training for children with sensorineural hearing loss improves speech-in-noise perception. J. Speech Lang. Hear. Res. 2020, 63, 1990–2015.
93. World Health Organization. International Classification of Functioning, Disability, and Health: Children & Youth Version: ICF-CY; World Health Organization: Geneva, Switzerland, 2007.
94. Hinderink, J.B.; Krabbe, P.F.; Van Den Broek, P. Development and application of a health-related quality-of-life instrument for adults with cochlear implants: The Nijmegen cochlear implant questionnaire. Otolaryngol.-Head Neck Surg. 2000, 123, 756–765.
95. McRackan, T.R.; Hand, B.N.; Velozo, C.A.; Dubno, J.R. Development of the cochlear implant quality of life item bank. Ear Hear. 2019, 40, 1016–1024.
96. Dritsakis, G.; van Besouw, R.M.; Kitterick, P.; Verschuur, C.A. A Music-Related Quality of Life measure to guide music rehabilitation for adult CI users. Am. J. Audiol. 2017, 26, 268–282.
97. D’Onofrio, K.L.; Gifford, R.H. Bimodal benefit for music perception: Effect of acoustic bandwidth. J. Speech Lang. Hear. Res. 2021, 64, 1341–1353.
98. Quimby, A.E.; Venkatesh, S.; Corsten, M.; McDonald, J.T.; Hwa, T.P.; Bigelow, D.C.; Ruckenstein, M.J.; Johnson-Obaseki, S.; Brant, J.A. Socioeconomic status among cochlear implant candidates and association with surgical pursuance. JAMA Otolaryngol.-Head Neck Surg. 2023, 149, 891–898.
99. Dornhoffer, J.R.; Kinkhabwala, C.M.; Chidarala, S.; Ma, C.; Schvartz-Leyzac, K.C.; Meyer, T.A.; Sterba, K.R.; Dubno, J.R.; McRackan, T.R. Patient-related factors do not predict use of computer-based auditory training by new adult cochlear implant recipients. Otol. Neurotol. 2023, 44, e81–e87.
100. Dornhoffer, J.R.; Chidarala, S.; Patel, T.; Khandalavala, K.R.; Nguyen, S.A.; Schvartz-Leyzac, K.C.; Dubno, J.R.; Carlson, M.L.; Moberly, A.C.; McRackan, T.R. Systematic review of auditory training outcomes in adult cochlear implant recipients and meta-analysis of outcomes. J. Clin. Med. 2024, 13, 400.
101. Sorkin, D.L. Cochlear implantation in the world’s largest medical device market: Utilization and awareness of cochlear implants in the United States. Cochlear Implant. Int. 2013, 14, S12–S14.
102. Sorkin, D.L.; Buchman, C.A. Cochlear implant access in six developed countries. Otol. Neurotol. 2016, 37, e161–e164.

| Auditory Domain | Specific Perceptual Deficit | Primary CI Limitation(s) | Real-World Consequence |
|---|---|---|---|
| Pitch & Melody [23] | Poor melody recognition; difficulty discriminating small pitch changes. | Poor spectral resolution; loss of Temporal Fine Structure (TFS). | Music sounds “out of tune”; difficulty recognizing familiar songs without lyrics. |
| Timbre [24] | Difficulty distinguishing between different musical instruments or singers’ voices. | Coarse spectral resolution; channel interaction (“spectral smearing”). | Instruments sound muddled or similar; difficulty appreciating orchestral texture. |
| Vocal Prosody [25] | Impaired recognition of vocal emotion, sarcasm, and pragmatic intent. | Poor pitch perception due to degraded spectral cues and loss of TFS. | Frequent misunderstandings in conversation; reduced social connection and empathy. |
| Speech-in-Noise [26] | Significantly reduced ability to understand speech in the presence of background noise. | All of the above; inability to use fine structure cues to separate sound sources. | Difficulty in restaurants, group conversations, and other complex listening environments. |

| Authors | Design/Methodology | Population | Key Quantifiable Result (CI Users) | Limitations/Nuances |
|---|---|---|---|---|
| Gautam et al. (2020) [59] | Real-time sensorimotor adaptation paradigm using vocal pitch perturbation. | Adult CI recipients. | Compensatory vocal response initiated only at 6-semitone feedback shifts. | Small, specialized cohort; measures compensatory response threshold, not everyday pitch accuracy. |
| Xu et al. (2009, 2022) [60,65] | Acoustic analysis of sung melodies; correlation analysis of residual hearing. | Pediatric CI users (prelingually deafened), including bimodal and bilateral users. | Mean note deviation: 2.3 to 2.7 semitones; melodic contour accuracy: 52.3%. Bimodal advantage correlated with residual hearing (r = 0.582). | Focus on prelingually deaf children, whose developmental trajectories differ from adults. Wide variability in individual performance. |
| Gfeller et al. (2007) [22] | Auditory discrimination tasks (pure-tone frequency difference limens). | Adult CI users. | Pitch discrimination threshold: ~3 semitones. | Measures perception of isolated tones in a controlled setting, which may overestimate ability to process complex pitch in real music. |
| Chari et al. (2020) [72] | Controlled training study comparing auditory–motor vs. auditory-only training. | Adult CI users. | Auditory–motor training provided significantly greater improvement in melodic contour recognition. | Short training duration (1 month); findings relate to perception (recognition) improvement, not directly to sustained production (singing) accuracy. |
| Sharma et al. (2009) [73] | P1 Cortical Auditory Evoked Potential (CAEP) biomarker measurement. | Pediatric CI users. | Sensitive period closes around age 7; best outcomes when implanted before 3.5 years. | CAEP is a neurophysiological biomarker; correlation with behavioral singing outcome requires further long-term study. |
| Wright & Uchanski (2012) [9] | Objective perception tests (melody, timbre, rhythm) alongside subjective appraisal ratings. | Adult CI users and simulated CI listeners. | Objective scores did not correlate statistically with subjective appraisal ratings (perception–appraisal gap). | Relies on subjective, self-reported appraisal ratings; inclusion of CI simulators may not perfectly mirror electric hearing. |
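
Several results in the table above are reported in semitones (note deviation, discrimination threshold, feedback shift) or as a melodic contour accuracy. As a rough illustration of how such figures can be derived from fundamental-frequency estimates, the sketch below uses the standard equal-tempered relation, 12·log2(f_sung / f_target). The function names, the per-note input format, and the sign-based contour rule are assumptions made for this example; they are not the scoring procedures of the cited studies.

```python
import math
from typing import Sequence


def semitone_deviation(sung_hz: float, target_hz: float) -> float:
    """Signed distance from target to sung pitch in equal-tempered semitones."""
    if sung_hz <= 0 or target_hz <= 0:
        raise ValueError("frequencies must be positive")
    return 12.0 * math.log2(sung_hz / target_hz)


def mean_note_deviation(sung_hz: Sequence[float], target_hz: Sequence[float]) -> float:
    """Mean absolute deviation in semitones over paired notes (hypothetical metric)."""
    if len(sung_hz) != len(target_hz) or len(sung_hz) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    total = sum(abs(semitone_deviation(s, t)) for s, t in zip(sung_hz, target_hz))
    return total / len(sung_hz)


def _direction(a: float, b: float) -> int:
    """Return +1 for a rising step, -1 for a falling step, 0 for a repeated pitch."""
    return (b > a) - (b < a)


def contour_accuracy(sung_hz: Sequence[float], target_hz: Sequence[float]) -> float:
    """Fraction of note-to-note steps whose direction matches the target melody.

    Assumption: direction is judged by sign only, with no tolerance band.
    """
    if len(sung_hz) != len(target_hz) or len(sung_hz) < 2:
        raise ValueError("need two sequences of equal length with at least 2 notes")
    matches = sum(
        _direction(sung_hz[i], sung_hz[i + 1]) == _direction(target_hz[i], target_hz[i + 1])
        for i in range(len(sung_hz) - 1)
    )
    return matches / (len(sung_hz) - 1)
```

For instance, a singer who produces 880 Hz against a 440 Hz target is one octave sharp, so `semitone_deviation(880.0, 440.0)` gives 12 semitones. In practice the per-note frequencies would come from a pitch tracker, which this sketch does not cover.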


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Younan, S.M.; Lin, E.Y.; Barry, B.; Kurup, A.; Barrett, K.C.; Jiam, N.T. Overcoming the Challenge of Singing Among Cochlear Implant Users: An Analysis of the Disrupted Feedback Loop and Strategies for Improvement. Brain Sci. 2025, 15, 1192. https://doi.org/10.3390/brainsci15111192
