A Review on Smartphone Keystroke Dynamics as a Digital Biomarker for Understanding Neurocognitive Functioning

Abstract

1. Introduction

1.1. Defining Cognition and Its Domains

1.2. Intraindividual Variability

2. Methods

2.1. Search Strategy, Eligibility Criteria, and Selection Process

2.2. Data Extraction

3. Keystroke Dynamics and Affected Cognitive Domains in Neurodegenerative Disorders

3.1. Alzheimer’s Disease and Mild Cognitive Impairment

3.2. Multiple Sclerosis

4. Keystroke Dynamics and Affected Cognitive Domains in Mood Disorders

5. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Pew Research Center. Mobile Fact Sheet; Pew Research Center: Washington, DC, USA, 2021.

2. Shiffman, S.; Stone, A.A.; Hufford, M.R. Ecological Momentary Assessment. Annu. Rev. Clin. Psychol. 2008, 4, 1–32.

3. Meyerowitz-Katz, G.; Ravi, S.; Arnolda, L.; Feng, X.; Maberly, G.; Astell-Burt, T. Rates of Attrition and Dropout in App-Based Interventions for Chronic Disease: Systematic Review and Meta-Analysis. J. Med. Internet Res. 2020, 22, e20283.

4. Khokhlov, I.; Reznik, L.; Ajmera, S. Sensors in Mobile Devices Knowledge Base. IEEE Sens. Lett. 2020, 4, 1–4.

5. Parry, D.A.; Davidson, B.I.; Sewall, C.J.R.; Fisher, J.T.; Mieczkowski, H.; Quintana, D.S. A systematic review and meta-analysis of discrepancies between logged and self-reported digital media use. Nat. Hum. Behav. 2021, 5, 1535–1547.

6. Slegers, K.; van Boxtel, M.; Jolles, J. Effects of computer training and internet usage on cognitive abilities in older adults: A randomized controlled study. Aging Clin. Exp. Res. 2009, 21, 43–54.

7. Kliegel, M.; McDaniel, M.A.; Einstein, G.O. Prospective Memory: Cognitive, Neuroscience, Developmental, and Applied Perspectives; Psychology Press: London, UK, 2007.

8. Dagum, P. Digital biomarkers of cognitive function. NPJ Digit. Med. 2018, 1, 10.

9. Folstein, M.F.; Folstein, S.E.; McHugh, P.R. “Mini-mental state”: A practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 1975, 12, 189–198.

10. Hodkinson, H.M. Evaluation of a Mental Test Score for Assessment of Mental Impairment in the Elderly. Age Ageing 1972, 1, 233–238.

11. Kahn, R.L.; Goldfarb, A.I.; Pollack, M.A.X.; Peck, A. Brief Objective Measures for the Determination of Mental Status in the Aged. Am. J. Psychiatry 1960, 117, 326–328.

12. Pfeiffer, E. A Short Portable Mental Status Questionnaire for the Assessment of Organic Brain Deficit in Elderly Patients. J. Am. Geriatr. Soc. 1975, 23, 433–441.

13. Nasreddine, Z.S.; Phillips, N.A.; Bedirian, V.; Charbonneau, S.; Whitehead, V.; Collin, I.; Cummings, J.L.; Chertkow, H. The Montreal Cognitive Assessment, MoCA: A brief screening tool for mild cognitive impairment. J. Am. Geriatr. Soc. 2005, 53, 695–699.

14. Jiang, X.; Jokinen, J.P.P.; Oulasvirta, A.; Ren, X. Learning to type with mobile keyboards: Findings with a randomized keyboard. Comput. Hum. Behav. 2022, 126, 106992.

15. Alfalahi, H.; Khandoker, A.H.; Chowdhury, N.; Iakovakis, D.; Dias, S.B.; Chaudhuri, K.R.; Hadjileontiadis, L.J. Diagnostic accuracy of keystroke dynamics as digital biomarkers for fine motor decline in neuropsychiatric disorders: A systematic review and meta-analysis. Sci. Rep. 2022, 12, 7690.

16. National Institute of Mental Health. Definitions of the RDoC Domains and Constructs; National Institute of Mental Health: Bethesda, MD, USA, 2022.

17. Sanislow, C.A.; Pine, D.S.; Quinn, K.J.; Kozak, M.J.; Garvey, M.A.; Heinssen, R.K.; Wang, P.S.; Cuthbert, B.N. Developing constructs for psychopathology research: Research domain criteria. J. Abnorm. Psychol. 2010, 119, 631–639.

18. Bublak, P.; Redel, P.; Finke, K. Spatial and non-spatial attention deficits in neurodegenerative diseases: Assessment based on Bundesen’s theory of visual attention (TVA). Restor. Neurol. Neurosci. 2006, 24, 287–301.

19. Uc, E.Y.; Rizzo, M. Driving and neurodegenerative diseases. Curr. Neurol. Neurosci. Rep. 2008, 8, 377–383.

20. O’Callaghan, C.; Kveraga, K.; Shine, J.M.; Adams, R.B., Jr.; Bar, M. Predictions penetrate perception: Converging insights from brain, behaviour and disorder. Conscious. Cogn. 2017, 47, 63–74.

21. Lazar, S.M.; Evans, D.W.; Myers, S.M.; Moreno-De Luca, A.; Moore, G.J. Social cognition and neural substrates of face perception: Implications for neurodevelopmental and neuropsychiatric disorders. Behav. Brain Res. 2014, 263, 1–8.

22. Robbins, T.W.; Arnsten, A.F. The neuropsychopharmacology of fronto-executive function: Monoaminergic modulation. Annu. Rev. Neurosci. 2009, 32, 267–287.

23. Ahmed, S.; Haigh, A.M.; de Jager, C.A.; Garrard, P. Connected speech as a marker of disease progression in autopsy-proven Alzheimer’s disease. Brain 2013, 136, 3727–3737.

24. Sperling, R.; Mormino, E.; Johnson, K. The evolution of preclinical Alzheimer’s disease: Implications for prevention trials. Neuron 2014, 84, 608–622.

25. Chan, J.C.S.; Stout, J.C.; Vogel, A.P. Speech in prodromal and symptomatic Huntington’s disease as a model of measuring onset and progression in dominantly inherited neurodegenerative diseases. Neurosci. Biobehav. Rev. 2019, 107, 450–460.

26. Montero-Odasso, M.; Verghese, J.; Beauchet, O.; Hausdorff, J.M. Gait and cognition: A complementary approach to understanding brain function and the risk of falling. J. Am. Geriatr. Soc. 2012, 60, 2127–2136.

27. Nestor, P.J.; Fryer, T.D.; Hodges, J.R. Declarative memory impairments in Alzheimer’s disease and semantic dementia. Neuroimage 2006, 30, 1010–1020.

28. Christopher, G.; MacDonald, J. The impact of clinical depression on working memory. Cogn. Neuropsychiatry 2005, 10, 379–399.

29. Hultsch, D.F.; MacDonald, S.W.; Hunter, M.A.; Levy-Bencheton, J.; Strauss, E. Intraindividual variability in cognitive performance in older adults: Comparison of adults with mild dementia, adults with arthritis, and healthy adults. Neuropsychology 2000, 14, 588–598.

30. Christ, B.U.; Combrinck, M.I.; Thomas, K.G.F. Both Reaction Time and Accuracy Measures of Intraindividual Variability Predict Cognitive Performance in Alzheimer’s Disease. Front. Hum. Neurosci. 2018, 12, 124.

31. Duchek, J.M.; Balota, D.A.; Tse, C.S.; Holtzman, D.M.; Fagan, A.M.; Goate, A.M. The utility of intraindividual variability in selective attention tasks as an early marker for Alzheimer’s disease. Neuropsychology 2009, 23, 746–758.

32. Mazerolle, E.L.; Wojtowicz, M.A.; Omisade, A.; Fisk, J.D. Intra-individual variability in information processing speed reflects white matter microstructure in multiple sclerosis. Neuroimage Clin. 2013, 2, 894–902.

33. Holtzer, R.; Foley, F.; D’Orio, V.; Spat, J.; Shuman, M.; Wang, C. Learning and cognitive fatigue trajectories in multiple sclerosis defined using a burst measurement design. Mult. Scler. 2013, 19, 1518–1525.

34. De Meijer, L.; Merlo, D.; Skibina, O.; Grobbee, E.J.; Gale, J.; Haartsen, J.; Maruff, P.; Darby, D.; Butzkueven, H.; Van der Walt, A. Monitoring cognitive change in multiple sclerosis using a computerized cognitive battery. Mult. Scler. J. Exp. Transl. Clin. 2018, 4, 2055217318815513.

35. Riegler, K.E.; Cadden, M.; Guty, E.T.; Bruce, J.M.; Arnett, P.A. Perceived Fatigue Impact and Cognitive Variability in Multiple Sclerosis. J. Int. Neuropsychol. Soc. 2022, 28, 281–291.

36. Wojtowicz, M.; Mazerolle, E.L.; Bhan, V.; Fisk, J.D. Altered functional connectivity and performance variability in relapsing-remitting multiple sclerosis. Mult. Scler. 2014, 20, 1453–1463.

37. Chow, R.; Rabi, R.; Paracha, S.; Vasquez, B.P.; Hasher, L.; Alain, C.; Anderson, N.D. Reaction Time Intraindividual Variability Reveals Inhibitory Deficits in Single- and Multiple-Domain Amnestic Mild Cognitive Impairment. J. Gerontol. B Psychol. Sci. Soc. Sci. 2022, 77, 71–83.

38. Haynes, B.I.; Bauermeister, S.; Bunce, D. A Systematic Review of Longitudinal Associations Between Reaction Time Intraindividual Variability and Age-Related Cognitive Decline or Impairment, Dementia, and Mortality. J. Int. Neuropsychol. Soc. 2017, 23, 431–445.

39. Christensen, H.; Dear, K.B.; Anstey, K.J.; Parslow, R.A.; Sachdev, P.; Jorm, A.F. Within-occasion intraindividual variability and preclinical diagnostic status: Is intraindividual variability an indicator of mild cognitive impairment? Neuropsychology 2005, 19, 309–317.

40. Lovden, M.; Li, S.C.; Shing, Y.L.; Lindenberger, U. Within-person trial-to-trial variability precedes and predicts cognitive decline in old and very old age: Longitudinal data from the Berlin Aging Study. Neuropsychologia 2007, 45, 2827–2838.

41. Bielak, A.A.; Hultsch, D.F.; Strauss, E.; Macdonald, S.W.; Hunter, M.A. Intraindividual variability in reaction time predicts cognitive outcomes 5 years later. Neuropsychology 2010, 24, 731–741.

42. Scott, B.M.; Austin, T.; Royall, D.R.; Hilsabeck, R.C. Cognitive intraindividual variability as a potential biomarker for early detection of cognitive and functional decline. Neuropsychology 2023, 37, 52–63.

43. Weizenbaum, E.L.; Fulford, D.; Torous, J.; Pinsky, E.; Kolachalama, V.B.; Cronin-Golomb, A. Smartphone-Based Neuropsychological Assessment in Parkinson’s Disease: Feasibility, Validity, and Contextually Driven Variability in Cognition. J. Int. Neuropsychol. Soc. 2022, 28, 401–413.

44. Moore, R.C.; Ackerman, R.A.; Russell, M.T.; Campbell, L.M.; Depp, C.A.; Harvey, P.D.; Pinkham, A.E. Feasibility and validity of ecological momentary cognitive testing among older adults with mild cognitive impairment. Front. Digit. Health 2022, 4, 946685.

45. Moore, R.C.; Swendsen, J.; Depp, C.A. Applications for self-administered mobile cognitive assessments in clinical research: A systematic review. Int. J. Methods Psychiatr. Res. 2017, 26, e1562.

46. Chen, R.; Jankovic, F.; Marinsek, N.; Foschini, L.; Kourtis, L.; Signorini, A.; Pugh, M.; Shen, J.; Yaari, R.; Maljkovic, V.; et al. Developing Measures of Cognitive Impairment in the Real World from Consumer-Grade Multimodal Sensor Streams. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2145–2155.

47. Ntracha, A.; Iakovakis, D.; Hadjidimitriou, S.; Charisis, V.S.; Tsolaki, M.; Hadjileontiadis, L.J. Detection of Mild Cognitive Impairment Through Natural Language and Touchscreen Typing Processing. Front. Digit. Health 2020, 2, 567158.

48. Chen, M.H.; Leow, A.; Ross, M.K.; DeLuca, J.; Chiaravalloti, N.; Costa, S.L.; Genova, H.M.; Weber, E.; Hussain, F.; Demos, A.P. Associations between smartphone keystroke dynamics and cognition in MS. Digit. Health 2022, 8, 20552076221143234.

49. Lam, K.H.; Meijer, K.A.; Loonstra, F.C.; Coerver, E.M.E.; Twose, J.; Redeman, E.; Moraal, B.; Barkhof, F.; de Groot, V.; Uitdehaag, B.M.J.; et al. Real-world keystroke dynamics are a potentially valid biomarker for clinical disability in multiple sclerosis. Mult. Scler. J. 2020, 27, 1421–1431.

50. Lam, K.H.; Twose, J.; Lissenberg-Witte, B.; Licitra, G.; Meijer, K.; Uitdehaag, B.; De Groot, V.; Killestein, J. The Use of Smartphone Keystroke Dynamics to Passively Monitor Upper Limb and Cognitive Function in Multiple Sclerosis: Longitudinal Analysis. J. Med. Internet Res. 2022, 24, e37614.

51. Hoeijmakers, A.; Licitra, G.; Meijer, K.; Lam, K.H.; Molenaar, P.; Strijbis, E.; Killestein, J. Disease severity classification using passively collected smartphone-based keystroke dynamics within multiple sclerosis. Sci. Rep. 2023, 13, 1871.

52. Ning, E.; Cladek, A.T.; Ross, M.K.; Kabir, S.; Barve, A.; Kennelly, E.; Hussain, F.; Duffecy, J.; Langenecker, S.L.; Nguyen, T.; et al. Smartphone-derived Virtual Keyboard Dynamics Coupled with Accelerometer Data as a Window into Understanding Brain Health: Smartphone Keyboard and Accelerometer as Window into Brain Health. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; p. 326.

53. Ross, M.K.; Demos, A.P.; Zulueta, J.; Piscitello, A.; Langenecker, S.A.; McInnis, M.; Ajilore, O.; Nelson, P.C.; Ryan, K.A.; Leow, A. Naturalistic smartphone keyboard typing reflects processing speed and executive function. Brain Behav. 2021, 11, e2363.

54. Zulueta, J.; Piscitello, A.; Rasic, M.; Easter, R.; Babu, P.; Langenecker, S.A.; McInnis, M.; Ajilore, O.; Nelson, P.C.; Ryan, K.; et al. Predicting Mood Disturbance Severity with Mobile Phone Keystroke Metadata: A BiAffect Digital Phenotyping Study. J. Med. Internet Res. 2018, 20, e241.

55. Mastoras, R.E.; Iakovakis, D.; Hadjidimitriou, S.; Charisis, V.; Kassie, S.; Alsaadi, T.; Khandoker, A.; Hadjileontiadis, L.J. Touchscreen typing pattern analysis for remote detection of the depressive tendency. Sci. Rep. 2019, 9, 13414.

56. Blennow, K.; de Leon, M.J.; Zetterberg, H. Alzheimer’s disease. Lancet 2006, 368, 387–403.

57. Szatloczki, G.; Hoffmann, I.; Vincze, V.; Kalman, J.; Pakaski, M. Speaking in Alzheimer’s Disease, is That an Early Sign? Importance of Changes in Language Abilities in Alzheimer’s Disease. Front. Aging Neurosci. 2015, 7, 195.

58. McFarlin, D.E.; McFarland, H.F. Multiple Sclerosis (first of two parts). N. Engl. J. Med. 1982, 307, 1183–1188.

59. McFarlin, D.E.; McFarland, H.F. Multiple Sclerosis (second of two parts). N. Engl. J. Med. 1982, 307, 1246–1251.

60. Lebrun, C.; Blanc, F.; Brassat, D.; Zephir, H.; de Seze, J.; Cfsep. Cognitive function in radiologically isolated syndrome. Mult. Scler. 2010, 16, 919–925.

61. Pratap, A.; Grant, D.; Vegesna, A.; Tummalacherla, M.; Cohan, S.; Deshpande, C.; Mangravite, L.; Omberg, L. Evaluating the Utility of Smartphone-Based Sensor Assessments in Persons With Multiple Sclerosis in the Real-World Using an App (elevateMS): Observational, Prospective Pilot Digital Health Study. JMIR Mhealth Uhealth 2020, 8, e22108.

62. Porter, R.J.; Robinson, L.J.; Malhi, G.S.; Gallagher, P. The neurocognitive profile of mood disorders—A review of the evidence and methodological issues. Bipolar Disord. 2015, 17, 21–40.

63. Rock, P.L.; Roiser, J.P.; Riedel, W.J.; Blackwell, A.D. Cognitive impairment in depression: A systematic review and meta-analysis. Psychol. Med. 2014, 44, 2029–2040.

64. Malhi, G.S.; Ivanovski, B.; Hadzi-Pavlovic, D.; Mitchell, P.B.; Vieta, E.; Sachdev, P. Neuropsychological deficits and functional impairment in bipolar depression, hypomania and euthymia. Bipolar Disord. 2007, 9, 114–125.

65. Bourne, C.; Aydemir, O.; Balanza-Martinez, V.; Bora, E.; Brissos, S.; Cavanagh, J.T.; Clark, L.; Cubukcuoglu, Z.; Dias, V.V.; Dittmann, S.; et al. Neuropsychological testing of cognitive impairment in euthymic bipolar disorder: An individual patient data meta-analysis. Acta Psychiatr. Scand. 2013, 128, 149–162.

66. Kurtz, M.M.; Gerraty, R.T. A meta-analytic investigation of neurocognitive deficits in bipolar illness: Profile and effects of clinical state. Neuropsychology 2009, 23, 551–562.

67. Robinson, L.J.; Thompson, J.M.; Gallagher, P.; Goswami, U.; Young, A.H.; Ferrier, I.N.; Moore, P.B. A meta-analysis of cognitive deficits in euthymic patients with bipolar disorder. J. Affect. Disord. 2006, 93, 105–115.

68. McDermott, L.M.; Ebmeier, K.P. A meta-analysis of depression severity and cognitive function. J. Affect. Disord. 2009, 119, 1–8.

69. Austin, M.P.; Mitchell, P.; Goodwin, G.M. Cognitive deficits in depression: Possible implications for functional neuropathology. Br. J. Psychiatry 2001, 178, 200–206.

70. McTeague, L.M.; Huemer, J.; Carreon, D.M.; Jiang, Y.; Eickhoff, S.B.; Etkin, A. Identification of Common Neural Circuit Disruptions in Cognitive Control Across Psychiatric Disorders. Am. J. Psychiatry 2017, 174, 676–685.

71. Fales, C.L.; Barch, D.M.; Rundle, M.M.; Mintun, M.A.; Snyder, A.Z.; Cohen, J.D.; Mathews, J.; Sheline, Y.I. Altered Emotional Interference Processing in Affective and Cognitive-Control Brain Circuitry in Major Depression. Biol. Psychiatry 2008, 63, 377–384.

72. Crowe, S.F. The differential contribution of mental tracking, cognitive flexibility, visual search, and motor speed to performance on parts A and B of the Trail Making Test. J. Clin. Psychol. 1998, 54, 585–591.

73. Reitan, R.M. Validity of the Trail Making Test as an indicator of organic brain damage. Percept. Mot. Ski. 1958, 8, 271–276.

74. Arbuthnott, K.; Frank, J. Trail Making Test, Part B as a Measure of Executive Control: Validation Using a Set-Switching Paradigm. J. Clin. Exp. Neuropsychol. 2000, 22, 518–528.

75. Brouillette, R.M.; Foil, H.; Fontenot, S.; Correro, A.; Allen, R.; Martin, C.K.; Bruce-Keller, A.J.; Keller, J.N. Feasibility, reliability, and validity of a smartphone based application for the assessment of cognitive function in the elderly. PLoS ONE 2013, 8, e65925.

76. Faurholt-Jepsen, M.; Vinberg, M.; Frost, M.; Debel, S.; Margrethe Christensen, E.; Bardram, J.E.; Kessing, L.V. Behavioral activities collected through smartphones and the association with illness activity in bipolar disorder. Int. J. Methods Psychiatr. Res. 2016, 25, 309–323.

77. Chang, J.M.; Fang, C.C.; Ho, K.H.; Kelly, N.; Wu, P.Y.; Ding, Y.; Chu, C.; Gilbert, S.; Kamal, A.E.; Kung, S.Y. Capturing Cognitive Fingerprints from Keystroke Dynamics. IT Prof. 2013, 15, 24–28.

78. Opoku Asare, K.; Moshe, I.; Terhorst, Y.; Vega, J.; Hosio, S.; Baumeister, H.; Pulkki-Råback, L.; Ferreira, D. Mood ratings and digital biomarkers from smartphone and wearable data differentiates and predicts depression status: A longitudinal data analysis. Pervasive Mob. Comput. 2022, 83, 101621.

79. Saeb, S.; Zhang, M.; Karr, C.J.; Schueller, S.M.; Corden, M.E.; Kording, K.P.; Mohr, D.C. Mobile Phone Sensor Correlates of Depressive Symptom Severity in Daily-Life Behavior: An Exploratory Study. J. Med. Internet Res. 2015, 17, e175.

80. Moshe, I.; Terhorst, Y.; Opoku Asare, K.; Sander, L.B.; Ferreira, D.; Baumeister, H.; Mohr, D.C.; Pulkki-Raback, L. Predicting Symptoms of Depression and Anxiety Using Smartphone and Wearable Data. Front. Psychiatry 2021, 12, 625247.

81. Voigt, P.; Von dem Bussche, A. The EU General Data Protection Regulation (GDPR). In A Practical Guide, 1st ed.; Springer International Publishing: Cham, Switzerland, 2017; Volume 10, pp. 9–249.

82. Throuvala, M.A.; Pontes, H.M.; Tsaousis, I.; Griffiths, M.D.; Rennoldson, M.; Kuss, D.J. Exploring the Dimensions of Smartphone Distraction: Development, Validation, Measurement Invariance, and Latent Mean Differences of the Smartphone Distraction Scale (SDS). Front. Psychiatry 2021, 12, 642634.

83. Hadar, A.; Hadas, I.; Lazarovits, A.; Alyagon, U.; Eliraz, D.; Zangen, A. Answering the missed call: Initial exploration of cognitive and electrophysiological changes associated with smartphone use and abuse. PLoS ONE 2017, 12, e0180094.

84. Demirci, K.; Akgonul, M.; Akpinar, A. Relationship of smartphone use severity with sleep quality, depression, and anxiety in university students. J. Behav. Addict. 2015, 4, 85–92.

85. Choi, J.S.; Park, S.M.; Roh, M.S.; Lee, J.Y.; Park, C.B.; Hwang, J.Y.; Gwak, A.R.; Jung, H.Y. Dysfunctional inhibitory control and impulsivity in Internet addiction. Psychiatry Res. 2014, 215, 424–428.

86. Felisoni, D.D.; Godoi, A.S. Cell phone usage and academic performance: An experiment. Comput. Educ. 2018, 117, 175–187.

87. Wade, N.E.; Ortigara, J.M.; Sullivan, R.M.; Tomko, R.L.; Breslin, F.J.; Baker, F.C.; Fuemmeler, B.F.; Delrahim Howlett, K.; Lisdahl, K.M.; Marshall, A.T.; et al. Passive Sensing of Preteens’ Smartphone Use: An Adolescent Brain Cognitive Development (ABCD) Cohort Substudy. JMIR Ment. Health 2021, 8, e29426.

88. Lin, Y.H.; Lin, Y.C.; Lee, Y.H.; Lin, P.H.; Lin, S.H.; Chang, L.R.; Tseng, H.W.; Yen, L.Y.; Yang, C.C.; Kuo, T.B. Time distortion associated with smartphone addiction: Identifying smartphone addiction via a mobile application (App). J. Psychiatr. Res. 2015, 65, 139–145.

89. Oulasvirta, A.; Rattenbury, T.; Ma, L.; Raita, E. Habits make smartphone use more pervasive. Pers. Ubiquitous Comput. 2011, 16, 105–114.

90. Heitmayer, M.; Lahlou, S. Why are smartphones disruptive? An empirical study of smartphone use in real-life contexts. Comput. Hum. Behav. 2021, 116, 106637.

91. Lee, M.; Han, M.; Pak, J. Analysis of Behavioral Characteristics of Smartphone Addiction Using Data Mining. Appl. Sci. 2018, 8, 1191.

92. Stothart, C.; Mitchum, A.; Yehnert, C. The attentional cost of receiving a cell phone notification. J. Exp. Psychol. Hum. Percept. Perform. 2015, 41, 893–897.

93. Ralph, B.C.; Thomson, D.R.; Cheyne, J.A.; Smilek, D. Media multitasking and failures of attention in everyday life. Psychol. Res. 2014, 78, 661–669.

94. Toh, W.X.; Ng, W.Q.; Yang, H.; Yang, S. Disentangling the effects of smartphone screen time, checking frequency, and problematic use on executive function: A structural equation modelling analysis. Curr. Psychol. 2021, 42, 4225–4242.

95. Hartanto, A.; Lee, K.Y.X.; Chua, Y.J.; Quek, F.Y.X.; Majeed, N.M. Smartphone use and daily cognitive failures: A critical examination using a daily diary approach with objective smartphone measures. Br. J. Psychol. 2023, 114, 70–85.

96. Chen, Q.; Yan, Z. Does multitasking with mobile phones affect learning? A review. Comput. Hum. Behav. 2016, 54, 34–42.

97. van der Schuur, W.A.; Baumgartner, S.E.; Sumter, S.R.; Valkenburg, P.M. The consequences of media multitasking for youth: A review. Comput. Hum. Behav. 2015, 53, 204–215.

98. Sharifian, N.; Zahodne, L.B. Social Media Bytes: Daily Associations Between Social Media Use and Everyday Memory Failures Across the Adult Life Span. J. Gerontol. B Psychol. Sci. Soc. Sci. 2020, 75, 540–548.

99. Odgers, C.L.; Jensen, M.R. Annual Research Review: Adolescent mental health in the digital age: Facts, fears, and future directions. J. Child Psychol. Psychiatry 2020, 61, 336–348.

100. Ivie, E.J.; Pettitt, A.; Moses, L.J.; Allen, N.B. A meta-analysis of the association between adolescent social media use and depressive symptoms. J. Affect. Disord. 2020, 275, 165–174.

101. Ward, A.F.; Duke, K.; Gneezy, A.; Bos, M.W. Brain Drain: The Mere Presence of One’s Own Smartphone Reduces Available Cognitive Capacity. J. Assoc. Consum. Res. 2017, 2, 140–154.

102. Kushlev, K.; Proulx, J.; Dunn, E.W. Silence Your Phones. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 1011–1020.

103. Frost, P.; Donahue, P.; Goeben, K.; Connor, M.; Cheong, H.S.; Schroeder, A. An examination of the potential lingering effects of smartphone use on cognition. Appl. Cogn. Psychol. 2019, 33, 1055–1067.

104. Hartmann, M.; Martarelli, C.S.; Reber, T.P.; Rothen, N. Does a smartphone on the desk drain our brain? No evidence of cognitive costs due to smartphone presence in a short-term and prospective memory task. Conscious. Cogn. 2020, 86, 103033.

105. Dupont, D.; Zhu, Q.; Gilbert, S.J. Value-based routing of delayed intentions into brain-based versus external memory stores. J. Exp. Psychol. Gen. 2023, 152, 175–187.

106. Klimova, B.; Valis, M. Smartphone Applications Can Serve as Effective Cognitive Training Tools in Healthy Aging. Front. Aging Neurosci. 2017, 9, 436.

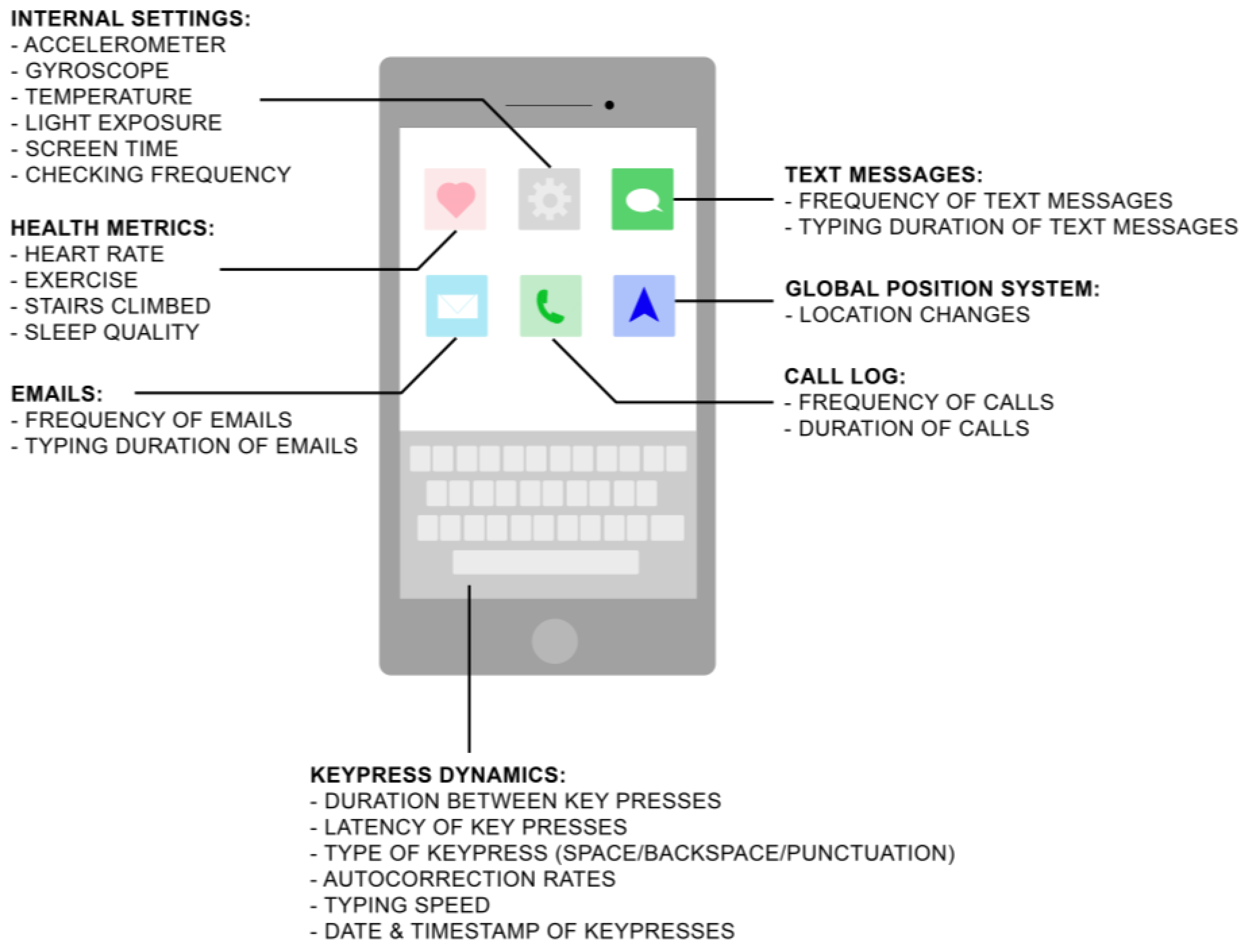

| Type | Definition | Examples | Implementable Digital Devices |
|---|---|---|---|
| Active Data Collection | Data acquisition that requires participants' active involvement, allowing for subjective data measurements | Ecological momentary assessments, self-reported mood/cognitive/motor assessments | Smartphones, tablets, smartwatches |
| Passive Data Collection | Unobtrusive data acquisition through digital technology that requires no action from participants at the time of collection, allowing for objective data measurements | Keystroke dynamics, accelerometer, GPS, screen time, temperature, phone-checking frequency, physical activity, number of text messages and emails, duration and frequency of phone calls made | Smartphones, tablets, smartwatches, sleep monitors, fitness trackers |
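Keystroke dynamics appear in the table above as passive data. The following is a minimal sketch of what a single passively logged keystroke record might look like, assuming a keyboard that reports each key together with its press and release timestamps. The keypress categories (alphanumeric, backspace, space, punctuation) follow those listed for the studies in [49,50,51]; the class and field names, the millisecond units, and the use of `"\b"` to represent backspace are hypothetical choices for illustration, not details taken from any cited study.

```python
import string
from dataclasses import dataclass
from enum import Enum


class KeyCategory(Enum):
    """Coarse keypress types, following the categories listed for [49,50,51]."""
    ALPHANUMERIC = "alphanumeric"
    BACKSPACE = "backspace"
    SPACE = "space"
    PUNCTUATION = "punctuation"
    OTHER = "other"


# Hypothetical: how this sketch assumes the keyboard reports a backspace.
BACKSPACE_KEY = "\b"


@dataclass(frozen=True)
class KeyEvent:
    """One passively logged keypress: category and timing only, no typed character."""
    category: KeyCategory
    press_ms: int    # hypothetical field: key-down timestamp in milliseconds
    release_ms: int  # hypothetical field: key-up timestamp in milliseconds


def categorize(key: str) -> KeyCategory:
    """Map a raw key to its category so the character itself need not be stored."""
    if key == BACKSPACE_KEY:
        return KeyCategory.BACKSPACE
    if key == " ":
        return KeyCategory.SPACE
    if len(key) == 1 and key.isalnum():
        return KeyCategory.ALPHANUMERIC
    if len(key) == 1 and key in string.punctuation:
        return KeyCategory.PUNCTUATION
    return KeyCategory.OTHER


def log_keypress(key: str, press_ms: int, release_ms: int) -> KeyEvent:
    """Build a metadata-only record; the raw key is not kept after categorization."""
    if release_ms < press_ms:
        raise ValueError("a key cannot be released before it is pressed")
    return KeyEvent(categorize(key), press_ms, release_ms)


# Usage: typing "hi," yields categories and timings, never the characters themselves.
events = [log_keypress("h", 0, 90), log_keypress("i", 180, 260), log_keypress(",", 400, 470)]
print([e.category.value for e in events])  # ['alphanumeric', 'alphanumeric', 'punctuation']
```

Keeping only the category and timestamps is one way to restrict passive collection to timing metadata; whether and how each cited study stored typed content is not specified in the tables here.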

| Study | N Participants | Digital Technologies Implemented | Data Collected | Findings | Statistical Analysis | Machine Learning Models and Validation Metrics |

|---|---|---|---|---|---|---|

| Chen, R. et al., 2019 [46] | 24 people with mild cognitive impairment (MCI), 7 people with mild AD dementia, 84 healthy controls | iPhone 7 Plus, Apple Watch Series 2, 10.5” iPad Pro with a smart keyboard, Beddit sleep monitoring device | Passive: Number of text messages sent and received, time duration to send a text message, typing speeds, accelerometer, gyroscope, stairs climbed, stand hours, workout sessions, heart rate, sleep sensors, application usage time, phone unlocks, breathe sessions Active: Daily energy surveys, tapping task, dragging task, typed narrative task, verbal narrative task, video, and audio bi-weekly | Symptomatic participants (with MCI or with mild AD dementia) typed slower, had a less regular routine (measured via first and last phone acceleration), took their first steps of the day later (measured via phone’s pedometer), sent and received fewer text messages, relied more on applications suggested by Siri, and had worse survey compliance than healthy controls | Not Applicable (N/A) | Model: Extreme gradient boosting algorithm Validation: training/testing: 70/30 Results: demographics AUROC: 0.757; device-derived features AUROC: 0.771 (±0.016, 95% CI); demographics + device derived features = 0.804 (±0.015, 95% CI); age-matched demographics AUROC: 0.519 (±0.018, 95% CI); age-matched device features AUROC: 0.726 (±0.021, 95% CI); age-matched demographics + device features AUROC: 0.725 (±0.022, 95% CI) |

| Ntracha, A. et al., 2020 [47] | 11 people with MCI, 12 healthy controls | Android smartphones | Passive: Keystroke dynamics (timestamps of keypresses and releases, backspace, pauses, number of characters typed, typing session duration) Active: PHQ-9 Questionnaire, written assignments for natural language processing (typing up to four paragraphs on a given topic) | Participants with mild cognitive impairment (MCI) were able to be distinguished from healthy controls using passive and active data in natural learning processing models, keystroke models, and fused models. Participants with MCI had bradykinesia and rigidity detected from their keystroke dynamics when compared to healthy controls | N/A | Models: k-Nearest Neighbors (k-NN), logistical regression (LR), random forest, ensemble method Validation: leave one subject out method for training and testing Results: Keystroke features with kNN classifier: AUC: 0.78 (0.68–0.88, 95% CI), specificity/sensitivity: 0.64/0.92 Natural Language Processing (NLP) features with LR classifier: AUC: 0.76 (0.65–0.85, 95% CI), specificity/sensitivity: 0.80/0.71 Ensemble model fusion of keystroke and NLP features: AUC: 0.75 (0.63–0.86, 95% CI), specificity/sensitivity: 0.90/0.60 |

| Chen, M. et al., 2022 [48] | 16 people with multiple sclerosis (MS), 10 healthy controls | iOS and Android smartphones | Passive: Keystroke dynamics (keypress type, timestamp, relative distance between consecutive keystrokes, distance between the keystroke and the center of the keyboard), accelerometer Active: Digital neuropsychological tests (symbol digit modalities test, digit span, trail-making test, Delis–Kaplan executive function system (D-KEFS) color-word interference test, controlled oral word association test or D-KEFS verbal fluency test, California verbal learning test, Rey auditory verbal learning test, or Hopkins verbal learning test-revised), symptom rating scales (modified fatigue impact scale, Chicago multiscale depression inventory, state-trait anxiety inventory) | Participants with MS with less severe symptoms had higher uses of the backspace key and a faster typing speed. Faster typing speed was associated with better performance on measures of processing speed, attention, and executive functioning as well as having less impact from fatigue and having less severe anxiety symptoms | Method: Multilevel models (level 1: keystroke dynamics within typing session; level 2: subjects) Significant results: Features evaluated using Welch’s t-test: number of days of data collection (mean number): −1.86, p = 0.076 proportion of time spent using one hand to type (%): 542.70, p < 0.001 number of characters per typing session (mean): 0.01, p < 0.107 median inter-key delay (typing speed) per session (seconds): −1.45, p < 0.001 inter-key delay median absolute deviation per session (seconds): 0.11, p = 0.032 | N/A |

| Lam, K.H. et al., 2020 [49] | 102 people with MS, 24 healthy controls | iOS and Android smartphones | Passive: Keypress dynamics (type of keypress (alphanumeric, backspace, space key, punctuation), time and date of keypresses, successive keypress latencies and releases Active: Assessments, including expanded disability status scale, nine-hole peg test, symbol digit modalities test (SDMT) | Participants with MS had higher keypress latencies, release latencies, flight time, post-punctuation pause, pre-correction and post-correction slowing compared to healthy controls | Method: Pearson’s correlation coefficient Significant results: SDMT with: press-press latency: −0.525, p > 0.01 release-release latency −0.553, p < 0.01 hold time: −0.286, p < 0.01 flight time: −0.525, p < 0.01 pre-correction slowing: −0.300, p < 0.01 post-correction slowing −0.444, p < 0.01 correction duration: −0.162, p < 0.05 after punctuation pause: −0.317, p < 0.01 | N/A |

| Lam, K.H. et al., 2022 [50] | 102 people with MS | iOS and Android smartphones | Passive: Keypress dynamics (type of keypress (alphanumeric, backspace, space key, punctuation), time and date of keypresses, successive keypress latencies and releases); Active: Assessments, including expanded disability status scale, nine-hole peg test, symbol digit modalities test (SDMT) | In participants with MS, worse arm function was associated with longer latencies between keypresses, and worse processing speed was associated with longer latencies involving the punctuation and backspace keys | Method: Linear mixed models; Significant results: cognitive score cluster associated with SDMT: −8.57 (−12.02 to −5.12, 95% CI), p < 0.001, random effect variance: 82.7%, explained variance: 25.4%; cognitive score cluster with covariates (age, sex, level of education): −5.02 (−9.02 to −1.02), p = 0.02, random effect variance: 77.1%, explained variance: 30.4%; hybrid model (including covariates): between subjects: −11.25 (−17.28 to −5.21), p < 0.001; within subjects: −0.35 (−5.60 to 4.89), p = 0.9 | N/A |

| Hoeijmakers, A. et al., 2023 [51] | 102 people with multiple sclerosis (MS), 24 healthy controls | iOS and Android smartphones | Passive: Keypress dynamics (type of keypress (alphanumeric, backspace, space key, punctuation), time and date of keypresses, successive keypress latencies and releases); Active: Assessments, including expanded disability status scale, nine-hole peg test, symbol digit modalities test | Using clinical outcome measures as targets for machine learning (ML) techniques, participants with MS could be distinguished from healthy controls, and ML models could estimate disease severity, manual dexterity, and cognitive ability | N/A | Models (binary classification): random forest, logistic regression, k-nearest neighbors, support vector machine, Gaussian naive Bayes; Validation: 80/20 training/testing split; Results from cross-validation: AUC = 0.762 (0.677–0.828, 95% CI); AUC-ROC = 0.726, sensitivity/specificity/accuracy: 0.750/0.429/0.48; estimating level of fine motor skills: AUC-ROC = 0.753 |

| Ning, E. et al., 2023 [52] | 64 participants with mood disorders (major depressive disorder, bipolar I/II, persistent depressive disorder, or cyclothymia), 26 healthy controls | iOS and Android smartphones | Passive: Keystroke dynamics (category of keypress (i.e., alphanumeric, backspace, punctuation), associated timestamps, autocorrection events), accelerometer, gyroscope; Active: Digital trail-making test, part B | Participants with mood disorders showed lower cognitive performance on the trail-making test. Diurnal typing patterns also differed between participants with mood disorders and healthy controls: individuals with higher cognitive performance typed faster, and their typing was less sensitive to the time of day | Method: Longitudinal mixed effects models; Significant results: aging effect: typing slowed by ~20 ms per 7 years; sessions with lower accuracy had inter-key delays (IKDs) ~10 ms shorter, b = −0.89, p < 0.001; sessions with more variable IKDs had slower typing, b = 434.57, p < 0.001; more typing was associated with faster typing, b = −4.35, p < 0.001 | N/A |

| Ross, M. et al., 2021 [53] | 11 people with bipolar disorder (BP), 8 healthy controls | Samsung Galaxy Note 4 Android smartphone | Passive: Keystroke dynamics (category of keypress (i.e., alphanumeric, backspace, punctuation), associated timestamps, autocorrection events); Active: Digital trail-making test, part B (dTMT-B) | The keystroke dynamics of participants with mood disorders differed significantly from those of healthy controls in how they related to depression ratings and trail-making test performance | Method: Longitudinal mixed effects models; Significant results: subject-centered 17-item Hamilton depression rating scale (HDRS-17) score predicting dTMT-B: b = 0.038, p = 0.004; subject-centered typing speed predicting dTMT-B: b = 0.032, p = 0.004; faster grand-mean-centered typing speed associated with faster dTMT-B completion time: b = 0.189, p < 0.001 | N/A |

| Zulueta, J. et al., 2018 [54] | 9 people with bipolar disorder (BP) (5 with BP I, 4 with BP II) | Samsung Galaxy Note 4 Android smartphones | Passive: Keystroke dynamics (keystroke entry date and time, duration of keypress, latency between keypresses, distance from last key along two axes, and autocorrection, backspace, space, keyboard-switching, and other behaviors), accelerometer; Active: Hamilton depression rating scale (HDRS), Young mania rating scale (YMRS) | Participants with bipolar disorder who were in a potentially more manic state (higher mania symptoms) used the backspace key less, and those in a potentially more depressive state showed higher autocorrection rates | Method: Mixed effects regression; Significant results: average accelerometer displacement with HDRS: 3.20 (1.20 to 5.21, 95% CI), p = 0.0017; average accelerometer displacement with YMRS: 0.39 (0.15 to 0.64, 95% CI), p = 0.003; autocorrect rate with HDRS: 2.67 (0.87 to 4.47, 95% CI), p = 0.0036; backspace ratio with YMRS: −0.30 (−0.53 to −0.070, 95% CI), p = 0.014 | N/A |

| Mastoras, R.E. et al., 2019 [55] | 11 people with depressive tendencies, 14 healthy controls | Android smartphones | Passive: Keystroke dynamics (timestamps of keypresses and releases, delete rate, number of characters typed, and typing session duration); Active: Patient Health Questionnaire-9 | Participants with depressive tendencies held keys down longer and paused longer between keypresses than healthy controls | N/A | Models: Random forest, gradient boosting classifier, support vector machine classifier; Validation: leave-one-subject-out training and testing; Results: random forest (best-performing pipeline): AUC = 0.89 (0.72–1.00, 95% CI), sensitivity/specificity: 0.82/0.86 |

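The keystroke timing features summarized in the table above (hold time, flight time, press-press and release-release latencies, the median inter-key delay and its median absolute deviation, and the backspace ratio) can be made concrete with a short sketch. It assumes a hypothetical session log in which each keypress is recorded as a key category plus key-down and key-up timestamps in seconds. It also treats the inter-key delay as the press-to-press interval and the backspace ratio as the fraction of keypresses that are backspaces. `Keypress` and `session_features` are illustrative names, not code from any of the reviewed studies, whose exact feature definitions and filtering rules differ.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Keypress:
    """One keypress in a hypothetical session log (times in seconds)."""
    category: str   # e.g. "alphanumeric", "backspace", "space", "punctuation"
    press: float    # key-down timestamp
    release: float  # key-up timestamp


def median_abs_deviation(values):
    """Median absolute deviation (MAD) around the median."""
    m = median(values)
    return median([abs(v - m) for v in values])


def session_features(keys):
    """Summarize timing features for one typing session.

    Assumes `keys` is sorted by press time and holds at least two keypresses.
    """
    if len(keys) < 2:
        raise ValueError("need at least two keypresses")
    pairs = list(zip(keys, keys[1:]))                          # consecutive keypress pairs
    hold = [k.release - k.press for k in keys]                 # hold (dwell) time
    press_press = [b.press - a.press for a, b in pairs]        # treated here as the IKD
    release_release = [b.release - a.release for a, b in pairs]
    flight = [b.press - a.release for a, b in pairs]           # negative if keys overlap
    n_backspace = sum(k.category == "backspace" for k in keys)
    return {
        "median_hold": median(hold),
        "median_flight": median(flight),
        "median_release_release": median(release_release),
        "median_ikd": median(press_press),
        "mad_ikd": median_abs_deviation(press_press),
        "backspace_ratio": n_backspace / len(keys),
    }


# Usage with a made-up four-key session
session = [
    Keypress("alphanumeric", 0.00, 0.10),
    Keypress("alphanumeric", 0.30, 0.38),
    Keypress("backspace", 0.80, 0.90),
    Keypress("alphanumeric", 1.00, 1.12),
]
features = session_features(session)
```

Applied to every typing session, a function like this produces per-session summaries. These are the kind of observations that the multilevel and mixed effects models in the table treat as nested within participants.
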
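Mastoras et al. report leave-one-subject-out validation. A minimal sketch of that scheme follows. It assumes each sample (for example, one typing session) is tagged with the ID of the participant who produced it. In each fold, all samples from one participant are held out for testing, so a model is never evaluated on a person whose typing it saw during training. The sketch also shows how sensitivity and specificity follow from confusion counts. The function names are hypothetical, and no classifier is included; models such as the random forest in the table would be fit on each training split.

```python
from collections import defaultdict


def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject.

    subject_ids[i] names the participant who produced sample i (e.g. a session).
    All samples from the held-out participant go to the test set.
    """
    by_subject = defaultdict(list)
    for i, sid in enumerate(subject_ids):
        by_subject[sid].append(i)
    for held_out in sorted(by_subject):
        test = by_subject[held_out]
        train = [i for i, sid in enumerate(subject_ids) if sid != held_out]
        yield held_out, train, test


def sensitivity_specificity(y_true, y_pred):
    """Binary labels: 1 = condition group, 0 = healthy control."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    return tp / (tp + fn), tn / (tn + fp)


# Usage: five sessions from three hypothetical participants
subjects = ["s1", "s1", "s2", "s3", "s3"]
folds = list(leave_one_subject_out(subjects))
# folds[0] == ("s1", [2, 3, 4], [0, 1])
```

Each test fold contains a single participant, and therefore a single class. For that reason, sensitivity and specificity are usually computed from predictions pooled across all folds rather than fold by fold.
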
Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nguyen, T.M.; Leow, A.D.; Ajilore, O. A Review on Smartphone Keystroke Dynamics as a Digital Biomarker for Understanding Neurocognitive Functioning. Brain Sci. 2023, 13, 959. https://doi.org/10.3390/brainsci13060959