BCI Applications to Creativity: Review and Future Directions, from little-c to C2

Abstract

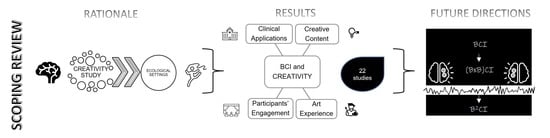

1. Introduction

1.1. Brain–Computer Interface

1.2. Creativity: Some Definitions

1.3. The Role of BCI in the Study of Creativity

2. Materials and Methods

2.1. Stage 1: Identifying the Research Question

2.2. Stage 2: Identifying Relevant Studies

2.3. Stage 3: Study Selection

2.4. Stage 4: Charting the Data

2.5. Stage 5: Collating, Summarizing, and Reporting the Results

3. Results

3.1. Characteristics of the Studies

3.2. Clinical Applications

3.3. Art Experience in Real-World Settings

3.4. Content Creation

3.4.1. Proto-Creativity

3.4.2. Creative Artifacts Production

3.5. Participants’ Engagement

4. Discussion, Conclusions, and Future Directions

4.1. Highlights from the Scoping Review

4.2. Future Directions and Perspectives

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Rêgo, G.G.; Gonçalves, Ó.F.; Boggio, P.S. Attention neuroenhancement through tDCS or neurofeedback: A randomized, single-blind, controlled trial. Sci. Rep. 2022, 12, 17613.

- Chen, X.; Sui, L. Alpha band neurofeedback training based on a portable device improves working memory performance of young people. Biomed. Signal Process. Control 2023, 80, 104308.

- Shaw, S.B.; Nicholson, A.A.; Ros, T.; Harricharan, S.; Terpou, B.; Densmore, M.; Theberge, J.; Frewen, P.; Lanius, R.A. Increased top-down control of emotions during symptom provocation working memory tasks following a RCT of alpha-down neurofeedback in PTSD. NeuroImage Clin. 2023, 37, 103313.

- Chang, M.; Ando, H.; Maeda, T.; Naruse, Y. Behavioral effect of mismatch negativity neurofeedback on foreign language learning. PLoS ONE 2021, 16, e0254771.

- Sho’ouri, N.; Firoozabadi, M.; Badie, K. The effect of beta/alpha neurofeedback training on imitating brain activity patterns in visual artists. Biomed. Signal Process. Control 2020, 56, 101661.

- Song, X.; Zeng, Y.; Tong, L.; Shu, J.; Yang, Q.; Kou, J.; Sun, M.; Yan, B. A collaborative brain-computer interface framework for enhancing group detection performance of dynamic visual targets. Comput. Intell. Neurosci. 2022, 2022, 4752450.

- Vanutelli, M.E.; Lucchiari, C. “Hyperfeedback” as a Tool to Assess and Induce Interpersonal Synchrony: The Role of Applied Social Neurosciences for Research, Training, and Clinical Practice. J. Health Med. Sci. 2022, 5, 11–18.

- Lakoff, G.; Johnson, M. Metaphors We Live by; University of Chicago Press: Chicago, IL, USA, 1989.

- Simonton, D.K. What is a creative idea? Little-c versus Big-C creativity. In Handbook of Research on Creativity; Thomas, K., Chan, J., Eds.; Edward Elgar Publishing: Cheltenham, UK, 2013; pp. 69–83.

- Bijvoet-van den Berg, S.; Hoicka, E. Individual differences and age-related changes in divergent thinking in toddlers and preschoolers. Dev. Psychol. 2014, 50, 1629–1639.

- Runco, M.A.; Millar, G.; Acar, S.; Cramond, B. Torrance tests of creative thinking as predictors of personal and public achievement: A fifty-year follow-up. Creat. Res. J. 2010, 22, 361–368.

- Lucchiari, C.; Sala, P.M.; Vanutelli, M.E. Promoting Creativity Through Transcranial Direct Current Stimulation (tDCS). A Critical Review. Front. Behav. Neurosci. 2018, 12, 167.

- Limb, C.J. Neural substrates of spontaneous musical improvisation. J. Acoust. Soc. Am. 2010, 127, 1950.

- Dietrich, A. Functional neuroanatomy of altered states of consciousness: The transient hypofrontality hypothesis. Conscious. Cogn. 2003, 12, 231–256.

- McPherson, M.; Limb, C.J. Difficulties in the neuroscience of creativity: Jazz improvisation and the scientific method. Ann. N. Y. Acad. Sci. 2013, 1303, 80–83.

- Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.J.; Horsley, T.; Weeks, L. PRISMA extension for scoping reviews (PRISMA-ScR): Checklist and explanation. Ann. Intern. Med. 2018, 169, 467–473.

- Arksey, H.; O’Malley, L. Scoping studies: Towards a methodological framework. Int. J. Soc. Res. Methodol. 2005, 8, 19–32.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Ann. Intern. Med. 2009, 151, 264–269.

- Levicán, C.; Vega, A.; Aparicio, A.; Cádiz, R.F. EnoBiO2OSC: Brain-computer interface for musical creation. In Proceedings of the International Conference on Mathematics and Computing, Varanasi, India, 9–11 January 2018; Volume 7, p. 2.

- Münßinger, J.I.; Halder, S.; Kleih, S.C.; Furdea, A.; Raco, V.; Hösle, A.; Kübler, A. Brain painting: First evaluation of a new brain–computer interface application with ALS-patients and healthy volunteers. Front. Neurosci. 2010, 4, 182.

- Miranda, E.R. Brain-computer music interface for composition and performance. Int. J. Disabil. Hum. Dev. 2006, 5, 119–126.

- Pedersen, I.; Mirza-Babaei, P.; Gale, N. iMind: Paul Klee, Dialogism & BCI. In Adjunct Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2015 ACM International Symposium on Wearable Computers, Osaka, Japan, 7–11 September 2015; pp. 557–562.

- Herrera-Arcos, G.; Tamez-Duque, J.; Acosta-De-Anda, E.Y.; Kwan-Loo, K.; De-Alba, M.; Tamez-Duque, U.; Contreras-Vidal, J.L.; Soto, R. Modulation of neural activity during guided viewing of visual art. Front. Hum. Neurosci. 2017, 11, 581.

- Todd, D.A.; McCullagh, P.J.; Mulvenna, M.D.; Lightbody, G. Investigating the use of brain-computer interaction to facilitate creativity. In Proceedings of the 3rd Augmented Human International Conference (AH’12), Megève, France, 8–9 March 2012; ACM Press: New York, NY, USA, 2012; pp. 1–8.

- Tang, Z.; Wang, X.; Wu, J.; Ping, Y.; Guo, X.; Cui, Z. A BCI painting system using a hybrid control approach based on SSVEP and P300. Comput. Biol. Med. 2022, 150, 106118.

- Hamadicharef, B.; Xu, M.; Aditya, S. Brain-Computer Interface (BCI) based musical composition. In Proceedings of the 2010 International Conference on Cyberworlds, Washington, DC, USA, 20–22 October 2010; pp. 282–286.

- Pinegger, A.; Wriessnegger, S.C.; Müller-Putz, G.R. Sheet music by mind: Towards a brain-computer interface for composing. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; IEEE, 2015; pp. 1053–1056.

- Vamvakousis, Z.; Ramirez, R. P300 harmonies: A brain-computer musical interface. In Proceedings of the ICMC, Jinzhou, China, 3–5 July 2014; pp. 725–729.

- Riccio, P.; Galati, F.; Zuluaga, M.A.; De Martin, J.C.; Nichele, S. Translating Emotions from EEG to Visual Arts. In Proceedings of the Artificial Intelligence in Music, Sound, Art and Design: 11th International Conference, EvoMUSART 2022, Held as Part of EvoStar 2022, Madrid, Spain, 20–22 April 2022; Springer, 2022; pp. 243–258.

- Kim, H.; Kim, S. Know Yourself: Self-portrait with Emotion Expressed in the EEG Data. In Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems, Seoul, Republic of Korea, 18–23 April 2015; pp. 371–374.

- Folgieri, R.; Zichella, M. A BCI-based application in music: Conscious playing of single notes by brainwaves. Comput. Entertain. 2012, 10, 1–10.

- Folgieri, R.; Dei Cas, L.; Dei Cas, D.; Vanutelli, M.E.; Lucchiari, C. The Creative Mind–DRACLE. In Proceedings of the Electronic Visualisation and the Arts (EVA 2017), London, UK, 11–13 July 2017; pp. 290–295.

- Cádiz, R.F.; de la Cuadra, P. Kara: A BCI approach to composition. In Proceedings of the ICMC, Jinzhou, China, 3–5 July 2014; pp. 350–354.

- Tokunaga, T.; Lyons, M.J. Enactive mandala: Audio-visualizing brain waves. arXiv 2020, arXiv:2010.13035.

- Yan, S.; Ding, G.; Li, H.; Sun, N.; Wu, Y.; Guan, Z.; Zhang, L.; Huang, T. Enhancing audience engagement in performing arts through an adaptive virtual environment with a brain-computer interface. In Proceedings of the 21st International Conference on Intelligent User Interfaces, Sonoma, CA, USA, 7–10 March 2016; pp. 306–316.

- Ramchurn, R.; Wilson, M.L.; Martindale, S.; Benford, S. Scanners 2-the MOMENT: A new brain-controlled movie. In Proceedings of the Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–4.

- Ramchurn, R.; Chamberlain, A.; Benford, S. Designing musical soundtracks for Brain Controlled Interface (BCI) systems. In Proceedings of the Audio Mostly 2018 on Sound in Immersion and Emotion, Wrexham, UK, 12–14 September 2018; pp. 1–8.

- Marchesi, M.; Farella, E.; Riccò, B.; Guidazzoli, A. Mobie: A movie brain interactive editor. In Proceedings of the SIGGRAPH Asia 2011 Emerging Technologies, Hong Kong, China, 12–15 December 2011; p. 1.

- Marchesi, M. From Mobie to Neu: 3d animated contents controlled by a Brain-Computer Interface. In Proceedings of the 2012 Virtual Reality International Conference, Laval, France, 28–30 March 2012; pp. 1–3.

- Zioga, P.; Pollick, F.; Ma, M.; Chapman, P.; Stefanov, K. “Enheduanna—A Manifesto of Falling” live brain-computer cinema performance: Performer and audience participation, cognition and emotional engagement using multi-brain BCI interaction. Front. Neurosci. 2018, 12, 191.

- Pope, A.T.; Bogart, E.H.; Bartolome, D.S. Biocybernetic system evaluates indices of operator engagement in automated task. Biol. Psychol. 1995, 40, 187–195.

- Taft, R. Creativity: Hot and cold. J. Pers. 1971, 39, 345–361.

- Reed, S.; Akata, Z.; Yan, X.; Logeswaran, L.; Schiele, B.; Lee, H. Generative adversarial text to image synthesis. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 1060–1069.

- Acar, S.; Tadik, H.; Myers, D.; Van der Sman, C.; Uysal, R. Creativity and well-being: A meta-analysis. J. Creat. Behav. 2021, 55, 738–751.

- Zajonc, R.B. Feeling and thinking: Preferences need no inferences. Am. Psychol. 1980, 35, 151.

- Amabile, T.M. How to Kill Creativity; Harvard Business School Publishing: Boston, MA, USA, 1998; Volume 87.

- Hasson, U.; Ghazanfar, A.A.; Galantucci, B.; Garrod, S.; Keysers, C. Brain-to-brain coupling: A mechanism for creating and sharing a social world. Trends Cogn. Sci. 2012, 16, 114–121.

- Yeshurun, Y.; Nguyen, M.; Hasson, U. The default mode network: Where the idiosyncratic self meets the shared social world. Nat. Rev. Neurosci. 2021, 22, 181–192.

- Lucchiari, C.; Sala, P.M.; Vanutelli, M.E. The effects of a cognitive pathway to promote class creative thinking. An experimental study on Italian primary school students. Think. Ski. Creat. 2019, 31, 156–166.

- Clark, A.; Chalmers, D. The extended mind. Analysis 1998, 58, 7–19.

| Authors | Year | Country | Sample | Creativity | Assessment | BCI | Main Results |

|---|---|---|---|---|---|---|---|

| Clinical applications | |||||||

| Levican et al. [19] | 2018 | Chile | N = 1 | Music | Music composition | Enobio-8 EEG | - |

| Münßinger et al. [20] | 2010 | Germany | N = 3 ALS patients N = 10 healthy participants | Painting | Brain Painting | 16-channel USBamp | Of patients, 2/3 reached above 89% accuracy. |

| Miranda [21] | 2006 | UK | - | Music | Music composition | EEG (geodesic net, 19 ch) | - |

| Art experience in real-world settings | |||||||

| Pedersen et al. [22] | 2015 | Canada | - | Painting | Selection of Klee’s art | Muse | - |

| Herrera-Arcos et al. [23] | 2017 | Mexico and USA | N = 25 | Painting appreciation | Favorite piece | Muse | Suppression of beta while viewing favorite piece over frontal sites. |

| BCI for creative content creation | |||||||

| Proto-creativity | |||||||

| Todd et al. [24] | 2012 | UK | N = 8 | Proto-painting | Painting (free and copy) | EEG with BCI2000 software suite | Task 3 (free drawing) was perceived as the one which allowed for a greater sense of control and was the most enjoyable. Task 2 (copy) was the preferred task. |

| Tang et al. [25] | 2022 | China | N = 20 | Proto-painting | Painting (free and copy) | EEG BioSemi (12 ch) | Hybrid stimulus interface (P300 + SSVEP) was more accurate than P300 alone (88.92%). |

| Hamadicharef et al. [26] | 2010 | Singapore | - | Proto-music | Music composition | EEG (15 ch) | - |

| Pinegger et al. [27] | 2015 | Austria | N = 5 | Proto-music | Music composition | Mobita EEG system (8 ch) | Three participants reached accuracies above 77% and could produce a given melody. |

| Vamvakousis and Ramirez [28] | 2014 | Spain | N = 4 | Proto-music | Arpeggio shift | Emotiv Epoc (14 ch) | Selection accuracy from 83 to 100%. |

| Creative outcomes | |||||||

| Riccio et al. [29] | 2022 | Spain, Norway, and Italy | - | Painting | Emotion categorization | EEG database | Happiness, fear, and sadness had the highest sensitivity (>58%) while anger just reached 22% (with a higher sensitivity). |

| Kim H.-J. and Kim S.-Y. [30] | 2015 | Korea | - | Painting | Self portrait | Neurosky Mindwave | - |

| Folgieri and Zichella [31] | 2012 | Italy | Task 1: N = 7 Task 2: N = 4 | Music | Music composition | Neurosky Mindwave | After a few minutes of training, participants were able to reproduce the notes by thinking of them, with 40–50% immediate success. |

| Folgieri et al. [32] | 2017 | Italy | - | Sound and visual display | DRACLE | Neurosky Mindwave | - |

| Cádiz and de la Cuadra [33] | 2014 | Chile | - | Sound and visual display | Multisensorial performance | KARA1: Neurosky Mindwave KARA2: Emotiv Epoc | - |

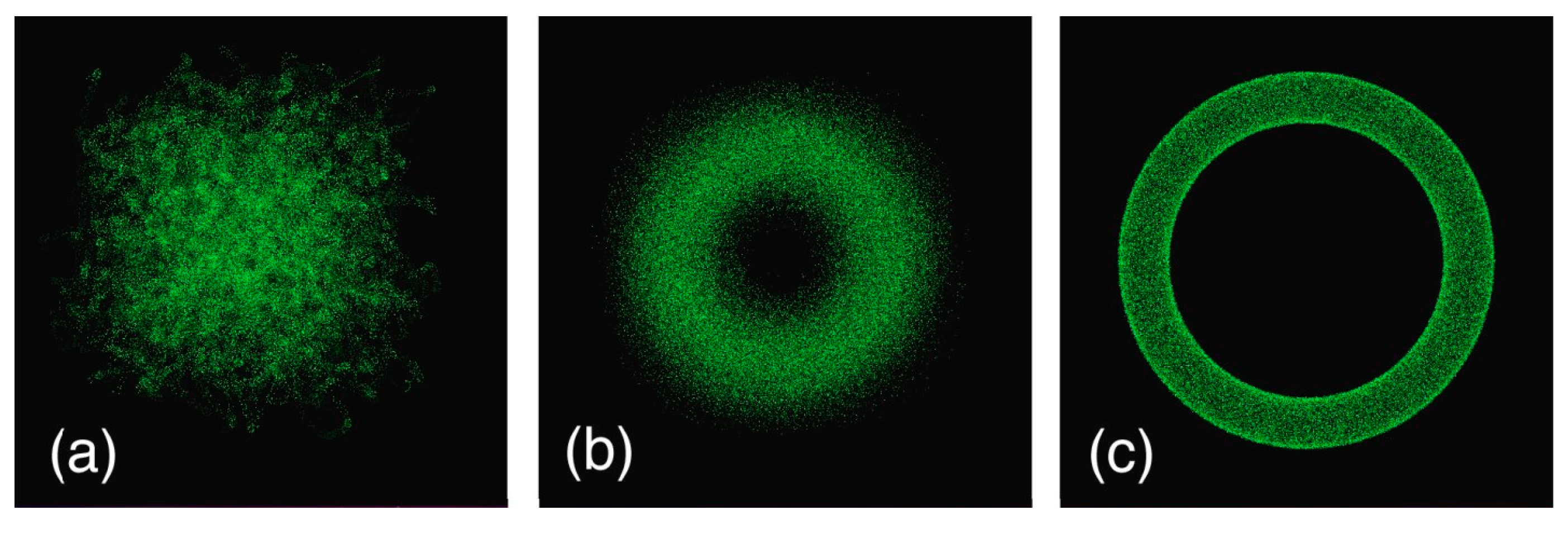

| Tokunaga and Lyons [34] | 2020 | Japan | - | Sound and visual display | Mandala | Neurosky Mindwave | - |

| Participants’ engagement | |||||||

| Yan et al. [35] | 2016 | China | N = 48 | Sound and visual display | Adaptive theatre performance | Emotiv Epoc (14 ch) | It is possible to detect significant decreasing thresholds during adaptive theatre performance. There was a better recall of the performance content when using performing cues. The audience was more attracted by multiple performing cues than single performing cues during opera. |

| Ramchurn et al. [36] | 2018 | UK | - | Movie composition | Brain-controlled movie | Neurosky Mindwave | - |

| Ramchurn et al. [37] | 2018 | UK | N = 33 questionnaires | Music composition | Musical Soundtracks for BCI Systems | Neurosky Mindwave | The users understood the presence of a relation between the visual elements of the film and the soundtrack. |

| Marchesi et al. [38] | 2011 | Italy | - | Movie composition | Brain-interactive movie | Neurosky Mindwave | - |

| Marchesi [39] | 2012 | Italy | - | Correlates of mood during cinema | Video editing | Neurosky Mindwave | - |

| Zioga et al. [40] | 2018 | UK | N = 7 | Live performance | Live performance and video projection | MyndPlay Brain-BandXL | Correlation between the participants’ answers, special elements of the performance, and the audience’s attention, and emotional engagement. The performer’s results were consistent with the recall of representations and the increase in cognitive load. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vanutelli, M.E.; Salvadore, M.; Lucchiari, C. BCI Applications to Creativity: Review and Future Directions, from little-c to C2. Brain Sci. 2023, 13, 665. https://doi.org/10.3390/brainsci13040665