Abstract

Electroencephalography (EEG) is recorded by electrodes placed over different areas of the brain and is commonly used to measure neuronal activity. EEG-based methods have recently been widely used for emotion recognition. However, most current EEG-based emotion recognition methods do not fully exploit the relationships among EEG channels, which limits recognition accuracy. To address this issue, we propose a novel method for EEG-based emotion recognition called CR-GCN: Channel-Relationships-based Graph Convolutional Network. The topological structure of EEG channels is distance-based and tends to capture local relationships, whereas brain functional connectivity tends to capture global relationships among EEG channels. We therefore encode the EEG channel relationships in the adjacency matrix of a graph convolutional network, so that the adjacency matrix captures both local and global relationships among EEG channels. Extensive experiments on the DEAP dataset demonstrate that CR-GCN outperforms state-of-the-art methods. In subject-dependent experiments, average classification accuracies of 94.69% and 93.95% are achieved for valence and arousal, respectively; in subject-independent experiments, the average classification accuracies are 94.78% and 93.46%.

1. Introduction

Emotion recognition is an important research direction in affective computing and a key technology for high-level human–computer interaction. Its purpose is to enable machines to perceive human emotional states and thereby interact in a more human-like way [1,2]. Such studies may also help us understand humans [3]. Traditional emotion recognition methods mostly use easily accessible facial expression images [4], body gestures, and speech signals [5,6]. However, the validity and reliability of these nonphysiological signals are often difficult to guarantee in practical applications [7]. Physiological signals, such as EEG, electrooculography, and electromyography, are not easily controlled consciously and have been shown to reveal important information about human emotional states [8,9]. In recent years, EEG-based emotion recognition has attracted increasing attention in both research [10,11] and applications [12].

Emotion models fall mainly into two categories: discrete and dimensional [13]. Discrete models classify emotions into distinct states; for example, Ekman et al. [14] classified emotions into joy, sadness, surprise, fear, anger, and disgust. Dimensional models describe emotions along two dimensions (valence and arousal) or three dimensions (valence, arousal, and dominance) [15].

Many researchers have applied deep learning to EEG-based emotion recognition. Tripathi et al. [16] used a convolutional neural network (CNN) to extract features from different EEG channels. However, EEG channels are not arranged on a regular grid but are irregularly connected. In recent years, graph convolutional networks (GCNs) have been shown to use adjacency matrices effectively to capture interchannel relationships and extract graph-domain features for emotion recognition. Zhong et al. [17] and Yin et al. [18] used a distance-based method to model the relationships among EEG channels in a GCN. However, these methods capture interchannel relationships in only one way and do not fully exploit the relationships among EEG channels, which limits recognition accuracy. Meanwhile, neuroscience studies have shown that emotional patterns are related to the functional connectivity of brain regions [19,20], which distance alone does not capture.

To address this problem, we propose a channel-relationships-based graph convolutional network (CR-GCN) that exploits the relationships among EEG channels. First, the 3 s of baseline EEG recorded before each video is used to remove noise produced spontaneously by the brain, the data are partitioned with a 6 s time window, and power spectral density (PSD) features are extracted from each segment and normalized to build a feature cube. Second, an adjacency matrix is constructed from both the topological structure and the functional connectivity of the EEG channels. Third, the feature cube of each segment and the adjacency matrix are fed into a GCN model, and the softmax layer outputs are used to predict the class. The major contributions of this paper are as follows.

- A novel emotion recognition method that exploits multiple relationships among EEG channels is proposed. The topological structure of EEG channels represents local relationships, and brain functional connectivity represents global relationships. By combining both, our method captures local and global relationships among EEG channels and more accurately reflects the interaction between EEG signals.

- A fusion method for the relationships among EEG channels is proposed. Graphs represent the topological and functional connectivity relationships of EEG channels, and the adjacency matrix of the GCN is constructed from the adjacency matrices of these two graphs.

- Experimental results on DEAP demonstrate that CR-GCN achieves better classification results than the compared state-of-the-art methods.

2. Related Work

2.1. Features of EEG Signals

Musha et al. [21] were the first to use EEG signals for emotion recognition, extracting features from ten channels. Since then, many methods have been used to extract EEG features [22,23]. Features extracted from EEG signals fall mainly into three categories: time domain, frequency domain, and time–frequency domain. Time-domain features include Hjorth features [24], higher-order crossing features [25], and fractal dimension features [26]. Frequency-domain features include differential asymmetry (DASM) [26], differential entropy (DE) [27,28], PSD [28], and rational asymmetry (RASM) [31]; entropy measures such as approximate entropy [29] and sample entropy [30] are also widely used. Commonly used time–frequency features are derived from the short-time Fourier transform [32] and the wavelet transform [33].

Inspired by several studies [34,35,36], we use PSD as the EEG feature in this paper. According to a relevant study [2], the higher frequency bands (theta, alpha, beta, and gamma) are more closely associated with emotional activity than the lower delta band. This paper therefore extracts PSD from the theta, alpha, beta, and gamma bands.

2.2. Graph Convolutional Network

Traditional CNNs are limited in handling irregular, non-Euclidean data. In contrast, GCNs [37] can handle such data and therefore have advantages in processing discrete spatial-domain signals [38]. More importantly, GCNs have been shown to use adjacency matrices effectively to capture interchannel relationships and extract graph-domain features for emotion recognition [17,18,34,35,39], and many researchers have used them for EEG emotion recognition. Song et al. [34] designed a dynamical graph convolutional neural network (DGCNN) to exploit the relationships among irregularly placed EEG channels, but dynamically updating the adjacency matrix increased the number of model parameters and the computation time. Zheng et al. [35] extracted six types of features from five frequency bands and fed them into a hierarchical graph convolutional network (HGCN); however, HGCN only considered the horizontal and vertical relationships of the overall EEG channel layout and did not explicitly model the relationships between individual channels. Zhong et al. [17] introduced a regularized graph neural network (RGNN) that uses biological topology and brain asymmetry to capture local and global relationships among channels when constructing the adjacency matrix, but it did not take the functional connectivity of brain channels into account. Yin et al. [18] designed an emotion recognition method based on a deep learning model (ERDL) that extracts differential entropy from EEG data to construct feature cubes and combines GCN with long short-term memory (LSTM), but it used only distance to define interchannel relationships. Inspired by DGCNN [34], Jin et al. [39] applied a GCN with learnable EEG electrode relationships trained in a goal-driven manner, but determining the adjacency matrix still required a large amount of computation each time.

Although the above methods apply GCNs to emotion recognition and use adjacency matrices to capture interchannel relationships, they do not fully exploit these relationships. This paper therefore designs a method that more accurately reflects the interaction between EEG signals in order to improve emotion recognition accuracy.

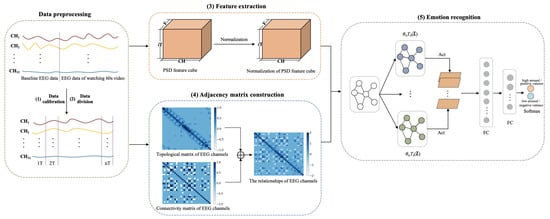

3. CR-GCN Method

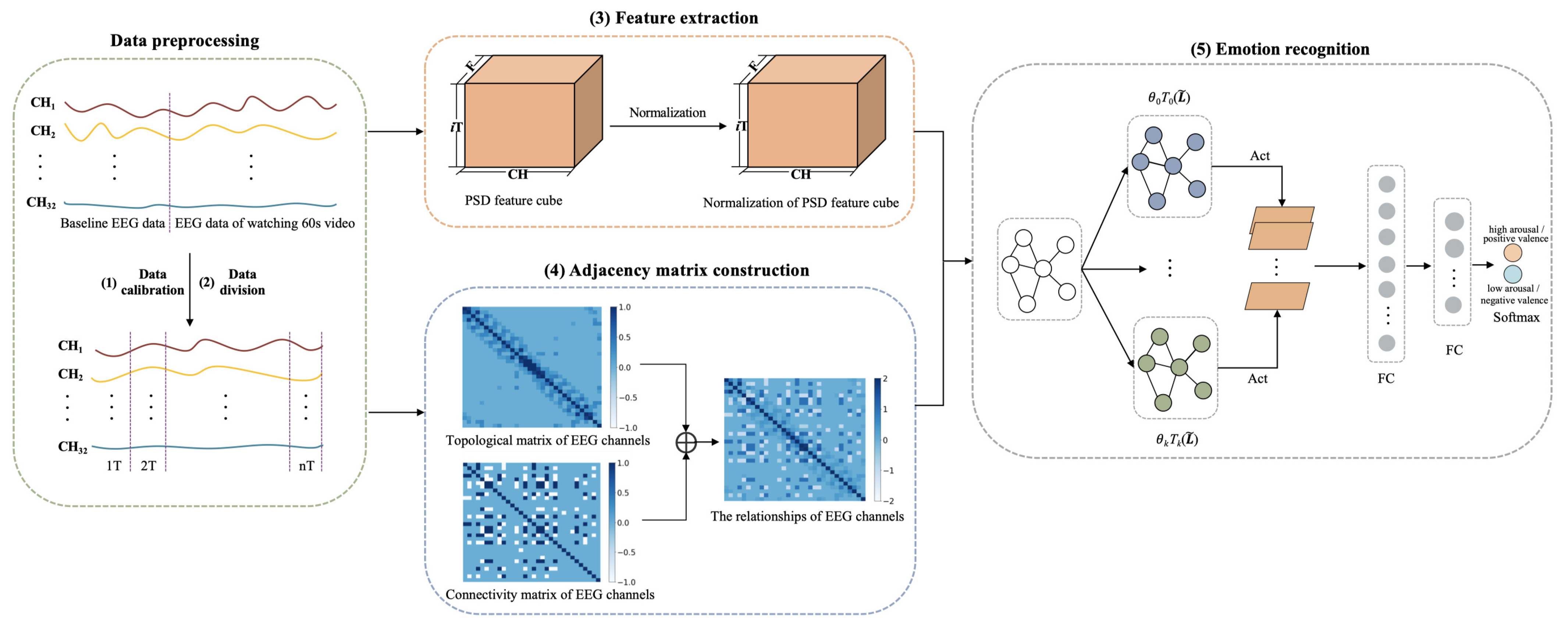

The framework of CR-GCN is shown in Figure 1. The CR-GCN method includes five parts.

Figure 1.

Framework of CR-GCN. Act denotes ReLU activation; FC denotes a fully connected layer.

(1) Data calibration. First, the 3 s of baseline EEG recorded before each video is averaged and then replicated 20 times to form 60 s of data. This replicated baseline is then subtracted from the EEG recorded while the subject watched the corresponding 60 s video. This step is likely to remove signal components that are not related to emotion [18,40].

(2) Data division. After step (1), the data for each video are partitioned into segments with a sliding window of length T s moved in steps of S s, where T and S are set to 6 and 3, respectively, in the following experiments.
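Steps (1) and (2) can be sketched for a single channel as follows. This is a minimal illustration, not the authors' code: it assumes the baseline passed in is the already averaged 3 s template (the text does not say over what the average is taken), and the sampling rate and the helper names `calibrate` and `segment` are hypothetical.

```python
def calibrate(trial, baseline, repeats=20):
    """Subtract the 3 s baseline, tiled `repeats` times to 60 s, from a 60 s trial.

    `trial` and `baseline` are lists of samples for one channel; `baseline` is
    assumed to be the averaged 3 s template described in step (1).
    """
    tiled = baseline * repeats  # list repetition: 3 s x 20 = 60 s
    if len(tiled) != len(trial):
        raise ValueError("tiled baseline and trial must have the same length")
    return [x - b for x, b in zip(trial, tiled)]


def segment(signal, fs, T=6, S=3):
    """Split a signal into windows of T seconds, starting every S seconds."""
    win, step = T * fs, S * fs
    return [signal[start:start + win]
            for start in range(0, len(signal) - win + 1, step)]


# Example with a hypothetical 128 Hz sampling rate (illustration only).
fs = 128
trial = [1.0] * (60 * fs)
baseline = [0.25] * (3 * fs)
segments = segment(calibrate(trial, baseline), fs)
print(len(segments), len(segments[0]))  # 19 768
```

Under this reading, each 60 s trial yields floor((60 − 6)/3) + 1 = 19 overlapping segments of 6 s each.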

(3) Feature extraction. PSD is used to extract EEG features, and the subjects' PSD features are normalized to improve emotion recognition.

(4) Adjacency matrix construction. The topological matrix of EEG channels is built with a distance-based method and tends to capture local relationships among EEG channels, whereas the connectivity matrix is built from functional connectivity and tends to capture global relationships. To describe the relationships among EEG channels more accurately, we combine distance and functional connectivity to construct an adjacency matrix that captures both local and global relationships among EEG channels.

(5) Emotion recognition. A GCN is used for emotion recognition: the normalized PSD feature cube provides the node representations, and the adjacency matrix encodes the node relationships. The softmax layer outputs are used to predict the emotion class.

3.1. Feature Extraction

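PSD describes how the power of a signal is distributed over frequency; it is computed via the Fourier transform and is widely used in signal processing. Consider a power signal $f(t)$. To apply the Fourier transform, we truncate it to a segment $f_T(t)$ of length $2T$ ($f_T(t) = f(t)$ for $\lvert t \rvert \le T$ and 0 otherwise) and take the Fourier transform of this segment, as defined in Equation (1):

$$ F_T(\omega) = \int_{-T}^{T} f(t)\, e^{-j\omega t}\, dt \tag{1} $$

According to Parseval's theorem, we obtain Equation (2):

$$ \int_{-T}^{T} f^{2}(t)\, dt = \frac{1}{2\pi} \int_{-\infty}^{\infty} \lvert F_T(\omega) \rvert^{2}\, d\omega \tag{2} $$

where $F_T(\omega) = \mathcal{F}[f_T(t)]$ and $\mathcal{F}[\cdot]$ denotes the Fourier transform.

As $T$ tends to infinity, $f_T(t)$ approaches $f(t)$ and $F_T(\omega)$ approaches $F(\omega)$. Dividing both sides of Equation (2) by $2T$ and letting $T \to \infty$ gives the average power

$$ P = \lim_{T \to \infty} \frac{1}{2T} \int_{-T}^{T} f^{2}(t)\, dt = \frac{1}{2\pi} \int_{-\infty}^{\infty} \lim_{T \to \infty} \frac{\lvert F_T(\omega) \rvert^{2}}{2T}\, d\omega \tag{3} $$

so the PSD is

$$ S(\omega) = \lim_{T \to \infty} \frac{\lvert F_T(\omega) \rvert^{2}}{2T} \tag{4} $$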
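A discrete counterpart of Equation (4) can be sketched as a plain periodogram: for a segment of n samples at sampling rate fs, the duration n/fs plays the role of 2T and the DFT scaled by 1/fs approximates F_T(ω), which gives |X_k|²/(fs·n). The text does not state which PSD estimator is used (periodogram, Welch, or another), so this choice, the sampling rate, and the helper name `periodogram` are assumptions for illustration.

```python
import cmath
import math


def periodogram(x, fs):
    """Estimate the PSD of one segment as |X_k|^2 / (fs * n).

    Discrete analogue of Equation (4): the segment duration n / fs plays the
    role of 2T, and X_k / fs approximates F_T(w) at f_k = k * fs / n.
    Only non-negative frequencies are returned (no one-sided doubling).
    """
    n = len(x)
    freqs, psd = [], []
    for k in range(n // 2 + 1):
        # Direct DFT bin k; O(n^2) overall, acceptable for an illustration.
        x_k = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        freqs.append(k * fs / n)
        psd.append(abs(x_k) ** 2 / (fs * n))
    return freqs, psd


# A 10 Hz sine sampled at a hypothetical 128 Hz for 2 s peaks at 10 Hz.
fs = 128
signal = [math.sin(2 * math.pi * 10 * t / fs) for t in range(2 * fs)]
freqs, psd = periodogram(signal, fs)
print(freqs[psd.index(max(psd))])  # 10.0
```

A real pipeline would use an FFT rather than this direct O(n²) sum; the direct form is kept only so the link to Equation (4) stays visible.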

Based on the characteristics of EEG signals, we extract PSD from four bands, compute the average PSD within each band, and then normalize it. The standard deviation and the normalization formula are given in Equations (5) and (6), respectively:

$$ \sigma = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left(x_i - \mu\right)^{2}} \tag{5} $$

$$ z = \frac{x - \mu}{\sigma} \tag{6} $$

where $\sigma$ is the standard deviation of the PSD of a frequency band, $N$ is the number of samples, $x_i$ is the PSD value of the band for the $i$-th sample, $\mu$ is the mean PSD of the band, $x$ is a PSD value of the band, and $z$ is its normalized value.
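The band averaging and the normalization of Equations (5) and (6) can be sketched as below. The band edges are hypothetical (the text names the four bands but not their limits), the helper names are illustrative, and the sketch uses the 1/N form of the standard deviation shown in Equation (5).

```python
import math

# Hypothetical band edges in Hz: the text names the four bands but not their limits.
BANDS = {"theta": (4, 8), "alpha": (8, 14), "beta": (14, 31), "gamma": (31, 45)}


def band_mean_psd(freqs, psd, bands=BANDS):
    """Average the PSD values whose frequency lies in [low, high) for each band."""
    means = {}
    for name, (low, high) in bands.items():
        values = [p for f, p in zip(freqs, psd) if low <= f < high]
        if not values:
            raise ValueError(f"no frequency bins fall in the {name} band")
        means[name] = sum(values) / len(values)
    return means


def zscore(values):
    """Equations (5) and (6): z = (x - mu) / sigma with the 1/N standard deviation."""
    n = len(values)
    mu = sum(values) / n
    sigma = math.sqrt(sum((v - mu) ** 2 for v in values) / n)
    if sigma == 0:
        raise ValueError("cannot normalize a constant feature")
    return [(v - mu) / sigma for v in values]
```

In use, `zscore` would be applied to one band's PSD values across the N samples (and, presumably, separately per channel); which axis the authors normalize over beyond "samples" is not specified, so that grouping is an assumption.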

3.2. Design of Channel Relationships Based GCN

3.2.1. Graph Representation

Inspired by DGCNN [34] and RGNN [17], each EEG channel in this paper is represented as a node in a graph. The graph is defined as $G = \{V, \mathcal{E}, A\}$, where $V$ is the set of nodes, $\mathcal{E}$ is the set of edges between nodes in $V$, and $A \in \mathbb{R}^{N \times N}$ is the adjacency matrix. $A$ represents the relationships among EEG channels, and $N$ is the number of EEG channels. Each entry $a_{ij}$ is learnable and represents the relationship between nodes $i$ and $j$.

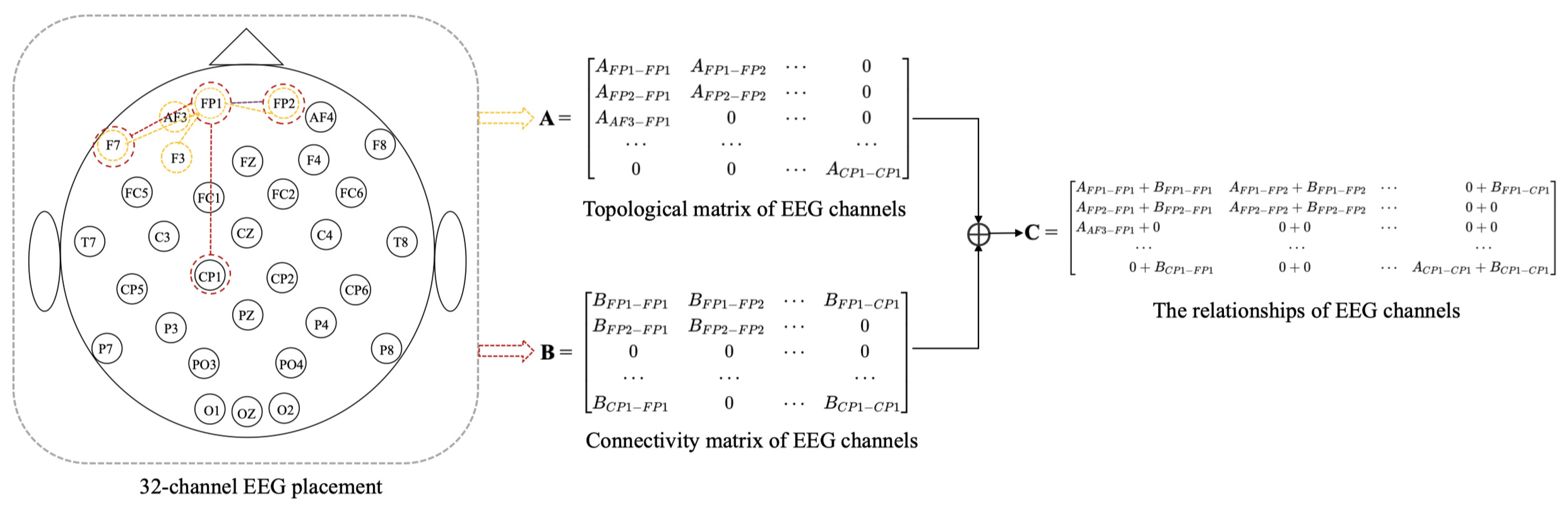

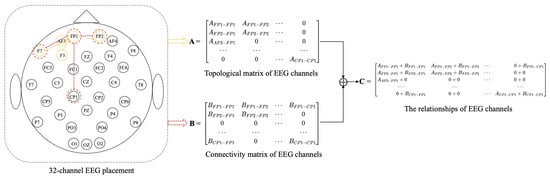

There are three common ways to calculate $a_{ij}$: functional connectivity [41], distance-based methods [17,18], and neural networks. EEG can be described as the result of randomly distributed dipoles [42], and because of this dipole-driven nature an electrode can be correlated with distant electrodes. A distance-based adjacency matrix [17,18], however, only considers relationships between nearby electrodes and ignores correlations between distant ones. We therefore combine the distance-based method with functional connectivity among channels to construct an adjacency matrix that captures both local and global relationships of EEG channels. The two-dimensional locations of the EEG electrodes and the corresponding adjacency matrix construction are shown in Figure 2. To reduce overfitting, the adjacency matrix is constructed as a symmetric matrix with at most $N(N+1)/2$ parameters instead of $N^2$.
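For DEAP's 32 EEG channels the saving is easy to quantify: 32 × 33 / 2 = 528 free entries instead of 32² = 1024. The sketch below shows one way such a symmetric parameterization could be implemented, by storing only the upper triangle and mirroring it; it is an assumption about implementation rather than the authors' code, and the helper names are hypothetical.

```python
def num_symmetric_params(n):
    """Free entries of an n x n symmetric matrix: n(n + 1) / 2 instead of n^2."""
    return n * (n + 1) // 2


def symmetric_from_upper(params, n):
    """Build an n x n symmetric matrix from its upper-triangle entries (row-major)."""
    if len(params) != num_symmetric_params(n):
        raise ValueError("expected n(n + 1)/2 parameters")
    matrix = [[0.0] * n for _ in range(n)]
    values = iter(params)
    for i in range(n):
        for j in range(i, n):
            matrix[i][j] = matrix[j][i] = next(values)  # mirror across the diagonal
    return matrix


print(num_symmetric_params(32), 32 * 32)  # 528 1024
```

In a trainable model the parameter vector would be a learnable tensor rather than a Python list; mirroring it guarantees $a_{ij} = a_{ji}$ throughout training.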

Figure 2.

Two-dimensional placement for 32-channel EEG and adjacency matrix construction.

(1) Topological matrix of EEG channels. The adjacency matrix A is constructed with a distance-based method and encodes local relationships among EEG channels. In this paper, A is built from the Euclidean distances between EEG channels in 3D space; the 3D coordinates of the channels can be obtained from the recorded EEG data. Salvador et al. [43] found that the strength of connectivity between brain regions generally decreases with distance according to an inverse square law, and Zhong et al. [17] and Yin et al. [18] have used distance-based methods to construct adjacency matrices. The entries of A are therefore constructed as follows:

$$ a_{ij} = \min\left(1, \frac{\delta}{d_{ij}^{2}}\right) \tag{7} $$

where $d_{ij}$ ($i, j = 1, 2, \dots, N$) is the Euclidean distance between nodes $i$ and $j$, and $\delta$ is a calibration constant. According to [44], retaining about 20% of the connections can improve model efficiency. We therefore set $\delta$ to the same value as in [17], so that about 20% of the connections in A are retained. We treat connections with values greater than 0.1 as nonignorable.
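Equation (7) and the 20% check can be sketched as follows. The calibration constant `delta` is left as an argument because its value is taken from [17] and depends on the units of the electrode coordinates; the coordinates in the test are made up, and the helper names are hypothetical.

```python
def distance_adjacency(coords, delta):
    """Equation (7): a_ij = min(1, delta / d_ij^2) from 3D electrode coordinates."""
    n = len(coords)
    a = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            d2 = sum((p - q) ** 2 for p, q in zip(coords[i], coords[j]))
            # d_ii = 0 would make delta / d^2 infinite; min(1, .) clips it to 1.
            a[i][j] = 1.0 if d2 == 0 else min(1.0, delta / d2)
    return a


def nonignorable_fraction(a, threshold=0.1):
    """Share of off-diagonal entries greater than `threshold` (about 20% in the text)."""
    n = len(a)
    off_diagonal = [a[i][j] for i in range(n) for j in range(n) if i != j]
    return sum(v > threshold for v in off_diagonal) / len(off_diagonal)
```

With real electrode positions, one would pick `delta` so that `nonignorable_fraction` comes out close to 0.2, which is how the text describes the choice.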

(2) Connectivity matrix of EEG channels. The adjacency matrix B is constructed with a functional connectivity method and encodes global relationships among EEG channels. In this paper, B is constructed from the similarity between nodes, which is calculated as follows:

$$ \rho_{xy} = \frac{\mathrm{cov}(x, y)}{\sigma_x \sigma_y} = \frac{E\left[(x - \mu_x)(y - \mu_y)\right]}{\sigma_x \sigma_y} \tag{8} $$

where cov and E denote covariance and expectation, respectively, $x$ and $y$ are the feature vectors of the corresponding nodes, $\mu_x$ and $\mu_y$ are their mean values, and $\sigma_x$ and $\sigma_y$ are their standard deviations.

The entries of B are defined as

$$ b_{ij} = \begin{cases} \rho_{ij}, & \lvert \rho_{ij} \rvert > \tau \\ 0, & \text{otherwise} \end{cases} \tag{9} $$

where $\rho_{ij}$ ($i, j = 1, 2, \dots, N$) is the correlation coefficient between nodes $i$ and $j$, and $\tau$ is the correlation threshold: a connection is retained only if the absolute value of the correlation between the nodes exceeds $\tau$. In repeated experiments, accuracy increased as $\tau$ increased but dropped significantly once $\tau$ exceeded 0.98, so $\tau$ is set to 0.98 in the following experiments.
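Equations (8) and (9) can be sketched as below, assuming the correlation is computed between the feature vectors of each pair of channels and that retained entries keep their signed value $\rho_{ij}$. Both points are assumptions where the text is not explicit, and the helper names are hypothetical.

```python
import math


def pearson(x, y):
    """Equation (8): cov(x, y) / (sigma_x * sigma_y), using 1/N moments."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    sx = math.sqrt(sum((a - mx) ** 2 for a in x) / n)
    sy = math.sqrt(sum((b - my) ** 2 for b in y) / n)
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant vector")
    return cov / (sx * sy)


def correlation_adjacency(features, tau=0.98):
    """Equation (9): keep rho_ij where |rho_ij| > tau, else 0.

    features[i] is the feature vector of channel (node) i.
    """
    n = len(features)
    b = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            rho = pearson(features[i], features[j])
            b[i][j] = rho if abs(rho) > tau else 0.0
    return b
```

With τ = 0.98 only near-linear channel pairs survive, which keeps B sparse; that matches the observation that lower thresholds (for example 0.5) add too many relationships.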

Combining distance and functional connectivity among EEG channels, we construct the adjacency matrix C by adding the matrices defined in Equations (7) and (9), that is, $C = A + B$. The adjacency matrix C thus fuses local and global connectivity.
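Reading "adding Equations (7) and (9)" as element-wise matrix addition, the fusion is short enough to show in full. The small matrices below are made-up values for illustration and `fuse_adjacency` is a hypothetical name. One detail the sketch makes visible: under Equations (7) and (9), both A and B have ones on the diagonal ($d_{ii} = 0$ and $\rho_{ii} = 1$), so C has 2 on its diagonal, and C is symmetric because A and B are.

```python
def fuse_adjacency(a, b):
    """C = A + B: element-wise sum of the distance- and correlation-based matrices."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("A and B must have the same shape")
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


a = [[1.0, 0.5], [0.5, 1.0]]   # hypothetical distance-based A
b = [[1.0, 0.0], [0.0, 1.0]]   # hypothetical thresholded correlation B
print(fuse_adjacency(a, b))    # [[2.0, 0.5], [0.5, 2.0]]
```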

3.2.2. Spectral Graph Filtering

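Spectral graph filtering, also called graph convolution, is a common signal processing operation on graph data. Let L denote the normalized Laplacian matrix of graph G, defined as

$$ L = E - D^{-1/2} A D^{-1/2} \tag{10} $$

where $A$ is the adjacency matrix of G (the fused matrix C in CR-GCN), D is the diagonal degree matrix with $D_{ii} = \sum_{j} a_{ij}$, and E is the identity matrix.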
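Equation (10) can be sketched in plain Python as follows; `normalized_laplacian` is a hypothetical helper. A quick sanity check for the normalized form is that $L D^{1/2}\mathbf{1} = 0$: the vector of square-root degrees is an eigenvector with eigenvalue 0.

```python
import math


def normalized_laplacian(a):
    """Equation (10): L = E - D^{-1/2} A D^{-1/2}, with D_ii = sum_j a_ij."""
    n = len(a)
    degree = [sum(row) for row in a]
    # Isolated nodes (zero degree) get a zero scaling factor instead of 1/0.
    inv_sqrt = [1.0 / math.sqrt(d) if d > 0 else 0.0 for d in degree]
    return [[(1.0 if i == j else 0.0) - inv_sqrt[i] * a[i][j] * inv_sqrt[j]
             for j in range(n)]
            for i in range(n)]
```

For a two-node graph with a single edge, this returns [[1.0, -1.0], [-1.0, 1.0]].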

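The eigendecomposition of L is

$$ L = U \Lambda U^{\top} \tag{11} $$

where $U = [u_0, u_1, \dots, u_{N-1}] \in \mathbb{R}^{N \times N}$ is the eigenvector matrix of L and $\Lambda = \mathrm{diag}(\lambda_0, \lambda_1, \dots, \lambda_{N-1})$ is the diagonal eigenvalue matrix; $u_i$ is an eigenvector of L, and $\lambda_i$ is the corresponding eigenvalue.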

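The convolution of signals x and y on graph G is

$$ x *_{G} y = U\left(\left(U^{\top} x\right) \odot \left(U^{\top} y\right)\right) \tag{12} $$

where $\odot$ denotes the element-wise product.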

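Using a filter $g(\cdot)$, x can be filtered as

$$ y = g(L)\, x = U\, g(\Lambda)\, U^{\top} x \tag{13} $$

where $g(\Lambda)$ is

$$ g(\Lambda) = \mathrm{diag}\left(g(\lambda_0), g(\lambda_1), \dots, g(\lambda_{N-1})\right) \tag{14} $$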

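Using K-order Chebyshev polynomials, $g(\Lambda)$ can be approximated as

$$ g(\Lambda) \approx \sum_{k=0}^{K} \theta_k T_k(\tilde{\Lambda}) \tag{15} $$

where $\theta_k$ is the coefficient of the k-th Chebyshev polynomial, $\tilde{\Lambda} = 2\Lambda/\lambda_{\max} - E$ rescales the eigenvalues to $[-1, 1]$ ($\lambda_{\max}$ being the largest eigenvalue of L), and $T_k(\cdot)$ is the Chebyshev polynomial of order k, computed recursively as

$$ T_0(x) = 1, \quad T_1(x) = x, \quad T_k(x) = 2x\,T_{k-1}(x) - T_{k-2}(x) \tag{16} $$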

Using (15), (13) can be rewritten as

$$ y = \sum_{k=0}^{K} \theta_k T_k(\tilde{L})\, x \tag{17} $$

where $\tilde{L} = 2L/\lambda_{\max} - E$. ChebNet [45] therefore does not require eigendecomposition of the Laplacian matrix, and each convolution kernel has only K + 1 learnable parameters. This substantially reduces the number of parameters and speeds up computation.
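Equations (16) and (17) can be applied directly to vectors, which is why ChebNet avoids the eigendecomposition. The sketch below illustrates this with hypothetical helper names; `lam_max` defaults to 2, the upper bound on the eigenvalues of a normalized Laplacian, which is a common simplification and not something the text states. In CR-GCN each $\theta_k$ would be learned (K + 1 values per filter), and x would be one feature column over the 32 nodes.

```python
def matvec(m, v):
    """Dense matrix-vector product."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def cheb_filter(lap, x, theta, lam_max=2.0):
    """Equation (17): y = sum_k theta_k T_k(L~) x with L~ = 2L / lam_max - E.

    Uses the recurrence of Equation (16) on vectors, so no eigendecomposition
    is needed: T_0 x = x, T_1 x = L~ x, T_k x = 2 L~ (T_{k-1} x) - T_{k-2} x.
    """
    n = len(lap)
    scaled = [[2.0 * lap[i][j] / lam_max - (1.0 if i == j else 0.0)
               for j in range(n)] for i in range(n)]
    t_prev, t_curr = list(x), matvec(scaled, x)
    y = [theta[0] * v for v in t_prev]
    if len(theta) > 1:
        y = [yi + theta[1] * ti for yi, ti in zip(y, t_curr)]
    for k in range(2, len(theta)):
        t_next = [2.0 * a - b for a, b in zip(matvec(scaled, t_curr), t_prev)]
        y = [yi + theta[k] * ti for yi, ti in zip(y, t_next)]
        t_prev, t_curr = t_curr, t_next
    return y


# K = 2 (three coefficients), as in the experimental settings.
lap = [[1.0, -1.0], [-1.0, 1.0]]
print(cheb_filter(lap, [1.0, 2.0], [0.0, 0.0, 1.0]))  # [1.0, 2.0]
```

Each extra order costs one more matrix-vector product, so the cost grows linearly in K rather than requiring the O(N³) decomposition of Equation (11).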

3.3. Algorithm of CR-GCN

The loss function of CR-GCN is

$$ \mathrm{Loss} = \mathrm{cross\_entropy}(l, \hat{l}) + \alpha \lVert w \rVert_2^{2} \tag{18} $$

where $l$ is the true label vector, $\hat{l}$ is the predicted label vector, $w$ denotes the model parameters, $\alpha$ is the regularization coefficient, and the regularization term is used to reduce overfitting. The algorithm of CR-GCN is shown in Algorithm 1.
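A minimal sketch of a loss of this form follows: cross-entropy between a one-hot label and predicted class probabilities, plus a weighted penalty on the parameters. The squared L2 norm is an assumption (the text only says the term reduces overfitting), and `cr_gcn_loss` is a hypothetical name; a PyTorch implementation would typically obtain the same penalty through the optimizer's weight decay or an explicit sum over parameters.

```python
import math


def cr_gcn_loss(label, prob, weights, alpha):
    """Cross-entropy between a one-hot label and predicted class probabilities,
    plus alpha times the squared L2 norm of the parameters (assumed form)."""
    cross_entropy = -sum(l * math.log(p) for l, p in zip(label, prob) if l > 0)
    penalty = alpha * sum(w * w for w in weights)
    return cross_entropy + penalty
```

For example, a uniform prediction over two classes with all-zero weights gives a loss of ln 2.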

Algorithm 1: The algorithm of CR-GCN

Input: EEG features, class labels, the order K of the Chebyshev polynomials, the number of iterations, the learning rate, and the stop iteration threshold e.

Output: The learned parameters of CR-GCN.
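The inputs of Algorithm 1 suggest a loop bounded by a maximum number of iterations and a stop threshold e. The sketch below is a hypothetical reading of that stopping rule (stop once the loss changes by less than e between iterations), shown with plain gradient descent on a toy objective standing in for the CR-GCN loss; it is not the authors' training procedure, and `train` and `quadratic` are illustrative names.

```python
def train(loss_and_grad, w0, lr, max_iter, e):
    """Gradient descent that stops after max_iter steps or once the loss changes
    by less than e between consecutive iterations (assumed meaning of e)."""
    w = list(w0)
    prev_loss = None
    for it in range(1, max_iter + 1):
        loss, grad = loss_and_grad(w)
        if prev_loss is not None and abs(prev_loss - loss) < e:
            return w, it
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
        prev_loss = loss
    return w, max_iter


# Toy objective (w - 3)^2 standing in for the CR-GCN loss.
def quadratic(w):
    return (w[0] - 3.0) ** 2, [2.0 * (w[0] - 3.0)]


w, iterations = train(quadratic, [0.0], lr=0.1, max_iter=1000, e=1e-4)
```

With e = 0.0001 and a maximum of 1000 iterations (the values reported in Section 4.2), this toy run stops long before the iteration cap.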

4. Experiments

4.1. Dataset

The database for emotion analysis using physiological signals (DEAP) [46] was published for the study of human emotional states. In this dataset, each of 32 subjects watched 40 emotion-eliciting music videos, each lasting 60 s. DEAP uses the international 10–20 system for electrode placement, and the recordings include 32 channels of EEG signals and 8 channels of peripheral physiological signals. After each video, the subject rated valence, arousal, dominance, and liking on a scale from 1 to 9 by clicking the corresponding position with the mouse. The recording of each video for each subject consists of 3 s of baseline data and 60 s of experimental data. The data of each subject are summarized in Table 1. Only the 32 EEG channels are used in this paper. Following the characteristics of the dataset and the emotion representations used in other studies, we represent emotions by valence and arousal, labeling valence greater than 5 as positive and less than 5 as negative, and arousal greater than 5 as high and less than 5 as low.
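The labeling rule can be written as a small function. Ratings of exactly 5 are returned as `None` here because the text does not say how they are assigned; `binarize` is a hypothetical helper.

```python
def binarize(rating, threshold=5.0):
    """Map a 1-9 self-assessment to 1 (positive valence / high arousal) if it is
    above the threshold and 0 if it is below; ratings exactly equal to the
    threshold are returned as None because the text does not assign them."""
    if rating > threshold:
        return 1
    if rating < threshold:
        return 0
    return None


print([binarize(r) for r in (7.2, 3.0, 5.0)])  # [1, 0, None]
```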

Table 1.

The structure of DEAP.

4.2. Evaluation Metrics and Model Settings

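The classification accuracy and F1-score are used to evaluate CR-GCN. They are calculated as follows:

$$ \mathrm{Accuracy} = \frac{TP + TN}{TP + FP + TN + FN} \tag{19} $$

$$ F1 = \frac{2TP}{2TP + FP + FN} \tag{20} $$

where TP, FP, TN, and FN are the numbers of true positives, false positives, true negatives, and false negatives, respectively.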
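Both metrics follow directly from the four confusion counts. The sketch below computes them for a small made-up set of binary labels; the helper names are illustrative. Note that 2TP/(2TP + FP + FN) is algebraically the harmonic mean of precision and recall.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FP, TN, FN) for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return tp, fp, tn, fn


def accuracy(tp, fp, tn, fn):
    return (tp + tn) / (tp + fp + tn + fn)


def f1_score(tp, fp, tn, fn):
    # 2TP / (2TP + FP + FN); TN does not enter the F1-score.
    return 2 * tp / (2 * tp + fp + fn)


counts = confusion_counts([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])
print(counts, accuracy(*counts), round(f1_score(*counts), 4))  # (2, 1, 1, 1) 0.6 0.6667
```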

For the hyperparameters of CR-GCN in all experiments, the Chebyshev polynomial order is K = 2, the last layer uses a softmax activation and all other layers use ReLU, the number of graph nodes is 32, the maximum number of iterations is 1000, the dropout rate is 0.2, the batch size is 128, the learning rate , and the stop iteration threshold e = 0.0001. The model is trained and tested on an NVIDIA GeForce GTX 1080 Ti and implemented in Python 3.8.5 with PyTorch 1.7.0. For subject-dependent experiments, 80% of each subject's EEG data are used for training and 20% for testing. For subject-independent experiments, 80% of the EEG data of all subjects are used for training and 20% for testing. Fivefold cross-validation with a random strategy is adopted in all experiments.
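Fivefold cross-validation with a random strategy can be sketched as shuffling the sample indices once and taking every fifth index as a fold, so that each fold serves once as the 20% test set. The seed and the helper name `kfold_splits` are hypothetical. As written, a random split over pooled segments can place segments of the same subject and trial in both the training and the test folds.

```python
import random


def kfold_splits(n_samples, k=5, seed=0):
    """Randomly shuffle sample indices and yield (train, test) index lists for
    k folds; with k = 5 each test fold holds about 20% of the samples."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, folds[i]
```

For the subject-dependent setting, the indices would range over one subject's segments; for the subject-independent setting as described, over the pooled segments of all subjects.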

4.3. Results

In this section, we conduct subject-dependent, subject-independent, and ablation experiments. In subject-dependent experiments, the training and test sets come from the same subject's data; in subject-independent experiments, they do not. We conduct both types of experiments to test the effectiveness and the generalization of our method.

4.3.1. Subject-Dependent Experiments

The subject-dependent average classification results are shown in Table 2. On valence, the minimum, maximum, and average classification accuracies of CR-GCN over all subjects are 80.63%, 99.34%, and 94.69%, respectively, and the corresponding F1-scores are 78.90%, 99.32%, and 94.40%. On arousal, the minimum, maximum, and average classification accuracies are 80.00%, 99.54%, and 93.95%, and the corresponding F1-scores are 78.17%, 99.53%, and 92.78%.

Table 2.

Subject-dependent average classification results.

We also compare CR-GCN with other methods in subject-dependent experiments; the results are shown in Table 3. The accuracy of CR-GCN is 3.89% higher on valence and 2.92% higher on arousal than the method combining a CNN and a recurrent neural network (RNN) [47]; 7.62% and 6.97% higher than the method using normalized frequency-domain features (FREQNORM) and a support vector machine (SVM) [48]; 2.39% and 1.08% higher than multimodal residual long short-term memory (MMResLSTM) [49]; and 4.24% and 3.35% higher than ERDL [18].

Table 3.

Average classification accuracies of other methods in subject-dependent experiments.

These results show that CR-GCN achieves the highest average accuracies among the compared methods, and they suggest that node feature normalization and the adjacency matrix combining distance and functional connectivity are helpful for emotion recognition.

4.3.2. Subject-Independent Experiments

These experiments investigate whether CR-GCN can effectively reduce intersubject differences and thus obtain good emotion classification results in subject-independent experiments. The average classification accuracies of CR-GCN and other methods are shown in Table 4. CR-GCN is 8.33% higher on valence and 8.67% higher on arousal than the correlated attention network (CAN) [50]. It is 13.68% and 19.08% higher than the method of [51], which uses a stacked autoencoder (SAE) and LSTM. It is 4.22% and 4.67% higher than emotion recognition based on a hierarchy graph convolution network (ERHGCN) [35], which extracts six types of features from five frequency bands and feeds them into an HGCN model. It is 9.97% and 8.19% higher than ERDL [18], which extracts differential entropy from EEG data to construct feature cubes and uses GCN+LSTM. It is 10.95% and 8.93% higher than the method based on three-dimensional (3D) feature maps and a CNN (3DCNER) [40]. Finally, it is 2.29% and 1.52% higher than the spatial folding ensemble network (SFE-Net) [52], which exploits the symmetric spatial structure of EEG channels.

Table 4.

Average classification accuracies of other methods in subject-independent experiments.

ERDL [18] performs well in subject-dependent classification but not in subject-independent classification, whereas CR-GCN achieves average accuracies of at least 93.46% in both settings, which demonstrates the effectiveness and generalization of our method. It also shows that the graph-domain features of EEG data used by CR-GCN support good classification results.

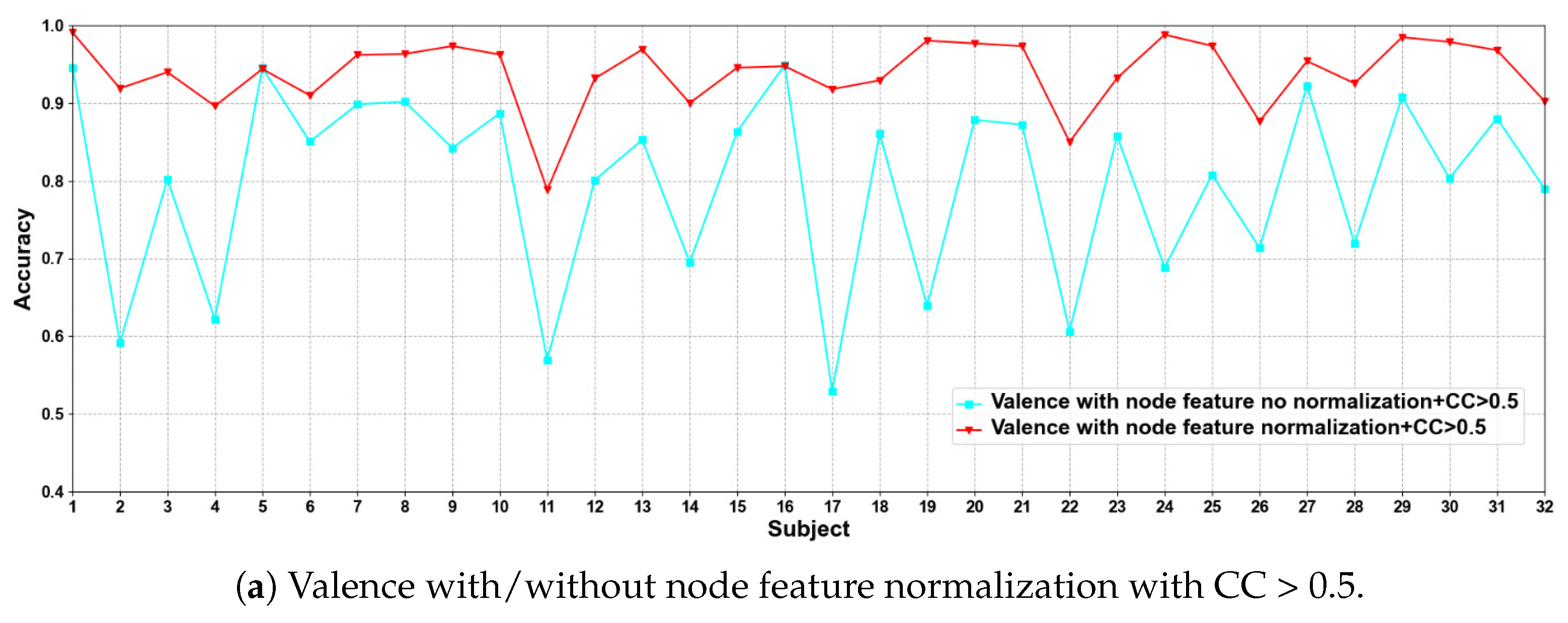

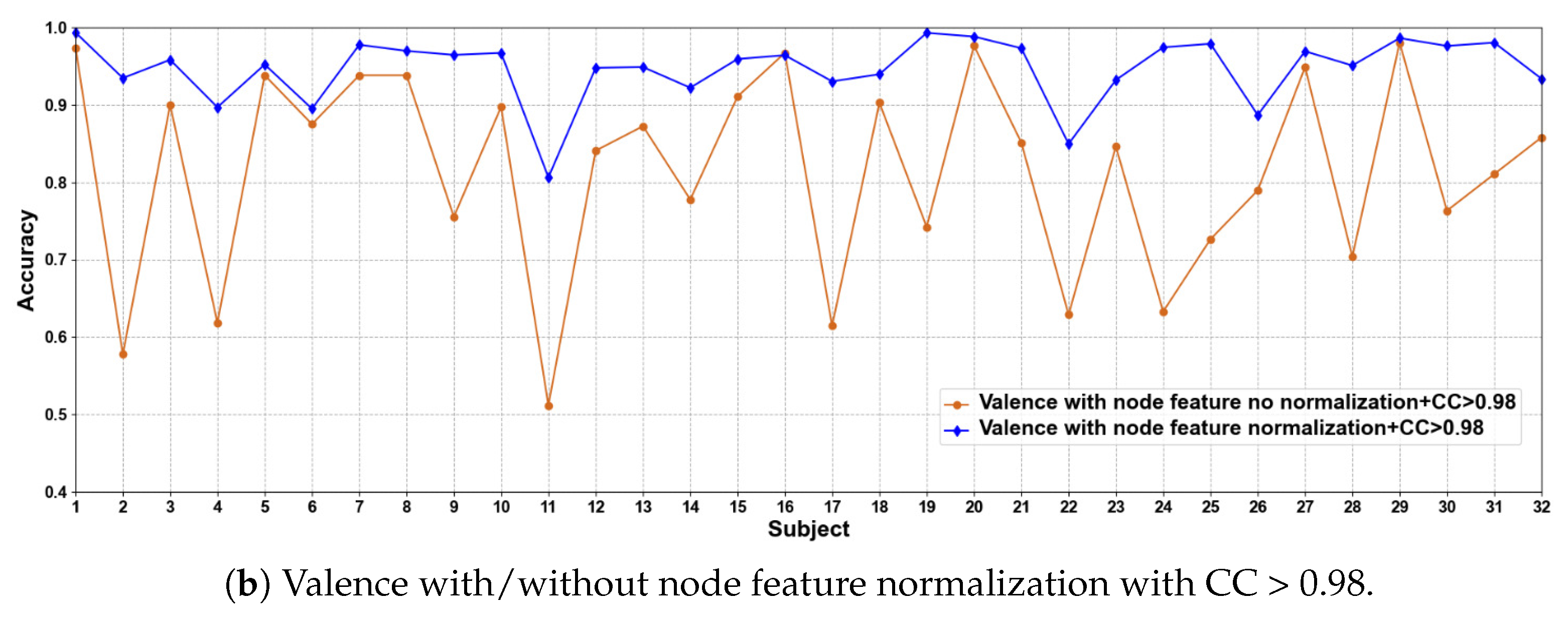

4.3.3. Ablation Experiments

These experiments explore the contribution of each main component of the method. The first set examines whether node feature normalization improves classification accuracy, and the second examines whether the proposed adjacency matrix does. Fivefold cross-validation with a random strategy is adopted in each experiment. In the following, CC denotes the absolute value of the correlation coefficient; the threshold 0.5 was chosen arbitrarily, and 0.98 was selected through repeated experiments.

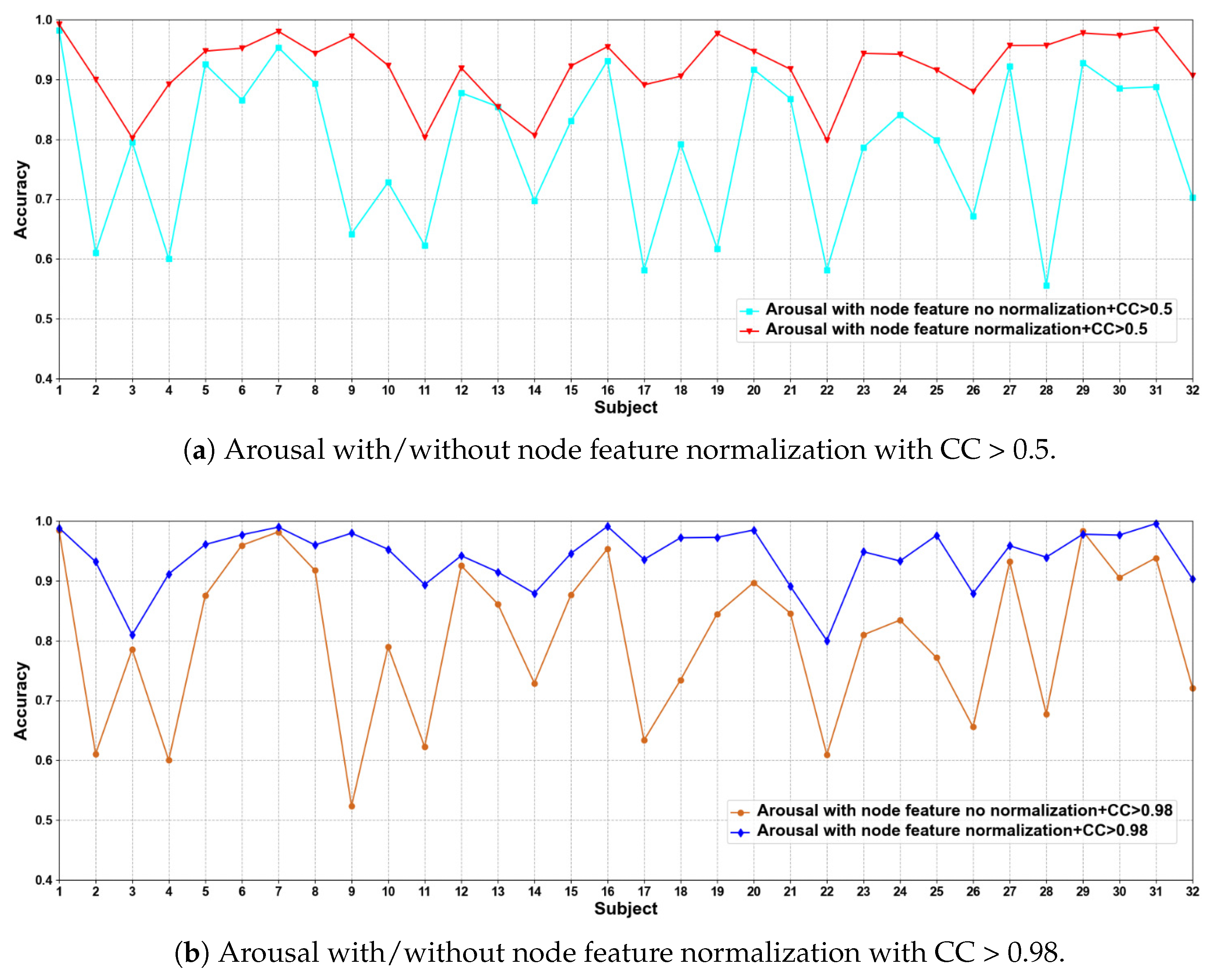

(1) EEG Node Feature Normalization and No Normalization

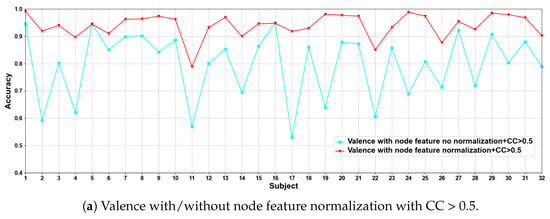

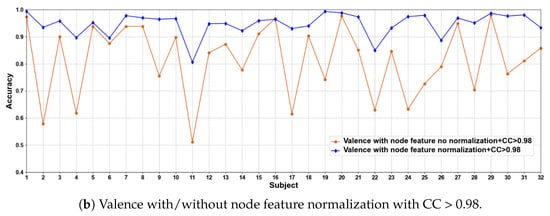

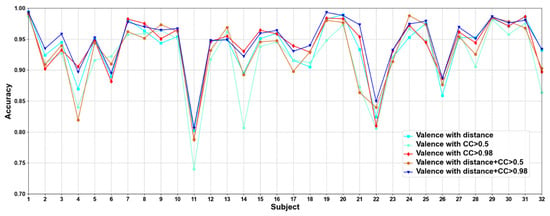

In this part of the ablation experiments, we compare models with and without node feature normalization under the thresholds CC > 0.5 and CC > 0.98. The results are shown in Figure 3 and Figure 4.

Figure 3.

Classification accuracies on each subject on valence with/without normalization.

Figure 4.

Classification accuracies on each subject on arousal with/without normalization.

For both CC > 0.5 and CC > 0.98, node feature normalization outperforms no normalization for most subjects, and performance is highest with CC > 0.98. The average classification accuracies over all subjects are shown in Table 5.

Table 5.

Average classification accuracies on all subjects with/without node feature normalization.

Under the same conditions, node feature normalization yields accuracies at least 13% higher than no normalization, confirming that normalization improves classification accuracy; again, the best results are obtained with CC > 0.98.

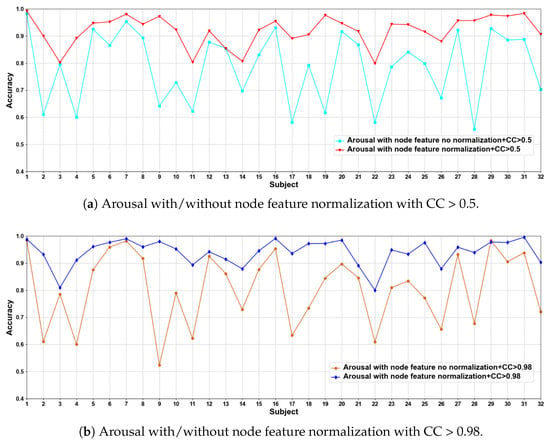

(2) The Different Construction Methods of Adjacency Matrix

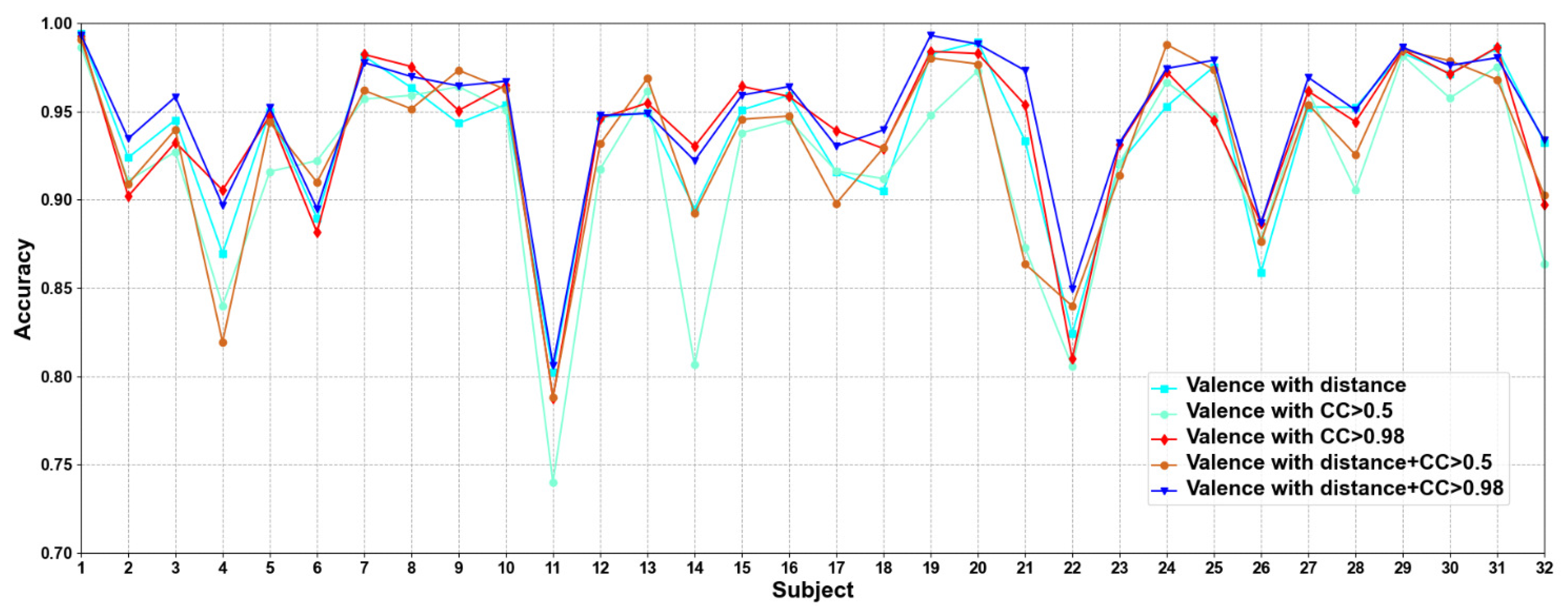

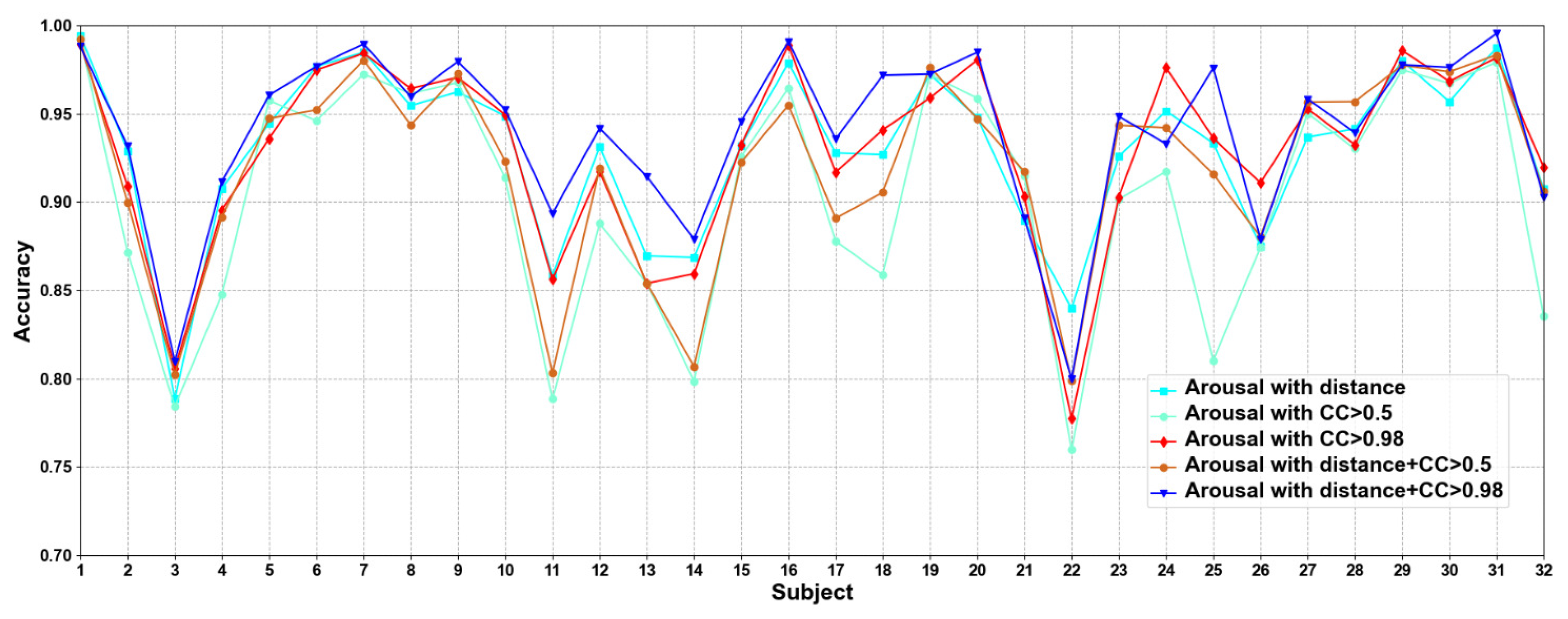

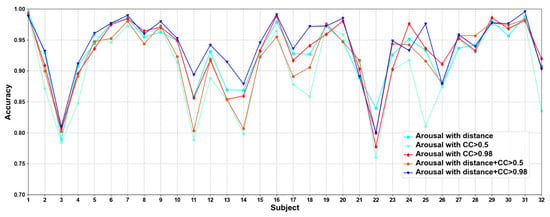

In this part, we compare five ways of constructing the adjacency matrix: the distance-based method, the functional-connectivity-based method with CC > 0.5 and with CC > 0.98, and the fusion of the distance-based and functional-connectivity-based methods with CC > 0.5 and with CC > 0.98. The results are shown in Figure 5 and Figure 6.

Figure 5.

Classification accuracies on each subject on valence under construction of different adjacency matrices.

Figure 6.

Classification accuracies on each subject on arousal under construction of different adjacency matrices.

Although the distance-based method outperforms the functional-connectivity-based method for most subjects, the functional-connectivity-based method performs better for some subjects, for example sub7, sub8, and sub10 on valence and sub8, sub9, and sub10 on arousal. This suggests that an adjacency matrix built only from distance (which tends to capture local relationships), without considering the correlations among channels (which tend to capture global relationships), is insufficient for classification. The adjacency matrix combining distance and functional connectivity achieves the best classification results with CC > 0.98. The average classification accuracies over all subjects are shown in Table 6.

Table 6.

Average classification accuracies on all subjects under construction of different adjacency matrices.

For the functional-connectivity-based methods, accuracies with CC > 0.98 are higher than with CC > 0.5, which suggests that adding too many channel relationships degrades model performance. The distance-based adjacency matrix yields higher average accuracies than the functional-connectivity-based one, consistent with the findings of Zhong et al. [17]. Because a purely distance-based adjacency matrix ignores correlations among channels, we combine distance and functional connectivity so that the adjacency matrix captures both local and global relationships among EEG channels. The fused adjacency matrix achieves the best classification results, which again shows that an appropriately constructed adjacency matrix can effectively improve model performance.

5. Conclusions

We have proposed CR-GCN, a method that exploits multiple relationships among EEG channels. Subject-dependent and subject-independent experiments on DEAP show that CR-GCN achieves better recognition performance than the compared state-of-the-art methods, and ablation experiments show that both the proposed node feature normalization and the proposed adjacency matrix substantially improve performance. We attribute the improved emotion recognition results to the following points.

- The adjacency matrix considers not only the biological topology but also the functional connectivity among EEG channels, so it captures both local and global relationships and describes the relationships among EEG channels more accurately than the distance-only design of ERDL [18];

- The graph representation of CR-GCN provides an effective way to capture interchannel relationships and extract graph-domain features, which benefits emotion recognition.

Although CR-GCN performs well for emotion recognition, existing EEG emotion datasets are still relatively small and their data collection standards are not uniform (e.g., in the choice of stimulus materials, the number of channels, and the recording duration), which may limit further performance improvements. Future work should establish data collection standards and build larger EEG emotion databases for emotion recognition.

Author Contributions

Conceptualization, J.J. and B.Z.; methodology, J.J.; formal analysis, J.J. and H.L. (Hehe Lv); writing—original draft preparation, J.J.; writing—review and editing, J.J., B.Z., H.L. (Hehe Lv), Z.X., S.H. and H.L. (Haiyan Li). All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Program of China, grant number 2017YFC0907505.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The database used in this study is publicly available at websites: DEAP: http://www.eecs.qmul.ac.uk/mmv/datasets/deap/, accessed on 23 June 2022.

Acknowledgments

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cowie, R.; Douglas-Cowie, E.; Tsapatsoulis, N.; Votsis, G.; Kollias, S.; Fellenz, W.; Taylor, J.G. Emotion recognition in human-computer interaction. IEEE Signal Process. Mag. 2001, 18, 32–80.

- Wang, Z.; Tong, Y.; Heng, X. Phase-locking value based graph convolutional neural networks for emotion recognition. IEEE Access 2019, 7, 93711–93722.

- Álvarez-Pato, V.M.; Sánchez, C.N.; Domínguez-Soberanes, J.; Méndoza-Pérez, D.E.; Velázquez, R. A Multisensor Data Fusion Approach for Predicting Consumer Acceptance of Food Products. Foods 2020, 9, 774.

- Álvarez, V.M.; Sánchez, C.N.; Gutiérrez, S.; Domínguez-Soberanes, J.; Velázquez, R. Facial emotion recognition: A comparison of different landmark-based classifiers. In Proceedings of the 2018 International Conference on Research in Intelligent and Computing in Engineering (RICE), San Salvador, El Salvador, 22–24 August 2018; pp. 1–4.

- Guo, J.; Lei, Z.; Wan, J.; Avots, E.; Hajarolasvadi, N.; Knyazev, B.; Anbarjafari, G. Dominant and complementary emotion recognition from still images of faces. IEEE Access 2018, 6, 26391–26403.

- West, M.J.; Copland, D.A.; Arnott, W.L.; Nelson, N.L.; Angwin, A.J. Effects of prosodic and semantic cues on facial emotion recognition in relation to autism-like traits. J. Autism Dev. Disord. 2018, 48, 2611–2618.

- Petrantonakis, P.C.; Hadjileontiadis, L.J. A novel emotion elicitation index using frontal brain asymmetry for enhanced EEG-based emotion recognition. IEEE Trans. Inf. Technol. Biomed. 2011, 15, 737–746.

- Zhou, S.; Huang, D.; Liu, C.; Jiang, D. Objectivity meets subjectivity: A subjective and objective feature fused neural network for emotion recognition. Appl. Soft Comput. 2022, 122, 108889.

- Zhang, Y.; Cheng, C.; Wang, S.; Xia, T. Emotion recognition using heterogeneous convolutional neural networks combined with multimodal factorized bilinear pooling. Biomed. Signal Process. Control. 2022, 77, 103877.

- Alarcao, S.M.; Fonseca, M.J. Emotions recognition using EEG signals: A survey. IEEE Trans. Affect. Comput. 2017, 10, 374–393.

- Guo, J.Y.; Cai, Q.; An, J.P.; Chen, P.Y.; Ma, C.; Wan, J.H.; Gao, Z.K. A Transformer based neural network for emotion recognition and visualizations of crucial EEG channels. Phys. A Stat. Mech. Its Appl. 2022, 603, 127700.

- Acharya, U.R.; Sudarshan, V.K.; Adeli, H.; Santhosh, J.; Koh, J.E.; Adeli, A. Computer-aided diagnosis of depression using EEG signals. Eur. Neurol. 2015, 73, 329–336.

- Zheng, W.; Tang, H.; Huang, T.S.; Konar, A.; Chakraborty, A. Emotion recognition from non-frontal facial images. Pattern Anal. Approach 2014, 1, 183–213.

- Ekman, P.; Keltner, D. Universal facial expressions of emotion. Nonverbal Commun. Where Nat. Meets Cult. 1997, 27, 46.

- Zheng, W.L.; Lu, B.L. Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Auton. Ment. Dev. 2015, 7, 162–175.

- Tripathi, S.; Acharya, S.; Sharma, R.D.; Mittal, S.; Bhattacharya, S. Using deep and convolutional neural networks for accurate emotion classification on DEAP dataset. In Proceedings of the 29th IAAI Conference, San Francisco, CA, USA, 4–9 February 2017.

- Zhong, P.; Wang, D.; Miao, C. EEG-Based Emotion Recognition Using Regularized Graph Neural Networks. IEEE Trans. Affect. Comput. 2020, accepted.

- Yin, Y.; Zheng, X.; Hu, B.; Zhang, Y.; Cui, X. EEG emotion recognition using fusion model of graph convolutional neural networks and LSTM. Appl. Soft Comput. 2021, 100, 106954.

- Kober, H.; Barrett, F.; Joseph, J.; Bliss-Moreau, E.; Lindquist, K.; Wager, T.D. Functional grouping and cortical–subcortical interactions in emotion: A meta-analysis of neuroimaging studies. Neuroimage 2008, 42, 998–1031.

- Kim, M.J.; Loucks, R.A.; Palmer, A.L.; Brown, A.C.; Solomon, K.M.; Marchante, A.N.; Whalen, P.J. The structural and functional connectivity of the amygdala: From normal emotion to pathological anxiety. Behav. Brain Res. 2011, 223, 403–410.

- Musha, T.; Terasaki, Y.; Haque, H.A.; Ivamitsky, G.A. Feature extraction from EEGs associated with emotions. Artif. Life Robot. 1997, 1, 15–19.

- Aftanas, L.I.; Reva, N.V.; Varlamov, A.A.; Pavlov, S.V.; Makhnev, V.P. Analysis of evoked EEG synchronization and desynchronization in conditions of emotional activation in humans: Temporal and topographic characteristics. Neurosci. Behav. Physiol. 2004, 34, 859–867.

- Urigüen, J.A.; Garcia-Zapirain, B. EEG artifact removal—state-of-the-art and guidelines. J. Neural Eng. 2015, 12, 031001.

- Hjorth, B. EEG analysis based on time domain properties. Electroencephalogr. Clin. Neurophysiol. 1970, 29, 306–310.

- Petrantonakis, P.C.; Hadjileontiadis, L.J. Emotion recognition from EEG using higher order crossings. IEEE Trans. Inf. Technol. Biomed. 2009, 14, 186–197.

- Liu, Y.; Sourina, O. Real-Time Fractal-Based Valence Level Recognition from EEG. Trans. Comput. Sci. XVIII 2013, 7848, 101–120.

- Shi, L.; Jiao, Y.; Lu, B. Differential entropy feature for EEG-based vigilance estimation. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 6627–6630.

- Zheng, W.L.; Liu, W.; Lu, Y.; Lu, B.L.; Cichocki, A. EmotionMeter: A multimodal framework for recognizing human emotions. IEEE Trans. Cybern. 2018, 49, 1110–1122.

- Lin, O.; Liu, G.Y.; Yang, J.M.; Du, Y.Z. Neurophysiological markers of identifying regret by 64 channels EEG signal. In Proceedings of the 12th International Computer Conference on Wavelet Active Media Technology and Information Processing (ICCWAMTIP), Chengdu, China, 18–20 December 2015; pp. 395–399.

- Shi, Y.; Zheng, X.; Li, T. Unconscious emotion recognition based on multi-scale sample entropy. In Proceedings of the IEEE International Conference on Bioinformatics and Biomedicine, Madrid, Spain, 3–6 December 2018; pp. 1221–1226.

- Jenke, R.; Peer, A.; Buss, M. Feature extraction and selection for emotion recognition from EEG. IEEE Trans. Affect. Comput. 2014, 5, 327–339.

- Lin, Y.P.; Wang, C.H.; Jung, T.P.; Wu, T.L.; Jeng, S.K.; Duann, J.R.; Chen, J.H. EEG-based emotion recognition in music listening. IEEE Trans. Biomed. Eng. 2010, 57, 1798–1806.

- Sbargoud, F.; Djeha, M.; Guiatni, M.; Ababou, N. WPT-ANN and Belief Theory Based EEG/EMG Data Fusion for Movement Identification. Trait. Signal 2019, 36, 383–391.

- Song, T.; Zheng, W.; Song, P.; Cui, Z. EEG Emotion Recognition Using Dynamical Graph Convolutional Neural Networks. IEEE Trans. Affect. Comput. 2020, 11, 532–541.

- Zheng, F.; Hu, B.; Zhang, S.; Li, Y.; Zheng, X. EEG Emotion Recognition based on Hierarchy Graph Convolution Network. In Proceedings of the IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Houston, TX, USA, 9–12 December 2021; pp. 1628–1632.

- Chao, H.; Dong, L.; Liu, Y.; Lu, B. Emotion recognition from multiband EEG signals using CapsNet. Sensors 2019, 19, 2212.

- Defferrard, M.; Bresson, X.; Vandergheynst, P. Convolutional neural networks on graphs with fast localized spectral filtering. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016.

- Such, F.P.; Sah, S.; Dominguez, M.A.; Pillai, S.; Zhang, C.; Michael, A.; Ptucha, R. Robust spatial filtering with graph convolutional neural networks. IEEE J. Sel. Top. Signal Process. 2017, 11, 884–896.

- Jin, M.; Chen, H.; Li, Z.; Li, J. EEG-based Emotion Recognition Using Graph Convolutional Network with Learnable Electrode Relations. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Virtual Event, 1–5 November 2021; pp. 5953–5957.

- Zheng, X.; Yu, X.; Yin, Y.; Li, T.; Yan, X. Three-dimensional feature maps and convolutional neural network-based emotion recognition. Int. J. Intell. Syst. 2021, 36, 6312–6336.

- Ou, Y.; Xue, Y.; Yuan, Y.; Xu, T.; Pisztora, V.; Li, J.; Huang, X. Semi-supervised cervical dysplasia classification with learnable graph convolutional network. In Proceedings of the 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI), Iowa City, IA, USA, 4–7 April 2020; pp. 1720–1724.

- De Munck, J.; Vijn, P.; Lopes da Silva, F. A random dipole model for spontaneous brain activity. IEEE Trans. Biomed. Eng. 1992, 39, 791–804.

- Salvador, R.; Suckling, J.; Coleman, M.R.; Pickard, J.D.; Menon, D.; Bullmore, E.D. Neurophysiological architecture of functional magnetic resonance images of human brain. Cereb. Cortex 2005, 15, 1332–1342.

- Achard, S.; Bullmore, E. Efficiency and cost of economical brain functional networks. PLoS Comput. Biol. 2007, 3, e17.

- Jang, S.; Moon, S.E.; Lee, J.S. EEG-based video identification using graph signal modeling and graph convolutional neural network. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Calgary, AB, Canada, 15–20 April 2018; pp. 3066–3070.

- Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A database for emotion analysis using physiological signals. IEEE Trans. Affect. Comput. 2011, 3, 18–31.

- Yang, Y.; Wu, Q.; Qiu, M.; Wang, Y.; Chen, X. Emotion recognition from multi-channel EEG through parallel convolutional recurrent neural network. In Proceedings of the International Joint Conference on Neural Networks, Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–7.

- Chen, J.X.; Zhang, P.W.; Mao, Z.J.; Huang, Y.F.; Jiang, D.M.; Zhang, Y.N. Accurate EEG-based emotion recognition on combined features using deep convolutional neural networks. IEEE Access 2019, 7, 44317–44328.

- Ma, J.; Tang, H.; Zheng, W.L.; Lu, B.L. Emotion Recognition using Multimodal Residual LSTM Network. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 176–183.

- Qiu, J.L.; Li, X.Y.; Hu, K. Correlated attention networks for multimodal emotion recognition. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 2656–2660.

- Xing, X.; Li, Z.; Xu, T.; Shu, L.; Hu, B.; Xu, X. SAE+LSTM: A New Framework for Emotion Recognition From Multi-Channel EEG. Front. Neurorobot. 2019, 13, 37.

- Deng, X.; Zhu, J.; Yang, S. SFE-Net: EEG-based Emotion Recognition with Symmetrical Spatial Feature Extraction. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 2391–2400.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).