Abstract

In recent years, evidence has accumulated that individuals with dyslexia show alterations in the anatomy and function of the auditory cortex. Dyslexia is considered a learning disability that affects the development of musical and language capacities. We tested adolescents and young adults with dyslexia and controls (N = 52) for neurophysiological differences by investigating the auditory evoked P1–N1–P2 complex. In addition, we assessed their Mandarin ability, singing ability, musical aptitude, and individual differences in elementary auditory skills. A discriminant analysis of magnetoencephalography (MEG) data revealed that individuals with dyslexia showed prolonged P1, N1, and P2 latencies. A correlational analysis between MEG and behavioral variables revealed that Mandarin syllable tone recognition, singing ability, and musical aptitude (AMMA) correlated with P1, N1, and P2 latencies, respectively, while Mandarin pronunciation was associated only with N1 latency. The main findings indicate that the earlier the P1, N1, and P2 latencies, the better the singing, the musical aptitude, and the ability to link Mandarin syllable tones to their corresponding syllables. We suggest that this study provides additional evidence that dyslexia can be understood as an auditory and sensory processing deficit.

1. Introduction

Dyslexia is one of the most common neurodevelopmental disorders in children and adolescents, with a worldwide prevalence of 5–10% [1,2]. Across languages, individuals with dyslexia have learning impairments that lead to lower language and music performance. Regarding language, dyslexia is often associated with weaker phonological, spelling, decoding, and word-recognition skills [3,4,5]. Dyslexics also show several deficits in music [3,6,7,8]: impairments have been found in the discrimination of both elementary and complex sounds [8], as well as in musical performance [7]. Compared to controls, individuals with dyslexia performed worse when asked to sing or play musical instruments [7]. There is growing evidence that the lower musical perceptual ability and the lower musical and language performance of dyslexics are associated with altered anatomy and function of the auditory cortex [3,8,9]. Therefore, particular attention has been paid to the auditory evoked P1–N1–P2 complex as a means of studying individual differences in auditory processing [3,10,11,12,13,14,15].

While dyslexics show deficits in music and language ability, musicians and individuals with high musical talent are at the other end of the spectrum. Musical training enhances auditory perceptual accuracy [16,17,18,19,20,21], which in turn is important for language development and capacity [21,22,23,24]. Studies on positive music-to-language transfer can be roughly divided into two groups: studies that compare perceptual musical measures with language perception and performance tasks [25,26], and studies that compare music performance measures (e.g., instrument playing and singing) or music-training measures with language capacities including, among others, pronunciation [27,28,29,30,31] and reading [32]. Interestingly, accurate perception does not always predict accurate reproduction: individuals who perform well in perceiving foreign languages do not necessarily pronounce these utterances accurately [33]. The literature on the relationship between singing and pitch perception [34] is contradictory, with some studies reporting a relationship and others suggesting no link.

Speech in unfamiliar languages is perceived more precisely by musicians and by individuals with higher musical ability [28,35,36]. Several hypotheses focusing on perceptual parameters have been put forward to explain why musical training improves language functions [37]. Patel’s OPERA hypothesis, for instance, proposes that musical training imposes five conditions that lead to adaptive plasticity in networks relevant for speech processing [37]: overlap, precision, emotion, repetition, and attention. Two of them, overlap and precision, are important for the acquisition of tone languages. Pitch discrimination ability in speech improves through musical training [38], which is why overlaps between music and language are particularly salient in Mandarin, a tone language in which tones carry semantic information. The acquisition of the so-called tone syllables therefore requires precise tonal ability. Mandarin has four tones, generally referred to as ‘high level tone’, ‘(high) rising tone’, ‘falling-rising tone’, and ‘falling tone’ [39]. Native Mandarin speakers are sensitive to tonal information in language, which may explain why tone language speakers, compared to non-tone language speakers, possess enhanced pitch memory, higher tonal skills, and improved pitch processing ability [40]. This is in line with findings that musical training facilitates lexical tone identification [41] and the learning of Mandarin in general [42].

While there is a large body of literature on music and language functions, studies on music production tasks such as singing and their relationship to language functions are limited. Singing studies that focus on positive transfer from singing to language fall into two main branches: studies using singing as a tool to learn new vocabulary [43,44], and studies relating singing ability to language ability. The latter used phonetic aptitude measures in which utterances of unfamiliar foreign languages had to be pronounced [36,45,46] or foreign accents had to be imitated [31] (the so-called delayed mimicry paradigm [47]). These studies provide growing evidence that singing ability is particularly associated with the ability to mimic foreign accents and to imitate and pronounce foreign words and longer phrases [31,45,46,48,49]. In general, musical training is associated with improved processing of acoustic input. Both singing ability and language pronunciation rely on vocal-motor skills and on the integration of sensory and vocal-tract-related motor representations [27,28,35,45]. This has also been suggested as a possible reason why singing ability is more strongly associated with language pronunciation than perceptual musical measures are [28,35]. Moreover, singing can be defined as a musical production task, and the same holds when individuals are instructed to learn to pronounce new words in an unknown language. In addition, pronunciation tasks provide information about individual differences in phonological ability [31,36,45,46].

Another well-studied language component is phonemic coding ability. It describes the process in which sounds need to be retained and linked to a phonetic symbol. The ability to connect sounds and symbols is the fundamental basis for reading and writing processes. Research has shown that individuals diagnosed with dyslexia have particular difficulty with reading, spelling, and writing [4,50]. The Precise Auditory Timing Hypothesis (PATH) suggests that auditory musical training not only improves musical performance but also phonological awareness and phonemic coding ability [51]. Indeed, research has shown that musical training improves reading ability in first and foreign languages [32], and, in addition, it has been associated with phonemic awareness [52].

So far, our research has focused mainly on music-related aspects, including differences in musical ability and in the auditory cortex between dyslexics and non-affected controls. As music and language share a large set of characteristics, our goal was to uncover distinct mechanisms for language measures in dyslexics and controls [8,9]. We therefore used our previously developed tone-language measures in Mandarin, in which the ‘high level tone’, the ‘(high) rising tone’, the ‘falling-rising tone’, and the ‘falling tone’ were embedded in Mandarin discrimination and Mandarin sound-syllable tone recognition tasks, and we also employed the Mandarin pronunciation tasks of a former study [53]. In addition, we adopted a previous research design in which music perception and music performance (singing) tasks were assessed. Our aim was to determine whether we would detect relationships between tone language performance and the auditory evoked responses similar to those we found in previous studies focusing on musical capacities.

2. Materials and Methods

2.1. Participants

Fifty-two participants were included in this study, twenty-six in each of the two groups (dyslexia and controls). Further information about the participants is provided in the Supplementary Materials (see Table S1). All participants were tested for their ability to discriminate and detect tonal changes in paired Mandarin statements. We also assessed their ability to pronounce Mandarin and used measures of individual differences in elementary auditory skills, musical aptitude, and singing ability. None of the participants reported being able to speak or comprehend Mandarin, and all were native German speakers. The participants were part of the larger combined cross-sectional and longitudinal research project “AMseL” (Audio- and Neuroplasticity of Musical Learning), which focused mainly on the impact of musical practice on cognition and the brain. The AMseL project was conducted at the University of Heidelberg (2009–2020) and funded by the German Federal Ministry of Education and Research (BMBF) and the German Research Foundation (DFG).

Dyslexics were diagnosed according to the Pediatric Neurology standards of the University Hospital Heidelberg, using the Ein Leseverständnistest für Erst- bis Sechstklässler (ELFE 1-6) [54] to assess spelling skills and the Hamburger Schreib-Probe (H-LAD) to assess phoneme discrimination [55]. All participants had normal hearing (defined as ≤20 dB HL pure-tone thresholds from 250 to 8000 Hz). Participants reported no comorbidities or history of neurological disorders.

2.2. Musical Background

The musical background of each participant was assessed with a questionnaire on musical experience and by calculating an index of cumulative musical practice (IMP), defined as the product of the number of years of formal music education and the number of hours per week spent practicing a musical instrument [3,8,56]. The control group (M = 9.67, SE = 1.20) showed a tendency toward more musical experience than the dyslexic group (M = 7.30, SE = 1.55), but the difference was not statistically significant (t(50) = 1.21, p = 0.23; r = 0.17).
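The IMP and the group comparison above can be recomputed from the reported summary statistics. The following Python sketch is illustrative only: the function names are hypothetical, and it assumes that the t-test compared two groups of equal size (n = 26), in which case the pooled-variance t equals the Welch t and the standard error of the difference is the square root of the summed squared group SEs. The effect size uses the common conversion r = √(t² / (t² + df)).

```python
import math


def index_of_musical_practice(years_of_training: float, hours_per_week: float) -> float:
    """IMP: years of formal music education multiplied by weekly practice hours."""
    return years_of_training * hours_per_week


def t_from_summary(mean_a: float, se_a: float, mean_b: float, se_b: float) -> float:
    """Independent-samples t from group means and standard errors.

    With equal group sizes, the pooled-variance t equals the Welch t, and both
    reduce to the mean difference divided by sqrt(se_a**2 + se_b**2).
    """
    return (mean_b - mean_a) / math.sqrt(se_a**2 + se_b**2)


def r_from_t(t: float, df: int) -> float:
    """Effect size r = sqrt(t^2 / (t^2 + df))."""
    return math.sqrt(t**2 / (t**2 + df))


# Hypothetical participant: 6 years of lessons, 3 practice hours per week.
print(index_of_musical_practice(6, 3))  # 18

# Summary statistics reported above (dyslexia vs. controls, n = 26 per group).
t = t_from_summary(7.30, 1.55, 9.67, 1.20)
print(round(t, 2), round(r_from_t(t, df=50), 2))  # 1.21 0.17
```

Under these assumptions, the sketch reproduces the reported t(50) = 1.21 and r = 0.17.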

2.3. Measuring Mandarin Ability

Three Mandarin measures, comprising tone discrimination, syllable tone recognition, and pronunciation tasks, were developed to assess individual differences in Mandarin ability. Our Mandarin measures included all four syllable tones: the ‘high level tone’ (5-5), the ‘(high) rising tone’ (3-5), the ‘falling-rising tone’ (2-1-4), and the ‘falling tone’ (5-1) [39]. However, we did not analyze the tones separately; instead, we used the same scores as in a previous study [53].
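The Chao tone numerals in parentheses encode each tone’s pitch on a five-level scale, from 1 (lowest) to 5 (highest) within the speaker’s range. The Python sketch below is not part of the study materials, and its function name is hypothetical. It shows how the conventional tone labels follow from these numerals by tracking the direction of each pitch movement.

```python
def contour_label(chao: str) -> str:
    """Describe the pitch movement of a tone written in Chao numerals, e.g. '2-1-4'."""
    levels = [int(part) for part in chao.split("-")]  # 1 = lowest, 5 = highest pitch
    moves: list[str] = []
    for start, end in zip(levels, levels[1:]):
        if end == start:
            continue  # no pitch movement between these two points
        move = "rising" if end > start else "falling"
        if not moves or moves[-1] != move:  # merge consecutive moves in one direction
            moves.append(move)
    return "-".join(moves) if moves else "level"


for tone in ["5-5", "3-5", "2-1-4", "5-1"]:
    print(tone, contour_label(tone))
# 5-5 level
# 3-5 rising
# 2-1-4 falling-rising
# 5-1 falling
```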

2.3.1. Tone Discrimination Task (Mandarin D)

The tone discrimination task consisted of 18 paired samples. The pairs were either identical or contained a single tonal change in one syllable of the second statement (e.g., bùzhì versus bùzhī). The 18 samples comprised between two and eleven syllables. The two statements of each pair were separated by a 1 s pause. After listening to both statements, participants had to indicate whether they were the same or different. Before the Mandarin D measure, participants completed a practice unit of 4 items (repetitions permitted). After a short break of about five minutes, they took the test in a single run.

2.3.2. Syllable Tone Recognition Task (Mandarin S)

Individual differences in phonetic coding ability can be assessed with well-known language measures. For instance, the Phonetic Script subtest of the Modern Language Aptitude Test (MLAT) by Carroll and Sapon [57], or the more recently developed LLAMA E sound-symbol correspondence task by Meara [58], would be appropriate tools to evaluate individual differences in the ability to link sounds to symbols. However, the MLAT uses English as the target language, and LLAMA is based on a British Columbian Indian language. To the best of our knowledge, there is no Mandarin sound-symbol correspondence task based on tone syllable recognition. We therefore developed a similar sound-symbol correspondence task in which we additionally embedded a tonal change in a tone syllable.

In the syllable tone recognition task, participants listened to paired samples and had to decide in which syllable of the second statement the tonal change occurred. The task consisted of 16 paired samples. The syllables were presented visually, with each syllable shown as a separate unit. Because the paired statements differed in only one tone, their wording and syllable structure were identical, and the syllables were therefore displayed without tone diacritics. The word and phrase length of the samples varied between two and seven syllables. After completing a practice unit (repetitions permitted), participants took a five-minute break before performing the task in a single session.

2.3.3. Mandarin Pronunciation Task (Mandarin P)

The language production task consisted of nine Mandarin phrases (spoken by native speakers) of seven, nine, and eleven syllables. Participants were instructed to repeat each phrase after hearing it three times. We used Mandarin samples that had been developed for assessing individual differences in Mandarin pronunciation in previous studies [36,45,46,53]. The participants’ recordings were loudness-normalized and rated by native Mandarin speakers, who were instructed to evaluate how well participants globally imitated the Mandarin samples on a scale from zero to ten. Interrater reliability was assessed with intraclass correlation coefficients (see Supplementary Table S2).
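The exact intraclass correlation model is not specified here. As a sketch, assume a two-way random-effects model with absolute agreement: Shrout and Fleiss’s ICC(2,1) for a single rater and ICC(2,k) for the mean of k raters. Under that assumption, both coefficients can be computed from a recordings × raters matrix as follows. The function name is hypothetical, and the example data are the 6 × 4 illustration matrix from Shrout and Fleiss (1979), not ratings from this study.

```python
def icc_two_way_random(ratings: list[list[float]]) -> tuple[float, float]:
    """ICC(2,1) and ICC(2,k): two-way random effects, absolute agreement.

    `ratings` has one row per rated recording and one column per rater.
    """
    n = len(ratings)     # number of rated recordings
    k = len(ratings[0])  # number of raters
    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA decomposition of the total sum of squares.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    single = (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
    mean_of_raters = (ms_rows - ms_error) / (ms_rows + (ms_cols - ms_error) / n)
    return single, mean_of_raters


shrout_fleiss = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
single_rater, mean_rating = icc_two_way_random(shrout_fleiss)
print(round(single_rater, 2), round(mean_rating, 2))  # 0.29 0.62
```

Other ICC forms, such as consistency instead of absolute agreement, would give different values. The choice should match the model actually reported in the supplementary tables.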

2.4. Gordon’s Musical Ability Test

The Advanced Measures of Musical Audiation (AMMA test) by Gordon [59] was used to assess the musical ability of the participants. It consists of rhythmic and tonal discrimination tasks that assess the ability to internalize musical structures. The paired musical statements are embedded in a single test construction. Participants must indicate whether the paired statements are the same or different; if they are different, participants additionally have to indicate whether the difference was tonal or rhythmic. The test consists of 33 items, including 3 initial familiarization items. The combined score across subtests is referred to as AMMA total in this study.

2.5. Tone Frequency and Duration Test

To assess individual differences in basic sound discrimination, we used two subtests of the primary auditory threshold measure KLAWA (Klangwahrnehmung): tone frequency and duration. The tone frequency subtest measures the ability to discriminate between high and low tones, and the duration subtest measures the ability to distinguish between short and long tones. KLAWA is an in-house computer-based threshold measure that determines difference limens for tone frequency (“low vs. high”) and duration (“short vs. long”). Based on an alternative forced-choice procedure [60], these measurements yield individual perceptual thresholds (in cents for pitch, where 1 cent = 1/100 semitone, and in milliseconds (ms) for duration). In each trial, a reference tone and a test tone (sinusoids), separated by an inter-stimulus interval of 500 ms, were presented. Participants had to decide which tone sounded “higher” or “lower” in the tone frequency subtest, and “shorter” or “longer” in the duration subtest. If the answer was incorrect, the difference between the paired tones was increased; if it was correct, the difference was decreased. This adaptive procedure allows individual threshold values to be calculated from the convergence behavior.
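The adaptive rule described above can be illustrated with a simple 1-up/1-down staircase. KLAWA is an in-house tool, and its starting difference, step size, stopping rule, and threshold estimator are not described here. The sketch therefore assumes a multiplicative step factor of 2, termination after eight reversals, and the mean of the reversal points as the threshold. The simulated listener, who answers correctly whenever the difference is at least 10 cents, is hypothetical, as are the function names. The `cents` helper shows the pitch unit in which the tone frequency thresholds are expressed.

```python
import math
from statistics import mean
from typing import Callable


def cents(f_reference: float, f_test: float) -> float:
    """Frequency difference in cents (100 cents = 1 semitone, 1200 cents = 1 octave)."""
    return 1200 * math.log2(f_test / f_reference)


def one_up_one_down(
    respond: Callable[[float], bool],
    start: float,
    factor: float = 2.0,
    n_reversals: int = 8,
    max_trials: int = 200,
) -> float:
    """Shrink the difference after a correct answer, enlarge it after an error,
    and return the mean of the differences at which the direction reversed."""
    delta = start
    previous_step = None
    reversals: list[float] = []
    for _ in range(max_trials):
        correct = respond(delta)
        step = "down" if correct else "up"
        if previous_step is not None and step != previous_step:
            reversals.append(delta)  # direction changed: record a reversal
            if len(reversals) == n_reversals:
                break
        previous_step = step
        delta = delta / factor if correct else delta * factor
    if not reversals:
        raise ValueError("staircase did not reverse; increase max_trials")
    return mean(reversals)


# Hypothetical listener who is correct whenever the difference is >= 10 cents.
threshold = one_up_one_down(lambda delta: delta >= 10.0, start=100.0)
print(round(cents(1000.0, 1001.0), 2), threshold)  # 1.73 9.375
```

The estimate (9.375 cents) lies between the two levels the track oscillates around (6.25 and 12.5 cents). A smaller step factor would narrow this bracket at the cost of more trials.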

2.6. Singing Ability and Singing Behavior during Childhood

To assess individual differences in singing ability, we employed a song-singing task that had been used in previous studies [27,35,45,46,48]. Such tasks are generally tailored to laypersons rather than professional musicians [46,61,62]. Participants were asked to sing Happy Birthday as well as they could, in a key that suited their own singing voice.

Performances were rated by singing experts (two male and two female raters). Raters scored four aspects (melody, vocal range, voice quality, and rhythm), which were collapsed into a single score (singing total). Intraclass correlation coefficients were calculated to assess interrater reliability; the ratings were found to be reliable (see Supplementary Table S3).

In addition, multi-item scales were used to assess participants’ singing behavior during childhood and adolescence. Participants also reported the average number of hours per week they spent singing. Additional instructions ensured that all participants referred to the same time periods: childhood was defined as the age span up to 11 years, and adolescence as the period between 12 and 18 years. The singing voice is known to reach a range of approximately two octaves by the age of 10 [63], which is similar to that of adults without vocal training [36]. Each scale consists of eight questions and has been used previously [36,45]. The internal consistencies of the scales were found to be reliable. The questions and reliability analyses are provided in the Supplementary Materials (see Tables S4 and S5).
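The coefficient used to quantify internal consistency is not named in the text. Cronbach’s alpha is the most common choice for multi-item questionnaire scales; assuming it was used here, it can be computed from item-level responses as α = k/(k − 1) · (1 − Σ item variances / variance of the scale totals). The sketch below uses a hypothetical function name and invented data.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; `items` holds one list of respondents' scores per item."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's scale score
    item_variance_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical two-item scale answered by four respondents.
print(round(cronbach_alpha([[2, 4, 6, 8], [1, 3, 5, 9]]), 3))  # 0.972
```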

2.7. Neurophysiological Measurement: Magnetoencephalography (MEG)

The Neuromag-122 whole-head MEG system was used to measure brain responses to seven sampled instrumental tones (piano, guitar, flute, bass clarinet, trumpet, violin, and drums) and four artificial simple harmonic complex tones. The measurement session lasted 15 min. The procedure was equivalent to that of previous studies [3,7,8,12,58,64]. The measured auditory evoked fields (AEFs) include the primary auditory P1 response, occurring about 30–80 ms after tone onset, and the subsequent secondary auditory N1 and P2 responses, occurring about 90–250 ms after tone onset. Regarding the temporal dynamics of auditory processing, research has identified the first positive auditory evoked response complex (P1) as one of the most important predictors of individual differences in elementary sound perception [3,8]. The subsequent N1 component is proposed to reflect (pre-)attentional processes, sensory stimulus processing [11], and learning-induced plasticity [12], whereas the P2 response is postulated to be a marker of complex auditory tasks and sensory integration [13] and is regarded as a biological marker of learning [14]. The primary P1 component and the N1 component are thought to be modulated by musical training [3,10], whereas the P2 response is thought to be enhanced after persistent training over longer periods [15].

The AEFs were recorded with a bandpass filter of 0.00 (DC)–330 Hz and a sampling rate of 1000 Hz. All 11 tones (instrumental and artificial simple harmonic complex tones) were presented in pseudorandomized order (tone length 500 ms, inter-stimulus interval 300–400 ms), and each was repeated about 100 times. These repetitions ensure an optimal signal-to-noise ratio, a prerequisite for robust source modeling that enables analyses of the time course, latencies, and amplitudes of the AEFs. Before the recording, the locations of the four head position coils and a set of 35 surface points, including the nasion and two pre-auricular points, were determined to identify the participants’ head positions inside the dewar helmet. Participants were instructed to listen passively to the sounds in a relaxed state to reduce possible motion artifacts. Foam earpieces (Etymotic ER3) were connected via 90 cm plastic tubes (diameter 3 mm) to small, shielded transducers fixed in boxes next to the subject’s chair. Stimuli were presented binaurally at 70 dB SPL, as determined with a Brüel and Kjaer artificial ear (type 4152). BESA Research 6.0 software (MEGIS Software GmbH, Graefelfing, Germany) was used for data analysis. Before averaging, external artifacts were excluded with the BESA Research event-related fields (ERF) module. On average, 3–7 noisy (bad) channels were removed, and around 10% of all epochs were rejected from further analysis because they exceeded a gradient of 600 fT/cm × s or had amplitudes either exceeding 3000 fT/cm or falling below 100 fT/cm. This automatic artifact scan removes a substantial part of endogenous artifacts (e.g., eye blinks, eye movements, cardiac activity, face movements, and muscle tension).
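The three rejection criteria can be sketched as a threshold check per epoch. This is not BESA’s implementation. The sketch assumes that the gradient criterion refers to the absolute difference between neighboring samples and that the low-signal criterion refers to a channel’s peak-to-peak range. Bad channels are assumed to have been removed beforehand, as described above. The function names and toy single-channel epochs are hypothetical.

```python
Epoch = list[list[float]]  # channels x samples, amplitudes in fT/cm


def keep_epoch(
    epoch: Epoch,
    max_amplitude: float = 3000.0,
    max_gradient: float = 600.0,
    min_range: float = 100.0,
) -> bool:
    """Return False if any channel violates one of the three rejection criteria."""
    for channel in epoch:
        if max(abs(x) for x in channel) > max_amplitude:
            return False  # amplitude criterion
        if max(abs(b - a) for a, b in zip(channel, channel[1:])) > max_gradient:
            return False  # gradient criterion: jump between neighboring samples
        if max(channel) - min(channel) < min_range:
            return False  # low-signal criterion: channel is (almost) flat
    return True


def reject_artifacts(epochs: list[Epoch]) -> list[Epoch]:
    """Keep only the epochs that pass all criteria."""
    return [epoch for epoch in epochs if keep_epoch(epoch)]


clean = [[0, 200, 400, 200, 0, -200]]
too_large = [[0, 500, 1000, 1500, 2000, 2500, 3100]]
jump = [[0, 700, 0, 300]]
flat = [[0, 20, 40, 20]]
print(len(reject_artifacts([clean, too_large, jump, flat])))  # 1
```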
The baseline amplitude, calculated over the 100 ms interval before tone onset, was subtracted from the signals. The AEFs of each participant were averaged (approximately 1000 artifact-free epochs after rejection of about 10% artifact-afflicted or noisy epochs) over a time window from 100 ms pre-stimulus to 400 ms post-stimulus. Spatio-temporal source modeling was performed with a spherical head model [65,66] to separate the primary response from the subsequent secondary response complex, using a two-dipole model with one equivalent dipole in each hemisphere [10,64]. The P1 wave is a composite response complex comprising separate peaks, including primary and secondary auditory activity, and it shows high inter-individual variability in shape, number of sub-peaks, and timing of peak latencies. Therefore, the fitting interval was adjusted from the peak onset either to the saddle point in the case of a two-peak complex or to the main peak latency in the case of a merged single P1 peak. The P1 response complex matures with development: its onset occurs 30–70 ms after tone onset in adults [64], 40–90 ms in adolescents, and 60–110 ms in primary school children [3,8,11,67]. The secondary N1 response typically starts to develop between 8 and 10 years of age [3] and can clearly be separated from the preceding P1 response complex. In a first step, the primary source activity was modeled with one regional source in each hemisphere using the predefined fitting intervals. In a second step, the locations of the fitted regional sources were kept fixed, and the dipole orientation was fitted toward the highest global field power while keeping its main orientation toward the vertex. Independent of the exact source location in the auditory cortex (AC), the high temporal accuracy of the P1 peak latency was maintained [12].
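The baseline correction, averaging, and peak-latency steps can be sketched for a single source waveform. The sketch does not reproduce BESA’s dipole fitting; it assumes that source waveforms are already available. It uses the 1000 Hz sampling rate (one sample per millisecond) and the −100 to 400 ms epoch described above. The search windows (30–90 ms for P1, 90–200 ms for N1) are hypothetical and were chosen with the latency ranges given in the text in mind. The synthetic P1 and N1 deflections are invented for illustration.

```python
import math

PRE_MS = 100  # epochs start 100 ms before tone onset; at 1000 Hz, 1 sample = 1 ms


def baseline_correct(wave: list[float], pre_samples: int = PRE_MS) -> list[float]:
    """Subtract the mean of the pre-stimulus interval."""
    baseline = sum(wave[:pre_samples]) / pre_samples
    return [x - baseline for x in wave]


def average_epochs(epochs: list[list[float]]) -> list[float]:
    """Sample-by-sample mean across epochs."""
    return [sum(samples) / len(samples) for samples in zip(*epochs)]


def peak_latency(wave: list[float], start_ms: int, end_ms: int, polarity: int = 1) -> int:
    """Latency (ms after onset) of the largest deflection of the given polarity
    within [start_ms, end_ms]; use polarity=-1 for negative peaks such as N1."""
    window = range(start_ms + PRE_MS, end_ms + PRE_MS + 1)
    best = max(window, key=lambda i: polarity * wave[i])
    return best - PRE_MS


times = range(-PRE_MS, 401)  # -100 ... 400 ms


def synthetic_epoch(offset: float) -> list[float]:
    # Invented waveform: positive "P1" peak at 70 ms, negative "N1" peak at 120 ms.
    return [
        offset + math.exp(-(((t - 70) / 10) ** 2)) - math.exp(-(((t - 120) / 15) ** 2))
        for t in times
    ]


evoked = baseline_correct(average_epochs([synthetic_epoch(5.0), synthetic_epoch(-3.0)]))
print(peak_latency(evoked, 30, 90), peak_latency(evoked, 90, 200, polarity=-1))  # 70 120
```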
The following variables were considered: the right and left P1, N1, and P2 peak latencies, and the composite scores for the P1, N1, and P2 latencies (P1 latency right and left (mean), N1 latency right and left (mean), and P2 latency right and left (mean), where “mean” indicates the average across hemispheres). In addition, indirect measures of functional lateralization were considered: the absolute P1 latency asynchrony |R-L| [P1(Peak)(|right − left|)], the absolute N1 latency asynchrony |R-L| [N1(Peak)(|right − left|)], and the absolute P2 latency asynchrony |R-L| [P2(Peak)(|right − left|)].
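The composite and asynchrony scores are simple functions of the right- and left-hemisphere peak latencies. A minimal sketch follows; the function name and latencies are hypothetical.

```python
def latency_composites(right_ms: float, left_ms: float) -> dict[str, float]:
    """Hemispheric mean latency and absolute right-left asynchrony."""
    return {
        "mean": (right_ms + left_ms) / 2,       # "right and left (mean)"
        "asynchrony": abs(right_ms - left_ms),  # absolute |R-L| asynchrony
    }


print(latency_composites(right_ms=72.0, left_ms=69.0))  # {'mean': 70.5, 'asynchrony': 3.0}
```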

2.8. Statistical Analysis

The statistical analyses were performed with IBM SPSS Statistics, Version 27.0. To contrast the groups, we ran independent t-tests on the MEG and the behavioral variables. As a follow-up, we performed two discriminant analyses, because this procedure also takes the relationships between variables into account: one for the MEG variables and one for the behavioral variables. The aim was to identify which MEG and behavioral variables differentiate the groups best. Finally, in a third step, we computed correlations between the MEG and behavioral variables that best separated the groups in order to show their relationships.

3. Results

3.1. MEG Variables

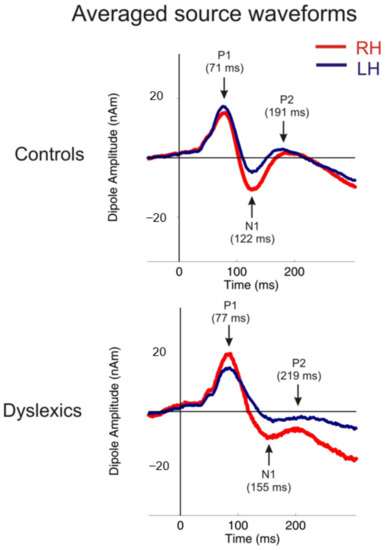

This section reports the results for the MEG variables: first the averaged source waveforms for dyslexics and controls (Figure 1), then the independent t-tests and the discriminant analysis. Individuals with dyslexia showed prolonged latencies in the composite (right and left mean) P1, N1, and P2 scores (see Table 1 and Table 2).

Figure 1.

Averaged source waveforms for the dyslexics and controls. The figure shows the averaged source waveforms of the P1–N1–P2 complex in response to various sounds for the right (red) and the left (blue) hemisphere. The controls show a well-balanced hemispheric response pattern, whereas individuals with dyslexia show prolonged response patterns across the entire P1–N1–P2 complex.

3.1.1. Independent t-Tests: MEG

Table 1 shows the independent t-tests and the descriptive statistics of the MEG variables for the controls and the individuals diagnosed with dyslexia. To avoid accumulation of the alpha error due to multiple testing, we applied a Benjamini–Hochberg correction. The MEG variables are described in Section 2.7.
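The Benjamini–Hochberg step-up procedure controls the false discovery rate rather than the family-wise error rate. The sketch below returns BH-adjusted p-values, and a test counts as significant when its adjusted p-value is at or below the chosen level q (here 0.05). The function name and p-values are hypothetical.

```python
def benjamini_hochberg(p_values: list[float]) -> list[float]:
    """Benjamini-Hochberg adjusted p-values (step-up), in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):  # from the largest p-value down to the smallest
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


adjusted = benjamini_hochberg([0.01, 0.04, 0.03, 0.005])  # hypothetical p-values
print([round(p, 3) for p in adjusted])  # [0.02, 0.04, 0.04, 0.02]
print([p <= 0.05 for p in adjusted])    # [True, True, True, True]
```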

Table 1.

The independent t-tests and the descriptives of the MEG variables.


| Variables | Controls: Mean ± SE (ms) | Controls: Min–Max (ms) | Dyslexia: Mean ± SE (ms) | Dyslexia: Min–Max (ms) | p | r |
|---|---|---|---|---|---|---|
| P1 latency right and left (mean) + | 70.64 ± 1.94 | 56.50–97.50 | 77.71 ± 2.75 | 59.50–101.00 | < 0.041 | 0.29 |
| absolute P1 latency asynchrony \|R-L\| | 3.89 ± 0.75 | 0.00–13.00 | 8.65 ± 2.66 | 1.00–70.00 | < 0.091 | 0.24 |
| N1 latency right and left (mean) + | 121.52 ± 2.38 | 104.00–163.00 | 154.58 ± 9.18 | 106.50–236.50 | < 0.001 | 0.44 |
| absolute N1 latency asynchrony \|R-L\| | 9.62 ± 2.53 | 0.00–52.00 | 16.85 ± 6.16 | 4.00–141.00 | < 0.283 | 0.15 |
| P2 latency right and left (mean) + | 191.15 ± 6.29 | 144.00–251.50 | 219.50 ± 12.31 | 144.50–357.00 | < 0.046 | 0.28 |
| absolute P2 latency asynchrony \|R-L\| | 11.81 ± 2.37 | 0.20–28.80 | 21.27 ± 4.56 | 0.10–31.20 | < 0.071 | 0.25 |

+ indicates that the t-test remains significant after Benjamini–Hochberg correction for multiple testing (p < 0.05).

3.1.2. Discriminant Analysis: MEG

The discriminant function revealed that the P1, N1, and P2 latencies separated the dyslexics from the control group best, Λ = 0.69, χ²(6) = 17.42, p < 0.008, canonical R² = 0.31. The correlations between the MEG variables and the discriminant function showed that three variables loaded above the statistically acceptable cutoff value of 0.40 [68]: N1 latency right and left (r = 0.74), P1 latency right and left (r = 0.44), and P2 latency right and left (r = 0.43). Table 2 shows the structure matrix of the MEG variables, that is, the loadings of the MEG predictors on the discriminant function.
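The reported chi-square value is consistent with Bartlett’s approximation for testing Wilks’ Λ: χ² = −(N − 1 − (p + g)/2) · ln Λ, with p(g − 1) degrees of freedom. Here N is the number of participants, p the number of predictors, and g the number of groups. With a single discriminant function (two groups), the canonical R² equals 1 − Λ. The sketch below uses a hypothetical function name and reproduces the reported values up to the rounding of Λ to two decimals (17.44 vs. 17.42).

```python
import math


def bartlett_chi_square(wilks_lambda: float, n: int, p: int, g: int) -> tuple[float, int]:
    """Bartlett's chi-square approximation for Wilks' lambda.

    n: number of cases, p: number of predictors, g: number of groups.
    """
    chi2 = -(n - 1 - (p + g) / 2) * math.log(wilks_lambda)
    df = p * (g - 1)
    return chi2, df


# MEG analysis: 52 participants, 6 MEG predictors, 2 groups, lambda = 0.69.
chi2, df = bartlett_chi_square(0.69, n=52, p=6, g=2)
print(round(chi2, 2), df)  # 17.44 6
print(round(1 - 0.69, 2))  # 0.31 (canonical R^2 = 1 - lambda for a single function)
```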

Table 2.

The discriminant function of the MEG variables. The table shows the correlations of the outcome variables and the discriminant function of the MEG variables. We used the statistically accepted cutoff value of 0.40 to decide which of the variables were large enough to discriminate between the groups (see bold numbers).


| MEG predictors | Correlation with discriminant function |
|---|---|
| N1 latency right and left (mean) | **0.736** |
| P1 latency right and left (mean) | **0.444** |
| P2 latency right and left (mean) | **0.433** |
| absolute P2 latency asynchrony \|R-L\| | 0.389 |
| absolute P1 latency asynchrony \|R-L\| | 0.364 |
| absolute N1 latency asynchrony \|R-L\| | 0.229 |

3.2. Behavioral Variables

3.2.1. Independent t-Tests: Behavioral

Table 3 shows the independent t-tests of the music and language variables for the controls and the individuals diagnosed with dyslexia. The control group performed significantly better than the individuals with dyslexia in all Mandarin subtests, in the music measures, and in the elementary auditory skills except for duration (see Table 3). Singing hours per week, singing behavior during childhood, and singing behavior during adolescence are not included in Table 3 because they did not differ significantly between groups, even with a one-tailed test (see Supplementary Table S7). To avoid accumulation of the alpha error due to multiple testing, we applied a Benjamini–Hochberg correction. The independent t-tests and descriptive statistics for the subscores of the music and singing variables are provided in the Supplementary Materials (Tables S6 and S7).

Table 3.

The independent t-tests and the descriptives of the variables of the investigation.

3.2.2. Discriminant Analysis: Behavioral

The discriminant function revealed that most of the music and language variables separated the groups well, Λ = 0.42, χ²(7) = 40.02, p < 0.001, canonical R² = 0.58. The correlations between the variables and the discriminant function showed that tone frequency had the highest loading, followed by Mandarin P, singing total, AMMA total, and Mandarin S, all of which were above the statistically acceptable cutoff value of 0.40 [68]. Mandarin D and duration fell below this cutoff and thus failed to discriminate the groups. Table 4 shows the structure matrix of the behavioral predictor variables and the loading of each predictor on the discriminant function.
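In SPSS, the structure matrix contains pooled within-groups correlations between each predictor and the canonical discriminant function, that is, correlations computed after removing each group’s own mean. The following is a minimal sketch. It assumes that the discriminant scores are already available, and the function names and toy data are hypothetical. The 0.40 cutoff [68] is then applied to the loadings, shown here with the values from Table 2.

```python
import math


def pooled_within_group_r(x: list[float], scores: list[float], groups: list[str]) -> float:
    """Pooled within-groups correlation between a predictor and discriminant scores."""

    def centered(values: list[float]) -> list[float]:
        # Subtract each group's own mean so that between-group differences are removed.
        sums: dict[str, float] = {}
        counts: dict[str, int] = {}
        for value, group in zip(values, groups):
            sums[group] = sums.get(group, 0.0) + value
            counts[group] = counts.get(group, 0) + 1
        return [value - sums[group] / counts[group] for value, group in zip(values, groups)]

    xc, sc = centered(x), centered(scores)
    cross = sum(a * b for a, b in zip(xc, sc))
    return cross / math.sqrt(sum(a * a for a in xc) * sum(b * b for b in sc))


def above_cutoff(loadings: dict[str, float], cutoff: float = 0.40) -> list[str]:
    """Predictors whose absolute structure coefficient reaches the cutoff."""
    return [name for name, r in loadings.items() if abs(r) >= cutoff]


# Toy data: two groups, one predictor, hypothetical discriminant scores.
print(round(pooled_within_group_r([1, 2, 10, 11], [2, 1, 20, 22], ["c", "c", "d", "d"]), 3))  # 0.316
# Loadings from Table 2 (MEG analysis).
print(above_cutoff({"N1 mean": 0.736, "P1 mean": 0.444, "P2 mean": 0.433, "P2 asynchrony": 0.389}))
# ['N1 mean', 'P1 mean', 'P2 mean']
```

In the toy data, an ordinary correlation across all four cases would be dominated by the large difference between the group means. The pooled within-groups version reflects only the covariation inside each group.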

Table 4.

The discriminant function of the behavioral variables. The table shows the correlations of the outcome variables and the discriminant function of the behavioral predictor variables. We used the statistically accepted cutoff value of 0.40 to decide which of the variables were large enough to discriminate between the groups.

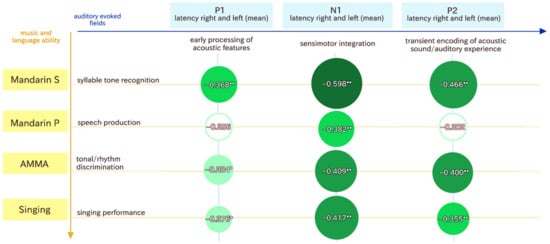

3.3. Correlational Analysis

The two discriminant functions formed the basis for the follow-up correlational analysis: we correlated the variables that best differentiated the groups (controls versus dyslexia) to determine which MEG variables are associated with the music and language variables. Figure 2 shows the most important behavioral measures that also correlated with the MEG variables. The analysis revealed that Mandarin syllable tone recognition, singing ability, and musical aptitude (AMMA) correlated with the P1, N1, and P2 latencies, while Mandarin pronunciation was associated only with N1 latency.

Figure 2.

This figure shows the correlations between the P1, N1, and P2 responses and the behavioral variables. * p < 0.05 and ** p < 0.01 (two-tailed). Green shading represents the effect size of the correlation coefficient; the darker the green, the larger the effect.
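Assuming Pearson product-moment correlations (the section does not name the coefficient), the sign convention used in the Discussion can be illustrated: a negative r between a latency and a behavioral score means that shorter latencies go together with better performance. The Python sketch below computes r and the t statistic with n − 2 degrees of freedom on which a two-tailed test of r is based; the function names and data are hypothetical.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equally long samples."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two samples of equal length with at least 3 values")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> float:
    """t value with n - 2 degrees of freedom for testing H0: rho = 0 (requires |r| < 1)."""
    return r * sqrt(n - 2) / sqrt(1.0 - r * r)


# Hypothetical data: N1 latency (ms) and a behavioral score for five participants.
latency_ms = [95.0, 100.0, 105.0, 110.0, 115.0]
score = [9.0, 8.0, 6.0, 5.0, 2.0]
r = pearson_r(latency_ms, score)
t = t_statistic(r, len(latency_ms))
```

Here r is about −0.98: the participants with the longest latencies have the lowest scores, which is the pattern the Discussion describes as "the shorter the latency, the better the performance". The p-value would then be read from a t distribution with n − 2 degrees of freedom.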

4. Discussion

We assessed Mandarin language ability, singing ability, musical aptitude, individual differences in elementary auditory skills, and the auditory-evoked P1–N1–P2 complex in 52 adolescents and young adults with dyslexia and unaffected controls. Most music and language measures differed significantly between individuals with dyslexia and controls. The discriminant analysis showed that individuals with dyslexia performed worse than controls in discriminating high versus low tones (frequency), in musical ability tasks, in singing, in Mandarin pronunciation, and in the sound-syllable tone recognition task. In addition, the discriminant analysis of the auditory-evoked field variables revealed that individuals with dyslexia showed prolonged latencies (mean of the right and left hemispheres) of the P1, N1, and P2 response components. As a follow-up, we computed correlations between the behavioral and MEG variables to determine whether they are interrelated. Tone frequency was the only behavioral variable that did not correlate with any of the MEG variables. In the following sections, we discuss the P1, N1, and P2 responses and their correlations with the behavioral variables.

In general, the P1 and N1 components of the event-related fields are thought to originate in the primary and secondary auditory cortex [69]. Both P1 and N1 reflect early feature processing, with the P1 response occurring around 50–80 ms after tone onset in adolescents [15] and the N1 response after around 80–110 ms [70]. The subsequent P2 response appears about 160–200 ms after tone onset [15]. The P1 response has been associated with the ability to perceive auditory stimuli [3,8,10] and can already be detected in early childhood [71]. Whereas individual differences in P1 can already be measured in childhood, the N1 response usually emerges later, between 8 and 10 years of age [67,71]. The N1 response is presumed to be an intermediate stage of auditory analysis that contributes to sound detection [72]. It also reflects integrative processes and shows strong context dependency and learning-induced plasticity [12]. In addition, studies have suggested that the N1 component reflects sensory stimulus processing and sensorimotor integration [70,73], as well as attention-specific processes [74]. The P2 response has been postulated to be sensitive to auditory experience [75] and seems to be a marker of complex auditory task processing such as melody and rhythm recognition. However, P2 has also been associated with sensory integration [13] and with sensory processes such as the transient encoding of acoustic features and the formation of short-term sound representations in sensory memory [72]. Finally, P2 has been described as a biological marker of learning [75].
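To make "latency" concrete: a common approach, assumed here because the section does not describe the authors' peak-detection procedure, is to take the time of the largest deflection of the expected polarity within a component-specific window of the source waveform. The Python sketch below uses the approximate windows quoted above; the dictionary `COMPONENT_WINDOWS` and the function `peak_latency` are hypothetical, and the polarity convention (positive P1 and P2, negative N1) simply follows the component names.

```python
from typing import Dict, Sequence, Tuple

# Approximate component windows (ms after tone onset) and expected polarity,
# taken from the ranges quoted in the text; a hypothetical peak-picking setup.
COMPONENT_WINDOWS: Dict[str, Tuple[float, float, int]] = {
    "P1": (50.0, 80.0, +1),    # positive deflection
    "N1": (80.0, 110.0, -1),   # negative deflection
    "P2": (160.0, 200.0, +1),  # positive deflection
}


def peak_latency(times_ms: Sequence[float], amplitudes: Sequence[float], component: str) -> float:
    """Latency (ms) of the largest deflection with the expected polarity inside the window."""
    start, end, polarity = COMPONENT_WINDOWS[component]
    in_window = [(t, a) for t, a in zip(times_ms, amplitudes) if start <= t <= end]
    if not in_window:
        raise ValueError(f"no samples inside the {component} window")
    # Multiplying by the polarity turns the N1 trough into a maximum.
    latency, _ = max(in_window, key=lambda sample: polarity * sample[1])
    return latency
```

A "prolonged" latency, as reported for the dyslexia group, corresponds to a larger value returned for the same component; averaging the values obtained for the left and right hemispheres gives the mean latency used in the analyses above.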

Previous studies have shown that P1 source waveform responses are well balanced in controls, whereas individuals with dyslexia show prolonged P1 [7,8] and N1 latencies [7]. Besides prolonged P1 and P2 latencies, the P2 response was shown to be larger in participants diagnosed with dyslexia. In general, shorter latencies are associated with higher attention and with faster and more precise auditory processing [7,8,76]. Because N1 and P2 responses are also associated with sensory processing, shorter latencies may also be understood as reflecting enhanced sensory processing. This interpretation is consistent with frameworks of language disorders that describe dyslexia as a deficit in the auditory sensory integration of incoming acoustic signals [77,78]. In previous studies, we used various musical measures to uncover relationships between auditory processing and musical ability. Individuals with dyslexia showed impairments in elementary (e.g., frequency and duration) and complex auditory sound discrimination (e.g., AMMA measures) [3], and these impairments were linked to deficits in P1 responses. In a follow-up study, we found that lower musical performance (e.g., playing an instrument or singing) was also associated with prolonged N1 latencies. We therefore presume that the lower musical performance of individuals with dyslexia may also reflect an auditory sensory impairment [7].

The results of our behavioral measures provide further evidence that dyslexia may be a disorder featuring auditory and sensory deficits. Individuals with dyslexia performed worse on both music and language measures, regardless of whether these were classified as perception or production variables. Besides tone frequency, the discriminant analysis identified the sound-symbol correspondence task (Mandarin S), Mandarin pronunciation, singing ability, and musical ability (AMMA) as the behavioral variables that best separated the groups. Musical ability has already been investigated in detail: the P1, N1, and subsequent P2 responses are known to be modulated by musical input and training, including singing [3,10,79]. The P1 and N1 responses were enhanced and faster in musicians [3,80], and the P2 response was considerably enlarged after long-term musical experience [79], which suggests that musical training modulates the entire P1–N1–P2 complex.

The Mandarin S task requires individuals to detect a tonal change in the second statement, which involves several processes at once. Participants must first recognize the tonal change and then link it to a specific syllable, an ability that is related to individual differences in reading and writing skills. The task can therefore be considered a sound-symbol correspondence task with a strong musical component, since individuals have to recognize a change in syllable tone. It requires precise auditory discrimination and high attentional skills and is thus more complex than, for instance, pronunciation tasks. This may explain why Mandarin S performance correlated negatively with the P1, N1, and P2 latencies: the shorter the latencies, the better the Mandarin S performance. The Mandarin pronunciation task is simpler than the Mandarin S task and has high ecological validity, as it simulates a situation in which a new language is acquired [36,45,46]. Pronunciation tasks therefore provide information about phonological skills and the ability to imitate new words. Such tasks, and their relationship to musical ability across all ages, have often been studied in language aptitude research [30,36,45,46]. Musical ability, particularly singing ability, is related to the ability to pronounce, retrieve, and imitate new language material. Behavioral research suggests that enhanced vocal flexibility [27], vocal control [28,35], and vocal-motor and auditory-motor integration [81,82] are among the mechanisms underlying this close relationship.

The MEG results of this study support the findings of the behavioral studies cited above. Both singing ability and Mandarin pronunciation correlated negatively with N1 latency: the shorter the latency, the better the singing and pronunciation performance. Because the N1 component reflects sensory stimulus processing and sensorimotor integration [70,73], this study provides additional evidence that sensory processing may underlie the close relationship between singing and the ability to learn to pronounce new languages that has been observed in behavioral testing [27,30,35].

Our study has several limitations, most notably its sample size. Future studies should therefore include larger samples and examine whether similar or different findings are obtained with EEG recordings.

5. Implications and Future Research Directions

Music and language overlap on multiple dimensions. In this study, we provide additional evidence that language and music processing are interrelated in the auditory cortex. This is consistent with established frameworks of music-language relationships such as the OPERA hypothesis [37], which proposes that musical training benefits the neural encoding of speech.

Previous studies showed that professional musicians outperformed non-musicians on Mandarin syllable discrimination tasks and in Mandarin pronunciation [53]. Musical training studies made similar observations and showed that learning to play an instrument improves pitch processing in Mandarin [83]. These findings indicate that acquiring Mandarin tone syllables requires, as an additional capacity, the precise processing of tonal material. This may be a further aspect to consider when individuals with dyslexia learn tone languages, and we suggest that future studies address at least two crucial aspects. First, they should assess whether musical training can help individuals with dyslexia learn a tone language. Second, they should consider that learning Mandarin requires linking syllable tones to Mandarin orthography, a rather complex process. Research has also shown that the orthographic complexity of specific languages exacerbates the symptoms of dyslexia [84], which is why future research should also study dyslexia in learners of Mandarin in more detail. Our findings suggest that individuals with dyslexia also have deficits in sensory stimulus processing and in the sensorimotor integration of vocalization, whether they sing or imitate new languages. Various therapies have been developed in which singing plays a major role in improving speech fluency. For instance, intoned singing is part of Melodic Intonation Therapy, which aims to improve spontaneous speech production in patients with non-fluent aphasia [85]. Singing has also been used to reduce stuttering and to gain better vocal motor control [86]. We note, however, that although singing facilitates pronunciation skills in general, it should not be used to learn Mandarin syllable tones, as these are neutralized when sung [53].
Moreover, we propose that singing may be a useful tool for training self-monitoring, integrating vocal tract-related skills, and developing sensorimotor representations that allow better vocal motor control during articulation [87]. Future research should therefore investigate whether singing could improve the ability of individuals diagnosed with dyslexia to learn new words.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/brainsci12060744/s1, Table S1: Description of participants; Table S2: Mandarin intraclass coefficients; Table S3: singing intraclass coefficients; Table S4: Cronbach’s α coefficients for the singing during childhood questionnaire; Table S5: Cronbach’s α coefficients for the singing during adolescence questionnaire; Table S6: Independent t-tests and descriptives of the musical sub-variables; Table S7: Independent t-tests and descriptives of the singing sub-variables.

Author Contributions

Conceptualization, M.C. and C.G.; methodology, M.C. and C.G.; formal analysis, M.C. and C.G.; investigation, M.C., C.G., V.B. and B.L.S.; resources, M.C.; data curation, M.C. and C.G.; writing—original draft preparation, M.C. and C.G.; writing—review and editing, M.C., C.G., V.B., B.L.S., J.R., J.B. and S.S.-L.; visualization, V.B.; supervision, P.S.; and Mandarin task development, J.R. and M.C. All authors have read and agreed to the published version of the manuscript.

Funding

Open Access Funding by the University of Graz. This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Institutional Review Board of the Medical Faculty of Heidelberg (S-778/2018).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data are contained within the article or Supplementary Materials.

Acknowledgments

Markus Christiner is funded within the Post-Doc Track Programme of the OeAW. The authors acknowledge open-access funding from the University of Graz. The authors thank L. Chan for creating Figure 1.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Polanczyk, G.; de Lima, M.S.; Horta, B.L.; Biederman, J.; Rohde, L.A. The worldwide prevalence of ADHD: A systematic review and metaregression analysis. Am. J. Psychiatry 2007, 164, 942–948.
2. Shaywitz, S.E.; Shaywitz, B.A. Dyslexia (specific reading disability). Pediatrics Rev. 2003, 24, 147–153.
3. Seither-Preisler, A.; Parncutt, R.; Schneider, P. Size and synchronization of auditory cortex promotes musical, literacy, and attentional skills in children. J. Neurosci. 2014, 34, 10937–10949.
4. Hämäläinen, J.A.; Lohvansuu, K.; Ervast, L.; Leppänen, P.H.T. Event-related potentials to tones show differences between children with multiple risk factors for dyslexia and control children before the onset of formal reading instruction. Int. J. Psychophysiol. 2015, 95, 101–112.
5. Tallal, P.; Miller, S.; Fitch, R.H. Neurobiological basis of speech: A case for the preeminence of temporal processing. Ann. N. Y. Acad. Sci. 1993, 682, 27–47.
6. Huss, M.; Verney, J.P.; Fosker, T.; Mead, N.; Goswami, U. Music, rhythm, rise time perception and developmental dyslexia: Perception of musical meter predicts reading and phonology. Cortex 2011, 47, 674–689.
7. Groß, C.; Serrallach, B.L.; Möhler, E.; Pousson, J.E.; Schneider, P.; Christiner, M.; Bernhofs, V. Musical Performance in Adolescents with ADHD, ADD and Dyslexia—Behavioral and Neurophysiological Aspects. Brain Sci. 2022, 12, 127.
8. Serrallach, B.; Groß, C.; Bernhofs, V.; Engelmann, D.; Benner, J.; Gündert, N.; Blatow, M.; Wengenroth, M.; Seitz, A.; Brunner, M.; et al. Neural biomarkers for dyslexia, ADHD, and ADD in the auditory cortex of children. Front. Neurosci. 2016, 10, 324.
9. Turker, S.; Reiterer, S.M.; Seither-Preisler, A.; Schneider, P. “When Music Speaks”: Auditory Cortex Morphology as a Neuroanatomical Marker of Language Aptitude and Musicality. Front. Psychol. 2017, 8, 2096.
10. Schneider, P.; Scherg, M.; Dosch, H.G.; Specht, H.J.; Gutschalk, A.; Rupp, A. Morphology of Heschl’s gyrus reflects enhanced activation in the auditory cortex of musicians. Nat. Neurosci. 2002, 5, 688–694.
11. Sharma, A.; Kraus, N.; McGee, T.J.; Nicol, T.G. Developmental changes in P1 and N1 central auditory responses elicited by consonant-vowel syllables. Electroencephalogr. Clin. Neurophysiol. Evoked Potentials Sect. 1997, 104, 540–545.
12. Wengenroth, M.; Blatow, M.; Heinecke, A.; Reinhardt, J.; Stippich, C.; Hofmann, E.; Schneider, P. Increased volume and function of right auditory cortex as a marker for absolute pitch. Cereb. Cortex 2014, 24, 1127–1137.
13. Benner, J.; Wengenroth, M.; Reinhardt, J.; Stippich, C.; Schneider, P.; Blatow, M. Prevalence and function of Heschl’s gyrus morphotypes in musicians. Brain Struct. Funct. 2017, 222, 3587–3603.
14. Tremblay, K.L.; Ross, B.; Inoue, K.; McClannahan, K.; Collet, G. Is the auditory evoked P2 response a biomarker of learning? Front. Syst. Neurosci. 2014, 8, 28.
15. Seppänen, M.; Hämäläinen, J.; Pesonen, A.-K.; Tervaniemi, M. Music training enhances rapid neural plasticity of N1 and P2 source activation for unattended sounds. Front. Hum. Neurosci. 2012, 6, 43.
16. Shahin, A.; Roberts, L.E.; Trainor, L.J. Enhancement of auditory cortical development by musical experience in children. NeuroReport 2004, 15, 1917.
17. Shahin, A.; Roberts, L.E.; Pantev, C.; Trainor, L.J.; Ross, B. Modulation of P2 auditory-evoked responses by the spectral complexity of musical sounds. NeuroReport 2005, 16, 1781–1785.
18. Kraus, N.; Chandrasekaran, B. Music training for the development of auditory skills. Nat. Rev. Neurosci. 2010, 11, 599–605.
19. Kraus, N.; White-Schwoch, T. Neurobiology of Everyday Communication: What Have We Learned from Music? Neuroscientist 2017, 23, 287–298.
20. Magne, C.; Schön, D.; Besson, M. Musician Children Detect Pitch Violations in Both Music and Language Better than Nonmusician Children: Behavioral and Electrophysiological Approaches. J. Cogn. Neurosci. 2006, 18, 199–211.
21. Musso, M.; Fürniss, H.; Glauche, V.; Urbach, H.; Weiller, C.; Rijntjes, M. Musicians use speech-specific areas when processing tones: The key to their superior linguistic competence? Behav. Brain Res. 2020, 390, 112662.
22. Wong, P.C.M.; Skoe, E.; Russo, N.M.; Dees, T.; Kraus, N. Musical experience shapes human brainstem encoding of linguistic pitch patterns. Nat. Neurosci. 2007, 10, 420–422.
23. Franklin, M.S.; Sledge Moore, K.; Yip, C.-Y.; Jonides, J.; Rattray, K.; Moher, J. The effects of musical training on verbal memory. Psychol. Music 2008, 36, 353–365.
24. Jentschke, S.; Koelsch, S. Musical training modulates the development of syntax processing in children. NeuroImage 2009, 47, 735–744.
25. Milovanov, R. Musical aptitude and foreign language learning skills: Neural and behavioural evidence about their connections, 2009. In Proceedings of the 7th Triennial Conference of European Society for the Cognitive Sciences of Music (ESCOM 2009), Jyväskylä, Finland, 12–16 August 2009; pp. 338–342. Available online: https://jyx.jyu.fi/dspace/bitstream/handle/123456789/20935/urn_nbn_fi_jyu-2009411285.pdf (accessed on 9 October 2012).
26. Milovanov, R.; Tervaniemi, M. The Interplay between musical and linguistic aptitudes: A review. Front. Psychol. 2011, 2, 321.
27. Christiner, M.; Reiterer, S.M. Song and speech: Examining the link between singing talent and speech imitation ability. Front. Psychol. 2013, 4, 874.
28. Christiner, M.; Reiterer, S.M. A Mozart is not a Pavarotti: Singers outperform instrumentalists on foreign accent imitation. Front. Hum. Neurosci. 2015, 9, 482.
29. Christiner, M.; Reiterer, S.M. Early influence of musical abilities and working memory on speech imitation abilities: Study with pre-school children. Brain Sci. 2018, 8, 169.
30. Christiner, M.; Rüdegger, S.; Reiterer, S.M. Sing Chinese and tap Tagalog? Predicting individual differences in musical and phonetic aptitude using language families differing by sound-typology. Int. J. Multiling. 2018, 15, 455–471.
31. Coumel, M.; Christiner, M.; Reiterer, S.M. Second language accent faking ability depends on musical abilities, not on working memory. Front. Psychol. 2019, 10, 257.
32. Fonseca-Mora, C.; Jara-Jiménez, P.; Gómez-Domínguez, M. Musical plus phonological input for young foreign language readers. Front. Psychol. 2015, 6, 286.
33. Golestani, N.; Pallier, C. Anatomical correlates of foreign speech sound production. Cereb. Cortex 2007, 17, 929–934.
34. Berkowska, M.; Dalla Bella, S. Acquired and congenital disorders of sung performance: A review. Adv. Cogn. Psychol. 2009, 5, 69–83.
35. Christiner, M.; Reiterer, S. Music, song and speech. In The Internal Context of Bilingual Processing; Truscott, J., Sharwood Smith, M., Eds.; John Benjamins: Amsterdam, The Netherlands, 2019; pp. 131–156.
36. Christiner, M. Musicality and Second Language Acquisition: Singing and Phonetic Language Aptitude. Ph.D. Thesis, University of Vienna, Vienna, Austria, 2020.
37. Patel, A.D. Why would musical training benefit the neural encoding of speech? The OPERA hypothesis. Front. Psychol. 2011, 2, 142.
38. Moreno, S. Can music influence language and cognition? Contemp. Music Rev. 2009, 28, 329–345.
39. Chao, Y.R. A Grammar of Spoken Chinese; University of California Press: Berkeley, CA, USA, 1965.
40. Bidelman, G.M.; Hutka, S.; Moreno, S. Tone language speakers and musicians share enhanced perceptual and cognitive abilities for musical pitch: Evidence for bidirectionality between the domains of language and music. PLoS ONE 2013, 8, e60676.
41. Lee, C.-Y.; Hung, T.-H. Identification of Mandarin tones by English-speaking musicians and nonmusicians. J. Acoust. Soc. Am. 2008, 124, 3235–3248.
42. Han, Y.; Goudbeek, M.; Mos, M.; Swerts, M. Mandarin Tone Identification by Tone-Naïve Musicians and Non-musicians in Auditory-Visual and Auditory-Only Conditions. Front. Commun. 2019, 4, 70.
43. Apfelstadt, H. Effects of melodic perception instruction on pitch discrimination and vocal accuracy of kindergarten children. J. Res. Music. Educ. 1984, 32, 15–24.
44. Ludke, K.M.; Ferreira, F.; Overy, K. Singing can facilitate foreign language learning. Mem. Cogn. 2014, 42, 41–52.
45. Christiner, M.; Bernhofs, V.; Groß, C. Individual Differences in Singing Behavior during Childhood Predicts Language Performance during Adulthood. Languages 2022, 7, 72.
46. Christiner, M.; Gross, C.; Seither-Preisler, A.; Schneider, P. The Melody of Speech: What the Melodic Perception of Speech Reveals about Language Performance and Musical Abilities. Languages 2021, 6, 132.
47. Flege, J.E.; Hammond, R.M. Mimicry of Non-distinctive Phonetic Differences Between Language Varieties. Stud. Sec. Lang. Acquis. 1982, 5, 1–17.
48. Christiner, M. Singing Performance and Language Aptitude: Behavioural Study on Singing Performance and Its Relation to the Pronunciation of a Second Language. Master’s Thesis, University of Vienna, Vienna, Austria, 2013.
49. Christiner, M. Let the music speak: Examining the relationship between music and language aptitude in pre-school children. In Exploring Language Aptitude: Views from Psychology, the Language Sciences, and Cognitive Neuroscience; Reiterer, S.M., Ed.; Springer Nature: Cham, Switzerland, 2018; pp. 149–166.
50. Hebert, M.; Kearns, D.M.; Hayes, J.B.; Bazis, P.; Cooper, S. Why Children with Dyslexia Struggle with Writing and How to Help Them. Lang. Speech Hear. Serv. Sch. 2018, 49, 843–863.
51. Tierney, A.; Kraus, N. Auditory-motor entrainment and phonological skills: Precise auditory timing hypothesis (PATH). Front. Hum. Neurosci. 2014, 8, 949.
52. Gromko, J.E. The Effect of Music Instruction on Phonemic Awareness in Beginning Readers. J. Res. Music. Educ. 2005, 53, 199–209.
53. Christiner, M.; Renner, J.; Groß, C.; Seither-Preisler, A.; Benner, J.; Schneider, P. Singing Mandarin? What elementary short-term memory capacity, basic auditory skills, musical and singing abilities reveal about learning Mandarin. Front. Psychol. 2022.
54. Lenhard, W. ELFE 1–6. Ein Leseverständnistest für Erst-bis Sechstklässler; Hogrefe: Göttingen, Germany, 2006.
55. Brunner, M.; Baeumer, C.; Dockter, S.; Feldhusen, F.; Plinkert, P.; Proeschel, U. Heidelberg Phoneme Discrimination Test (HLAD): Normative data for children of the third grade and correlation with spelling ability. Folia Phoniatr. Logop. 2008, 60, 157–161.
56. Serrallach, B.L.; Groß, C.; Christiner, M.; Wildermuth, S.; Schneider, P. Neuromorphological and Neurofunctional Correlates of ADHD and ADD in the Auditory Cortex of Adults. Front. Neurosci. 2022, 16, 631.
57. Carroll, J.B.; Sapon, S. Modern Language Aptitude Test (MLAT); The Psychological Corporation: New York, NY, USA, 1959.
58. Meara, P. LLAMA Language Aptitude Tests; Lognostics: Swansea, UK, 2005.
59. Gordon, E. Advanced Measures of Music Audiation; GIA Publications Inc.: Chicago, IL, USA, 1989.
60. Jepsen, M.L.; Ewert, S.D.; Dau, T. A computational model of human auditory signal processing and perception. J. Acoust. Soc. Am. 2008, 124, 422–438.
61. Dalla Bella, S.; Giguère, J.-F.; Peretz, I. Singing proficiency in the general population. J. Acoust. Soc. Am. 2007, 121, 1182–1189.
62. Dalla Bella, S.; Berkowska, M. Singing proficiency in the majority: Normality and “phenotypes” of poor singing. Ann. N. Y. Acad. Sci. 2009, 1169, 99–107.
63. Welch, G.F.; Himonides, E.; Saunders, J.; Papageorgi, I.; Rinta, T.; Preti, C.; Stewart, C.; Lani, J.; Hill, J. Researching the first year of the national singing programme sing up in England: An initial impact evaluation. Psychomusicology 2011, 21, 83–97.
64. Schneider, P.; Sluming, V.; Roberts, N.; Bleeck, S.; Rupp, A. Structural, functional, and perceptual differences in Heschl’s gyrus and musical instrument preference. Ann. N. Y. Acad. Sci. 2005, 1060, 387–394.
65. Hämäläinen, M.S.; Sarvas, J. Feasibility of the homogeneous head model in the interpretation of neuromagnetic fields. Phys. Med. Biol. 1987, 32, 91–97.
66. Sarvas, J. Basic mathematical and electromagnetic concepts of the biomagnetic inverse problem. Phys. Med. Biol. 1987, 32, 11–22.
67. Ponton, C.W.; Don, M.; Eggermont, J.J.; Waring, M.D.; Masuda, A. Maturation of Human Cortical Auditory Function: Differences between Normal-Hearing Children and Children with Cochlear Implants. Ear Hear. 1996, 17, 430.
68. Warner, R.M. Applied Statistics: From Bivariate through Multivariate Techniques, 2nd ed.; Sage: Los Angeles, CA, USA, 2013.
69. Butler, B.E.; Trainor, L.J. Sequencing the Cortical Processing of Pitch-Evoking Stimuli Using EEG Analysis and Source Estimation. Front. Psychol. 2012, 3, 180.
70. Näätänen, R.; Picton, T. The N1 wave of the human electric and magnetic response to sound: A review and an analysis of the component structure. Psychophysiology 1987, 24, 375–425.
71. Sharma, A.; Campbell, J.; Cardon, G. Developmental and cross-modal plasticity in deafness: Evidence from the P1 and N1 event related potentials in cochlear implanted children. Int. J. Psychophysiol. 2015, 95, 135–144.
72. Näätänen, R.; Winkler, I. The concept of auditory stimulus representation in cognitive neuroscience. Psychol. Bull. 1999, 125, 826–859.
73. Giard, M.H.; Perrin, F.; Echallier, J.F.; Thévenet, M.; Froment, J.C.; Pernier, J. Dissociation of temporal and frontal components in the human auditory N1 wave: A scalp current density and dipole model analysis. Electroencephalogr. Clin. Neurophysiol. Evoked Potentials Sect. 1994, 92, 238–252.
74. Sharma, A.; Martin, K.; Roland, P.; Bauer, P.; Sweeney, M.H.; Gilley, P.; Dorman, M. P1 latency as a biomarker for central auditory development in children with hearing impairment. J. Am. Acad. Audiol. 2005, 16, 564–573.
75. Tremblay, K.; Kraus, N.; McGee, T.; Ponton, C.; Otis, B. Central auditory plasticity: Changes in the N1-P2 complex after speech-sound training. Ear Hear. 2001, 22, 79–90.
76. Seither-Preisler, A.; Schneider, P. Positive Effekte des Musizierens auf Wahrnehmung und Kognition aus Neurowissenschaftlicher Perspektive. In Musik und Medizin: Chancen für Therapie, Prävention und Bildung; Bernatzky, G., Kreutz, G., Eds.; Springer Vienna: Vienna, Austria, 2015; pp. 375–393.
77. Goswami, U. A temporal sampling framework for developmental dyslexia. Trends Cogn. Sci. 2011, 15, 3–10.
78. Fiveash, A.; Bedoin, N.; Gordon, R.L.; Tillmann, B. Processing rhythm in speech and music: Shared mechanisms and implications for developmental speech and language disorders. Neuropsychology 2021, 35, 771–791.
79. Trainor, L.J.; Shahin, A.; Roberts, L.E. Effects of musical training on the auditory cortex in children. Ann. N. Y. Acad. Sci. 2003, 999, 506–513.
80. Baumann, S.; Meyer, M.; Jäncke, L. Enhancement of auditory-evoked potentials in musicians reflects an influence of expertise but not selective attention. J. Cogn. Neurosci. 2008, 20, 2238–2249.
81. Halwani, G.F.; Loui, P.; Rüber, T.; Schlaug, G. Effects of practice and experience on the arcuate fasciculus: Comparing singers, instrumentalists, and non-musicians. Front. Psychol. 2011, 2, 156.
82. Kleber, B.; Veit, R.; Birbaumer, N.; Gruzelier, J.; Lotze, M. The brain of opera singers: Experience-dependent changes in functional activation. Cereb. Cortex 2010, 20, 1144–1152.
83. Nan, Y.; Liu, L.; Geiser, E.; Shu, H.; Gong, C.C.; Dong, Q.; Gabrieli, J.D.E.; Desimone, R. Piano training enhances the neural processing of pitch and improves speech perception in Mandarin-speaking children. Proc. Natl. Acad. Sci. USA 2018, 115, E6630–E6639.
84. Landerl, K.; Ramus, F.; Moll, K.; Lyytinen, H.; Leppänen, P.H.T.; Lohvansuu, K.; O’Donovan, M.; Williams, J.; Bartling, J.; Bruder, J. Predictors of developmental dyslexia in European orthographies with varying complexity. J. Child Psychol. Psychiatry 2013, 54, 686–694.
85. Schlaug, G.; Norton, A.; Marchina, S.; Zipse, L.; Wan, C.Y. From singing to speaking: Facilitating recovery from nonfluent aphasia. Future Neurol. 2010, 5, 657–665.
86. Falk, S.; Schreier, R.; Russo, F.A. Singing and stuttering. In The Routledge Companion to Interdisciplinary Studies in Singing: Volume III: Wellbeing; Heydon, R., Fancourt, D., Cohen, A.J., Eds.; Routledge: New York, NY, USA, 2020; pp. 50–60.
87. Stager, S.V.; Jeffries, K.J.; Braun, A.R. Common features of fluency-evoking conditions studied in stuttering subjects and controls: An H₂¹⁵O PET study. J. Fluen. Disord. 2003, 28, 319–336.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).