Development and Evaluation of Automated Tools for Auditory-Brainstem and Middle-Auditory Evoked Potentials Waves Detection and Annotation

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection

2.2. Stimulus and Acquisition Parameters

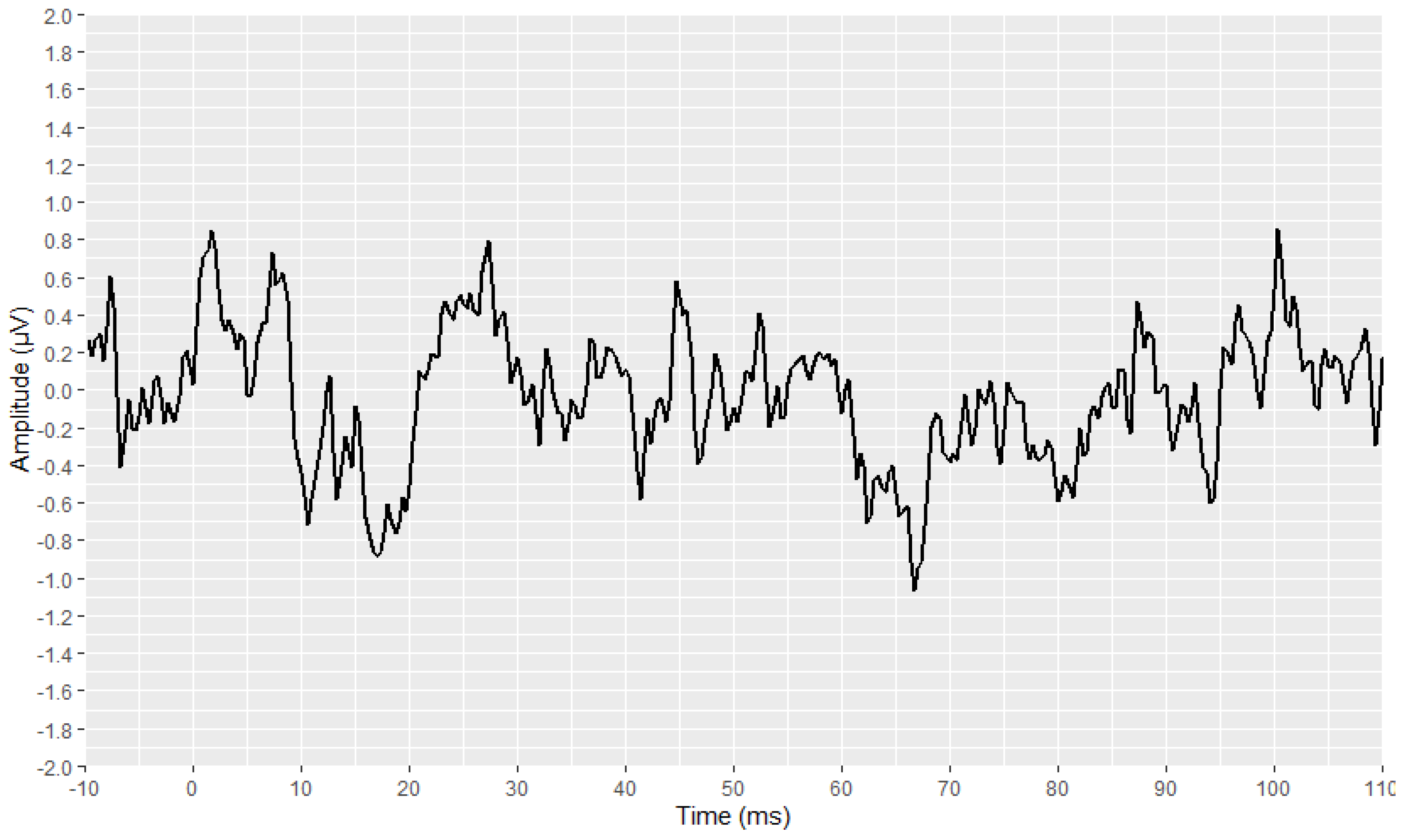

2.3. Reading Raw Data

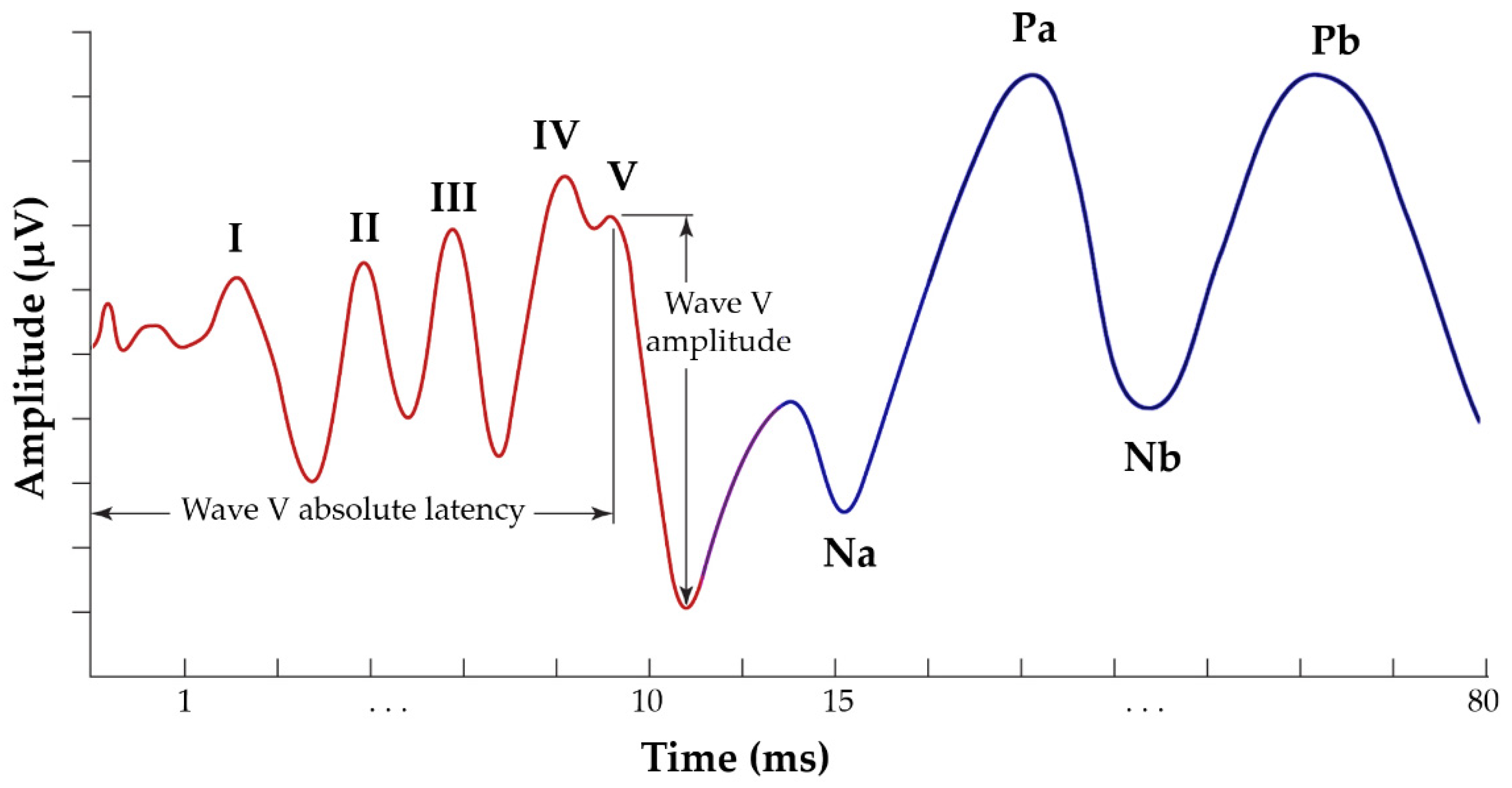

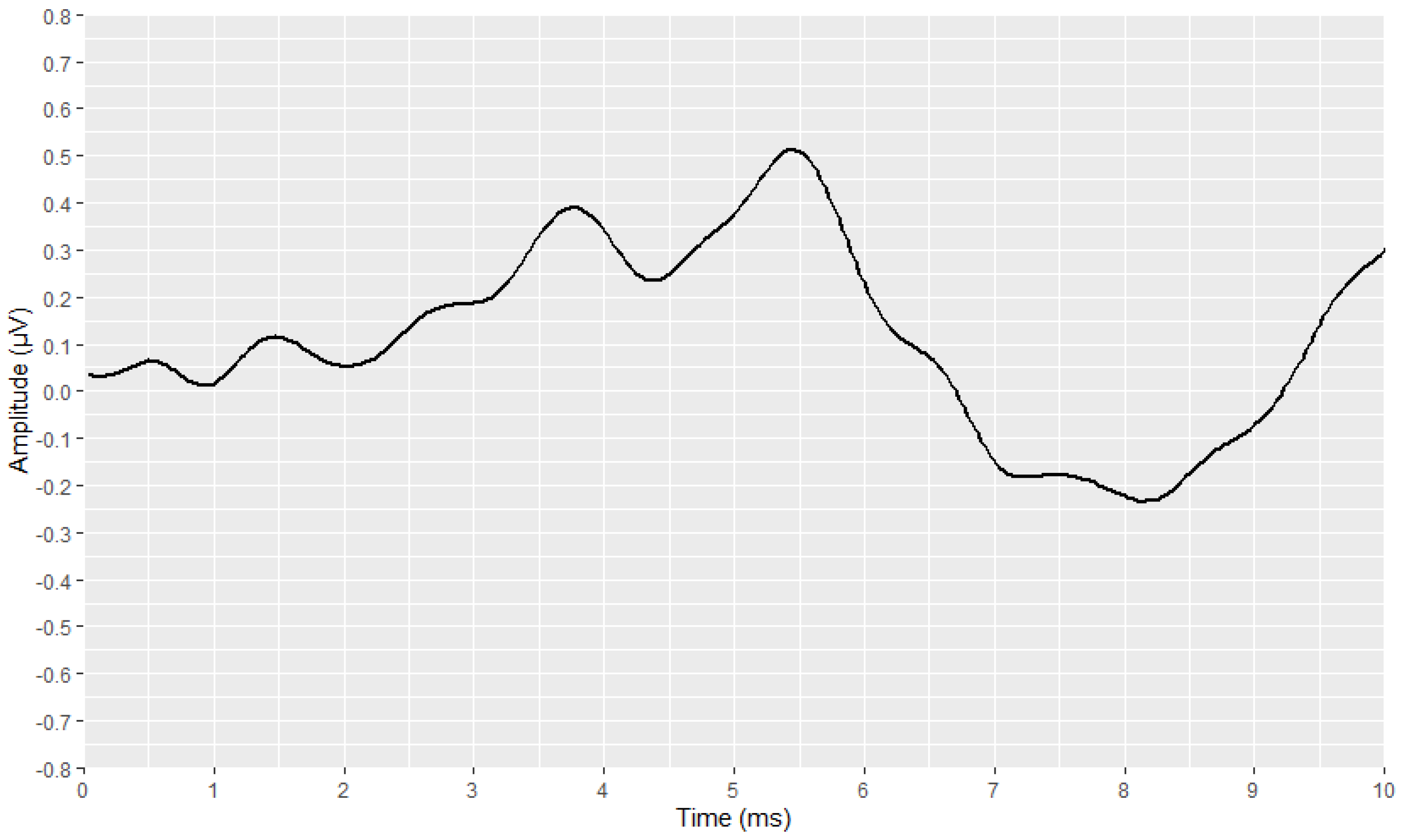

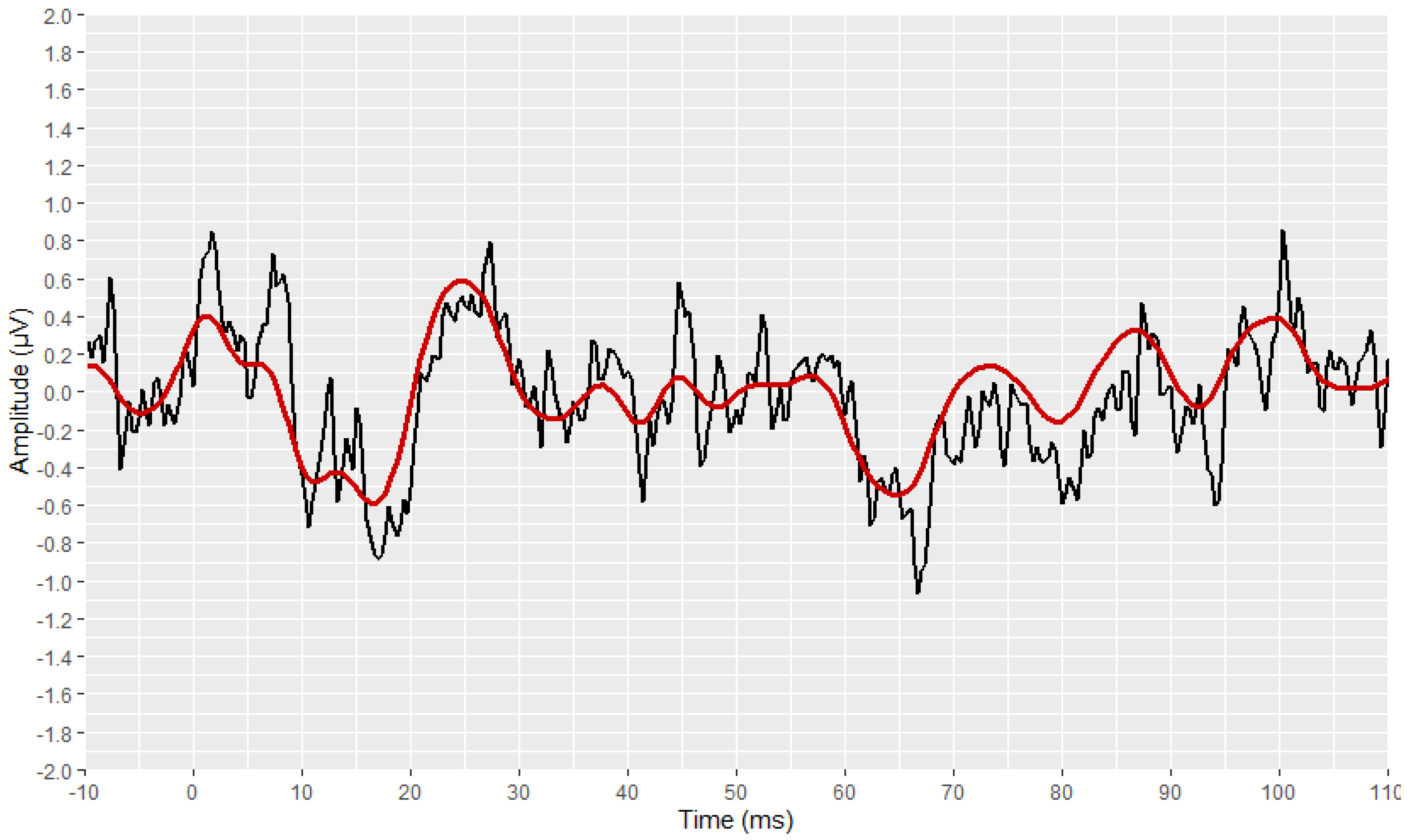

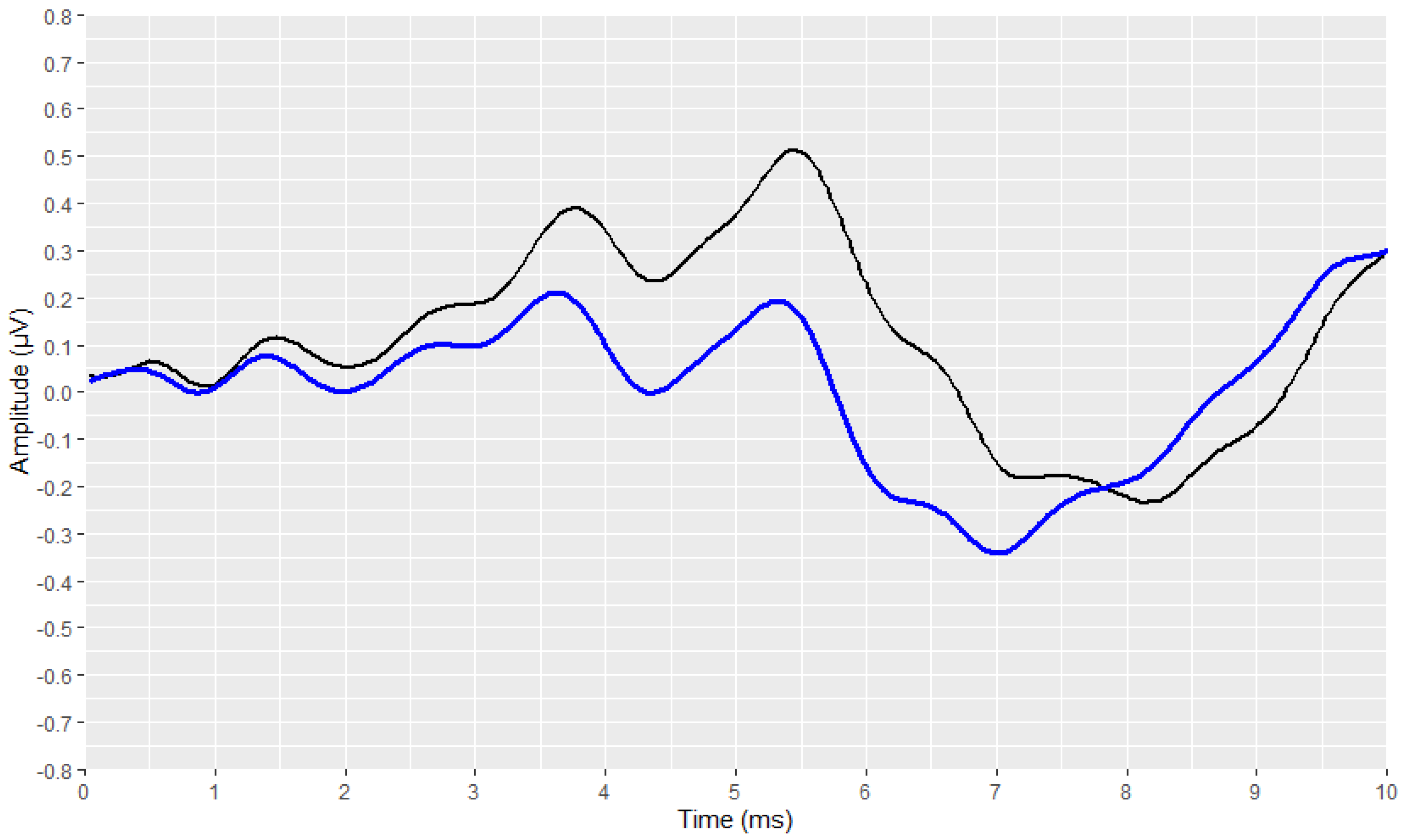

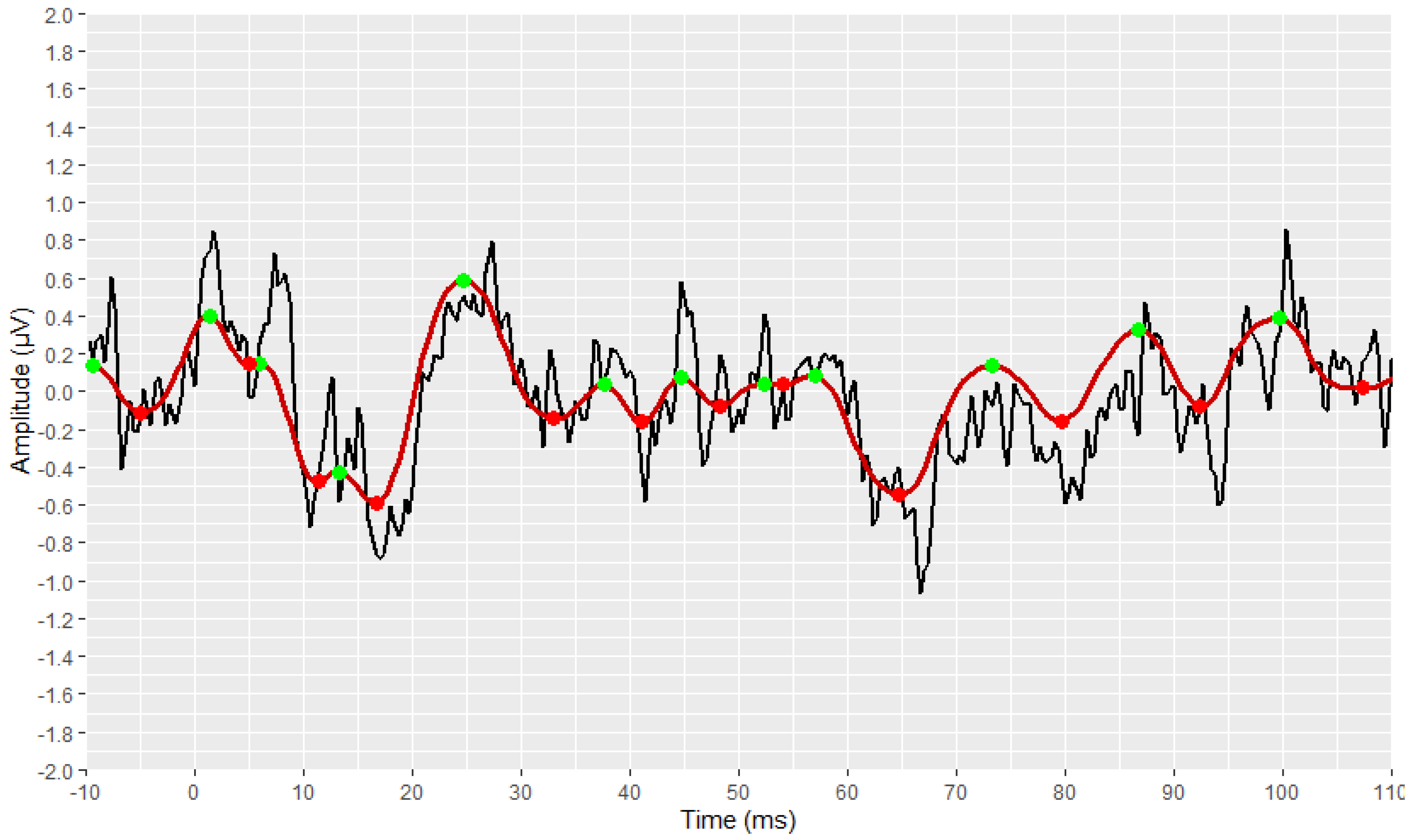

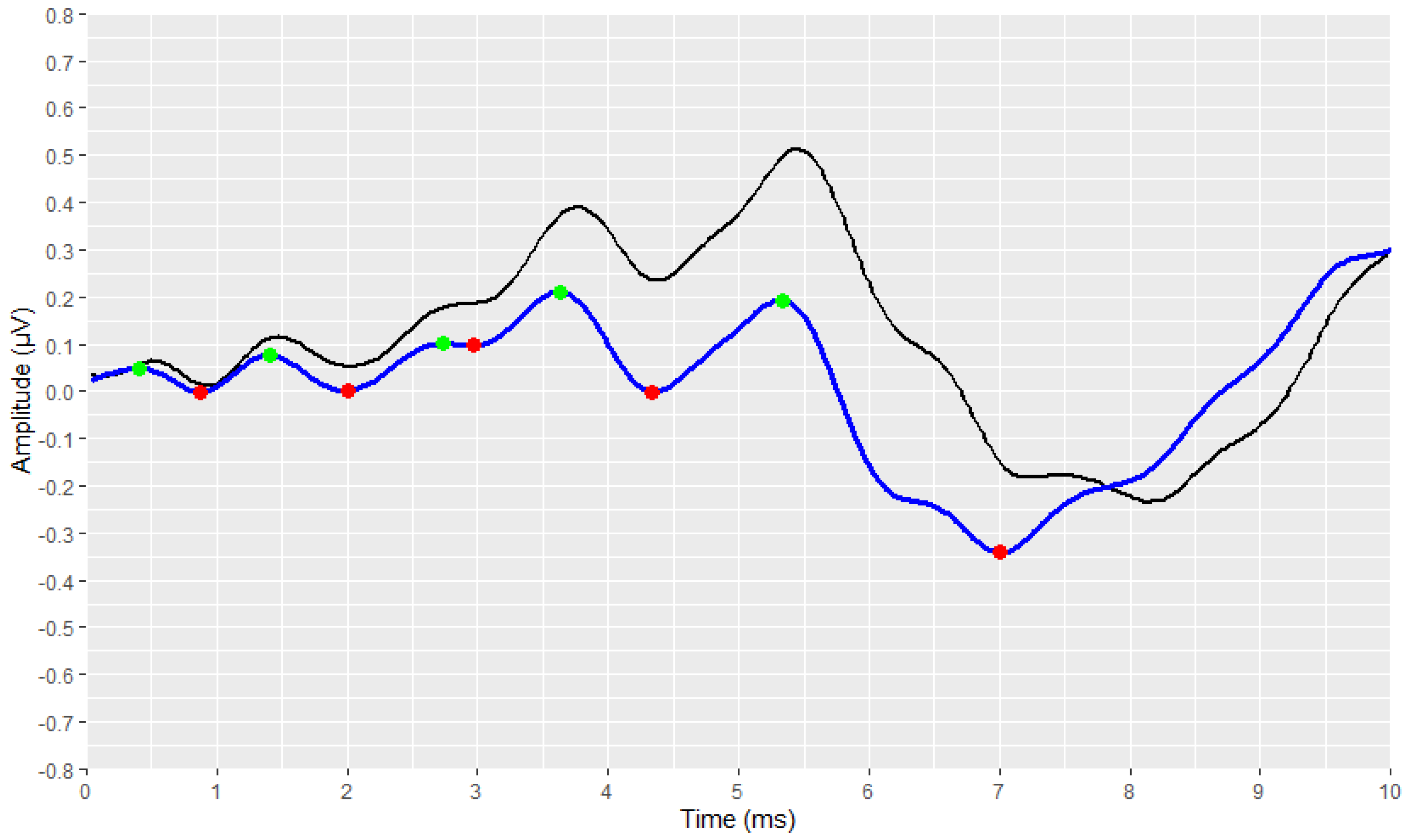

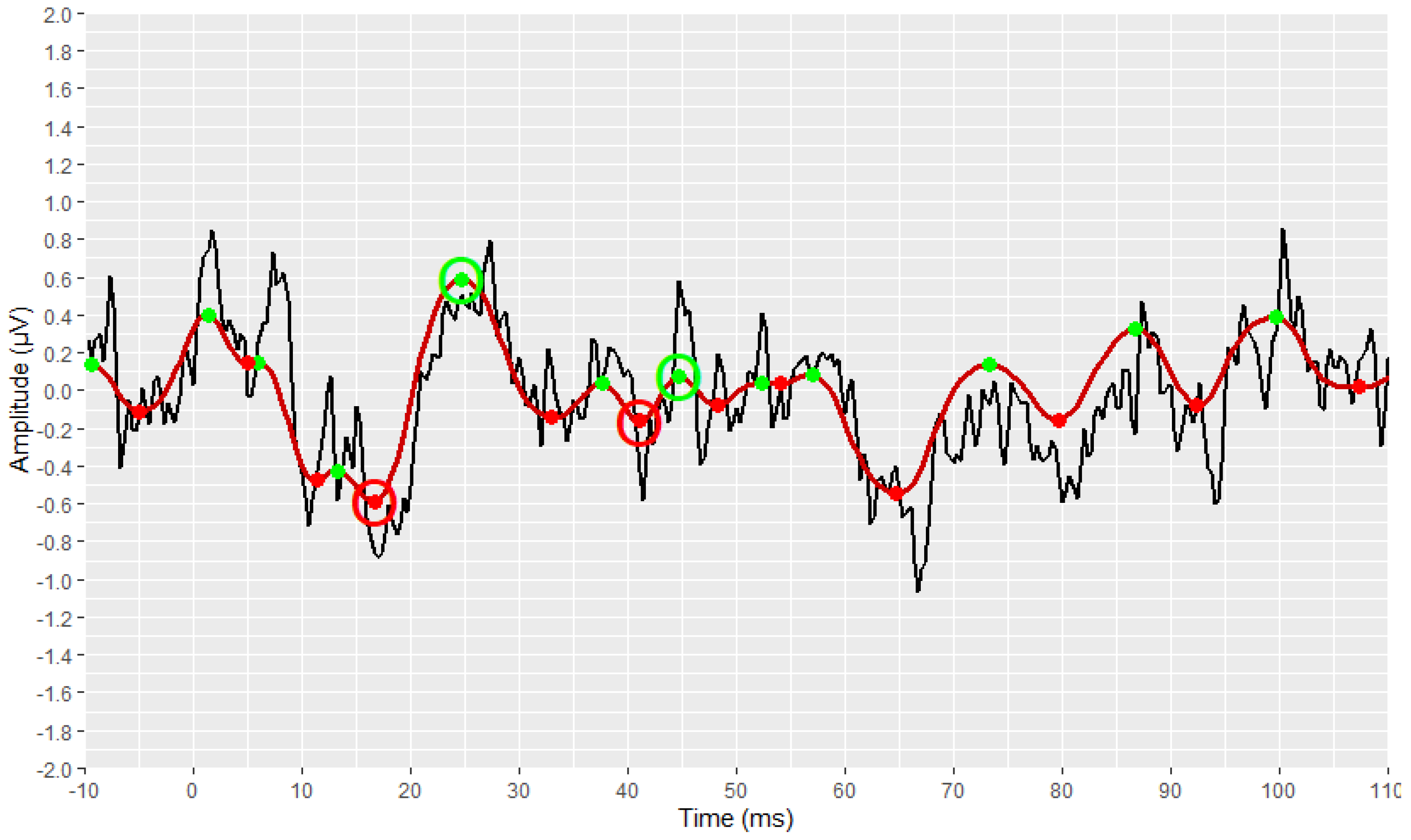

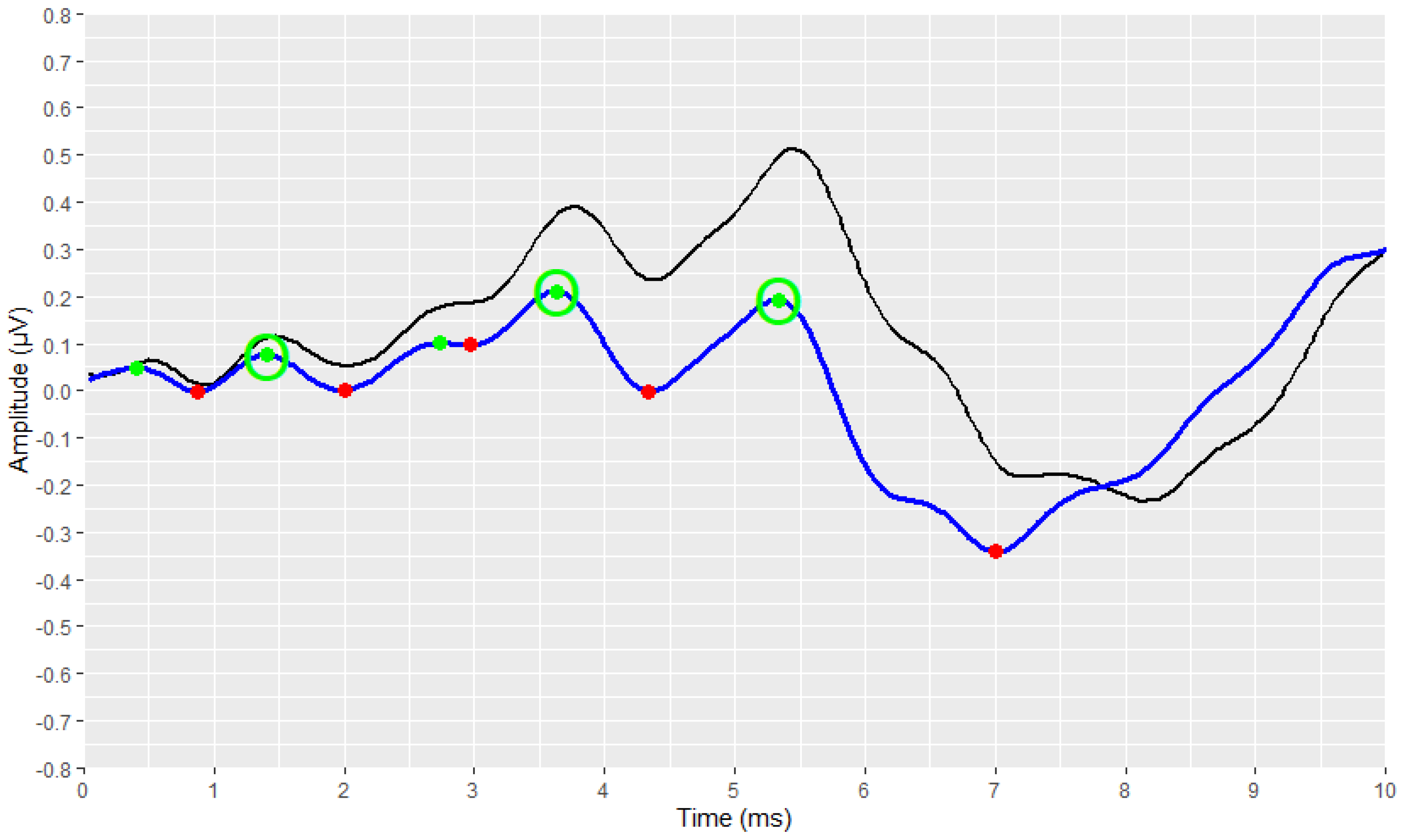

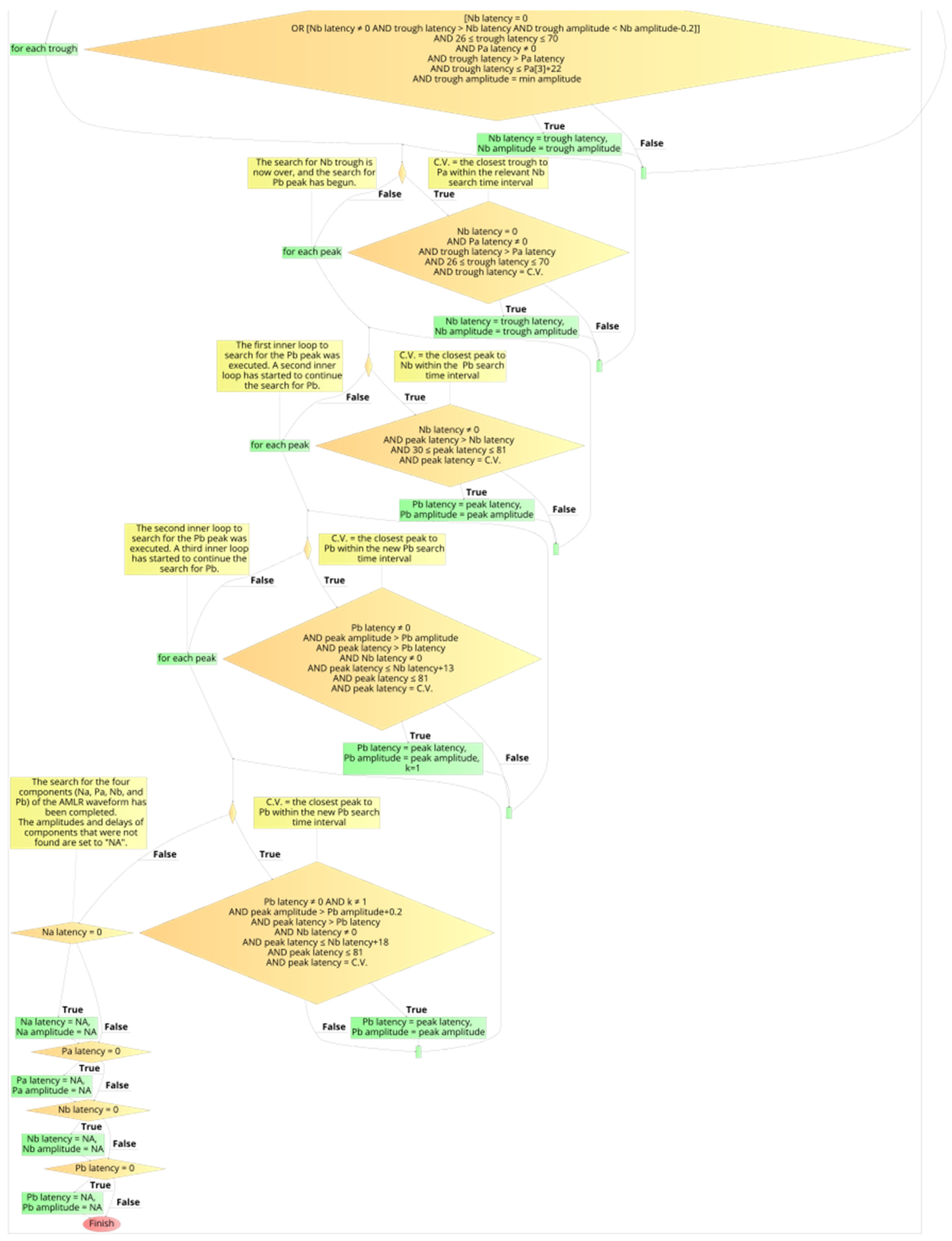

2.4. Visual Display Filtering and Wave Detection

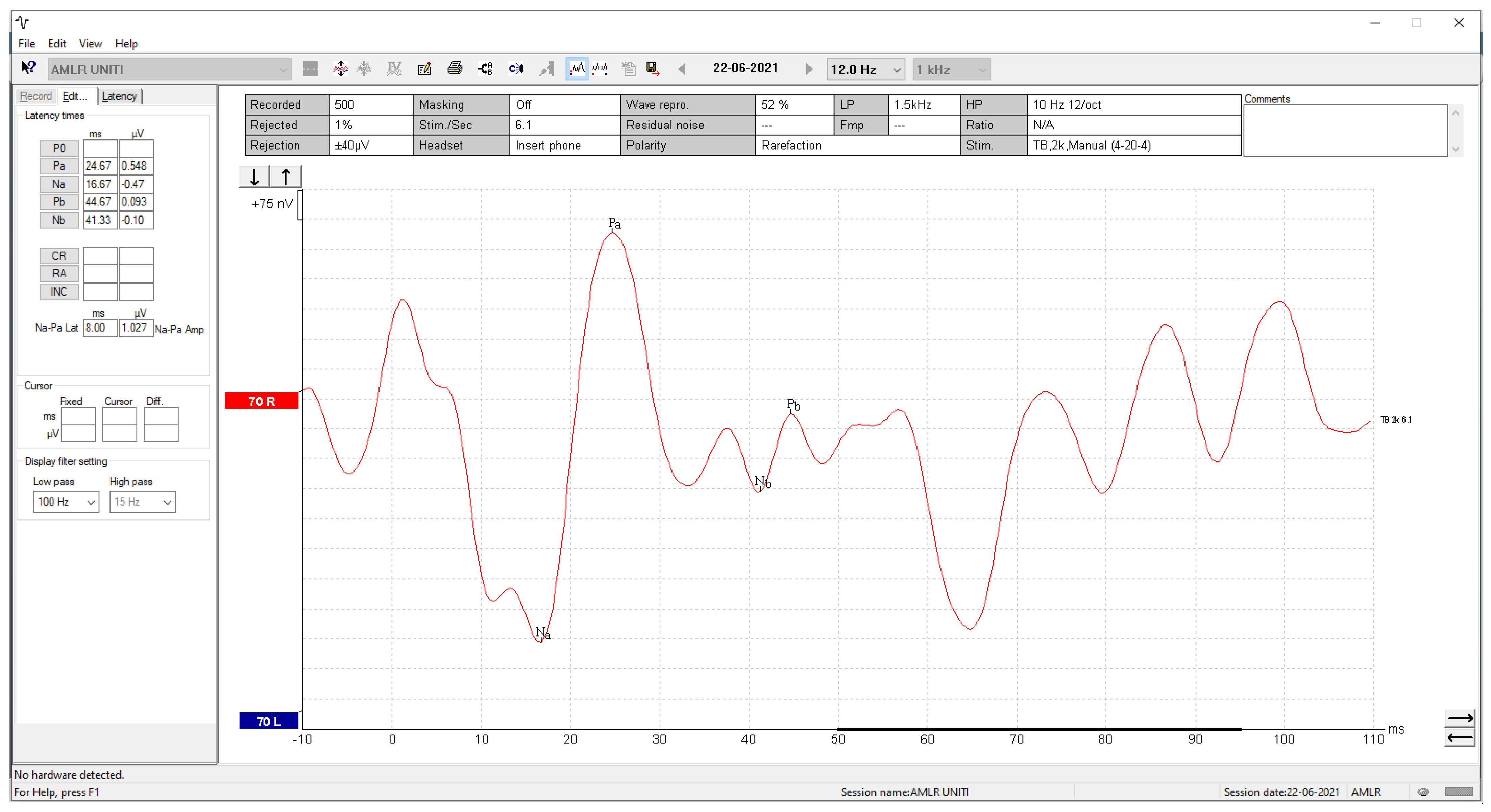

2.5. Visualisation

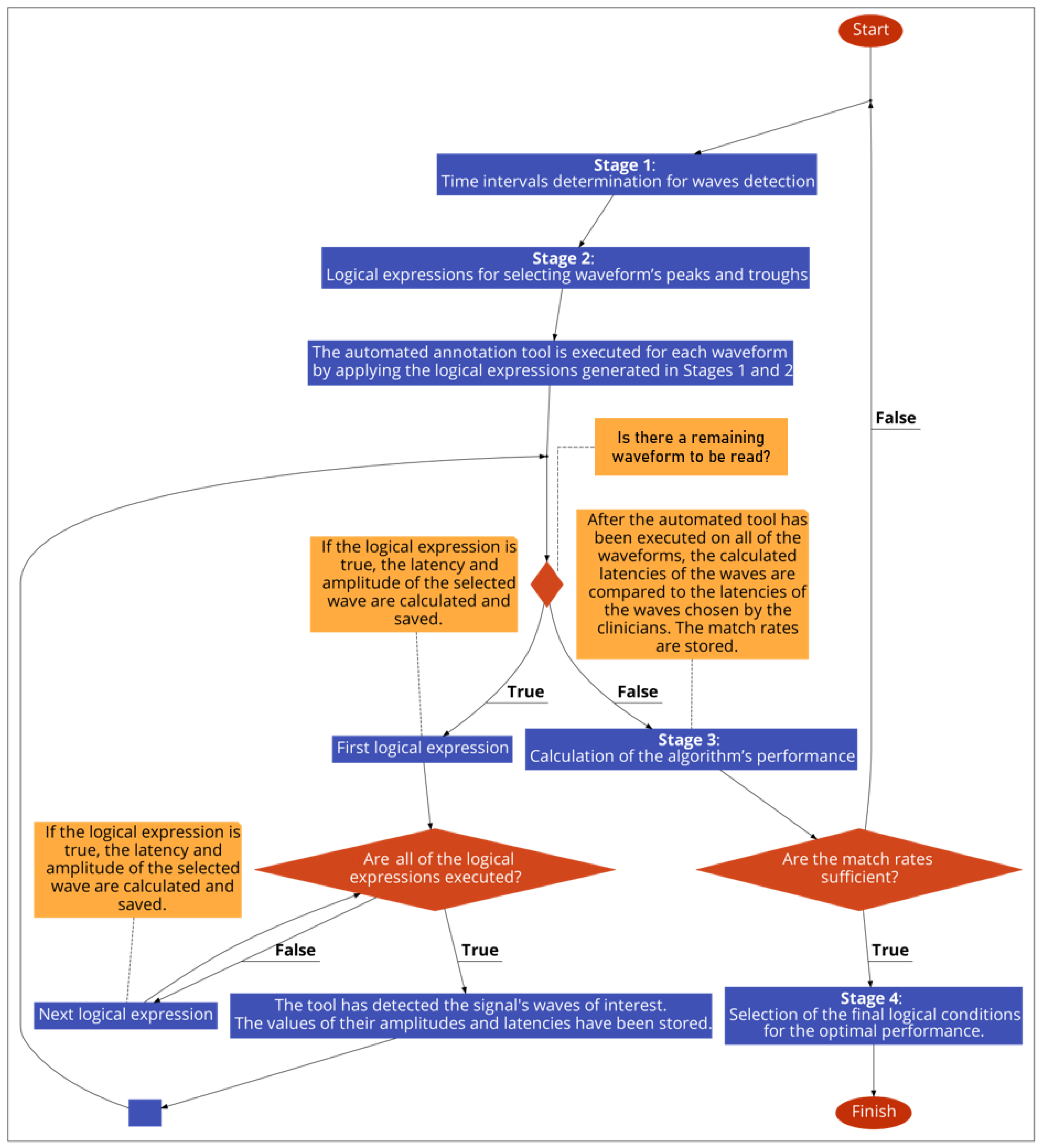

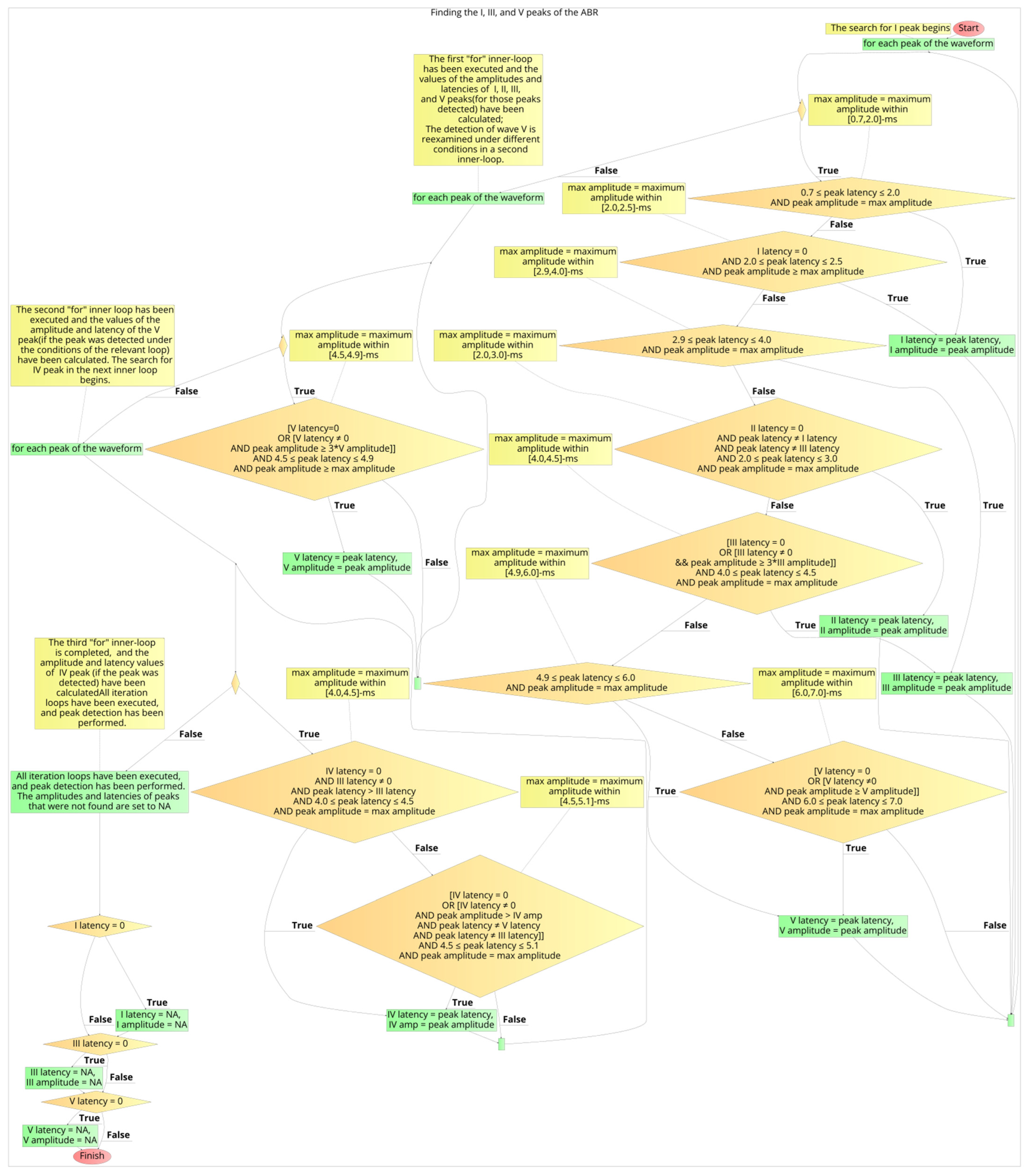

2.6. Development Process of the Automated Annotation Tools

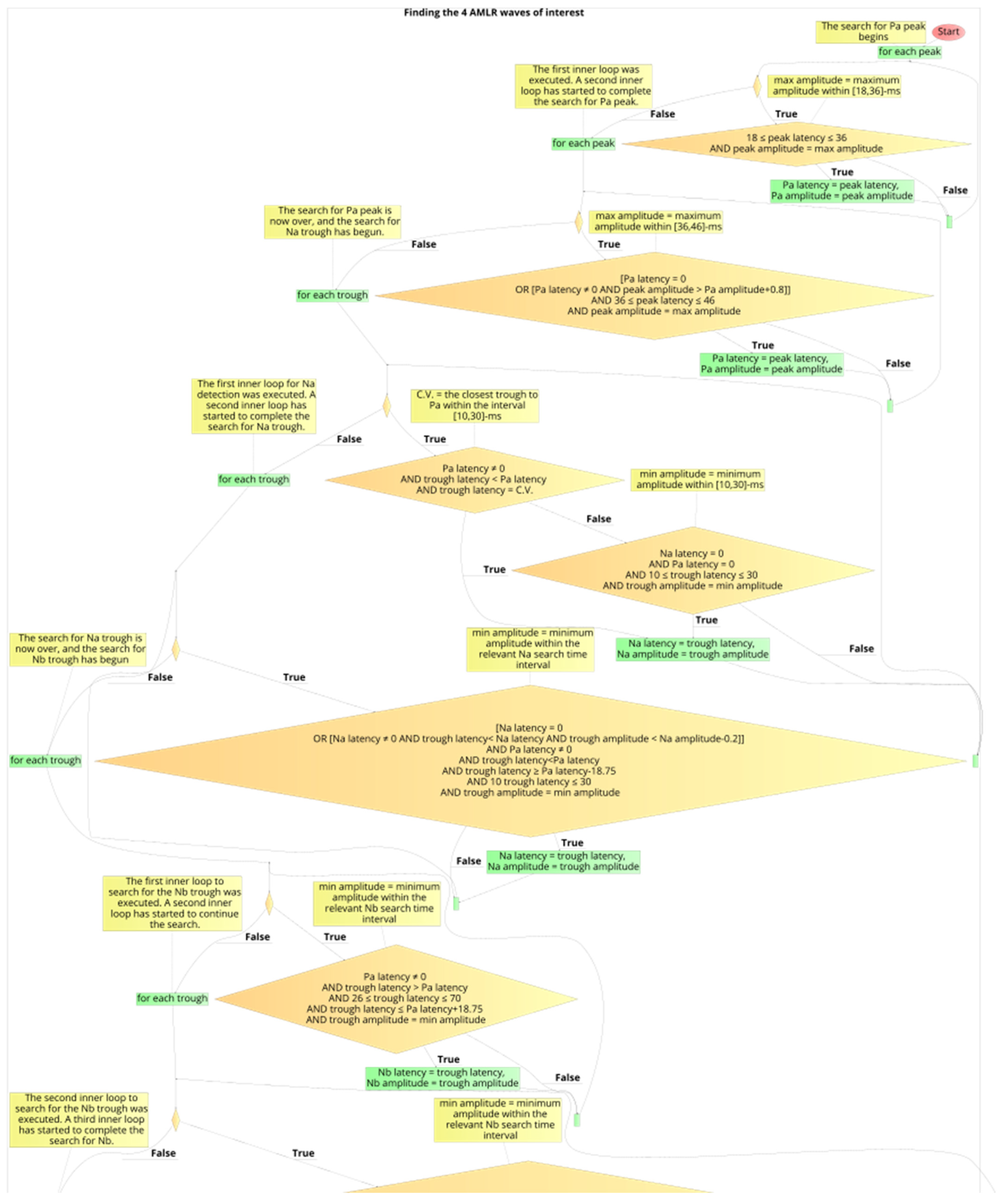

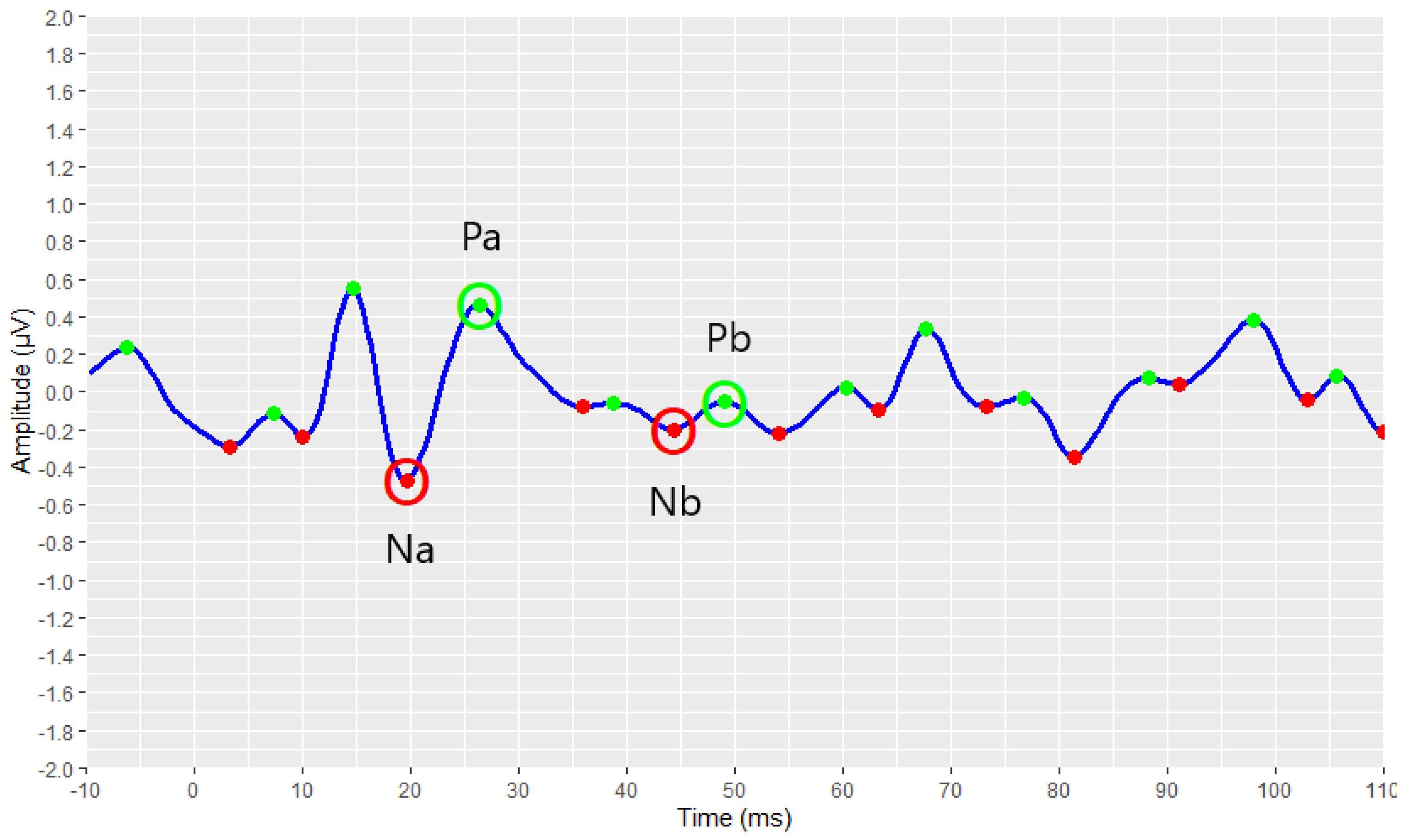

1. Determination of appropriate time intervals for each wave of interest to be detected;

2. Development of logical (Boolean) expressions for selecting the waveform's peaks and troughs;

3. Calculation of the algorithm's performance, by comparing its selected latencies with the clinicians' latencies, and optimisation of the logical conditions by adjusting the parameters of stages 1 and 2. This stage was repeated after each parameter change in the earlier stages, and the resulting performance values were stored in a data frame;

4. Selection of the final logical conditions that yield the optimal performance.
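
The four stages can be sketched as a small grid search. This is a minimal Python sketch, not the authors' implementation: the helper names, the window bounds, the 0.2 ms agreement tolerance, the synthetic demo waveform and the "keep the largest peak / deepest trough" tie-break rule are all assumptions. A list of dicts stands in for the performance data frame.

```python
import math
from itertools import product

# Hypothetical helpers illustrating stages 1-4; not the authors' code.


def pick_wave(times_ms, amps, window_ms, kind):
    """Stages 1-2: latency of the selected extremum inside a time window.

    A sample is a candidate when it lies inside the window AND is a local
    maximum (kind="peak") or local minimum (kind="trough"). Among candidates,
    the largest peak or deepest trough is kept (an assumed tie-break rule).
    """
    start, end = window_ms
    candidates = []
    for i in range(1, len(amps) - 1):
        in_window = start <= times_ms[i] <= end
        if kind == "peak":
            is_extremum = amps[i] > amps[i - 1] and amps[i] >= amps[i + 1]
        else:
            is_extremum = amps[i] < amps[i - 1] and amps[i] <= amps[i + 1]
        if in_window and is_extremum:
            candidates.append(i)
    if not candidates:
        return None  # no wave detected in this window
    if kind == "peak":
        best = max(candidates, key=lambda i: amps[i])
    else:
        best = min(candidates, key=lambda i: amps[i])
    return times_ms[best]


def agreement(auto_ms, clinician_ms, tol_ms):
    """Stage 3: % of waveforms whose automated latency is within tol_ms of the clinician's."""
    hits = sum(
        1
        for a, c in zip(auto_ms, clinician_ms)
        if a is not None and c is not None and abs(a - c) <= tol_ms
    )
    return 100.0 * hits / len(clinician_ms)


def grid_search(waveforms, clinician_ms, starts, ends, kind, tol_ms):
    """Stages 3-4: score every candidate window, keep every row, return the best."""
    rows = []  # stands in for the performance data frame
    for start, end in product(starts, ends):
        if start >= end:
            continue
        auto = [pick_wave(t, a, (start, end), kind) for t, a in waveforms]
        rows.append({"start": start, "end": end,
                     "score": agreement(auto, clinician_ms, tol_ms)})
    best = max(rows, key=lambda r: r["score"])
    return best, rows


# Synthetic demo: a large bump at 1.6 ms and a smaller one at 5.6 ms (0.1 ms steps).
times = [round(i * 0.1, 1) for i in range(151)]
amps = [1.5 * math.exp(-((t - 1.6) / 0.3) ** 2) + math.exp(-((t - 5.6) / 0.4) ** 2)
        for t in times]
best, rows = grid_search([(times, amps)], [5.6], starts=[0.5, 5.0], ends=[7.0],
                         kind="peak", tol_ms=0.2)
print(best)
```

In the demo the wide 0.5 to 7.0 ms window picks the larger bump at 1.6 ms and scores 0%, while the narrower 5.0 to 7.0 ms window picks 5.6 ms and scores 100%, which is why the window bounds (stage 1) are tuned jointly with the selection rule (stage 2).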

2.7. Determination of Presence of PAM Artefacts on the AMLR Waveforms

3. Results

3.1. Data Collection

3.2. Reading Raw Data

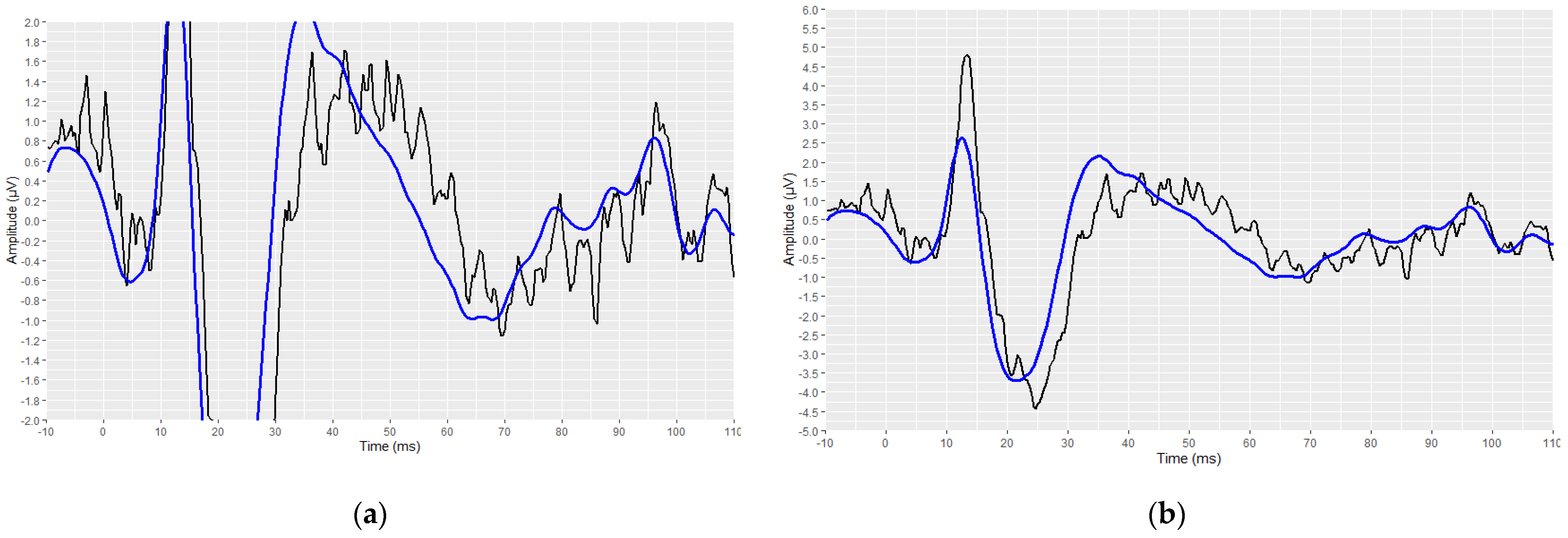

3.3. Visual Display Filtering, Wave Detection and Visualisation

3.4. Developed Automated Annotation Tools and Validation of Their Performances

3.4.1. Validation of the ABR Automated Annotation Tool

3.4.2. Validation of the AMLR Automated Annotation Tool for All Waveforms

- 91.89% for the Na trough;

- 90.77% for the Pa peak;

- 79.90% for the Nb trough;

- 76.99% for the Pb peak.

3.4.3. Validation of the AMLR Automated Annotation Tool for Waveforms without PAM Artefacts

- 93.21% for the Na trough;

- 92.25% for the Pa peak;

- 83.35% for the Nb trough;

- 79.27% for the Pb peak.
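
The two sets of AMLR figures (all waveforms versus waveforms without PAM artefacts) can be compared wave by wave. The short sketch below only does arithmetic on the percentages reported in these two lists; the dictionary names are illustrative.

```python
# Per-wave performance (%) as reported for all AMLR waveforms and for the PAM-free subset.
all_waveforms = {"Na": 91.89, "Pa": 90.77, "Nb": 79.90, "Pb": 76.99}
pam_absent = {"Na": 93.21, "Pa": 92.25, "Nb": 83.35, "Pb": 79.27}


def differences(before, after):
    """Percentage-point change per wave, rounded to two decimals."""
    return {wave: round(after[wave] - before[wave], 2) for wave in before}


gains = differences(all_waveforms, pam_absent)
for wave, gain in gains.items():
    print(f"{wave}: +{gain:.2f} percentage points")
```

Every wave improves when PAM-affected waveforms are excluded, by 1.32 (Na), 1.48 (Pa), 3.45 (Nb) and 2.28 (Pb) percentage points; the later waves, Nb and Pb, have the lowest rates in both sets and the Nb trough shows the largest change.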

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| ABR stimulus parameter | Setting | ABR acquisition parameter | Setting |
|---|---|---|---|
| Type of transducer | Insert earphones | Analysis time | 15 ms |
| Sample rate | 30 kHz | Sweeps | 4000 |
| Type of stimulus | Click | Mode | Monaural |
| Polarity | Alternating | Electrode montage | Vertical (Fpz, Cz, M1/M2) |
| Repetition rate | 22 stimuli/s | Input amplifier filter | Low-pass: 1500 Hz; high-pass: 33 Hz, 6 dB/octave |
| Intensity | 80 dB nHL; 90 dB nHL | Preliminary visual display filters | Low-pass: 1500 Hz; high-pass: 150 Hz |
| Masking | Off | | |
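
The ABR acquisition settings fix how latencies map onto samples, which matters when wave windows are expressed in milliseconds. The sketch below works this out from the table values; it assumes sample 0 coincides with stimulus onset (the table does not say whether a pre-stimulus baseline is recorded), and the helper names are hypothetical.

```python
# Values taken from the ABR stimulus and acquisition table.
FS_HZ = 30_000        # sample rate
ANALYSIS_MS = 15.0    # analysis time
RATE_PER_S = 22       # stimuli per second

n_samples = round(FS_HZ * ANALYSIS_MS / 1000)   # samples per averaged sweep
period_ms = 1000 / FS_HZ                        # time between consecutive samples
isi_ms = 1000 / RATE_PER_S                      # onset-to-onset interval between clicks


def ms_to_index(latency_ms):
    """Nearest sample index for a latency (assumes sample 0 = stimulus onset)."""
    return round(latency_ms * FS_HZ / 1000)


def index_to_ms(index):
    """Latency in ms of a sample index, under the same onset assumption."""
    return index * 1000 / FS_HZ


print(n_samples, round(period_ms, 4), round(isi_ms, 2))  # 450 0.0333 45.45
```

Each sweep therefore holds 450 samples spaced about 0.033 ms apart, so a 5.6 ms latency falls on sample 168, and the 15 ms window ends well before the next click about 45.5 ms later.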

| AMLR stimulus parameter | Setting | AMLR acquisition parameter | Setting |
|---|---|---|---|
| Type of transducer | Insert earphones | Analysis time | 150 ms |
| Sample rate | 3 kHz | Sweeps | 500 |
| Type of stimulus | 2 kHz tone burst | Mode | Monaural |
| Duration of stimulus | 28 cycles in total (rise/fall: 4 cycles each; plateau: 20 cycles) | Electrode montage | Vertical (Fpz, Cz, M1/M2) |
| Polarity | Rarefaction | Input amplifier filter | Low-pass: 1500 Hz; high-pass: 10 Hz, 12 dB/octave |
| Repetition rate | 6.1 stimuli/s | Preliminary visual display filters | Low-pass: 100 Hz; high-pass: 15 Hz |
| Intensity | 70 dB nHL | | |
| Masking | Off | | |
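
The AMLR tone-burst and acquisition settings can be cross-checked with a few lines of arithmetic. This sketch uses only the table values; the function and variable names are illustrative.

```python
# Values taken from the AMLR stimulus and acquisition table.
FREQ_HZ = 2_000                                  # tone-burst carrier frequency
RISE_CYCLES, PLATEAU_CYCLES, FALL_CYCLES = 4, 20, 4
FS_HZ = 3_000                                    # sample rate
ANALYSIS_MS = 150.0                              # analysis time
RATE_PER_S = 6.1                                 # stimuli per second


def cycles_to_ms(cycles, freq_hz):
    """Duration of a whole number of carrier cycles, in milliseconds."""
    return 1000 * cycles / freq_hz


total_cycles = RISE_CYCLES + PLATEAU_CYCLES + FALL_CYCLES   # should equal the stated 28
rise_ms = cycles_to_ms(RISE_CYCLES, FREQ_HZ)
plateau_ms = cycles_to_ms(PLATEAU_CYCLES, FREQ_HZ)
burst_ms = cycles_to_ms(total_cycles, FREQ_HZ)

n_samples = round(FS_HZ * ANALYSIS_MS / 1000)               # samples per sweep
isi_ms = 1000 / RATE_PER_S                                  # onset-to-onset interval

print(total_cycles, rise_ms, plateau_ms, burst_ms)  # 28 2.0 10.0 14.0
print(n_samples, round(isi_ms, 1))                  # 450 163.9
```

At 2 kHz, the 4 + 20 + 4 cycle burst lasts 14 ms (2 ms ramps, 10 ms plateau), and the 150 ms analysis window fits inside the roughly 164 ms interval between stimuli. The 3 kHz rate over 150 ms gives 450 samples per sweep, the same length as the ABR sweep (30 kHz over 15 ms).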

| Wave of interest | ABR at 80 dB nHL | ABR at 90 dB nHL |
|---|---|---|
| Peak I | 94.88% | 93.86% |
| Peak III | 98.51% | 98.85% |
| Peak V | 92.97% | 91.51% |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Manta, O.; Sarafidis, M.; Vasileiou, N.; Schlee, W.; Consoulas, C.; Kikidis, D.; Vassou, E.; Matsopoulos, G.K.; Koutsouris, D.D. Development and Evaluation of Automated Tools for Auditory-Brainstem and Middle-Auditory Evoked Potentials Waves Detection and Annotation. Brain Sci. 2022, 12, 1675. https://doi.org/10.3390/brainsci12121675
