Pleasantness Ratings of Musical Dyads in Cochlear Implant Users

Abstract

1. Introduction

2. Methods

2.1. Participants

2.2. Materials and Procedure

2.3. Calibration

2.4. Modulation Detection

2.5. Fundamental Frequency Discrimination

2.6. Consonance Identification

2.7. Pleasantness Ratings

2.8. Speech Reception in Multi-Talker Background Noise

2.9. The Goldsmiths Musical Sophistication Index

2.10. Data Analysis

3. Results

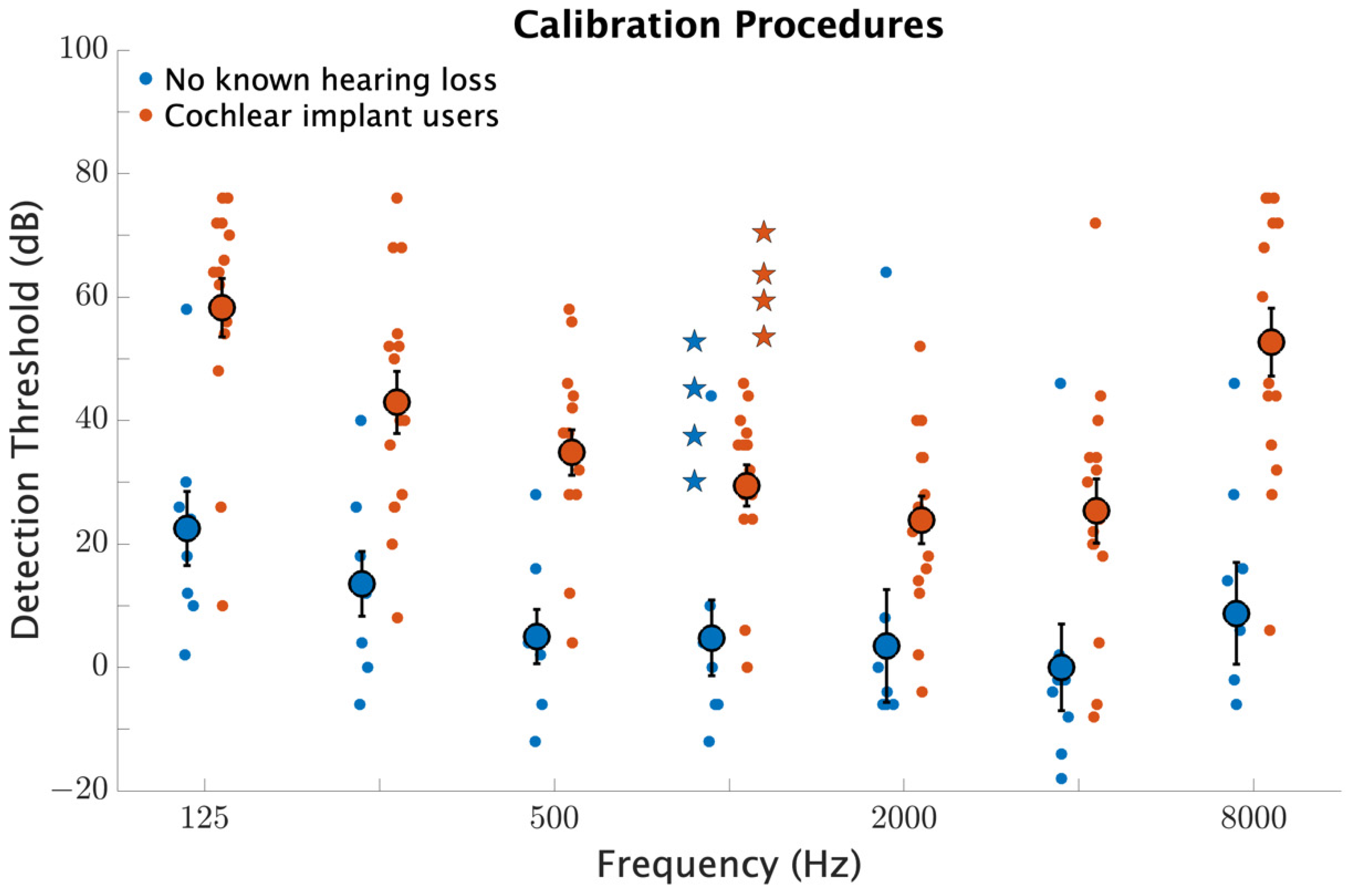

3.1. Calibration Procedures

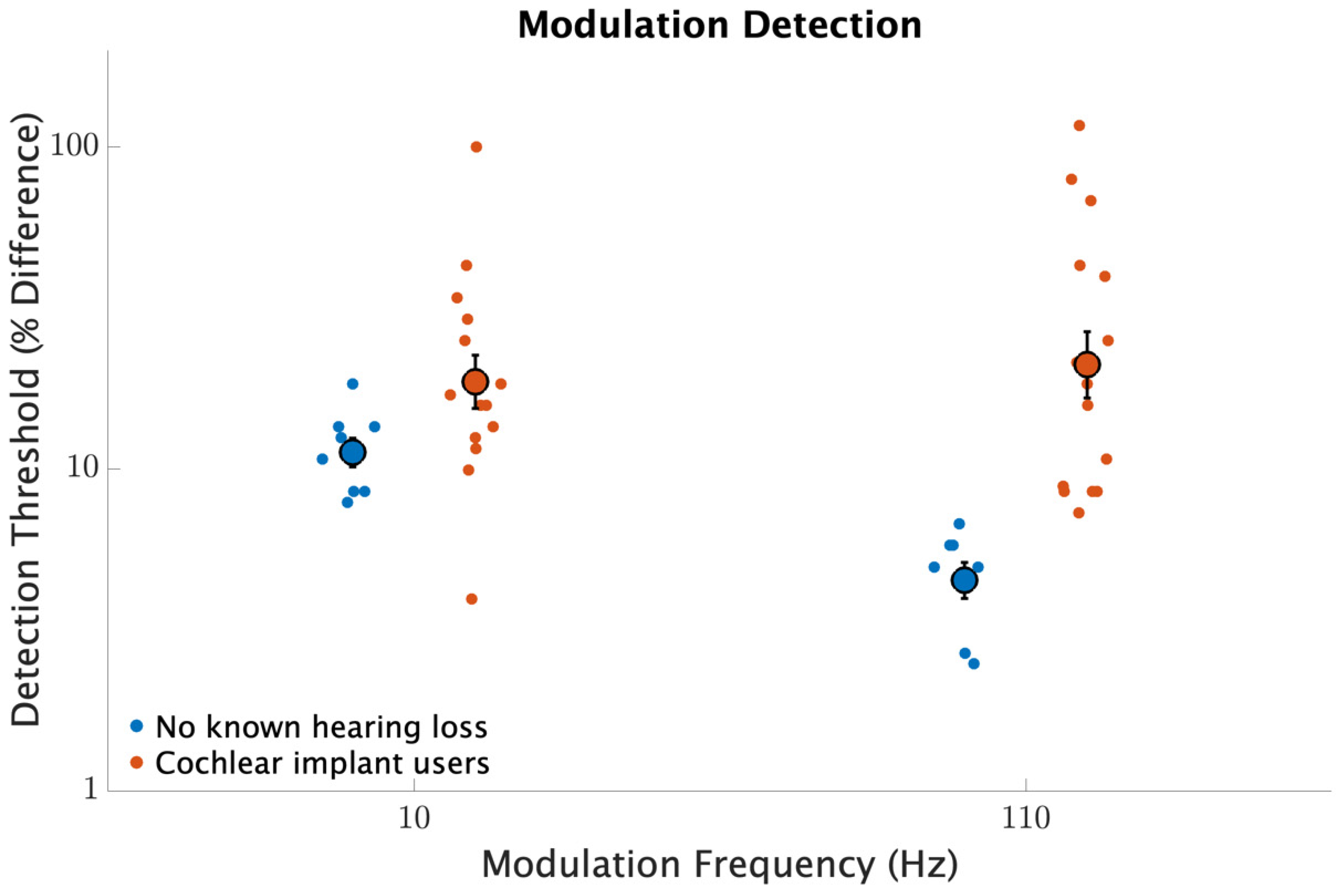

3.2. Modulation Detection

3.3. Fundamental Frequency Discrimination

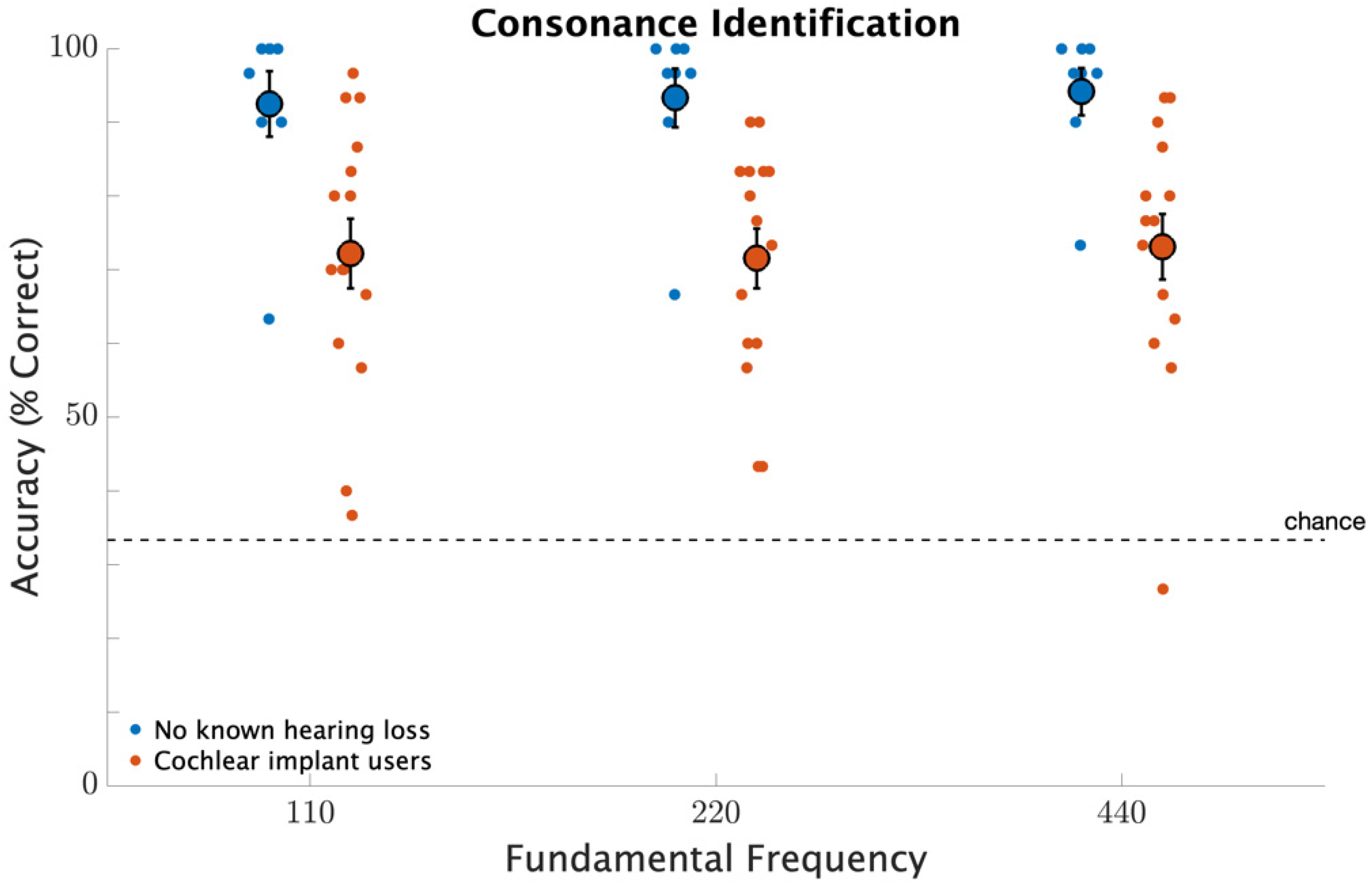

3.4. Consonance Identification

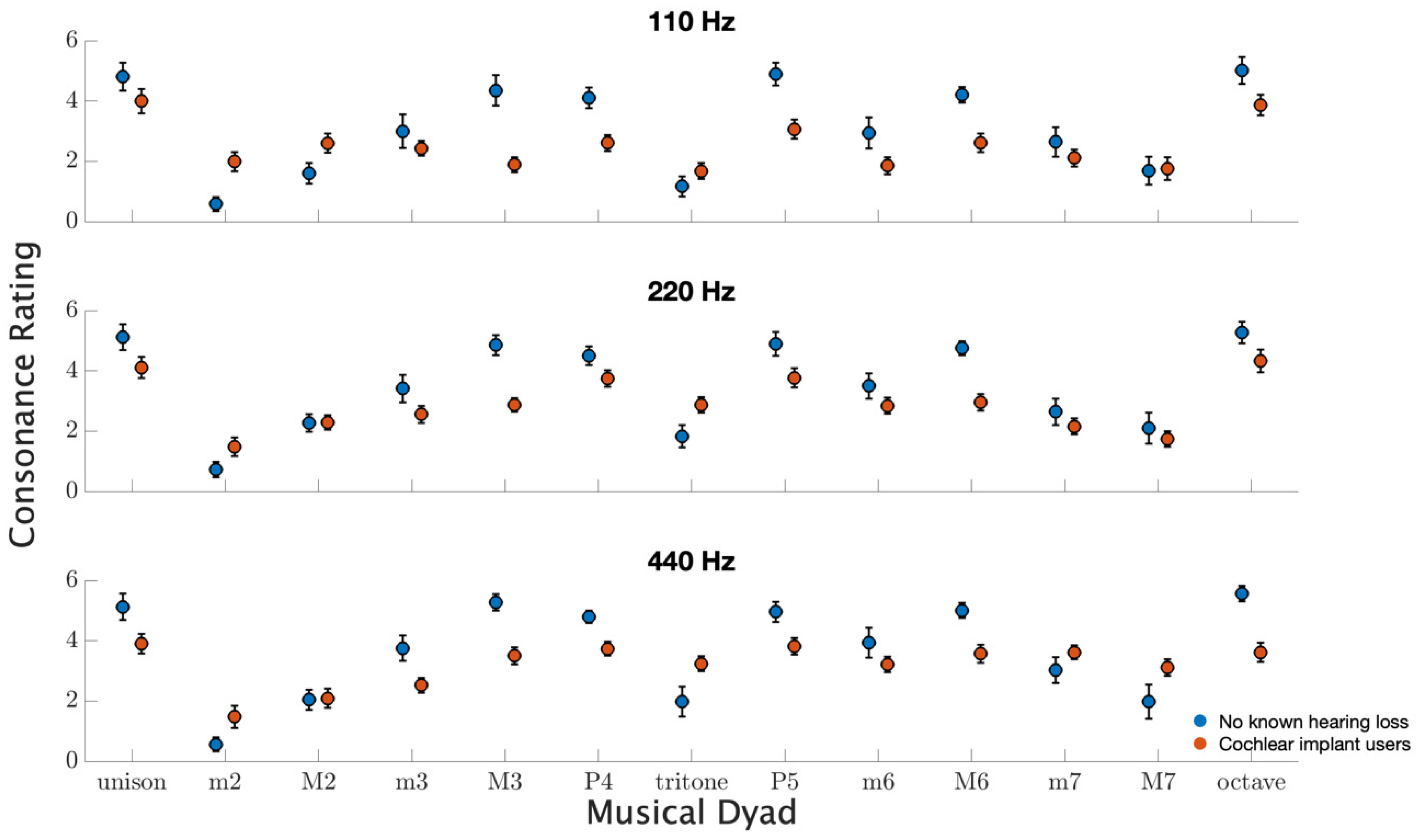

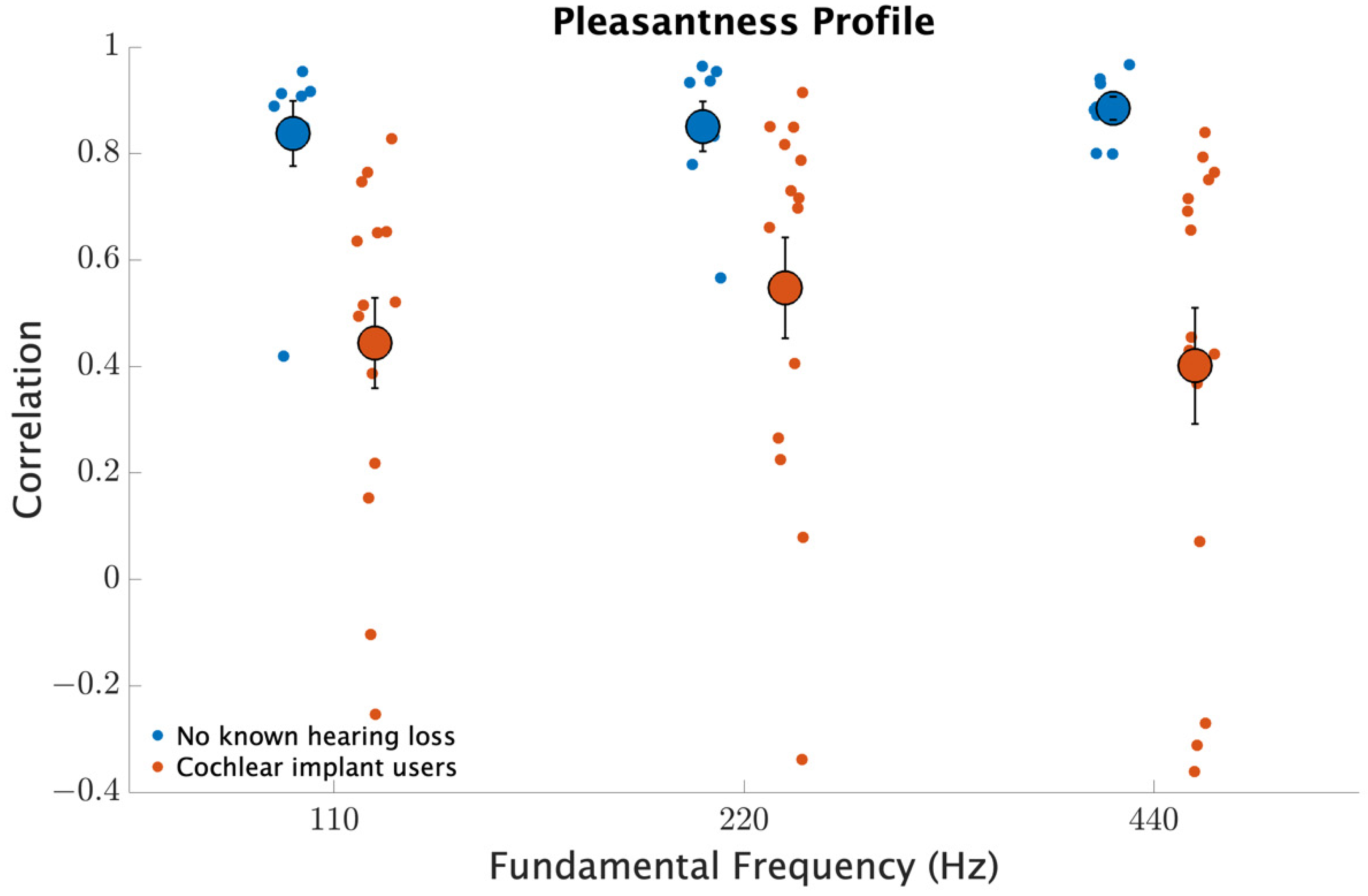

3.5. Pleasantness Ratings

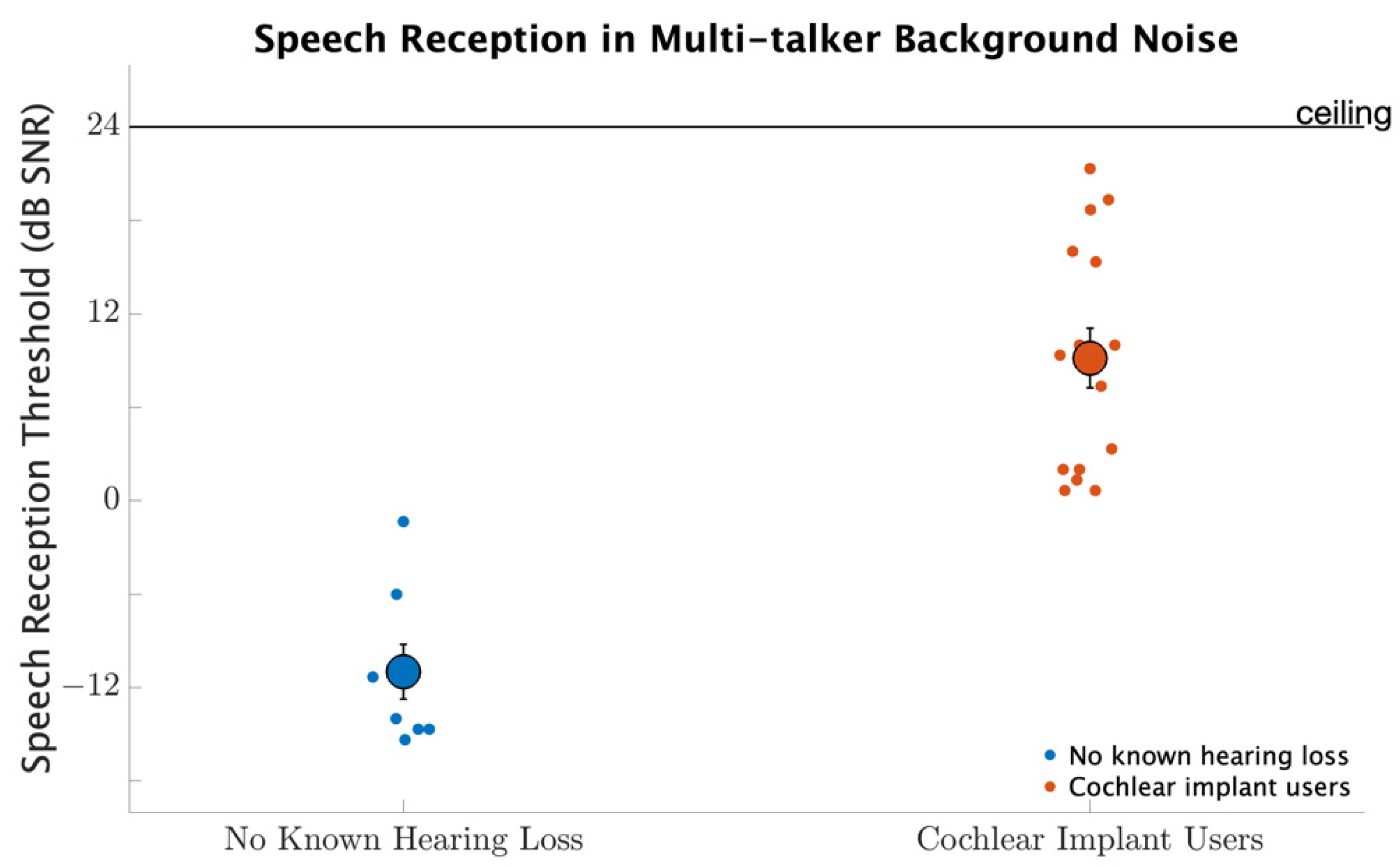

3.6. Speech Reception in Multi-Talker Background Noise

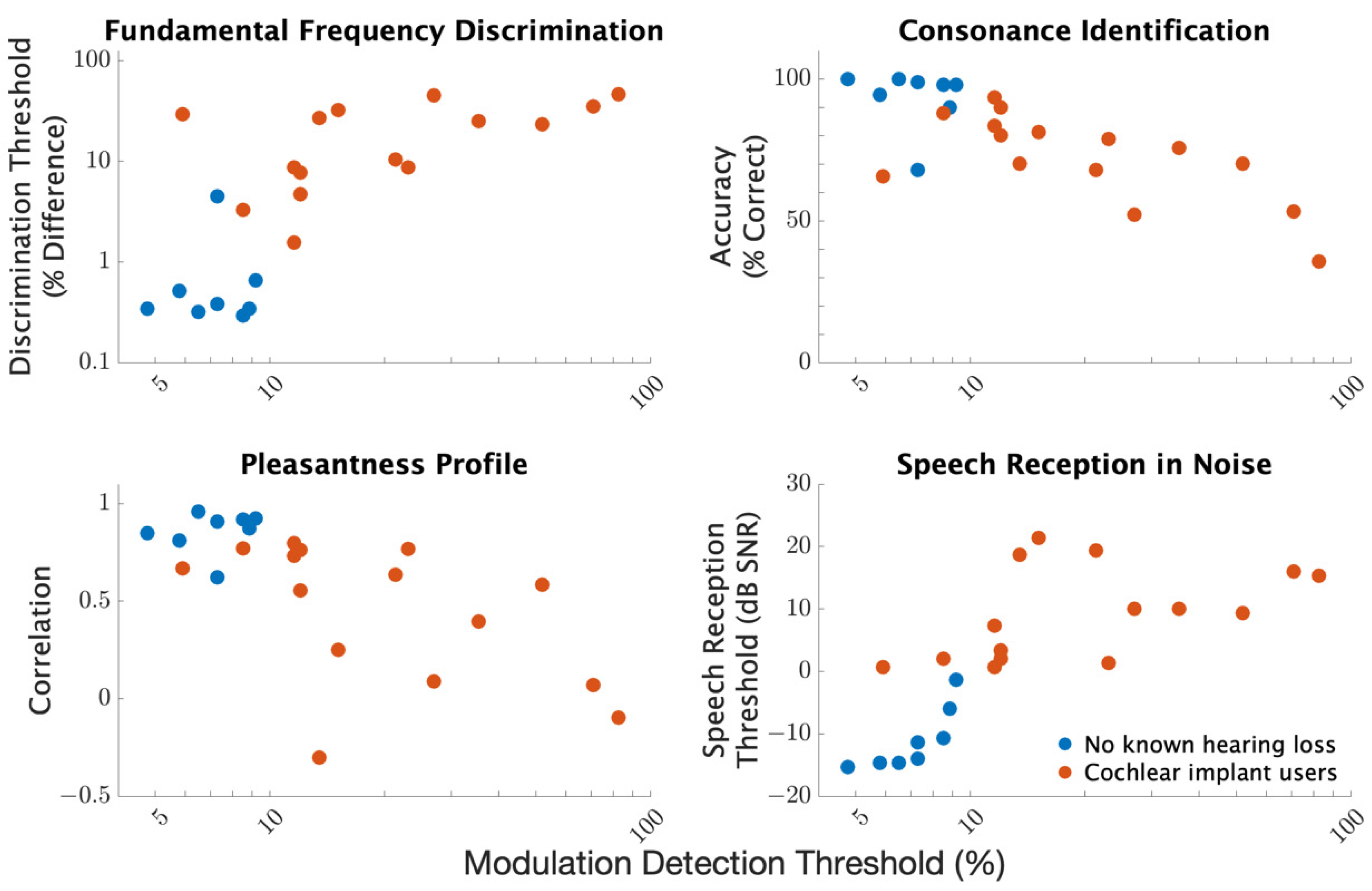

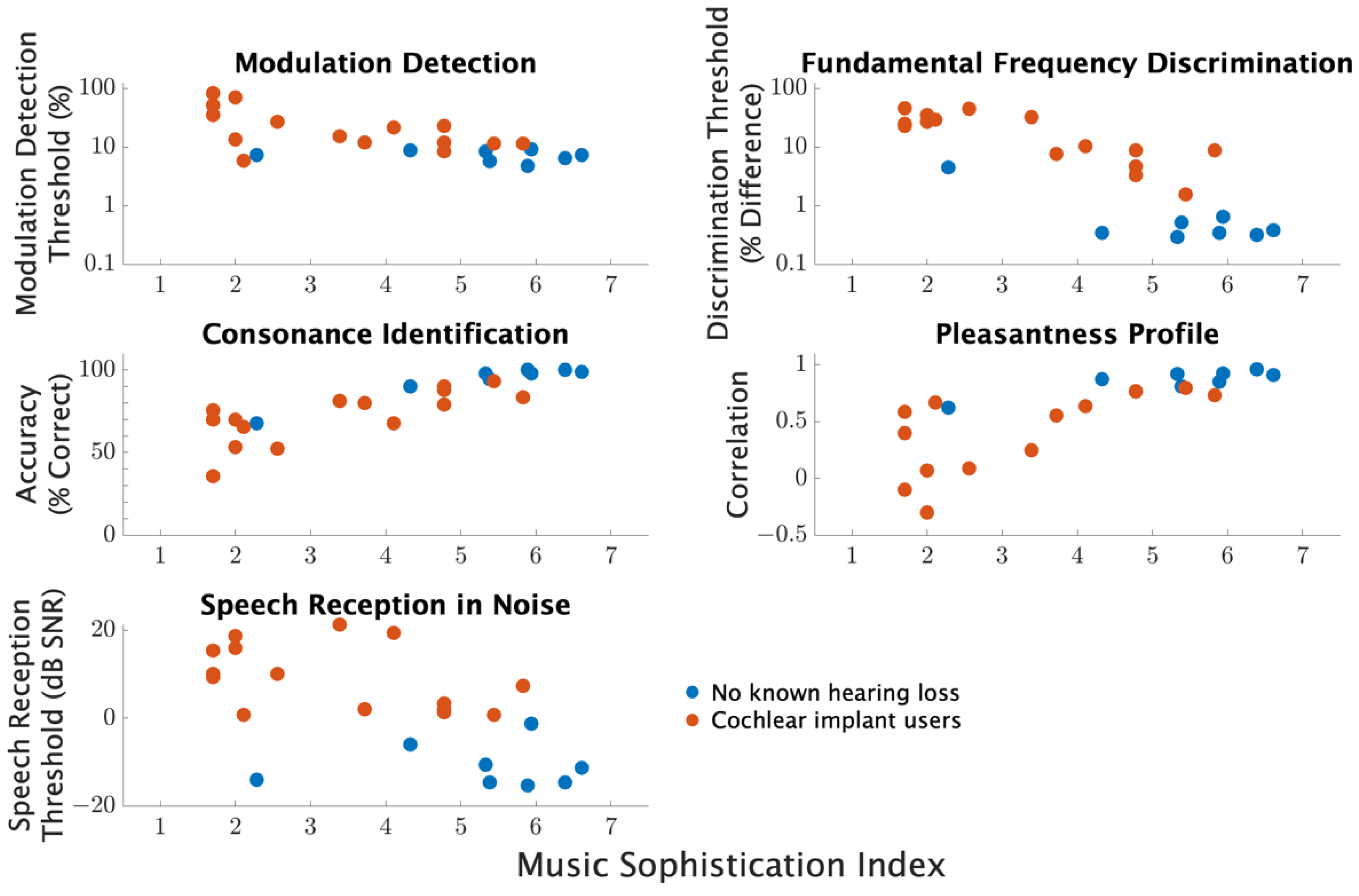

3.7. Correlation Analyses

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| ID | Age | Gender | Etiology | Ear Tested | Age at Onset | Years Implanted | CI Company & Processor | Implant Model | Duration of Deafness | Method of Streaming |
|---|---|---|---|---|---|---|---|---|---|---|
| 2 | 36 | F | Unknown | Left/Right/Both Together | 15 | L:9 R:13 | Cochlear N7s | L:CI24RE (CA) R:CI24RE (CA) | L:5 R:1 | Mini Mic2 |
| 3 | 75 | F | Progressive Nerve Loss | Left/Right/Both Together | 40 | L:21 R:17 | Cochlear N6s | L:CI24R (CS) R:CI24RE (CA) | L:1 R:5 | Cochlear Binaural Cable |
| 5 | 83 | M | Noise Induced | Right | 40 | 13 | Cochlear N6 | CI24RE (CA) | 20 | Juster Multimedia Speaker SP-689 |
| 13 | 59 | M | Mumps Disease | Right | 14 | 3 | Med-El Sonnet | Sonata | 42 | I-loop streaming |
| 15 | 57 | M | Ototoxic Medicine | Left | 54 | 1 | Advanced Bionics Naida | HiRes Ultra 3D CI with HiFocus Mid-Scala Electrode | 1 | Bluetooth/Compilot |
| 17 | 74 | F | Unknown | Left/Right/Both Together | Birth | L:20 R:15 | Cochlear N6s | L:CI24R (CS) R:CI24RE (CA) | L:9 R:9 | Free Field through HP Computer Speakers |
| 18 | 72 | F | Measles In Utero | Right | Birth | L:12 R:10 | Cochlear N6s | L:CI24RE (CA) R:CI512 | L:1 R:1 | Computer Speakers |
| 20 | 66 | F | Unknown | Right | 18 | L:4 R:5 | L:Cochlear N6 R:Cochlear N7 | L:CI522 R:CI522 | L:14 R:16 | Free Field through iPad Speakers |
| 25 | 68 | M | Unknown | Right | 44 | 4 | Cochlear N6 | CI552 | 20 | Mini Mic2 |

| All Participants | F0DT | CID | PR | SRT |
|---|---|---|---|---|
| MDT | 0.72 *** | 0.76 *** | 0.66 *** | 0.73 *** |
| F0DT | | 0.85 *** | 0.79 *** | 0.84 *** |
| CID | | | 0.80 *** | 0.66 *** |
| PR | | | | 0.75 *** |

| No Known Hearing Loss | F0DT | CID | PR | SRT |
|---|---|---|---|---|
| MDT | 0.09 | 0.16 | 0.18 † | 0.81 * |
| F0DT | | 0.92 ** | 0.89 ** | 0.09 † |
| CID | | | 0.91 ** | 0.12 † |
| PR | | | | 0.34 † |

| CI Users | F0DT | CID | PR | SRT |
|---|---|---|---|---|
| MDT | 0.54 * | 0.69 ** | 0.50 | 0.49 |
| F0DT | | 0.80 *** | 0.75 *** | 0.62 * |
| CID | | | 0.70 ** | 0.48 |
| PR | | | | 0.72 ** |

| | | MDT | F0DT | CID | PR | SRT |
|---|---|---|---|---|---|---|
| All Participants | MSI | 0.65 *** | 0.83 *** | 0.85 *** | 0.77 *** | 0.60 ** |
| No Known Hearing Loss | MSI | 0.20 | 0.81 * | 0.96 *** | 0.87 ** | 0.06 † |
| CI Users | MSI | 0.56 * | 0.85 *** | 0.72 ** | 0.70 ** | 0.45 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Camarena, A.; Manchala, G.; Papadopoulos, J.; O’Connell, S.R.; Goldsworthy, R.L. Pleasantness Ratings of Musical Dyads in Cochlear Implant Users. Brain Sci. 2022, 12, 33. https://doi.org/10.3390/brainsci12010033
