Design and Implementation of an EEG-Based Learning-Style Recognition Mechanism

Abstract

1. Introduction

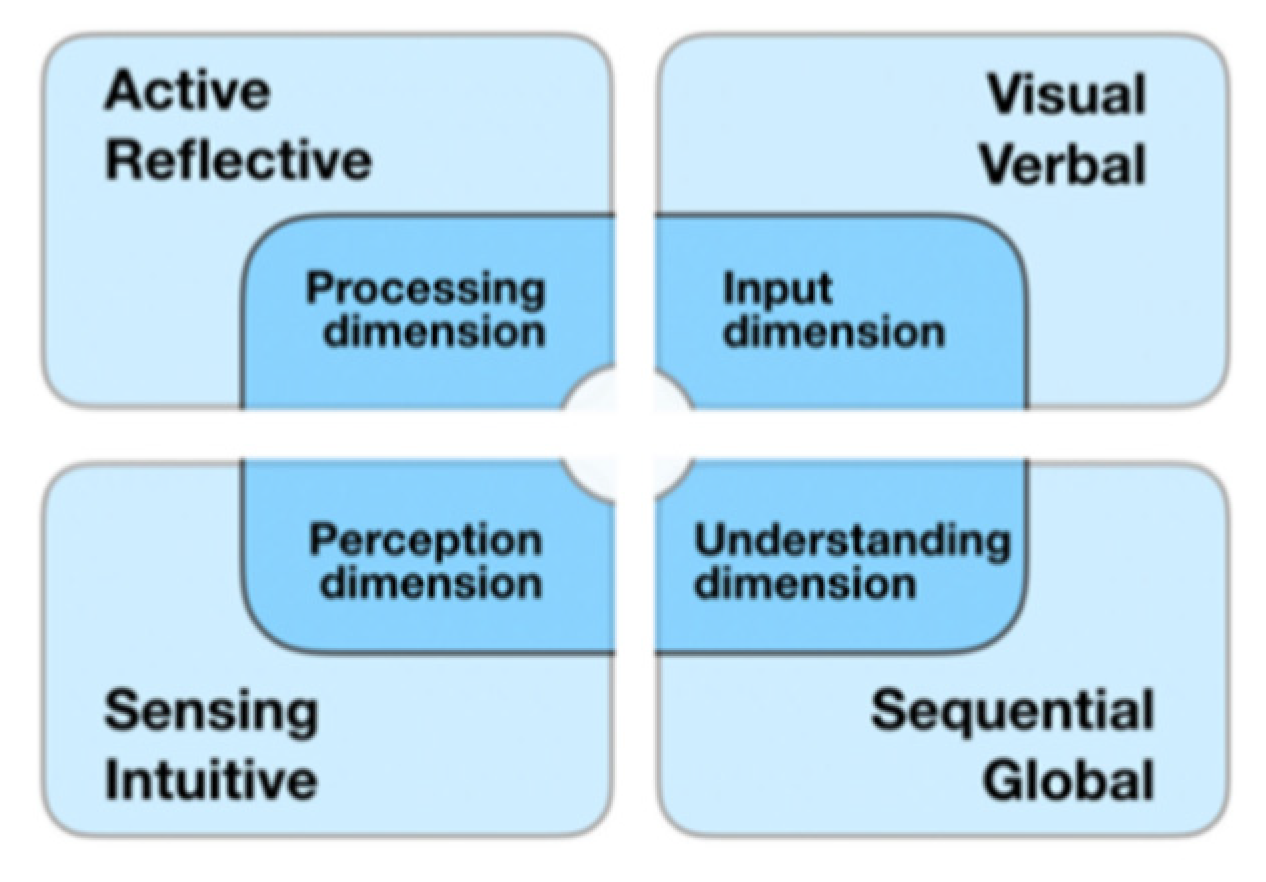

1.1. Overview of Learning Styles

1.2. Current State of Learning-Style Recognition Methods

- (1) Explicit recognition calculates scores from the Index of Learning Styles (ILS) questionnaire [12] to judge subjects’ learning styles [13]. Researchers such as Surjono [14], Hwang [15], and Wang [16] have built learning-style models based on the ILS. Table 1 summarizes the advantages and disadvantages of explicit recognition.

- (2) Implicit recognition mines and analyzes learners’ interactive behavior data from online learning systems (e.g., learning behavior logs and social behavior data) to infer learning styles indirectly, so participants do not need to fill out the ILS. Many researchers have studied the implicit recognition mechanism. Taking the number of clicks on certain buttons, time spent on activities, quiz results, number of forum posts, and other behavior data as inputs, Cha et al. [17] used a decision tree and a hidden Markov model to recognize learning styles. Villaverde et al. [18] used three input sources (which types of learning materials learners prefer, whether learners modified answers before submitting, and whether learners actively participated in forums) and recognized learning styles with artificial neural networks. Subsequent studies that used online interactive behavior for learning-style recognition have employed decision trees [19,20], Bayesian networks [21], neural networks [22,23], genetic algorithms [24], and the J48 algorithm [25]. All of these studies used conventional online learning-behavior features as their data sources; our study instead used EEG signals for learning-style recognition, the advantages of which are discussed below.

| Method | Advantages | Disadvantages |
|---|---|---|
| Explicit recognition | | |
| Implicit recognition | | |
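The explicit scoring step can be illustrated with a minimal sketch for the processing (active/reflective) dimension. It assumes the conventions of the published 44-item ILS scoring sheet, which this text does not spell out: items 1, 5, 9, ..., 41 belong to the processing dimension, answer "a" marks the active pole, answer "b" marks the reflective pole, and the score is the difference between the two counts. The function name and the example respondent are hypothetical.

```python
# Item numbers assumed to belong to the processing dimension of the 44-item ILS.
PROCESSING_ITEMS = range(1, 45, 4)  # 1, 5, 9, ..., 41 (11 items)


def score_processing(answers):
    """Score the processing dimension from a mapping {item number: "a" or "b"}.

    Assumed convention: "a" marks the active pole and "b" the reflective pole.
    """
    chosen = [answers[item] for item in PROCESSING_ITEMS]
    if any(choice not in ("a", "b") for choice in chosen):
        raise ValueError("every processing item must be answered 'a' or 'b'")
    a_count = chosen.count("a")
    b_count = chosen.count("b")
    magnitude = abs(a_count - b_count)  # always odd with 11 items: 1, 3, ..., 11
    label = "active" if a_count > b_count else "reflective"
    # Strength bands as commonly reported for the ILS (an assumption here).
    if magnitude <= 3:
        strength = "balanced"
    elif magnitude <= 7:
        strength = "moderate"
    else:
        strength = "strong"
    return label, magnitude, strength


# Hypothetical respondent: 8 "a" answers and 3 "b" answers on the 11 items.
example = {item: ("a" if i < 8 else "b") for i, item in enumerate(PROCESSING_ITEMS)}
print(score_processing(example))  # ('active', 5, 'moderate')
```

Because the dimension has an odd number of items, the difference can never be zero, so every respondent receives a label on one side of the scale.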

1.3. Applying EEG Signals to Learning-Style Recognition

1.4. Experimental Questions

- (1) How should an experimental method be designed to stimulate internal state differences in the processing dimension of learning styles?

- (2) Can the student’s learning style be recognized based on EEG signals?

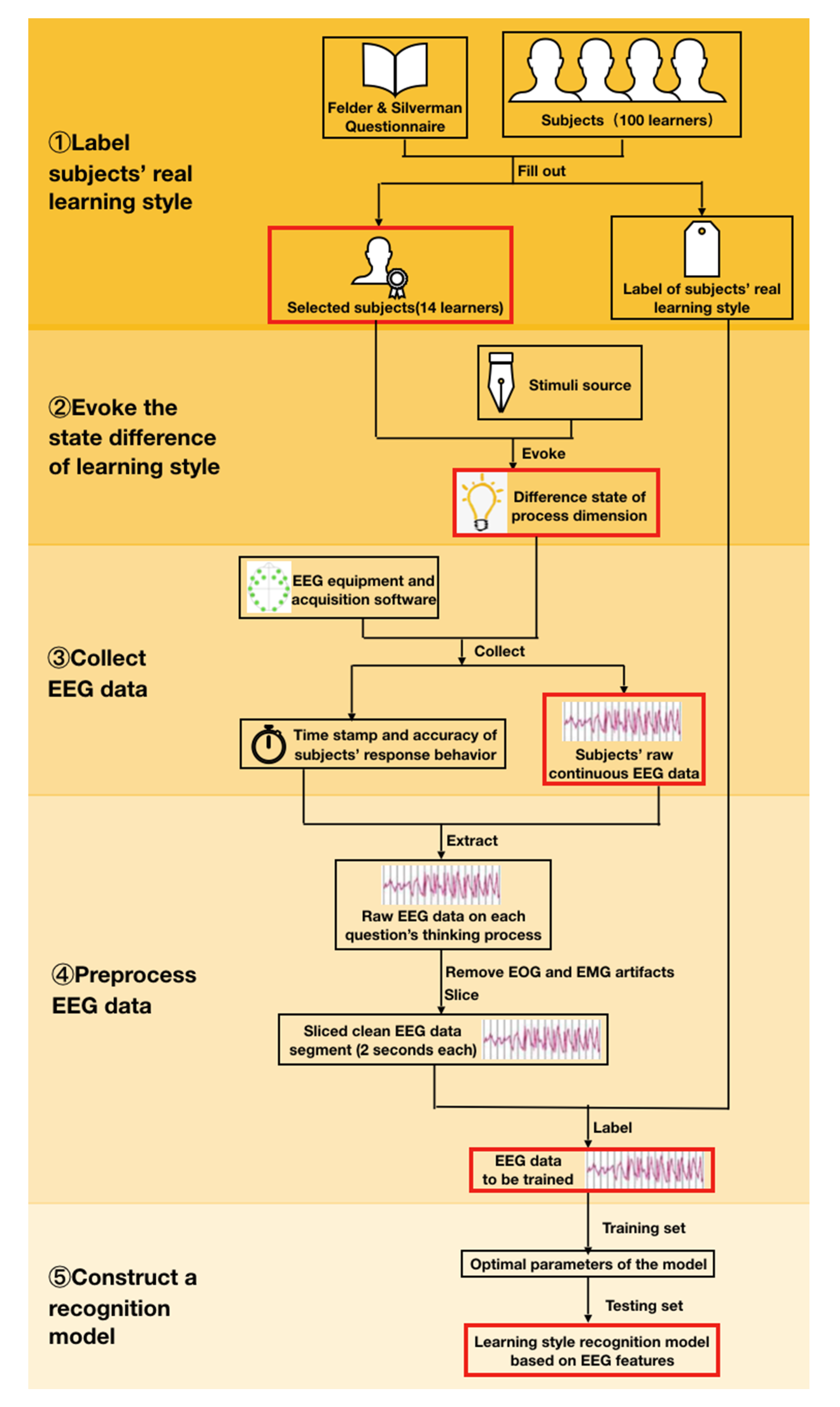

2. Experimental Design

2.1. Labeling Subjects’ Real Learning Styles

2.1.1. Labeling Method

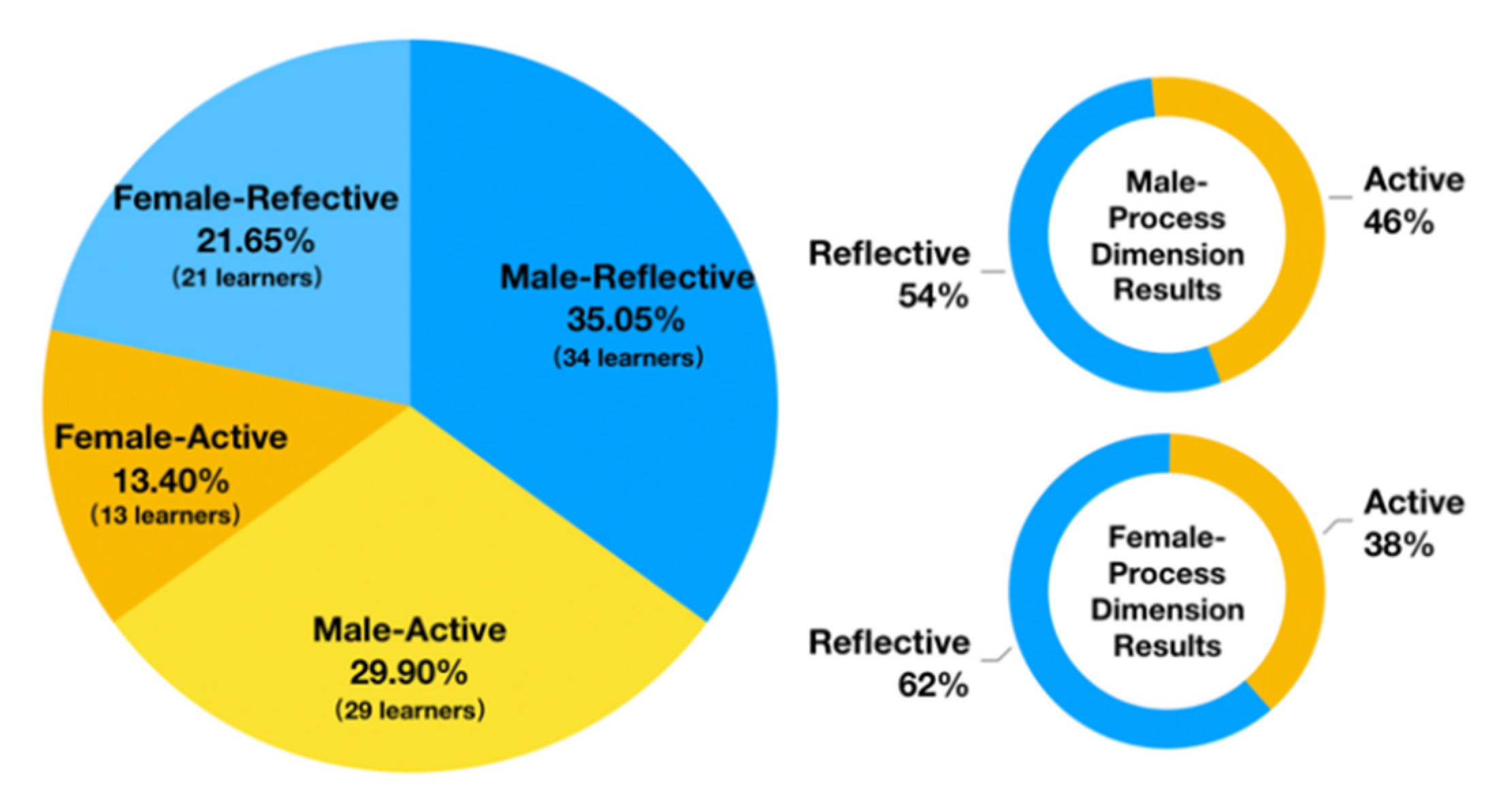

2.1.2. ILS Results

2.1.3. Screening Subjects

2.2. Evoking the State Difference of Learning Style

2.2.1. Principles for Selecting the Stimulus Mode

- (1) How could we effectively stimulate individual differences in the subjects’ learning styles in the processing dimension?

- (2) How could we ensure that the designed stimulus mode would generate as few invalid signals as possible (e.g., from insufficient time for subjects’ information processing or bodily movements that would interfere with the quality of the internal signals)?

2.2.2. Confirming the Stimulus Source

- (1) Raven’s Advanced Progressive Matrices (RAPM) ask subjects to think logically based on the rules associated with the symbols in the matrix diagram and to fill in the vacant positions with the appropriate options. Figure 4 shows the schematic diagram for RAPM test questions. RAPM is often used to assess thinking ability, observational ability, and the ability to use information to solve problems. Using RAPM as a stimulus can prompt subjects to undertake logical thinking that will stimulate brain processing.

- (2) Questions that are too easy shorten cognitive processing, whereas questions that are too difficult cause fatigue and cognitive load; both affect the quality of the signals. The overall difficulty level of the RAPM is moderate, which helps ensure good signal quality. For younger subjects, Raven’s Standard Progressive Matrices (RSPM) can be used instead of the RAPM.

- (1) The RAPM test is largely nontextual. Because subjects do not need to read text (test questions, for example), the amount of noncognitive processing is reduced, which ensures to the greatest extent that the stimulated signals reflect the brain’s thinking processes.

- (2) The RAPM items are presented as multiple-choice questions. Subjects click the corresponding option to respond, which minimizes unnecessary body movement and reduces the influence of movement-related and other extraneous signals on the data.

2.3. Collecting the EEG Data

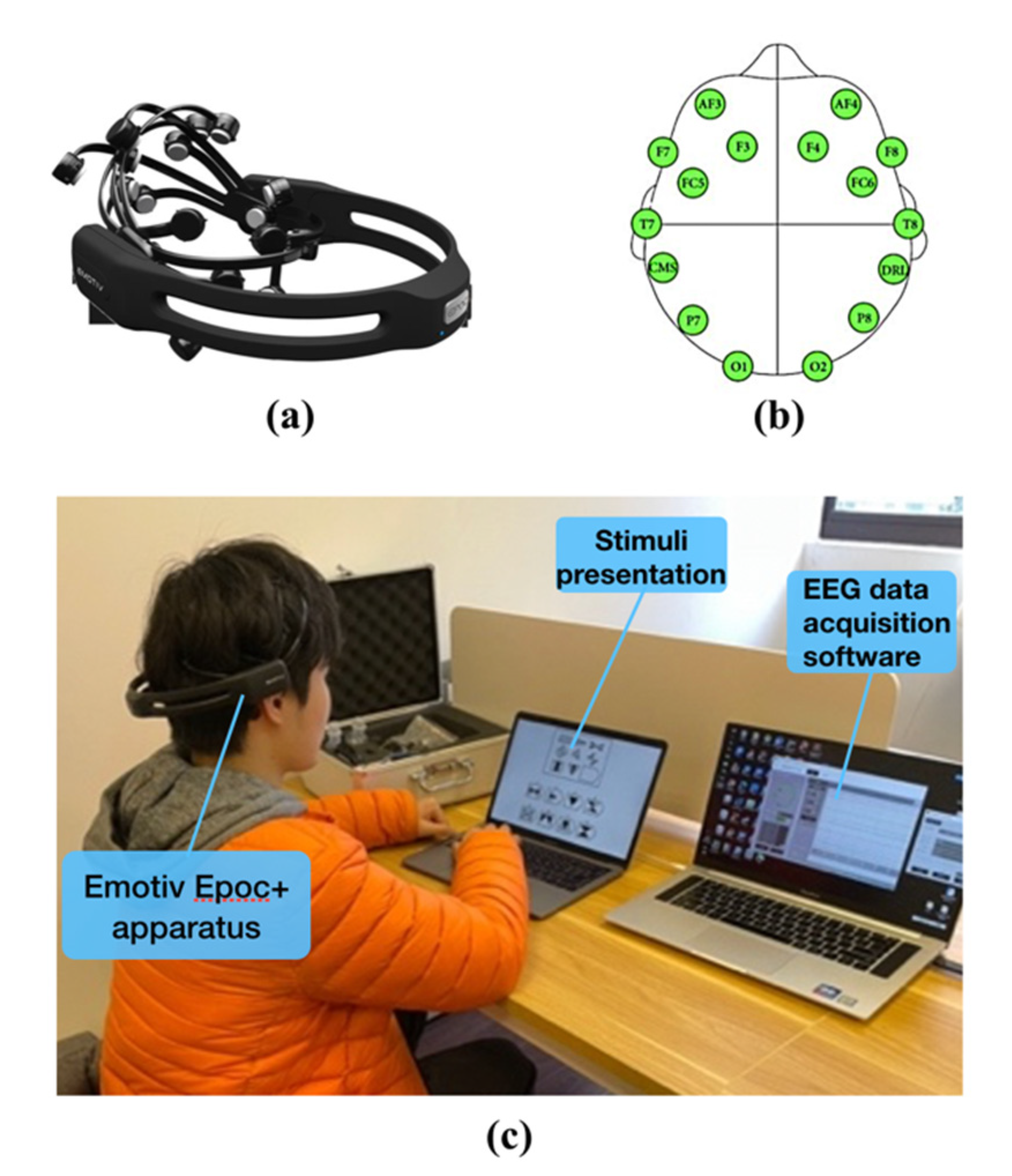

2.3.1. Data-Collection Apparatus

2.3.2. Data-Collection Environment

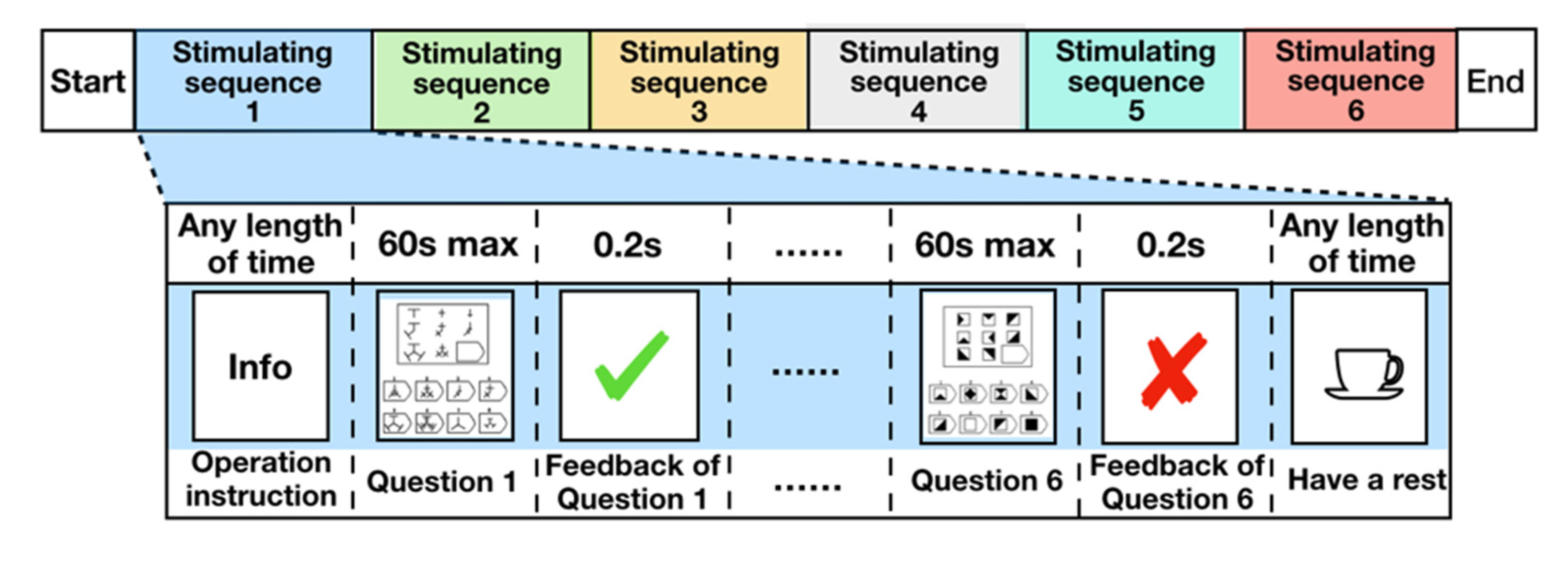

2.3.3. Data-Collection Process

2.4. Preprocessing the EEG Data

2.4.1. Extraction of Effective EEG Data Segments

2.4.2. EEG Filtering and Artifact Removal

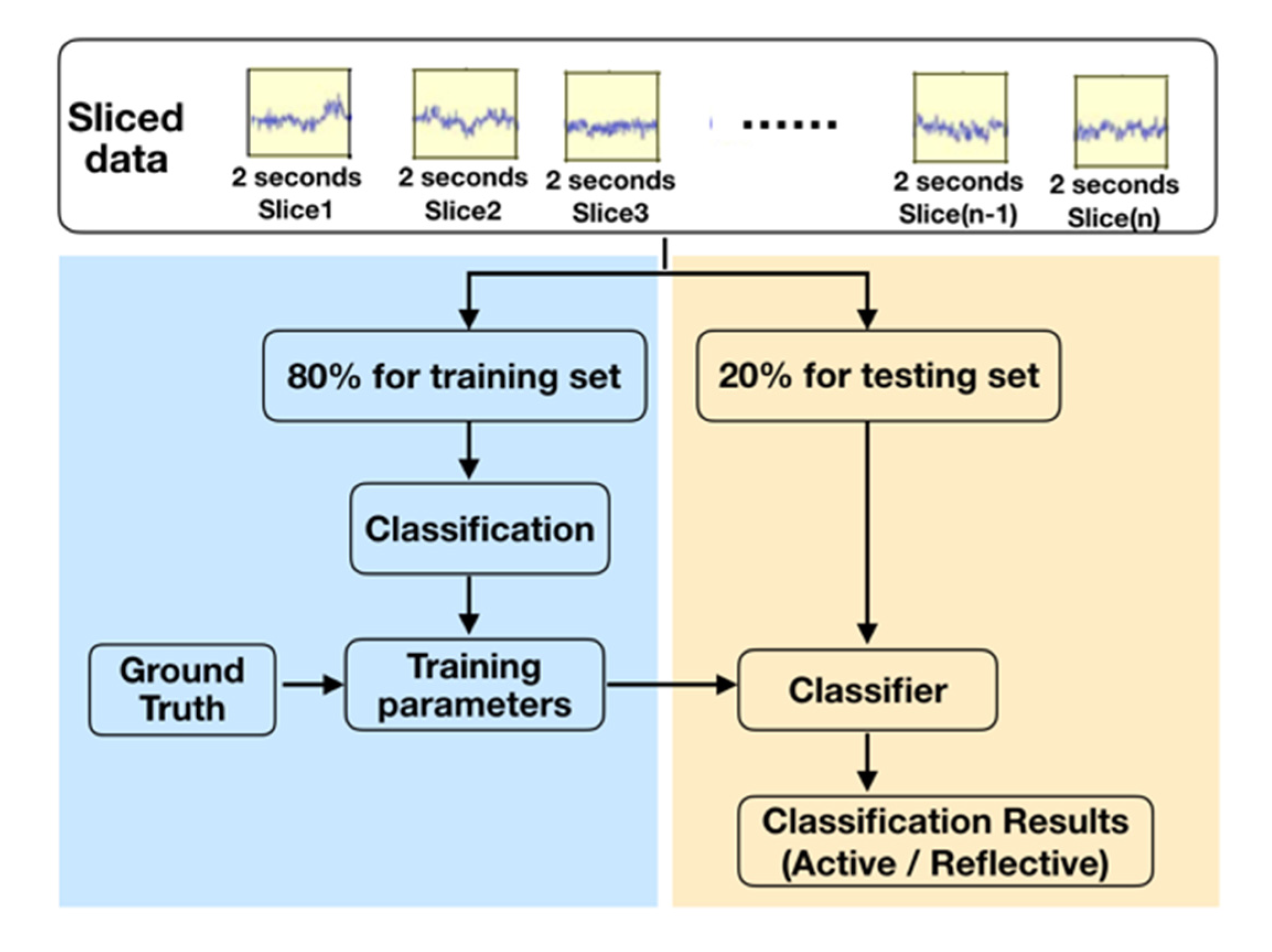

2.4.3. Data Slicing

2.4.4. Labeling EEG Data to Be Trained

2.5. Constructing the Recognition Model

2.5.1. Training Process

2.5.2. Recognition Process

3. Experimental Results and Analysis

3.1. Verifying the Experimental Design

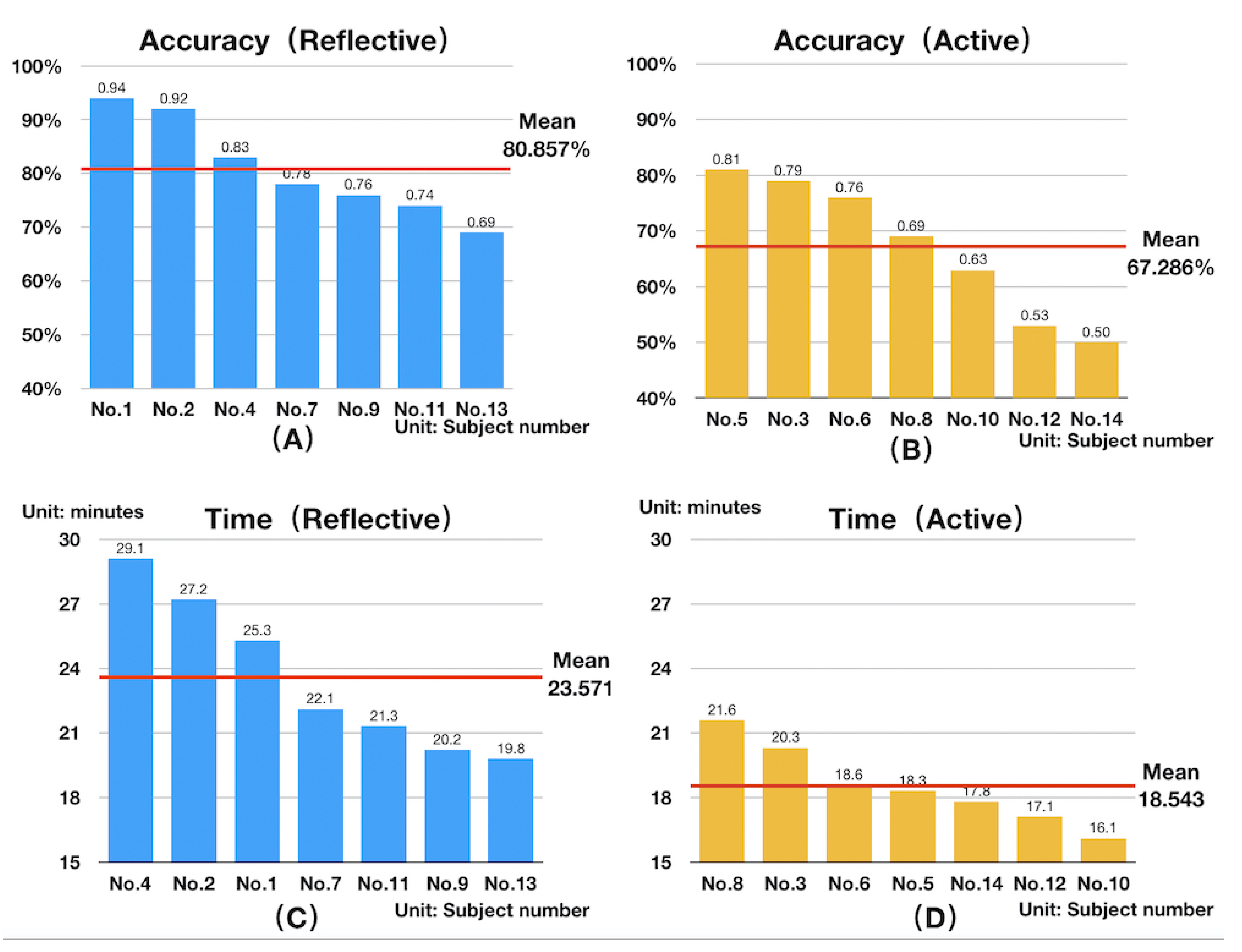

3.1.1. Data Visualization Analysis of Subjects’ Experimental Results

3.1.2. Statistical Analysis

Verify Significant Differences in Answer Results

Verify Significant Differences in Answer Time

Analysis of Statistical Conclusion

3.2. Effectiveness of EEG-Based Learning-Style Recognition

4. Discussion and Conclusions

- (1) We designed and verified an experimental method that effectively stimulated internal state differences between subjects with different learning styles in the processing dimension. Based on Felder–Silverman’s processing-dimension theory, we conducted an experiment to stimulate subjects’ state differences in the processing dimension. The validity of the state differences stimulated by the experiment was then verified through a statistical analysis of the subjects’ behavioral states.

- (2) We confirmed the validity of learning-style recognition based on EEG signals. We designed an appropriate experimental acquisition environment, collected EEG signals related to the subjects’ processing dimension, processed the collected EEG data, and constructed a 1-DCNN model for recognition. The recognition result was 71.2%, showing that an EEG-based learning-style recognition algorithm has promising classification ability. This also confirmed that the 1-DCNN recognition algorithm could improve the accuracy of the EEG-based learning-style recognition model. In addition, we compared the accuracy of the proposed method with that of other mature recognition methods and further verified the effectiveness and potential of EEG-based learning-style recognition.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. De Bello, T.C. Comparison of Eleven Major Learning Styles Models: Variables, Appropriate Populations, Validity of Instrumentation, and the Research Behind Them. J. Read. Writ. Learn. Disabil. Int. 1990, 6, 203–222.
2. Grey, S.; Williams, J.N.; Rebuschat, P. Individual differences in incidental language learning: Phonological working memory, learning styles, and personality. Learn. Individ. Differ. 2015, 38, 44–53.
3. Oxman, S.; Wong, W. White Paper: Adaptive Learning Systems; DeVry Education Group and Integrated Education Solutions: Chicago, IL, USA, 2014.
4. Saba, G.W.; Chou, C.L.; Satterfield, J.; Teherani, A.; Hauer, K.; Poncelet, A.; Chen, H.C. Teaching patient-centered communication skills: A telephone follow-up curriculum for medical students. Med. Educ. Online 2014, 19, 22522.
5. Coffield, F.; Moseley, D.V.; Hall, E.; Ecclestone, K. Should We Be Using Learning Styles? What Research Has to Say to Practice; Learning & Skills Research Centre: London, UK, 2004.
6. Hawk, T.F.; Shah, A.J. Using Learning Style Instruments to Enhance Student Learning. Decis. Sci. J. Innov. Educ. 2007, 5, 1–19.
7. Kolb, D.A. Learning styles and disciplinary differences. In The Modern American College; Chickering, A.W., Ed.; Jossey-Bass: San Francisco, CA, USA, 1981; pp. 232–255.
8. Felder, R.M. Learning and teaching styles in engineering education. Eng. Educ. 1988, 78, 674–681.
9. Fleming, N.D.; Mills, C. Not Another Inventory, Rather a Catalyst for Reflection. Improv. Acad. 1992, 11, 137–143.
10. Ali, O.; Ayşegül, A.; Kadriye, F.B. Determination of Learning Style Based on Gregorc Learning Style Model: Faculty of Engineering. BEST Dergi. 2018, 2, 115–123.
11. Jiang, Q.; Zhao, W.; Du, X. Study on the Users Learning Style Model of Correction under Felder-Silverman Questionnaire. Mod. Distance Educ. 2010, 1, 62–66.
12. Felder-Soloman Index of Learning Style. E. coli. Available online: http://www.engr.ncsu.edu/learningstyles/ilsweb.html (accessed on 16 September 2018).
13. D’Amore, A.; James, S.; Mitchell, E.K. Learning styles of first-year undergraduate nursing and midwifery students: A cross-sectional survey utilising the Kolb Learning Style Inventory. Nurse Educ. Today 2012, 32, 506–515.
14. Surjono, H.D. The evaluation of a moodle based adaptive e-learning system. Int. J. Inf. Educ. Technol. 2014, 4, 89–92.
15. Yang, T.C.; Hwang, G.J.; Yang, J.H.S. Development of an adaptive learning system with multiple perspectives based on students’ learning styles and cognitive styles. J. Educ. Technol. Soc. 2013, 185–200.
16. Wang, Q.F. The Research of Distance Learner Learning Style Analysis System. Ph.D. Thesis, Shandong Normal University, Jinan, China, 2009.
17. Cha, H.J.; Kim, Y.S.; Park, S.H.; Yoon, T.B.; Jung, Y.M.; Lee, J.H. Learning Style Diagnosis Based on User Interface Behavior for the Customization of Learning Interfaces in an Intelligent Tutoring System. In Proceedings of the 8th International Conference on Intelligent Tutoring Systems, Jhongli, Taiwan, 26 June 2006; Springer: Berlin, Germany, 2006.
18. Villaverde, J.E.; Godoy, D.; Amandi, A. Learning styles’ recognition in e-learning environments with feed-forward neural networks. J. Comput. Assist. Learn. 2006, 22, 197–206.
19. Özpolat, E.; Akar, G.B. Automatic detection of learning styles for an e-learning system. Comput. Educ. 2009, 53, 355–367.
20. Crockett, K.; Latham, A.; Whitton, N. On predicting learning styles in conversational intelligent tutoring systems using fuzzy decision trees. Int. J. Hum. Comput. Stud. 2017, 97, 98–115.
21. Garcia, P.; Schiaffino, S.; Amandi, A. An enhanced Bayesian model to detect students’ learning styles in Web-based courses. J. Comput. Assist. Learn. 2008, 24, 305–315.
22. Kolekar, S.V.; Sanjeevi, S.G.; Bormane, D.S. Learning style recognition using Artificial Neural Network for adaptive user interface in e-learning. In Proceedings of the 2010 IEEE International Conference on Computational Intelligence and Computing Research (ICCIC), Coimbatore, India, 31 January 2011.
23. Cabada, R.Z.; Estrada, M.L.B.; Angulo, V.P.; García, A.J.; García, C.A.R. Identification of Felder-Silverman Learning Styles with a Supervised Neural Network. In Proceedings of Advanced Intelligent Computing Theories and Applications with Aspects of Artificial Intelligence (ICIC), Changsha, China, 18 August 2010; Springer: Berlin, Germany, 2010; pp. 479–486.
24. Yannibelli, V.; Godoy, D.; Amandi, A. A genetic algorithm approach to recognise students’ learning styles. Interact. Learn. Environ. 2006, 14, 55–78.
25. Liyanage, M.P.P.; Gunawardena, K.L.; Hirakawa, M. Detecting Learning Styles in Learning Management Systems Using Data Mining. J. Inf. Process. 2016, 24, 740–749.
26. Feldman, J.; Monteserin, A.; Amandi, A. Automatic detection of learning styles: State of the art. Artif. Intell. Rev. 2015, 44, 157–186.
27. Ramirez-Arellano, A.; Bory-Reyes, J.; Hernández-Simón, L.M. Learning Object Assembly Based on Learning Styles; Springer International Publishing: Cham, Switzerland, 2016.
28. Wang, C.-Y.; Guan, M.-H.; Yin, C.-T.; Xiong, Z. Research on Online Learning Style Based on Felder-Silverman Learning Style Model. J. Chongqing Univ. Technol. (Nat. Sci.) 2017, 31, 102–109.
29. Dag, F.; Geçer, A. Relations between online learning and learning styles. Procedia Soc. Behav. Sci. 2009, 1, 862–871.
30. Sheehy, N. Electroencephalography: Basic Principles, Clinical Applications and Related Fields. J. Neurol. Neurosurg. Psychiatry 1983, 47, 654.
31. Garg, D.; Verma, G.K. Emotion Recognition in Valence-Arousal Space from Multi-channel EEG data and Wavelet based Deep Learning Framework. Procedia Comput. Sci. 2020, 171, 857–867.
32. Buvaneswari, B.; Reddy, T.K. A review of EEG based human facial expression recognition systems in cognitive sciences. In Proceedings of the International Conference on Energy, Communication, Data Analytics and Soft Computing, Chennai, India, 1 August 2017.
33. Katona, J.; Kovari, A. The Evaluation of BCI and PEBL-based Attention Tests. Acta Polytech. Hung. 2018, 15, 225–249.
34. Zarjam, P.; Epps, J.; Lovell, N.H. Beyond Subjective Self-Rating: EEG Signal Classification of Cognitive Workload. IEEE Trans. Auton. Ment. Dev. 2015, 7, 301–310.
35. Magorzata, P.W.; Borys, M.; Tokovarov, M.; Kaczorowska, M. Measuring Cognitive Workload in Arithmetic Tasks Based on Response Time and EEG Features. In Proceedings of the 38th International Conference on Information Systems Architecture and Technology (ISAT), Szklarska Poręba, Poland, 17 September 2017; Springer: Berlin/Heidelberg, Germany.
36. Xu, T.; Zhou, Y.; Hou, Z.; Zhang, W. Decode Brain System: A Dynamic Adaptive Convolutional Quorum Voting Approach for Variable-Length EEG Data. Complexity 2020, 2020, 6929546.
37. Davelaar, E.J.; Barnby, J.M.; Almasi, S.; Eatough, V. Differential Subjective Experiences in Learners and Non-learners in Frontal Alpha Neurofeedback: Piloting a Mixed-Method Approach. Front. Hum. Neurosci. 2018, 12, 402.
38. Liao, C.Y.; Chen, R.C.; Tai, S.K. Emotion stress detection using EEG signal and deep learning technologies. In Proceedings of the IEEE International Conference on Applied System Innovation (ICASI), Chiba, Tokyo, Japan, 13 April 2018.
39. Arns, M.; Etkin, A.; Hegerl, U.; Williams, L.M.; Debattista, C.; Palmer, D.M.; Fitzgerald, P.B.; Harris, A.; Debeuss, R.; Gordon, E. Frontal and rostral anterior cingulate (rACC) theta EEG in depression: Implications for treatment outcome? Eur. Neuropsychopharmacol. 2015, 25, 1190–1200.
40. Fiscon, G.; Weitschek, E.; De Cola, M.C.; Felici, G.; Bertolazzi, P. An integrated approach based on EEG signals processing combined with supervised methods to classify Alzheimer’s disease patients. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3 December 2018.
41. Yin, Z.; Zhang, J. Task-generic mental fatigue recognition based on neurophysiological signals and dynamical deep extreme learning machine. Neurocomputing 2018, 283, 266–281.
42. Li, Y.; Zhou, G.; Graham, D.; Holtzhauer, A. Towards an EEG-based brain-computer interface for online robot control. Multimedia Tools Appl. 2016, 75, 7999–8017.
43. Lim, J.; Quevenco, F.-C.; Kwok, K. EEG alpha activity is associated with individual differences in post-break improvement. NeuroImage 2013, 76, 81–89.
44. Kanimozhi, A.; Raj, V.C. An adaptive e-learning environment centred on learner’s emotional behaviour. In Proceedings of the 2017 International Conference on Algorithms, Methodology, Models and Applications in Emerging Technologies (ICAMMAET), Chennai, India, 16 February 2017; pp. 1–5.
45. Cartocci, G.; Maglione, A.G.; Rossi, D.; Modica, E.; Borghini, G.; Malerba, P.; Piccioni, L.O.; Babiloni, F. Alpha and theta EEG variations as indices of listening effort to be implemented in neurofeedback among cochlear implant users. In Proceedings of the International Workshop on Symbiotic Interaction, Eindhoven, The Netherlands, 18–19 December 2017; Springer: Cham, Switzerland, 2017; pp. 30–41.
46. Foxe, J.J.; Snyder, A.C. The Role of Alpha-Band Brain Oscillations as a Sensory Suppression Mechanism during Selective Attention. Front. Psychol. 2011, 2, 154.
47. Fiscon, G.; Weitschek, E.; Felici, G.; Bramanti, P.; De Cola, M.C. Alzheimer’s disease patients classification through EEG signals processing. In Proceedings of the IEEE CIDM 2014: 2014 IEEE Symposium on Computational Intelligence and Data Mining, Orlando, FL, USA, 9 December 2014; pp. 105–112.
48. Balconi, M.; Lucchiari, C. Consciousness and arousal effects on emotional face processing as revealed by brain oscillations. A gamma band analysis. Int. J. Psychophysiol. 2008, 67, 41–46.
49. Cartocci, G.; Giorgi, A.; Inguscio, B.M.S.; Scorpecci, A.; Giannantonio, S.; De Lucia, A.; Garofalo, S.; Grassia, R.; Leone, C.A.; Longo, P.; et al. Higher Right Hemisphere Gamma Band Lateralization and Suggestion of a Sensitive Period for Vocal Auditory Emotional Stimuli Recognition in Unilateral Cochlear Implant Children: An EEG Study. Front. Neurosci. 2021, 15, 149.
50. Ömer, Ş.; Nilüfer, A.; Mustafa, M.I.; Yüksel, D.A. Diagnosis of learning styles based on active/reflective dimension of felder and silverman’s learning style model in a learning management system. In Proceedings of the 2010 International Conference on Computational Science and Its Applications (ICCSA′10), Fukuoka, Japan, 23 March 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 544–555.
51. McHugh, M.L. The Chi-square test of independence. Biochem. Medica 2013, 23, 143–149.
52. Wang, S.; Gwizdka, J.; Chaovalitwongse, W.A. Using Wireless EEG Signals to Assess Memory Workload in the n-Back Task. IEEE Trans. Hum. Mach. Syst. 2016, 46, 1–12.
53. Anderson, E.W.; Potter, K.C.; Matzen, L.E.; Shepherd, J.F.; Preston, G.A.; Silva, C.T. A User Study of Visualization Effectiveness Using EEG and Cognitive Load. Comput. Graph. Forum 2011, 30, 791–800.
54. Knoll, A.; Wang, Y.; Chen, F.; Xu, J.; Ruiz, N.; Epps, J.; Zarjam, P. Measuring cognitive workload with low-cost electroencephalograph. In Proceedings of INTERACT; Campos, P., Graham, N., Jorge, J., Nunes, N., Palanque, P., Winckler, M., Eds.; Springer: Berlin, Germany, 2011; pp. 568–571.
55. Nadim, N. The Mann-Whitney U: A Test for Assessing Whether Two Independent Samples Come from the Same Distribution. Tutor. Quant. Methods Psychol. 2008, 4, 13–20.
56. Quang, D.P.; Florea, A.M. An approach for detecting learning styles in learning management systems based on learners’ behaviours. Int. Proc. Econ. Dev. Res. 2012.
57. Karagiannis, I.; Satratzemi, M. An adaptive mechanism for Moodle based on automatic detection of learning styles. Educ. Inf. Technol. 2018, 23, 1–27.
58. Liyanage, M.P.P.; Gunawardena, K.S.L.; Hirakawa, M. Using Learning Styles to Enhance Learning Management Systems. Int. J. Adv. ICT Emerg. Reg. (ICTer) 2015, 7.
59. Kappel, P.G.; Graf, S. Adaptivity in Learning Management Systems Focusing on Learning Styles. Ph.D. Thesis, Vienna University of Technology, Vienna, Austria, 2007.
60. Bernard, J.; Chang, T.-W.; Popescu, E.; Graf, S. Learning style Identifier: Improving the precision of learning style identification through computational intelligence algorithms. Expert Syst. Appl. 2017, 75.

| EEG Signal Band | Frequency | Meaning |
|---|---|---|
| Delta | 0.5 Hz to 4 Hz | Deep sleep [44] |
| Theta | 4 Hz to 7 Hz | Drowsiness or meditation [44], working memory and processing [45] |
| Alpha | 8 Hz to 12 Hz | Sensory suppression mechanism during selective attention [46], awakening [44], inhibition of irrelevant stimuli [45] |
| Beta | 13 Hz to 30 Hz | Active thinking and attention, focus on the outside world, and problem solving [47] |
| Gamma | Above 30 Hz | Consciousness [48], cognitive control during detecting emotional expressions [49] |
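As a small illustration of how the band table can be used in code, the sketch below maps a frequency in Hz to a band name. The band edges are copied from the table; the function name `band_of`, the tie-break at the shared 4 Hz edge, and returning `None` for frequencies the table does not cover (for example, between 7 Hz and 8 Hz) are assumptions made for this example only.

```python
# Band edges in Hz, copied from the table; Gamma is open-ended ("above 30 Hz").
BANDS = [
    ("Delta", 0.5, 4.0),
    ("Theta", 4.0, 7.0),
    ("Alpha", 8.0, 12.0),
    ("Beta", 13.0, 30.0),
]


def band_of(freq_hz):
    """Return the band name for a frequency, or None if the table does not cover it."""
    if freq_hz > 30.0:
        return "Gamma"
    # Search from the highest band down so that the shared 4 Hz edge goes to
    # Theta; this tie-break is an assumption, not something the table states.
    for name, low, high in reversed(BANDS):
        if low <= freq_hz <= high:
            return name
    return None


for freq in (2.0, 4.0, 7.5, 10.0, 30.0, 45.0):
    print(freq, band_of(freq))
```

A real preprocessing pipeline would more likely use these edges as pass-band limits for filtering or band-power features, in which case the uncovered gaps would need an explicit decision.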

| Answer Results\Processing Dimension | Reflective Learners | Active Learners |
|---|---|---|
| Correct | 204 | 169 |
| Wrong | 48 | 83 |
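The counts in this table can be checked for an association between learning style and answer correctness with a chi-square test of independence, the kind of test described in [51]. The sketch below is a minimal pure-Python version that assumes a Pearson statistic without continuity correction; the exact test settings used in the study are not stated here, so this illustrates the calculation rather than reproducing the reported analysis. The function name `chi_square_2x2` is hypothetical.

```python
import math

# Observed counts from the table: rows are Correct/Wrong answers,
# columns are Reflective/Active learners.
observed = [[204, 169],
            [48, 83]]


def chi_square_2x2(table):
    """Pearson chi-square statistic (no continuity correction) and p-value, df = 1."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, obs in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (obs - expected) ** 2 / expected
    # For one degree of freedom, the upper-tail probability of the chi-square
    # distribution equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


chi2, p_value = chi_square_2x2(observed)
print(f"chi2 = {chi2:.3f}, p = {p_value:.5f}")
```

Under these assumptions the statistic is about 12.64 with a p-value below 0.001, which is consistent with reflective learners answering correctly more often (204 of 252) than active learners (169 of 252).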

| Method | Data Source | Precision |
|---|---|---|
| Proposed | EEG features | 69.2% |
| Quang and Florea, 2012 [56] | Online interactive behavior log | 72.7% |
| Karagiannis and Satratzemi, 2017 [57] | Online interactive behavior log | 70.0% |
| Liyanage et al., 2014 [58] | Online interactive behavior log | 65.0% |
| Kappel and Graf, 2007 [59] | Online interactive behavior log | 62.5% |
| Ömer et al., 2010 [50] | Online interactive behavior log | 79.6% |
| Bernard et al., 2017 [60] | Online interactive behavior log | 81.9% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, B.; Chai, C.; Yin, Z.; Shi, Y. Design and Implementation of an EEG-Based Learning-Style Recognition Mechanism. Brain Sci. 2021, 11, 613. https://doi.org/10.3390/brainsci11050613