Development of the Mechanisms Underlying Audiovisual Speech Perception Benefit

Abstract

1. Introduction

2. Our Natural Environments Are Noisy and Multimodal

3. Adults Rely on the Multimodal Nature of Speech to Compensate for Noisy Environments

4. A Coherent Account of Audiovisual Speech Perception Development Has Yet to Emerge

5. Mechanisms of Audiovisual Speech Perception Benefit: Temporal and Phonetic

5.1. General Perceptual, Temporal Mechanisms

5.2. Speech-Specific, Phonetic/Lexical Mechanisms

6. Development of the Mechanisms of Audiovisual Speech Perception Benefit

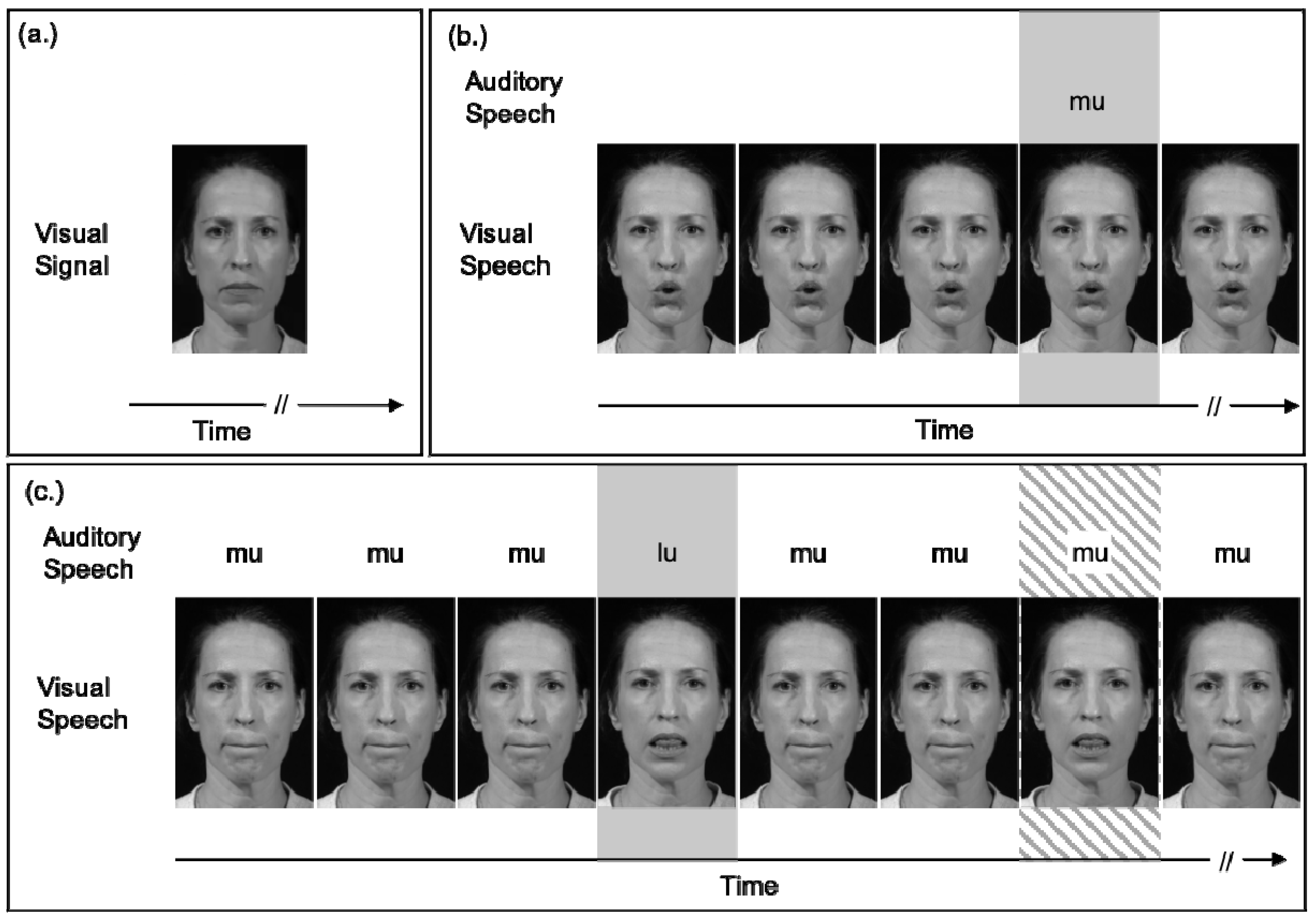

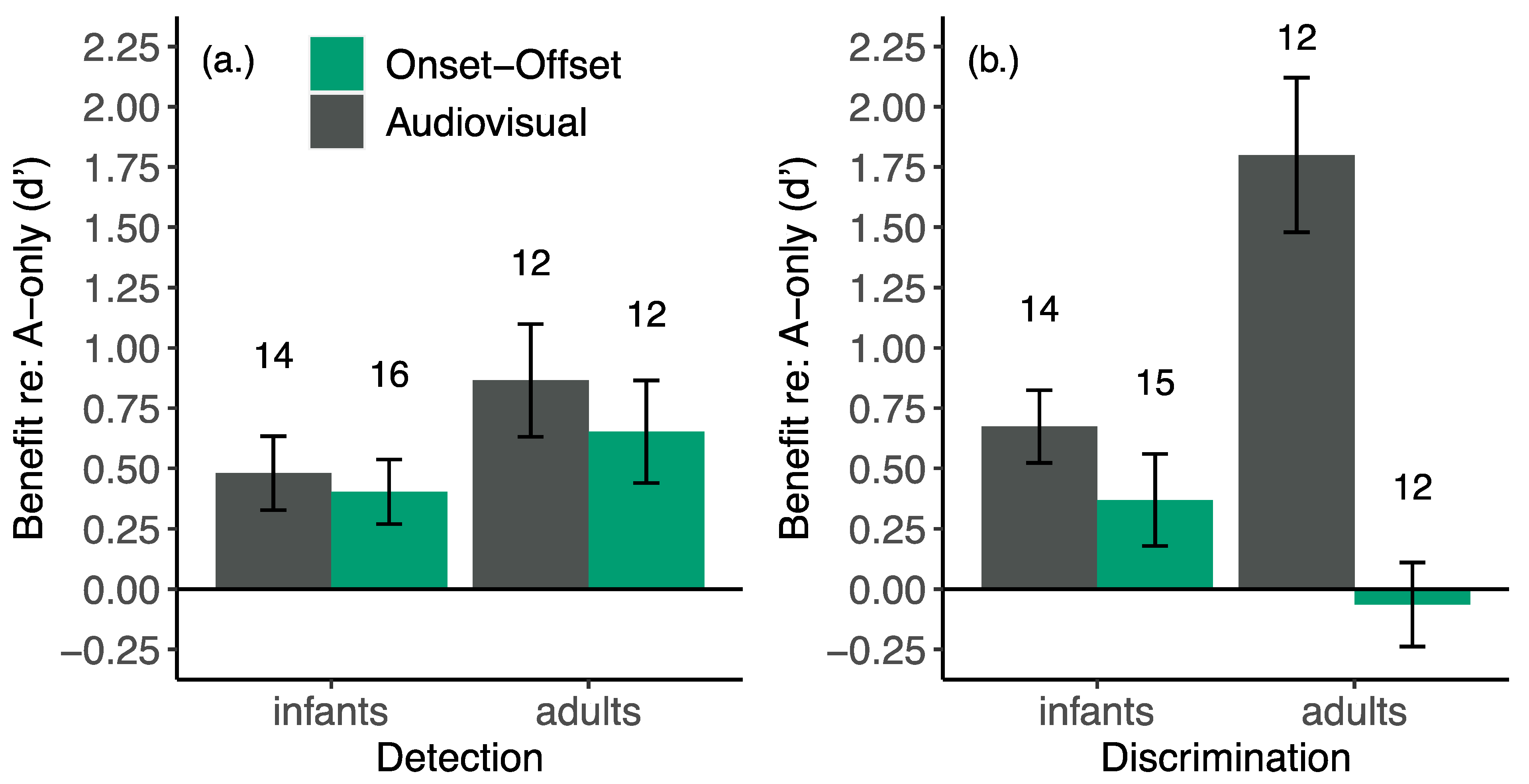

6.1. Distinguishing Use of Temporal and Phonetic Cues in Development

6.1.1. Distinguishing Use of Temporal and Phonetic Cues in Development with Sine Wave Speech

6.1.2. Distinguishing Use of Temporal and Phonetic Cues in Development: Limiting Visual Cues

6.1.3. Distinguishing Use of Temporal and Phonetic Cues in Development: Varying Level of Perceptual Processing Required by a Task

6.1.4. Distinguishing Use of Temporal and Phonetic Cues in Development: Examining Errors for Patterns Consistent with Use of Visual Phonetic Cues

6.1.5. Distinguishing Use of Temporal and Phonetic Cues in Development: Neural Responses

6.2. Development of the Use of Phonetic Cues for Audiovisual Speech Perception Benefit

6.3. Development of the Use of Lexical, Semantic, and Syntactic Cues for Audiovisual Speech Perception Benefit

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lalonde, K.; Werner, L.A. Development of the Mechanisms Underlying Audiovisual Speech Perception Benefit. Brain Sci. 2021, 11, 49. https://doi.org/10.3390/brainsci11010049
