Single-Trial Recognition of Video Gamer’s Expertise from Brain Haemodynamic and Facial Emotion Responses

Abstract

1. Introduction

- : It is not possible to recognise gamer’s expertise level from fNIRS neural responses from the PFC with recognition performance (measured as cross-validated f-scores) better than a 95% confidence interval difference to the results from a baseline (rule 0) classifier.

- : It is possible to recognise gamer’s expertise level from fNIRS neural responses from the PFC with recognition performance better than a 95% confidence interval difference to the results from a baseline (rule 0) classifier.

- : Assert and the adding of facial expression emotions does not bolster the recognition of expertise with statistical difference of .

- : Assert and the adding of facial expression emotions bolsters the recognition of expertise with statistical difference of .

- : Assert and/or and there are no statistical differences in performance () in the recognition from each benchmarked classifier.

- : Assert and/or and there are statistical differences in performance () in the recognition from each benchmarked classifier.

- This work successfully recognizes the expertise level of gamers with high accuracy (91.44%) using brain signals acquired from a portable neuroimaging modality (fNIRS).

- This is also a first study that integrates brain signals with the emotional states of the participants, derived from the visual cues provided by their facial expressions, in the classification paradigm to investigate the interlink between the emotional state of a participant and their expertise level.

- This work also found that unsupervised deep feature extraction for time series boosted recognition performance, especially when applied to fNIRS signals.
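The baseline named in the hypotheses above, a rule 0 (ZeroR) classifier, always predicts the most frequent class seen during training. The following is a minimal sketch, not the authors' code, of how the cross-validated macro F1-score of such a baseline could be computed. The function names, the fold count and the synthetic labels are assumptions made for illustration.

```python
import numpy as np

def macro_f1(y_true, y_pred, classes):
    """Unweighted mean of per-class F1; a class with no hits scores 0."""
    scores = []
    for c in classes:
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return float(np.mean(scores))

def zero_rule_cv_f1(y, n_folds=5, seed=0):
    """Cross-validated macro F1 of a classifier that ignores its inputs."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    classes = np.unique(y)
    scores = []
    for k in range(n_folds):
        test_idx = folds[k]
        train_idx = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        # Rule 0: pick the majority class of the training fold.
        values, counts = np.unique(y[train_idx], return_counts=True)
        majority = values[np.argmax(counts)]
        y_pred = np.full(len(test_idx), majority)
        scores.append(macro_f1(y[test_idx], y_pred, classes))
    return np.array(scores)

# Hypothetical labels: 0 = novice, 1 = intermediate, 2 = expert.
labels = np.array([0] * 30 + [1] * 30 + [2] * 30)
baseline_scores = zero_rule_cv_f1(labels)
print(baseline_scores.mean(), baseline_scores.std())
```

With three classes, this baseline can never exceed a macro F1 of one third, which gives a floor against which the F1 distribution of any trained classifier can be compared.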

2. Background

2.1. Mind-Controlled Games

2.2. On Game Experience and Sensors

2.3. On fNIRS during Video Gaming

3. Related Works

3.1. Game Experience and Cognition

3.2. Expertise with fNIRS

4. Materials and Methods

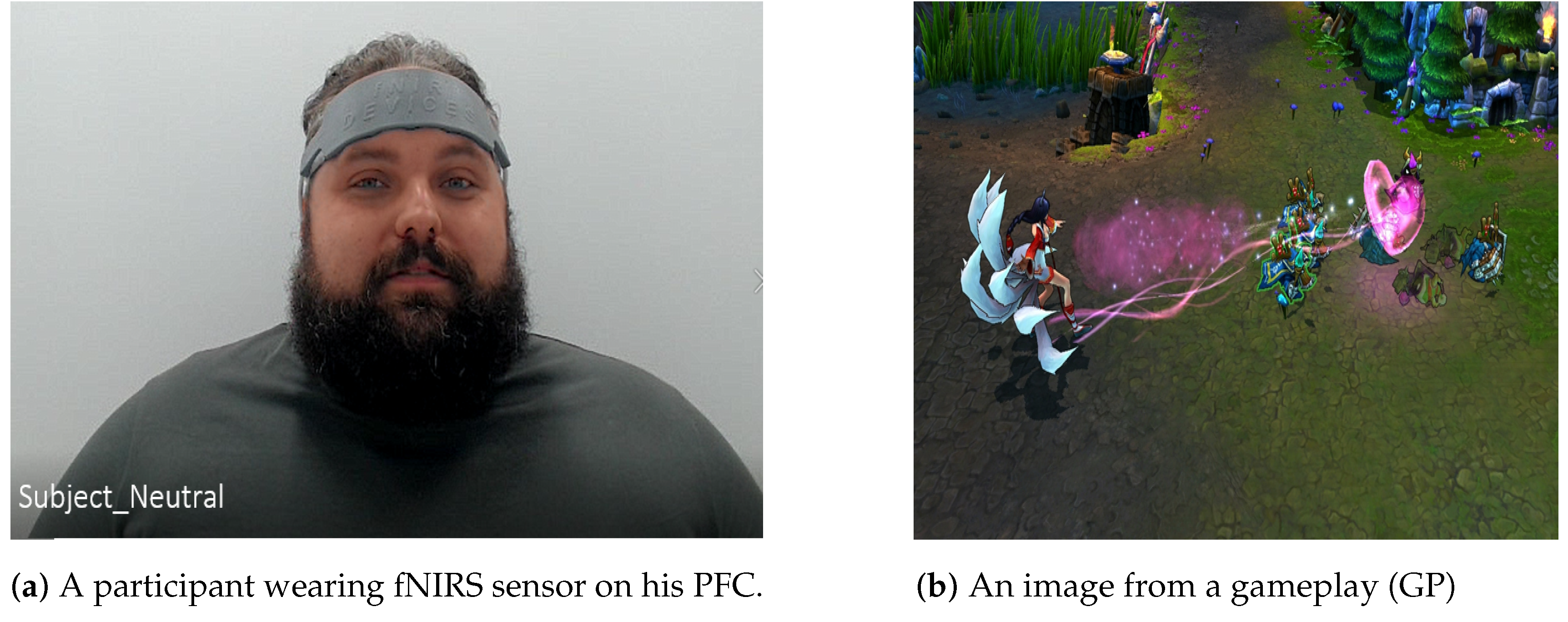

4.1. Experimental Details

4.2. fNIRS Data

- The data from any channel that fails to meet the set pass criterion is eliminated from subsequent data analysis. The most common reasons for failing to meet the pass criterion were significant light leakage, low signal levels, and/or saturated signals.

- The data from the passed channels are low-pass filtered (cut-off frequency 0.14 Hz) and compared with the baseline signal to compute the change in optical density using the modified Beer-Lambert law.

- Outlier data more than 3 standard deviations from the mean are removed before any features are calculated.
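The filtering, optical density and outlier steps above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' pipeline: the 2 Hz sampling rate, the third-order Butterworth filter, the baseline taken as the mean of the first 10 s, and the choice to mask outliers with NaN are all assumptions. Only the 0.14 Hz cut-off and the 3 standard deviation rule come from the text.

```python
import numpy as np
from scipy.signal import butter, filtfilt

def preprocess_channel(intensity, baseline, fs, cutoff_hz=0.14, z_max=3.0):
    """Low-pass filter raw light intensity, convert it to a change in
    optical density, and mask samples beyond z_max standard deviations."""
    # Zero-phase Butterworth low-pass; filter type and order are assumptions.
    b, a = butter(3, cutoff_hz, btype="low", fs=fs)
    filtered = filtfilt(b, a, intensity)
    # Change in optical density relative to the baseline intensity.
    delta_od = -np.log10(filtered / baseline)
    # Mask outliers with NaN so later feature code can skip them.
    z = (delta_od - delta_od.mean()) / delta_od.std()
    return np.where(np.abs(z) > z_max, np.nan, delta_od)

fs = 2.0                                   # assumed sampling rate in Hz
t = np.arange(0, 120, 1 / fs)              # two minutes of synthetic data
raw = 1000 + 20 * np.sin(2 * np.pi * 0.05 * t) + 5 * np.sin(2 * np.pi * 0.8 * t)
baseline = raw[: int(10 * fs)].mean()      # assumed: mean of the first 10 s
delta_od = preprocess_channel(raw, baseline, fs)
print(delta_od.shape)
```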

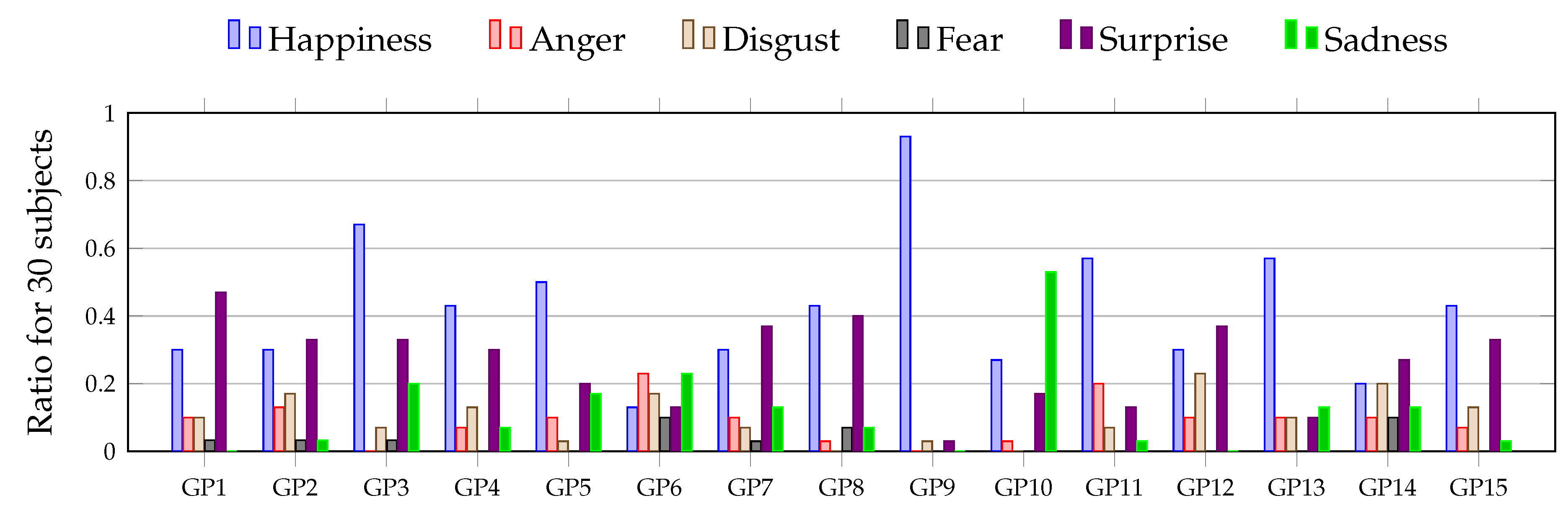

4.3. From Facial Expression’s Data to Emotion Scores

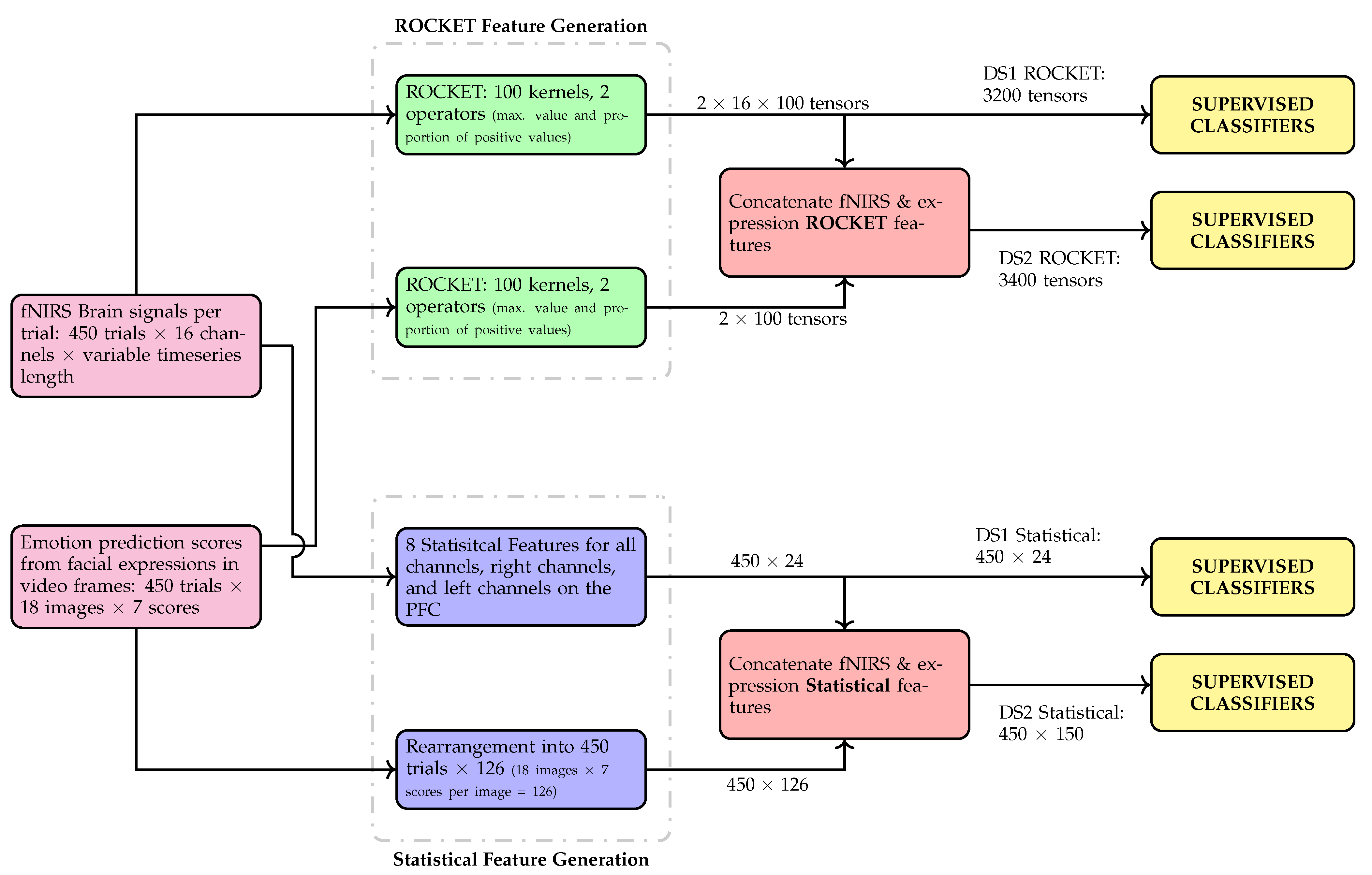

4.4. Classification Paradigms

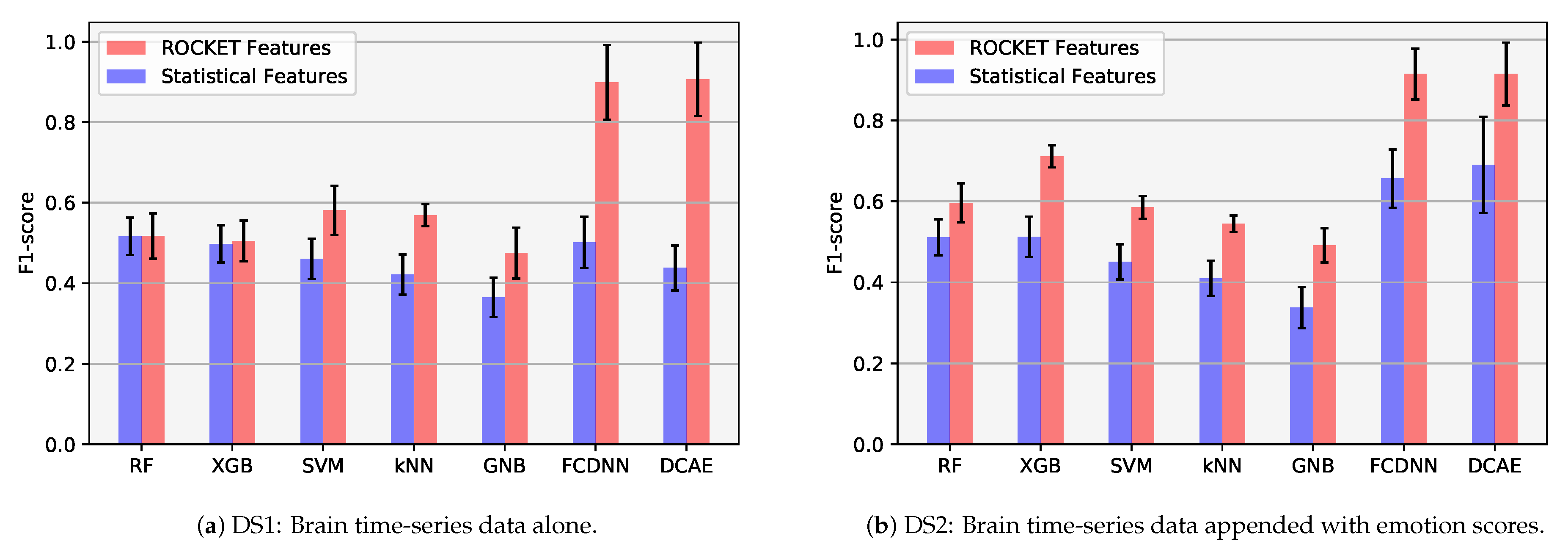

- DS1: Brain time-series data acquired from fNIRS alone.

- DS2: Brain time-series data acquired from fNIRS, appended on a per-trial basis with the emotion scores that VGG19 predicts from the participant’s facial expression data.
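A minimal sketch of how DS2 could be assembled from DS1 by appending per-trial emotion scores is given below. The array layouts (200 samples per trial, 16 fNIRS channels, 7 emotion scores per frame) and the helper name `build_ds2` are hypothetical. They were chosen only so that the resulting widths match the 3200 and 4600 inputs quoted for the DCAE encoder; the actual data layout is not given in this section.

```python
import numpy as np

def build_ds2(fnirs_trials, emotion_trials):
    """Append flattened per-trial emotion scores to flattened fNIRS trials."""
    if len(fnirs_trials) != len(emotion_trials):
        raise ValueError("both modalities need one entry per trial")
    n_trials = len(fnirs_trials)
    ds1 = np.asarray(fnirs_trials).reshape(n_trials, -1)
    emotions = np.asarray(emotion_trials).reshape(n_trials, -1)
    return np.hstack([ds1, emotions])

rng = np.random.default_rng(0)
fnirs = rng.normal(size=(4, 200, 16))    # hypothetical: 4 trials x 200 samples x 16 channels
emotions = rng.random(size=(4, 200, 7))  # hypothetical: 7 emotion scores per frame
ds2 = build_ds2(fnirs, emotions)
print(ds2.shape)  # (4, 4600)
```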

4.4.1. Statistical Feature Learning for Time Series

4.4.2. Unsupervised Deep Feature Learning for Time Series

4.4.3. Supervised Classifiers

- Random Forest (RF): An RF is an ensemble learning method that fits multiple decision tree classifiers on varying sub-samples of the input data set. RF improves predictive accuracy and controls over-fitting by averaging the decisions of these trees [39]. In this work, 30 decision trees are used, each with a maximum depth of 10. We used the implementation in the Scikit-learn [41] library.

- Support Vector Machines (SVM): An SVM is a supervised discriminative classifier defined by a separating hyperplane in a multi-dimensional space [40]. The SVM optimizes the hyperplane by maximizing its distance to the nearest data point of any class. In this work, a non-linear radial basis function (RBF) kernel is used with gamma set to auto. We used the implementation in the Scikit-learn [41] library.

- k-Nearest Neighbors (kNN): kNN relies on the idea that data points from a given class are more similar to each other than to data points from other classes. Similarity can be computed using different metrics such as distance, proximity, or closeness [42]. The number of neighbors used in this work for computing the similarity is 3. We used the implementation in the Scikit-learn [41] library.

- Gaussian Naive Bayes (GNB): GNB is an effective inductive learning algorithm based on applying Bayes’ theorem. The term ‘naive’ refers to the underlying assumption of conditional independence between every pair of features [43]. In this work, the default parameters are used for GNB. We used the implementation in the Scikit-learn [41] library.

- XGradient Boost (XGB): XGB provides parallel tree boosting machine learning algorithms using an optimized distributed gradient boosting library designed to be highly efficient, flexible and portable [44]. In this work, the XGB objective is set to multiclass, with the fraction of columns to be subsampled set to 0.3, learning rate to 0.1, maximum depth to 3, alpha to 5, n_estimators to 15, and num_class to 3. We used the implementation in the Scikit-learn [41] library.

- Fully Connected Deep Neural Network (FCDNN): The FCDNN consists of 5 blocks. Each block consists of:

  - A fully connected (FC) layer.

  - A rectified linear unit (ReLU) activation.

  - Dropout: each FC layer also drops out neurons to ensure that the FCDNN does not over-fit to either of the DSs.

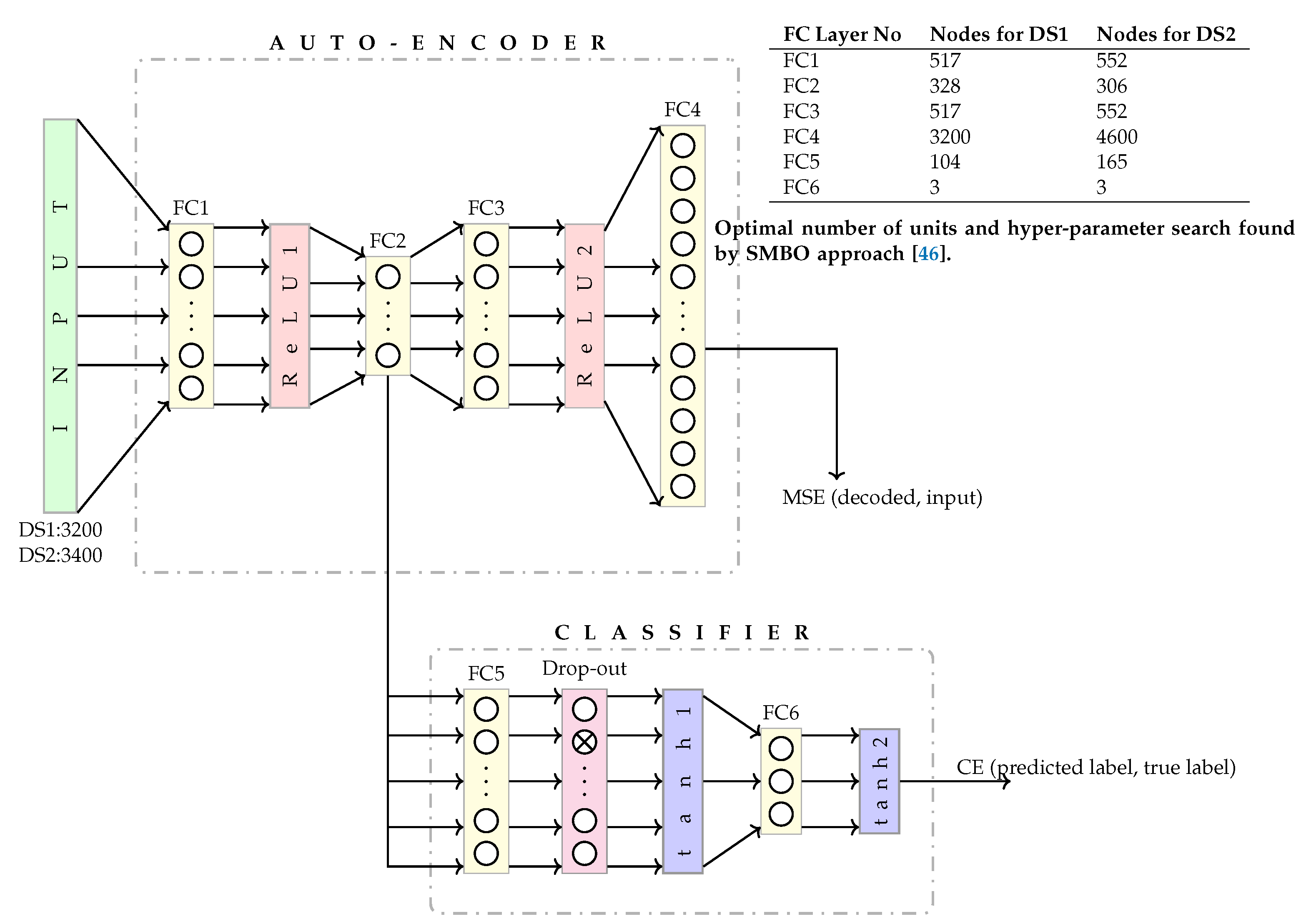
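The four Scikit-learn classifiers described above could be configured with the stated hyperparameters roughly as follows. This is a sketch rather than the authors' script: the synthetic data from `make_classification`, the 5-fold split and the `random_state` values are assumptions, and XGB is left out because it ships in the separate `xgboost` package.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

classifiers = {
    "RF": RandomForestClassifier(n_estimators=30, max_depth=10, random_state=0),
    "SVM": SVC(kernel="rbf", gamma="auto"),
    "kNN": KNeighborsClassifier(n_neighbors=3),
    "GNB": GaussianNB(),  # default parameters, as stated in the text
}

# Synthetic three-class stand-in for the expertise data (assumption).
X, y = make_classification(n_samples=150, n_features=20, n_informative=6,
                           n_classes=3, random_state=0)

# Cross-validated macro F1-score per classifier.
f1_by_model = {
    name: cross_val_score(clf, X, y, cv=5, scoring="f1_macro")
    for name, clf in classifiers.items()
}
for name, scores in f1_by_model.items():
    print(f"{name}: {scores.mean():.3f} +/- {scores.std():.3f}")
```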

- Deep Classifier Auto Encoder (DCAE): The architecture of the DCAE, along with its hyperparameters (i.e., the number of nodes for each layer and the dropout rate) for both DS1 and DS2, is shown in Figure 6. It consists of an encoder, a decoder, and a classifier. This was implemented with custom code in the PyTorch 1.3.1 [45] library.

  - Encoder: The encoder takes 3200 inputs for DS1 and 4600 inputs for DS2. It consists of a fully connected layer (FC1), a Rectified Linear Unit (ReLU1) activation, and FC2.

    - Fully Connected Layer (FC1): A fully connected layer that performs a linear transformation (i.e., a weight matrix and bias) followed by the ReLU1 activation. It has 517 hidden units that encode the 3200 inputs for DS1, and 551 hidden units that encode the 4600 inputs for DS2.

    - Fully Connected Layer (FC2): A fully connected layer that performs a linear transformation with 328 hidden units for DS1, and 306 units for DS2. The output of FC2 is the output of the encoder.

  - Decoder: The decoder takes 328 inputs for DS1 and 306 inputs for DS2. It consists of FC3, a ReLU2 activation, and FC4.

    - Fully Connected Layer (FC3): A fully connected layer that performs a linear transformation followed by the ReLU2 activation. It decodes the 328 encoder outputs into 517 hidden units for DS1, and the 306 encoder outputs into 551 hidden units for DS2.

    - Fully Connected Layer (FC4): A fully connected layer that performs a linear transformation, decoding 517 inputs into 3200 outputs for DS1, and 551 inputs into 4600 outputs for DS2. The output of FC4 is the output of the decoder.

  - Classifier: The input to the classifier is the output of FC2, i.e., 328 inputs for DS1 and 306 inputs for DS2. It consists of FC5, dropout, a hyperbolic tangent activation function (tanh1), FC6 and tanh2 layers, as outlined below:

    - Fully Connected Layer (FC5): A fully connected layer that performs a linear transformation with 328 inputs and 104 outputs for DS1, and 306 inputs and 165 outputs for DS2.

    - Dropout: The FC5 output undergoes 39.22% dropout for DS1, and 49.96% for DS2, followed by the hyperbolic tangent activation function (tanh1).

    - Fully Connected Layer (FC6): A fully connected layer that performs a linear transformation with 104 inputs for DS1, and 165 inputs for DS2, and 3 outputs, followed by the hyperbolic tangent activation function (tanh2).
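The DCAE layer sizes listed above for DS1 can be written as a short PyTorch module. This is a sketch that follows the stated dimensions, not the authors' custom code: the class and argument names are hypothetical, and the joint loss (reconstruction error plus cross-entropy with equal weights) is an assumption, since the training objective is not given in this section.

```python
import torch
from torch import nn

class DCAE(nn.Module):
    """Encoder, decoder and classifier head using the DS1 layer sizes."""

    def __init__(self, n_in=3200, n_hid=517, n_code=328, n_cls_hid=104,
                 n_classes=3, p_drop=0.3922):
        super().__init__()
        self.encoder = nn.Sequential(
            nn.Linear(n_in, n_hid), nn.ReLU(),            # FC1 + ReLU1
            nn.Linear(n_hid, n_code),                     # FC2
        )
        self.decoder = nn.Sequential(
            nn.Linear(n_code, n_hid), nn.ReLU(),          # FC3 + ReLU2
            nn.Linear(n_hid, n_in),                       # FC4
        )
        self.classifier = nn.Sequential(
            nn.Linear(n_code, n_cls_hid),                 # FC5
            nn.Dropout(p_drop), nn.Tanh(),                # dropout + tanh1
            nn.Linear(n_cls_hid, n_classes), nn.Tanh(),   # FC6 + tanh2
        )

    def forward(self, x):
        code = self.encoder(x)
        return self.decoder(code), self.classifier(code)

model = DCAE()
x = torch.randn(8, 3200)                 # 8 synthetic DS1 trials
targets = torch.randint(0, 3, (8,))      # synthetic expertise labels
reconstruction, class_scores = model(x)
# Assumed joint objective: equal-weight reconstruction and classification terms.
loss = (nn.functional.mse_loss(reconstruction, x)
        + nn.functional.cross_entropy(class_scores, targets))
print(reconstruction.shape, class_scores.shape, loss.item())
```

For DS2, the same class could be instantiated with `DCAE(n_in=4600, n_hid=551, n_code=306, n_cls_hid=165, p_drop=0.4996)`, which mirrors the DS2 values in the list above.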

5. Expertise Classification Results

5.1. Hypotheses Testing

5.1.1.

5.1.2.

5.1.3.

6. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wingfield, N. In eSports, video gamers draw real crowds and big money. New York Times, 30 August 2014.

2. Wagner, M.G. On the Scientific Relevance of eSports. In Proceedings of the 2006 International Conference on Internet Computing and Conference on Computer Game Development, ICOMP 2006, Las Vegas, NV, USA, 26–29 June 2006; pp. 437–442.

3. Heimer, R. How Many People Play League of Legends. 2019. Available online: https://www.unrankedsmurfs.com/blog (accessed on 1 April 2020).

4. Jenny, S.E.; Manning, R.D.; Keiper, M.C.; Olrich, T.W. Virtual(ly) Athletes: Where eSports Fit Within the Definition of “Sport”. Quest 2016, 69, 1–18.

5. Montag, C.; Weber, B.; Trautner, P.; Newport, B.; Markett, S.; Walter, N.T.; Felten, A.; Reuter, M. Does Excessive Play of Violent First-Person-Shooter-Video-Games Dampen Brain Activity in Response to Emotional Stimuli? Biol. Psychol. 2012, 89, 107–111.

6. Montag, C.; Flierl, M.; Markett, S.; Walter, N.; Jurkiewicz, M.; Reuter, M. Internet Addiction and Personality in First-Person-Shooter Video Gamers. J. Media Psychol. Theor. Methods Appl. 2011, 23, 163–173.

7. Palaus, M.; Marron, E.M.; Viejo-Sobera, R.; Redolar-Ripoll, D. Neural Basis of Video Gaming: A Systematic Review. Front. Hum. Neurosci. 2017, 11.

8. Bringula, R.; Lugtu, K.; Aviles, A. “How Do You Feel?”: Emotions Exhibited While Playing Computer Games and Their Relationship to Gaming Behaviors. Int. J. Cyber Soc. Educ. 2015, 8, 39–48.

9. Bailey, K.; West, R. The Effects of an Action Video Game on Visual and Affective Information Processing. Brain Res. 2013, 1504, 35–46.

10. Bavelier, D.; Green, C.S.; Han, D.H.; Renshaw, P.F.; Merzenich, M.M.; Gentile, D.A. Brains on video games. Nat. Rev. Neurosci. 2011, 12, 763–768.

11. Takahashi, D. Valve Psychologist Explores Controlling Games Directly with Your Brain. 2019. Available online: https://venturebeat.com (accessed on 1 April 2020).

12. Kotsia, I.; Zafeiriou, S.; Fotopoulos, S. Affective Gaming: A Comprehensive Survey. In Proceedings of the 26th IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 663–670.

13. Coyle, D.; Principe, J.; Lotte, F.; Nijholt, A. Brain/neuronal-Computer game interfaces and interaction. IEEE Trans. Comput. Intell. AI Games 2013, 5, 77–81.

14. Krol, L.R.; Freytag, S.C.; Zander, T.O. Meyendtris: A hands-free, multimodal tetris clone using eye tracking and passive BCI for intuitive neuroadaptive gaming. In Proceedings of the 19th ACM International Conference on Multimodal Interaction, Glasgow, UK, 13–17 November 2017; pp. 433–437.

15. Fairclough, S.H. Fundamentals of physiological computing. Interact. Comput. 2009, 21, 133–145.

16. Dutta, S.; Banerjee, T.; Roy, N.D.; Chowdhury, B.; Biswas, A. Development of a BCI-based gaming application to enhance cognitive control in psychiatric disorders. Innov. Syst. Softw. Eng. 2020.

17. Drachen, A.; Nacke, L.E.; Yannakakis, G.N.; Pedersen, A.L. Correlation between heart rate, electrodermal activity and player experience in first-person shooter games. In Sandbox ’10: Proceedings of the 5th ACM SIGGRAPH Symposium on Video Games; ACM Press: New York, NY, USA, 2010; pp. 49–54.

18. Van den Hoogen, W.; IJsselsteijn, W.A.; de Kort, Y.A. Exploring behavioral expressions of player experience in digital games. In Proceedings of the Workshop on Facial and Bodily Expression for Control and Adaptation of Games ECAG, Amsterdam, The Netherlands, 16 September 2008; pp. 11–19.

19. Gramann, K.; Jung, T.P.; Ferris, D.P.; Lin, C.T.; Makeig, S. Toward a new cognitive neuroscience: Modeling natural brain dynamics. Front. Hum. Neurosci. 2014, 8, 444.

20. Bandara, D.; Velipasalar, S.; Bratt, S.; Hirshfield, L. Building predictive models of emotion with functional near-infrared spectroscopy. Int. J. Hum. Comput. Stud. 2018, 110, 75–85.

21. McKendrick, R.; Parasuraman, R.; Ayaz, H. Wearable functional near infrared spectroscopy (fNIRS) and transcranial direct current stimulation (tDCS): Expanding vistas for neurocognitive augmentation. Front. Syst. Neurosci. 2015, 9, 27.

22. Cakir, M.P.; Çakir, N.A.; Ayaz, H.; Lee, F. An Optical Brain Imaging Study on the Improvements in Mathematical Fluency from Game-based Learning. In Proceedings of the 2015 Annual Symposium on Computer-Human Interaction in Play, London, UK, 5–7 October 2015; pp. 209–219.

23. Bunce, S.C.; Izzetoglu, K.; Ayaz, H.; Shewokis, P.; Izzetoglu, M.; Pourrezaei, K.; Onaral, B. Implementation of fNIRS for Monitoring Levels of Expertise and Mental Workload. In Foundations of Augmented Cognition. Directing the Future of Adaptive Systems; Springer: Berlin/Heidelberg, Germany, 2011; pp. 13–22.

24. Matsuda, G.; Hiraki, K. Sustained decrease in oxygenated hemoglobin during video games in the dorsal prefrontal cortex: A NIRS study of children. Neuroimage 2006, 29, 706–711.

25. Hyun, G.J.; Shin, Y.W.; Kim, B.N.; Cheong, J.H.; Jin, S.N.; Han, D.H. Increased cortical thickness in professional on-line gamers. Psychiatry Investig. 2013, 10, 388–392.

26. Gong, D.; Yao, Y.; Gan, X.; Peng, Y.; Ma, W.; Yao, D. A Reduction in Video Gaming Time Produced a Decrease in Brain Activity. Front. Hum. Neurosci. 2019, 13, 134.

27. Kokkinakis, A.V.; Cowling, P.I.; Drachen, A.; Wade, A.R. Exploring the relationship between video game expertise and fluid intelligence. PLoS ONE 2017, 12, 763–768.

28. Andreu-Perez, J.; Leff, D.R.; Shetty, K.; Darzi, A.; Yang, G.Z. Disparity in Frontal Lobe Connectivity on a Complex Bimanual Motor Task Aids in Classification of Operator Skill Level. Brain Connect. 2016, 6, 375–388.

29. Gupta, A.; Agrawal, R.K.; Kirar, J.S.; Andreu-Perez, J.; Ding, W.-P.; Lin, C.-T.; Prasad, M. On the Utility of Power Spectral Techniques With Feature Selection Techniques for Effective Mental Task Classification in Noninvasive BCI. IEEE Trans. Syst. Man Cybern. Syst. 2019, 99, 1–13.

30. Sweeney, K.T.; Ward, T.E.; McLoone, S.F. Artifact Removal in Physiological Signals—Practices and Possibilities. IEEE Trans. Inf. Technol. Biomed. 2012, 16, 488–500.

31. Kiani, M.; Andreu-Perez, J.; Hagras, H.; Papageorgiou, E.I.; Prasad, M.; Lin, C.T. Effective Brain Connectivity for fNIRS with Fuzzy Cognitive Maps in Neuroergonomics. IEEE Trans. Cogn. Dev. Syst. 2019.

32. Izzetoglu, K.; Ayaz, H.; Hing, J.T.; Shewokis, P.A.; Bunce, S.C.; Oh, P.; Onaral, B. UAV operators workload assessment by optical brain imaging technology (fNIR). In Handbook of Unmanned Aerial Vehicles; Springer: Dordrecht, The Netherlands, 2015; pp. 2475–2500.

33. Miller, E.K.; Cohen, J.D. An Integrative Theory of Prefrontal Cortex Function. Annu. Rev. Neurosci. 2001, 24, 167–202.

34. Hart, A. Mann Whitney test is not just a test of medians: Differences in spread can be important. BMJ 2001, 323, 391–393.

35. Kiani, M.; Andreu-Perez, J.; Hagras, H.; Andreu, A.R.; Pinto, M.; Andreu, J.; Reddy, P.; Izzetoglu, K. Towards Gamers’ Experience Level Decoding with Optical Brain Imaging. In Proceedings of the IEEE 11th Computer Science and Electronic Engineering (CEEC 2019), Colchester, UK, 18–20 September 2019; pp. 47–52.

36. Fei, Y.; Jiao, G. Research on Facial Expression Recognition Based on Voting Model. In Proceedings of the IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2019; Volume 646, p. 012054.

37. Goodfellow, I.J.; Erhan, D.; Carrier, P.L.; Courville, A.; Mirza, M.; Hamner, B.; Cukierski, W.; Tang, Y.; Thaler, D.; Lee, D.H.; et al. Challenges in representation learning: A report on three machine learning contests. Neural Netw. Spec. Issue Deep Learn. Represent. 2015, 64, 59–63.

38. Dempster, A.; Petitjean, F.; Webb, G.I. ROCKET: Exceptionally fast and accurate time series classification using random convolutional kernels. arXiv 2019, arXiv:1910.13051.

39. Ho, T.K. Random decision forests. In Proceedings of the 3rd International Conference on Document Analysis and Recognition, Montreal, QC, Canada, 14–16 August 1995; Volume 1, pp. 278–282.

40. Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

41. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

42. Altman, N.S. An Introduction to Kernel and Nearest-Neighbor Nonparametric Regression. Am. Stat. 1992, 46, 175–185.

43. Rish, I. An empirical study of the naive Bayes classifier. In Proceedings of the IJCAI 2001 Workshop on Empirical Methods in Artificial Intelligence, Seattle, WA, USA, 4 August 2001; Volume 3, pp. 41–46.

44. Chen, T.; Guestrin, C.E. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’16), San Francisco, CA, USA, 13–17 August 2016; Volume 12, pp. 785–794.

45. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32; Wallach, H., Larochelle, H., Beygelzimer, A., Alche-Buc, F., Fox, E., Garnett, R., Eds.; The MIT Press: Cambridge, MA, USA, 2019; pp. 8026–8037.

46. Bergstra, J.S.; Bardenet, R.; Bengio, Y.; Kégl, B. Algorithms for Hyper-Parameter Optimization. In Advances in Neural Information Processing Systems 24; Shawe-Taylor, J., Zemel, R.S., Bartlett, P.L., Pereira, F., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2011; pp. 2546–2554.

47. Kruskal, W.H.; Wallis, W.A. Use of Ranks in One-Criterion Variance Analysis. J. Am. Stat. Assoc. 1952, 47, 583–621.

48. Dinno, A. Nonparametric Pairwise Multiple Comparisons in Independent Groups using Dunn’s Test. Stata J. 2015, 15, 292–300.

49. Ballesteros, S.; Voelcker-Rehage, C.; Bherer, L. Editorial: Cognitive and Brain Plasticity Induced by Physical Exercise, Cognitive Training, Video Games, and Combined Interventions. Front. Hum. Neurosci. 2018, 12, 169.

| Expertise Level | Victory Points | Hours Played Per Week |
|---|---|---|
| Novices (NVs) | 0–400 | |
| Intermediates (ITs) | 200–400 | |
| | 400–1000 | |
| Experts (EXs) | More than 1000 | |

| FC Layer No. | Hyper Parameters | DS1 | DS2 |
|---|---|---|---|
| FC1 | Number of nodes | 244 | 244 |
| | Dropout | 0.333 | 0.231 |
| FC2 | Number of nodes | 313 | 148 |
| | Dropout | 0.344 | 0.433 |
| FC3 | Number of nodes | 160 | 119 |
| | Dropout | 0.453 | 0.265 |
| FC4 | Number of nodes | 149 | 102 |
| | Dropout | 0.346 | 0.391 |
| FC5 | Number of nodes | 65 | 182 |
| | Dropout | 0.395 | 0.691 |
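The FCDNN hyperparameters tabulated above can be turned into a network with a short builder function. The sketch below uses the DS1 column. It is an illustration, not the authors' implementation: the input width of 3200, the final 3-class output layer and the names `build_fcdnn` and `DS1_LAYERS` are assumptions.

```python
import torch
from torch import nn

# (number of nodes, dropout rate) for FC1..FC5, DS1 column of the table.
DS1_LAYERS = [(244, 0.333), (313, 0.344), (160, 0.453), (149, 0.346), (65, 0.395)]

def build_fcdnn(n_inputs, layers, n_classes=3):
    """Stack FC + ReLU + dropout blocks, then a linear output layer."""
    modules, width = [], n_inputs
    for nodes, p_drop in layers:
        modules += [nn.Linear(width, nodes), nn.ReLU(), nn.Dropout(p_drop)]
        width = nodes
    # Output layer mapping to the expertise classes (assumption).
    modules.append(nn.Linear(width, n_classes))
    return nn.Sequential(*modules)

fcdnn = build_fcdnn(n_inputs=3200, layers=DS1_LAYERS)  # input width assumed
scores = fcdnn(torch.randn(4, 3200))
print(scores.shape)
```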

(a) Classification results for DS1: Brain data acquired from fNIRS alone.

| Classifier | Features | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|
| RF | ROCKET | 52.06 ± 5.56 | 53.95 ± 10.92 | 51.77 ± 12.17 | 51.72 ± 5.63 |
| | Statistical | 51.69 ± 4.95 | 52.13 ± 7.74 | 51.39 ± 10.09 | 51.43 ± 4.96 |
| XGB | ROCKET | 50.79 ± 4.99 | 52.39 ± 8.87 | 50.47 ± 10.96 | 50.50 ± 5.10 |
| | Statistical | 50.51 ± 5.05 | 51.86 ± 9.20 | 50.05 ± 10.62 | 50.23 ± 5.10 |
| SVM | ROCKET | 58.23 ± 6.07 | 62.02 ± 14.06 | 57.72 ± 12.91 | 58.11 ± 6.20 |
| | Statistical | 46.60 ± 4.92 | 46.97 ± 8.12 | 45.66 ± 14.34 | 45.69 ± 5.04 |
| kNN | ROCKET | 56.89 ± 3.06 | 59.87 ± 12.11 | 56.62 ± 10.94 | 56.87 ± 2.78 |
| | Statistical | 42.44 ± 4.18 | 43.85 ± 8.12 | 42.11 ± 11.63 | 41.97 ± 4.34 |
| GNB | ROCKET | 47.86 ± 6.86 | 50.45 ± 10.62 | 47.27 ± 12.34 | 47.49 ± 6.38 |
| | Statistical | 41.49 ± 3.70 | 46.29 ± 13.90 | 39.27 ± 30.91 | 35.95 ± 4.29 |
| FCDNN | ROCKET | 89.84 ± 9.31 | 89.93 ± 9.19 | 89.84 ± 9.31 | 89.84 ± 9.28 |
| | Statistical | 51.20 ± 6.07 | 50.40 ± 6.14 | 52.89 ± 7.02 | 50.10 ± 6.38 |
| DCAE | ROCKET | 90.70 ± 7.84 | 90.65 ± 8.65 | 90.70 ± 7.62 | 90.64 ± 9.14 |
| | Statistical | 45.97 ± 4.81 | 44.86 ± 4.96 | 47.95 ± 6.15 | 43.79 ± 5.56 |

(b) Classification results for DS2: Brain data acquired from fNIRS appended with predicted emotion scores on participant’s expression data.

| Classifier | Features | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|
| RF | ROCKET | 59.93 ± 4.64 | 60.90 ± 7.90 | 59.85 ± 10.69 | 59.66 ± 4.80 |
| | Statistical | 51.96 ± 4.83 | 52.61 ± 7.91 | 51.49 ± 11.20 | 51.59 ± 4.97 |
| XGB | ROCKET | 71.55 ± 2.72 | 72.04 ± 5.57 | 72.06 ± 12.12 | 71.12 ± 2.74 |
| | Statistical | 51.82 ± 4.17 | 53.38 ± 8.15 | 51.10 ± 11.86 | 51.36 ± 4.19 |
| SVM | ROCKET | 58.69 ± 2.67 | 61.99 ± 11.79 | 58.62 ± 11.03 | 58.53 ± 2.83 |
| | Statistical | 46.67 ± 4.36 | 48.06 ± 8.94 | 45.57 ± 17.09 | 45.37 ± 4.79 |
| kNN | ROCKET | 54.63 ± 1.93 | 59.76 ± 16.05 | 54.35 ± 13.68 | 54.46 ± 2.06 |
| | Statistical | 41.84 ± 4.56 | 42.55 ± 7.61 | 41.54 ± 12.78 | 41.15 ± 4.56 |
| GNB | ROCKET | 49.21 ± 4.34 | 51.84 ± 10.19 | 48.94 ± 8.93 | 49.16 ± 4.29 |
| | Statistical | 34.07 ± 4.56 | 34.43 ± 8.16 | 33.81 ± 14.57 | 32.80 ± 4.89 |
| FCDNN | ROCKET | 91.44 ± 6.32 | 91.66 ± 6.24 | 91.44 ± 6.32 | 91.48 ± 6.28 |
| | Statistical | 66.00 ± 7.08 | 65.69 ± 7.14 | 66.85 ± 7.13 | 65.65 ± 7.20 |
| DCAE | ROCKET | 91.43 ± 9.97 | 91.47 ± 9.88 | 91.60 ± 12.73 | 91.48 ± 7.78 |
| | Statistical | 69.62 ± 10.80 | 69.25 ± 10.97 | 71.17 ± 11.50 | 69.01 ± 11.86 |

(a) tested on the distribution of F1-scores

| Classifier | Dataset | p-Value | Decision |
|---|---|---|---|
| RF | DS1 | 0.08 | Accepted |
| XGB | DS1 | | Rejected |
| SVM | DS1 | | Rejected |
| kNN | DS1 | | Rejected |
| GNB | DS1 | | Rejected |
| FCDNN | DS1 | | Rejected |
| DCAE | DS1 | | Rejected |

(b) tested on the distribution of F1-scores

| Classifier | p-Value | Decision |
|---|---|---|
| RF | p < 0.05 | Rejected |
| XGB | p < 0.05 | Rejected |
| SVM | p < 0.05 | Rejected |
| kNN | p < 0.05 | Rejected |
| GNB | 0.1494 | Accepted |
| FCDNN | 0.1698 | Accepted |
| DCAE | 0.0812 | Accepted |

(a)

| Classifier | Compared With | p-Value | Lower Bound | Estimate | Upper Bound |
|---|---|---|---|---|---|
| RF | XGB | 1.0000 | −48.95 | 12.34 | 73.63 |
| | SVM | p < 0.05 | −129.55 | −68.26 | −6.97 |
| | kNN | p < 0.05 | −129.15 | −67.86 | −6.57 |
| | GNB | 0.6197 | −20.75 | 40.54 | 101.83 |
| | FCDNN | p < 0.05 | −232.12 | −170.83 | −109.54 |
| | DCAE | p < 0.05 | −237.44 | −176.15 | −114.86 |
| XGB | RF | 1.0000 | −48.95 | 12.34 | 73.63 |
| | SVM | p < 0.05 | −141.89 | −80.60 | −19.31 |
| | kNN | p < 0.05 | −141.45 | −80.20 | −18.91 |
| | GNB | 0.9763 | −33.09 | 28.20 | 89.49 |
| | FCDNN | p < 0.05 | −244.46 | −183.17 | −121.88 |
| | DCAE | p < 0.05 | −249.78 | −188.49 | −127.20 |
| SVM | RF | p < 0.05 | −129.55 | −68.26 | −6.97 |
| | XGB | p < 0.05 | −141.89 | −80.60 | −19.31 |
| | kNN | 1.0000 | −60.89 | 0.40 | 61.69 |
| | GNB | p < 0.05 | 47.51 | 108.80 | 170.09 |
| | FCDNN | p < 0.05 | −163.86 | −102.57 | −41.28 |
| | DCAE | p < 0.05 | −169.18 | −107.89 | −46.60 |
| kNN | RF | p < 0.05 | −129.15 | −67.86 | −6.57 |
| | XGB | p < 0.05 | −141.45 | −80.20 | −18.91 |
| | SVM | 1.0000 | −60.89 | 0.40 | 61.69 |
| | GNB | p < 0.05 | 47.11 | 108.40 | 169.69 |
| | FCDNN | p < 0.05 | −164.26 | −102.97 | −41.68 |
| | DCAE | p < 0.05 | −169.58 | −108.29 | −47.00 |
| GNB | RF | 0.6197 | −20.75 | 40.54 | 101.83 |
| | XGB | 0.9763 | −33.09 | 28.20 | 89.49 |
| | SVM | p < 0.05 | 47.51 | 108.80 | 170.09 |
| | kNN | p < 0.05 | 47.11 | 108.40 | 169.69 |
| | FCDNN | p < 0.05 | −272.66 | −211.37 | −150.08 |
| | DCAE | p < 0.05 | −277.98 | −216.69 | −155.40 |
| FCDNN | RF | p < 0.05 | −232.12 | −170.83 | −109.54 |
| | XGB | p < 0.05 | −244.46 | −183.17 | −121.88 |
| | SVM | p < 0.05 | −163.86 | −102.57 | −41.28 |
| | kNN | p < 0.05 | −164.26 | −102.97 | −41.68 |
| | GNB | p < 0.05 | −272.66 | −211.37 | −150.08 |
| | DCAE | 1.0000 | −66.61 | −5.32 | 55.97 |
| DCAE | RF | p < 0.05 | −237.44 | −176.15 | −114.86 |
| | XGB | p < 0.05 | −249.78 | −188.49 | −127.20 |
| | SVM | p < 0.05 | −169.18 | −107.89 | −46.60 |
| | kNN | p < 0.05 | −169.58 | −108.29 | −47.00 |
| | GNB | p < 0.05 | −277.98 | −216.69 | −155.40 |
| | FCDNN | 1.0000 | −66.61 | −5.32 | 55.97 |

(b)

| Classifier | Compared With | p-Value | Lower Bound | Estimate | Upper Bound |
|---|---|---|---|---|---|
| RF | XGB | p < 0.05 | −156.77 | −95.54 | −34.31 |
| | SVM | 1.0000 | −54.37 | 6.86 | 68.09 |
| | kNN | p < 0.05 | 6.23 | 67.46 | 128.69 |
| | GNB | p < 0.05 | 54.23 | 115.46 | 176.69 |
| | FCDNN | p < 0.05 | −199.34 | −138.11 | −76.88 |
| | DCAE | p < 0.05 | −203.14 | −141.91 | −80.68 |
| XGB | RF | p < 0.05 | −156.77 | −95.54 | −34.31 |
| | SVM | p < 0.05 | 41.17 | 102.40 | 163.63 |
| | kNN | p < 0.05 | 101.77 | 163.00 | 224.23 |
| | GNB | p < 0.05 | 149.77 | 211.00 | 272.23 |
| | FCDNN | 0.5278 | −103.80 | −42.57 | 18.66 |
| | DCAE | 0.3694 | −107.60 | −46.37 | 14.86 |
| SVM | RF | 1.0000 | −54.37 | 6.86 | 68.09 |
| | XGB | p < 0.05 | 41.17 | 102.40 | 163.63 |
| | kNN | 0.0552 | −0.63 | 60.60 | 121.83 |
| | GNB | p < 0.05 | 47.37 | 108.60 | 169.83 |
| | FCDNN | p < 0.05 | −206.20 | −144.97 | −83.74 |
| | DCAE | p < 0.05 | −210.00 | −148.77 | −87.54 |
| kNN | RF | p < 0.05 | 6.23 | 67.46 | 128.69 |
| | XGB | p < 0.05 | 101.77 | 163.00 | 224.23 |
| | SVM | 0.0552 | −0.63 | 60.60 | 121.83 |
| | GNB | 0.3098 | −13.23 | 48.00 | 109.23 |
| | FCDNN | p < 0.05 | −266.80 | −205.57 | −144.34 |
| | DCAE | p < 0.05 | −270.60 | −209.37 | −148.14 |
| GNB | RF | p < 0.05 | 54.23 | 115.46 | 176.69 |
| | XGB | p < 0.05 | 149.77 | 211.00 | 272.23 |
| | SVM | p < 0.05 | 47.37 | 108.60 | 169.83 |
| | kNN | 0.3098 | −13.23 | 48.00 | 109.23 |
| | FCDNN | p < 0.05 | −312.99 | −253.43 | −193.86 |
| | DCAE | p < 0.05 | −318.60 | −257.37 | −196.14 |
| FCDNN | RF | p < 0.05 | −199.34 | −138.11 | −76.88 |
| | XGB | 0.5278 | −103.80 | −42.57 | 18.66 |
| | SVM | p < 0.05 | −206.20 | −144.97 | −83.74 |
| | kNN | p < 0.05 | −266.39 | −206.83 | −147.27 |
| | GNB | p < 0.05 | −312.99 | −253.43 | −193.86 |
| | DCAE | 1.0000 | −65.03 | −3.80 | 57.43 |
| DCAE | RF | p < 0.05 | −203.14 | −141.91 | −80.68 |
| | XGB | 0.3694 | −107.60 | −46.37 | 14.86 |
| | SVM | p < 0.05 | −210.00 | −148.77 | −87.54 |
| | kNN | p < 0.05 | −270.60 | −209.37 | −148.14 |
| | GNB | p < 0.05 | −318.60 | −257.37 | −196.14 |
| | FCDNN | 1.0000 | −65.03 | −3.80 | 57.43 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Andreu-Perez, A.R.; Kiani, M.; Andreu-Perez, J.; Reddy, P.; Andreu-Abela, J.; Pinto, M.; Izzetoglu, K. Single-Trial Recognition of Video Gamer’s Expertise from Brain Haemodynamic and Facial Emotion Responses. Brain Sci. 2021, 11, 106. https://doi.org/10.3390/brainsci11010106
