Cortico-Hippocampal Computational Modeling Using Quantum Neural Networks to Simulate Classical Conditioning Paradigms

Abstract

1. Introduction

2. Materials and Methods

2.1. Qubit
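A qubit is a normalized superposition of the basis states |0⟩ and |1⟩. The following sketch assumes the real-amplitude phase representation that is common in qubit neuron models. The phase symbol θ and the map f are notation introduced here for illustration and may not match the paper's own symbols:

```latex
\begin{aligned}
|\psi\rangle &= \alpha\,|0\rangle + \beta\,|1\rangle, && |\alpha|^2 + |\beta|^2 = 1,\\
\alpha &= \cos\theta,\quad \beta = \sin\theta && \text{(real-amplitude restriction)},\\
f(\theta) &= e^{i\theta} = \cos\theta + i\sin\theta && \text{(real part} \leftrightarrow |0\rangle,\ \text{imaginary part} \leftrightarrow |1\rangle),\\
P(|1\rangle) &= \sin^2\theta = \bigl(\operatorname{Im} f(\theta)\bigr)^2 .
\end{aligned}
```

In this form f(θ₁)f(θ₂) = f(θ₁ + θ₂), so multiplying two phasors adds their phases.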

2.2. Quantum Rotation Gate
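The sketch below assumes the same real-amplitude representation. It models a single-qubit rotation gate as the 2×2 matrix R(Δθ), which shifts the phase: R(Δθ)(cos θ, sin θ)ᵀ = (cos(θ + Δθ), sin(θ + Δθ))ᵀ. In complex shorthand this is multiplication by e^{iΔθ}. The helper names are hypothetical.

```python
import cmath
import math


def rotation_gate(delta):
    """Real 2x2 rotation matrix R(delta) = [[cos, -sin], [sin, cos]]."""
    c, s = math.cos(delta), math.sin(delta)
    return [[c, -s], [s, c]]


def apply_gate(gate, state):
    """Apply a 2x2 gate to a two-amplitude state vector [amp0, amp1]."""
    return [
        gate[0][0] * state[0] + gate[0][1] * state[1],
        gate[1][0] * state[0] + gate[1][1] * state[1],
    ]


def qubit(theta):
    """Real-amplitude qubit cos(theta)|0> + sin(theta)|1>."""
    return [math.cos(theta), math.sin(theta)]


theta, delta = 0.3, 0.5
rotated = apply_gate(rotation_gate(delta), qubit(theta))

# Same result in complex shorthand: multiplying phasors adds the phases.
phasor = cmath.exp(1j * delta) * cmath.exp(1j * theta)

print(rotated)                            # amplitudes of cos(0.8)|0> + sin(0.8)|1>
print(phasor.real, phasor.imag)           # the same two numbers
print(rotated[0] ** 2 + rotated[1] ** 2)  # 1.0 up to rounding: the norm is preserved
```

Because the gate is orthogonal, it changes only the phase and never the total probability. Repeatedly updating θ is therefore a safe way to "train" a qubit parameter.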

2.3. Qubit Neuron Model
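One widely used qubit neuron is the formulation of Kouda, Matsui and colleagues. It forms a complex weighted sum of phasors, subtracts a threshold phasor f(λ), and converts the argument of the result into an output phase using a sigmoid-squashed "reversal" parameter δ. The sketch below assumes that formulation as an illustration only, not as the published definition of the CHCQ neuron. All function and parameter names, and the input encoding x ↦ (π/2)x, are assumptions.

```python
import cmath
import math


def f(phase):
    """Map a phase to its unit phasor: f(phase) = cos(phase) + i*sin(phase)."""
    return cmath.exp(1j * phase)


def sigmoid(x):
    """Logistic function g(x) used to squash the reversal parameter."""
    return 1.0 / (1.0 + math.exp(-x))


def encode_input(x):
    """Map an input value in [0, 1] to a phase in [0, pi/2] (assumed encoding)."""
    return (math.pi / 2) * x


def qubit_neuron(input_phases, weight_phases, threshold_phase, reversal):
    """Output phase of one qubit neuron (Kouda-Matsui style, assumed).

    u = sum_k f(theta_k) * f(y_k) - f(lambda)
    y = (pi / 2) * g(delta) - arg(u)
    """
    # Multiplying phasors adds phases, so each term rotates an input by its weight.
    u = sum(f(w) * f(x) for w, x in zip(weight_phases, input_phases)) - f(threshold_phase)
    return (math.pi / 2) * sigmoid(reversal) - cmath.phase(u)


def probability_of_one(phase):
    """Probability of observing |1>: (Im f(phase))^2 = sin^2(phase)."""
    return f(phase).imag ** 2


# Usage: two binary inputs feeding one neuron with illustrative parameters.
phases = [encode_input(v) for v in (1.0, 0.0)]
y = qubit_neuron(phases, weight_phases=[0.4, -0.2], threshold_phase=0.1, reversal=0.0)
print(y, probability_of_one(y))
```

The trainable quantities here are phases (θ, λ) and the reversal parameter δ rather than real-valued weights. This is one plausible reading of the "quantum parameters" updated in the proposed model.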

2.4. Proposed Model

- The hippocampal region is modeled by an AQNN that uses both the instar and outstar rules to reconstruct its inputs and to form internal representations. These representations are forwarded to the cortical region in the intact model but not in the lesioned one.

- The cortical region is modeled by an ASLFFQNN that uses both the instar rule and the Widrow–Hoff rule to update its weights and quantum parameters.
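The three update rules named above have standard real-valued forms:

- Instar (Grossberg) moves a unit's incoming weights toward the input pattern, gated by the unit's own activity.
- Outstar moves a source unit's outgoing weights toward the pattern at the receiving units.
- Widrow–Hoff (the LMS or delta rule) moves weights along the input in proportion to the output error.

The sketch below assumes these classical forms. The CHCQ model applies such rules to quantum weight and phase parameters, which the sketch does not attempt to reproduce, and the function names are hypothetical.

```python
def instar_update(w, x, y, lr):
    """Instar: dw_i = lr * y * (x_i - w_i); weights into a unit track the input x."""
    return [wi + lr * y * (xi - wi) for wi, xi in zip(w, x)]


def outstar_update(w, x, targets, lr):
    """Outstar: dw_j = lr * x * (t_j - w_j); weights out of a source unit with
    activity x track the pattern t at the receiving units."""
    return [wj + lr * x * (tj - wj) for wj, tj in zip(w, targets)]


def widrow_hoff_update(w, x, target, lr):
    """Widrow-Hoff (LMS): dw_i = lr * (t - y) * x_i for a linear output y = w . x."""
    y = sum(wi * xi for wi, xi in zip(w, x))
    error = target - y
    return [wi + lr * error * xi for wi, xi in zip(w, x)]


# Instar: with full post-synaptic activity and lr = 1, the weights copy the input.
print(instar_update([0.0, 0.0], x=[1.0, 0.5], y=1.0, lr=1.0))  # [1.0, 0.5]

# Outstar: half-way step toward the target pattern.
out = outstar_update([0.0, 0.0], x=1.0, targets=[0.2, 0.8], lr=0.5)

# Widrow-Hoff: repeated presentations drive the linear output toward the target.
w = [0.0, 0.0, 0.0]
for _ in range(200):
    w = widrow_hoff_update(w, x=[1.0, 0.0, 1.0], target=1.0, lr=0.1)
print(sum(wi * xi for wi, xi in zip(w, [1.0, 0.0, 1.0])))  # close to 1.0
```

The two rules differ in what they need. Instar and outstar are unsupervised and only pull weights toward observed patterns. Widrow–Hoff is error-driven and needs a target, which in a conditioning model is the presence or absence of the US.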

2.4.1. General QNN Architecture

Input Layer (I)

Hidden Layer (H)

Output Layer (O)

2.4.2. Hippocampal Module Network

2.4.3. Cortical Module Network

3. Results

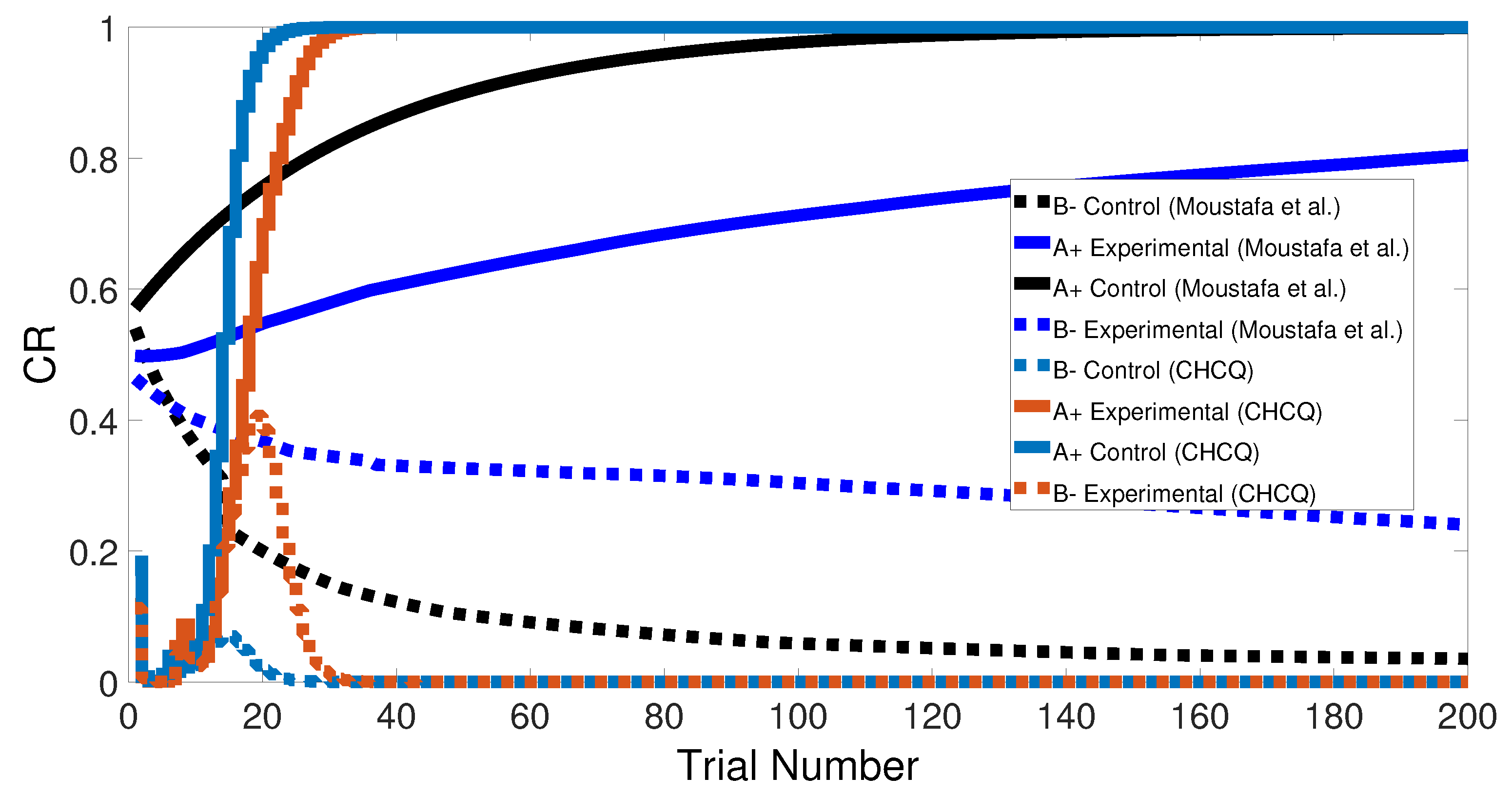

3.1. Primitive Tasks

3.2. Stimulus Discrimination

3.3. Discrimination Reversal

3.4. Blocking

3.5. Overshadowing

3.6. Easy–Hard Transfer

3.7. Latent Inhibition

3.8. Generic Feedforward Multilayer Network

3.9. Sensory Preconditioning

3.10. Compound Preconditioning

3.11. Context Sensitivity

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CHCQ | Cortico-hippocampal computational quantum |
| ANNs | Artificial neural networks |
| CS | Conditioned stimulus |
| US | Unconditioned stimulus |
| CR | Conditioned response |
| QNN | Quantum neural network |
| ASLFFQNN | Adaptive single-layer feedforward quantum neural network |
| AQNN | Autoencoder quantum neural network |
| I | Input layer |
| H | Hidden layer |
| O | Output layer |
| qubit | Quantum bit |


| No. | Task Name | Phase 1 | Phase 2 | Phase 3 |
|---|---|---|---|---|
| 1A | A+ | AX+ | — | — |
| 1B | A− | AX− | — | — |
| 2 | Stimulus discrimination | AX+, BX− | — | — |
| 3 | Discrimination reversal | AX+, BX− | AX−, BX+ | — |
| 4 | Blocking | AX+ | ABX+ | BX− |
| 5 | Overshadowing | ABX+ | AX+; BX+ | — |
| 6 | Easy–Hard transfer | A1X+, A2X− | A3X+, A4X− | — |
| 7 | Latent inhibition | AX− | AX+ | — |
| 8 | Sensory preconditioning | ABX− | AX+ | BX− |
| 9 | Compound preconditioning | ABX− | AX+, BX− | — |
| 10A | Context sensitivity (Context shift) | AX+ | AY+ | — |
| 10B | Context sensitivity of latent inhibition | AX− | AY+ | — |
| 11 | Generic feedforward multilayer network | AX− | AX+ | — |
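The trial codes in the table above use the usual conditioning shorthand:

- Capital letters, optionally indexed as in A1, name the cues present on a trial. X and Y appear to denote contexts (compare the context-shift task, AX+ followed by AY+).
- A trailing + or − marks whether the US is delivered.

The sketch below assumes that each cue is presented to the network as one binary input unit and converts a phase cell into (input vector, US) pairs. The cue vocabulary and function names are hypothetical.

```python
import re

# Hypothetical cue vocabulary: discrete CSs plus the two contexts X and Y.
VOCAB = ["A", "B", "A1", "A2", "A3", "A4", "X", "Y"]
CUE = re.compile(r"[A-Z]\d?")   # a capital letter, optionally followed by one digit
MINUS_SIGNS = ("-", "\u2212")   # ASCII hyphen or the Unicode minus used in the table


def parse_trial(code):
    """Split a trial code such as 'ABX+' or 'A1X−' into (cues, us_present)."""
    code = code.strip()
    body, sign = code[:-1], code[-1:]
    if sign != "+" and sign not in MINUS_SIGNS:
        raise ValueError(f"trial code must end in + or -: {code!r}")
    cues = CUE.findall(body)
    if "".join(cues) != body:
        raise ValueError(f"unrecognised cue in {code!r}")
    return cues, sign == "+"


def parse_phase(cell):
    """Parse a phase cell such as 'AX+, BX−' or 'AX+; BX+' into a list of trials."""
    return [parse_trial(part) for part in re.split(r"[;,]", cell) if part.strip()]


def encode(cues, vocabulary=VOCAB):
    """Binary input vector: 1.0 for each cue present on the trial, else 0.0."""
    return [1.0 if cue in cues else 0.0 for cue in vocabulary]


# Usage: the three phases of the blocking task (row 4).
for phase in ["AX+", "ABX+", "BX−"]:
    for cues, us in parse_phase(phase):
        print(phase, encode(cues), "US" if us else "no US")
```

Empty cells marked "—" are not trial codes. They should be skipped before calling `parse_phase`, which raises `ValueError` on them.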

| No. | Task Name | Phase 1 Intact M1 (a) | Phase 1 Intact CHCQ (b) | Phase 1 Lesioned M1 (c) | Phase 1 Lesioned CHCQ (d) | Phase 1 Improvement (b vs. a) | Phase 1 Improvement (d vs. c) | Phase 2 Intact M1 (e) | Phase 2 Intact CHCQ (f) | Phase 2 Lesioned M1 (g) | Phase 2 Lesioned CHCQ (h) | Phase 2 Improvement (f vs. e) | Phase 2 Improvement (h vs. g) | Phase 3 Intact M1 (i) | Phase 3 Intact CHCQ (j) | Phase 3 Lesioned M1 (k) | Phase 3 Lesioned CHCQ (l) | Phase 3 Improvement (j vs. i) | Phase 3 Improvement (l vs. k) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Stimulus discrimination | >200 | 24 | >200 | 17 | 88.0% | 91.5% | — | — | — | — | — | — | — | — | — | — | — | — |
| 2 | Reversal learning | >200 | 24 | >200 | 17 | 88.0% | 91.5% | >200 | 22 | >400 | 32 | 89.0% | 92.0% | — | — | — | — | — | — |
| 3 | Easy–Hard transfer learning | >200 | 27 | >200 | 19 | 86.5% | 90.5% | >1000 | 34 | >1000 | 20 | 96.6% | 98.0% | — | — | — | — | — | — |
| 4 | Latent inhibition | 50 | 50 | 50 | 50 | 0.0% | 0.0% | >100 | 31 | >100 | 18 | 69.0% | 82.0% | — | — | — | — | — | — |
| 5 | Sensory preconditioning | 200 | 50 | — | — | 75.0% | — | >100 | 31 | — | — | 69.0% | — | 50 | 50 | — | — | 0.0% | — |
| 6 | Compound preconditioning | 20 | 20 | — | — | 0.0% | — | >100 | 32 | — | — | 68.0% | — | — | — | — | — | — | — |
| 7 | Generic feedforward multilayer network | — | — | 100 | 50 | — | 50.0% | — | — | >200 | 21 | — | 89.5% | — | — | — | — | — | — |
| 8 | Contextual sensitivity | >200 | 24 | >200 | 17 | 88.0% | 91.5% | >200 | 1 | >200 | 1 | 99.5% | 99.5% | — | — | — | — | — | — |
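Each Improvement column can be reproduced from the two counts it compares. Working back from the tabulated values, the reported percentages match the relative reduction (a − b)/a × 100, truncated rather than rounded to one decimal place. For example, 38 vs. 32 is reported as 15.7% rather than the rounded 15.8%. Where a comparator entry is given as a bound such as ">200", the bound itself appears to have been used, so those percentages are best read as lower bounds. A sketch under these assumptions (the function name is hypothetical):

```python
import math


def improvement_pct(comparator, chcq):
    """Relative reduction (a - b) / a * 100, truncated toward zero to one decimal.

    comparator: count for the comparator model (a, c, e, ...); use N for '>N'.
    chcq:       count for the CHCQ model (b, d, f, ...).
    Integer arithmetic avoids floating-point artefacts in the truncation step.
    """
    if comparator <= 0:
        raise ValueError("comparator count must be positive")
    diff = comparator - chcq
    tenths = (1000 * abs(diff)) // comparator  # tenths of a percent, truncated
    return math.copysign(tenths / 10, diff)


# Usage: stimulus discrimination, intact, phase 1 (">200" vs. 24).
print(improvement_pct(200, 24))  # 88.0
print(improvement_pct(38, 32))   # 15.7
```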

| No. | Task Name | Phase 1 Intact M2 (a) | Phase 1 Intact CHCQ (b) | Phase 1 Lesioned M2 (c) | Phase 1 Lesioned CHCQ (d) | Phase 1 Improvement (b vs. a) | Phase 1 Improvement (d vs. c) | Phase 2 Intact M2 (e) | Phase 2 Intact CHCQ (f) | Phase 2 Lesioned M2 (g) | Phase 2 Lesioned CHCQ (h) | Phase 2 Improvement (f vs. e) | Phase 2 Improvement (h vs. g) | Phase 3 Intact M2 (i) | Phase 3 Intact CHCQ (j) | Phase 3 Lesioned M2 (k) | Phase 3 Lesioned CHCQ (l) | Phase 3 Improvement (j vs. i) | Phase 3 Improvement (l vs. k) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | A+ | >100 | 23 | >100 | 18 | 77.0% | 82.0% | — | — | — | — | — | — | — | — | — | — | — | — |
| 2 | A− | >100 | 2 | >100 | 2 | 98.0% | 98.0% | — | — | — | — | — | — | — | — | — | — | — | — |
| 3 | Sensory preconditioning | 100 | 50 | — | — | 50.0% | — | >100 | 31 | — | — | 69.0% | — | 50 | 50 | — | — | 0.0% | — |
| 4 | Latent inhibition | 50 | 50 | 50 | 50 | 0.0% | 0.0% | >100 | 31 | >100 | 18 | 69.0% | 82.0% | — | — | — | — | — | — |
| 5 | Context shift | 100 | 50 | — | — | 50.0% | — | 1 | 1 | — | — | 0.0% | — | — | — | — | — | — | — |
| 6 | Context sensitivity of latent inhibition | 100 | 50 | — | — | 50.0% | — | 1 | 1 | — | — | 0.0% | — | — | — | — | — | — | — |
| 7 | Easy–Hard transfer learning | >100 | 17 | — | — | 83.0% | — | >100 | 19 | — | — | 81.0% | — | — | — | — | — | — | — |
| 8 | Blocking | >100 | 23 | >100 | 18 | 77.0% | 82.0% | >100 | 24 | >100 | 17 | 76.0% | 83.0% | >100 | 12 | >100 | 3 | 88.0% | 97.0% |
| 9 | Compound preconditioning | 100 | 20 | — | — | 80.0% | — | >200 | 32 | — | — | 84.0% | — | — | — | — | — | — | — |
| 10 | Overshadowing | 100 | 20 | 100 | 20 | 80.0% | 80.0% | >100 | 25 | >100 | 22 | 75.0% | 78.0% | — | — | — | — | — | — |

| No. | Task Name | Phase 1 Intact G (a) | Phase 1 Intact CHCQ (b) | Phase 1 Lesioned G (c) | Phase 1 Lesioned CHCQ (d) | Phase 1 Improvement (b vs. a) | Phase 1 Improvement (d vs. c) | Phase 2 Intact G (e) | Phase 2 Intact CHCQ (f) | Phase 2 Lesioned G (g) | Phase 2 Lesioned CHCQ (h) | Phase 2 Improvement (f vs. e) | Phase 2 Improvement (h vs. g) | Phase 3 Intact G (i) | Phase 3 Intact CHCQ (j) | Phase 3 Lesioned G (k) | Phase 3 Lesioned CHCQ (l) | Phase 3 Improvement (j vs. i) | Phase 3 Improvement (l vs. k) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1A | A+ | 32 | 23 | 28 | 18 | 28.1% | 35.7% | — | — | — | — | — | — | — | — | — | — | — | — |
| 1B | A− | 2 | 2 | 2 | 2 | 0.0% | 0.0% | — | — | — | — | — | — | — | — | — | — | — | — |
| 2 | Stimulus discrimination | 33 | 24 | 20 | 17 | 27.2% | 15.0% | — | — | — | — | — | — | — | — | — | — | — | — |
| 3 | Discrimination reversal | 33 | 24 | 20 | 17 | 27.2% | 15.0% | 31 | 22 | 38 | 32 | 29.0% | 15.7% | — | — | — | — | — | — |
| 4 | Blocking | 32 | 23 | 28 | 18 | 28.1% | 35.7% | 32 | 24 | 28 | 17 | 25.0% | 39.2% | 23 | 12 | 7 | 3 | 47.8% | 57.1% |
| 5 | Overshadowing | 20 | 20 | 20 | 20 | 0.0% | 0.0% | 26 | 25 | 27 | 22 | 3.8% | 18.5% | — | — | — | — | — | — |
| 6 | Easy–Hard transfer | 35 | 27 | 25 | 19 | 82.5% | 87.5% | 38 | 34 | 27 | 20 | 10.5% | 25.9% | — | — | — | — | — | — |
| 7 | Latent inhibition | 50 | 50 | 50 | 50 | 0.0% | 0.0% | 41 | 31 | 24 | 18 | 24.3% | 25.0% | — | — | — | — | — | — |
| 8 | Sensory preconditioning | 50 | 50 | — | — | 0.0% | — | 37 | 31 | — | — | 16.2% | — | 50 | 50 | — | — | 0.0% | — |
| 9 | Compound preconditioning | 20 | 20 | — | — | 0.0% | — | 34 | 32 | — | — | 5.8% | — | — | — | — | — | — | — |
| 10A | Context sensitivity | 33 | 24 | 20 | 17 | 27.2% | 15.0% | 1 | 1 | 1 | 1 | 0.0% | 0.0% | — | — | — | — | — | — |
| 10B | Context sensitivity of latent inhibition | 50 | 50 | — | — | 0.0% | — | 1 | 1 | — | — | 0.0% | — | — | — | — | — | — | — |
| 11 | Generic feedforward multilayer network | — | — | 50 | 50 | — | 0.0% | — | — | 24 | 21 | — | 12.5% | — | — | — | — | — | — |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Khalid, M.; Wu, J.; M. Ali, T.; Ameen, T.; Moustafa, A.A.; Zhu, Q.; Xiong, R. Cortico-Hippocampal Computational Modeling Using Quantum Neural Networks to Simulate Classical Conditioning Paradigms. Brain Sci. 2020, 10, 431. https://doi.org/10.3390/brainsci10070431
