Movidea: A Software Package for Automatic Video Analysis of Movements in Infants at Risk for Neurodevelopmental Disorders

Abstract

1. Introduction

2. Materials and Methods

2.1. Video Database of the NIDA Network

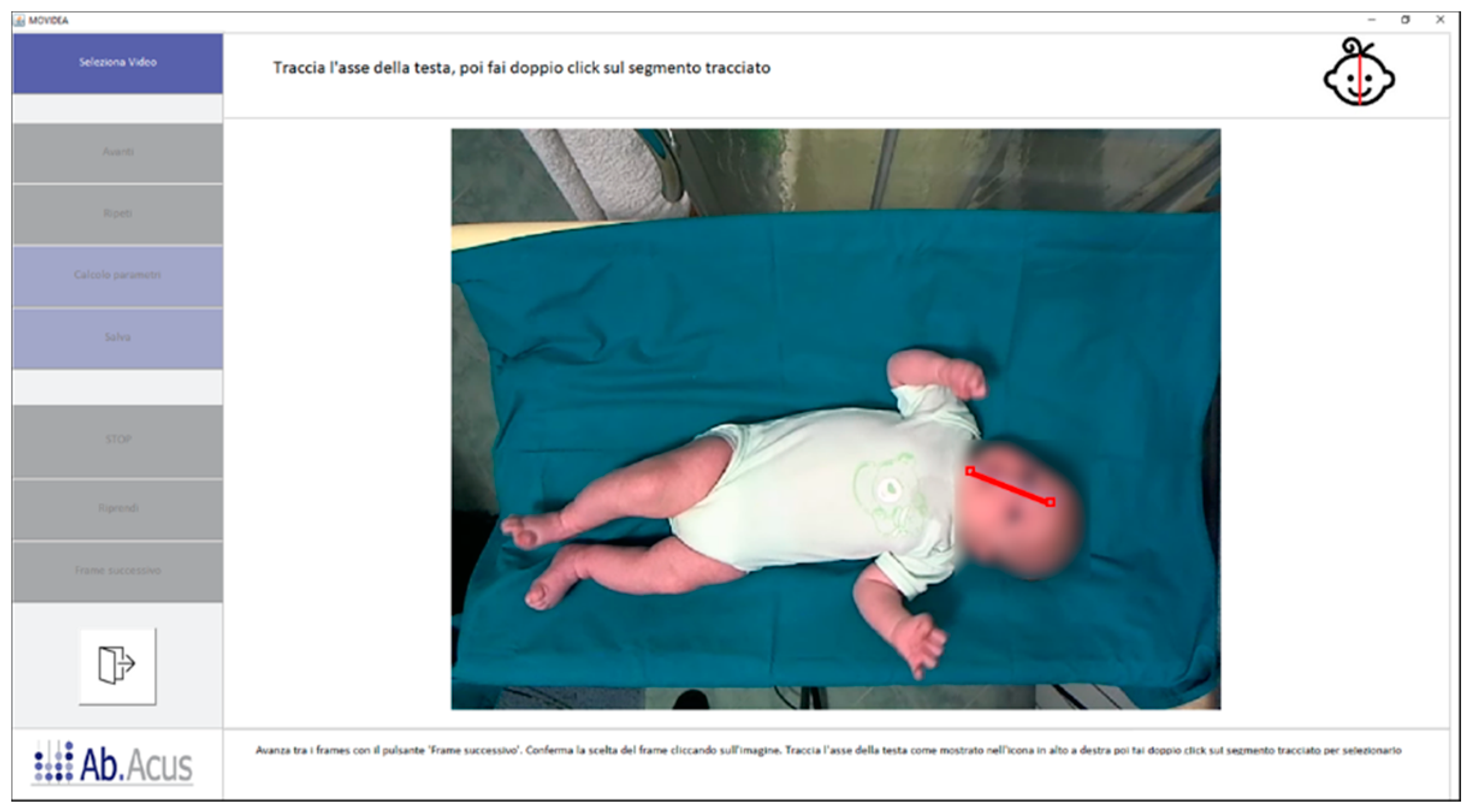

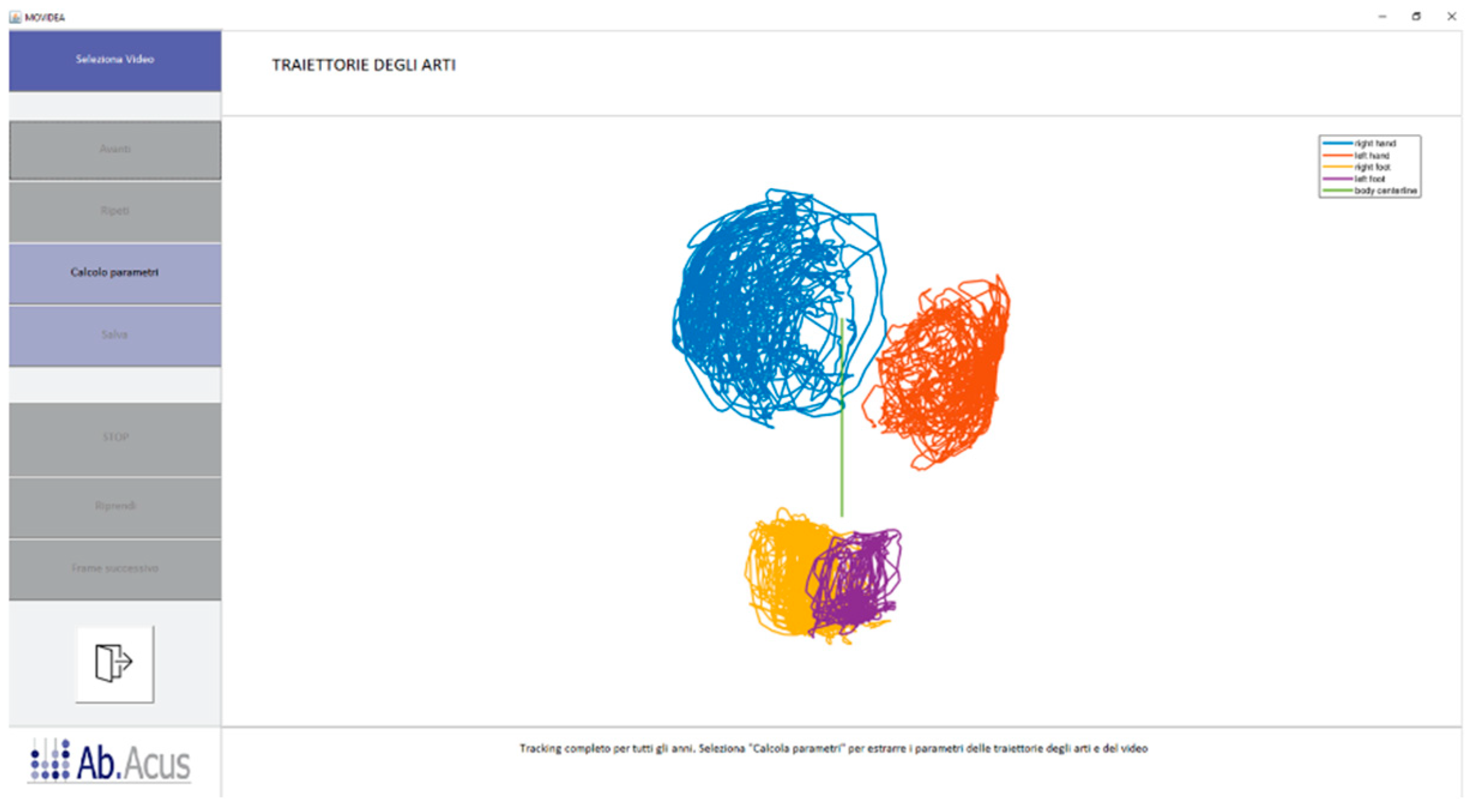

2.2. Movidea Software

2.3. Movement Tracking

2.4. Image Processing

2.5. Software Validation

3. Results

4. Discussion

Author Contributions

Funding

Conflicts of Interest


| Risk | Sex | 10 days (n) | 6 weeks (n) | 12 weeks (n) | 18 weeks (n) | 24 weeks (n) |
|---|---|---|---|---|---|---|
| Low risk | M | 14 | 23 | 22 | 20 | 18 |
| Low risk | F | 8 | 15 | 16 | 9 | 11 |
| High risk | M | 13 | 14 | 16 | 16 | 13 |
| High risk | F | 13 | 14 | 16 | 16 | 13 |

| Subject | Risk | Sex | Age of Recording |
|---|---|---|---|
| 1 | Low risk | F | 12 weeks |
| 1 | Low risk | F | 18 weeks |
| 1 | Low risk | F | 24 weeks |
| 2 | Low risk | M | 12 weeks |
| 2 | Low risk | M | 24 weeks |

| Limb | Axis | Correlation Coefficient |
|---|---|---|
| Right Hand | x | 0.991 ± 0.004 |
| Right Hand | y | 0.990 ± 0.005 |
| Left Hand | x | 0.992 ± 0.003 |
| Left Hand | y | 0.980 ± 0.035 |
| Right Foot | x | 0.989 ± 0.005 |
| Right Foot | y | 0.966 ± 0.037 |
| Left Foot | x | 0.973 ± 0.028 |
| Left Foot | y | 0.964 ± 0.034 |
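The table above reports one correlation coefficient per end-effector and image axis. The reference against which the Movidea trajectories were correlated is not described in this text; the sketch below assumes Pearson's r between the tracked trajectory and a reference trajectory (for example, manually annotated positions) of the same end-effector, sampled on the same frames. The function names `pearson_r` and `per_axis_correlation` and the sample coordinates are hypothetical illustrations, not part of Movidea.

```python
from math import sqrt
from typing import Dict, Sequence, Tuple


def pearson_r(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation between two equally long coordinate series."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("series must have equal length >= 2")
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    # Covariance and variances share the 1/(n-1) factor, so it cancels out.
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_a * var_b)


def per_axis_correlation(
    tracked: Sequence[Tuple[float, float]],
    reference: Sequence[Tuple[float, float]],
) -> Dict[str, float]:
    """Correlate x and y separately; each trajectory is one (x, y) pair per frame."""
    tx, ty = zip(*tracked)
    rx, ry = zip(*reference)
    return {"x": pearson_r(tx, rx), "y": pearson_r(ty, ry)}


if __name__ == "__main__":
    # Hypothetical pixel coordinates of one hand over five frames.
    tracked = [(100.0, 50.0), (102.0, 53.0), (105.0, 55.0), (107.0, 54.0), (110.0, 52.0)]
    reference = [(101.0, 50.0), (102.0, 52.0), (104.0, 56.0), (108.0, 54.0), (109.0, 53.0)]
    print(per_axis_correlation(tracked, reference))
```

Averaging such per-recording values with `statistics.mean` and `statistics.stdev` would yield an entry of the mean ± SD form shown in the table, assuming that is what the ± denotes.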

| Feature | ICC |
|---|---|
| Mean velocity | 0.98 |
| Mean acceleration | 0.99 |
| Area from moving average | 0.97 |
| Cross-correlation coefficient | 0.96 |
| Intersections mean distance | 0.87 |
| Total number of intersections | 0.94 |
| Periodicity | 0.97 |
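The table above gives one intraclass correlation coefficient (ICC) per feature. The ICC form is not stated in this text; the sketch below assumes the two-way, absolute-agreement, single-measure form, ICC(A,1) in McGraw and Wong's notation, computed from an n × k matrix where row i is a recording and column j is a repeated measurement (for example, two analysis runs). The function name `icc_a1` and this data layout are illustrative assumptions.

```python
from typing import Sequence


def icc_a1(data: Sequence[Sequence[float]]) -> float:
    """ICC(A,1): two-way model, absolute agreement, single measure.

    data[i][j] is the feature value of recording i in measurement j.
    """
    n = len(data)
    k = len(data[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in data):
        raise ValueError("need a rectangular matrix with >= 2 rows and >= 2 columns")

    grand = sum(sum(row) for row in data) / (n * k)
    row_means = [sum(row) / k for row in data]
    col_means = [sum(data[i][j] for i in range(n)) / n for j in range(k)]

    # Two-way ANOVA sums of squares (one observation per cell).
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    # Absolute agreement: systematic offsets between columns lower the ICC.
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + (k / n) * (ms_cols - ms_error)
    )
```

Under a consistency definition, ICC(C,1), the column term `(k / n) * (ms_cols - ms_error)` would be dropped from the denominator; which form is appropriate depends on whether a constant offset between measurements should count as disagreement.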

| Feature | Name | z Contribution (%) |
|---|---|---|
| Amarh | Area from moving average right hand | 36.7 ± 3.4 |
| Amalh | Area from moving average left hand | 41.6 ± 5.5 |
| Amarf | Area from moving average right foot | 37.9 ± 4.4 |
| Amalf | Area from moving average left foot | 35.7 ± 1.4 |
| | Intersections mean distance right hand | 16.8 ± 6.9 |
| | Intersections mean distance left hand | 11.3 ± 1.1 |
| | Intersections mean distance right foot | 16.5 ± 6.2 |
| | Intersections mean distance left foot | 18.0 ± 4.3 |
| | Total number of intersections right hand | 44.0 ± 10.0 |
| | Total number of intersections left hand | 53.9 ± 2.5 |
| | Total number of intersections right foot | 45.9 ± 10.8 |
| | Total number of intersections left foot | 43.4 ± 8.1 |
| | Periodicity right hand | 46.2 ± 10.1 |
| | Periodicity left hand | 52.4 ± 1.7 |
| | Periodicity right foot | 49.1 ± 11.8 |
| | Periodicity left foot | 47.2 ± 12.7 |

| End-Effector | Failure (%) |
|---|---|
| Right hand | 9.7 ± 6.7 |
| Left hand | 10.3 ± 6.7 |
| Right foot | 15.2 ± 9.3 |
| Left foot | 14.5 ± 9.2 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Baccinelli, W.; Bulgheroni, M.; Simonetti, V.; Fulceri, F.; Caruso, A.; Gila, L.; Scattoni, M.L. Movidea: A Software Package for Automatic Video Analysis of Movements in Infants at Risk for Neurodevelopmental Disorders. Brain Sci. 2020, 10, 203. https://doi.org/10.3390/brainsci10040203
