Advances in Multimodal Emotion Recognition Based on Brain–Computer Interfaces

Abstract

1. Introduction

- (1) We update and extend the definition of multimodal aBCI and divide it into three main types: aBCI based on a combination of behavior and brain signals, aBCI based on various hybrid neurophysiology modalities and aBCI based on heterogeneous sensory stimuli;

- (2) For each type of aBCI, we review several representative multimodal aBCI systems and analyze their main components, including design principles, stimulus paradigms, fusion methods, experimental results and relative advantages. We find that emotion-recognition models based on multimodal BCI achieve better performance than models based on single-modality BCI;

- (3) Finally, we identify the current challenges in academic research on, and engineering applications of, emotion recognition and propose some potential solutions.

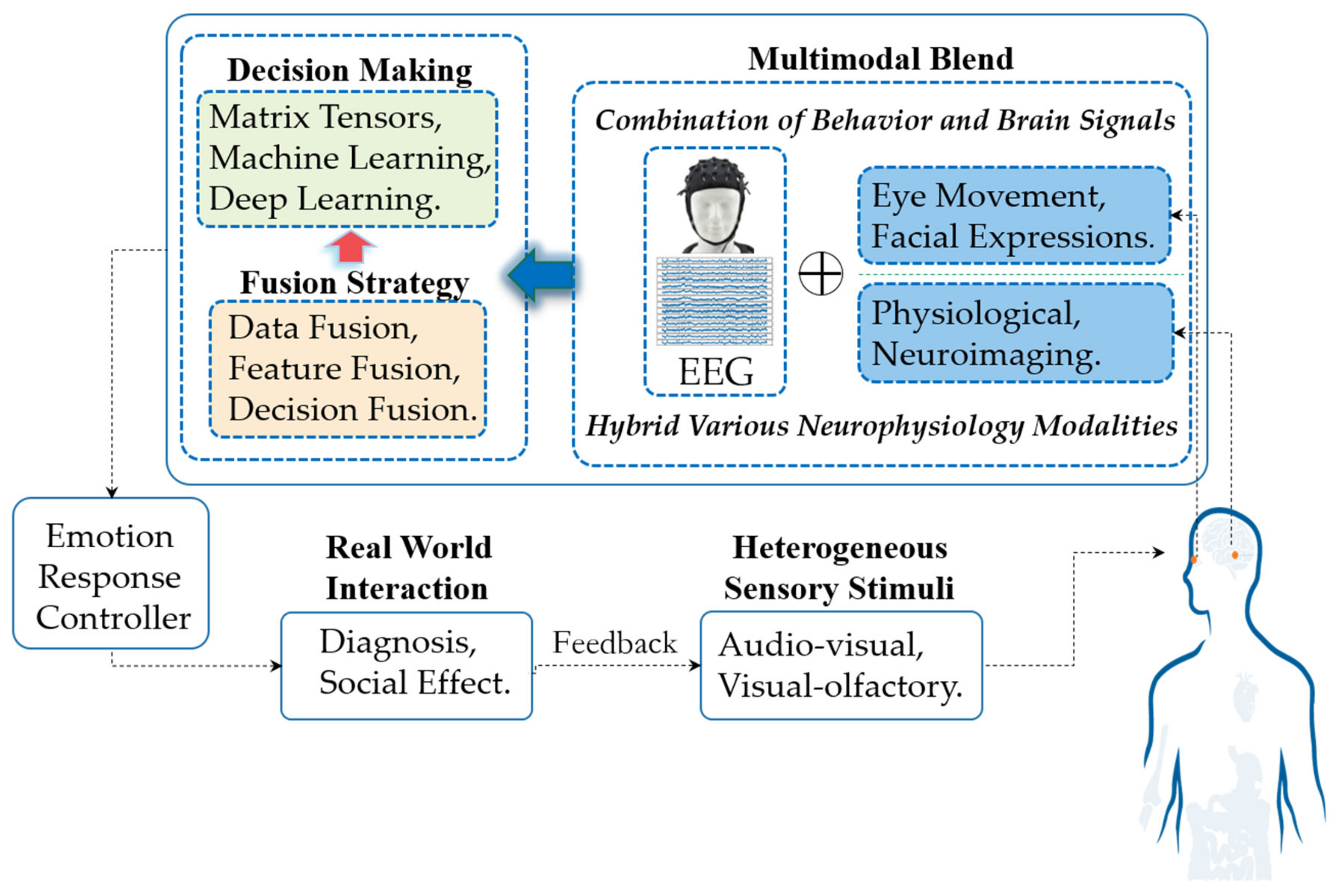

2. Overview of Multimodal Emotion Recognition Based on BCI

2.1. Multimodal Affective BCI

- (1) In the signal acquisition and input stage, the system's acquisition devices capture data from several modalities. These may be brain signals combined with behavioral modalities (such as facial expressions and eye movements) or several hybrid neurophysiological modalities (such as functional near-infrared spectroscopy (fNIRS) and fMRI), elicited by heterogeneous sensory stimuli (such as audio-visual stimulation), by interaction with other people or by specific events. Accordingly, multimodal aBCI can be divided at this stage into three main categories: aBCI based on a combination of behavior and brain signals, aBCI based on various hybrid neurophysiology modalities and aBCI based on heterogeneous sensory stimuli. The principles of each type are summarized, and several representative aBCI systems are highlighted, in Section 3, Section 4 and Section 5, respectively.

- (2) In the signal processing stage, obtaining clean EEG signals that carry emotion-related information is critical. For neurophysiological signals, preprocessing denoises the raw recordings and removes artifacts. For image signals such as facial expressions, preprocessing eliminates emotion-irrelevant information from the images and restores and enhances the information that represents the emotional state. In the modality fusion stage, different fusion strategies can be used, including data fusion, feature fusion and decision fusion. In the decision-making stage, the classifier that outputs the final emotional state can be based on spatial tensors [16,17], machine learning [18,19] or deep learning [20,21].

- (3) The multimodal aBCI system outputs the recognized emotional state. If the task involves only emotion recognition, no emotional feedback control is required; the result is fed back through a relevant interface (for example, for diagnosis). However, if the task acts on the user's environment (such as wheelchair control), the user's state information must be included directly in a closed loop so that the system can automatically adapt the behavior of the interface with which the user is interacting. Many aBCI studies focus on investigating and recognizing users' emotional states, and their application domains are varied, including medicine, education, driverless vehicles and entertainment.
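Two of the fusion strategies named in stage (2), feature fusion and decision fusion, can be illustrated with a minimal sketch. It assumes that per-trial feature matrices have already been extracted for two modalities (EEG and eye movement are used as hypothetical examples) and that each single-modality classifier outputs class probabilities. The function names, feature sizes and fusion weights are illustrative assumptions, not taken from any system reviewed here. Data fusion, which merges raw signals before feature extraction, is not shown.

```python
import numpy as np

def zscore(x: np.ndarray) -> np.ndarray:
    """Standardize each feature column to zero mean and unit variance."""
    std = x.std(axis=0)
    std[std == 0] = 1.0  # leave constant features at zero instead of dividing by 0
    return (x - x.mean(axis=0)) / std

def feature_level_fusion(eeg_feats: np.ndarray, eye_feats: np.ndarray) -> np.ndarray:
    """Feature fusion: concatenate standardized per-modality feature vectors,
    so that a single classifier sees both modalities for each trial."""
    return np.hstack([zscore(eeg_feats), zscore(eye_feats)])

def decision_level_fusion(prob_list, weights) -> np.ndarray:
    """Decision fusion: weighted average of per-modality class probabilities
    (one (n_trials, n_classes) array per modality), then argmax per trial."""
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()  # normalize so the weights sum to 1
    fused = sum(w * p for w, p in zip(weights, prob_list))
    return fused.argmax(axis=1)

# Usage with hypothetical data: 4 trials, 310 EEG features, 33 eye-movement features.
rng = np.random.default_rng(0)
eeg = rng.normal(size=(4, 310))
eye = rng.normal(size=(4, 33))
print(feature_level_fusion(eeg, eye).shape)  # (4, 343)

# Hypothetical 3-class probabilities from an EEG classifier and an eye classifier.
p_eeg = np.array([[0.7, 0.2, 0.1], [0.2, 0.5, 0.3]])
p_eye = np.array([[0.4, 0.4, 0.2], [0.1, 0.2, 0.7]])
print(decision_level_fusion([p_eeg, p_eye], weights=[0.6, 0.4]))  # [0 2]
```

Feature fusion lets one classifier exploit correlations between modalities but enlarges the feature space, whereas decision fusion keeps the per-modality classifiers independent, which makes it easier to down-weight a noisy modality. In a real evaluation, the standardization statistics would be estimated on training trials only.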

2.2. The Foundations of Multimodal Emotion Recognition

2.2.1. Multimodal Fusion Method

2.2.2. Open Multimodal Databases and Representative Research

3. Combination of Behavior and Brain Signals

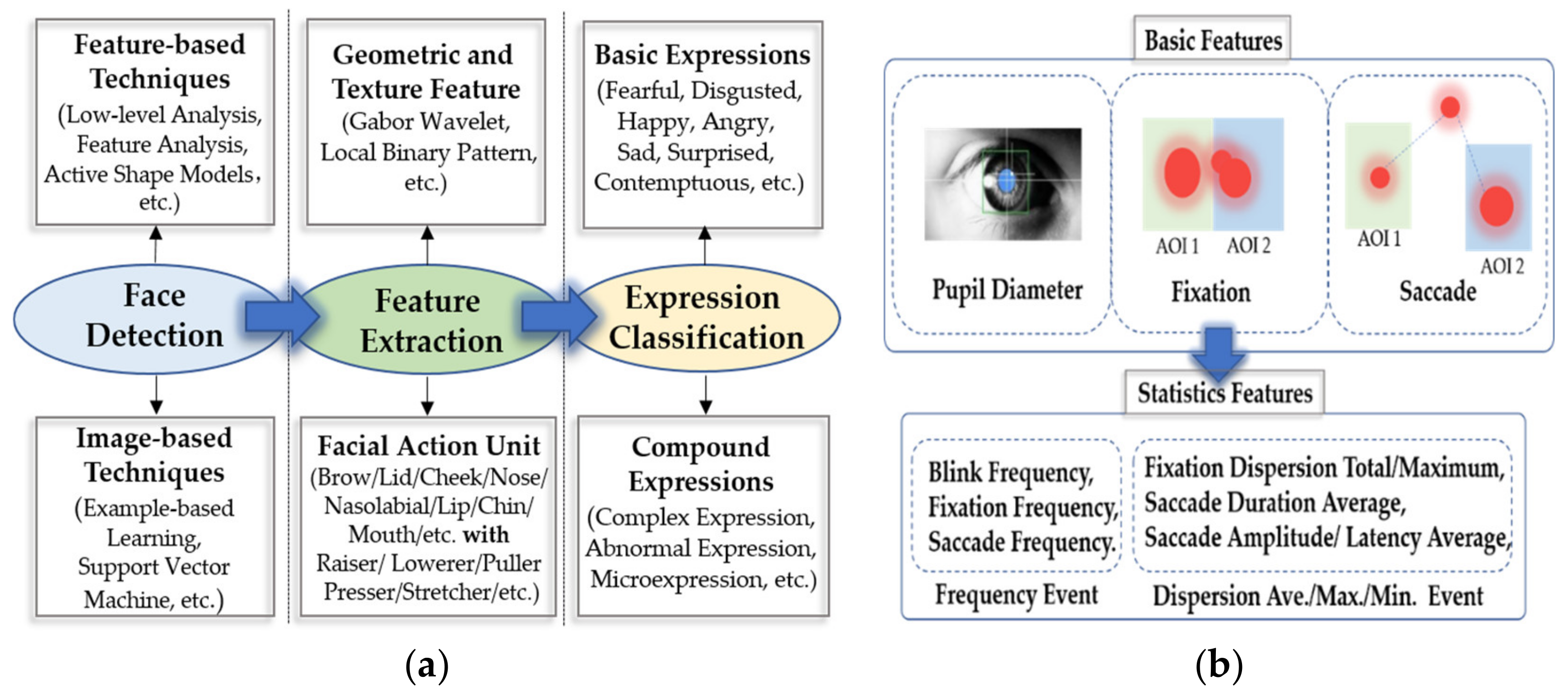

3.1. EEG and Eye Movement

3.2. EEG and Facial Expressions

4. Various Hybrid Neurophysiology Modalities

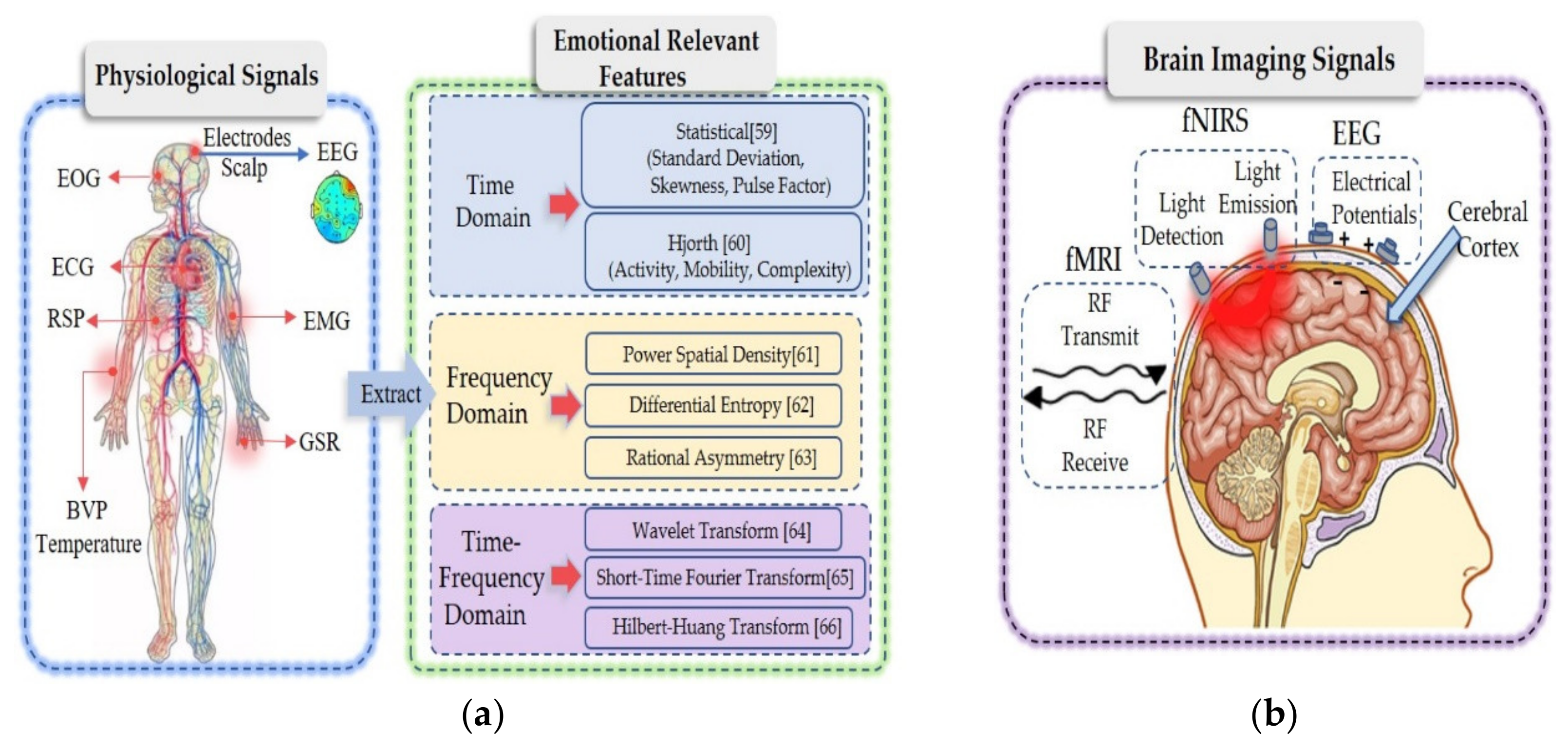

4.1. EEG and Peripheral Physiology

4.2. EEG and Other Neuroimaging Modalities

5. Heterogeneous Sensory Stimuli

5.1. Audio-Visual Emotion Recognition

5.2. Visual-Olfactory Emotion Recognition

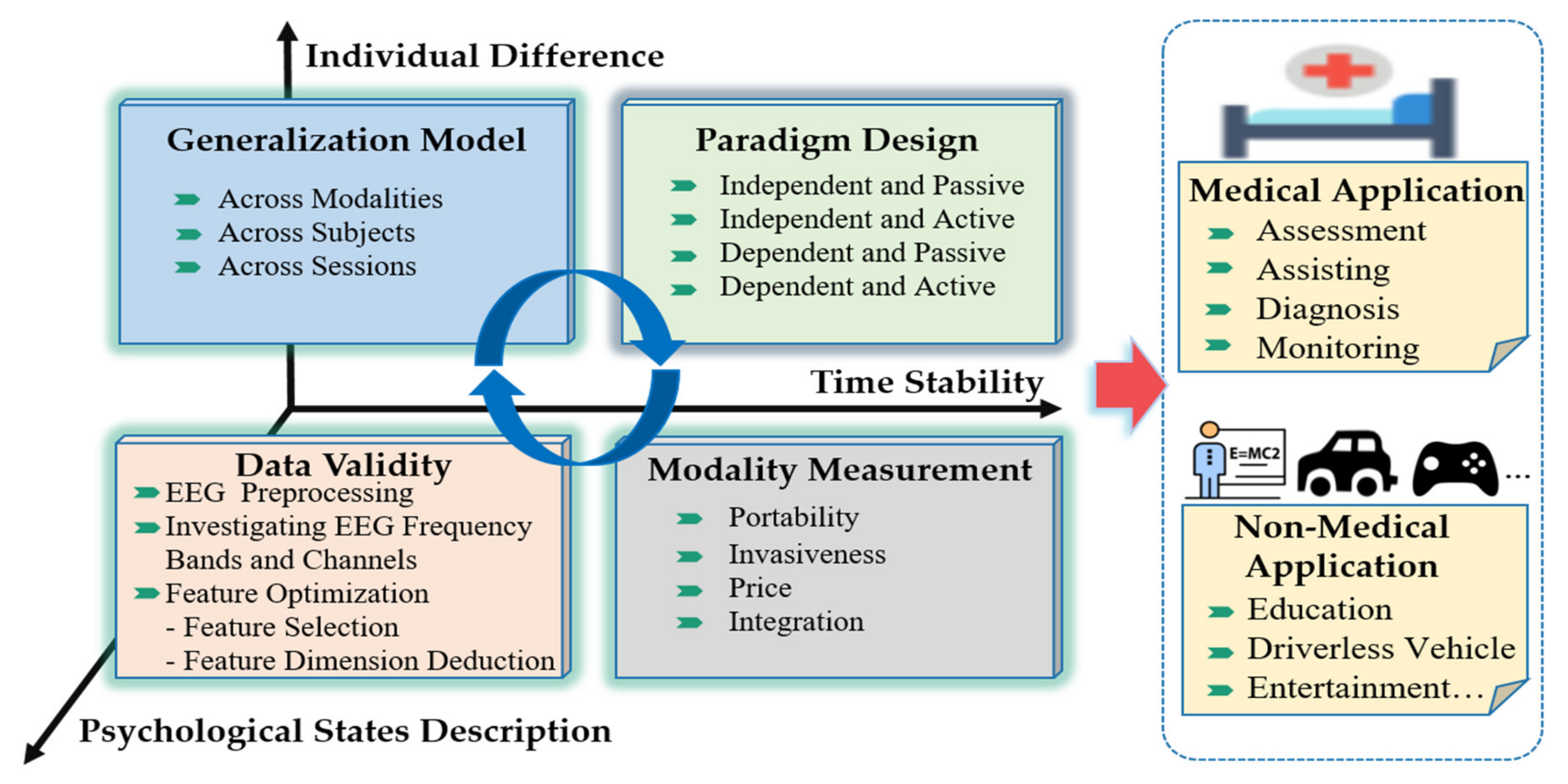

6. Open Challenges and Opportunities

6.1. Paradigm Design

6.1.1. Stimulus-Independent and Passive Paradigms

6.1.2. Stimulus-Independent and Active Paradigms

6.1.3. Stimulus-Dependent and Passive Paradigms

6.1.4. Stimulus-Dependent and Active Paradigms

6.2. Modality Measurement

6.3. Data Validity

6.3.1. EEG Noise Reduction and Artifact Removal

6.3.2. EEG Bands and Channels Selecting

6.3.3. Feature Optimization

- Feature selection. Selecting features that strongly represent the emotional state is very important in emotion recognition tasks. Many algorithms can reduce dimensionality by removing redundant or irrelevant features, such as particle swarm optimization (PSO) [122] and the genetic algorithm (GA) [123].

- Feature dimension reduction. Feature dimension reduction is essentially a mapping function that maps high-dimensional data to a low-dimensional space, creating new features through linear or nonlinear transformations of the original feature values. Dimensionality reduction methods are divided into linear and nonlinear methods. Examples of linear methods include principal component analysis (PCA) [124] and independent component analysis (ICA) [125]. Nonlinear methods are further divided into kernel-based methods, such as kernel-PCA [126], and eigenvalue-based methods.
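As a concrete instance of the linear mapping described above, the sketch below implements PCA with NumPy's singular value decomposition. It assumes a trials × features matrix (for example, band-power features per EEG channel); the function name `pca_fit_transform` and the data sizes are hypothetical, and in practice a library implementation such as scikit-learn's `PCA` would normally be used.

```python
import numpy as np

def pca_fit_transform(x: np.ndarray, n_components: int):
    """Linear dimensionality reduction by PCA.

    x: (n_samples, n_features) feature matrix.
    Returns the projected data (n_samples, n_components) and the fraction
    of total variance explained by each kept component.
    """
    x_centered = x - x.mean(axis=0)  # PCA operates on mean-centered data
    # Rows of vt are the principal axes, ordered by decreasing singular value.
    _, s, vt = np.linalg.svd(x_centered, full_matrices=False)
    components = vt[:n_components]
    projected = x_centered @ components.T  # linear map to the low-dim space
    explained = (s ** 2) / np.sum(s ** 2)  # variance share of every axis
    return projected, explained[:n_components]

# Usage with hypothetical data: 200 trials x 310 features reduced to 10.
rng = np.random.default_rng(0)
features = rng.normal(size=(200, 310))
reduced, ratio = pca_fit_transform(features, n_components=10)
print(reduced.shape)  # (200, 10)
print(ratio.sum())    # share of total variance kept by the 10 components
```

To avoid information leakage between training and test data, the mean and principal axes should be estimated on training trials only and then applied unchanged to test trials; the sketch fits and transforms the same matrix for brevity.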

6.4. Generalization of Model

- (1) Train a general classifier. However, traditional machine-learning algorithms assume that the training data and the test data are independent and identically distributed (i.i.d.). Therefore, when a general classifier encounters data from a new domain, its performance often degrades sharply.

- (2) Look for effective and stable emotion-related feature patterns. The authors of [69] found that the lateral temporal areas activate more for positive emotions than for negative emotions in the beta and gamma bands, that the neural patterns of neutral emotions have higher alpha responses at parietal and occipital sites and that negative emotional patterns have significantly higher delta responses at parietal and occipital sites and higher gamma responses at prefrontal sites. Their experimental results also indicate that the EEG patterns that remain stable across sessions are consistent among repeated EEG measurements of the same participant. However, more stable patterns remain to be explored.

- (3) For the problem of differences in data distribution, a well-established approach is transfer learning, which reduces the differences between domains as much as possible. Assume that $\mathcal{D}_s = \{X_s, Y_s\}$ and $\mathcal{D}_t = \{X_t, Y_t\}$ represent the source domain and the target domain, respectively, where $X$ is the sample data and $Y$ the label. To overcome the three challenges below (subject–subject, session–session and modality–modality), we need to find a feature transformation function $f$ such that the marginal probability distributions and the conditional probability distributions satisfy $P(f(X_s)) \approx P(f(X_t))$ and $P(Y_s \mid f(X_s)) \approx P(Y_t \mid f(X_t))$, respectively.

- For the subject–subject problem, data from other subjects form the source domain, and the new subject is the target domain. Because EEG differs greatly among subjects, a model is traditionally trained for each subject, but this subject-dependent practice defeats the purpose of a general model and cannot meet the model generalization requirements. In [127], the authors proposed a method for personalizing EEG-based affective models with transfer-learning techniques: the affective model is constructed for a new target subject without any labeled training data from that subject. Their experimental results showed that their transductive parameter transfer approach significantly outperformed other approaches in terms of accuracy. Transductive parameter transfer [128] captures the similarity between data distributions by means of kernel functions and learns a mapping from data distributions to classifier parameters within a regression framework.

- For the session–session problem, analogously to the cross-subject problem, the previous session is the source domain, and the new session is the target domain. A domain adaptation method for EEG emotion recognition proposed in [129] performed well in both cross-session and cross-subject adaptation. It integrates task-invariant and task-specific features in a unified framework and requires no labeled information in the target domain to achieve joint distribution adaptation (JDA). The authors compared it with a series of conventional and recent transfer-learning algorithms, and it significantly outperformed them in terms of accuracy. Their visualization analysis offers insights into how JDA influences the learned representations.

- For the modality–modality problem, data with complete modalities form the source domain, and data with missing modalities form the target domain. The goal is to transfer knowledge between the signals of different modalities. The authors of [127] proposed a semisupervised multiview deep generative framework for multimodal emotion recognition with incomplete data. In this framework, each modality of the emotional data is treated as one view, and the importance of each modality is inferred automatically by learning a nonuniformly weighted Gaussian mixture posterior approximation for the shared latent variable. The scarcity of labeled data is addressed by casting semisupervised classification as a specialized missing-data imputation task, and the incomplete-data problem is circumvented by treating the missing views as latent variables and integrating them out. Experiments on the multi-physiological-signal dataset DEAP and the EEG/eye-movement dataset SEED-IV confirmed the superiority of this framework.
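The distribution-matching conditions in item (3) can be made measurable. The following is a minimal sketch that assumes linear-kernel maximum mean discrepancy (MMD) as the distance between distributions, which is the kind of quantity JDA-style methods minimize: the marginal term compares the feature means of the source and target domains, and the conditional term compares class-wise means, using pseudo-labels because the target domain is unlabeled. As in JDA, the class-conditional distributions $P(X \mid Y)$ serve as a tractable proxy for $P(Y \mid X)$. The nearest-centroid pseudo-labeler and the per-domain mean-centering in the usage example are hypothetical stand-ins chosen for illustration; they are not the methods of [127]–[129].

```python
import numpy as np

def linear_mmd2(a: np.ndarray, b: np.ndarray) -> float:
    """Squared linear-kernel MMD: squared distance between the empirical means
    of two sample sets (rows are samples, columns are features)."""
    diff = a.mean(axis=0) - b.mean(axis=0)
    return float(diff @ diff)

def nearest_centroid_pseudo_labels(xs, ys, xt):
    """Hypothetical pseudo-labeler: give each target sample the label of the
    closest source class centroid (target labels are assumed unknown)."""
    classes = np.unique(ys)
    centroids = np.stack([xs[ys == c].mean(axis=0) for c in classes])
    dists = ((xt[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[dists.argmin(axis=1)]

def joint_discrepancy(xs, ys, xt, yt_pseudo) -> float:
    """Marginal MMD term plus the sum of class-conditional MMD terms."""
    total = linear_mmd2(xs, xt)  # marginal: P(f(Xs)) vs P(f(Xt))
    for c in np.unique(ys):
        xt_c = xt[yt_pseudo == c]
        if len(xt_c) == 0:  # class absent among the target pseudo-labels
            continue
        total += linear_mmd2(xs[ys == c], xt_c)  # conditional: P(Xs|c) vs P(Xt|c)
    return total

# Usage with hypothetical two-class features: the "new subject" is the
# source data shifted by +2 in every feature.
rng = np.random.default_rng(0)
xs = np.vstack([rng.normal(0.0, 1.0, (50, 4)), rng.normal(3.0, 1.0, (50, 4))])
ys = np.repeat([0, 1], 50)
xt = xs + 2.0
print(joint_discrepancy(xs, ys, xt, nearest_centroid_pseudo_labels(xs, ys, xt)))

# Crude alignment: center each domain on its own mean.
xs_c, xt_c = xs - xs.mean(axis=0), xt - xt.mean(axis=0)
print(linear_mmd2(xs_c, xt_c))  # marginal term is now close to zero
```

Per-domain centering only matches first moments of the marginal distributions; methods such as JDA instead learn a projection that reduces the marginal and conditional terms together and refine the pseudo-labels iteratively.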

6.5. Application

6.5.1. Medical Applications

6.5.2. Non-Medical Applications

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Wolpaw, J.R.; Birbaumer, N.; McFarland, D.J.; Pfurtscheller, G.; Vaughan, T.M. Brain–computer interfaces for communication and control. Clin. Neurophysiol. 2002, 113, 767–791.

- Mühl, C.; Nijholt, A.; Allison, B.; Dunne, S.; Heylen, D. Affective brain-computer interfaces (aBCI 2011). In Proceedings of the International Conference on Affective Computing and Intelligent Interaction, Memphis, TN, USA, 9–12 October 2011; p. 435.

- Mühl, C.; Allison, B.; Nijholt, A.; Chanel, G. A survey of affective brain computer interfaces: Principles, state-of-the-art, and challenges. Brain-Comput. Interfaces 2014, 1, 66–84.

- Van den Broek, E.L. Affective computing: A reverence for a century of research. In Cognitive Behavioural Systems; Springer: Berlin/Heidelberg, Germany, 2012; pp. 434–448.

- Ekman, P.E.; Davidson, R.J. The Nature of Emotion: Fundamental Questions; Oxford University Press: Oxford, UK, 1994.

- Russell, J.A. A circumplex model of affect. J. Personal. Soc. Psychol. 1980, 39, 1161.

- Niemic, C. Studies of Emotion: A Theoretical and Empirical Review of Psychophysiological Studies of Emotion. Psychophy 2004, 1, 15–18.

- Chanel, G.; Kierkels, J.J.; Soleymani, M.; Pun, T. Short-term emotion assessment in a recall paradigm. Int. J. Hum.-Comput. Stud. 2009, 67, 607–627.

- Li, Y.; Pan, J.; Long, J.; Yu, T.; Wang, F.; Yu, Z.; Wu, W. Multimodal BCIs: Target Detection, Multidimensional Control, and Awareness Evaluation in Patients With Disorder of Consciousness. Proc. IEEE 2016, 104, 332–352.

- Huang, H.; Xie, Q.; Pan, J.; He, Y.; Wen, Z.; Yu, R.; Li, Y. An EEG-based brain computer interface for emotion recognition and its application in patients with Disorder of Consciousness. IEEE Trans. Affect. Comput. 2019.

- Wang, F.; He, Y.; Qu, J.; Xie, Q.; Lin, Q.; Ni, X.; Chen, Y.; Pan, J.; Laureys, S.; Yu, R. Enhancing clinical communication assessments using an audiovisual BCI for patients with disorders of consciousness. J. Neural Eng. 2017, 14, 046024.

- D’Mello, S.K.; Kory, J. A review and meta-analysis of multimodal affect detection systems. ACM Comput. Surv. (CSUR) 2015, 47, 1–36.

- Poria, S.; Cambria, E.; Bajpai, R.; Hussain, A. A review of affective computing: From unimodal analysis to multimodal fusion. Inf. Fusion 2017, 37, 98–125.

- Ebrahimi, T.; Vesin, J.; Garcia, G. Guest editorial brain–computer interface technology: A review of the second international meeting. IEEE Signal Process. Mag. 2003, 20, 14–24.

- Calvo, R.A.; D’Mello, S. Affect detection: An interdisciplinary review of models, methods, and their applications. IEEE Trans. Affect. Comput. 2010, 1, 18–37.

- Phan, A.H.; Cichocki, A. Tensor decompositions for feature extraction and classification of high dimensional datasets. Nonlinear Theory Its Appl. IEICE 2010, 1, 37–68.

- Congedo, M.; Barachant, A.; Bhatia, R. Riemannian geometry for EEG-based brain-computer interfaces; a primer and a review. Brain-Comput. Interfaces 2017, 4, 155–174.

- Zheng, W.-L.; Dong, B.-N.; Lu, B.-L. Multimodal emotion recognition using EEG and eye tracking data. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; pp. 5040–5043.

- Soleymani, M.; Pantic, M.; Pun, T. Multimodal emotion recognition in response to videos. IEEE Trans. Affect. Comput. 2011, 3, 211–223.

- Liu, W.; Zheng, W.-L.; Lu, B.-L. Emotion recognition using multimodal deep learning. In Proceedings of the International Conference on Neural Information Processing, Kyoto, Japan, 16–21 October 2016; pp. 521–529.

- Yin, Z.; Zhao, M.; Wang, Y.; Yang, J.; Zhang, J. Recognition of emotions using multimodal physiological signals and an ensemble deep learning model. Comput. Methods Programs Biomed. 2017, 140, 93–110.

- Huang, Y.; Yang, J.; Liu, S.; Pan, J. Combining Facial Expressions and Electroencephalography to Enhance Emotion Recognition. Future Internet 2019, 11, 105.

- Minotto, V.P.; Jung, C.R.; Lee, B. Multimodal multi-channel on-line speaker diarization using sensor fusion through SVM. IEEE Trans. Multimed. 2015, 17, 1694–1705.

- Poria, S.; Chaturvedi, I.; Cambria, E.; Hussain, A. Convolutional MKL based multimodal emotion recognition and sentiment analysis. In Proceedings of the 2016 IEEE 16th International Conference on Data Mining (ICDM), Barcelona, Spain, 12–15 December 2016; pp. 439–448.

- Haghighat, M.; Abdel-Mottaleb, M.; Alhalabi, W. Discriminant correlation analysis: Real-time feature level fusion for multimodal biometric recognition. IEEE Trans. Inf. Forensics Secur. 2016, 11, 1984–1996.

- Zhalehpour, S.; Onder, O.; Akhtar, Z.; Erdem, C.E. BAUM-1: A spontaneous audio-visual face database of affective and mental states. IEEE Trans. Affect. Comput. 2016, 8, 300–313.

- Wu, P.; Liu, H.; Li, X.; Fan, T.; Zhang, X. A novel lip descriptor for audio-visual keyword spotting based on adaptive decision fusion. IEEE Trans. Multimed. 2016, 18, 326–338.

- Gunes, H.; Piccardi, M. Bi-modal emotion recognition from expressive face and body gestures. J. Netw. Comput. Appl. 2007, 30, 1334–1345.

- Koelstra, S.; Patras, I. Fusion of facial expressions and EEG for implicit affective tagging. Image Vis. Comput. 2013, 31, 164–174.

- Soleymani, M.; Asghari-Esfeden, S.; Pantic, M.; Fu, Y. Continuous emotion detection using EEG signals and facial expressions. In Proceedings of the 2014 IEEE International Conference on Multimedia and Expo (ICME), Chengdu, China, 14–18 July 2014; pp. 1–6.

- Freund, Y.; Schapire, R.E. Experiments with a new boosting algorithm. In Proceedings of the ICML, Bari, Italy, 3–6 July 1996; pp. 148–156.

- Ponti, M.P., Jr. Combining classifiers: From the creation of ensembles to the decision fusion. In Proceedings of the 2011 24th SIBGRAPI Conference on Graphics, Patterns, and Images Tutorials, Alagoas, Brazil, 28–30 August 2011; pp. 1–10.

- Chang, Z.; Liao, X.; Liu, Y.; Wang, W. Research of decision fusion for multi-source remote-sensing satellite information based on SVMs and DS evidence theory. In Proceedings of the Fourth International Workshop on Advanced Computational Intelligence, Wuhan, China, 19–21 October 2011; pp. 416–420.

- Nefian, A.V.; Liang, L.; Pi, X.; Liu, X.; Murphy, K. Dynamic Bayesian networks for audio-visual speech recognition. EURASIP J. Adv. Signal Process. 2002, 2002, 783042.

- Murofushi, T.; Sugeno, M. An interpretation of fuzzy measures and the Choquet integral as an integral with respect to a fuzzy measure. Fuzzy Sets Syst. 1989, 29, 201–227.

- Koelstra, S.; Mühl, C.; Soleymani, M.; Lee, J.-S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A database for emotion analysis; using physiological signals. IEEE Trans. Affect. Comput. 2011, 3, 18–31.

- Zheng, W.-L.; Liu, W.; Lu, Y.; Lu, B.-L.; Cichocki, A. EmotionMeter: A multimodal framework for recognizing human emotions. IEEE Trans. Cybern. 2018, 49, 1110–1122.

- Savran, A.; Ciftci, K.; Chanel, G.; Mota, J.; Hong Viet, L.; Sankur, B.; Akarun, L.; Caplier, A.; Rombaut, M. Emotion detection in the loop from brain signals and facial images. In Proceedings of the eNTERFACE 2006 Workshop, Dubrovnik, Croatia, 17 July–11 August 2006.

- Soleymani, M.; Lichtenauer, J.; Pun, T.; Pantic, M. A Multimodal Database for Affect Recognition and Implicit Tagging. IEEE Trans. Affect. Comput. 2012, 3, 42–55.

- Correa, J.A.M.; Abadi, M.K.; Sebe, N.; Patras, I. AMIGOS: A dataset for affect, personality and mood research on individuals and groups. IEEE Trans. Affect. Comput. 2018.

- Conneau, A.-C.; Hajlaoui, A.; Chetouani, M.; Essid, S. EMOEEG: A new multimodal dataset for dynamic EEG-based emotion recognition with audiovisual elicitation. In Proceedings of the 2017 25th European Signal Processing Conference (EUSIPCO), Kos, Greece, 28 August–2 September 2017; pp. 738–742.

- Subramanian, R.; Wache, J.; Abadi, M.K.; Vieriu, R.L.; Winkler, S.; Sebe, N. ASCERTAIN: Emotion and personality recognition using commercial sensors. IEEE Trans. Affect. Comput. 2016, 9, 147–160.

- Song, T.; Zheng, W.; Lu, C.; Zong, Y.; Zhang, X.; Cui, Z. MPED: A multi-modal physiological emotion database for discrete emotion recognition. IEEE Access 2019, 7, 12177–12191.

- Liu, W.; Zheng, W.-L.; Lu, B.-L. Multimodal emotion recognition using multimodal deep learning. arXiv 2016, arXiv:1602.08225.

- Ma, J.; Tang, H.; Zheng, W.-L.; Lu, B.-L. Emotion recognition using multimodal residual LSTM network. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 176–183.

- Tang, H.; Liu, W.; Zheng, W.-L.; Lu, B.-L. Multimodal emotion recognition using deep neural networks. In Proceedings of the International Conference on Neural Information Processing, Guangzhou, China, 14–18 November 2017; pp. 811–819.

- Bulling, A.; Ward, J.A.; Gellersen, H.; Troster, G. Eye movement analysis for activity recognition using electrooculography. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 741–753.

- Ekman, P. An argument for basic emotions. Cogn. Emot. 1992, 6, 169–200.

- Bakshi, U.; Singhal, R. A survey on face detection methods and feature extraction techniques of face recognition. Int. J. Emerg. Trends Technol. Comput. Sci. 2014, 3, 233–237.

- Võ, M.L.H.; Jacobs, A.M.; Kuchinke, L.; Hofmann, M.; Conrad, M.; Schacht, A.; Hutzler, F. The coupling of emotion and cognition in the eye: Introducing the pupil old/new effect. Psychophysiology 2008, 45, 130–140.

- Wang, C.-A.; Munoz, D.P. A circuit for pupil orienting responses: Implications for cognitive modulation of pupil size. Curr. Opin. Neurobiol. 2015, 33, 134–140.

- Chang, C.-C.; Lin, C.-J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. (TIST) 2011, 2, 1–27.

- Bradley, M.M.; Miccoli, L.; Escrig, M.A.; Lang, P.J. The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology 2008, 45, 602–607.

- Wu, X.; Zheng, W.-L.; Lu, B.-L. Investigating EEG-Based Functional Connectivity Patterns for Multimodal Emotion Recognition. arXiv 2020, arXiv:2004.01973.

- SensoMotoric Instruments. Begaze 2.2 Manual; SensoMotoric Instruments: Teltow, Germany, 2009.

- Xu, X.; Quan, C.; Ren, F. Facial expression recognition based on Gabor Wavelet transform and Histogram of Oriented Gradients. In Proceedings of the 2015 IEEE International Conference on Mechatronics and Automation (ICMA), Beijing, China, 2–5 August 2015; pp. 2117–2122.

- Huang, X.; He, Q.; Hong, X.; Zhao, G.; Pietikainen, M. Improved spatiotemporal local monogenic binary pattern for emotion recognition in the wild. In Proceedings of the 16th International Conference on Multimodal Interaction, Istanbul, Turkey, 12–16 November 2014; pp. 514–520.

- Saeed, A.; Al-Hamadi, A.; Niese, R. The effectiveness of using geometrical features for facial expression recognition. In Proceedings of the 2013 IEEE International Conference on Cybernetics (CYBCO), Lausanne, Switzerland, 13–15 June 2013; pp. 122–127.

- Ekman, P.; Rosenberg, E.L. What the Face Reveals: Basic and Applied Studies of Spontaneous Expression Using the Facial Action Coding System (FACS); Oxford University Press: New York, NY, USA, 1997.

- Huang, Y.; Yang, J.; Liao, P.; Pan, J. Fusion of facial expressions and EEG for multimodal emotion recognition. Comput. Intell. Neurosci. 2017, 2017.

- Sokolov, S.; Velchev, Y.; Radeva, S.; Radev, D. Human Emotion Estimation from EEG and Face Using Statistical Features and SVM. In Proceedings of the Fourth International Conference on Computer Science and Information Technology, Geneva, Switzerland, 25–26 March 2017.

- Chang, C.Y.; Tsai, J.S.; Wang, C.J.; Chung, P.C. Emotion recognition with consideration of facial expression and physiological signals. In Proceedings of the IEEE Conference on Computational Intelligence in Bioinformatics & Computational Biology, Nashville, TN, USA, 30 March–2 April 2009.

- Chunawale, A.; Bedekar, D. Human Emotion Recognition using Physiological Signals: A Survey. In Proceedings of the 2nd International Conference on Communication & Information Processing (ICCIP), Tokyo, Japan, 20 April 2020.

- Vijayakumar, S.; Flynn, R.; Murray, N. A comparative study of machine learning techniques for emotion recognition from peripheral physiological signals. In Proceedings of the ISSC 2020 31st Irish Signals and System Conference, Letterkenny, Ireland, 11–12 June 2020.

- Liu, Y.; Sourina, O. Real-Time Subject-Dependent EEG-Based Emotion Recognition Algorithm. In Transactions on Computational Science XXIII; Springer: Berlin/Heidelberg, Germany, 2014; pp. 199–223.

- Hjorth, B. EEG analysis based on time domain properties. Electroencephalogr. Clin. Neurophysiol. 1970, 29, 306–310.

- Thammasan, N.; Moriyama, K.; Fukui, K.I.; Numao, M. Continuous Music-Emotion Recognition Based on Electroencephalogram. IEICE Trans. Inf. Syst. 2016, 99, 1234–1241.

- Shi, L.C.; Jiao, Y.Y.; Lu, B.L. Differential entropy feature for EEG-based vigilance estimation. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 6627–6630.

- Zheng, W.-L.; Zhu, J.-Y.; Lu, B.-L. Identifying stable patterns over time for emotion recognition from EEG. IEEE Trans. Affect. Comput. 2017, 10, 417–429.

- Akin, M. Comparison of Wavelet Transform and FFT Methods in the Analysis of EEG Signals. J. Med. Syst. 2002, 26, 241–247.

- Kıymık, M.K.; Güler, N.; Dizibüyük, A.; Akın, M. Comparison of STFT and wavelet transform methods in determining epileptic seizure activity in EEG signals for real-time application. Comput. Biol. Med. 2005, 35, 603–616.

- Hadjidimitriou, S.K.; Hadjileontiadis, L.J. Toward an EEG-Based Recognition of Music Liking Using Time-Frequency Analysis. IEEE Trans. Biomed. Eng. 2012, 59, 3498–3510.

- Liao, J.; Zhong, Q.; Zhu, Y.; Cai, D. Multimodal Physiological Signal Emotion Recognition Based on Convolutional Recurrent Neural Network. IOP Conf. Ser. Mater. Sci. Eng. 2020, 782, 032005.

- Kwon, Y.-H.; Shin, S.-B.; Kim, S.-D. Electroencephalography Based Fusion Two-Dimensional (2D)-Convolution Neural Networks (CNN) Model for Emotion Recognition System. Sensors 2018, 18, 1383.

- Rahimi, A.; Datta, S.; Kleyko, D.; Frady, E.P.; Olshausen, B.; Kanerva, P.; Rabaey, J.M. High-Dimensional Computing as a Nanoscalable Paradigm. IEEE Trans. Circuits Syst. I Regul. Pap. 2017, 64, 2508–2521.

- Montagna, F.; Rahimi, A.; Benatti, S.; Rossi, D.; Benini, L. PULP-HD: Accelerating Brain-Inspired High-Dimensional Computing on a Parallel Ultra-Low Power Platform. In Proceedings of the 2018 55th ACM/ESDA/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 24–28 June 2018.

- Rahimi, A.; Kanerva, P.; Millán, J.D.R.; Rabaey, J.M. Hyperdimensional Computing for Noninvasive Brain–Computer Interfaces: Blind and One-Shot Classification of EEG Error-Related Potentials. In Proceedings of the 10th EAI International Conference on Bio-Inspired Information and Communications Technologies (Formerly BIONETICS), Hoboken, NJ, USA, 15–17 March 2017.

- Rahimi, A.; Kanerva, P.; Benini, L.; Rabaey, J.M. Efficient Biosignal Processing Using Hyperdimensional Computing: Network Templates for Combined Learning and Classification of ExG Signals. Proc. IEEE 2019, 107, 123–143.

- Chang, E.-J.; Rahimi, A.; Benini, L.; Wu, A.-Y.A. Hyperdimensional Computing-based Multimodality Emotion Recognition with Physiological Signals. In Proceedings of the 2019 IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), Hsinchu, Taiwan, 18–20 March 2019. [Google Scholar]

- Huppert, T.J.; Hoge, R.D.; Diamond, S.G.; Franceschini, M.A.; Boas, D.A. A temporal comparison of BOLD, ASL, and NIRS hemodynamic responses to motor stimuli in adult humans. Neuroimage 2006, 29, 368–382. [Google Scholar] [CrossRef] [PubMed]

- Jasdzewski, G.; Strangman, G.; Wagner, J.; Kwong, K.K.; Poldrack, R.A.; Boas, D.A. Differences in the hemodynamic response to event-related motor and visual paradigms as measured by near-infrared spectroscopy. Neuroimage 2003, 20, 479–488. [Google Scholar] [CrossRef]

- Malonek, D.; Grinvald, A. Interactions between electrical activity and cortical microcirculation revealed by imaging spectroscopy: Implications for functional brain mapping. Science 1996, 272, 551–554. [Google Scholar] [CrossRef] [PubMed]

- Ayaz, H.; Shewokis, P.A.; Curtin, A.; Izzetoglu, M.; Izzetoglu, K.; Onaral, B. Using MazeSuite and functional near infrared spectroscopy to study learning in spatial navigation. J. Vis. Exp. 2011, 56, e3443. [Google Scholar] [CrossRef]

- Liao, C.H.; Worsley, K.J.; Poline, J.-B.; Aston, J.A.; Duncan, G.H.; Evans, A.C. Estimating the delay of the fMRI response. NeuroImage 2002, 16, 593–606. [Google Scholar] [CrossRef]

- Buxton, R.B.; Uludağ, K.; Dubowitz, D.J.; Liu, T.T. Modeling the hemodynamic response to brain activation. Neuroimage 2004, 23, S220–S233. [Google Scholar] [CrossRef]

- Duckett, S.G.; Ginks, M.; Shetty, A.K.; Bostock, J.; Gill, J.S.; Hamid, S.; Kapetanakis, S.; Cunliffe, E.; Razavi, R.; Carr-White, G. Invasive acute hemodynamic response to guide left ventricular lead implantation predicts chronic remodeling in patients undergoing cardiac resynchronization therapy. J. Am. Coll. Cardiol. 2011, 58, 1128–1136. [Google Scholar] [CrossRef]

- Sun, Y.; Ayaz, H.; Akansu, A.N. Neural correlates of affective context in facial expression analysis: A simultaneous EEG-fNIRS study. In Proceedings of the 2015 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Orlando, FL, USA, 14–16 December 2015; pp. 820–824. [Google Scholar]

- Morioka, H.; Kanemura, A.; Morimoto, S.; Yoshioka, T.; Oba, S.; Kawanabe, M.; Ishii, S. Decoding spatial attention by using cortical currents estimated from electroencephalography with near-infrared spectroscopy prior information. Neuroimage 2014, 90, 128–139. [Google Scholar] [CrossRef]

- Ahn, S.; Nguyen, T.; Jang, H.; Kim, J.G.; Jun, S.C. Exploring neuro-physiological correlates of drivers’ mental fatigue caused by sleep deprivation using simultaneous EEG, ECG, and fNIRS data. Front. Hum. Neurosci. 2016, 10, 219. [Google Scholar] [CrossRef]

- Fazli, S.; Mehnert, J.; Steinbrink, J.; Curio, G.; Villringer, A.; Müller, K.-R.; Blankertz, B. Enhanced performance by a hybrid NIRS–EEG brain computer interface. Neuroimage 2012, 59, 519–529.

- Tomita, Y.; Vialatte, F.-B.; Dreyfus, G.; Mitsukura, Y.; Bakardjian, H.; Cichocki, A. Bimodal BCI using simultaneously NIRS and EEG. IEEE Trans. Biomed. Eng. 2014, 61, 1274–1284.

- Liu, Z.; Rios, C.; Zhang, N.; Yang, L.; Chen, W.; He, B. Linear and nonlinear relationships between visual stimuli, EEG and BOLD fMRI signals. Neuroimage 2010, 50, 1054–1066.

- Pistoia, F.; Carolei, A.; Iacoviello, D.; Petracca, A.; Sarà, M.; Spezialetti, M.; Placidi, G. EEG-detected olfactory imagery to reveal covert consciousness in minimally conscious state. Brain Inj. 2015, 29, 1729–1735.

- Zander, T.O.; Kothe, C. Towards passive brain–computer interfaces: Applying brain–computer interface technology to human–machine systems in general. J. Neural Eng. 2011, 8, 025005.

- Lang, P.J.; Bradley, M.M.; Cuthbert, B.N. International affective picture system (IAPS): Technical manual and affective ratings. NIMH Center Study Emot. Atten. 1997, 1, 39–58.

- Pan, J.; Li, Y.; Wang, J. An EEG-based brain-computer interface for emotion recognition. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 2063–2067.

- Morris, J.D. Observations: SAM: The Self-Assessment Manikin; an efficient cross-cultural measurement of emotional response. J. Advert. Res. 1995, 35, 63–68.

- Kim, J.; André, E. Emotion recognition based on physiological changes in music listening. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 2067–2083.

- Iacoviello, D.; Petracca, A.; Spezialetti, M.; Placidi, G. A real-time classification algorithm for EEG-based BCI driven by self-induced emotions. Comput. Methods Programs Biomed. 2015, 122, 293–303.

- An, X.; Höhne, J.; Ming, D.; Blankertz, B. Exploring combinations of auditory and visual stimuli for gaze-independent brain-computer interfaces. PLoS ONE 2014, 9, e111070.

- Wang, F.; He, Y.; Pan, J.; Xie, Q.; Yu, R.; Zhang, R.; Li, Y. A novel audiovisual brain-computer interface and its application in awareness detection. Sci. Rep. 2015, 5, 1–12.

- Gilleade, K.; Dix, A.; Allanson, J. Affective videogames and modes of affective gaming: Assist me, challenge me, emote me. In Proceedings of the International Conference on Changing Views, Vancouver, BC, Canada, 16–20 June 2005.

- Pan, J.; Xie, Q.; Qin, P.; Chen, Y.; He, Y.; Huang, H.; Wang, F.; Ni, X.; Cichocki, A.; Yu, R. Prognosis for patients with cognitive motor dissociation identified by brain-computer interface. Brain 2020, 143, 1177–1189.

- George, L.; Lotte, F.; Abad, R.V.; Lécuyer, A. Using scalp electrical biosignals to control an object by concentration and relaxation tasks: Design and evaluation. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 6299–6302.

- Hjelm, S.I. Research+ Design: The Making of Brainball. Interactions 2003, 10, 26–34.

- Brousseau, B.; Rose, J.; Eizenman, M. Hybrid Eye-Tracking on a Smartphone with CNN Feature Extraction and an Infrared 3D Model. Sensors 2020, 20, 543.

- Boto, E.; Holmes, N.; Leggett, J.; Roberts, G.; Shah, V.; Meyer, S.S.; Muñoz, L.D.; Mullinger, K.J.; Tierney, T.M.; Bestmann, S. Moving magnetoencephalography towards real-world applications with a wearable system. Nature 2018, 555, 657–661.

- Chen, M.; Ma, Y.; Song, J.; Lai, C.-F.; Hu, B. Smart clothing: Connecting human with clouds and big data for sustainable health monitoring. Mob. Netw. Appl. 2016, 21, 825–845.

- Lim, J.Z.; Mountstephens, J.; Teo, J. Emotion Recognition Using Eye-Tracking: Taxonomy, Review and Current Challenges. Sensors 2020, 20, 2384.

- Von Lühmann, A.; Wabnitz, H.; Sander, T.; Müller, K.-R. M3BA: A mobile, modular, multimodal biosignal acquisition architecture for miniaturized EEG-NIRS-based hybrid BCI and monitoring. IEEE Trans. Biomed. Eng. 2016, 64, 1199–1210.

- Radüntz, T.; Scouten, J.; Hochmuth, O.; Meffert, B. Automated EEG artifact elimination by applying machine learning algorithms to ICA-based features. J. Neural Eng. 2017, 14, 046004.

- Du, X.; Li, Y.; Zhu, Y.; Ren, Q.; Zhao, L. Removal of artifacts from EEG signal. Sheng Wu Yi Xue Gong Cheng Xue Za Zhi J. Biomed. Eng. Shengwu Yixue Gongchengxue Zazhi 2008, 25, 464–467, 471.

- Urigüen, J.A.; Garcia-Zapirain, B. EEG artifact removal—State-of-the-art and guidelines. J. Neural Eng. 2015, 12, 031001.

- Islam, M.K.; Rastegarnia, A.; Yang, Z. Methods for artifact detection and removal from scalp EEG: A review. Neurophysiol. Clin. Clin. Neurophysiol. 2016, 46, 287–305.

- Jung, T.P.; Makeig, S.; Humphries, C.; Lee, T.W.; Mckeown, M.J.; Iragui, V.; Sejnowski, T.J. Removing electroencephalographic artifacts by blind source separation. Psychophysiology 2000, 37, 163–178.

- Dong, L.; Zhang, Y.; Zhang, R.; Zhang, X.; Gong, D.; Valdes-Sosa, P.A.; Xu, P.; Luo, C.; Yao, D. Characterizing nonlinear relationships in functional imaging data using eigenspace maximal information canonical correlation analysis (emiCCA). Neuroimage 2015, 109, 388–401.

- Makeig, S.; Bell, A.J.; Jung, T.-P.; Sejnowski, T.J. Independent component analysis of electroencephalographic data. In Proceedings of the Advances in Neural Information Processing Systems, Denver, CO, USA, 2–5 December 1996; pp. 145–151.

- LeDoux, J. The Emotional Brain: The Mysterious Underpinnings of Emotional Life; Simon and Schuster: New York, NY, USA, 1998.

- Li, M.; Lu, B.-L. Emotion classification based on gamma-band EEG. In Proceedings of the 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis, MN, USA, 2–6 September 2009; pp. 1223–1226.

- Zheng, W.-L.; Lu, B.-L. Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Auton. Ment. Dev. 2015, 7, 162–175.

- Samara, A.; Menezes, M.L.R.; Galway, L. Feature extraction for emotion recognition and modelling using neurophysiological data. In Proceedings of the 2016 15th International Conference on Ubiquitous Computing and Communications and 2016 International Symposium on Cyberspace and Security (IUCC-CSS), Granada, Spain, 14–16 December 2016; pp. 138–144.

- Li, Z.; Qiu, L.; Li, R.; He, Z.; Xiao, J.; Liang, Y.; Wang, F.; Pan, J. Enhancing BCI-Based Emotion Recognition Using an Improved Particle Swarm Optimization for Feature Selection. Sensors 2020, 20, 3028.

- Gharavian, D.; Sheikhan, M.; Nazerieh, A.; Garoucy, S. Speech emotion recognition using FCBF feature selection method and GA-optimized fuzzy ARTMAP neural network. Neural Comput. Appl. 2012, 21, 2115–2126.

- Nawaz, R.; Cheah, K.H.; Nisar, H.; Voon, Y.V. Comparison of different feature extraction methods for EEG-based emotion recognition. Biocybern. Biomed. Eng. 2020, 40, 910–926.

- Kang, J.-S.; Kavuri, S.; Lee, M. ICA-evolution based data augmentation with ensemble deep neural networks using time and frequency kernels for emotion recognition from EEG-data. IEEE Trans. Affect. Comput. 2019.

- Aithal, M.B.; Sahare, S.L. Emotion Detection from Distorted Speech Signal using PCA-Based Technique. Emotion 2015, 2, 14–19.

- Du, C.; Du, C.; Wang, H.; Li, J.; Zheng, W.L.; Lu, B.L.; He, H. Semi-supervised Deep Generative Modelling of Incomplete Multi-Modality Emotional Data. In Proceedings of the ACM Multimedia Conference, Seoul, Korea, 22–26 October 2018.

- Sangineto, E.; Zen, G.; Ricci, E.; Sebe, N. We are not all equal: Personalizing models for facial expression analysis with transductive parameter transfer. In Proceedings of the 22nd ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; pp. 357–366.

- Li, J.; Qiu, S.; Du, C.; Wang, Y.; He, H. Domain Adaptation for EEG Emotion Recognition Based on Latent Representation Similarity. IEEE Trans. Cogn. Dev. Syst. 2020, 12, 344–353.

- Joshi, J.; Goecke, R.; Alghowinem, S.; Dhall, A.; Wagner, M.; Epps, J.; Parker, G.; Breakspear, M. Multimodal assistive technologies for depression diagnosis and monitoring. J. Multimodal User Interfaces 2013, 7, 217–228.

- Samson, A.C.; Hardan, A.Y.; Podell, R.W.; Phillips, J.M.; Gross, J.J. Emotion regulation in children and adolescents with autism spectrum disorder. Autism Res. 2015, 8, 9–18.

- Gonzalez, H.A.; Yoo, J.; Elfadel, I.A.M. EEG-based Emotion Detection Using Unsupervised Transfer Learning. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 694–697.

- Elatlassi, R. Modeling Student Engagement in Online Learning Environments Using Real-Time Biometric Measures: Electroencephalography (EEG) and Eye-Tracking. Master’s Thesis, Oregon State University, Corvallis, OR, USA, 2018.

- Park, C.; Shahrdar, S.; Nojoumian, M. EEG-based classification of emotional state using an autonomous vehicle simulator. In Proceedings of the 2018 IEEE 10th Sensor Array and Multichannel Signal Processing Workshop (SAM), Sheffield, UK, 8–11 July 2018; pp. 297–300.

- Sourina, O.; Wang, Q.; Liu, Y.; Nguyen, M.K. A Real-time Fractal-based Brain State Recognition from EEG and its Applications. In Proceedings of the BIOSIGNALS 2011—The International Conference on Bio-Inspired Systems and Signal Processing, Rome, Italy, 26–29 January 2011.

| Database (Access) | Modality | Subjects | Stimuli | Emotional Model/States |
|---|---|---|---|---|
| DEAP [36] www.eecs.qmul.ac.uk/mmv/datasets/deap/ | EEG, EOG, EMG, GSR, RSP, BVP, ST, facial videos | 32 | video | dimensional/valence, arousal, liking |
| SEED-IV [37] bcmi.sjtu.edu.cn/~seed/seed-iv.html | EEG, eye tracking | 15 | video | discrete/positive, negative, neutral, fear |
| eNTERFACE’06 [38] www.enterface.net/results/ | EEG, fNIRS, facial videos | 5 | IAPS | discrete/positive, negative, calm |
| MAHNOB-HCI [39] mahnob-db.eu/hci-tagging/ | EEG, GSR, ECG, RSP, ST, eye gaze, facial videos | 27 | video | dimensional/valence, arousal |
| AMIGOS [40] www.eecs.qmul.ac.uk/mmv/datasets/amigos/index.html | EEG, ECG, GSR | 40 | video | dimensional/valence, arousal, dominance, liking, familiarity; discrete/basic emotions |
| EMOEEG [41] www.tsi.telecom-paristech.fr/aao/en/2017/03/03/ | EEG, EOG, EMG, ECG, skin conductance, temperature | 8 | IAPS, video | dimensional/valence, arousal |
| ASCERTAIN [42] mhug.disi.unitn.it/index.php/datasets/ascertain/ | EEG, ECG, GSR, visual | 58 | video | dimensional/valence, arousal |
| MPED [43] | EEG, ECG, RSP, GSR | 23 | video | discrete/joy, funny, anger, fear, disgust, sadness, neutrality |
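Several results in the following comparison are reported as two- or three-class problems (for example "valence/2" or "valence/3"), even though databases such as DEAP and MAHNOB-HCI collect dimensional self-assessment ratings. The sketch below shows one way such ratings can be discretized. It assumes a 1 to 9 rating scale, a binary threshold of 5 and hypothetical three-class cut points of 3 and 6; the function names are illustrative, and the cited studies may use different labeling rules.

```python
from typing import List, Sequence


def binarize(ratings: Sequence[float], threshold: float = 5.0) -> List[int]:
    """Map dimensional ratings to two classes.

    1 = "high" (rating strictly above threshold), 0 = "low".
    threshold=5.0 is an assumed midpoint of a 1-9 rating scale.
    """
    return [1 if r > threshold else 0 for r in ratings]


def three_class(ratings: Sequence[float],
                low_cut: float = 3.0,
                high_cut: float = 6.0) -> List[int]:
    """Map dimensional ratings to three classes (cut points are hypothetical).

    0 = low (<= low_cut), 1 = medium (<= high_cut), 2 = high (> high_cut).
    """
    labels = []
    for r in ratings:
        if r <= low_cut:
            labels.append(0)
        elif r <= high_cut:
            labels.append(1)
        else:
            labels.append(2)
    return labels


if __name__ == "__main__":
    valence = [2.0, 5.0, 7.5, 9.0]
    print(binarize(valence))     # [0, 0, 1, 1]
    print(three_class(valence))  # [0, 1, 2, 2]
```

Because the class boundaries are a modeling choice, accuracies obtained with different thresholds are not directly comparable, which is one reason results across studies should be read with care.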

| Ref. | Database (Modality) | Feature Extraction | Results (accuracy; target/no. of classes) | Innovation |
|---|---|---|---|---|
| [44] | SEED-IV (EEG + EYE); DEAP (EEG + PPS) | SEED-IV: EEG: PSD and DE in four bands. EYE: pupil diameter, fixation, blink duration, saccade and event statistics. DEAP: preprocessed data. | SEED-IV: 91.01% (avg./4); DEAP: 83.25% (avg. of valence/arousal/dominance/liking, 2 classes each) | Using a BDAE to fuse EEG features with the other features, this study shows that the shared representation is a good feature for distinguishing different emotions. |
| [45] | DEAP (EEG + PPS) | EEG + PPS: high-level temporal features extracted implicitly. | 92.87% (arousal/2); 92.30% (valence/2) | The MMResLSTM learns complex high-level features and the temporal correlation between the two modalities through weight sharing. |
| [46] | SEED-IV (EEG + EYE); DEAP (EEG + PPS) | SEED-IV: EEG: DE in five bands. EYE: PSD and DE features of pupil diameters. DEAP: EEG: DE in four bands. PPS: statistical features. | SEED-IV: 93.97% (avg./4); DEAP: 83.23% (arousal/2), 83.82% (valence/2) | The bimodal-LSTM model exploits both the temporal and the frequency-domain information in the features. |
| [21] | DEAP (EEG + PPS) | EEG: power differences, statistical features. PPS: statistical features, frequency-domain features. | 83.00% (valence/2); 84.10% (arousal/2) | The parsimonious structure of the MESAE can be properly identified, leading to higher generalization capability than other state-of-the-art deep-learning models. |
| [19] | MAHNOB-HCI (EEG + EYE) | EEG: PSD in five bands, spectral power asymmetry. EYE: pupil diameter, gaze distance, eye blinking. | 76.40% (valence/3); 68.50% (arousal/3) | Decision-level fusion of EEG and eye movements by confidence summation can achieve good classification results. |
| [43] | MPED (EEG + GSR + RSP + ECG) | EEG: PSD in five bands, HOC, Hjorth, HHS, STFT. GSR: statistical features. RSP + ECG: energy mean and SSE values. | 71.57% (avg./3: positive, negative, neutral) | The novel attention-LSTM (A-LSTM) strengthens the contribution of useful sequences to extract more discriminative features. |
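Two recurring ingredients in the table above are differential entropy (DE) features computed per frequency band and decision-level fusion by confidence summation [19]. The sketch below illustrates both under stated assumptions: the band edges are assumed, band limiting is done with a crude FFT mask rather than the filters or short-time Fourier transforms used in the cited work, DE uses the Gaussian closed form h = 0.5 · log(2πeσ²), and each modality's classifier is assumed to output per-class probabilities. All function names are hypothetical.

```python
import numpy as np

# Assumed band edges in Hz; exact limits differ between studies.
BANDS = {
    "delta": (1.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 14.0),
    "beta": (14.0, 31.0),
    "gamma": (31.0, 50.0),
}


def band_filter(signal: np.ndarray, fs: float, low: float, high: float) -> np.ndarray:
    """Crude band-pass: keep FFT bins in [low, high) Hz and zero the rest."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    spectrum[(freqs < low) | (freqs >= high)] = 0.0
    return np.fft.irfft(spectrum, n=signal.size)


def differential_entropy(x: np.ndarray) -> float:
    """DE of a signal assumed Gaussian: 0.5 * log(2 * pi * e * variance)."""
    return float(0.5 * np.log(2.0 * np.pi * np.e * np.var(x)))


def de_features(eeg: np.ndarray, fs: float) -> np.ndarray:
    """Map an EEG array of shape (channels, samples) to DE features (channels, bands)."""
    feats = np.empty((eeg.shape[0], len(BANDS)))
    for c, channel in enumerate(eeg):
        for b, (low, high) in enumerate(BANDS.values()):
            feats[c, b] = differential_entropy(band_filter(channel, fs, low, high))
    return feats


def confidence_sum_fusion(prob_list) -> np.ndarray:
    """Decision-level fusion: sum each modality's per-class probabilities
    (each array has shape (trials, classes)) and take the argmax per trial."""
    total = np.sum(np.stack(prob_list), axis=0)
    return np.argmax(total, axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    eeg = rng.standard_normal((4, 800))  # synthetic: 4 channels, 4 s at 200 Hz
    print(de_features(eeg, fs=200.0).shape)  # (4, 5)

    p_eeg = np.array([[0.6, 0.4], [0.9, 0.1]])  # EEG classifier outputs
    p_eye = np.array([[0.2, 0.8], [0.4, 0.6]])  # eye-movement classifier outputs
    print(confidence_sum_fusion([p_eeg, p_eye]))  # [1 0]
```

Summing confidences lets a confident modality override a less confident one: on the first trial, the eye-movement classifier's 0.8 for class 1 outweighs the EEG classifier's 0.6 for class 0, so the fused decision is class 1.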

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


He, Z.; Li, Z.; Yang, F.; Wang, L.; Li, J.; Zhou, C.; Pan, J. Advances in Multimodal Emotion Recognition Based on Brain–Computer Interfaces. Brain Sci. 2020, 10, 687. https://doi.org/10.3390/brainsci10100687
