1. Introduction

Bug reports provide important information for developers who maintain a software system. Developers use bug reports to share and discuss information with others when fixing bugs, which accounts for approximately 40% of software maintenance tasks. Even after a bug is fixed, bug reports are used for various purposes, such as checking the history of past changes and referring to relevant bug fixes [1]. In general, bug reports are maintained in bug-tracking systems such as Bugzilla and Jira.

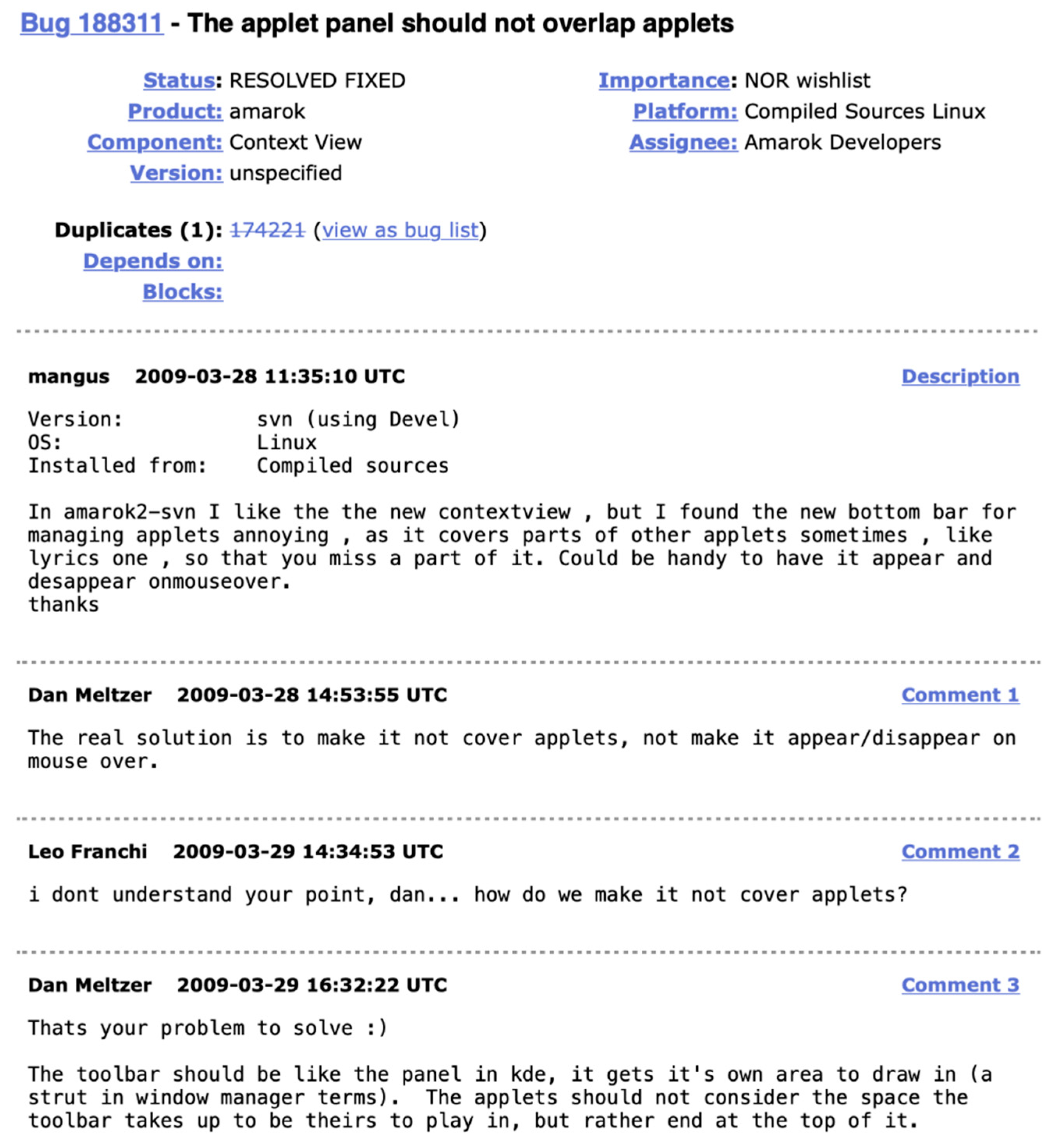

Figure 1 shows an example of a bug report on the Bugzilla platform.

As shown in Figure 1, each bug report contains a detailed description of the bug with a unique number and a title. Below the description of the bug, comments are listed that contain the developers' discussion on how to reproduce or fix the bug. Because of this structure, a bug report is generally regarded as a document in the form of a sequential conversation. In addition, bug reports carry various metadata, such as tags indicating the type or status of bugs, information about developers (e.g., bug manager and assignee), and links to relevant bug reports.

One example of how bug reports are utilized during software maintenance is the duplicate bug report detection task [2]. This task determines whether similar bug reports exist for the same bug and tags such bug reports as “duplicates.” To determine whether similar bug reports exist for the same bug, developers often perform keyword-based searches in bug-tracking systems. When bug reports are retrieved, a developer reads them one by one, which is very time consuming. Because a bug report is in the form of a conversation, it is often long and verbose. Therefore, developers sometimes have difficulty finding the desired information in the bug report. To solve this problem, researchers have proposed using summaries of bug reports. One option is for a developer to write a summary of the bug when the bug is fixed. However, this requires additional work by the developer and, thus, may not be adopted in practice. As a result, researchers are working on automatic methods for summarizing bug reports.

To propose a method for automatically generating summaries of bug reports, Rastkar et al. [2] applied an existing supervised learning technique to bug reports and conducted an experiment in which actual developers used the generated summaries to detect duplicate bug reports. The experiment showed that the time spent on the detection work was reduced by approximately 30% without affecting the quality of the output. In addition, in a questionnaire survey of the participating developers, the developers responded that the summaries made the bug reports easy to understand. These results showed the effectiveness of using summaries. Since then, various bug report summarization methods have been studied to improve the quality of bug report summaries. In most cases, however, researchers have focused on learning methods, such as adopting machine learning algorithms [3,4,5], rather than on how to exploit specific domain knowledge, such as the characteristics of bug reports. Jiang et al. [6] used the characteristics of bug reports to improve the quality of bug report summaries. Noting that existing bug report summarization methods are used for detecting duplicate bugs, they proposed a bug report summarization method that uses duplicate bug reports, and showed that using duplicates relationships among bug reports can improve the quality of bug report summaries. However, among the various possible bug report relationships (cf. Table 1), they used only duplicates relationships, so the effect of applying their method to bug reports is limited.

In this paper, we propose a method to automatically generate a summary of a bug report, which we call the Relation-based Bug Report Summarizer (RBRS). It extends the existing summarization method [6] with the ‘blocks’ and ‘depends-on’ dependencies in addition to the ‘duplicates’ relationship. To consider the significance of multiple relationships simultaneously, we use the weighted-PageRank algorithm, which extends the PageRank algorithm used in the previous study [6]. In addition, we extended the Bug Report Corpus (BRC), which was previously used as the subject of bug report summarization studies, and created a corpus that captures the relationships between bug reports. We evaluated our proposed method with this corpus as test data. The results showed that the precision, recall, and F1 score of the generated summaries all improved compared to the existing method [6]. We also showed that using more relationships extends the application scope of the summarization method [6].

This paper is organized as follows. Section 2 introduces existing studies related to bug report summarization methods. Section 3 explains the background knowledge of bug reports and then describes our proposed method for summarizing bug reports based on weighted-PageRank using dependency relationships. Section 4 explains the experimental set-up. Section 5 evaluates our implemented bug report summarization method. Section 6 discusses the additional experimental results. Section 7 discusses the limitations of our work and possible improvements. Finally, Section 8 concludes our work and discusses future research directions.

3. A Bug Report Summarization Method Based on Dependency Relationships

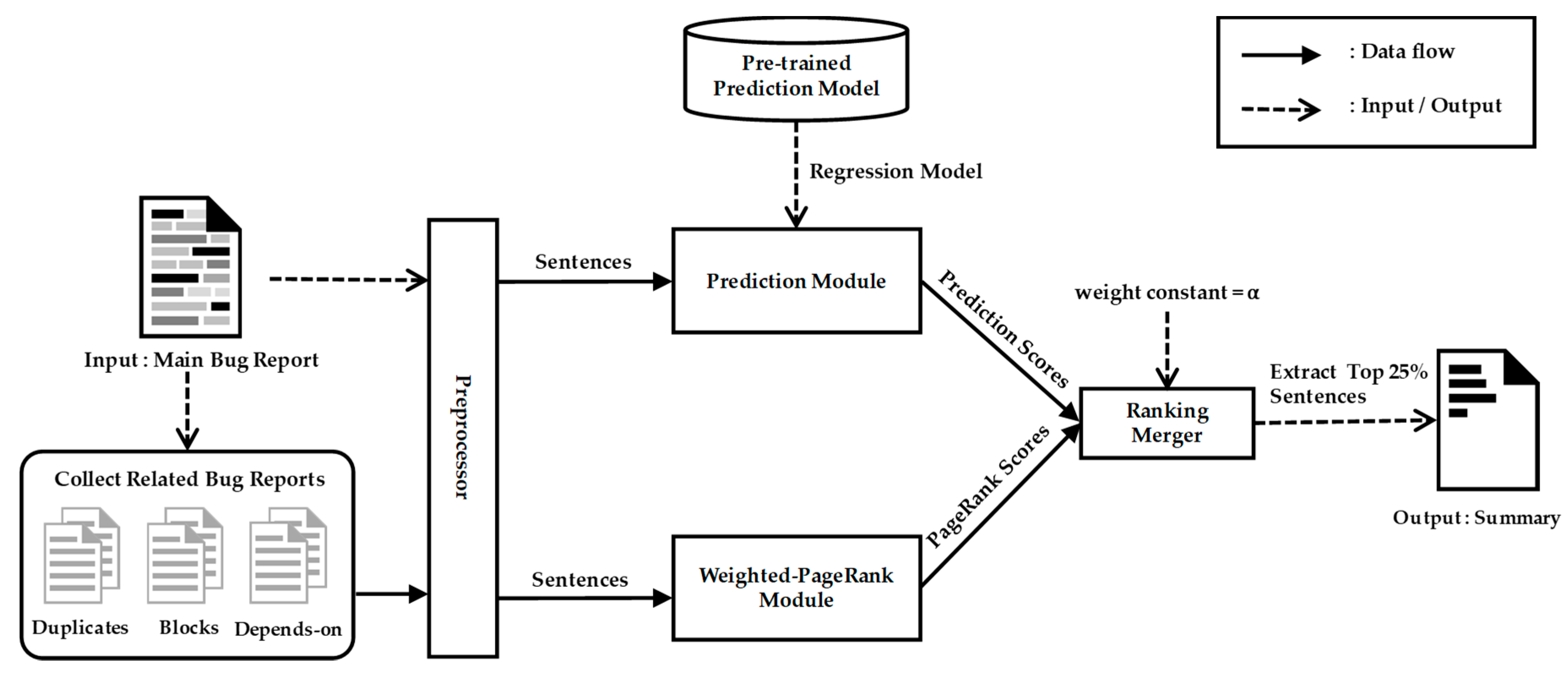

We propose a summarization method that utilizes the dependency relationships between bug reports in addition to duplicates relationships. Our proposed method, the Relation-based Bug Report Summarizer (RBRS), is shown in Figure 2. As shown in Figure 2, the input is the bug report that a user wants to summarize. RBRS first analyzes the duplicates, blocks, and depends-on associations of the bug report in the bug report repository and collects the relevant bug reports. Next, RBRS preprocesses the bug reports by using the preprocessing module. Afterward, RBRS forms a bug report graph from the preprocessed bug reports and their relationships. RBRS then runs the weighted-PageRank algorithm to assign a PageRank score to each sentence of the bug report. Additionally, RBRS assigns a second score to each sentence of the bug report based on Rastkar et al.’s [9] supervised summarization method. The ranking merger module combines the two scores in a weighted sum, and the combined score is the final score for each sentence. RBRS composes a summary by selecting the top 25% of sentences according to their final scores.

Section 3.1 introduces the relationships between bug reports. Section 3.2 describes the process of forming a graph among bug reports to apply the weighted-PageRank algorithm. Section 3.3 describes the preprocessing of bug reports. Section 3.4 explains how to apply the weighted-PageRank algorithm to our method. Finally, Section 3.5 describes the process of integrating the weighted-PageRank algorithm with Rastkar et al.’s [2] method by using the ranking merger module.

3.1. Association Relationships between Bug Reports

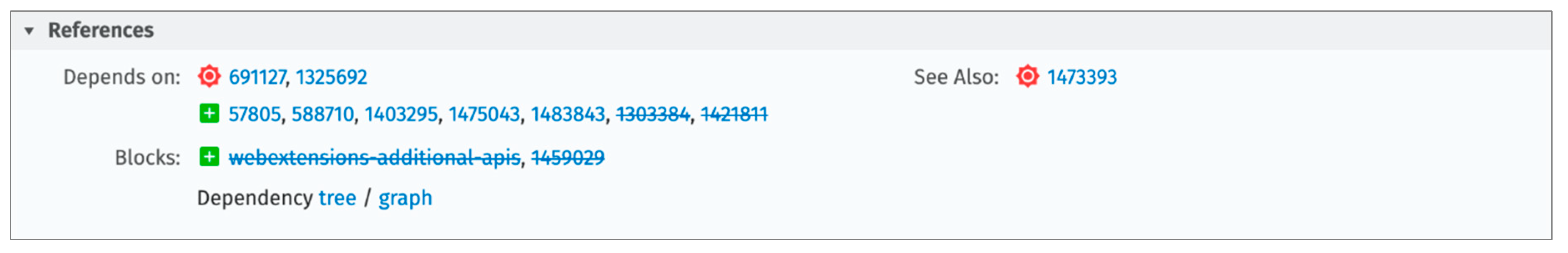

In general, a bug-tracking platform uses a variety of metadata to manage bug reports. A bug report contains not only the issue name, the author, and the date of the issue but also the type of bug, its status, and the assignee who is responsible for resolving the bug. Association relationships between bug reports are specified in the references metadata of a bug report to help fix the bug or to help resolve other relevant bugs after the bug is fixed. For example, as shown in Figure 3, on the Bugzilla platform, related bug reports are explicitly linked in the references of the bug report according to the type of relationship. These association relationships are created at various times. When creating a bug report, the author of the bug report may link other bug reports if needed. In the bug fix phase, developers may discuss the issue and add links to other bug reports. Even after the bug is fixed, developers can add links if the bug has an additional relationship with other bug reports.

There are various kinds of association relationships between bug reports. Bug-tracking platforms, such as Jira and Bugzilla, define the association relationships that are possible. As shown in Table 1, there are 10 or more relationships.

In practice, three association relationships are most commonly used, of which the first is the duplicates relationship. When A duplicates B, A and B are duplicated bug reports that contain the same content. Duplicated bug reports arise because, when a user or developer reports a bug or issue, there is no guarantee that it has not already been reported. A user or developer can perform keyword-based searches on a bug-tracking platform. However, it is practically difficult to have all users and developers search before writing bug reports. Therefore, once a bug report is created, it is common for the developer assigned to the bug fix to recognize the duplicated bug report, create a duplicates link to it, and close the bug report. Because duplicated bug reports describe the same bugs or issues, textual similarities exist between them.

The second and third association relationships are the blocks and depends-on relationships. Here, we define these two relationships as the dependency relationships between bug reports. The two are inverses of each other: if A blocks B, or equivalently B depends on A, then bug A must be fixed before bug B can be fixed. The blocks and depends-on relationships can also be used for requirements such as adding a feature, not just for bug fixing; in that case, they mean that feature A must be added before feature B can be added. For example, in the Firefox browser on iOS, Bug 1473704 blocks Bug 1473716. In this case, Bug 1473704 concerns a feature that requires a user-configurable setting for the Firefox browser's dark mode (dimming the screen). Bug 1473716 reports that the slide bar to control dark mode needs to be redesigned. As in this example, most bug reports that have dependency relationships with each other tend to deal with similar subjects, which means that major keywords are shared and that there is textual similarity between them. We therefore expect that the dependency relationships can be used to improve the summary quality of bug reports, just as the duplicates relationship was used in [6].

3.2. Generating a Bug Report Graph

To apply the PageRank algorithm, a graph must be built from bug reports. This process consists of two steps. The first step is to build a directed graph whose nodes are bug reports by using a breadth-first-search (BFS) algorithm. The second step is to refine the nodes of the bug report graph to the sentence level. The graph completed through these two steps is called a bug report correlation graph. When building a bug report correlation graph, there are two configurable variables: the depth of search used by the BFS algorithm and the weight set for each edge.

In the first step, RBRS applies the BFS algorithm to create a directed graph that uses bug reports as nodes. First, RBRS sets the main bug report as the root node. Next, RBRS analyzes the metadata of the bug report to find all associated bug reports that are explicitly linked by an association relationship. Then, RBRS makes each such bug report a new node and connects it to the main bug report. The edges are formed according to the relationship with the main bug report as follows.

3.2.1. Duplicates Relationship

RBRS adds bidirectional edges between all nodes that are in a duplicates relationship so that they form a complete graph. Each of these edges is assigned the duplicates edge weight (default value = 1.0).

3.2.2. Blocks Relationship

RBRS creates a weighted edge (default weight = 1.0) from the main bug report to each bug report in a blocks relationship and a weighted edge (default weight = 1.0) in the opposite direction.

3.2.3. Depends-On Relationship

RBRS creates a weighted edge (default weight = 1.0) from the main bug report to each bug report in a depends-on relationship and a weighted edge (default weight = 1.0) in the opposite direction.

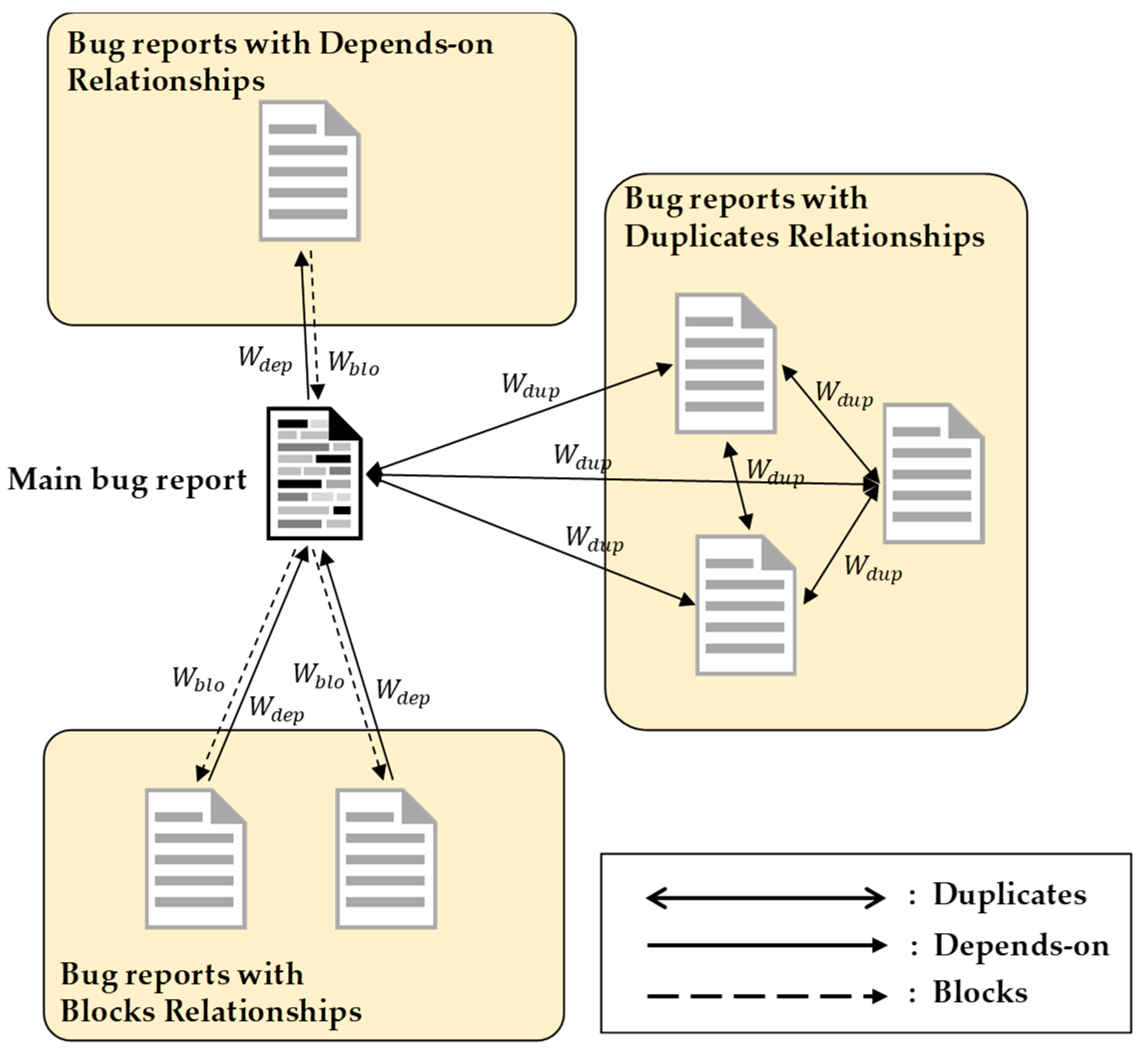

The above step describes a BFS search process with a depth of 1. Figure 4 shows a bug report graph at depth 1 for a bug report with one blocks bug report, two depends-on bug reports, and three duplicates bug reports. The process is repeated for all nodes according to the predefined depth of search. A bug report graph with depth N is a graph that contains all the bug reports that can be reached within at most N links (duplicates, blocks, or depends-on links) from the main bug report.
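The first step can be sketched in Python as follows. This is a minimal illustration rather than the RBRS implementation: the `links` dictionary, the function name `build_report_graph`, and the rule that the reverse edge of a blocks link carries the depends-on weight (and vice versa) are assumptions made for the example.

```python
from collections import deque
from itertools import permutations

# Assumption: the reverse edge of a dependency carries the inverse relation's weight.
INVERSE = {"blocks": "depends_on", "depends_on": "blocks"}


def build_report_graph(root, links, weights, max_depth=1):
    """Return {(src, dst): weight} for reports within max_depth links of root."""
    edges = {}
    depth = {root: 0}
    queue = deque([root])
    while queue:
        report = queue.popleft()
        if depth[report] >= max_depth:
            continue  # do not expand beyond the configured search depth
        related = links.get(report, {})
        # Duplicates: the report and all its duplicates form a complete graph.
        clique = [report] + list(related.get("duplicates", []))
        for src, dst in permutations(clique, 2):
            edges[(src, dst)] = weights["duplicates"]
        # Dependencies: one weighted edge in each direction.
        for relation, inverse in INVERSE.items():
            for target in related.get(relation, []):
                edges[(report, target)] = weights[relation]
                edges[(target, report)] = weights[inverse]
        # Enqueue newly discovered reports for the next BFS level.
        for targets in related.values():
            for target in targets:
                if target not in depth:
                    depth[target] = depth[report] + 1
                    queue.append(target)
    return edges


# Hypothetical data mirroring Figure 4: one blocks, two depends-on, three duplicates.
links = {
    "main": {"blocks": ["b1"], "depends_on": ["p1", "p2"],
             "duplicates": ["d1", "d2", "d3"]},
    "b1": {"blocks": ["far"]},  # only reachable at depth 2
}
weights = {"duplicates": 1.0, "blocks": 1.0, "depends_on": 1.0}
depth1 = build_report_graph("main", links, weights, max_depth=1)
depth2 = build_report_graph("main", links, weights, max_depth=2)
```

With a depth of 1, only the six directly linked reports join the main report in the graph; raising `max_depth` to 2 also pulls in the report linked from `b1`.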

The second step is to refine the nodes of the bug report graph. In the first step, each node in the bug report graph represents a bug report. However, to calculate the similarity and score of the sentences using the PageRank algorithm, each node needs to be split into sentence units. Therefore, RBRS refines each bug report node into sentence nodes. The edges of the graph are processed as follows. Assume that bug report nodes A and B exist. If bug report A has 10 sentences and bug report B has 5 sentences, node A is divided into nodes A1 through A10, and node B is divided into nodes B1 through B5. If there is an edge from node A to node B, that edge is refined into edges from node A1 to nodes B1–B5, from node A2 to nodes B1–B5, …, and from node A10 to nodes B1–B5. In total, 10 × 5 = 50 edges are created. The opposite edge is refined in the same way. Additionally, as nodes A1 through A10 are sentences belonging to the same bug report A, these internal nodes are connected to each other by weighted bidirectional edges so that they form a complete graph, in which each pair of distinct nodes is connected. At the end of this step, the bug report correlation graph is complete.
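The refinement step can be illustrated with a short Python sketch. The function name `refine_to_sentences`, the `(report, index)` node representation, and the `inner_weight` parameter are hypothetical choices for this example; the sketch only reproduces the edge expansion described above.

```python
from itertools import permutations, product


def refine_to_sentences(report_edges, sentence_counts, inner_weight=1.0):
    """Expand report-level edges into sentence-level edges.

    report_edges: {(src_report, dst_report): weight}
    sentence_counts: {report_id: number of sentences}
    Sentence nodes are represented as (report_id, index) tuples.
    """
    nodes = {
        report: [(report, i) for i in range(1, count + 1)]
        for report, count in sentence_counts.items()
    }
    edges = {}
    # A report edge A -> B becomes |A| x |B| sentence edges with the same weight.
    for (src, dst), weight in report_edges.items():
        for s, t in product(nodes[src], nodes[dst]):
            edges[(s, t)] = weight
    # Sentences of the same report are connected pairwise in both directions.
    for group in nodes.values():
        for s, t in permutations(group, 2):
            edges[(s, t)] = inner_weight
    return edges


# The example from the text: report A has 10 sentences and report B has 5.
report_edges = {("A", "B"): 1.0, ("B", "A"): 1.0}
sentence_edges = refine_to_sentences(report_edges, {"A": 10, "B": 5})
a_to_b = [e for e in sentence_edges if e[0][0] == "A" and e[1][0] == "B"]
```

Here `a_to_b` holds the 10 × 5 = 50 edges obtained from the single report-level edge from A to B.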

3.3. Preprocessing

Preprocessing consists of three steps. The first step is bug report filtering, the second step is comment filtering of bug reports, and the third step is sentence preprocessing.

The bug report filtering step excludes certain kinds of bug reports from the graph. A bug-tracking platform groups bugs or issues in a variety of ways. The most common method uses meta bug reports. For example, if there are various bug reports about the application's push notifications, a META report named <[META] Tracking bugs for push notification> is created and linked by depends-on relationships to the bug reports related to push notifications. The blocks relationships can also be linked in the opposite direction. In this way, bug reports can be easily categorized and managed, and developers can discuss the overall situation in META reports. However, as these META reports cover a wide range of topics, the specified relationships may not be used in their intended meaning; thus, the textual similarities between a META report and its linked bug reports are low, and the bug reports share few keywords. Therefore, RBRS removes META reports from the graph.

The second step is to filter the comments in the bug report. One of the major differences between a bug report and a regular document is that the bug report contains not only natural language but also non-natural-language content such as code snippets and stack traces. The bug report also contains free-form expressions, such as lists of keywords that are not written as complete sentences. Since such content is not suitable for natural language processing-based methods, research has been conducted to remove it as noise. For example, Huai et al. [5] categorized bug report comments into seven categories: Bug Description, Opinion Expression, Information Giving, Emotion Expressed, Fix Solution, Information Seeking, and Meta/Code. In addition, Mani et al. [4] improved the bug report summary quality by eliminating stack traces and code snippets. We use the results of Huai et al. [5] and Mani et al. [4] to remove Meta/Code, stack traces, and code snippets.

The final step of preprocessing covers sentence separation, stopword removal, and stemming. For this preprocessing, we used NLTK (Natural Language Toolkit), a Python-based natural language processing library. In our method, we used NLTK's list of more than 100 stopwords. Additionally, we defined and used stopwords such as bug, build, tag, and developer that are commonly used in bug reports.
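The three preprocessing steps can be sketched as follows. To keep the example self-contained, it uses only the Python standard library: the regular expressions for noise lines, the short `STOPWORDS` set, and the regex sentence splitter are simplified stand-ins (assumptions) for the classifiers of [4,5] and for NLTK's tokenizer and stopword list, and stemming is omitted.

```python
import re

# Stand-in for NLTK's English stopword list (assumption, heavily shortened).
STOPWORDS = {"the", "a", "an", "is", "in", "to", "of", "and", "when", "it"}
# Domain stopwords named in the text.
DOMAIN_STOPWORDS = {"bug", "build", "tag", "developer"}

# Hypothetical heuristics for lines that are not natural language.
NOISE_PATTERNS = [
    re.compile(r"^\s*at\s+[\w.$]+\(.*\)\s*$"),  # Java-style stack frame
    re.compile(r'^\s*(Traceback|File ")'),       # Python traceback lines
    re.compile(r"[;{}]\s*$"),                    # code-like line endings
]


def is_meta_report(title):
    """Step 1: META reports are excluded from the graph."""
    return "[meta]" in title.lower()


def filter_comment(comment):
    """Step 2: drop lines that look like stack traces or code snippets."""
    kept = [
        line.strip()
        for line in comment.splitlines()
        if line.strip() and not any(p.search(line) for p in NOISE_PATTERNS)
    ]
    return " ".join(kept)


def preprocess(comment):
    """Step 3: split into sentences, lowercase, and remove stopwords."""
    text = filter_comment(comment)
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text) if s]
    stop = STOPWORDS | DOMAIN_STOPWORDS
    return [
        [w for w in re.findall(r"[a-z0-9]+", s.lower()) if w not in stop]
        for s in sentences
    ]


comment = (
    "The slider crashes when dark mode is enabled.\n"
    "    at org.mozilla.Slider.draw(Slider.java:42)\n"
    "int x = 0;\n"
    "This bug blocks the redesign."
)
tokens = preprocess(comment)
```

In this example the stack frame and the code line are discarded, and the two remaining sentences are reduced to their content words.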

3.4. Weighted-PageRank

The PageRank algorithm weights all edges connected to a node equally. However, when PageRank is applied to various fields, there is a need to give different weights to edges. Xing and Ghorbani [14] and Xie et al. [15] proposed weighted-PageRank algorithms that assign different weights according to the types of links in web pages. In this paper, it was likewise necessary to measure the effects of different relationships (i.e., duplicates, blocks, and depends-on) on the summary. Therefore, to achieve the highest summary quality, RBRS applies the weighted-PageRank algorithm and gives different weights to the edges of different types of relationships.

To apply the weighted-PageRank algorithm, RBRS first assigns a weight to each edge of the bug report correlation graph created in Section 3.2. Next, RBRS assigns an initial PageRank value of 1 / (number of nodes) to all nodes. Then, RBRS iterates the PageRank algorithm until the PageRank values of all nodes converge, and each converged value becomes the PageRank score of the corresponding sentence. The higher the score, the more important the sentence is and the more similar sentences it has.

The score propagation of the weighted-PageRank algorithm is similar to that of the original PageRank algorithm. In the original algorithm, a node A propagates PR(A)/C(A) along each outgoing edge, where PR(A) is the PageRank value of A and C(A) is the number of outgoing edges of A. In the weighted-PageRank algorithm used in RBRS, PR(A)/C(A) multiplied by the edge weight W, 0 ≤ W ≤ 1, is propagated instead.
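The propagation rule can be illustrated with a minimal Python sketch. The damping factor of 0.85, the convergence tolerance, and the function name `weighted_pagerank` are assumptions of this example, since the text does not specify them; only the initial value 1 / (number of nodes) and the propagated quantity W · PR(A)/C(A) come from the description above.

```python
def weighted_pagerank(edges, damping=0.85, tol=1e-9, max_iter=200):
    """edges: {(src, dst): weight in [0, 1]}. Returns {node: score}."""
    nodes = list(dict.fromkeys(n for edge in edges for n in edge))
    out_degree = {n: 0 for n in nodes}
    incoming = {n: [] for n in nodes}
    for (src, dst), weight in edges.items():
        out_degree[src] += 1
        incoming[dst].append((src, weight))
    # Every node starts with 1 / (number of nodes).
    rank = {n: 1.0 / len(nodes) for n in nodes}
    for _ in range(max_iter):
        new_rank = {}
        for n in nodes:
            # Each in-neighbor A contributes W * PR(A) / C(A).
            flow = sum(w * rank[src] / out_degree[src] for src, w in incoming[n])
            new_rank[n] = (1 - damping) / len(nodes) + damping * flow
        converged = max(abs(new_rank[n] - rank[n]) for n in nodes) < tol
        rank = new_rank
        if converged:
            break
    return rank


# Node "x" links to "hi" with weight 1.0 and to "lo" with weight 0.0.
edges = {
    ("x", "hi"): 1.0, ("x", "lo"): 0.0,
    ("hi", "x"): 1.0, ("lo", "x"): 1.0,
}
scores = weighted_pagerank(edges)
```

An edge with weight 0 propagates nothing, so `lo` keeps only the baseline term while `hi` receives part of the score of `x`. Because weights below 1 remove score from the graph, the values need not sum to 1; they are used only to rank sentences.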

3.5. Ranking Merger

In their method [6], Jiang et al. used not only the sentence scores calculated by the PageRank algorithm but also the sentence scores of Rastkar et al. [2], obtained through a supervised regression model, because their method is difficult to apply when duplicated bug reports do not exist. Additionally, the combination of the two methods yields higher performance. In this paper, RBRS likewise uses both the sentence scores calculated by the PageRank algorithm and the scores of Rastkar et al. [2], obtained from the supervised learning-based regression model, to calculate the final score of each sentence.

Rastkar et al. [2] created the BRC data based on 36 bug reports and handwritten summaries. Additionally, Rastkar et al. [2] proposed a method for summarizing bug reports that trains a logistic regression model on 24 features extracted from each sentence of a bug report. Based on the summarization method in [2], RBRS trains a logistic regression model on the same 24 features. Using the logistic regression model, the probability that each sentence of the bug report will be included in the summary can be calculated, and this probability is defined as the score of the prediction module. Likewise, the score of each sentence obtained through the weighted-PageRank algorithm is defined as the PageRank score. Each score is normalized to a value between 0 and 1, and the final score is the sum of the two normalized scores weighted by α.

The weight α has a range of 0 ≤ α ≤ 1. We experimented with values of α from 0 to 1 in steps of 0.25 to find its optimal value. The score obtained through the weighted sum is the final score of each sentence. The sentences are sorted according to this score, and the summary is extracted by taking the top 25% of the sentences. This 25% extraction ratio, which is described in Rastkar et al. [2], is widely used in existing bug report summarization methods and is considered to be the most appropriate length for a summary.
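The ranking merger can be sketched in Python as follows. Several details are assumptions of this sketch rather than statements from the text: min-max scaling as the normalization, α multiplying the PageRank score and (1 − α) the regression score, and rounding the 25% cut-off up to a whole sentence.

```python
import math


def min_max(scores):
    """Scale scores to [0, 1] (assumed normalization)."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


def merge_and_select(pagerank_scores, regression_scores, alpha=0.5, ratio=0.25):
    """Return the indices of the summary sentences in document order."""
    pr = min_max(pagerank_scores)
    lr = min_max(regression_scores)
    # Assumption: alpha weights PageRank, (1 - alpha) weights the regression score.
    final = [alpha * p + (1 - alpha) * r for p, r in zip(pr, lr)]
    k = max(1, math.ceil(ratio * len(final)))  # top 25%, rounded up (assumption)
    top = sorted(range(len(final)), key=lambda i: final[i], reverse=True)[:k]
    return sorted(top)


# Hypothetical scores for a report with eight sentences.
pagerank = [0.1, 0.4, 0.2, 0.3, 0.1, 0.1, 0.1, 0.1]
regression = [0.9, 0.8, 0.1, 0.7, 0.2, 0.2, 0.2, 0.2]
summary = merge_and_select(pagerank, regression, alpha=0.5)
regression_only = merge_and_select(pagerank, regression, alpha=0.0)
```

With eight sentences, two are selected. Setting `alpha` to 0 or 1 reduces the merger to one of the two underlying rankings, which is how the end points of the 0 to 1 sweep can be read.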

5. Evaluation Results

Before analyzing the effect of dependency relationships on summary quality, this section first analyzes the scope of application. Jiang et al.’s [6] PRST effectively improved the summary quality for bug reports by using the duplicates relationships in bug reports. However, there was no significant performance improvement for bug reports that had no duplicates relationships. In contrast, we use more relationships, namely blocks and depends-on as well as duplicates, which can greatly increase the number of bug reports to which the method can be applied. For this coverage analysis, we analyzed 50,000 bug reports created in 2018 for the Mozilla project. Table 3 shows the analysis results for 44,600 bug reports, excluding the 5,400 bug reports that were closed. According to this analysis, Jiang et al.’s [6] PRST can be effectively applied to only 5.2% of all bug reports, whereas our method, which uses all three relationships, is expected to be effectively applied to 37.5% of all bug reports.

Section 5.1 describes the experiments and results for the main research questions, and Section 5.2 describes the experiments and results for the subquestions.

5.1. RQ 1. The Effect of Dependency Relationships on Summary Quality

PageRank algorithm-based summarization works on the assumption that sentences similar to other sentences are important. Jiang et al.’s [6] PRST used the PageRank algorithm to better identify important sentences using the textual similarities of the bug reports in the duplicates relationship. Similarly, our method RBRS builds on the observation that bug reports in dependency relationships are textually similar in their subjects and keywords. We conducted experiments to show that this text similarity can be used to summarize bug reports.

5.1.1. Experiment 1-1. Summary Quality Comparison of Bug Reports without Duplicates Relationships

If the duplicates relationships do not exist while dependency relationships of blocks and depends-on exist, PRST [6] cannot be applied. To evaluate this condition, we compared the summary quality of the proposed method RBRS with the PRST of the previous study when the duplicates relationships of 36 bug reports were ignored. The results are shown in Table 4.

The results for Experiment 1-1 in Table 4 show that when no duplicates relationships exist, the precision, recall, and F1 score improve by 6.41%, 6.14%, and 6.28%, respectively. In our experiments, the number of sentences in the extracted summary is fixed at 25% of the total number of sentences in the bug report, and the number of golden summary sentences is about 24% of the total number of sentences in the bug report. As these two numbers, which are the denominators of precision and recall, respectively, are very similar, precision and recall in our experiments show similar values as well. The F1 score, the harmonic mean of precision and recall, therefore also shows a similar value. As a result, RBRS achieved similar percentages of improvement in precision, recall, and F1 score.
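The relation between the three measures can be checked with a small Python sketch. The function name and the sentence indices below are hypothetical; only the 25% and 24% proportions follow the text.

```python
def precision_recall_f1(selected, golden):
    """Compare extracted summary sentences against golden summary sentences."""
    selected, golden = set(selected), set(golden)
    hits = len(selected & golden)
    precision = hits / len(selected) if selected else 0.0
    recall = hits / len(golden) if golden else 0.0
    f1 = 2 * precision * recall / (precision + recall) if hits else 0.0
    return precision, recall, f1


# A hypothetical report with 100 sentences: 25 extracted, 24 golden, 12 shared.
extracted = range(0, 25)
golden = range(13, 37)
p, r, f1 = precision_recall_f1(extracted, golden)
```

Both measures share the numerator (12 shared sentences) and differ only in the denominators 25 and 24, so precision (0.48) and recall (0.50) stay close, and the F1 score lies between them.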

The statistics of the 44,600 bug reports described in Table 3 show that 14,317 bug reports have no duplicates relationships but have dependency relationships, which accounts for approximately 32.1% of all bug reports. Therefore, we interpret that our method, RBRS, by using the dependency relationships of the bug report, can improve the summary quality by approximately 6.4% for the 32.1% of all bug reports that fall into this category.

In addition, most bug reports that have duplicates relationships are identified as duplicates immediately after they are reported and are then closed immediately. As a result, they often contain little discussion among developers about how to resolve the bug. In this case, only certain sentences or keywords, such as the symptoms or the reproduction steps written when the bug was reported, are duplicated, and the summarization result can be biased toward such duplicated sentences. This bias is a limitation of relying on duplicates relationships alone. Using additional dependency relationships can reduce the bias, resulting in an improvement in summary quality.

5.1.2. Experiment 1-2. Summary Quality Comparison of Bug Reports with Duplicates Relationships

If duplicates relationships exist while dependency relationships of blocks and depends-on exist, both PRST and RBRS can be effectively applied. To evaluate this condition, we compare the summary quality of PRST and RBRS for 36 bug reports that satisfy the above conditions. The results are shown in Table 5.

The results of Experiment 1-2 show that when duplicates relationships exist, the precision, recall, and F1 score improve by approximately 2% compared to PRST. The 2% improvement observed in Experiment 1-2 can hardly be seen as a significant improvement in accuracy. Therefore, we interpret that the dependency relationships do not yield a significant improvement in summary quality when used together with the duplicates relationships. However, based on the 44,600 bug reports, our method can be expected to improve accuracy by approximately 2% for the 3% of all bug reports that satisfy this condition, as shown in Table 3.

5.1.3. Experiment 1-3. The Effect of Two Dependency Relationships on Summary Quality

This experiment determines whether the dependency relationships of depends-on and blocks contribute to improving summary quality. In the experiment, we also find the optimal edge weights of the dependency relationships in the weighted-PageRank algorithm. When creating a bug report graph, different edge weights can be assigned according to the kind of relationship between bug reports. We analyze the change in summary quality by increasing the weight of the blocks edges and the weight of the depends-on edges from 0 to 1 in steps of 0.25. If a weight is zero, the corresponding relationship is ignored. The weights of the inner edges between the sentences of a bug report and the weights of the edges of the duplicates relationship are both set to 1.0. The experimental results are shown in Table 6.

In the experiment, both dependency relationships, blocks and depends-on, show the highest precision when the edge weight is set to 1.0. As the weight of one dependency relationship decreases from 1 to 0, the average precision decreases by 3.4%; for the other dependency relationship, the decrease is 3.3%. Both dependencies are thus found to have a positive effect on summary quality, and there seems to be no significant difference between the two. Therefore, when applying this summarization method, we suggest setting the edge weights of the dependency relationships to 1.0, as with the duplicates relationships. This means that the three relationships of blocks, depends-on, and duplicates have the same weight in the bug report graph described in Section 3.2.

5.2. RQ 2. The Effect of Number of Relationships on Summary Quality

5.2.1. Experiment 2-1. The Trend of Precision Change According to the Depth of Search in Breadth-First-Search (BFS)

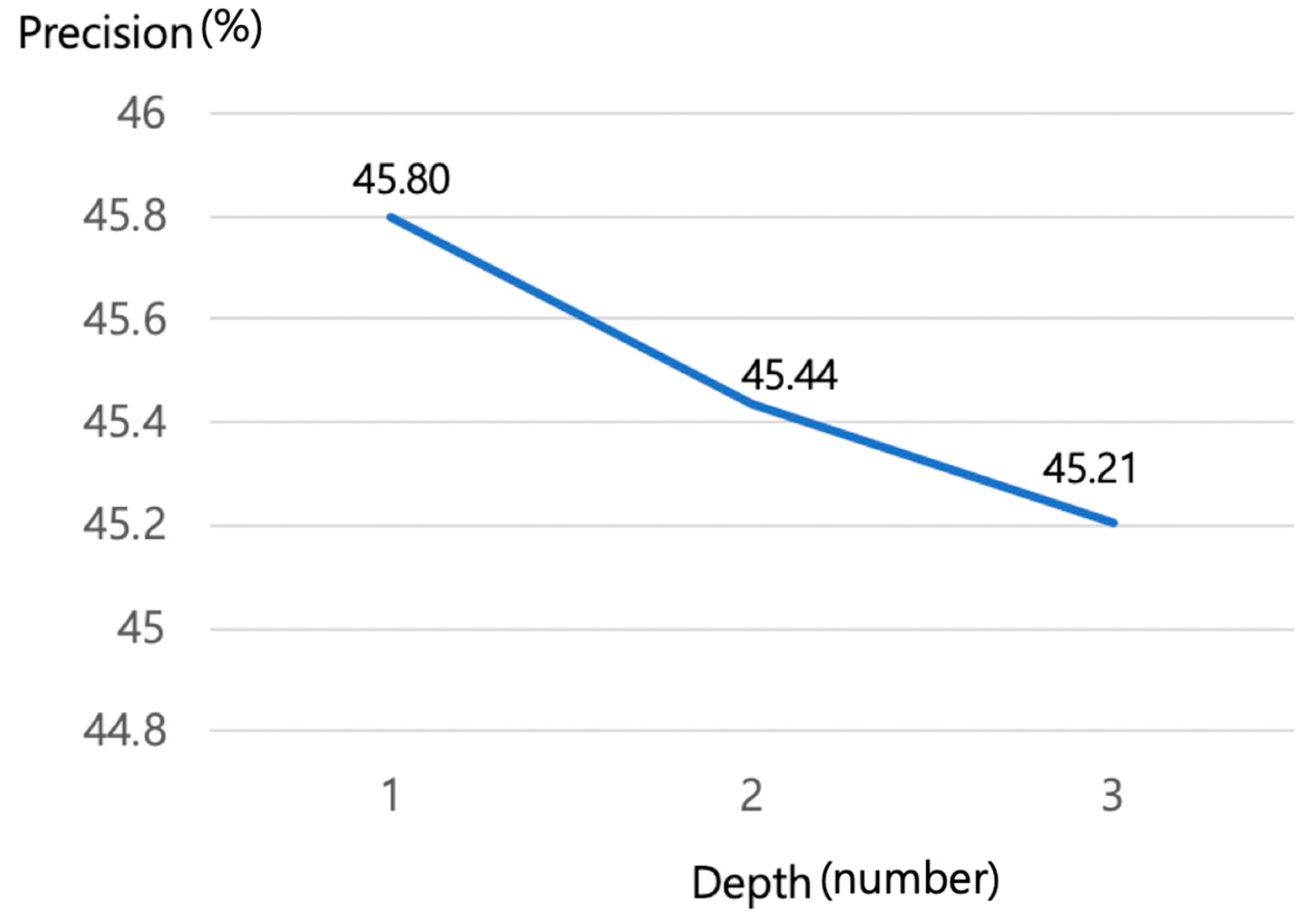

In this experiment, we analyzed the change in summary quality by increasing the BFS search depth from 1 to 3 in the bug report graph formation step. The experimental results are shown in Figure 5. From depth 4 onward, the size of the graph increases exponentially with the depth, and the algorithm execution time grows to a level at which it is difficult to apply the algorithm to actual summarization. Therefore, depths of 4 and above are excluded from the experiment.

The graph shows the highest precision when the depth is 1. That is, forming the graph using only the bug reports that are directly related to the main bug report is effective in bug report summarization. As the depth of the search increases, the precision decreases, although the reduction is very small, 0.4%. The precision decreases because bug reports at a distance of 2 or more from the main bug report have significantly lower text similarity to the main bug report than bug reports at a distance of 1. As bug reports with low similarity are added to the graph, they reduce the importance of the most important sentences or keywords in the main bug report. The reduction remains small, however, because in the PageRank algorithm importance is propagated only between directly connected nodes.

5.2.2. Experiment 2-2. The Trend in Precision Change by Limiting the Number of Relationships

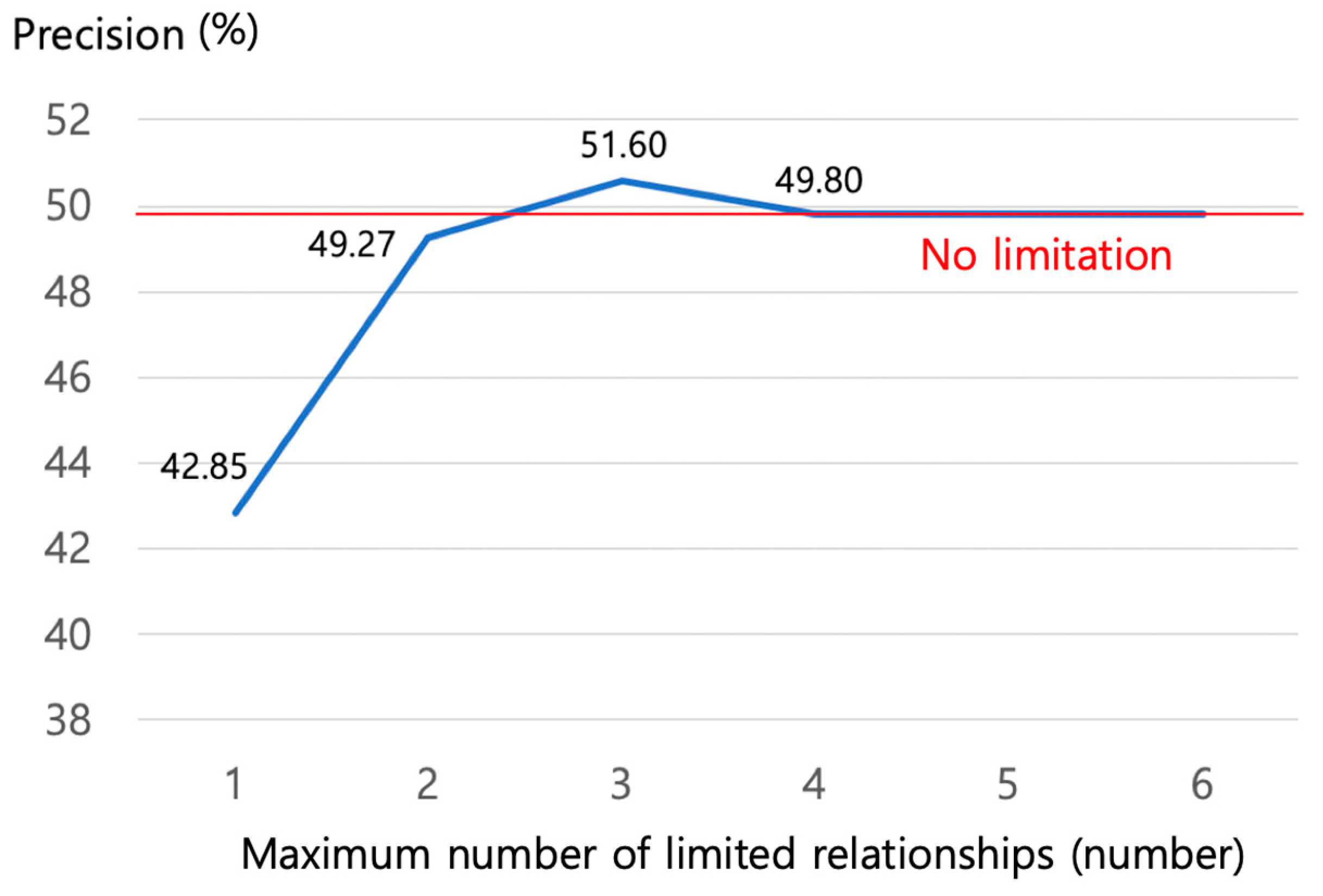

In this experiment, we analyze the change in summary quality when limiting the number of relationships per bug report to a maximum of N, which means that each of the three types of relationships is limited to N per bug report. In this case, each bug report can have up to 3N relationships with other bug reports. For the experiment, we arbitrarily select N related bug reports. The results of analyzing the trend in precision change by increasing the maximum association limit from 1 to 6 are shown in Figure 6.

When the number of relationships is limited to one, the precision is very low, 42.85%. When the number of relationships is limited to three, the precision is highest, 51.60%, which is higher than without limiting the number of relationships. When the limit is four or more, the precision is the same as without a limit, apparently because the bug reports in the test set that have more than four relationships have no significant effect on precision. We attribute the low precision when the number of relationships is less than two to the lack of sufficient text to take advantage of the text similarity with the related bug reports. A limit of three appears to be the best setting for applying the PageRank algorithm. Therefore, we suggest limiting the number of relationships to a maximum of three when our method is applied. For future research, we also suggest a detailed experiment on how precision changes as the size of the text changes.
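The per-type limit can be sketched in Python as follows. The function name `limit_relationships`, the seeded random choice used to model the arbitrary selection, and the sample identifiers are assumptions of this example.

```python
import random


def limit_relationships(links, n, seed=0):
    """Keep at most n arbitrarily chosen related reports per relationship type."""
    rng = random.Random(seed)  # seeded so that the selection is reproducible
    limited = {}
    for relation, targets in links.items():
        targets = list(targets)
        if len(targets) <= n:
            limited[relation] = targets
        else:
            limited[relation] = rng.sample(targets, n)
    return limited


# Hypothetical links of one bug report.
links = {
    "duplicates": ["d1", "d2", "d3", "d4", "d5"],
    "blocks": ["b1"],
    "depends_on": ["p1", "p2", "p3", "p4"],
}
limited = limit_relationships(links, n=3)
total = sum(len(targets) for targets in limited.values())
```

With N = 3, a report keeps at most 3N = 9 related reports; in this example it keeps seven, because only one blocks link exists.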

8. Conclusions

In this paper, we proposed RBRS, a method for automatically summarizing bug reports using three associations (duplicates, blocks, and depends-on). To evaluate RBRS, we constructed a corpus of 36 bug reports including the correlation information of the bug reports. We then compared RBRS with PRST [6]. Experimental results showed that our method RBRS yields 6.41% higher precision, 6.14% higher recall, and a 6.28% higher F1 score than the previous method PRST.

The contributions of this paper are as follows. First, we proposed a weighted PageRank-based bug report summarization method that uses three kinds of association information for bug reports. Second, we built a corpus that can be used for bug report summarization studies that use the relationships of bug reports. Third, by analyzing the change in summary quality as the number and weights of relationships vary, we found a variable setting that maximizes summary quality. Fourth, we implemented the proposed method as a tool and performed a simulation to compare it with the existing method.

The bug report summary corpus constructed in this paper has the limitations that it is small and that its summaries were written by persons who did not participate in writing the bug reports. Previous bug report summarization research had the same limitations. For future research, we therefore plan to use a larger bug report corpus to address this issue. We also found shortcomings of the commonly used natural language processing library NLTK for preprocessing bug reports. We realized that the styles and formats of the sentences in bug reports differ from those of the ordinary sentences used in newspapers, novels, scientific papers, etc. Therefore, we also plan to conduct research on preprocessing techniques to enhance the quality of bug report summaries. As a final remark, although we improved the accuracy of summarizing bug reports by using additional relationships between bug reports, we are not satisfied with the 50.92% F-score. To improve summary accuracy, we are going to explore other machine-learning techniques, including a sequence-to-sequence model.