Albayzin 2018 Evaluation: The IberSpeech-RTVE Challenge on Speech Technologies for Spanish Broadcast Media

Abstract

1. Introduction

2. IberSpeech-RTVE 2018 Evaluation Data

3. IberSpeech-RTVE 2018 Evaluation Tasks

3.1. Speech-to-Text Challenge

3.1.1. Challenge Description and Databases

Training and Development Data

Training Conditions

- Closed-set condition: The closed-set condition limited the system training to use the training and development datasets of the RTVE2018 database. The use of pretrained models on data other than RTVE2018 was not allowed in this condition. Participants could use any external phonetic transcription dictionary.

- Open-set condition: The open-set training condition removed the limitations of the closed-set condition. Participants were free to use the RTVE2018 training and development set or any other data to train their systems provided that these data were fully documented in the system’s description paper.

Evaluation Data

3.1.2. Performance Measurement

Primary Metric

Alternative Metrics

3.2. Speaker Diarization Challenge

3.2.1. Challenge Description and Databases

Databases

Training Conditions

- Closed-set condition: The closed-set condition limited the use of data to the set of audio of the three partitions distributed in the challenge.

- Open-set condition: The open-set condition eliminated the limitations of the closed-set condition. Participants were free to use any datasets, as long as they were publicly accessible to all (not necessarily free of charge).

Evaluation Data

3.2.2. Diarization Scoring

- Speaker error time: The speaker error time is the amount of time that has been assigned to an incorrect speaker. This error can occur in segments where the number of system speakers is greater than the number of reference speakers, but also in segments where the number of system speakers is lower than the number of reference speakers whenever the number of system speakers and the number of reference speakers are greater than zero.

- Missed speech time: The missed speech time refers to the amount of time that speech is present, but not labeled by the diarization system in segments where the number of system speakers is lower than the number of reference speakers.

- False alarm time: The false alarm time is the amount of time that a speaker has been labeled by the diarization system, but is not present in segments where the number of system speakers is greater than the number of reference speakers.
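The three components above can be accumulated segment by segment. The following is a minimal sketch, assuming the usual definition of the diarization error rate (the sum of the three error times divided by the total reference speaker time) and assuming that the mapping between system and reference speakers has already been computed. The `Segment` type and its field names are hypothetical and serve only as an illustration.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    duration: float  # segment length in seconds
    n_ref: int       # number of reference speakers active in the segment
    n_sys: int       # number of system speakers active in the segment
    n_correct: int   # system speakers that map to a reference speaker


def diarization_error(segments):
    """Accumulate missed speech, false alarm, and speaker error times."""
    missed = false_alarm = speaker_error = scored = 0.0
    for s in segments:
        # Fewer system speakers than reference speakers: missed speech.
        missed += s.duration * max(0, s.n_ref - s.n_sys)
        # More system speakers than reference speakers: false alarm.
        false_alarm += s.duration * max(0, s.n_sys - s.n_ref)
        # Speakers present on both sides but wrongly attributed.
        speaker_error += s.duration * (min(s.n_ref, s.n_sys) - s.n_correct)
        scored += s.duration * s.n_ref
    if scored == 0:
        raise ValueError("no reference speech to score")
    der = (missed + false_alarm + speaker_error) / scored
    return {"missed": missed, "false_alarm": false_alarm,
            "speaker_error": speaker_error, "der": der}


if __name__ == "__main__":
    example = [
        Segment(10.0, 1, 1, 1),  # correct
        Segment(5.0, 1, 0, 0),   # missed speech
        Segment(3.0, 0, 1, 0),   # false alarm
        Segment(2.0, 1, 1, 0),   # speaker error
    ]
    print(diarization_error(example))
```

In this toy input, the total reference speaker time is 17 s and the three error times add up to 10 s.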

3.3. Multimodal Diarization Challenge

3.3.1. Challenge Description and Databases

Development and Evaluation Data

- For development, the dev2 partition contained a two-hour show “La noche en 24H” labeled with speaker and face timestamps. Enrollment files for the main characters were also provided. Enrollment files consisted of pictures and short videos with the character speaking. Additionally, the dev2 partition contained around 14 h of speaker diarization timestamps. No restrictions were placed on the use of any data outside the RTVE2018.

- For the evaluation, three television programs were distributed, one from “La Mañana” and two from “La Tarde en 24H Tertulia”, which totaled four hours. For enrollment, photos (10) and video (20 s) of the 39 characters to be labeled were provided.

3.3.2. Performance Scoring

- Speaker/face error time: The speaker/face error time is the amount of time that has been assigned to an incorrect speaker/face. This error can occur in segments where the number of system speakers/faces is greater than the number of reference speakers/faces, but also in segments where the number of system speakers/faces is lower than the number of reference speakers/faces whenever the number of system speakers/faces and the number of reference speakers/faces are greater than zero.

- Missed speech/face time: The missed speech/face time refers to the amount of time that speech/face is present, but not labeled by the diarization system in segments where the number of system speakers/faces is lower than the number of reference speakers/faces.

- False alarm time: The false alarm time is the amount of time that a speaker/face has been labeled by the diarization system, but is not present in segments where the number of system speakers/faces is greater than the number of reference speakers/faces.

4. Submitted Systems

4.1. Speech-to-Text Challenge

4.1.1. Open-Set Condition Systems

- G1-GTM-UVIGO [19]. Multimedia Technologies Group, Universidad de Vigo, Spain. G1-GTM-UVIGO submitted two systems that used the Kaldi toolkit http://kaldi-asr.org/ as the recognition engine. The primary and contrastive systems differed in the language model (LM) used in the rescoring stage: the primary system used a four-gram LM, and the contrastive system used the recurrent neural network language modeling toolkit (RNNLM) provided in the Kaldi distribution. The acoustic models were trained using 109 h of speech: 79 h in Spanish (2006 TC-STAR http://tcstar.org/) and 30 h in Galician (news database of Galicia, Transcrigal http://metashare.elda.org/repository/browse/transcrigal-db/72ee3974cbec11e181b50030482ab95203851f1f95e64c00b842977a318ef641/). The RTVE2018 database text files and a text corpus of 90M words from several sources were used for language model training.

- G3-GTTS-EHU. Working group on software technologies, Universidad del País Vasco, Spain. This team participated with a commercial speech-to-text conversion system, with general purpose acoustic and language models. Only a primary system was submitted.

- G5-LIMECRAFT. Visiona Ingeniería de Proyectos, Madrid, Spain. This team participated with a commercial speech-to-text conversion system, with general purpose acoustic and language models. Only a primary system was submitted.

- G6-VICOMTECH-PRHLT [20]. VICOMTECH, Visual Interaction & Communication Technologies, Donostia, Spain and PRHLT, Pattern Recognition and Human Language Technologies Research Center, Universidad Politécnica de Valencia, Spain. G6-VICOMTECH-PRHLT submitted three systems. The primary system was an evolution of an existing end-to-end (E2E) model based on DeepSpeech2 https://arxiv.org/abs/1512.02595, which had been built using the three-fold augmented SAVAS https://cordis.europa.eu/project/rcn/103572/factsheet/en, Albayzin http://catalogue.elra.info/en-us/repository/browse/ELRA-S0089/, and Multext http://catalogue.elra.info/en-us/repository/browse/ELRA-S0060/ corpora for 28 epochs. For this challenge, it was trained for two further epochs using the same corpora in addition to the three-fold augmented nearly-perfectly aligned corpus obtained from the RTVE2018 dataset. A total of 897 h was used for training. The language model was a five-gram, trained with the text data from the open-set dataset. The first contrastive system was also an evolution of the existing E2E model, but in this case, it was trained for one further epoch using the three-fold augmented corpora used in the primary system and a new YouTube RTVE corpus. The total duration of the training audio was 1488 h. The language model was a five-gram trained with the text data from the open-set dataset. The second contrastive system was composed of a bidirectional LSTM-HMM (long short-term memory-hidden Markov model) acoustic model combined with a three-gram language model for decoding and a nine-gram language model for re-scoring lattices. The acoustic model was trained with the same data as the primary system of the open-set condition. The language models were estimated with the RTVE2018 database text files and general news data from newspapers.

- G7-MLLP-RWTH [21]. MLLP, Machine Learning and Language Processing, Universidad Politécnica de Valencia, Spain and Human Language Technology and Pattern Recognition, RWTH Aachen University, Germany. G7-MLLP-RWTH submitted only a primary system. The recognition engine was an evolution of RETURNN https://github.com/rwth-i6/returnn and RASR https://www-i6.informatik.rwth-aachen.de/rwth-asr/, and it was based on a hybrid LSTM-HMM acoustic model. Acoustic modeling was done using a bidirectional LSTM network with four layers and 512 LSTM units in each layer. A total of 3800 h of speech transcribed from various sources (subtitled videos from Spanish and Latin American websites) was used for training the acoustic models. The language model for the single-pass HMM decoding was a five-gram count model trained with Kneser–Ney smoothing on a large body of text data collected from multiple publicly available sources. A lexicon of 325K words with one or more pronunciation variants was used. No speaker adaptation, domain adaptation, or model tuning was used.

- G14-SIGMA [22]. Sigma AI, Madrid, Spain. G14-SIGMA submitted only a primary system. The ASR system was based on the open-source Kaldi Toolkit. The ASR architecture consisted of the classical sequence of three main modules: an acoustic model, a dictionary or pronunciation lexicon, and an N-gram language model. These modules were combined for training and decoding using weighted finite state transducers (WFST). The acoustic modeling was based on deep neural networks and hidden Markov models (DNN-HMM). To improve robustness, mainly to speaker variability, speaker adaptive training (SAT) based on i-vectors was also implemented. Acoustic models were trained using 600 h from RTVE2018 (350 h of manual transcription), VESLIM https://ieeexplore.ieee.org/document/1255449/ (103 h), and OWNMEDIA (162 h of television programs). RTVE2018 database texts, news between 2015 and 2018, interviews, and subtitles were used to train the language model.

- G21-EMPHATIC [23]. SPIN-Speech Interactive Research Group, Universidad del País Vasco, Spain and Intelligent Voice, U.K. The G21-EMPHATIC ASR system was based on the open-source Kaldi Toolkit. The Kaldi Aspire recipe https://github.com/kaldi-asr/kaldi/tree/master/egs/aspire was used for building the DNN-HMM acoustic model. The Albayzin, Dihana http://universal.elra.info/product_info.php?products_id=1421, CORLEC-EHU http://gtts.ehu.es/gtts/NT/fulltext/RodriguezEtal03a.pdf, and TC-STAR databases, with a total of 352 h, were used to train the acoustic models. The provided training and development audio files were subsampled to 8 kHz before being used in the training and testing processes. A three-gram base LM trained with the transcripts of Albayzin, Dihana, CORLEC-EHU, TC-STAR, and a newspaper corpus (El País) was adapted using the selected training transcriptions of the RTVE2018 data.
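Several of the systems above applied a second language model in a rescoring stage. The sketch below illustrates the general idea on an N-best list rather than on lattices, and with a toy add-one-smoothed bigram model rather than a four-gram, nine-gram, or RNN language model. It is a simplification under those stated assumptions, not a reconstruction of any participant's system; all function names and the example sentences are hypothetical.

```python
import math
from collections import Counter


def train_bigram(sentences):
    """Count bigrams and their histories for a toy add-one-smoothed model."""
    histories, bigrams = Counter(), Counter()
    vocab = {"</s>"}
    for sentence in sentences:
        words = sentence.split()
        vocab.update(words)
        tokens = ["<s>"] + words + ["</s>"]
        histories.update(tokens[:-1])
        bigrams.update(zip(tokens[:-1], tokens[1:]))
    return histories, bigrams, len(vocab)


def lm_logprob(sentence, model):
    """Log-probability of a sentence under the toy bigram model."""
    histories, bigrams, vocab_size = model
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    return sum(
        math.log((bigrams[(h, w)] + 1) / (histories[h] + vocab_size))
        for h, w in zip(tokens[:-1], tokens[1:])
    )


def rescore(nbest, model, lm_weight=1.0):
    """Return the hypothesis with the best combined score.

    nbest is a list of (text, acoustic_log_score) pairs from a first pass.
    """
    best = max(nbest, key=lambda h: h[1] + lm_weight * lm_logprob(h[0], model))
    return best[0]


if __name__ == "__main__":
    model = train_bigram(["el tiempo en españa", "el tiempo en europa"])
    nbest = [("el tiempo en españa", -10.0), ("el tiento en españa", -9.5)]
    print(rescore(nbest, model))
```

Setting `lm_weight` to zero reduces the selection to the first-pass acoustic score alone, which makes the contribution of the language model easy to inspect.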

4.1.2. Closed-Set Condition Systems

- G6-VICOMTECH-PRHLT [20]. VICOMTECH, Visual Interaction & Communication Technologies, Donostia, Spain and PRHLT, Pattern Recognition and Human Language Technologies Research Center, Universidad Politécnica de Valencia, Spain. G6-VICOMTECH-PRHLT submitted three systems. The primary system was a bidirectional LSTM-HMM based system combined with a three-gram language model for decoding and a nine-gram language model for re-scoring lattices. The training and development sets were aligned and filtered to get nearly 136 h of audio with transcription. The acoustic model was trained with a nearly perfectly aligned partition, which was three-fold augmented through the speed-based augmentation technique, obtaining a total of 396 h and 33 min of audio. The language models were estimated with the in-domain texts compiled from the RTVE2018 dataset. The first contrastive system was set up using the same configuration as the primary system, but the acoustic model was estimated using the three-fold augmented acoustic data of the perfectly aligned partition, giving a total of 258 h and 27 min of audio. The same data were used to build the second contrastive system, but it was an E2E recognition system that followed the architecture used for the open-set condition. The language model was a five-gram.

- G7-MLLP-RWTH [21]. MLLP, Machine Learning and Language Processing, Universidad Politécnica de Valencia, Spain and Human Language Technology and Pattern Recognition, RWTH Aachen University, Germany. G7-MLLP-RWTH submitted two systems. The recognition engine was the translectures-UPV toolkit (TLK) decoder [24]. The ASR consisted of a bidirectional LSTM-HMM acoustic model and a combination of both RNNLM and TV-show adapted n-gram language models. The training and development sets were aligned and filtered to get nearly 218 h of audio with transcription. For the primary system, all aligned data from the train, dev1, and dev2 partitions, 218 h, were used for acoustic model training. For the contrastive system, only a reliable set of 205 h from the train and dev1 partitions was used for training the acoustic models. The language models were estimated with the in-domain texts compiled from the RTVE2018 dataset with a lexicon of 132K words.

- G14-SIGMA [22]. Sigma AI, Madrid, Spain. G14-SIGMA submitted only a primary system. The system had the same architecture as the one submitted for the open-set condition, but only 350 h of manual transcription of the training set were used for training the acoustic models. The language model was trained using the subtitles provided in the RTVE2018 dataset and manual transcriptions.
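The three-fold speed-based augmentation mentioned above can be illustrated with a small resampling routine. The sketch below assumes perturbation factors of 0.9, 1.0, and 1.1, a common choice in Kaldi-style recipes; the text does not state which factors the participants used, and the linear-interpolation resampler is a deliberately simple stand-in for a proper resampling filter. The function names are hypothetical.

```python
def speed_perturb(samples, factor):
    """Resample by linear interpolation so that playback is `factor` times faster."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    n_out = int(len(samples) / factor)
    out = []
    for i in range(n_out):
        pos = i * factor                      # fractional position in the input
        left = int(pos)
        right = min(left + 1, len(samples) - 1)
        frac = pos - left
        out.append((1.0 - frac) * samples[left] + frac * samples[right])
    return out


def three_fold(samples, factors=(0.9, 1.0, 1.1)):
    """Return one perturbed copy of the signal per factor (assumed factors)."""
    return [speed_perturb(samples, f) for f in factors]


if __name__ == "__main__":
    signal = [float(i) for i in range(1000)]
    copies = three_fold(signal)
    print([len(c) for c in copies])
```

Slowing the signal down lengthens it and speeding it up shortens it, so the three copies together contain roughly three times the original amount of audio.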

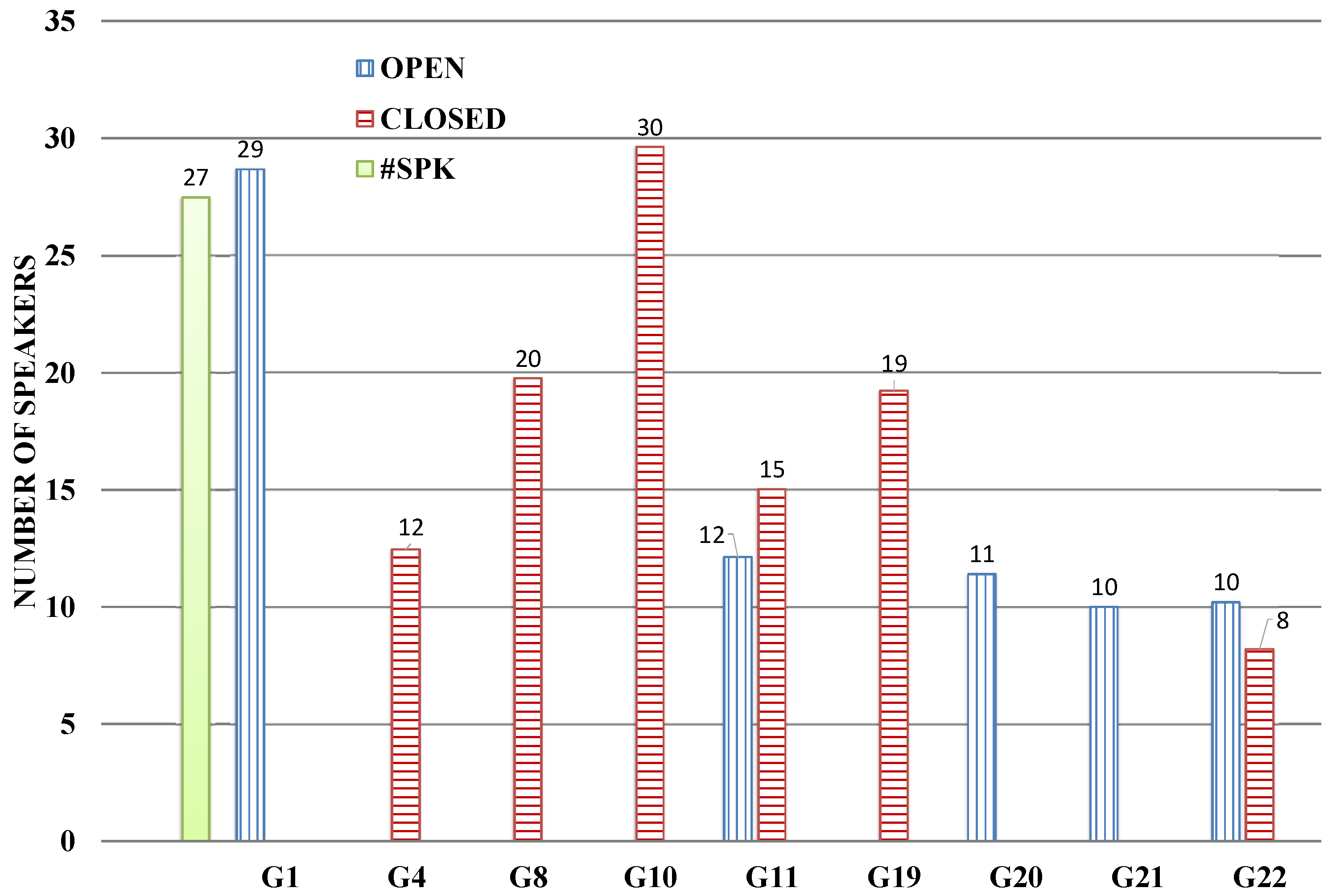

4.2. Speaker Diarization

4.2.1. Open-Set Condition Systems

- G1-GTM-UVIGO. Multimedia Technologies Group, Universidad de Vigo, Spain. A pre-trained deep neural network http://kaldi-asr.org/models.html was used with Kaldi and data from the VoxCeleb1 and VoxCeleb2 databases http://www.robots.ox.ac.uk/~vgg/data/voxceleb/. This DNN mapped variable-length speech segments into fixed-dimension vectors called x-vectors. The strategy followed for diarization consisted of three main stages. The first stage was the extraction of the x-vectors and their grouping using the clustering algorithm “Chinese Whispers”. In the second stage, each of the audio segments was processed to extract one or more x-vectors using a sliding window of 10 s with half a second of displacement between successive windows. These vectors were grouped using the clustering algorithm “Chinese Whispers”, obtaining the clusters that defined the result of the diarization. Finally, a music/non-music discriminator based on i-vectors and a logistic regression model was applied to eliminate those audio segments that were highly likely to correspond to music. This discriminator was also trained with external data.

- G11-ODESSA [25]. EURECOM, LIMSI, CNRS, France. A primary system resulting from the combination at a similarity matrix level of three systems, one trained according to the closed-set condition and two trained with two external databases (NIST SRE (Speaker Recognition Evaluation) and Voxceleb), was submitted. The first contrastive system used one-second uniform segmentation, x-vector representation trained on NIST SRE data, and agglomerative hierarchical clustering (AHC). The second contrastive system was the same as the one for the closed-set condition, except that the training data were replaced with the Voxceleb data.

- G20-STAR-LAB [26]. STAR Lab, SRI International, USA. The training signals were extracted from the databases NIST SRE https://www.nist.gov/itl/iad/mig/speaker-recognition 2004–2008, NIST SRE 2012, Mixer6 https://catalog.ldc.upenn.edu/LDC2013S03, Voxceleb1, and Voxceleb2. Data augmentation was applied using four categories of degradations including music, noise at a 5 dB signal-to-noise ratio, compression, and low levels of reverb. STAR-Lab used the embeddings and diarization system developed for the speaker recognition competition NIST 2018 [27]. It incorporated modifications in the detection of voice activity and in the calculation of embeddings for speaker recognition. The primary and contrastive systems differed in the parameters used in the voice activity detection system.

- G21-EMPHATIC [28]. SPIN-Speech Interactive Research Group, Universidad del País Vasco, Spain, Intelligent Voice, U.K. The Switchboard corpora databases (LDC2001S13, LDC2002S06, LDC2004S07, LDC98S75, LDC99S79) and NIST SRE 2004–2010 were used for training. Data augmentation was used to provide a greater diversity of acoustic environments. The representation of speaker embeddings was obtained through an end-to-end model using convolutional (CNN) and recurrent (LSTM) networks.

- G22-JHU [29]. Center for Language and Speech Processing, Johns Hopkins University, USA. Results were presented for different training databases: Voxceleb1 and Voxceleb2 (with and without data augmentation), SRE12-micphn, MX6-micph, SITW-dev-core, the Fisher database, Albayzin2016, and RTVEDB2018. Several diarization systems were used, based on four different types of embedding extractors: x-vector-basic, x-vector-factored, i-vector-basic, and bottleneck features (BNF) i-vector. All the systems followed the same structure: parameter extraction, voice activity detection, embedding extraction, probabilistic linear discriminant analysis (PLDA) scoring, fusion, and grouping of speakers.
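Two of the building blocks described above, the sliding analysis window (10 s long with a 0.5 s shift) and Chinese Whispers clustering, can be sketched in a few lines. This is an illustration only: toy two-dimensional vectors stand in for x-vectors, the graph is built by thresholding cosine similarity at an assumed value of 0.5, and none of the function names come from the participants' code.

```python
import math
import random


def sliding_windows(start, end, win=10.0, hop=0.5):
    """Return (t0, t1) windows inside [start, end]; short segments give one window."""
    if end - start <= win:
        return [(start, end)]
    count = int((end - start - win) / hop + 1e-9) + 1
    return [(start + i * hop, start + i * hop + win) for i in range(count)]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def chinese_whispers(vectors, threshold=0.5, iterations=20, seed=0):
    """Cluster vectors with Chinese Whispers on a thresholded similarity graph."""
    n = len(vectors)
    neighbours = {i: [] for i in range(n)}
    for i in range(n):
        for j in range(i + 1, n):
            weight = cosine(vectors[i], vectors[j])
            if weight > threshold:  # assumed threshold, keeps only similar pairs
                neighbours[i].append((j, weight))
                neighbours[j].append((i, weight))
    labels = list(range(n))         # every node starts in its own cluster
    rng = random.Random(seed)
    order = list(range(n))
    for _ in range(iterations):
        rng.shuffle(order)
        changed = False
        for i in order:
            if not neighbours[i]:
                continue
            votes = {}
            for j, weight in neighbours[i]:
                votes[labels[j]] = votes.get(labels[j], 0.0) + weight
            best = max(votes, key=votes.get)  # label with the largest total weight
            if best != labels[i]:
                labels[i] = best
                changed = True
        if not changed:
            break
    return labels


if __name__ == "__main__":
    toy = [[1, 0], [0.9, 0.1], [0.95, 0.05], [0, 1], [0.1, 0.9], [0.05, 0.95]]
    print(sliding_windows(0.0, 12.0))
    print(chinese_whispers(toy))
```

Chinese Whispers does not need the number of clusters in advance, which suits diarization, where the number of speakers is unknown.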

4.2.2. Closed-Set Condition Systems

- G4-VG [30]. Voice Group, Advanced Technologies Application Center-CENATAV, Cuba. The submitted systems used a classic structure based on Bayesian information criterion (BIC) segmentation, hierarchical agglomerative grouping, and re-segmentation by hidden Markov models. The toolbox S4D https://projets-lium.univ-lemans.fr/s4d/ was used. The submitted systems differed in the feature extraction method, ranging from classic ones such as Mel frequency cepstral coefficients (MFCC), linear frequency cepstral coefficients (LFCC), and linear prediction cepstral coefficients (LPCC), to new ones such as mean Hilbert envelope coefficients [31], medium duration modulation coefficients, and power normalization cepstral coefficients [32].

- G8-AUDIAS-UAM [33]. Audio, Data Intelligence and Speech, Universidad Autónoma de Madrid, Spain. Three different systems were submitted: two based on DNN embeddings using a bidirectional LSTM recurrent neural network architecture (primary and first contrastive systems) and a third one based on the classical total variability model (second contrastive system).

- G10-VIVOLAB [34]. ViVoLAB, Universidad de Zaragoza, Spain. The system was based on the use of i-vectors with PLDA. The i-vectors were extracted from the audio in accordance with the assumption that each segment represented the intervention of a speaker. The diarization hypothesis was obtained by grouping the i-vectors with a fully Bayesian PLDA. The number of speakers was decided by comparing multiple hypotheses according to the information provided by the PLDA. The primary system performed unsupervised PLDA adaptation, while the contrastive one did not.

- G11-ODESSA [25]. EURECOM, LIMSI, CNRS, France. The primary system was the fusion at a diarization hypothesis level of the two contrastive systems. The first contrastive system used ICMC features (infinite impulse response constant-Q Mel-frequency cepstral coefficients), one-second uniform segmentation, binary key (BK) representation, and AHC, while the second one used MFCC features, bidirectional LSTM based speaker change detection, triplet-loss neural embedding representation, and affinity propagation clustering.

- G19-EML [35]. European Media Laboratory GmbH, Germany. The submitted diarization system was designed primarily for on-line applications. Every 2 s, it made a decision about the identity of the speaker without using future information. It used speaker vectors based on the transformation of a Gaussian mixture model (GMM) supervector.

- G22-JHU [29]. Center for Language and Speech Processing, Johns Hopkins University, USA. The system submitted in the closed-set condition was similar to that of the open-set condition, with the difference that in this case, only embeddings based on i-vectors were used.
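The BIC segmentation step mentioned for the classic diarization structure can be illustrated with the standard ΔBIC test for a speaker change between two adjacent windows. The sketch below is a simplification under stated assumptions: it models each window with a single one-dimensional Gaussian, whereas real systems use multivariate cepstral features, and it is not taken from the S4D toolbox. The function name and the penalty weight are hypothetical.

```python
import math
from statistics import pvariance


def delta_bic(x, y, penalty_weight=1.0):
    """Return the BIC difference between modeling x and y separately or jointly.

    A positive value favors two Gaussians, i.e., a change point between x and y.
    Both windows must have non-zero variance.
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    # Log-likelihood gain of two maximum-likelihood Gaussians over one.
    gain = 0.5 * (n * math.log(pvariance(x + y))
                  - n1 * math.log(pvariance(x))
                  - n2 * math.log(pvariance(y)))
    # The second Gaussian adds two parameters (mean and variance) in one dimension.
    penalty = 0.5 * penalty_weight * 2 * math.log(n)
    return gain - penalty


if __name__ == "__main__":
    window = [0.1 * ((7 * i) % 11 - 5) for i in range(50)]
    shifted = [v + 5.0 for v in window]
    print(delta_bic(window, list(window)))  # same statistics on both sides
    print(delta_bic(window, shifted))       # clear change in the mean
```

The penalty weight trades off missed change points against over-segmentation and is normally tuned on development data.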

4.3. Multimodal Diarization

System Descriptions

- G1-GTM-UVIGO [36]. Multimedia Technologies Group, Universidad de Vigo, Spain. The proposed system used state-of-the-art algorithms based on deep neural networks for face detection and identification and for speaker diarization and identification. Monomodal systems were used for faces and speakers, and the results of each system were fused to better adjust the speech of speakers.

- G2-GPS-UPC [37]. Signal processing group, Universidad Politécnica de Cataluña, Spain. The submitted system consisted of two monomodal systems and a fusion block that combined the outputs of the monomodal systems to refine the final result. The audio and video signals were processed independently, and they were merged assuming that there was a temporal correlation between a speaker’s speech and face, while allowing for talking characters whose faces did not appear and for faces that appeared but did not speak.

- G9-PLUMCOT [38]. LIMSI, CNRS, France. The submitted systems (primary and two contrastive) made use of technologies based on monomodal neural networks: segmentation of the speaker, embeddings of speakers, embeddings of faces, and detection of talking faces. The PLUMCOT system tried to optimize various hyperparameters of the algorithms by jointly using visual and audio information.

- G11-ODESSA [38]. EURECOM, LIMSI, CNRS, France, IDIAP, Switzerland. The system submitted by ODESSA was the same as the one presented by PLUMCOT, with the difference that in this case, the diarization systems were totally independent, and each one was optimized in a monomodal way.
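One simple way to fuse monomodal outputs under the temporal-correlation assumption described above is to name each speaker cluster after the face identity it co-occurs with most. The sketch below is a hypothetical illustration of that idea, not the fusion block of any submitted system; the segment format, the function names, and the minimum overlap of 1 s are assumptions.

```python
from collections import defaultdict


def overlap(a, b):
    """Length in seconds of the intersection of two (start, end) intervals."""
    return max(0.0, min(a[1], b[1]) - max(a[0], b[0]))


def name_speaker_clusters(speaker_segments, face_segments, min_overlap=1.0):
    """Map each speaker cluster to the face identity it overlaps with most.

    speaker_segments: list of (start, end, cluster_id) from audio diarization.
    face_segments: list of (start, end, identity) from face recognition.
    Clusters with too little on-screen overlap map to None (off-screen voices).
    """
    cooccurrence = defaultdict(lambda: defaultdict(float))
    for s0, s1, cluster in speaker_segments:
        totals = cooccurrence[cluster]  # make sure every cluster gets an entry
        for f0, f1, identity in face_segments:
            totals[identity] += overlap((s0, s1), (f0, f1))
    mapping = {}
    for cluster, totals in cooccurrence.items():
        if totals:
            identity, seconds = max(totals.items(), key=lambda kv: kv[1])
        else:
            identity, seconds = None, 0.0
        mapping[cluster] = identity if seconds >= min_overlap else None
    return mapping


if __name__ == "__main__":
    speakers = [(0, 10, "spk0"), (10, 20, "spk1"), (20, 25, "spk0"), (25, 30, "spk2")]
    faces = [(0, 9, "Ana"), (11, 19, "Luis"), (20, 25, "Ana"), (12, 14, "Ana")]
    print(name_speaker_clusters(speakers, faces))
```

In the example, the cluster `spk2` never overlaps a visible face and therefore stays unnamed, which corresponds to a talking character whose face does not appear.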

5. Results

5.1. Speech-to-Text Evaluation

5.1.1. Open-Set Condition Results

5.1.2. Closed-Set Condition Results

5.2. Speaker Diarization Evaluation

5.2.1. Open-Set Condition Results

5.2.2. Closed-set Condition Results

5.3. Multimodal Diarization Evaluation

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Garofolo, J.; Fiscus, J.; Fisher, W. Design and preparation of the 1996 HUB-4 broadcast news benchmark test corpora. In Proceedings of the DARPA Speech Recognition Workshop, Chantilly, VA, USA, 2–5 February 1997.

- Graff, D. An overview of Broadcast News corpora. Speech Commun. 2002, 37, 15–26.

- Galliano, S.; Geoffrois, E.; Gravier, G.; Bonastre, J.; Mostefa, D.; Choukri, K. Corpus description of the ESTER evaluation campaign for the rich transcription of French broadcast news. In Proceedings of the 5th International Conference on Language Resources and Evaluation (LREC), Genoa, Italy, 22–28 May 2006; pp. 315–320.

- Galliano, S.; Gravier, G.; Chaubard, L. The ESTER 2 evaluation campaign for the rich transcription of French radio broadcasts. In Proceedings of the Interspeech, Brighton, UK, 6–10 September 2009; pp. 2583–2586.

- Mostefa, D.; Hamon, O.; Choukri, K. Evaluation of Automatic Speech Recognition and Spoken Language Translation within TC-STAR: Results from the first evaluation campaign. In Proceedings of the LREC’06, Genoa, Italy, 22–28 May 2006.

- Butko, T.; Nadeu, C. Audio segmentation of broadcast news in the Albayzin-2010 evaluation: Overview, results, and discussion. EURASIP J. Audio Speech Music Process. 2011.

- Zelenák, M.; Schulz, H.; Hernando, J. Speaker diarization of broadcast news in Albayzin 2010 evaluation campaign. EURASIP J. Audio Speech Music Process. 2012.

- Ortega, A.; Castan, D.; Miguel, A.; Lleida, E. The Albayzin-2012 audio segmentation evaluation. In Proceedings of the IberSpeech, Madrid, Spain, 21–23 November 2012.

- Ortega, A.; Castan, D.; Miguel, A.; Lleida, E. The Albayzin-2014 audio segmentation evaluation. In Proceedings of the IberSpeech, Las Palmas de Gran Canaria, Spain, 19–21 November 2014.

- Castán, D.; Ortega, A.; Miguel, A.; Lleida, E. Audio segmentation-by-classification approach based on factor analysis in broadcast news domain. EURASIP J. Audio Speech Music Process. 2014, 34.

- Ortega, A.; Viñals, I.; Miguel, A.; Lleida, E. The Albayzin-2016 Speaker Diarization Evaluation. In Proceedings of the IberSpeech, Lisbon, Portugal, 23–25 November 2016.

- Bell, P.; Gales, M.J.F.; Hain, T.; Kilgour, J.; Lanchantin, P.; Liu, X.; McParland, A.; Renals, S.; Saz, O.; Wester, M.; et al. The MGB challenge: Evaluating multi-genre broadcast media recognition. In Proceedings of the 2015 IEEE Workshop on Automatic Speech Recognition and Understanding, ASRU 2015, Scottsdale, AZ, USA, 13–17 December 2015; pp. 687–693.

- Ali, A.M.; Bell, P.; Glass, J.R.; Messaoui, Y.; Mubarak, H.; Renals, S.; Zhang, Y. The MGB-2 challenge: Arabic multi-dialect broadcast media recognition. In Proceedings of the 2016 IEEE Spoken Language Technology Workshop (SLT), San Diego, CA, USA, 13–16 December 2016; pp. 279–284.

- Ali, A.; Vogel, S.; Renals, S. Speech recognition challenge in the wild: Arabic MGB-3. In Proceedings of the 2017 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU 2017), Okinawa, Japan, 16–20 December 2017; pp. 316–322.

- Versteegh, M.; Thiollière, R.; Schatz, T.; Cao, X.; Anguera, X.; Jansen, A.; Dupoux, E. The zero resource speech challenge 2015. In Proceedings of the INTERSPEECH 2015, 16th Annual Conference of the International Speech Communication Association, Dresden, Germany, 6–10 September 2015; pp. 3169–3173.

- Dunbar, E.; Cao, X.; Benjumea, J.; Karadayi, J.; Bernard, M.; Besacier, L.; Anguera, X.; Dupoux, E. The zero resource speech challenge 2017. In Proceedings of the 2017 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU 2017), Okinawa, Japan, 16–20 December 2017; pp. 323–330.

- Eskevich, M.; Aly, R.; Racca, D.N.; Ordelman, R.; Chen, S.; Jones, G.J.F. The Search and Hyperlinking Task at MediaEval 2014. In Proceedings of the Working Notes Proceedings of the MediaEval 2014 Workshop, Barcelona, Catalunya, Spain, 16–17 October 2014.

- Zelenak, M.; Schulz, H.; Hernando, J. Albayzin 2010 Evaluation Campaign: Speaker Diarization. In Proceedings of the VI Jornadas en Tecnologías del Habla, FALA 2010, Vigo, Spain, 10–12 November 2010.

- Docío-Fernández, L.; García-Mateo, C. The GTM-UVIGO System for Albayzin 2018 Speech-to-Text Evaluation. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 277–280.

- Arzelus, H.; Alvarez, A.; Bernath, C.; García, E.; Granell, E.; Martinez Hinarejos, C.D. The Vicomtech-PRHLT Speech Transcription Systems for the IberSPEECH-RTVE 2018 Speech to Text Transcription Challenge. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 267–271.

- Jorge, J.; Martínez-Villaronga, A.; Golik, P.; Giménez, A.; Silvestre-Cerdà, J.A.; Doetsch, P.; Císcar, V.A.; Ney, H.; Juan, A.; Sanchis, A. MLLP-UPV and RWTH Aachen Spanish ASR Systems for the IberSpeech-RTVE 2018 Speech-to-Text Transcription Challenge. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 257–261.

- Perero-Codosero, J.M.; Antón-Martín, J.; Tapias Merino, D.; López-Gonzalo, E.; Hernández-Gómez, L.A. Exploring Open-Source Deep Learning ASR for Speech-to-Text TV program transcription. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 262–266.

- Dugan, N.; Glackin, C.; Chollet, G.; Cannings, N. Intelligent Voice ASR system for IberSpeech 2018 Speech to Text Transcription Challenge. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 272–276.

- Del Agua, M.; Giménez, A.; Serrano, N.; Andrés-Ferrer, J.; Civera, J.; Sanchis, A.; Juan, A. The translectures-UPV toolkit. In Advances in Speech and Language Technologies for Iberian Languages; Springer: Cham, Switzerland, 2014; pp. 269–278.

- Patino, J.; Delgado, H.; Yin, R.; Bredin, H.; Barras, C.; Evans, N. ODESSA at Albayzin Speaker Diarization Challenge 2018. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 211–215.

- Castan, D.; McLaren, M.; Nandwana, M.K. The SRI International STAR-LAB System Description for IberSPEECH-RTVE 2018 Speaker Diarization Challenge. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 208–210.

- McLaren, M.; Ferrer, L.; Castan, D.; Nandwana, M.; Travadi, R. The sri-con-usc nist 2018 sre system description. In Proceedings of the NIST 2018 Speaker Recognition Evaluation, Athens, Greece, 16–17 December 2018.

- Khosravani, A.; Glackin, C.; Dugan, N.; Chollet, G.; Cannings, N. The Intelligent Voice System for the IberSPEECH-RTVE 2018 Speaker Diarization Challenge. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 231–235.

- Huang, Z.; García-Perera, L.P.; Villalba, J.; Povey, D.; Dehak, N. JHU Diarization System Description. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 236–239.

- Campbell, E.L.; Hernandez, G.; Calvo de Lara, J.R. CENATAV Voice-Group Systems for Albayzin 2018 Speaker Diarization Evaluation Campaign. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 227–230.

- Sadjadi, S.O.; Hansen, J.H. Mean Hilbert envelope coefficients (MHEC) for robust speaker and language identification. Speech Commun. 2015, 72, 138–148.

- Kim, C.; Stern, R.M. Power-Normalized Cepstral Coefficients (PNCC) for Robust Speech Recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2016, 24, 1315–1329.

- Lozano-Diez, A.; Labrador, B.; de Benito, D.; Ramirez, P.; Toledano, D.T. DNN-based Embeddings for Speaker Diarization in the AuDIaS-UAM System for the Albayzin 2018 IberSPEECH-RTVE Evaluation. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 224–226.

- Viñals, I.; Gimeno, P.; Ortega, A.; Miguel, A.; Lleida, E. In-domain Adaptation Solutions for the RTVE 2018 Diarization Challenge. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 220–223.

- Ghahabi, O.; Fischer, V. EML Submission to Albayzin 2018 Speaker Diarization Challenge. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 216–219.

- Ramos-Muguerza, E.; Docío-Fernández, L.; Alba-Castro, J.L. The GTM-UVIGO System for Audiovisual Diarization. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 204–207.

- India Massana, M.A.; Sagastiberri, I.; Palau, P.; Sayrol, E.; Morros, J.R.; Hernando, J. UPC Multimodal Speaker Diarization System for the 2018 Albayzin Challenge. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 199–203.

- Maurice, B.; Bredin, H.; Yin, R.; Patino, J.; Delgado, H.; Barras, C.; Evans, N.; Guinaudeau, C. ODESSA/PLUMCOT at Albayzin Multimodal Diarization Challenge 2018. In Proceedings of the IberSPEECH, Barcelona, Spain, 21–23 November 2018; pp. 194–198.

| Show | Duration | Show Content |
|---|---|---|
| 20H | 41:35:50 | News of the day. |
| Agrosfera | 37:34:32 | Agrosfera wants to bring the news of the countryside and the sea to farmers, ranchers, fishermen, and rural inhabitants. The program also aims to bring this rural world closer to those who do not inhabit it, but who do enjoy it. |
| Al filo de lo Imposible | 11:09:57 | This show broadcasts documentaries about mountaineering, climbing, and other risky outdoor sports. It is a documentary series in which emotion, adventure, sports, and risk predominate. |
| Arranca en Verde | 05:38:05 | Contest dedicated to road safety. Viewers are presented with questions related to road safety in order to disseminate the rules of the road in a pleasant way and thus raise awareness about civic driving and respect for the environment. |
| Asuntos Públicos | 69:38:00 | Analysis of the news of the day and live broadcasts of the most notable news events. |
| Comando Actualidad | 17:03:41 | A show that presents a current topic through the combined perspectives of several street reporters. Four journalists travel to the place where the news occurs, show events as they are, and bring their personal perspective to the subject. |
| Dicho y Hecho | 10:06:00 | Game show in which a group of six comedians and celebrities compete against each other through hilarious challenges. |
| España en Comunidad | 13:02:59 | Show that offers in-depth reports and current information about the different Spanish autonomous communities. It is made by the territorial and production centers of RTVE. |
| La Mañana | 227:47:00 | Live show with varied content for the whole family and a clear vocation of public service. |
| La Tarde en 24H Economia | 04:10:54 | Program about the economy. |
| La Tarde en 24H Tertulia | 26:42:00 | Talk show on political and economic news (4 to 5 people). |
| La Tarde en 24H Entrevista | 04:54:03 | In-depth interviews with personalities from different fields. |
| La Tarde en 24H el Tiempo | 02:20:12 | Weather information for Spain, Europe, and America. |
| Latinoamérica en 24H | 16:19:00 | Analysis and information show focused on Ibero-America, in collaboration with the information services of the international area and the network of correspondents of RTVE. |
| Millennium | 19:08:35 | Debate show of ideas that aims to be useful to today's viewers, accompanying them in the analysis of everyday events. |
| Saber y Ganar | 29:00:10 | Daily contest that aims to disseminate culture in an entertaining way. Three contestants demonstrate their knowledge and mental agility through a set of general questions. |
| La Noche en 24H | 33:11:06 | Talk show with the best analysts to understand what has happened throughout the day. It contains interviews with some of the protagonists of the day. |
| Total duration | 569:22:04 | |

| dev1 | Hours | Track | dev2 | Hours | Track |
|---|---|---|---|---|---|
| 20H | 9:13:13 | S2T | | | |
| Asuntos Públicos | 8:11:00 | S2T | | | |
| Comando Actualidad | 7:53:13 | S2T | | | |
| La Mañana | 1:30:00 | S2T | | | |
| | | | Millennium | 7:42:44 | SD, S2T |
| La Noche en 24H | 25:44:25 | S2T | La Noche en 24H | 7:26:41 | SD, S2T, MD |
| Total | 52:31:51 | | Total | 15:09:25 | |

| Show | S2T | SD | MD |
|---|---|---|---|
| Al filo de lo Imposible | 4:10:03 | | |
| Arranca en Verde | 1:00:30 | | |
| Dicho y Hecho | 1:48:00 | | |
| España en Comunidad | 8:09:32 | 8:09:32 | |
| La Mañana | 8:05:00 | 1:36:31 | 1:36:31 |
| La Tarde en 24H (Tertulia) | 8:52:20 | 8:52:20 | 2:28:14 |
| Latinoamérica en 24H | 4:06:57 | 4:06:57 | |
| Saber y Ganar | 2:54:53 | | |
| Total | 39:07:15 | 22:45:20 | 4:04:45 |

| Show | Acronym | # of Shows | Duration | Audio Features |

|---|---|---|---|---|

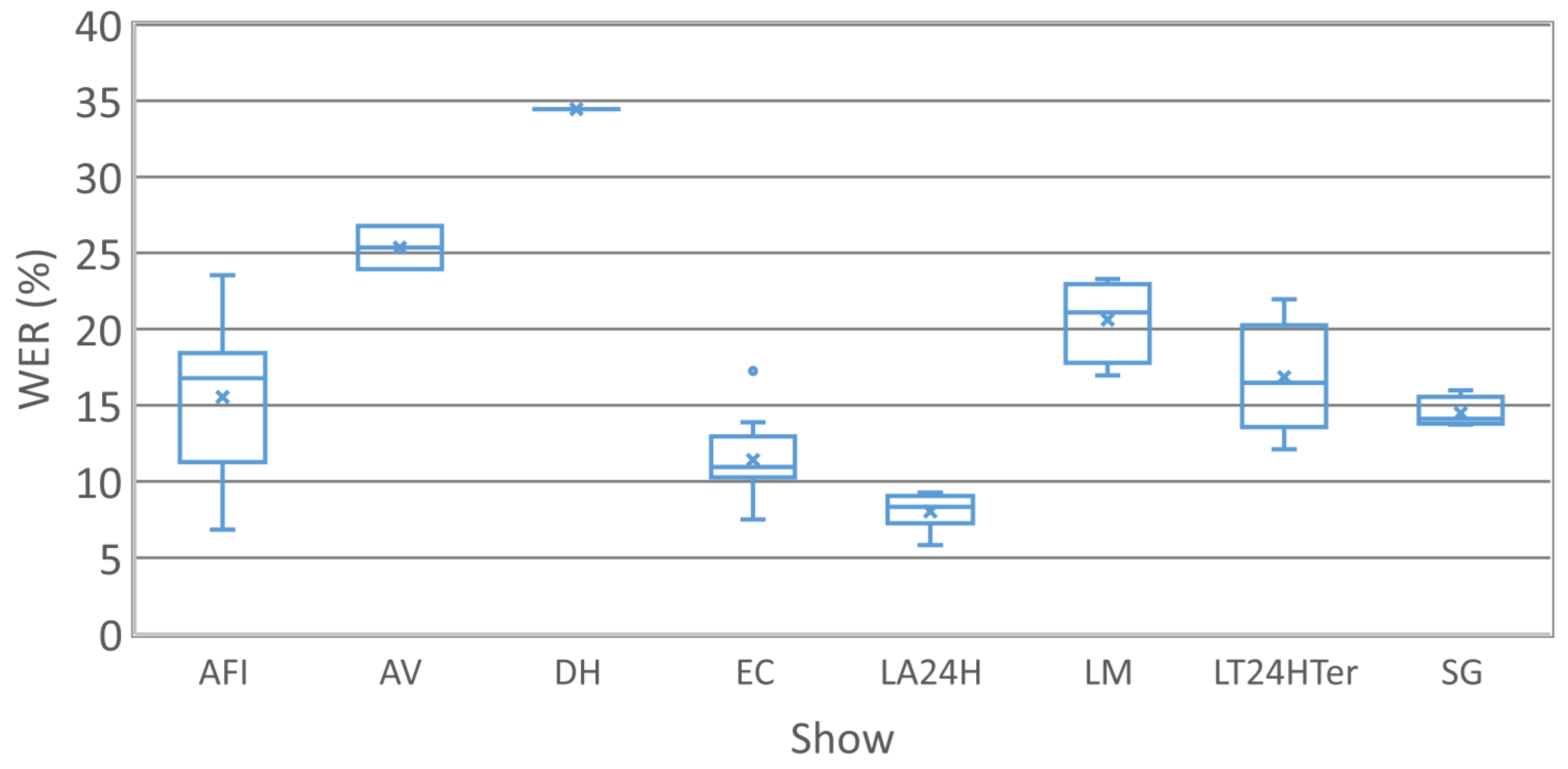

| Al filo de lo Imposible | AFI | 9 | 4:10:03 | Poor quality audio in some outdoor shots. Few speakers. Exterior shots. |

| Arranca en Verde | AV | 2 | 1:00:30 | Good audio quality in general. Most of the time, 2 speakers in a car. |

| Dicho y Hecho | DH | 1 | 1:48:00 | Much speech overlap and speech inflections. About 8 speakers, most of them comedians. Studio and exterior shots. |

| España en Comunidad | EC | 22 | 8:09:32 | Good audio quality in general. Diversity of speakers. Studio and exterior shots. |

| La Mañana | LM | 4 | 8:05:00 | Much speech overlap, speech inflections, and live audio. Studio and exterior shots. |

| La Tarde en 24H (Tertulia) | LT24HTer | 9 | 8:52:20 | Good audio quality, overlapped speech on rare occasions, up to 5 speakers. Television studio. |

| Latinoamérica en 24H | LA24H | 8 | 4:06:57 | Good audio quality. Many speakers with a Spanish Latin American accent. Studio and exterior shots. |

| Saber y Ganar | SG | 4 | 2:54:53 | Good audio quality. Up to 6 speakers per show. Television studio. |

| 59 | 39:07:15 |

| Team | G1 | G1 | G3 | G5 | G6 | G6 | G6 | G7 | G14 | G21 | G21 | G21 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| System | P | C1 | P | P | P | C1 | C2 | P | P | P | C1 | C2 |
| TV Show | | | | | | | | | | | | |
| AFI | 29.79 | 30.39 | 19.72 | 16.35 | 22.37 | 28.47 | 25.99 | 15.91 | 17.65 | 28.22 | 31.48 | 29.57 |
| AV | 54.67 | 54.68 | 47.13 | 39.97 | 40.49 | 48.36 | 42.17 | 23.94 | 28.90 | 39.14 | 54.75 | 50.21 |
| DH | 56.53 | 56.58 | 59.18 | 41.50 | 49.44 | 56.77 | 51.30 | 34.45 | 43.06 | 51.24 | 58.50 | 53.82 |
| EC | 21.86 | 22.54 | 17.99 | 15.59 | 17.64 | 23.68 | 20.81 | 11.38 | 13.54 | 22.19 | 25.60 | 23.32 |
| LA24H | 14.75 | 15.94 | 15.41 | 8.23 | 11.87 | 16.69 | 12.74 | 7.43 | 9.43 | 14.70 | 16.53 | 14.99 |
| LM | 36.74 | 37.58 | 38.35 | 27.10 | 31.72 | 44.69 | 34.40 | 21.94 | 23.96 | 45.94 | 47.70 | 46.43 |
| LT24HTer | 27.37 | 28.57 | 28.37 | 20.61 | 23.34 | 31.14 | 24.82 | 18.97 | 17.41 | 32.90 | 39.18 | 37.29 |
| SG | 25.43 | 27.28 | 31.47 | 19.66 | 22.81 | 33.82 | 22.65 | 15.97 | 14.77 | 21.32 | 21.10 | 20.16 |
| Overall WER | 29.27 | 30.19 | 28.72 | 20.92 | 24.52 | 33.00 | 26.66 | 16.45 | 18.63 | 31.61 | 35.80 | 33.90 |

| Team | G1 | G3 | G5 | G6 | G7 | G14 | G21 |
|---|---|---|---|---|---|---|---|
| TV Show | | | | | | | |
| AFI | 27.25 | 18.35 | 15.35 | 21.41 | 14.06 | 16.04 | 26.11 |
| AV | 53.38 | 46.67 | 39.69 | 39.95 | 25.93 | 28.69 | 41.83 |
| DH | 56.82 | 60.05 | 42.76 | 49.7 | 34.62 | 43.32 | 51.16 |
| EC | 19.72 | 16.75 | 14.36 | 16.39 | 10.47 | 11.95 | 20.89 |
| LA24H | 13.11 | 14.56 | 7.49 | 11.14 | 7.27 | 8.21 | 13.97 |
| LM | 34.65 | 38.00 | 26.26 | 30.93 | 19.79 | 22.59 | 41.53 |
| LT24HTer | 25.23 | 27.76 | 19.97 | 22.31 | 15.75 | 15.42 | 29.65 |
| SG | 25.01 | 31.86 | 20.1 | 23.45 | 15.11 | 15.20 | 20.95 |
| Overall TNWER | 27.36 | 28.1 | 20.21 | 23.69 | 15.69 | 17.26 | 29.59 |
| Overall WER | 29.27 | 28.72 | 20.92 | 24.52 | 16.45 | 18.63 | 31.61 |

| Punc. Marks | Periods | Periods | Periods | Periods | Periods and Commas | Periods and Commas | Periods and Commas |
|---|---|---|---|---|---|---|---|
| Team | G5 | G6 | G6 | G6 | G6 | G6 | G6 |
| System | P | P | C1 | C2 | P | C1 | C2 |
| Overall WER | 20.92 | 24.52 | 33.00 | 26.66 | 24.52 | 33.00 | 26.66 |
| Overall PWER | 26.04 | 29.75 | 29.19 | 28.12 | 33.00 | 33.59 | 31.59 |

| Team | G6 | G6 | G6 | G7 | G7 | G14 |
|---|---|---|---|---|---|---|
| System | P | C1 | C2 | P | C1 | P |
| TV Show | | | | | | |
| AFI | 24.22 | 25.01 | 25.99 | 25.39 | 25.29 | 19.75 |
| AV | 33.94 | 37.75 | 42.17 | 36.17 | 37.19 | 27.75 |
| DH | 45.62 | 48.36 | 51.30 | 50.82 | 50.35 | 43.50 |
| EC | 16.70 | 17.27 | 20.81 | 16.68 | 16.56 | 15.63 |
| LA24H | 10.47 | 10.73 | 12.74 | 12.04 | 11.96 | 11.25 |
| LM | 28.28 | 29.58 | 34.40 | 26.68 | 26.60 | 23.96 |
| LT24HTer | 20.80 | 21.38 | 24.82 | 19.15 | 19.29 | 18.01 |
| SG | 17.7 | 18.92 | 22.65 | 18.79 | 18.75 | 15.43 |
| Overall WER | 22.22 | 23.16 | 26.66 | 21.98 | 21.96 | 19.57 |

| Team | G6 | G7 | G14 |
|---|---|---|---|
| Overall WER | 22.22 | 21.98 | 19.57 |
| Overall TNWER | 20.71 | 19.75 | 17.90 |

| Team | G1 | G1 | G1 | G11 | G11 | G11 | G20 | G20 | G20 | G21 | G22 | G22 | G22 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| System | P | C1 | C2 | P | C1 | C2 | P | C1 | C2 | P | P | C1 | C2 |
| DER | 11.4 | 11.7 | 12.7 | 25.9 | 20.3 | 36.7 | 30.8 | 31.8 | 33.3 | 30.96 | 28.6 | 28.2 | 31.4 |
| Missed Speech | 1.1 | 1.9 | 1.2 | 0.7 | 0.7 | 0.7 | 0.7 | 0.7 | 0.6 | 0.9 | 2.4 | 2.4 | 2.4 |
| False Speech | 3.7 | 3.2 | 3.7 | 2.8 | 2.8 | 2.8 | 3.1 | 3.7 | 4.5 | 4.8 | 1.3 | 1.3 | 1.3 |
| Speaker Error | 6.6 | 6.6 | 7.8 | 22.4 | 16.8 | 33.2 | 26.9 | 27.4 | 28.2 | 25.2 | 24.9 | 24.5 | 27.7 |
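
As a reading aid for the table above, the sketch below recomputes the DER of a few systems as the sum of the three reported components (missed speech, false speech, and speaker error, all in percent). It assumes the standard decomposition of the diarization error rate into these three terms; the function names `der_from_components` and `inconsistent_entries` and the 0.2-point rounding tolerance are illustrative choices, not part of the evaluation tooling.

```python
# Component error rates (in %) copied from the table above.
# Key: "team/system"; value: (missed speech, false speech, speaker error, reported DER).
RESULTS = {
    "G1/P": (1.1, 3.7, 6.6, 11.4),
    "G1/C1": (1.9, 3.2, 6.6, 11.7),
    "G11/C1": (0.7, 2.8, 16.8, 20.3),
    "G20/P": (0.7, 3.1, 26.9, 30.8),
    "G22/C1": (2.4, 1.3, 24.5, 28.2),
}


def der_from_components(missed, false_speech, speaker_error):
    """Return the DER (in %) as the sum of its three error components."""
    return round(missed + false_speech + speaker_error, 1)


def inconsistent_entries(results, tolerance=0.2):
    """List systems whose reported DER differs from the component sum.

    The tolerance (an assumption) absorbs the rounding of each value
    to one decimal place in the published table.
    """
    bad = []
    for name, (missed, false_speech, spk_err, reported) in results.items():
        recomputed = der_from_components(missed, false_speech, spk_err)
        if abs(recomputed - reported) > tolerance:
            bad.append(name)
    return bad


if __name__ == "__main__":
    for name, (missed, false_speech, spk_err, reported) in RESULTS.items():
        recomputed = der_from_components(missed, false_speech, spk_err)
        print(f"{name}: recomputed {recomputed:.1f}, reported {reported:.1f}")
    print("inconsistent:", inconsistent_entries(RESULTS))
```

For most systems the speaker error term dominates the sum, so differences in DER between teams mainly reflect speaker confusion rather than speech detection errors.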

| Team | G1 | G11 | G20 | G21 | G22 |
|---|---|---|---|---|---|
| System | P | C1 | P | P | C1 |
| TV Show | | | | | |
| EC | 13.1 | 27.4 | 37.7 | 40.9 | 38.6 |
| LA24H | 15.0 | 29.3 | 39.5 | 36.7 | 34.3 |
| LM | 16.9 | 24.1 | 48.7 | 45.3 | 35.9 |
| LT24HTer | 7.8 | 9.9 | 18.4 | 18.2 | 15.6 |
| DER | 11.4 | 20.3 | 30.7 | 30.9 | 28.2 |

| Team | G4 | G4 | G4 | G8 | G8 | G8 | G10 | G10 | G11 | G11 | G11 | G19 | G22 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| System | P | C1 | C2 | P | C1 | C2 | P | C1 | P | C1 | C2 | P | P |
| DER | 26.7 | 26.5 | 25.4 | 34.6 | 31.4 | 28.7 | 17.3 | 17.8 | 26.6 | 30.2 | 37.6 | 26.6 | 39.1 |
| Missed Speech | 0.4 | 0.4 | 0.4 | 3.1 | 2.5 | 4.1 | 1.1 | 1.1 | 0.7 | 0.7 | 0.7 | 1.1 | 2.4 |
| False Speech | 4.8 | 4.8 | 4.8 | 3.1 | 3.2 | 3.5 | 2.5 | 2.5 | 2.8 | 2.9 | 2.8 | 3 | 1.3 |
| Speaker Error | 21.5 | 21.3 | 20.2 | 28.4 | 25.7 | 21.1 | 13.7 | 14.2 | 23.1 | 26.6 | 34.1 | 22.5 | 35.4 |

| Team | G4 | G8 | G10 | G11 | G19 | G22 |
|---|---|---|---|---|---|---|
| System | C2 | C2 | P | P | P | P |
| TV Show | | | | | | |
| EC | 34.8 | 27.8 | 17.1 | 37.6 | 30.3 | 47.0 |
| LA24H | 30.7 | 31.3 | 18.2 | 31.1 | 30.7 | 44.6 |
| LM | 29.1 | 36.0 | 41.2 | 33.7 | 35.3 | 52.4 |
| LT24HTer | 14.9 | 27.6 | 13.3 | 14.6 | 20.2 | 27.9 |
| Overall DER | 25.4 | 28.7 | 17.3 | 26.6 | 26.6 | 39.1 |
| Missed Speech | 0.4 | 4.1 | 1.1 | 0.7 | 1.1 | 2.4 |
| False Speech | 4.8 | 3.5 | 2.5 | 2.8 | 3 | 1.3 |
| Speaker Error | 20.2 | 21.1 | 13.7 | 23.1 | 22.5 | 35.4 |

| Team | G1 | G1 | G2 | G2 | G9 | G9 | G11 | G11 |
|---|---|---|---|---|---|---|---|---|
| Modality/System | Face/P | SPK/P | Face/P | SPK/C1 | Face/P | SPK/P | Face/P | SPK/C1 |
| Missed Speech/Face | 28.6 | 3.0 | 22.6 | 1.1 | 12.1 | 1.2 | 12.1 | 1.1 |
| False Speech/Face | 5.2 | 9.3 | 4.7 | 14.1 | 12.2 | 12.4 | 12.2 | 12.6 |
| Error Speaker/Face | 0.9 | 5.0 | 2.3 | 47.4 | 4.9 | 4.0 | 4.9 | 15.2 |
| Overall DER | 34.7 | 17.3 | 29.6 | 62.6 | 29.2 | 17.6 | 29.2 | 28.9 |
| Multimodal DER | 26.0 | | 46.1 | | 23.4 | | 29.1 | |
| TV Show | | | | | | | | |
| LM-20170103 | 57.7 | 35.5 | 43.0 | 74.6 | 44.3 | 43.7 | 44.3 | 63.1 |
| LT24HTer-20180222 | 19.8 | 9.0 | 19.4 | 46.4 | 17.3 | 3.2 | 17.3 | 22.8 |
| LT24HTer-20180223 | 19.0 | 8.2 | 21.5 | 63.1 | 20.2 | 6.3 | 20.2 | 6.6 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lleida, E.; Ortega, A.; Miguel, A.; Bazán-Gil, V.; Pérez, C.; Gómez, M.; de Prada, A. Albayzin 2018 Evaluation: The IberSpeech-RTVE Challenge on Speech Technologies for Spanish Broadcast Media. Appl. Sci. 2019, 9, 5412. https://doi.org/10.3390/app9245412
