Total Least-Squares Iterative Closest Point Algorithm Based on Lie Algebra

Abstract

1. Introduction

2. ICP Algorithm Based on Lie Algebra

2.1. Iterative Closest Points

2.2. Variant of the Gauss–Helmert Model

2.3. Lie Algebra and Lie Group

2.4. Jacobian of the GHM

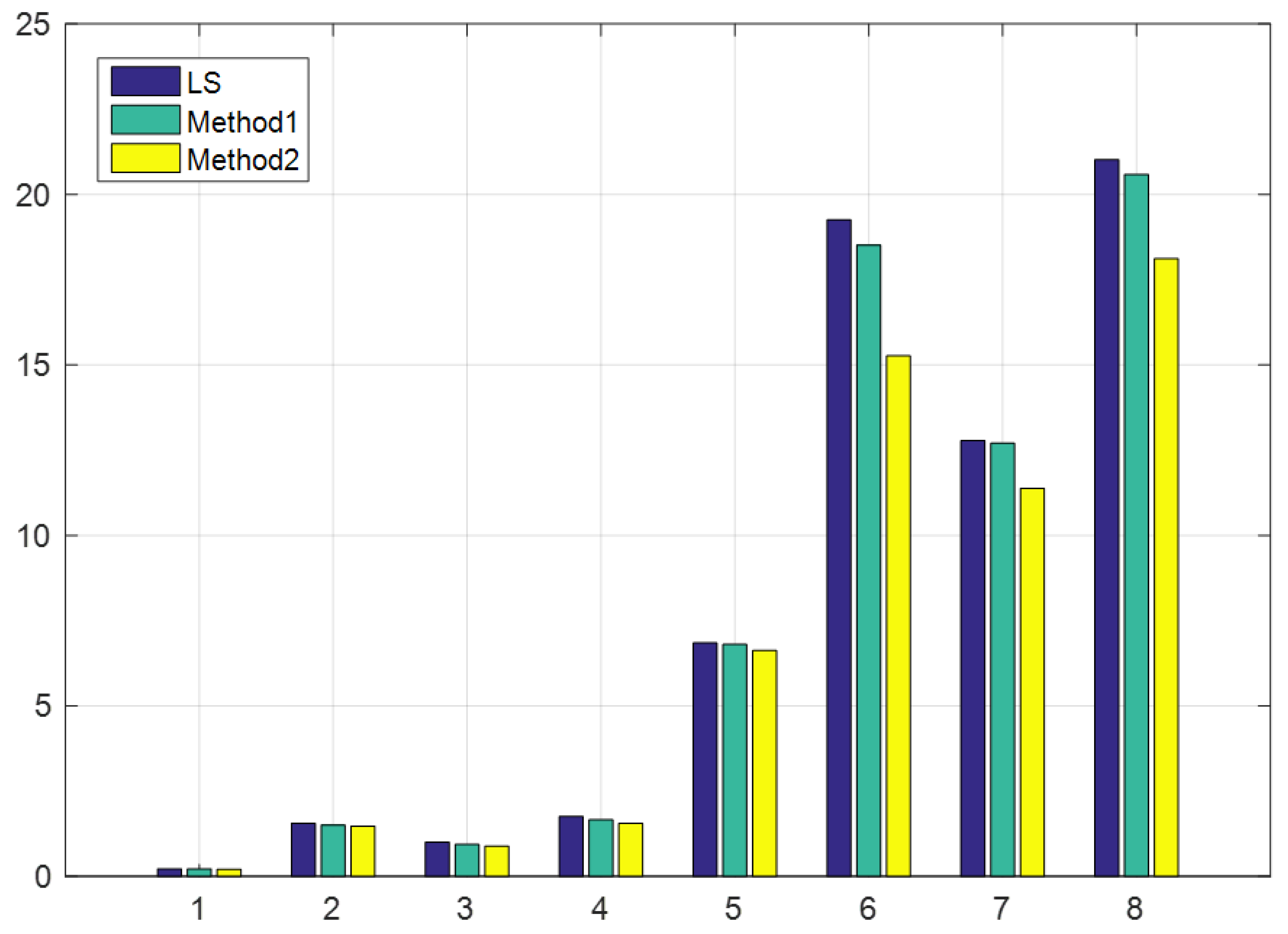

3. Experiments

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

| Property | SO(3) | SE(3) |
|---|---|---|
| Closure | | |
| Associativity | | |
| Identity | | |
| Invertibility | | |
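
The four properties in the table above are the group axioms, which both SO(3) and SE(3) satisfy under matrix multiplication. As a minimal numerical sketch for SO(3) only, the block below builds rotation matrices from so(3) vectors with Rodrigues' formula and checks each axiom. The helper names (`hat`, `exp_so3`, `is_rotation`, `close`) and the test vectors are illustrative assumptions, not taken from the article.

```python
import math

IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

def hat(w):
    """Map a 3-vector to its skew-symmetric matrix (an element of so(3))."""
    x, y, z = w
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def transpose(a):
    return [[a[j][i] for j in range(3)] for i in range(3)]

def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def close(a, b, tol=1e-9):
    """Entry-wise comparison of two 3x3 matrices."""
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(3) for j in range(3))

def exp_so3(w):
    """Rodrigues' formula: R = I + (sin t / t) W + ((1 - cos t) / t^2) W^2."""
    theta = math.sqrt(sum(c * c for c in w))
    W = hat(w)
    W2 = matmul(W, W)
    if theta < 1e-12:
        a, b = 1.0, 0.5  # limits of the two coefficients as theta -> 0
    else:
        a, b = math.sin(theta) / theta, (1.0 - math.cos(theta)) / theta ** 2
    return [[IDENTITY[i][j] + a * W[i][j] + b * W2[i][j] for j in range(3)]
            for i in range(3)]

def is_rotation(m, tol=1e-9):
    """Membership test for SO(3): orthogonal with determinant +1."""
    return close(matmul(transpose(m), m), IDENTITY, tol) and abs(det3(m) - 1.0) < tol

# Arbitrary so(3) vectors chosen for illustration only.
R1 = exp_so3((0.3, -0.2, 0.5))
R2 = exp_so3((-0.1, 0.4, 0.2))
R3 = exp_so3((0.0, 0.0, 1.2))

checks = {
    "closure": is_rotation(matmul(R1, R2)),
    "associativity": close(matmul(matmul(R1, R2), R3), matmul(R1, matmul(R2, R3))),
    "identity": close(matmul(R1, IDENTITY), R1) and close(matmul(IDENTITY, R1), R1),
    "invertibility": close(matmul(R1, transpose(R1)), IDENTITY),
}
print(checks)
```

The same checks carry over to SE(3) by replacing the 3×3 rotation with a 4×4 homogeneous transform; that extension is left out here to keep the sketch short.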

| Point No. | Source x | Source y | Source z | Target x′ | Target y′ | Target z′ |
|---|---|---|---|---|---|---|
| 1 | 63 | 84 | 21 | 290 | 150 | 15 |
| 2 | 210 | 84 | 21 | 420 | 80 | 2 |
| 3 | 210 | 273 | 21 | 540 | 200 | 20 |
| 4 | 63 | 273 | 21 | 390 | 300 | 5 |
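
To make the role of these coordinates concrete, the following sketch fits an ordinary least-squares rigid transform (rotation plus translation) to the four point pairs in the table above and reports the sum of squared errors (SSE). It is a plain LS baseline under stated assumptions, not the article's total least-squares method, and it is not expected to reproduce the SSE values reported in the article. The rotation is obtained with the closed-form unit-quaternion approach; a shifted power iteration stands in for an eigen-solver so that the block needs only the standard library. All function names are hypothetical.

```python
import math

# Coordinates copied from the table above.
SOURCE = [(63, 84, 21), (210, 84, 21), (210, 273, 21), (63, 273, 21)]
TARGET = [(290, 150, 15), (420, 80, 2), (540, 200, 20), (390, 300, 5)]

def centroid(pts):
    n = len(pts)
    return tuple(sum(p[i] for p in pts) / n for i in range(3))

def quat_to_rot(q):
    """Rotation matrix of a unit quaternion q = (w, x, y, z)."""
    w, x, y, z = q
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]

def apply(R, t, p):
    """Return R p + t for a 3-vector p."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))

def fit_rigid(src, dst, iters=500):
    """Least-squares R, t such that dst is approximately R src + t."""
    cs, cd = centroid(src), centroid(dst)
    # Cross-covariance of the centred point sets: S[i][j] = sum src_i * dst_j.
    S = [[sum((a[i] - cs[i]) * (b[j] - cd[j]) for a, b in zip(src, dst))
          for j in range(3)] for i in range(3)]
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = S
    # Symmetric 4x4 matrix whose dominant eigenvector is the optimal quaternion.
    N = [
        [sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
        [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
        [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
        [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz],
    ]
    # Shifting by the Frobenius norm makes every eigenvalue non-negative,
    # so power iteration converges to the largest one.
    shift = math.sqrt(sum(v * v for row in N for v in row))
    if shift == 0.0:
        raise ValueError("degenerate point sets: rotation is undetermined")
    q = [1.0, 0.5, 0.25, 0.125]
    for _ in range(iters):
        q = [sum(N[i][j] * q[j] for j in range(4)) + shift * q[i] for i in range(4)]
        norm = math.sqrt(sum(v * v for v in q))
        q = [v / norm for v in q]
    R = quat_to_rot(q)
    rotated_cs = apply(R, (0.0, 0.0, 0.0), cs)
    t = tuple(cd[i] - rotated_cs[i] for i in range(3))
    return R, t

def sse(R, t, src, dst):
    """Sum of squared residuals between R src + t and dst."""
    return sum(sum((u - v) ** 2 for u, v in zip(apply(R, t, a), b))
               for a, b in zip(src, dst))

R, t = fit_rigid(SOURCE, TARGET)
print("translation:", t)
print("SSE of the rigid LS fit:", sse(R, t, SOURCE, TARGET))
```

Because this baseline treats only the target coordinates as noisy, it illustrates the assumption that a total least-squares formulation relaxes by allowing errors in both point sets.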

| Noise Number | Noise Type | x | y | z |
|---|---|---|---|---|
| 1 | Normal | 0.1 | 0.1 | 0.1 |
| 2 | Normal | 0.1 | 0.5 | 1 |
| 3 | Normal | 0.1 | 0.5 | 0.5 |
| 4 | Normal | 0.1 | 1 | 1 |
| 5 | Uniform | 5 | 5 | 5 |
| 6 | Uniform | 5 | 10 | 20 |
| 7 | Uniform | 5 | 10 | 10 |
| 8 | Uniform | 5 | 20 | 20 |

| Estimated Parameter | Euler Method | Estimated Parameter | Method 1 | LS | Estimated Parameter | Method 2 | LS |
|---|---|---|---|---|---|---|---|
| Z-axis angle (rad) | 2.516 | | 0.0207793 | 0.02066 | | 151.836 | 151.834 |
| Y-axis angle (rad) | −3.137 | | −0.011339 | −0.0112 | | 175.062 | 175.066 |
| X-axis angle (rad) | −3.119 | | −0.625356 | −0.6254 | | −17.5989 | −17.6049 |
| | 195.23 | | 195.231 | 195.23 | | 0.02065 | 0.02066 |
| | 118.064 | | 118.064 | 118.067 | | 0.0112623 | 0.01128 |
| | −15.138 | | −15.1442 | −15.143 | | −0.62536 | −0.6253 |
| SSE | 1.14 | SSE | 0.0564 | 68.577 | SSE | 0.005056 | 68.577 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Feng, Y.; Wang, Q.; Zhang, H. Total Least-Squares Iterative Closest Point Algorithm Based on Lie Algebra. Appl. Sci. 2019, 9, 5352. https://doi.org/10.3390/app9245352
