Comparative Analysis of Rainfall Prediction Models Using Machine Learning in Islands with Complex Orography: Tenerife Island

Abstract

1. Introduction

2. Literature Review

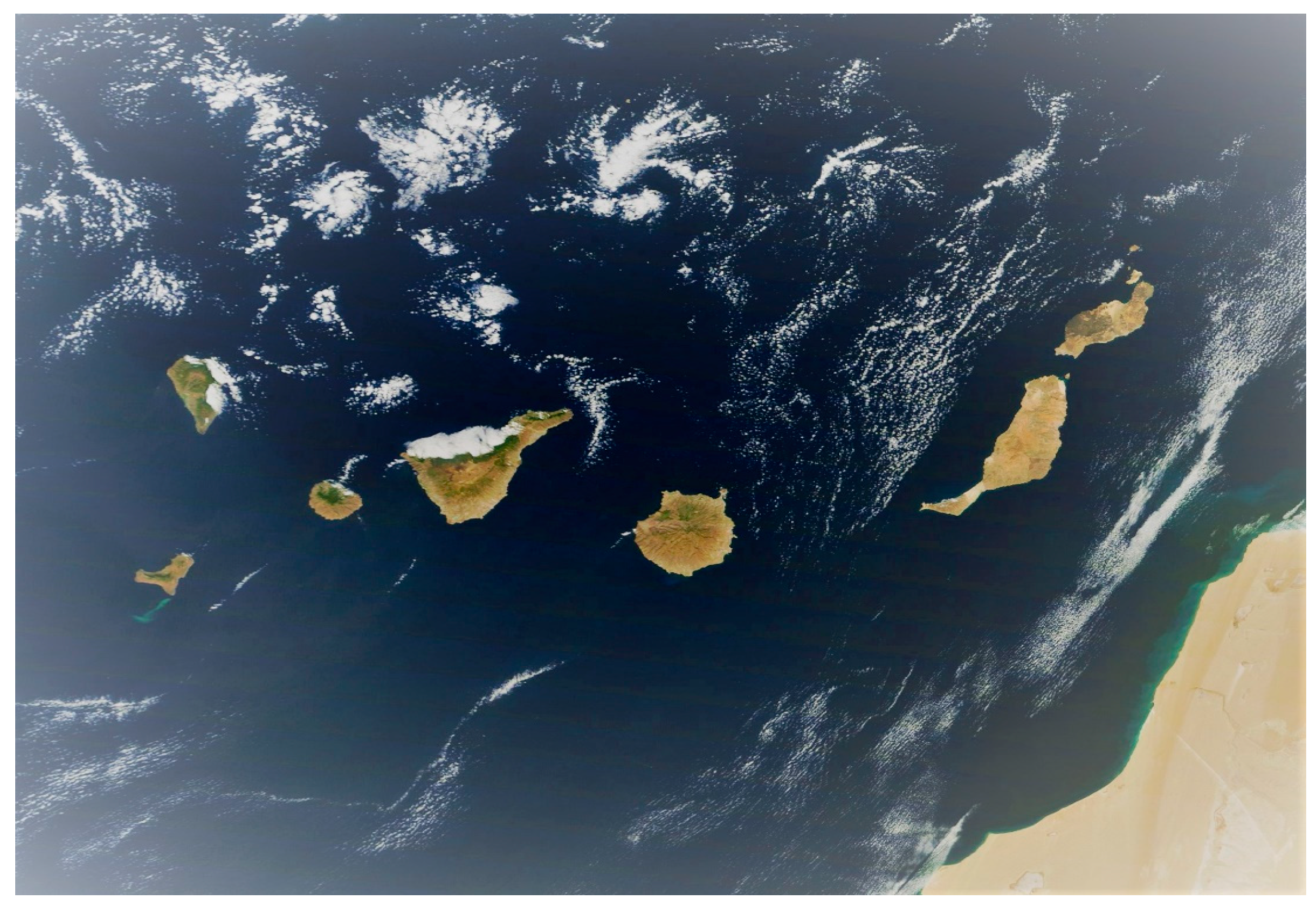

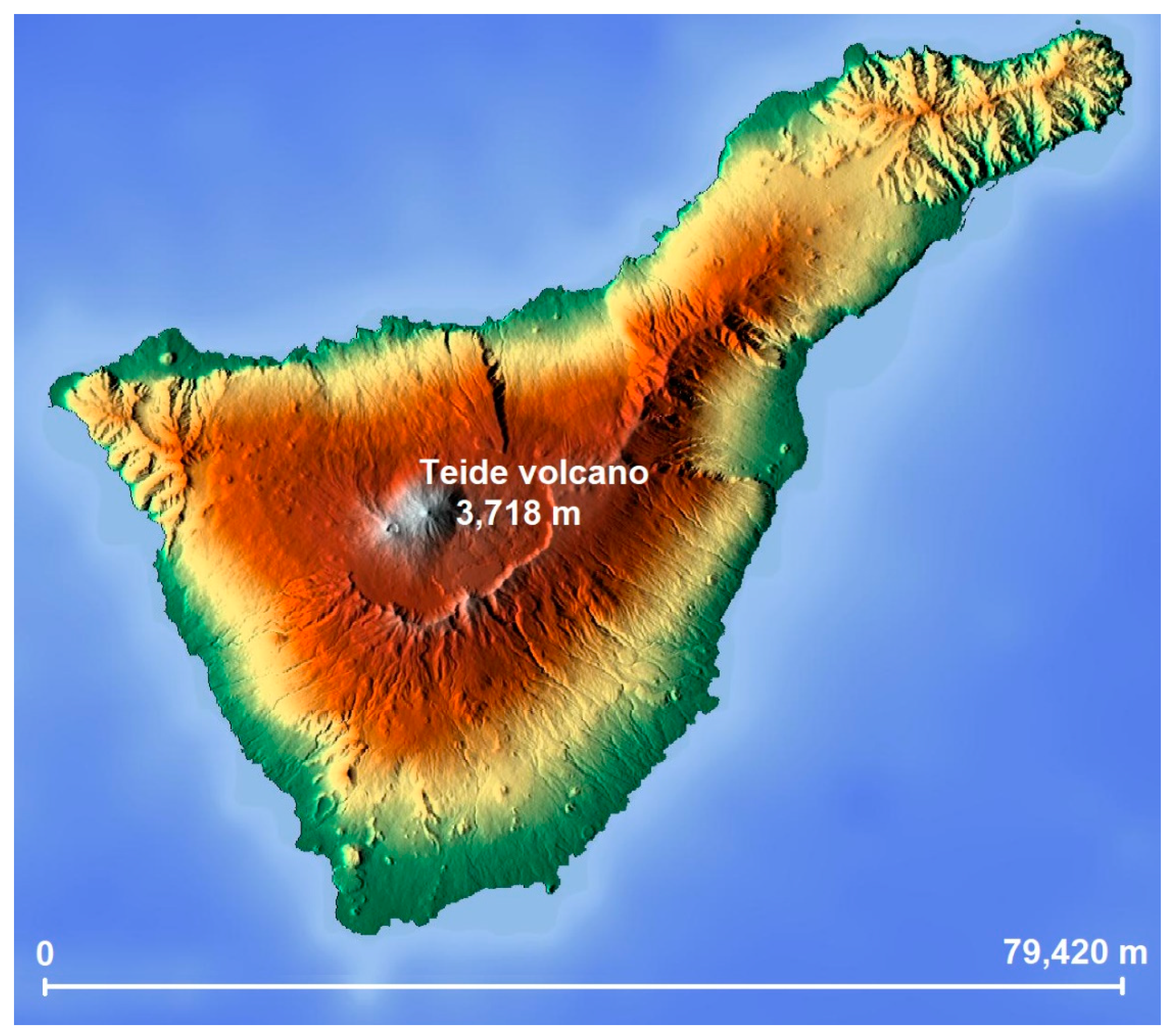

Climate in the Canary Islands

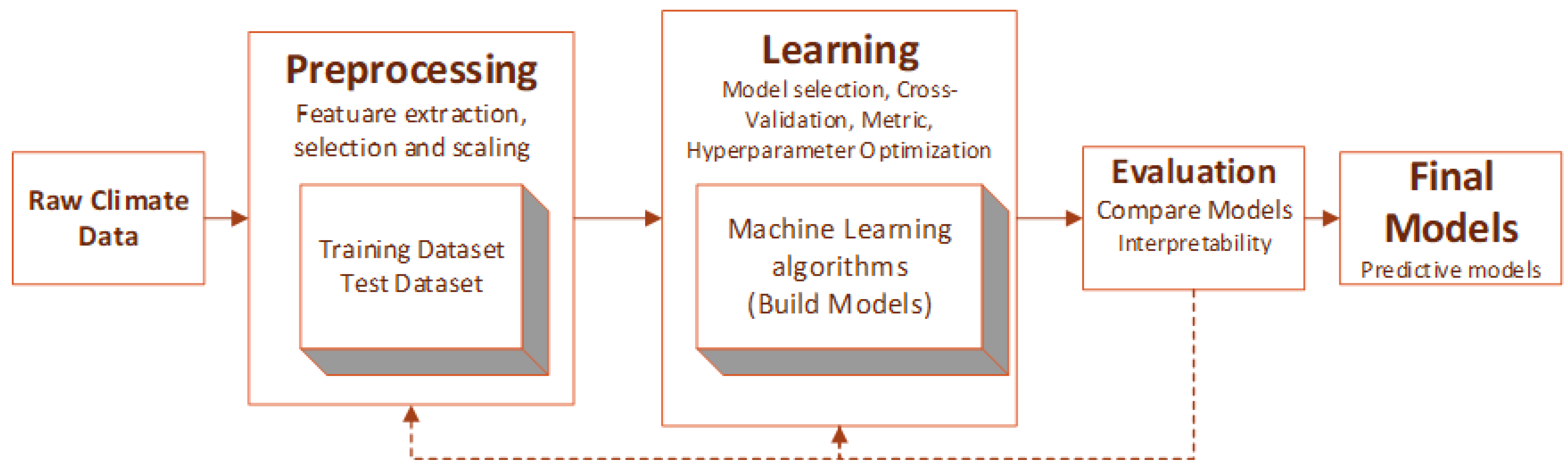

3. Materials and Methods

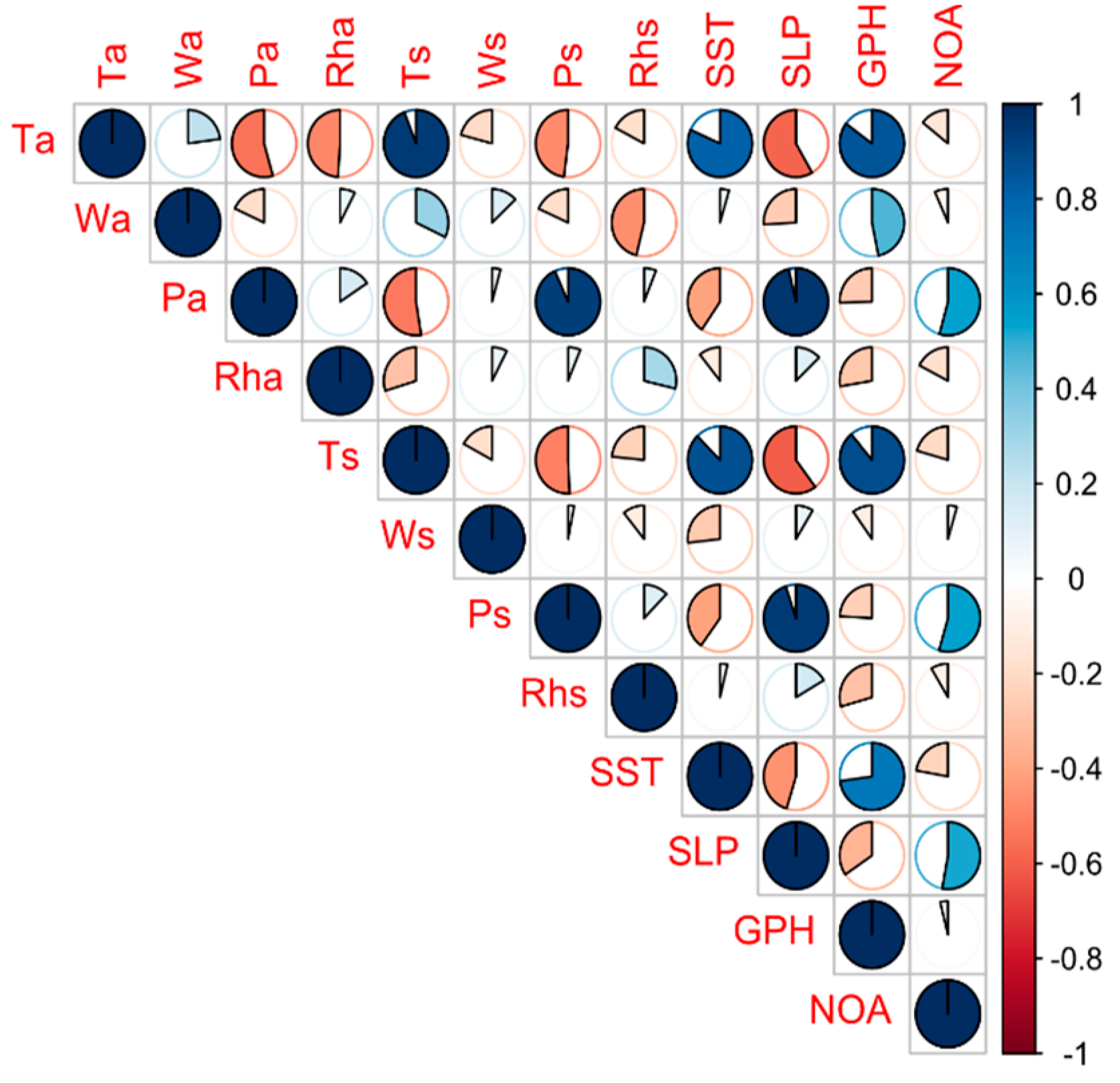

3.1. Model and Data Acquisition

3.2. Algorithms and Frameworks

3.3. Experiments

4. Discussion

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

Appendix A


| ID | Location | Altitude (m) | Latitude | Longitude |
|---|---|---|---|---|
| C406G | La Orotava | 2150 | 28°13′27″ | 16°37′35″ |
| C419X | Adeje | 130 | 28°4′53″ | 16°42′40″ |
| C428T | Arico | 418 | 28°10′52″ | 16°29′1″ |
| C429I | Tenerife Sur Aeropuerto | 64 | 28°2′49″ | 16°33′40″ |
| C430E | Izaña | 2371 | 28°18′32″ | 16°29′58″ |
| C438N | Candelaria | 463 | 28°21′32″ | 16°24′5″ |
| C439J | Güimar | 115 | 28°19′6″ | 16°22′56″ |
| C446G | San Cristóbal de La Laguna | 868 | 28°31′36″ | 16°16′50″ |
| C447A | Tenerife Norte Aeropuerto* | 632 | 28°28′39″ | 16°19′46″ |
| C449C | SC de Tenerife* | 35 | 28°27′48″ | 16°15′19″ |
| C449F | Anaga | 19 | 28°30′29″ | 16°11′44″ |
| C457I | La Victoria de Acentejo | 567 | 28°26′5″ | 16°27′17″ |
| C458A | Tacoronte | 310 | 28°29′47″ | 16°25′12″ |
| C459Z | Puerto de la Cruz | 25 | 28°25′5″ | 16°32′53″ |
| C468B | San Juan de la Rambla | 370 | 28°23′23″ | 16°37′47″ |
| C469N | Los Silos | 28 | 28°22′43″ | 16°49′3″ |
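The coordinates in the station table above are given in degrees, minutes and seconds, whereas most mapping and modelling tools expect decimal degrees. The following is a minimal sketch of that conversion. The function name `dms_to_decimal` and its `west` flag are illustrative and not part of the original study; the table omits hemisphere letters, so the sketch assumes northern latitudes and western longitudes, as is the case for Tenerife.

```python
import re


def dms_to_decimal(dms: str, west: bool = False) -> float:
    """Convert a string such as 28°18′32″ to decimal degrees.

    `west=True` returns a negative value (assumed for the longitudes
    in the station table, which carry no hemisphere letter).
    """
    # Pull out the three numeric fields: degrees, minutes, seconds.
    degrees, minutes, seconds = (float(x) for x in re.findall(r"\d+(?:\.\d+)?", dms))
    value = degrees + minutes / 60 + seconds / 3600
    return -value if west else value


# Izaña station (C430E) from the table
izana_lat = dms_to_decimal("28°18′32″")
izana_lon = dms_to_decimal("16°29′58″", west=True)
print(round(izana_lat, 4), round(izana_lon, 4))
```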

| Predictors | Representation |
|---|---|
| Monthly measurements of temperature, S. Cruz de Tenerife (°C) | Ts |
| Monthly measurements of wind speed, S. Cruz de Tenerife (m/s) | Ws |
| Monthly measurements of sea level pressure, S. Cruz de Tenerife (mbar) | Ps |
| Monthly measurements of relative humidity, S. Cruz de Tenerife (%) | Rhs |
| Monthly measurements of temperature, Tenerife North Airport (°C) | Ta |
| Monthly measurements of wind speed, Tenerife North Airport (m/s) | Wa |
| Monthly measurements of sea level pressure, Tenerife North Airport (mbar) | Pa |
| Monthly measurements of relative humidity, Tenerife North Airport (%) | Rha |
| North Atlantic Oscillation | NAO |
| Geopotential height at 500 hPa | GPH |
| Sea level pressure (Pa) | SLP |
| Sea surface temperature (°C) | SST |

| Algorithm | Training Accuracy | Training Kappa | Test Accuracy | Test Kappa | Level of Interpretability |
|---|---|---|---|---|---|
| Logistic Model Trees (lmt) | 0.81 | 0.39 | 0.75 | 0.25 | High |
| Linear Discriminant Analysis (lda) | 0.76 | 0.41 | 0.65 | 0.28 | High |
| Generalized Linear Model (glm) | 0.77 | 0.42 | 0.64 | 0.26 | Medium |
| Support Vector Machines (svmPoly) | 0.80 | 0.47 | 0.73 | 0.34 | Low |
| Random Forest (rf) | 0.83 | 0.43 | 0.77 | 0.32 | Medium |
| Stochastic Gradient Boosting (gbm) | 0.84 | 0.48 | 0.76 | 0.32 | Low |
| eXtreme Gradient Boosting (XGBoost) | 0.86 | 0.54 | 0.77 | 0.34 | Low |
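The Accuracy and Kappa columns above measure different things: accuracy is the share of correct predictions, while Cohen's kappa discounts the agreement expected by chance, which matters when rainy and dry classes are imbalanced. The sketch below computes both from a confusion matrix. The function name and the example counts are hypothetical and are not taken from the study's data.

```python
def accuracy_and_kappa(confusion):
    """Return (accuracy, Cohen's kappa) for a square confusion matrix.

    confusion[i][j] is the number of cases of true class i
    that were predicted as class j.
    """
    total = sum(sum(row) for row in confusion)
    # Observed agreement: fraction of cases on the diagonal.
    observed = sum(confusion[i][i] for i in range(len(confusion))) / total
    # Chance agreement: product of the row and column marginals per class.
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(col) for col in zip(*confusion)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total**2
    if expected == 1.0:
        # Degenerate case: only one class present, kappa is undefined; report 0.
        return observed, 0.0
    return observed, (observed - expected) / (1 - expected)


# Hypothetical two-class example (rows: true dry / true rainy)
acc, kappa = accuracy_and_kappa([[50, 10], [15, 25]])
print(round(acc, 2), round(kappa, 2))
```

In this made-up example the accuracy is 0.75 but kappa is considerably lower, the same pattern the table shows for every algorithm.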

| Algorithm | Hyperparameter Optimization or Tuning (Main Parameters) |

|---|---|

| lda | none |

| lmt | iter = 1121 (number of iterations) |

| gml | none |

| svm | degree = 3, scale = 0.01, C = 8 (degree of the polynomial, Bessel or ANOVA kernel function; scaling parameter of the polynomial and tangent kernel; controls the smoothness of the fitted function) |

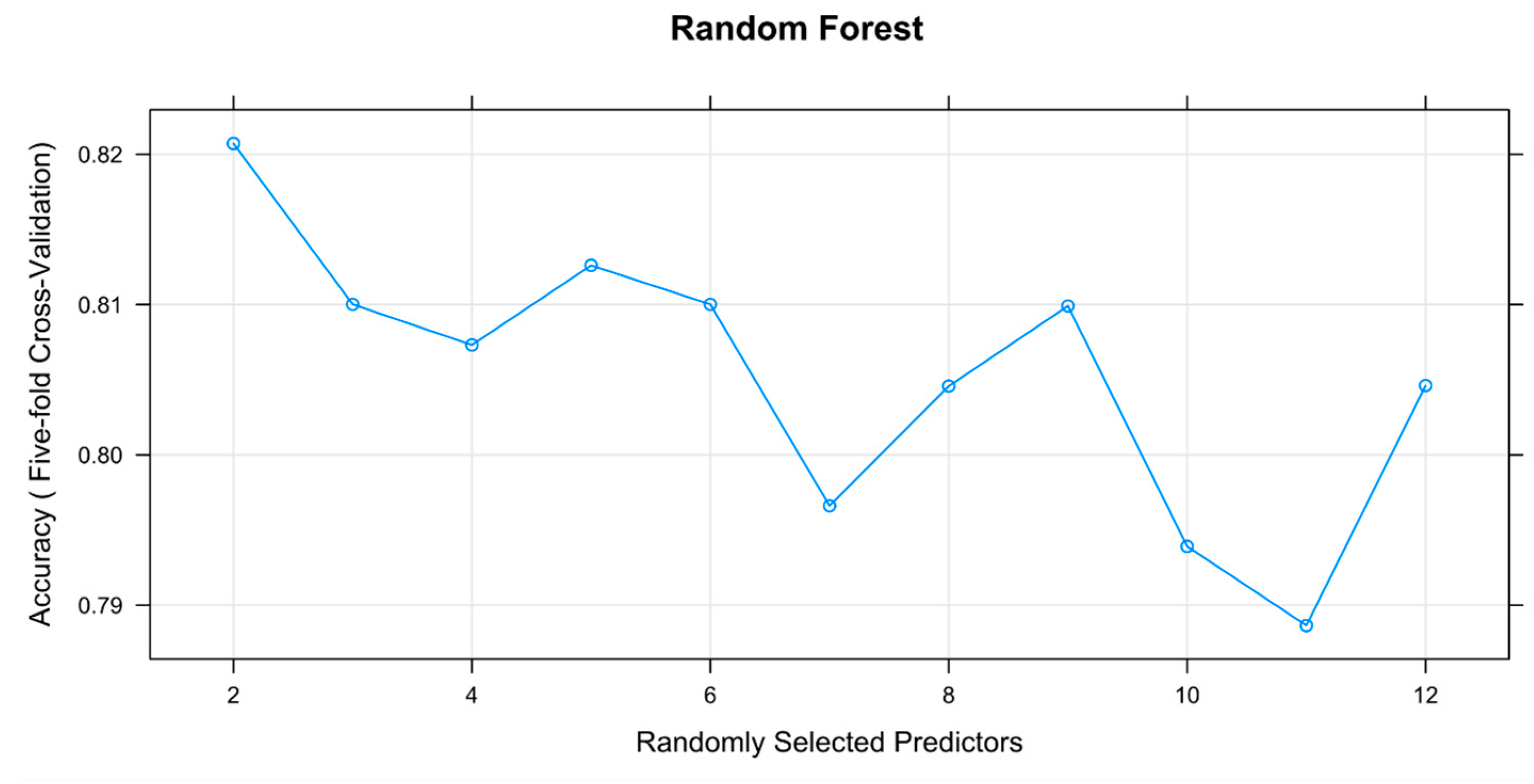

| rf | mtry = 2 (number of predictors sampled for spliting at each node) |

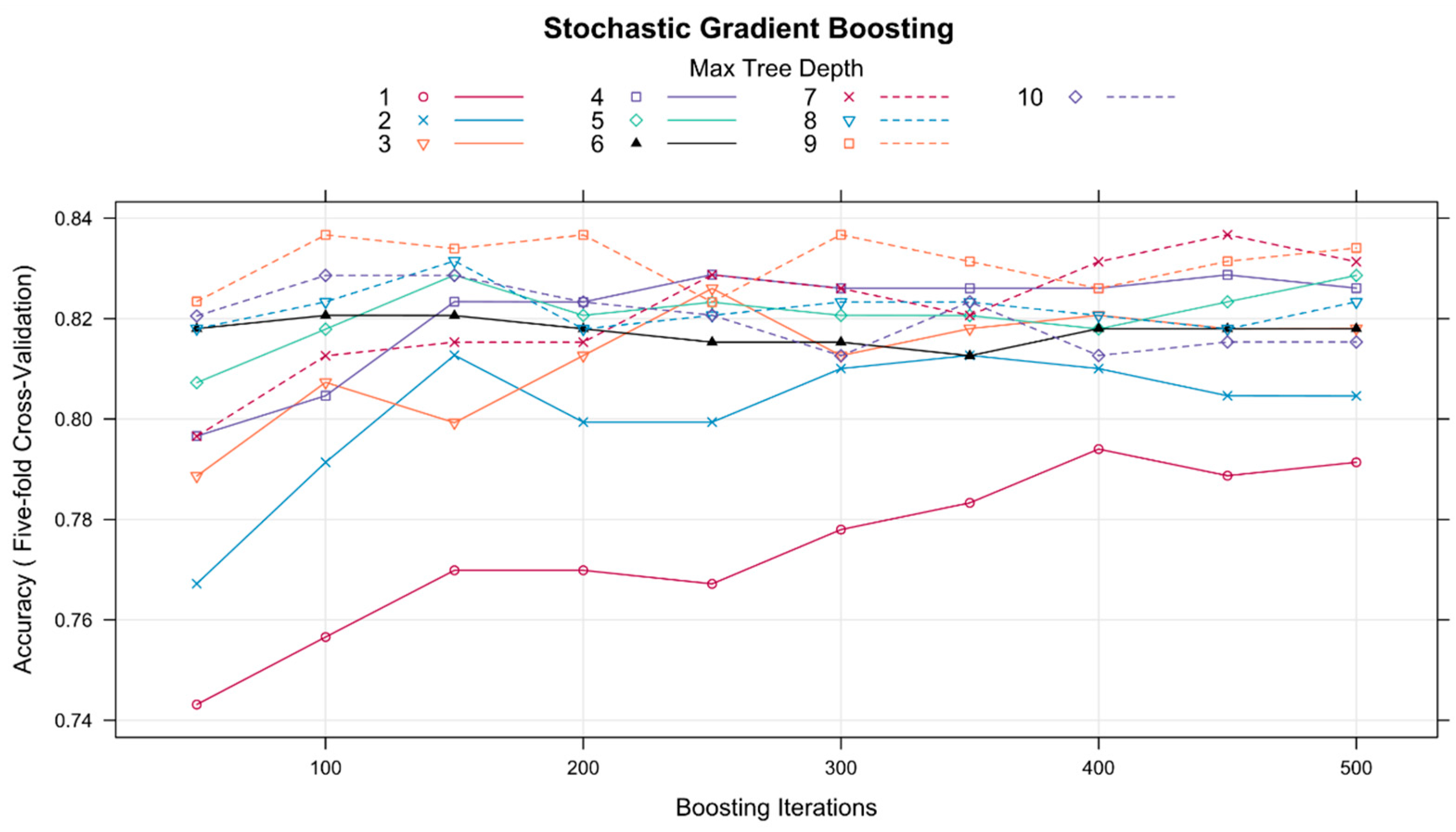

| gbm | n.trees = 1000, interaction.depth = 7, shrinkage = 0.1, n.minobsinnode = 0.1 (total number of trees to fit; maximum depth of each tree; learning rate or step-size reduction; minimum number of observations in the terminal nodes of the trees) |

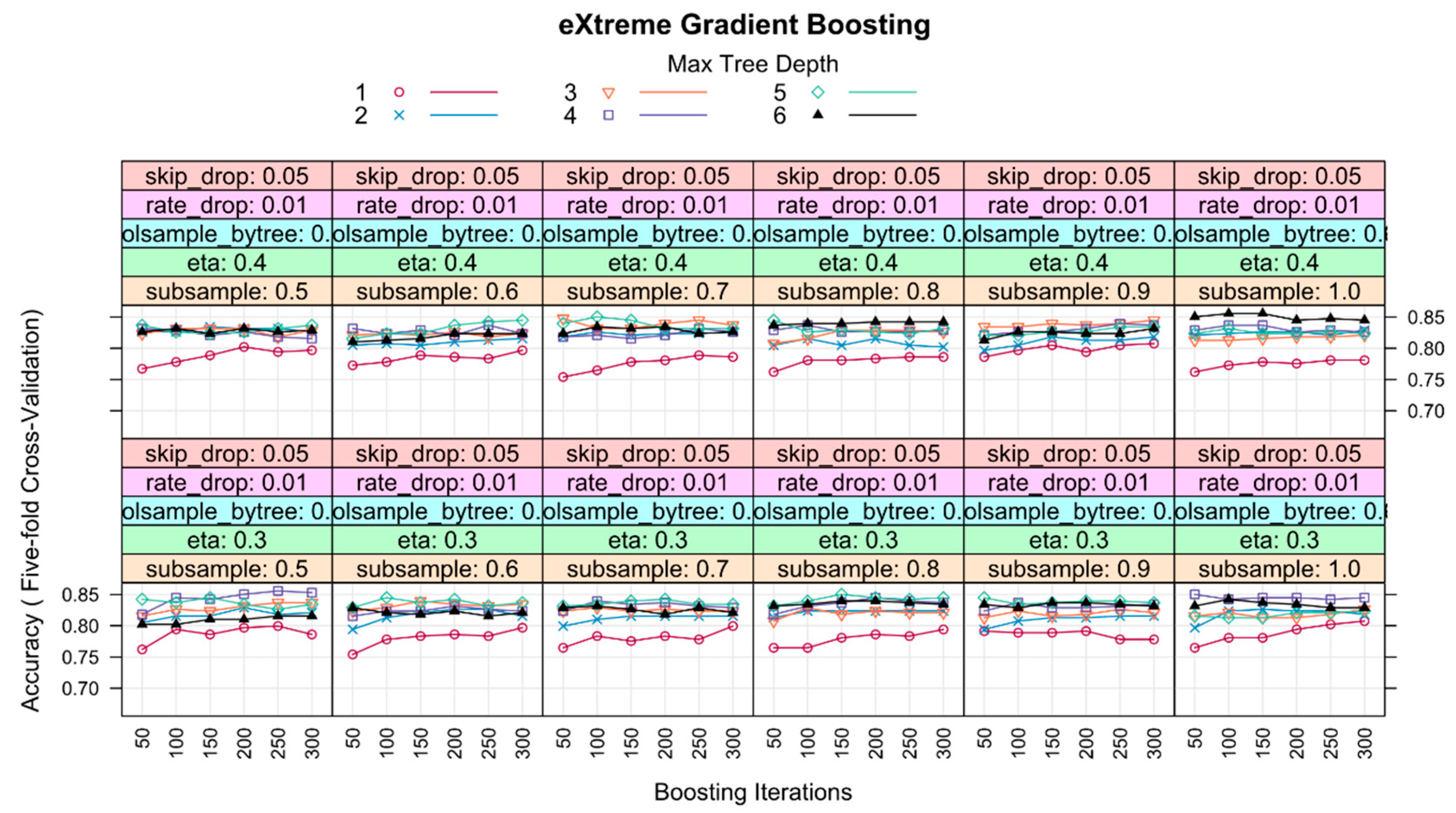

| XGBoost | nrounds = 50, max_depth = 4, eta = 0.3, gamma = 0, subsample = 0.722, colsample_bytree = 0.6, rate_drop = 0.5, skip_drop = 0.95, min_child_weight = 1 (number of rounds for boosting; maximum depth of a tree; step size shrinkage used in update to prevents overfitting; minimum loss reduction required to make a further partition on a leaf node of the tree; subsample ratio of the training instances; subsample ratio of columns when constructing each tree; dropout rate; probability of skipping the dropout procedure during a boosting iteration; minimum sum of instance weight needed in a child) |

| gbm | Importance | XGBoost | Importance | rf | Importance | glm | Importance |
|---|---|---|---|---|---|---|---|
| GPH | 100 | GPH | 100 | Rha | 100 | SST | 100 |
| Rha | 98.40 | Rha | 51.97 | GPH | 86.36 | GPH | 87.67 |
| Wa | 31.49 | Rhs | 49.58 | Ta | 41.93 | Ws | 63.03 |
| Ta | 19.72 | SST | 39.40 | Rhs | 40.56 | Ps | 8.11 |
| Rhs | 19.22 | Wa | 32.23 | Wa | 21.61 | Ta | 7.33 |
| SST | 17.43 | NAO | 26.52 | SST | 15.49 | Rha | 5.24 |
| Ps | 13.67 | Ws | 22.93 | NAO | 12.09 | Wa | 3.16 |
| Ws | 8.97 | Ta | 16.46 | Ps | 5.55 | Rhs | 2.10 |
| NAO | 0.00 | Ps | 0.00 | Ws | 0.00 | NAO | 0.00 |
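For each algorithm, the scores in the table above run from 100 for the top predictor down to 0 for the last one, which is consistent with min-max scaling of raw importance values. The sketch below illustrates that scaling under this assumption; the source does not state how the scores were produced, and the raw values and function name used here are hypothetical.

```python
def scale_importance(raw):
    """Min-max scale raw importance scores to the range 0-100.

    `raw` maps predictor names to non-normalised importance values.
    The largest value maps to 100 and the smallest to 0.
    """
    lowest, highest = min(raw.values()), max(raw.values())
    if highest == lowest:
        # All predictors equally important: scaling is undefined, report 100 for all.
        return {name: 100.0 for name in raw}
    span = highest - lowest
    return {name: 100.0 * (value - lowest) / span for name, value in raw.items()}


# Hypothetical raw scores, not taken from the study
scaled = scale_importance({"GPH": 0.40, "Rha": 0.25, "NAO": 0.05})
for name, score in sorted(scaled.items(), key=lambda item: -item[1]):
    print(f"{name}: {score:.2f}")
```

A consequence of this scaling is that a score of 0 marks the least important predictor for that model, not necessarily one with no influence at all.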

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Aguasca-Colomo, R.; Castellanos-Nieves, D.; Méndez, M. Comparative Analysis of Rainfall Prediction Models Using Machine Learning in Islands with Complex Orography: Tenerife Island. Appl. Sci. 2019, 9, 4931. https://doi.org/10.3390/app9224931