INS/Vision Integrated Navigation System Based on a Navigation Cell Model of the Hippocampus

Abstract

1. Introduction

- (1)

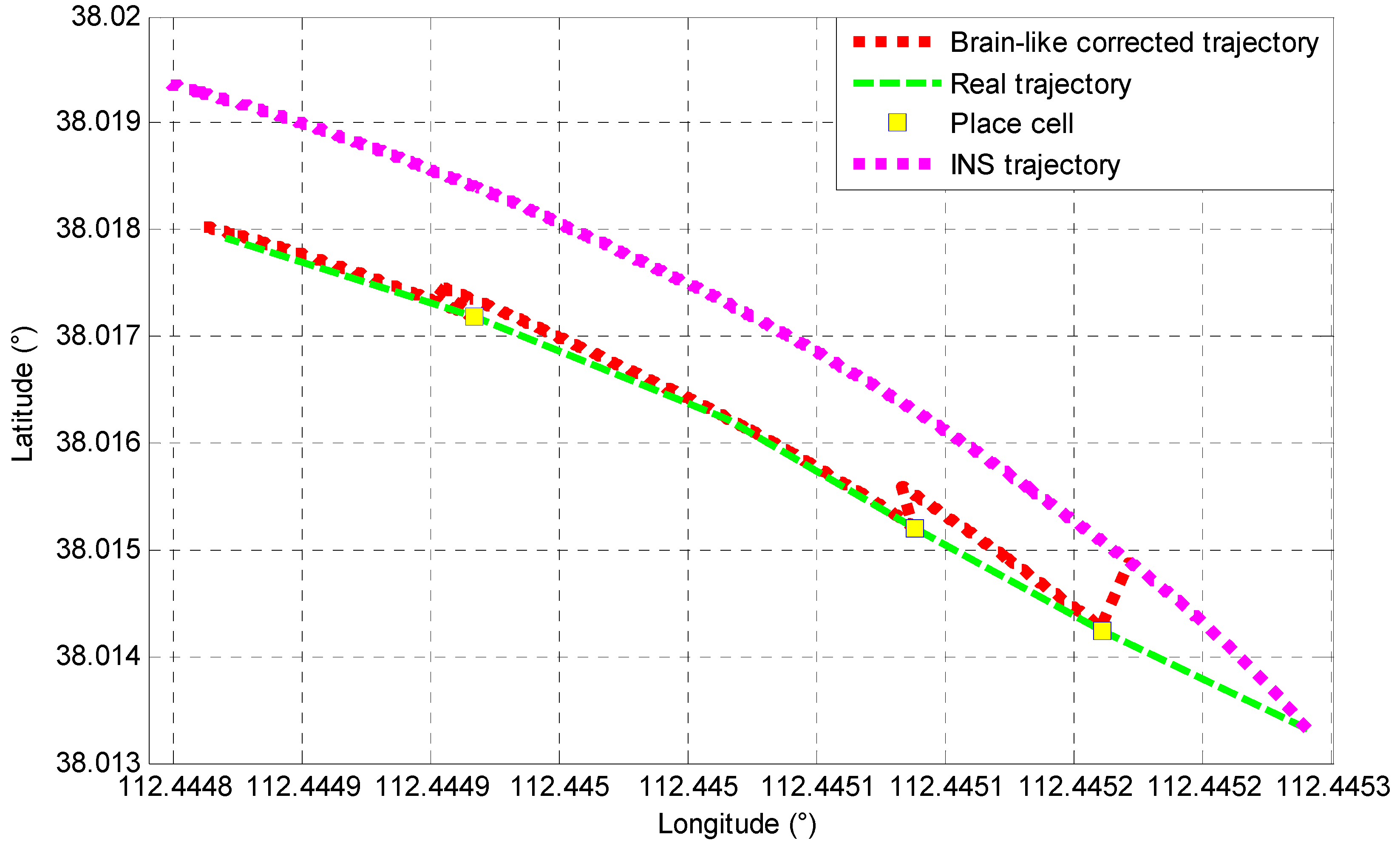

- The INS/vision brain-like navigation model is proposed. In our model, the camera is employed for environmental perception as the eyes of animals, and the INS is used for velocity and position measurement as the path integrator based on the speed cell model. The place cell nodes are established by storing feature scene pictures in the system and associating them with corresponding accurate position information. When the carrier moves, the images captured by the camera are matched with the stored feature scene pictures. When the match is successful, the place cell node is triggered, and its corresponding accurate place information is fed back to the INS to correct the accumulated position error. At the same time, the INS adjusts the path integration mechanism to reduce the error of the path integrator.

- (2) The INS/vision brain-like navigation system is built on an STM32 microcontroller and a LattePanda (a single-board computer). The complete circuit board includes the main controller, the STM32, and two main functional components: the INS and the LattePanda-based camera. The main board comprises an analog-to-digital converter (ADC) chip, a Bluetooth module, several voltage regulating circuits, and other supporting parts. The STM32 and the LattePanda communicate through a serial port. The hardware system can process the information of the proposed brain-like navigation system in real time.
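The correction loop described in contribution (1) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the names `PlaceCell`, `PathIntegrator`, `match_score`, and `navigate_step` are hypothetical, a short numeric descriptor with cosine similarity stands in for real image matching, and the threshold value is arbitrary. The sketch only covers resetting the position when a place cell fires; it does not model how the INS adjusts its path integration mechanism.

```python
import math
from dataclasses import dataclass


@dataclass
class PlaceCell:
    """Hypothetical place cell node: a stored scene plus its accurate position."""
    descriptor: tuple  # stand-in for a stored feature scene picture
    position: tuple    # accurate (x, y) position in metres


def match_score(a, b):
    """Cosine similarity of two descriptors (a stand-in for image matching)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class PathIntegrator:
    """Dead-reckoning position estimate driven by measured velocity."""

    def __init__(self, position):
        self.position = position

    def step(self, velocity, dt):
        # Integrate velocity over one time step; any velocity error accumulates.
        x, y = self.position
        vx, vy = velocity
        self.position = (x + vx * dt, y + vy * dt)

    def correct(self, position):
        # Replace the drifting estimate with the place cell's stored position.
        self.position = position


def navigate_step(ins, velocity, dt, view, cells, threshold=0.95):
    """Advance the integrator, then correct it if a place cell is triggered."""
    ins.step(velocity, dt)
    best = max(cells, key=lambda c: match_score(view, c.descriptor), default=None)
    if best is not None and match_score(view, best.descriptor) >= threshold:
        ins.correct(best.position)
        return True
    return False


# Example: a biased velocity makes the estimate drift until a stored scene is seen.
cells = [PlaceCell(descriptor=(1.0, 0.0, 0.0), position=(10.0, 0.0))]
ins = PathIntegrator((0.0, 0.0))
for _ in range(10):
    navigate_step(ins, (1.1, 0.0), 1.0, (0.0, 1.0, 0.0), cells)  # no match
triggered = navigate_step(ins, (0.0, 0.0), 1.0, (1.0, 0.0, 0.0), cells)  # match
```

Between matches the estimate is pure dead reckoning, so its error grows with travel time; each triggered place cell bounds that error by the accuracy of the stored position.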

2. Brain-like Navigation Model

2.1. Speed Cells

2.2. Place Cells

2.3. INS/Vision Brain-like Navigation Model

3. Construction of the INS/Vision Brain-like Navigation Hardware System

3.1. Construction of the INS Hardware System

3.1.1. Analog Signal Collection

3.1.2. Serial Data Collection

3.2. The Construction of the Vision Navigation Hardware System

3.3. The Construction of the Brain-like Combined Hardware System

4. Experiment and Verification

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Method | Latitude mean (m) | Latitude RMSE (m) | Latitude SD (m) | Longitude mean (m) | Longitude RMSE (m) | Longitude SD (m) |
|---|---|---|---|---|---|---|
| Brain-like method | 16.0354 | 0.0594 | 11.6656 | 24.2908 | 0.0923 | 17.6252 |
| Image matching method | 18.9697 | 0.0653 | 10.7083 | 24.6879 | 0.0980 | 22.0117 |
| Pure INS | 49.4234 | 0.1555 | 15.7400 | 25.3895 | 0.1017 | 19.2724 |
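To make the comparison in the table easier to read, the short sketch below computes the relative reduction in mean position error of each method against pure INS. The mean values are copied from the table; the dictionary layout and the function name `reduction_percent` are illustrative choices, not part of the original work.

```python
# Mean position errors in metres, copied from the table: (latitude, longitude).
mean_error_m = {
    "Brain-like method": (16.0354, 24.2908),
    "Image matching method": (18.9697, 24.6879),
    "Pure INS": (49.4234, 25.3895),
}


def reduction_percent(method, baseline="Pure INS"):
    """Percentage reduction in mean error relative to the baseline, per axis."""
    return tuple(
        100.0 * (base - value) / base
        for value, base in zip(mean_error_m[method], mean_error_m[baseline])
    )


for name in ("Brain-like method", "Image matching method"):
    lat, lon = reduction_percent(name)
    print(f"{name}: latitude {lat:.1f}%, longitude {lon:.1f}%")
```

On these figures the reduction is large in latitude (about two thirds for the brain-like method) and small in longitude, where all three methods have similar mean errors.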

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, X.; Guo, X.; Zhao, D.; Shen, C.; Wang, C.; Li, J.; Tang, J.; Liu, J. INS/Vision Integrated Navigation System Based on a Navigation Cell Model of the Hippocampus. Appl. Sci. 2019, 9, 234. https://doi.org/10.3390/app9020234

Liu X, Guo X, Zhao D, Shen C, Wang C, Li J, Tang J, Liu J. INS/Vision Integrated Navigation System Based on a Navigation Cell Model of the Hippocampus. Applied Sciences. 2019; 9(2):234. https://doi.org/10.3390/app9020234

Chicago/Turabian StyleLiu, Xiaojie, Xiaoting Guo, Donghua Zhao, Chong Shen, Chenguang Wang, Jie Li, Jun Tang, and Jun Liu. 2019. "INS/Vision Integrated Navigation System Based on a Navigation Cell Model of the Hippocampus" Applied Sciences 9, no. 2: 234. https://doi.org/10.3390/app9020234

APA StyleLiu, X., Guo, X., Zhao, D., Shen, C., Wang, C., Li, J., Tang, J., & Liu, J. (2019). INS/Vision Integrated Navigation System Based on a Navigation Cell Model of the Hippocampus. Applied Sciences, 9(2), 234. https://doi.org/10.3390/app9020234