Optimization of River and Lake Monitoring Programs Using a Participative Approach and an Intelligent Decision-Support System

Abstract

1. Introduction

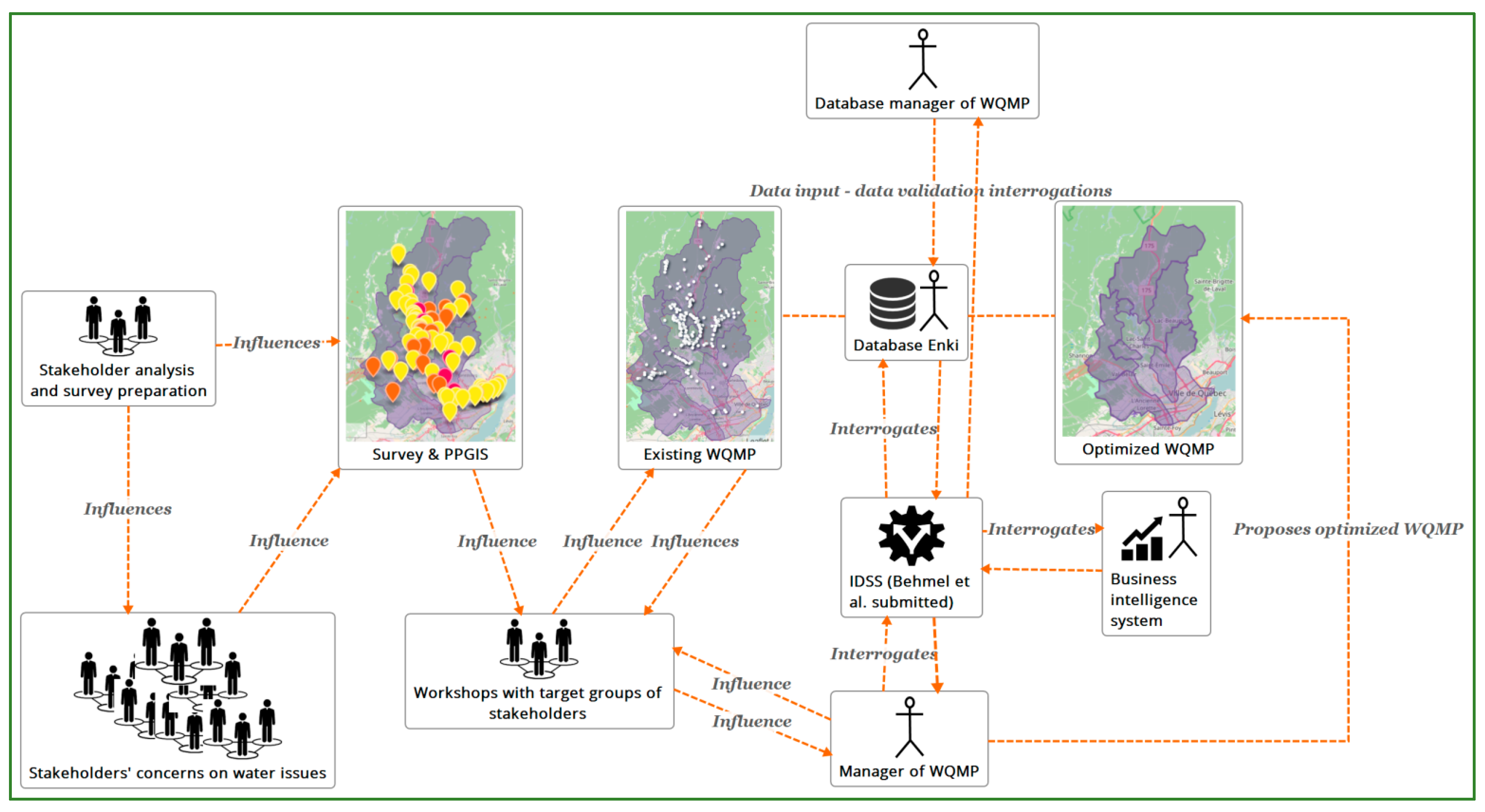

2. Methodology

In this paper, we present the test of the IDSS and show how it:

- Contributes to understanding the initial WQMPs (Method Section 2.1),

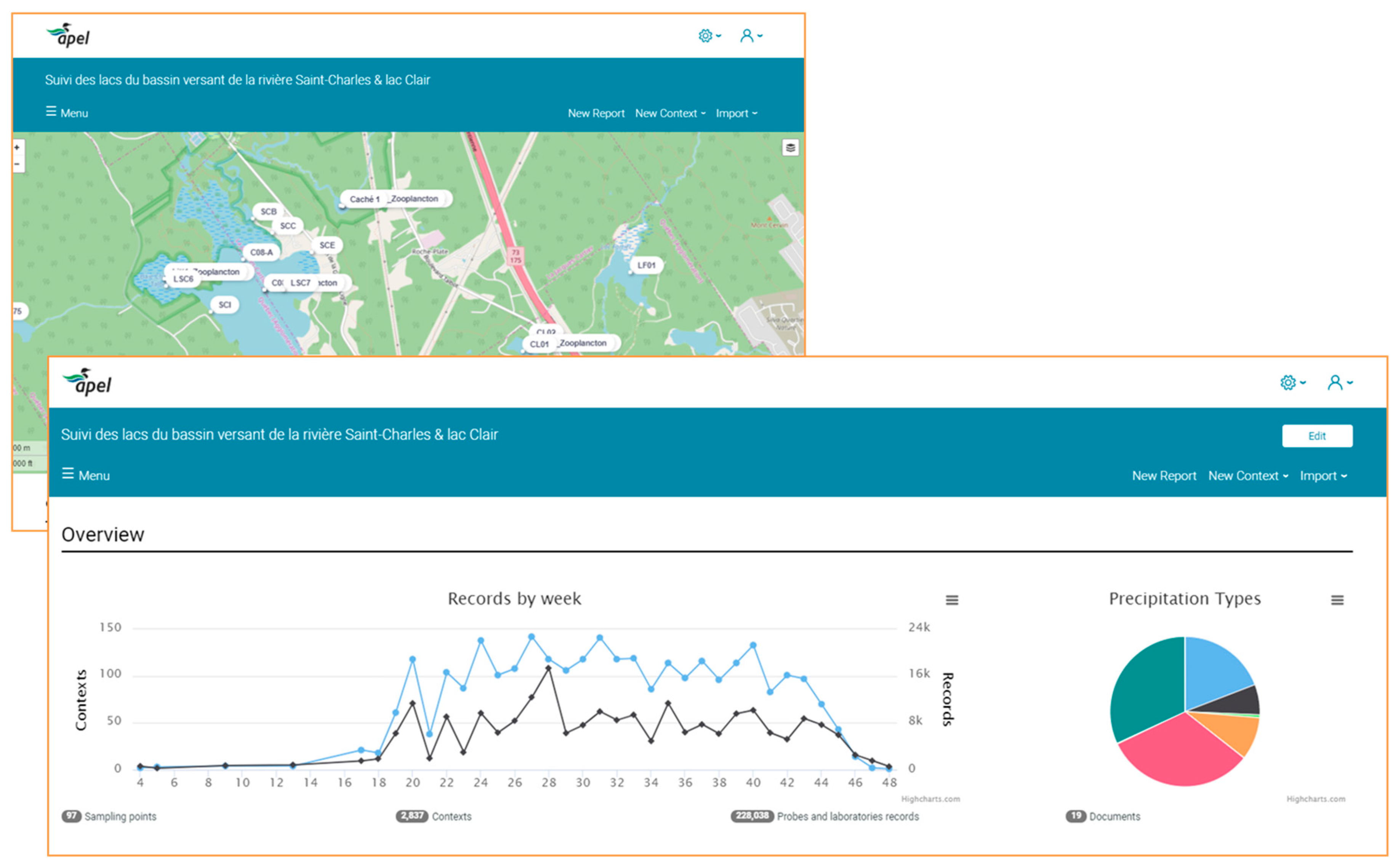

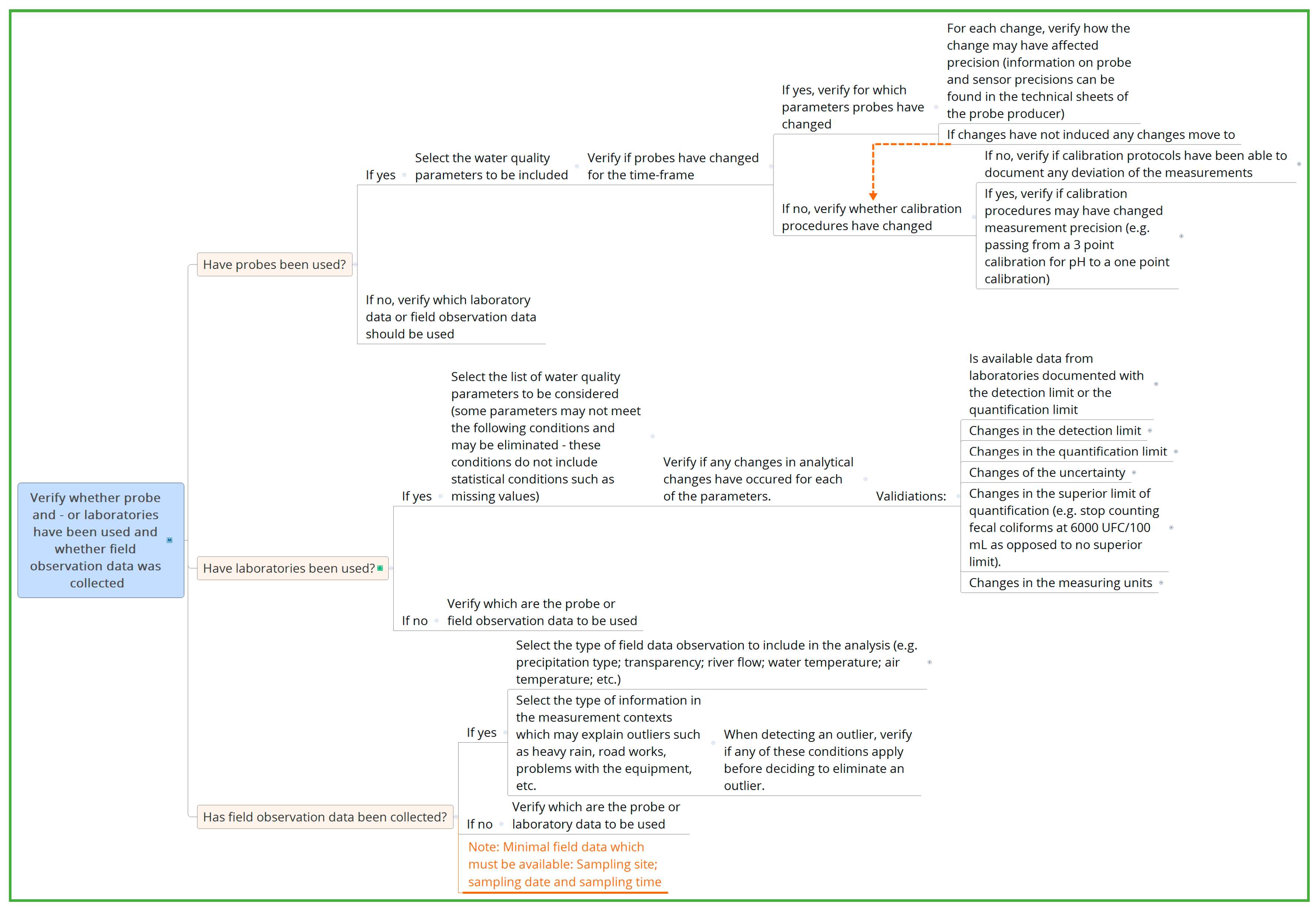

- Supplies decision support in data validation, data quality assessment and storage (Method Section 2.2),

- Proposes optimization procedures (Method Section 2.3),

- Contributes to the application of the optimization procedures (Method Section 2.4),

- Provides decision support to finalize the optimization procedure (Method Section 2.5),

- Is instrumental in the redesign of the WQMPs (Results and discussion Section 3).

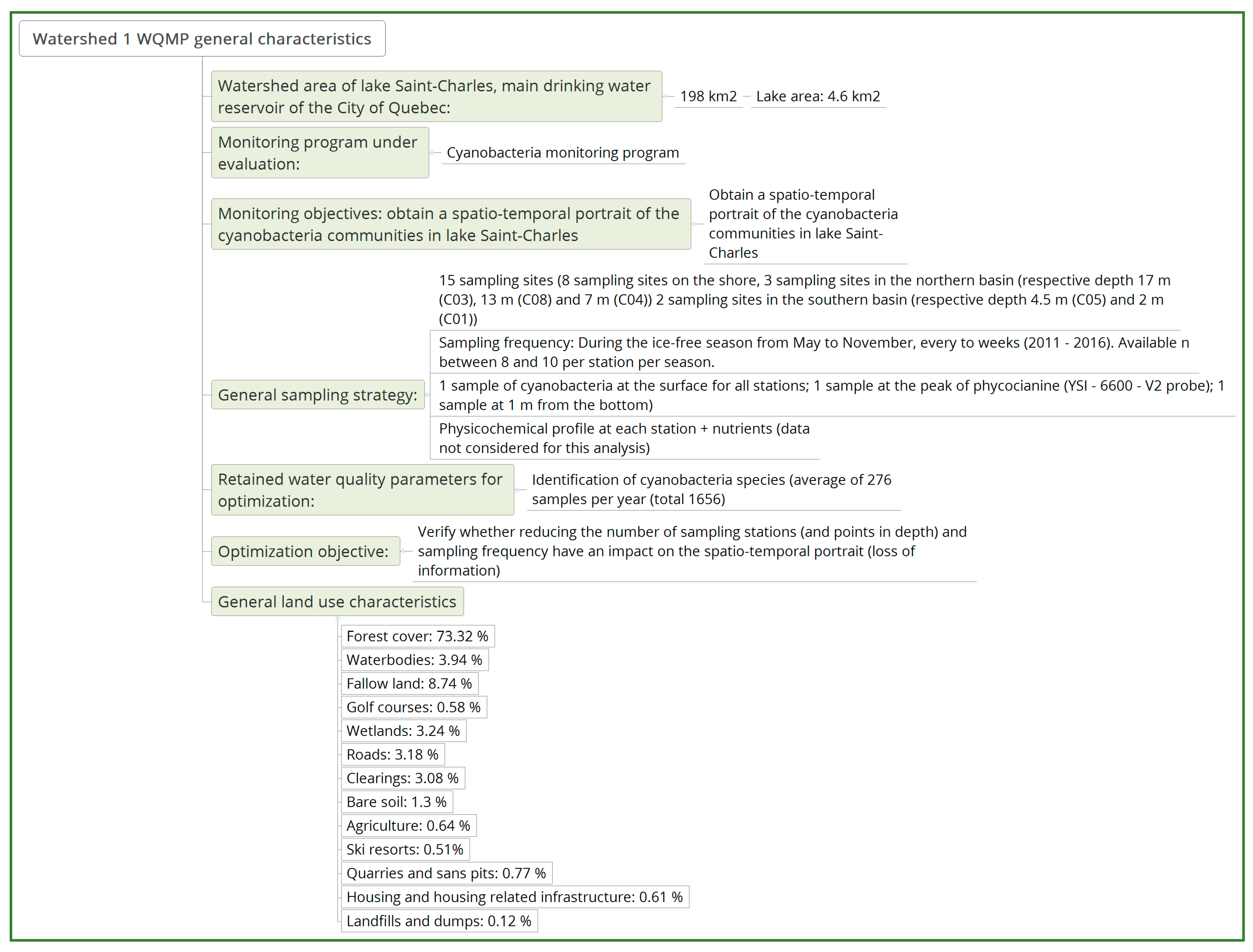

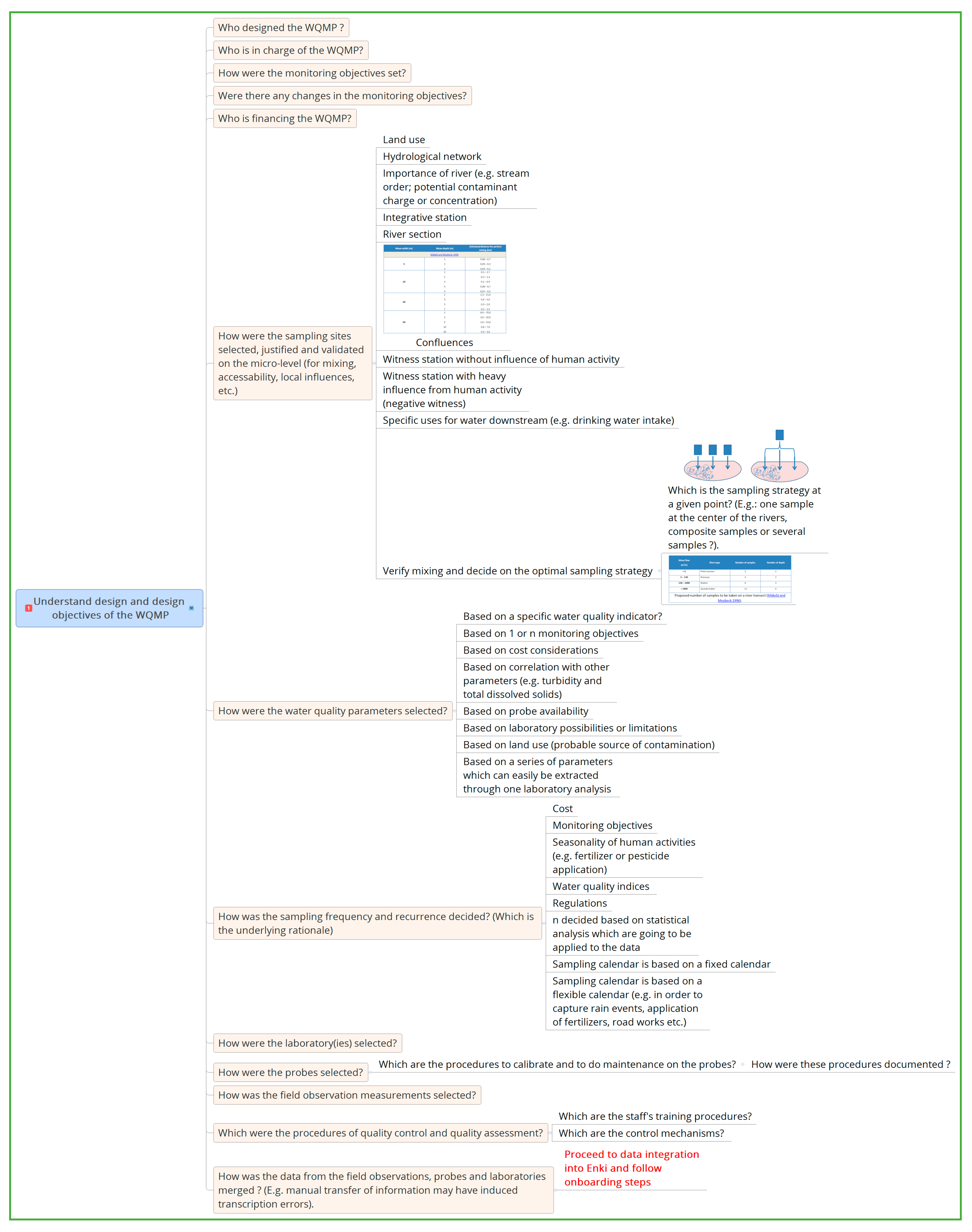

2.1. Understanding the Initial WQMPs and Data Validation and Integration

2.2. Selection of Optimization Methods

2.3. Application of Optimization Procedures

2.4. Decision Support During the Optimization Procedures

2.5. Decision Support to Complete the Optimization Procedure

- IF more than one station is visited in a lake, THEN verify the justification for selecting each site in order to understand its rationale (e.g., deepest sector of the lake; close to a major inflow; a section of the lake with recurrent cyanobacteria blooms) [31].

- For each station, verify the sampling strategy employed, since these decisions can greatly affect the results: the profile resolution (probe data every 0.5 m, 1 m, etc.), the sampling depth for laboratory parameters (chlorophyll a, total phosphorus, nitrogen compounds, cyanobacteria, etc.), and the sampling method (e.g., horizontal bottle at a given depth, tube sampler for an integrative sample of the first n meters).

- Validate whether the sampling strategy responds to the objectives and whether adding new parameters is in line with the existing sampling strategy and objectives.

- Verify whether a river WQMP is in place in order to observe changes in the watershed which may translate to the lake.

- Based on the results of the analysis for each station, which suggest retention, removal, or no specific action, verify the following: IF a sampling site is suggested for removal, THEN verify the type of sampling site and the sampling site justification.

- IF the sampling site is an integrative station, THEN keep the sampling station.

  - An integrative station is located downstream from a subwatershed and represents the subwatershed as a whole.

- IF the sampling site is a section of a river, THEN verify whether a particular goal is pursued and justified for the station.

  - A section station represents the water quality between two sampling sites; it should be selected according to specific goals and pollution sources, and must be justified.

- IF the sampling site justification is a witness station, THEN verify whether the sampling site still qualifies.

  - A witness station is supposed to represent the natural water quality of a subwatershed. If it is not possible to have a witness station for every subwatershed, there should be one for every sector representing the geology of the sectors of the WQMP. It is therefore necessary to verify whether sufficient witness stations are available to be representative of every type of natural background in the territory covered by the WQMP.

- IF a specific goal is pursued, THEN verify whether (1) the station is still representative, (2) upstream and downstream stations contribute to achieving this goal (adding stations if necessary), and (3) the station is still representative at the micro-level (mixing, accessibility, new local influences) and whether water quality can be altered locally (improved, e.g., by a waterfall, or degraded, e.g., by a sewer overflow).

- Verify whether the water quality parameters measured at the station contribute to attaining the goal (e.g., evaluating the influence of agricultural land use should include monitoring nitrogen-based compounds).

- Verify whether sufficient data were taken during wet weather conditions and, if not, make the sampling calendar more flexible.

- Verify whether new (additional) goals can be pursued at existing stations.

- Verify whether the sampling site justification is consistent with the station’s location and whether another station must be implemented to respond to the justification (triangle strategy): e.g., to find out whether the inflow of a river influences a station, there must be information available on that river, and there must be a station upstream from the river inflow.

- IF the sampling site justification is: upstream from a wastewater treatment plant (municipal or industrial), THEN there should be a station downstream from the treatment plant.
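The river-station rules above can be sketched as a small rule function. This is only an illustration of the IF/THEN structure, not the IDSS implementation: the `Station` record, its field names, and the category labels (`"integrative"`, `"section"`, `"witness"`, `"upstream_wwtp"`) are assumptions introduced here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Station:
    """Hypothetical station record; all field names are assumptions."""
    name: str
    suggestion: str                       # "retain", "remove" or "none" (result of the analysis)
    justification: str                    # "integrative", "section", "witness" or "upstream_wwtp"
    goal_documented: bool = False         # is a particular goal pursued and justified?
    has_downstream_station: bool = False  # is there a station downstream from the plant?


def review_station(station: Station) -> List[str]:
    """Return the verification tasks that the rules raise for one river station."""
    tasks: List[str] = []
    if station.suggestion == "remove":
        if station.justification == "integrative":
            # Integrative stations are kept even when removal is suggested.
            tasks.append("keep station: integrative station of a subwatershed")
        elif station.justification == "section" and not station.goal_documented:
            tasks.append("verify whether a particular goal is pursued and justified")
        elif station.justification == "witness":
            tasks.append("verify whether the site still qualifies as a witness station")
    if station.justification == "upstream_wwtp" and not station.has_downstream_station:
        tasks.append("add a station downstream from the treatment plant")
    return tasks
```

Returning a list of tasks rather than a single verdict reflects that the rules ask for verifications; the final decision stays with the program manager.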

3. Results and Discussion

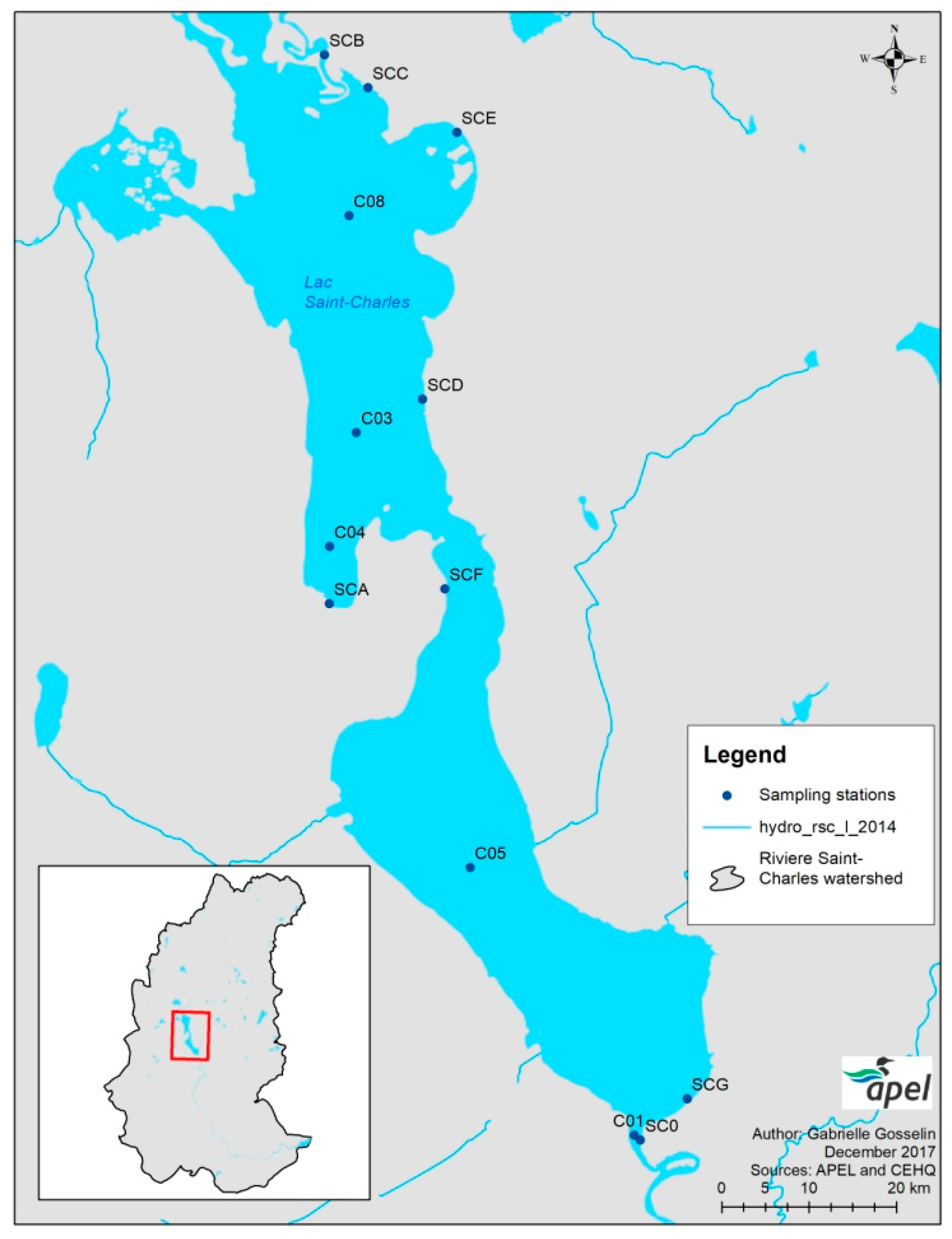

3.1. Optimization of the W1 WQMP – Results and Discussion

- Ensure that changes in the water quality of the tributaries (in particular road salts) and the ensuing impact on Lac Saint-Charles are detected in order to reinstate the present cyanobacteria program and to observe changes which they may announce.

- Verify that other objectives pursued within this WQMP are not compromised by these decisions.

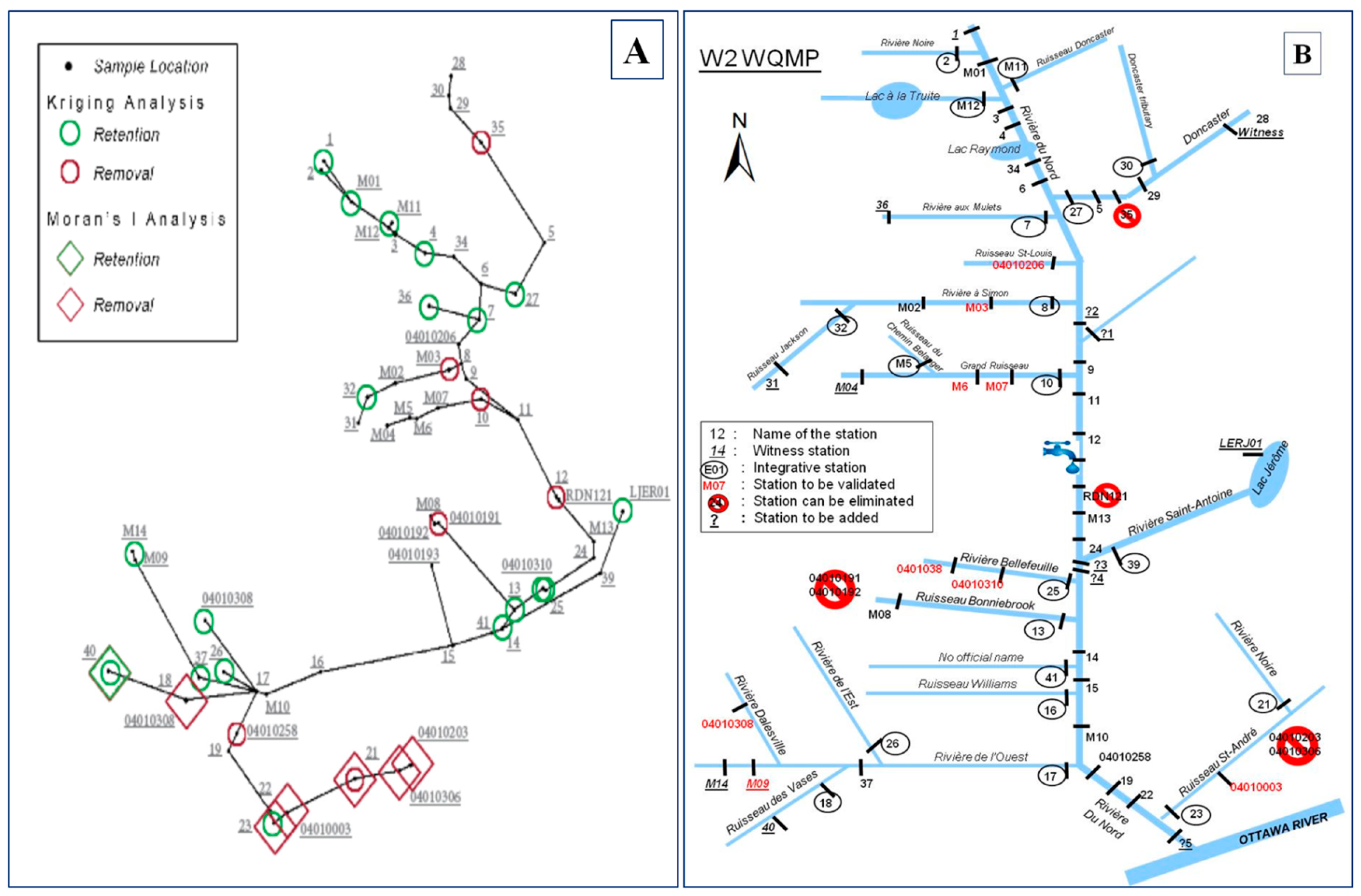

3.2. Optimization of the W2 WQMP – Results and Discussion

- Maintain the global WQMP of W2 according to the recommendations presented in Figure 10B,

- Collect samples in 50% of rain events (modality 3),

- Obtain at least 10 samples for each of these stations per year,

- Respond to the actionable tasks in Table 2, with the priority of adding an integrative (and affordable) parameter such as specific conductivity to respond to the knowledge needs on road salts and other contaminations and identify other parameters that can be financed (the WQMP can still be pursued while these tasks are completed),

- Consider adding rotating WQMPs for the subwatersheds (more stations, more water quality parameters and a higher sampling frequency).
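A minimal sketch of how the "at least 10 samples per station per year" recommendation could be checked against a sampling record. The tuple layout and function name are assumptions. The share of samples taken under rain modality 3 is only reported, with no threshold applied, because the way the 50% rain-event target is computed is not specified here.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# One sample: (station id, year, rain event modality coded 0-4). Layout is an assumption.
Sample = Tuple[str, int, int]


def yearly_sampling_summary(
    samples: Iterable[Sample], min_samples: int = 10
) -> Dict[Tuple[str, int], dict]:
    """Summarize sample counts per (station, year) and flag years below the minimum."""
    counts: Dict[Tuple[str, int], int] = defaultdict(int)
    modality3: Dict[Tuple[str, int], int] = defaultdict(int)
    for station, year, modality in samples:
        key = (station, year)
        counts[key] += 1
        if modality == 3:
            modality3[key] += 1
    return {
        key: {
            "n_samples": n,
            "meets_minimum": n >= min_samples,
            # Share of this station-year's samples taken under modality 3.
            "share_modality_3": modality3[key] / n,
        }
        for key, n in counts.items()
    }
```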

4. Conclusions

- Understanding the initial WQMPs

  - The questions asked by the IDSS are crucial to understanding the rationale of the WQMPs. Having a firm set of questions and documented answers contributes to transferring the information on the WQMP within an organization and communicating the information to the public, partners, and decision-makers.

- Decision support in the data validation and integration process (quality assessment and storage)

  - The optimization of a WQMP is based on the data of the existing WQMP and the underlying decisions which led to the WQMP, as well as considerations such as possible changes that have occurred over time in the WQMP. All this information needs to be documented with the appropriate metadata for convenient retrieval when optimizing a WQMP.

  - The integration process of all the existing data of the case studies into the database Enki™ connected to the IDSS was key to understanding, documenting and relating information on sampling sites, sampling contexts, measured parameters, and geographical information. The integration process also showed where changes in the WQMP were made, and why. In several cases, these changes can affect the continuity of the available data series and the conclusions to be drawn from the data sets. However, it was very difficult to retrieve the reasons for these changes, since the staff in charge were not always available. Therefore, there is a need for a system that supports and documents these decisions.

- Selecting optimization procedures proposed in the literature

  - The IDSS suggests optimization procedures (methods) from the literature that correspond to specific optimization objectives, and it provides support in applying them.

- Contribution to applying the optimization procedures

  - In the course of the application procedures, specific questions arise concerning the data sets. The IDSS connected to the database is able to provide a quick answer to these questions, such as variation in laboratories and data precision, changes in field protocols, and changes in data series for a specific sampling site.

- Decision support (replacing experts) to finalize the optimization procedure

  - This step provides crucial additional decision support. Indeed, in all optimization methods proposed in the literature, expert input is necessary to make final decisions regarding sampling. The IDSS was designed for this task and can be used whether or not a statistical optimization method has been applied. However, it was shown that the results of an optimization method provide a very good starting point for these questions and support the justification of the choices made.

- Data-driven decision support to redesign WQMPs

  - We were able to show that the database Enki™, the onboarding process and the decision support provided by the IDSS were instrumental in redesigning the WQMPs of our two case studies. A solid data and metadata management system is crucial to the IDSS, and the two cannot be separated without losing efficiency and accuracy in the process.
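The need to document why a WQMP was changed can be illustrated with a small change-log structure. This is a hypothetical schema for illustration only; it does not describe how Enki™ or the IDSS actually store metadata, and all class and field names are assumptions.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class ProgramChange:
    """One documented change to a WQMP (hypothetical schema)."""
    changed_on: date
    station: str
    what: str        # e.g. "laboratory changed", "field protocol changed"
    why: str         # the rationale, recorded when the decision is made
    decided_by: str


@dataclass
class ChangeLog:
    """Collection of documented changes, queryable per station."""
    entries: List[ProgramChange] = field(default_factory=list)

    def record(self, change: ProgramChange) -> None:
        self.entries.append(change)

    def for_station(self, station: str) -> List[ProgramChange]:
        """Return the changes affecting one station, oldest first."""
        return sorted(
            (c for c in self.entries if c.station == station),
            key=lambda c: c.changed_on,
        )
```

Recording the rationale alongside the change means a break in a data series can later be explained even if the staff who made the decision are no longer available.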

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| Abrinord | Organisme de bassin versant de la rivière du Nord |
| APEL | Association pour la protection de l’environnement du lac Saint-Charles et des Marais du Nord |
| FC | Fecal coliforms |
| IDSS | Intelligent decision support system |
| IWM | Integrated watershed management |
| TSS | Total suspended solids |
| TP | Total phosphorus |
| PPGIS | Public participation geographical information system |
| W1 | Watershed 1 = Watershed of the Saint-Charles river |
| W2 | Watershed 2 = Watershed of the rivière du Nord |
| WQMP | Water quality monitoring program |

Appendix A

- Use of the methods of Beveridge et al. [13] to optimize the W2 WQMP: non-metric multi-dimensional scaling (NMDS), principal component analysis (PCA), Kriging, Moran’s Index and leave-one-out cross-validation.
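Of the methods listed above, Moran’s Index is the simplest to illustrate. The sketch below computes the global Moran’s I for values measured at stations, given a spatial weight matrix. The choice of weights (for example, binary adjacency or inverse distance) is an assumption left to the caller; how the weights were defined for W2 is not stated here.

```python
from typing import Sequence


def morans_i(values: Sequence[float], weights: Sequence[Sequence[float]]) -> float:
    """Global Moran's I for one variable.

    values  -- one observation per station
    weights -- square matrix; weights[i][j] is the spatial weight between
               stations i and j (the diagonal is ignored)
    Assumes the values are not all identical and the weights are not all zero.
    """
    n = len(values)
    mean = sum(values) / n
    dev = [v - mean for v in values]
    pairs = [(i, j) for i in range(n) for j in range(n) if i != j]
    total_weight = sum(weights[i][j] for i, j in pairs)
    # Weighted cross-products of deviations between pairs of stations.
    cross = sum(weights[i][j] * dev[i] * dev[j] for i, j in pairs)
    squared_dev = sum(d * d for d in dev)
    return (n / total_weight) * (cross / squared_dev)
```

Values of I above its expectation under no spatial autocorrelation, -1/(n-1), indicate that neighbouring stations tend to carry similar information.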

References

- Gerlak, A.; Lautze, J.; Giordano, M. Water resources data and information exchange in transboundary water treaties. Int. Environ. Agreem. Politics Law Econ. 2011, 11, 179–199.

- Timmerman, J. The need for participatory processes and its implications for water management information. Reg. Environ. Chang. 2005, 5, 162–163.

- Timmerman, J.; Langaas, S. Water information: what is it good for? The use of information in transboundary water management. Reg. Environ. Chang. 2005, 5, 177–187.

- Fölster, J.; Johnson, R.; Futter, M.; Wilander, A. The Swedish monitoring of surface waters: 50 years of adaptive monitoring. AMBIO 2014, 43, 3–18.

- Government of Western Australia. Water Quality Program Design: A Guideline to the Development of Surface Water Quality Programs; Department of Water, Government of Western Australia: Perth, Western Australia, 2009; p. 35.

- Strobl, R.O.; Robillard, P.D. Network design for water quality monitoring of surface freshwaters: A review. J. Environ. Manag. 2008, 87, 639–648.

- Ward, R.C.; Loftis, J.C.; McBride, G.B. Design of Water Quality Monitoring Systems; Van Nostrand Reinhold: New York, NY, USA, 1990.

- Behmel, S.; Damour, M.; Ludwig, R.; Rodriguez, M.J. Water quality monitoring strategies—A review and future perspectives. Sci. Total Environ. 2016, 571, 1312–1329.

- Harmancioglu, N.B.; Fistikoglu, O.; Ozkul, S.D.; Singh, V.; Alpaslan, M.N. Water Quality Monitoring Network Design; Kluwer Academic Publisher: Dordrecht, The Netherlands, 1999; p. 290.

- Clark, J.M.; Schaeffer, B.A.; Darling, J.A.; Urquhart, E.A.; Johnston, J.M.; Ignatius, A.R.; Myer, M.H.; Loftin, K.A.; Werdell, P.J.; Stumpf, R.P. Satellite monitoring of cyanobacterial harmful algal bloom frequency in recreational waters and drinking water sources. Ecol. Indic. 2017, 80, 84–95.

- Tennakoon, S.B.; Ramsay, I.; Marsh, N.; Connor, R.O. The Integrated Monitoring and Assessment System (IMAS): A Decision Support System for Water Quality Monitoring and Assessment Programs. In Proceedings of the 19th International Congress on Modelling and Simulation, Perth, Australia, 12–16 December 2011; pp. 3532–3538.

- Behmel, S. Proposition d’un Programme de Suivi de la Qualité de l’eau à L’échelle du Bassin Versant de la Rivière Saint-Charles. 2010. Available online: http://www.apel-maraisdunord.org/apel/assets/Simon-Magnan-Essai-Maitrise.pdf (accessed on 28 September 2019).

- Beveridge, D.; St-Hilaire, A.; Ouarda, T.B.M.J.; Khalil, B.; Conly, F.M.; Wassenaar, L.I.; Ritson-Bennett, E. A geostatistical approach to optimize water quality monitoring networks in large lakes: Application to Lake Winnipeg. J. Great Lakes Res. 2012, 38, 174–182.

- Wilkinson, J.; Souter, N.; Fairweather, P. Best Practice Framework for the Monitoring and Evaluation of Water-Dependent Ecosystems 1: Framework; Department of Water, Land and Biodiversity Conservation: Adelaide, Australia, 2007.

- Water Quality Monitoring: A Practical Guide to the Design and Implementation of Freshwater Quality Studies and Monitoring Programmes; Bartram, J., Ballance, R., Eds.; Taylor and Francis: London, UK; New York, NY, USA, 1996.

- Behmel, S.; Damour, M.; Ludwig, R.; Rodriguez, M. Participative approach to elicit water quality monitoring needs from stakeholder groups—An application of integrated watershed management. J. Environ. Manag. 2018, 15, 540–554.

- Khalil, B.; Ouarda, T.B.M.J.; St-Hilaire, A.; Chebana, F. A statistical approach for the rationalization of water quality indicators in surface water quality monitoring networks. J. Hydrol. 2010, 386, 173–185.

- Ouyang, Y. Evaluation of river water quality monitoring stations by principal component analysis. Water Res. 2005, 39, 2621–2635.

- Levine, C.R.; Yanai, R.D.; Lampman, G.G.; Burns, D.A.; Driscoll, C.T.; Lawrence, G.B.; Lynch, J.A.; Schoch, N. Evaluating the efficiency of environmental monitoring programs. Ecol. Indic. 2014, 39, 94–101.

- Olsen, R.L.; Chappell, R.W.; Loftis, J.C. Water quality sample collection, data treatment and results presentation for principal components analysis—Literature review and Illinois River watershed case study. Water Res. 2012, 46, 3110–3122.

- APEL. Diagnose du lac Saint-Charles 2012—Rapport Final; Association Pour la Protection de L’environnement du lac Saint-Charles et des Marais du Nord: Québec, QC, Canada, 2014; p. 519.

- Abrinord. Diagnostic de la Zone de Gestion Intégrée de l’eau d’Abrinord, Version Préliminaire; Organisme de Bassin Versant de la Rivière du Nord: Saint-Jerôme, QC, Canada, 2012; p. 139.

- USEPA. Guidelines for Preparation of the Comprehensive State Water Quality Assessments (305b Reports) and Electronic Updates; USEPA: Washington, DC, USA, 2001.

- Legendre, P.; Gallagher, E. Ecologically meaningful transformations for ordination of species data. Oecologia 2001, 129, 271–280.

- Kruskal, W.H.; Wallis, W.A. Use of Ranks in One-Criterion Variance Analysis. J. Am. Stat. Assoc. 1952, 47, 583–621.

- APEL. Suivi des Rivières du Bassin Versant de la Rivière Saint-Charles—Campagne 2013; Association Pour la Protection de L’environnement du lac Saint-Charles et des Marais du Nord: Québec, QC, Canada, 2014; p. 150.

- ALCOSAN. ALCOSAN Wet Weather Plan—Receiving Waters Characterization; Allegheny County Sanitary Authority: Pittsburgh, PA, USA, 2012; p. 20.

- Stantec Consulting Ltd.; Aquafor Beech Limited. Dry and Wet Weather Modelling of Water Quality Under Alternative Land Use Scenarios in the Duffins and Carruthers Creek Watersheds: A Simple Spreadsheet Approach; The Toronto and Region Conservation Authority: Toronto, ON, Canada, 2003; p. 28.

- Pinto, U.; Maheshwari, B. River health assessment in peri-urban landscapes: An application of multivariate analysis to identify the key variables. Water Res. 2011, 45, 3915–3924.

- Khalil, B.; Ouarda, T.B.M.J. Statistical approaches used to assess and redesign surface water-quality-monitoring networks. J. Environ. Monit. 2009, 11, 1915–1929.

- Thomas, R.; Meybeck, M.; Beim, A. Lakes. In Water Quality Assessments—A Guide to the Use of Biota, Sediments and Water in Environmental Monitoring, 2nd ed.; Chapman, D., Ed.; E & FN Spon: London, UK, 1996.

- Josse, J.; Chavent, M.; Liquet, B.; Husson, F. Handling Missing Values with Regularized Iterative Multiple Correspondence Analysis. J. Classif. 2012, 29, 91–116.

- Josse, J.; Husson, F. missMDA: A Package for Handling Missing Values in Multivariate Data Analysis. J. Stat. Softw. 2016, 70.

- Hébert, S. Développement d’un Indice de la Qualité Bactériologique et Physico-Chimique de l’eau pour les Rivières du Québec, Québec; Ministère de l’Environnement et de la Faune: Québec, QC, Canada, 1997; Volume envirodoq no EN/970102, pp. 20–24.

| Actionable Tasks | Verifications that Must be Made Prior to the Final Decision |
|---|---|
| Verify whether changes in physicochemical parameters and other observations made in the river WQMP of this watershed can have an effect on the structure of the cyanobacteria community of Lake Saint-Charles | |
| Verify sampling strategy | |
| Verify which station can be retrieved between C01 and SC0 | |
| Verify whether changes in the cyanobacteria monitoring affect other monitoring objectives | |

| Actionable Tasks | Stations Submitted to the Tasks (IDSS would Lead to a Specific Series of Options to Comply with These Tasks) |
|---|---|
| Verify whether sufficient data are available during rain events | All stations |
| Verify whether sampling frequency was sufficient and whether a different type of sampling frequency should be adopted | Ideally all stations; however, because the analysis is time-consuming, the following stations are suggested as representative of different sections of W2: 1 – Witness station of the Rivière du Nord watershed. 12 – Station representing water quality upstream from the drinking water treatment plant of Saint-Jerôme. 14 – Station with the longest history. 22 – Station the furthest downstream on Rivière du Nord (close to an integrative station of the entire watershed). 23 – Integrative station of a watershed with mostly agricultural activities (representative of the downstream portion of W2). 17 – Integrative station of a watershed with mostly recreational activities (representative of the upstream portion of W2). |
| Verify mixing and representativity | |
| Verify whether additional water quality parameters should be taken according to the sampling site justification and new monitoring objectives | |
| Verify whether additional stations should be added (or removed) (other than those presented in Figure 10B) | |

| Water Quality Parameter | Rain Event Modalities | Number (n) of Samples for Each Modality | Conclusions of the Kruskal–Wallis Test |
|---|---|---|---|
| Fecal coliform | 0 - no rain for 48 h | 915 | Differences were detected between all rain event modalities, except between modalities 0 and 1 and between modalities 2 and 4. |
| | 1 - rainfall the same day | 199 | |
| | 2 - rainfall 0–24 h prior to sampling | 429 | |
| | 3 - rainfall 24–48 h prior to sampling | 126 | |
| | 4 - rainfall 0–48 h prior to sampling | 295 | |
| Total phosphorus | 0 - no rain for 48 h | 918 | Differences were detected between all rain event modalities, except between modalities 0 and 1, 1 and 4, and 2 and 4. |
| | 1 - rainfall the same day | 204 | |
| | 2 - rainfall 0–24 h prior to sampling | 421 | |
| | 3 - rainfall 24–48 h prior to sampling | 122 | |
| | 4 - rainfall 0–48 h prior to sampling | 301 | |
| Total suspended solids | 0 - no rain for 48 h | 840 | Differences were detected between all rain event modalities, except between modalities 0 and 1 and between modalities 2 and 4. |
| | 1 - rainfall the same day | 188 | |
| | 2 - rainfall 0–24 h prior to sampling | 426 | |
| | 3 - rainfall 24–48 h prior to sampling | 129 | |
| | 4 - rainfall 0–48 h prior to sampling | 284 | |
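For readers unfamiliar with the Kruskal–Wallis test [Kruskal and Wallis, 1952] used to compare rain event modalities, the sketch below computes its H statistic from groups of measurements, one group per modality. It is a minimal illustration under stated assumptions: average ranks are used for ties and the standard tie correction is applied, but whether the original analysis applied that correction is not stated. The p-value (from a chi-square distribution with k − 1 degrees of freedom) and the pairwise comparisons behind the conclusions in the table are not covered.

```python
from typing import Sequence


def kruskal_wallis_h(groups: Sequence[Sequence[float]]) -> float:
    """Kruskal-Wallis H statistic with average ranks and tie correction.

    groups -- one sequence of measurements per group (e.g. per rain modality).
    Assumes every group is non-empty and the pooled values are not all equal.
    """
    # Pool all values, remembering which group each value came from.
    pooled = sorted((value, g) for g, group in enumerate(groups) for value in group)
    n_total = len(pooled)
    rank_sums = [0.0] * len(groups)
    tie_term = 0.0
    i = 0
    while i < n_total:
        j = i
        while j < n_total and pooled[j][0] == pooled[i][0]:
            j += 1
        # Positions i..j-1 hold tied values; they share the average of ranks i+1..j.
        avg_rank = (i + 1 + j) / 2.0
        for k in range(i, j):
            rank_sums[pooled[k][1]] += avg_rank
        t = j - i
        tie_term += t ** 3 - t
        i = j
    h = 12.0 / (n_total * (n_total + 1)) * sum(
        r * r / len(g) for r, g in zip(rank_sums, groups)
    ) - 3.0 * (n_total + 1)
    correction = 1.0 - tie_term / (n_total ** 3 - n_total)
    return h / correction
```

A large H relative to the chi-square reference indicates that at least one group differs in distribution; identifying which modalities differ requires a separate post hoc procedure.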

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Behmel, S.; Damour, M.; Ludwig, R.; Rodriguez, M. Optimization of River and Lake Monitoring Programs Using a Participative Approach and an Intelligent Decision-Support System. Appl. Sci. 2019, 9, 4157. https://doi.org/10.3390/app9194157
