FSRFNet: Feature-selective and Spatial Receptive Fields Networks

Abstract

Featured Application

1. Introduction

2. Related Work

2.1. Deeper Architectures

2.2. Attention Mechanisms

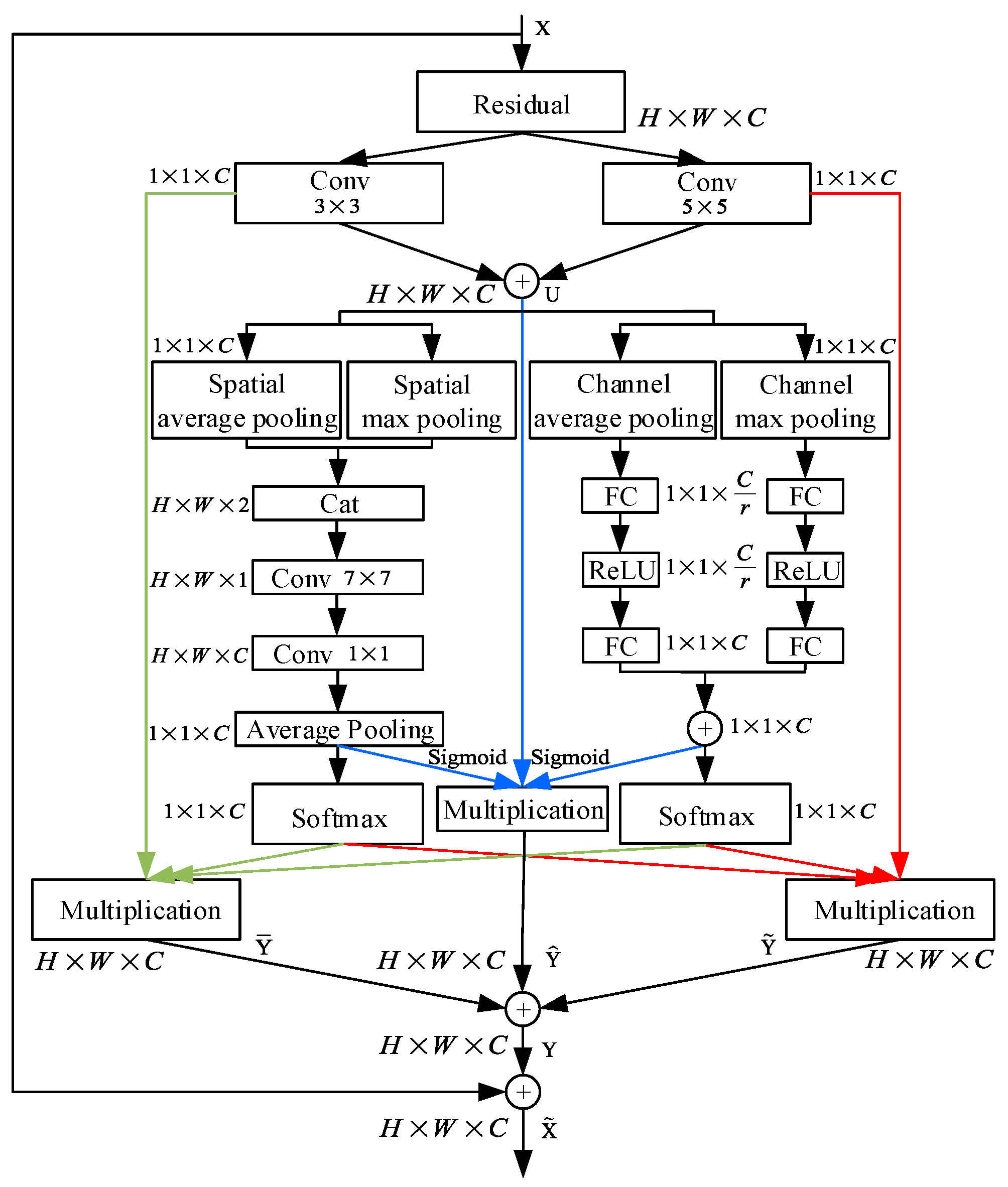

3. Feature-selective and Spatial Receptive Fields (FSRF) Blocks

3.1. Multi-Branch Convolution

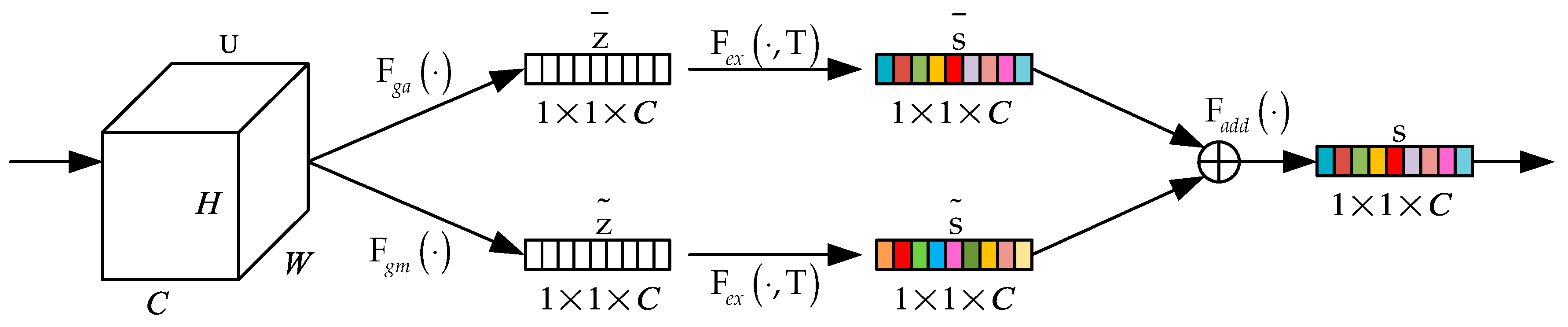

3.2. Fuse

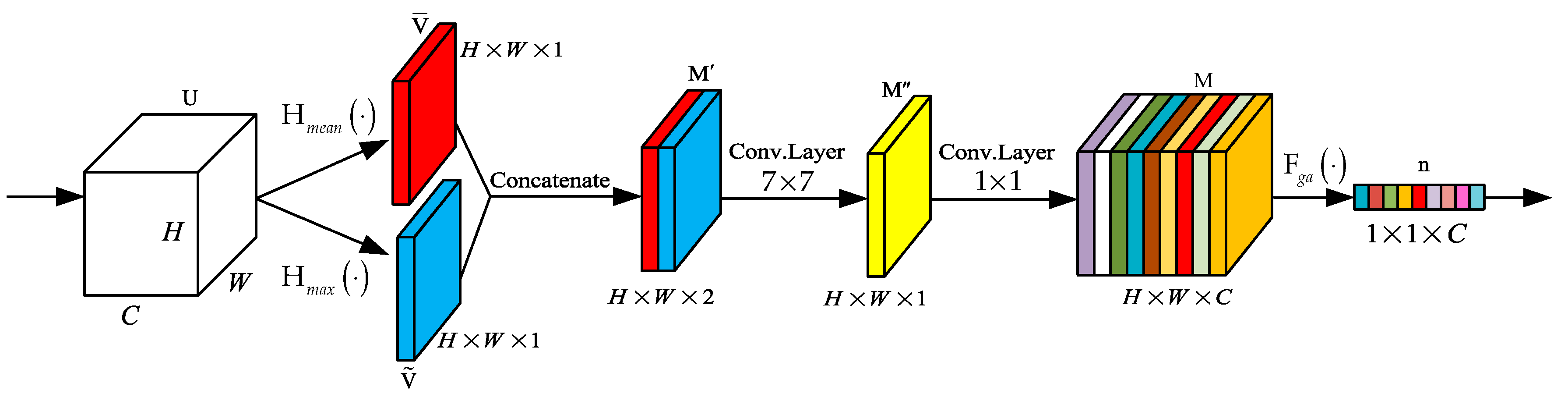

3.3. Interactions between Feature-selective and Spatial Attention

3.4. Instantiation

4. Network Architecture

5. Experiments

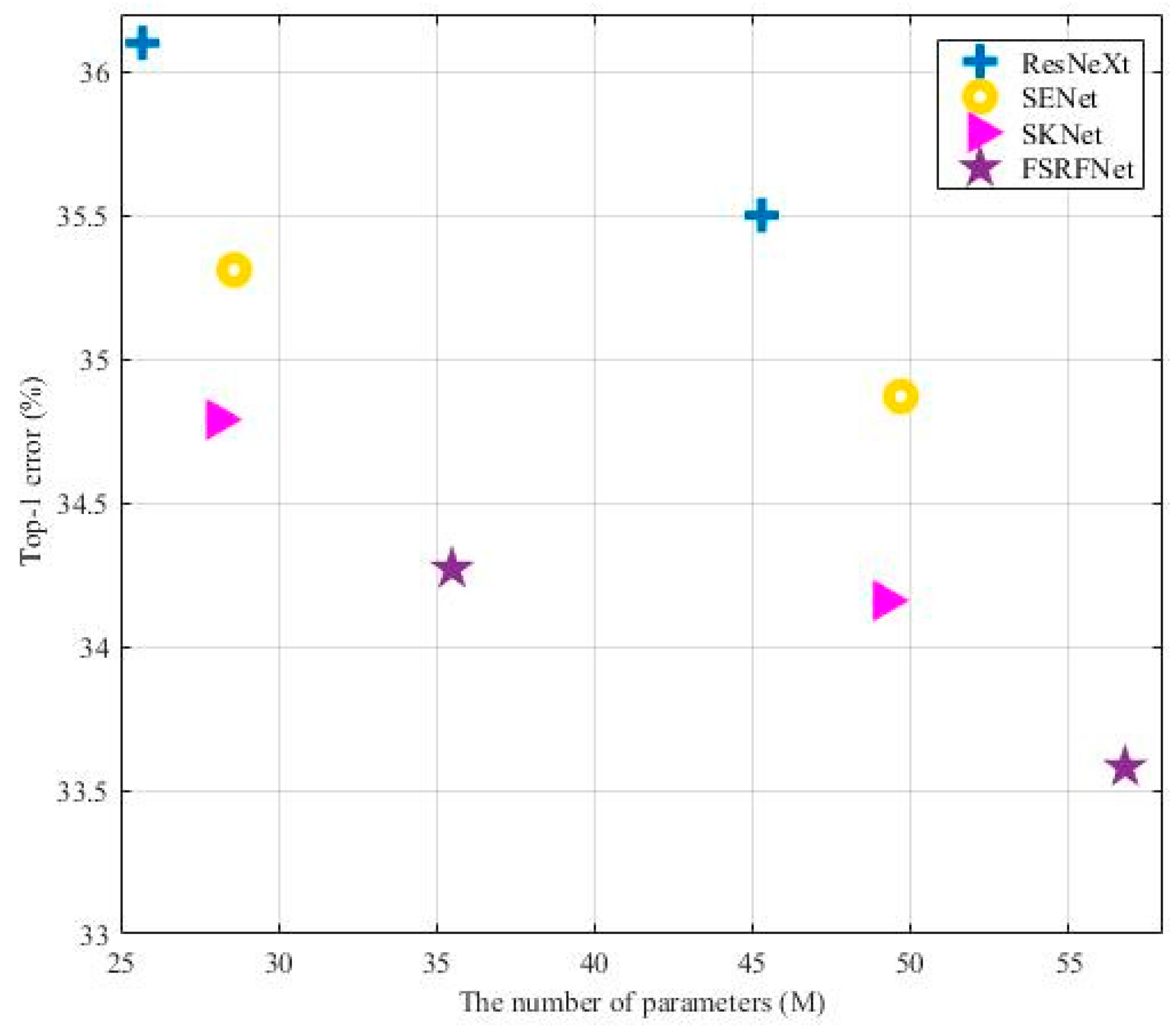

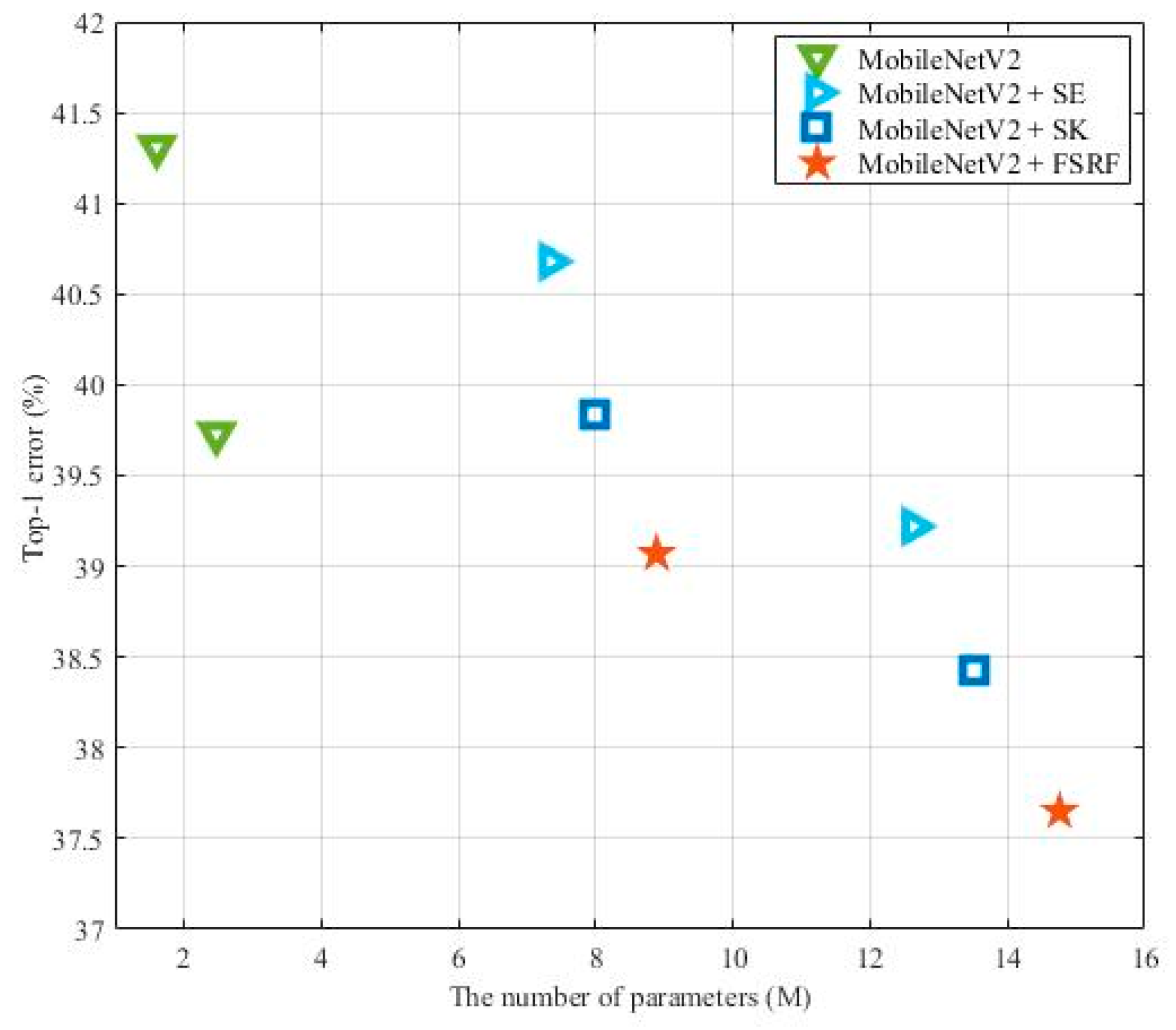

5.1. Tiny ImageNet Classification

5.2. CIFAR Classification

5.3. Visualization with Grad-CAM

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Output | ResNeXt-50 (32×4d) | SENet-50 | SKNet-50 | FSRFNet-50 |
|---|---|---|---|---|

| Models | #P | GFLOPs | Top-1 Err. (%) |
|---|---|---|---|
| ResNeXt-50 (our impl.) | 25.7M | 4.36 | 36.12 |
| ResNeXt-101 (our impl.) | 45.3M | 8.07 | 35.54 |
| SENet-50 (our impl.) | 28.6M | 4.51 | 35.31 |
| SENet-101 (our impl.) | 49.7M | 8.13 | 34.87 |
| SKNet-50 (our impl.) | 28.1M | 4.78 | 34.79 |
| SKNet-101 (our impl.) | 49.2M | 8.45 | 34.16 |
| FSRFNet-50 (ours) | 35.5M | 5.86 | 34.27 |
| FSRFNet-101 (ours) | 56.8M | 11.32 | 33.58 |
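The accuracy and cost figures in the table above can be read as a trade-off. The following is a minimal sketch, not part of the original experiments, that computes the change in top-1 error and the relative parameter and FLOP cost of FSRFNet against each baseline of the same depth. The names `RESULTS` and `overhead` are hypothetical helpers introduced only for this illustration; the numbers are copied from the table.

```python
# Figures copied from the table above:
# (parameters in millions, GFLOPs, top-1 error in %).
RESULTS = {
    "ResNeXt-50": (25.7, 4.36, 36.12),
    "ResNeXt-101": (45.3, 8.07, 35.54),
    "SENet-50": (28.6, 4.51, 35.31),
    "SENet-101": (49.7, 8.13, 34.87),
    "SKNet-50": (28.1, 4.78, 34.79),
    "SKNet-101": (49.2, 8.45, 34.16),
    "FSRFNet-50": (35.5, 5.86, 34.27),
    "FSRFNet-101": (56.8, 11.32, 33.58),
}


def overhead(model: str, baseline: str) -> dict:
    """Compare `model` against `baseline` using the tabulated figures.

    Hypothetical helper: returns the top-1 error difference in percentage
    points (negative means `model` is more accurate) and the ratios of
    parameter count and GFLOPs (above 1 means `model` is more expensive).
    """
    params, flops, err = RESULTS[model]
    base_params, base_flops, base_err = RESULTS[baseline]
    return {
        "err_delta_pts": err - base_err,
        "param_ratio": params / base_params,
        "flop_ratio": flops / base_flops,
    }


if __name__ == "__main__":
    for depth in ("50", "101"):
        for family in ("ResNeXt", "SENet", "SKNet"):
            cmp = overhead(f"FSRFNet-{depth}", f"{family}-{depth}")
            print(
                f"FSRFNet-{depth} vs {family}-{depth}: "
                f"{cmp['err_delta_pts']:+.2f} pts top-1 error, "
                f"{cmp['param_ratio']:.2f}x params, "
                f"{cmp['flop_ratio']:.2f}x GFLOPs"
            )
```

Reading the output this way makes the cost of each accuracy gain explicit, which the raw error column alone does not show.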

| ShuffleNetV2 | #P | MFLOPs | Top-1 Err. (%) |
|---|---|---|---|
|  | 1.47M | 37.62 | 47.82 |
|  | 1.59M | 36.25 | 46.51 |
|  | 1.54M | 38.78 | 45.01 |
|  | 1.87M | 40.41 | 43.33 |
|  | 2.35M | 142.35 | 40.17 |
|  | 2.72M | 140.93 | 38.64 |
|  | 2.67M | 143.66 | 37.41 |
|  | 3.23M | 144.57 | 35.62 |

| MobileNetV2 | #P | GFLOPs | Top-1 Err. (%) |
|---|---|---|---|
|  | 1.61M | 0.22 | 41.31 |
|  | 7.35M | 1.61 | 40.68 |
|  | 8.01M | 2.13 | 39.83 |
|  | 8.89M | 2.85 | 39.07 |
|  | 2.48M | 0.31 | 39.73 |
|  | 12.63M | 2.67 | 39.22 |
|  | 13.52M | 3.54 | 38.43 |
|  | 14.76M | 4.36 | 37.65 |

| ShuffleNetV2 | CIFAR-10 | CIFAR-100 |
|---|---|---|
|  | 6.98 | 39.31 |
|  | 6.73 | 37.87 |
|  | 6.61 | 36.38 |
|  | 6.46 | 34.64 |
|  | 6.29 | 33.24 |
|  | 6.17 | 32.32 |
|  | 5.93 | 30.71 |
|  | 5.85 | 29.68 |

| Models | #P | CIFAR-10 | CIFAR-100 |
|---|---|---|---|
|  | 24.5M | 5.74 | 28.75 |
|  | 32.9M | 5.58 | 28.37 |
|  | 66.7M | 5.47 | 27.99 |
|  | 34.1M | 5.63 | 28.42 |
|  | 26.8M | 5.42 | 27.68 |
|  | 29.6M | 5.26 | 27.51 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ma, X.; Yang, Z.; Yu, Z. FSRFNet: Feature-selective and Spatial Receptive Fields Networks. Appl. Sci. 2019, 9, 3954. https://doi.org/10.3390/app9193954