Well-Distributed Feature Extraction for Image Registration Using Histogram Matching

Abstract

1. Introduction

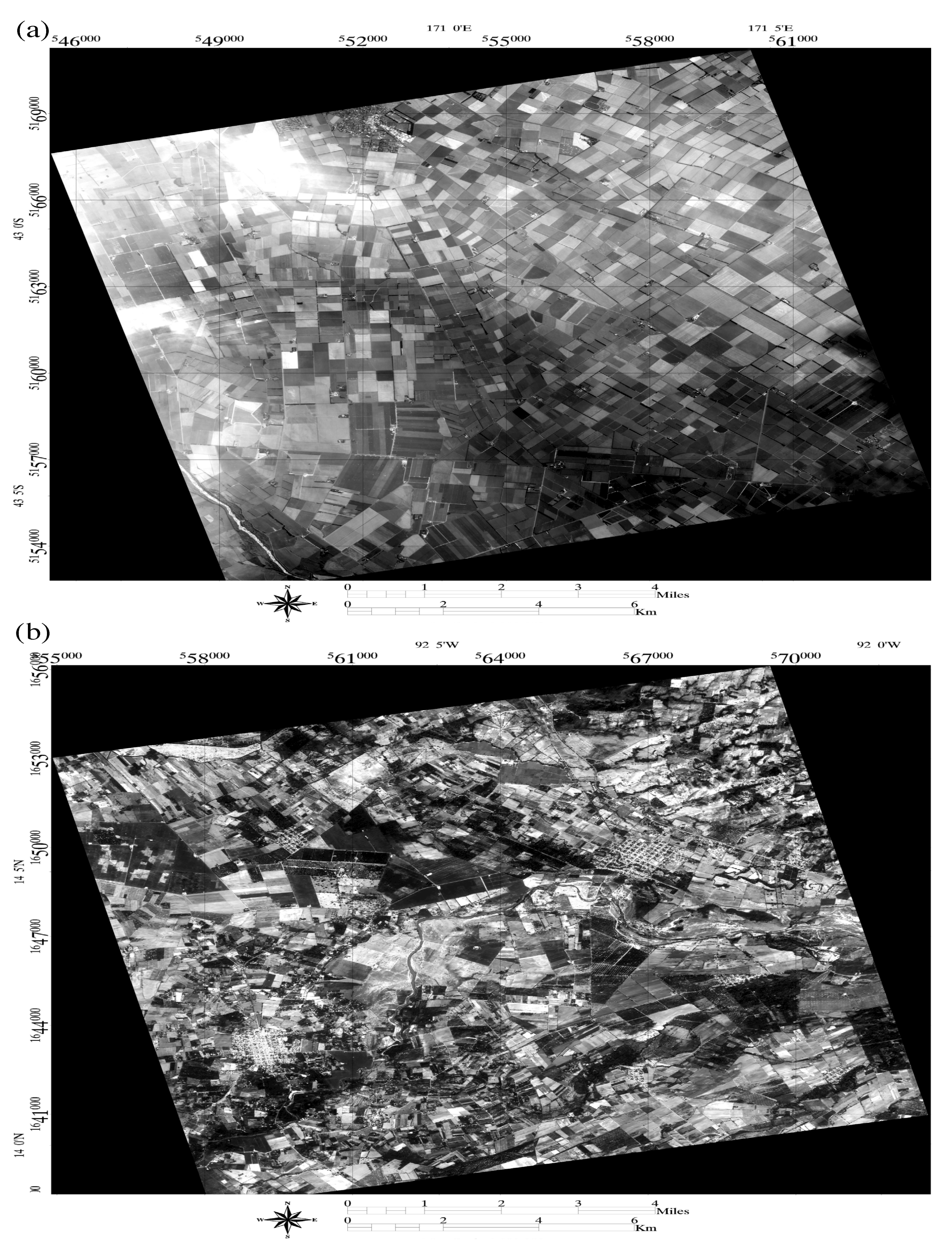

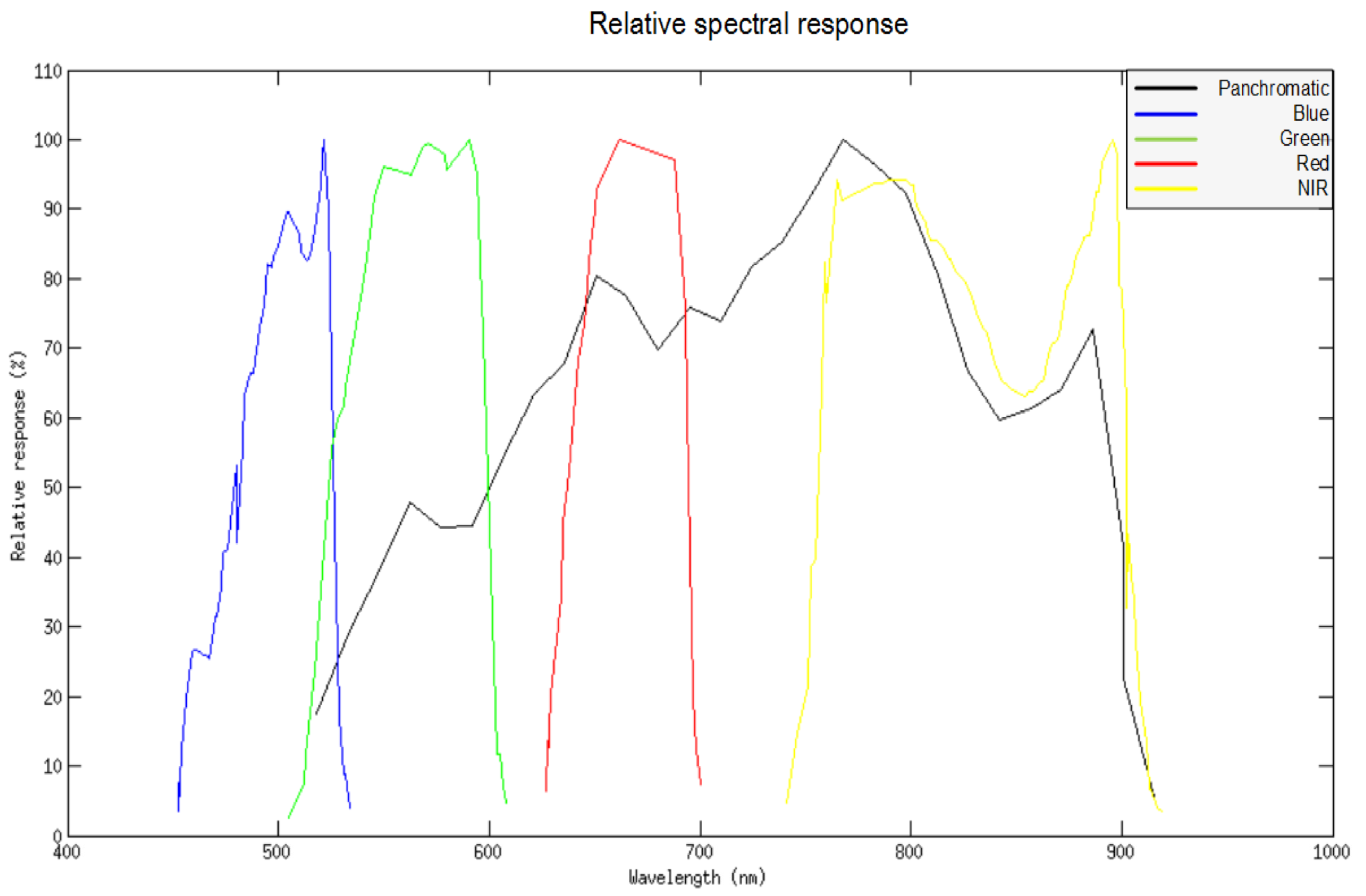

2. Datasets

3. Method

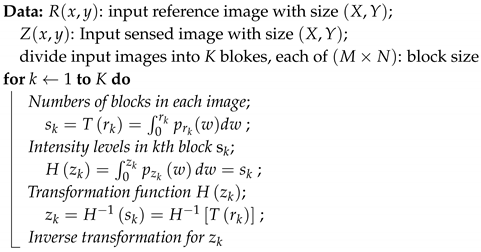

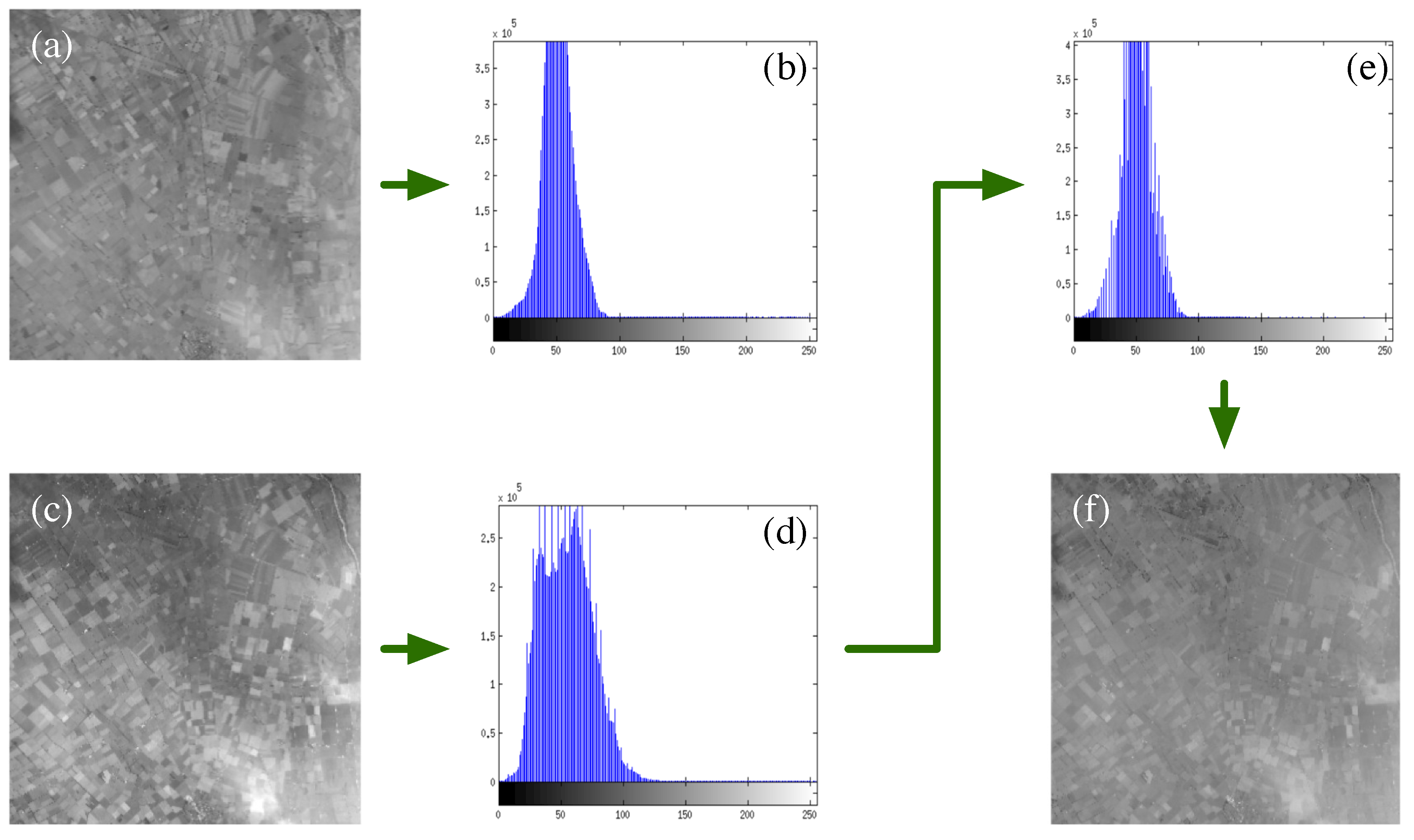

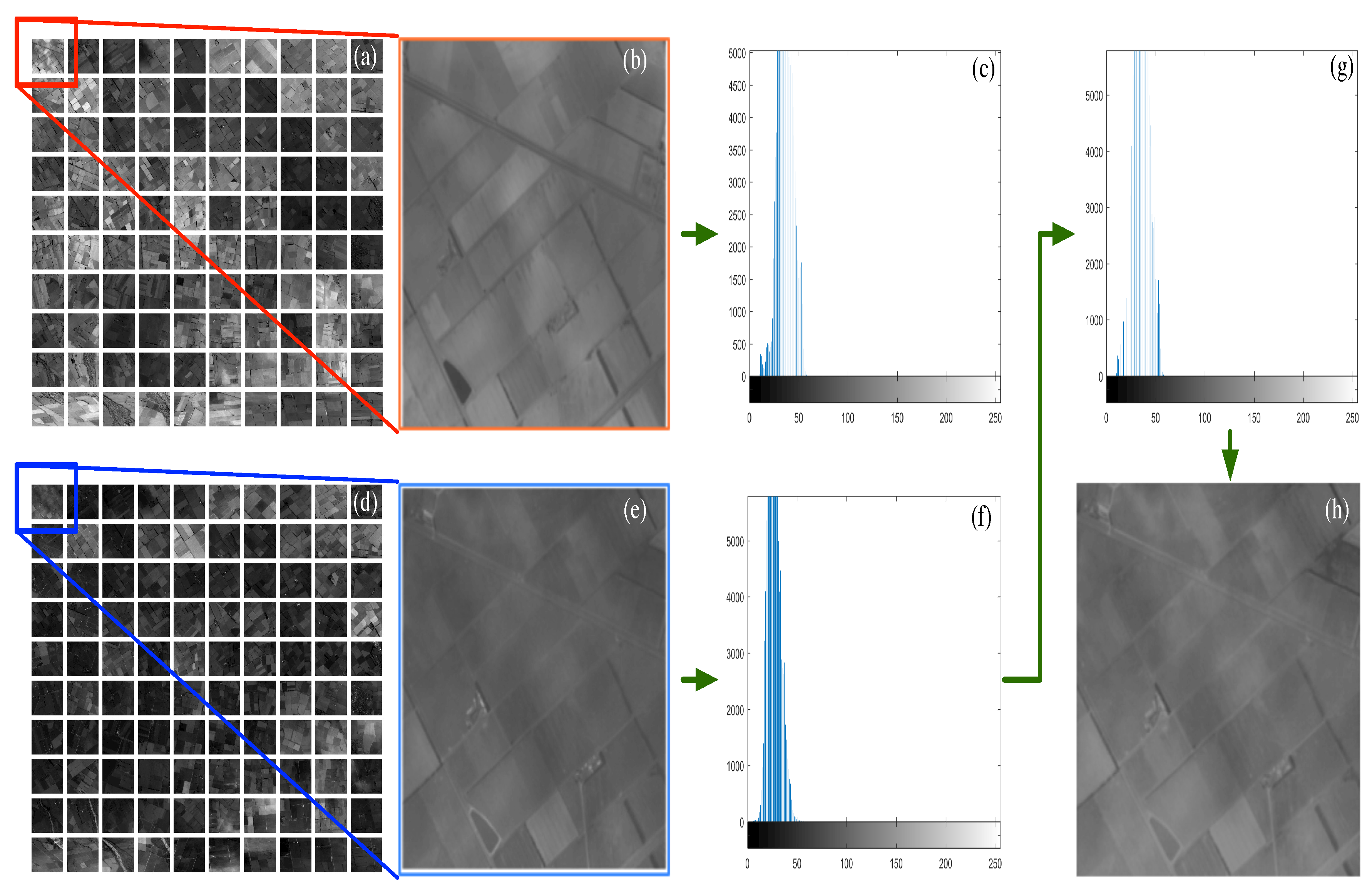

3.1. Contrast Adjustment Using Histogram Matching

Algorithm 1: Local histogram matching.
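The steps of Algorithm 1 are not reproduced in this text. The following Python sketch illustrates only the underlying idea. It applies standard CDF-based histogram matching independently to each non-overlapping block of the sensed image, using the co-located block of the reference image as the target. The block tiling, the `block` parameter, the 8-bit default for `levels`, and all function names are assumptions for illustration. This is not the authors' Algorithm 1.

```python
from typing import List

# A grayscale image as rows of integer levels in [0, levels - 1].
Image = List[List[int]]


def cdf(values: List[int], levels: int) -> List[float]:
    """Normalized cumulative histogram of a list of intensity levels."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    out: List[float] = []
    running = 0
    for h in hist:
        running += h
        out.append(running / len(values))
    return out


def match_values(src: List[int], ref: List[int], levels: int) -> List[int]:
    """Remap src levels so that their CDF follows the CDF of ref."""
    c_src, c_ref = cdf(src, levels), cdf(ref, levels)
    lut: List[int] = []
    j = 0
    for i in range(levels):
        # Smallest reference level whose CDF reaches the source CDF at level i;
        # c_src is non-decreasing, so j never has to move backwards.
        while j < levels - 1 and c_ref[j] < c_src[i]:
            j += 1
        lut.append(j)
    return [lut[v] for v in src]


def local_histogram_match(sensed: Image, reference: Image, block: int,
                          levels: int = 256) -> Image:
    """Hypothetical block-wise scheme: match each block of `sensed` to the
    co-located block of `reference` (both images assumed to be the same size)."""
    rows, cols = len(sensed), len(sensed[0])
    out = [row[:] for row in sensed]
    for r0 in range(0, rows, block):
        for c0 in range(0, cols, block):
            coords = [(r, c) for r in range(r0, min(r0 + block, rows))
                      for c in range(c0, min(c0 + block, cols))]
            src = [sensed[r][c] for r, c in coords]
            ref = [reference[r][c] for r, c in coords]
            for (r, c), v in zip(coords, match_values(src, ref, levels)):
                out[r][c] = v
    return out
```

Each block is matched independently, so a practical version would likely need overlapping or blended blocks to avoid visible seams at block borders. That refinement is omitted here.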

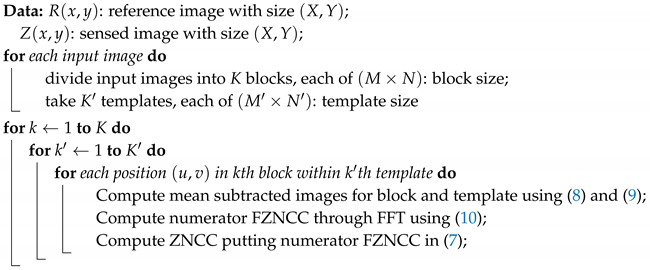

3.2. Feature Extraction

Algorithm 2: Feature extraction.
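Algorithm 2 is also not reproduced here, and this excerpt does not define the FZNCC measure. The Python sketch below shows only the generic area-based pipeline that SSD, NCC, and FZNCC belong to. Candidate control points are placed on a regular grid, which is one simple way to obtain well-distributed points. Each reference template is then located in the sensed image by maximizing plain zero-mean normalized cross-correlation (ZNCC) within a search window. The grid spacing `step`, template half-size `half`, search radius `search`, and the function names are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Image = List[List[float]]


def grid_points(rows: int, cols: int, step: int, half: int) -> List[Tuple[int, int]]:
    """Regularly spaced candidate CPs, kept `half` pixels away from the border."""
    return [(r, c) for r in range(half, rows - half, step)
            for c in range(half, cols - half, step)]


def patch(img: Image, r: int, c: int, half: int) -> List[float]:
    """Flattened (2*half+1) x (2*half+1) window centred on (r, c)."""
    return [img[i][j] for i in range(r - half, r + half + 1)
            for j in range(c - half, c + half + 1)]


def zncc(a: List[float], b: List[float]) -> float:
    """Zero-mean normalized cross-correlation in [-1, 1]; 0.0 for flat patches."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / den if den > 0 else 0.0


def match_point(ref: Image, sen: Image, r: int, c: int, half: int,
                search: int) -> Optional[Tuple[int, int, float]]:
    """Position (row, col, score) in `sen` that maximizes ZNCC with the
    reference template centred on (r, c), searched within +/- `search` pixels."""
    tmpl = patch(ref, r, c, half)
    best: Optional[Tuple[int, int, float]] = None
    for dr in range(-search, search + 1):
        for dc in range(-search, search + 1):
            rr, cc = r + dr, c + dc
            # Skip candidate windows that would extend past the image border.
            if half <= rr < len(sen) - half and half <= cc < len(sen[0]) - half:
                s = zncc(tmpl, patch(sen, rr, cc, half))
                if best is None or s > best[2]:
                    best = (rr, cc, s)
    return best
```

ZNCC subtracts each window's mean and divides by its energy, so the score is insensitive to uniform brightness offsets and gain changes between the two images.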

3.3. Feature Matching, Transformation, and Re-Sampling
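This section names the matching, transformation, and re-sampling stages, but the details are not in this text. The following Python sketch assumes, for illustration only, a global affine model. It fits the transformation to matched CPs by least squares (normal equations) and reports the RMSE of the CP residuals, the accuracy measure used in the conclusions. The paper's actual transformation model and the exact point set on which it evaluates RMSE are not stated here. `solve3`, `fit_affine`, and `rmse` are hypothetical names.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [v] for row, v in zip(a, b)]
    for k in range(3):
        p = max(range(k, 3), key=lambda i: abs(m[i][k]))
        m[k], m[p] = m[p], m[k]
        for i in range(k + 1, 3):
            f = m[i][k] / m[k][k]
            for j in range(k, 4):
                m[i][j] -= f * m[k][j]
    x = [0.0] * 3
    for i in (2, 1, 0):  # back substitution
        x[i] = (m[i][3] - sum(m[i][j] * x[j] for j in range(i + 1, 3))) / m[i][i]
    return x


def fit_affine(src: Sequence[Point], dst: Sequence[Point]) -> Tuple[List[float], List[float]]:
    """Least-squares affine map x' = a0*x + a1*y + a2, y' = b0*x + b1*y + b2."""
    rows = [(x, y, 1.0) for x, y in src]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atx = [sum(r[i] * d[0] for r, d in zip(rows, dst)) for i in range(3)]
    aty = [sum(r[i] * d[1] for r, d in zip(rows, dst)) for i in range(3)]
    return solve3(ata, atx), solve3(ata, aty)


def rmse(src: Sequence[Point], dst: Sequence[Point],
         a: Sequence[float], b: Sequence[float]) -> float:
    """Root-mean-square distance between transformed src points and dst points."""
    sq = 0.0
    for (x, y), (u, v) in zip(src, dst):
        px = a[0] * x + a[1] * y + a[2]
        py = b[0] * x + b[1] * y + b[2]
        sq += (px - u) ** 2 + (py - v) ** 2
    return math.sqrt(sq / len(src))
```

At least three non-collinear CPs are needed for the normal equations to be solvable. With the thousands of refined CPs reported in the results, the least-squares fit averages out individual matching errors.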

4. Results

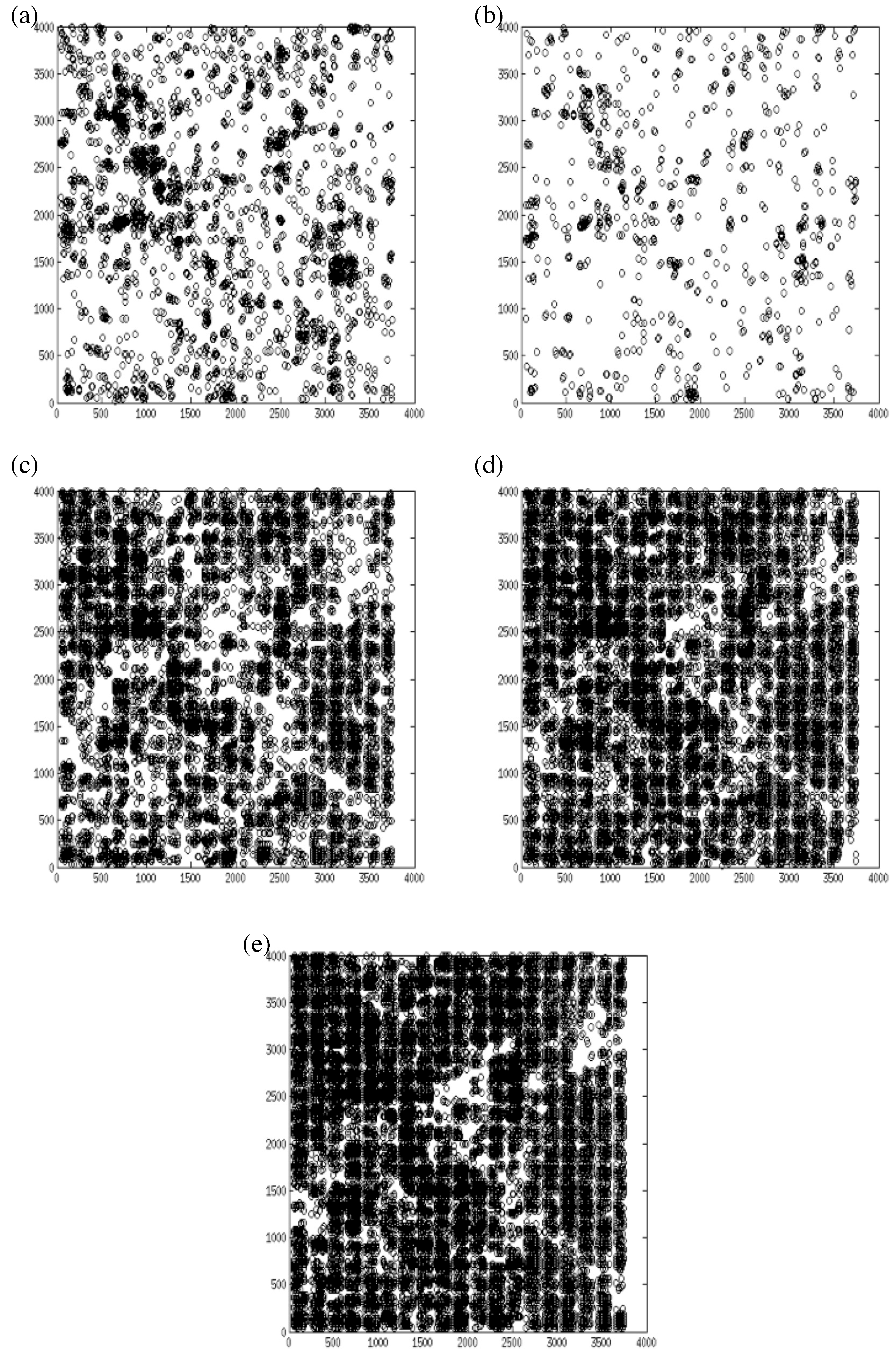

4.1. Quantitative Analysis

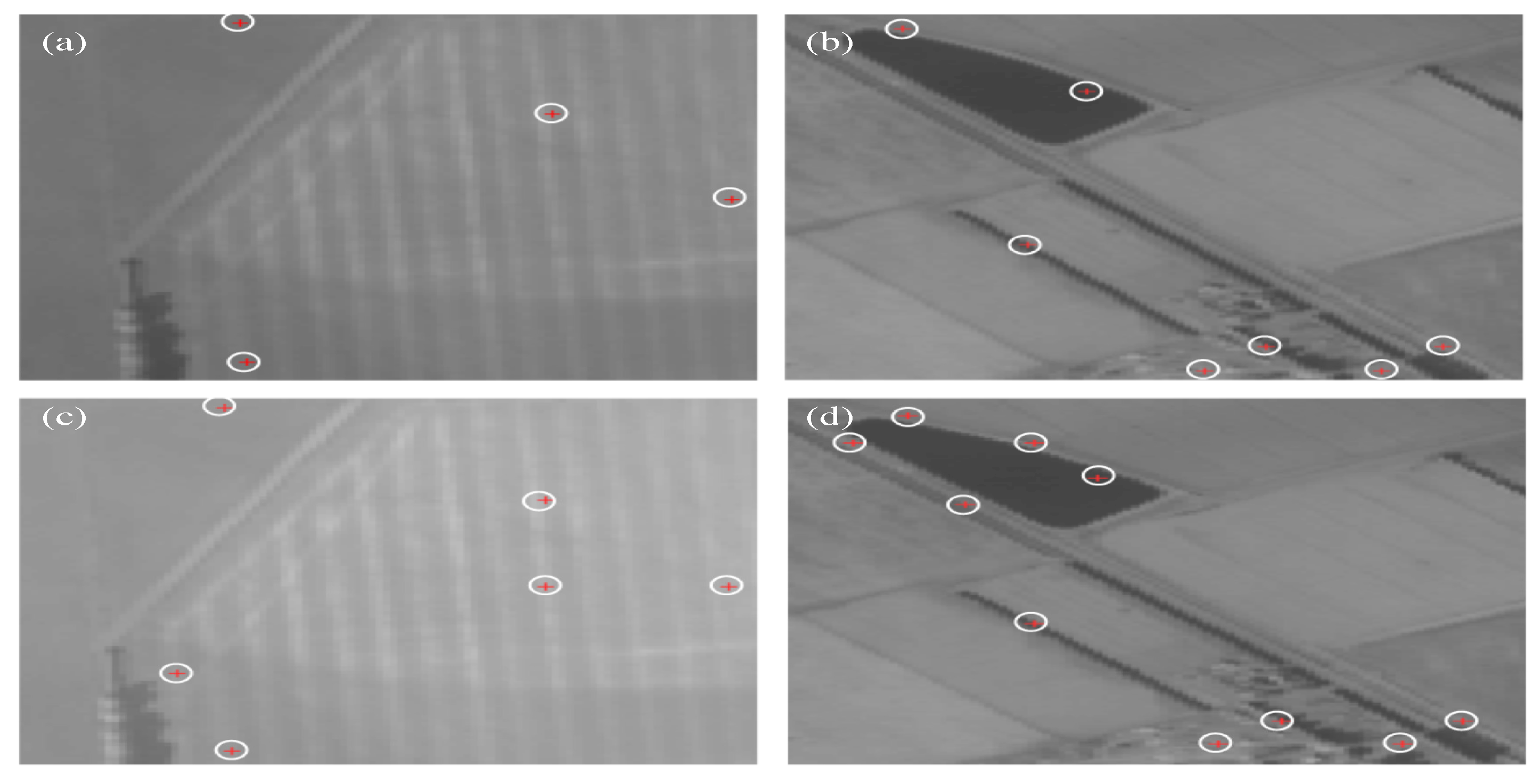

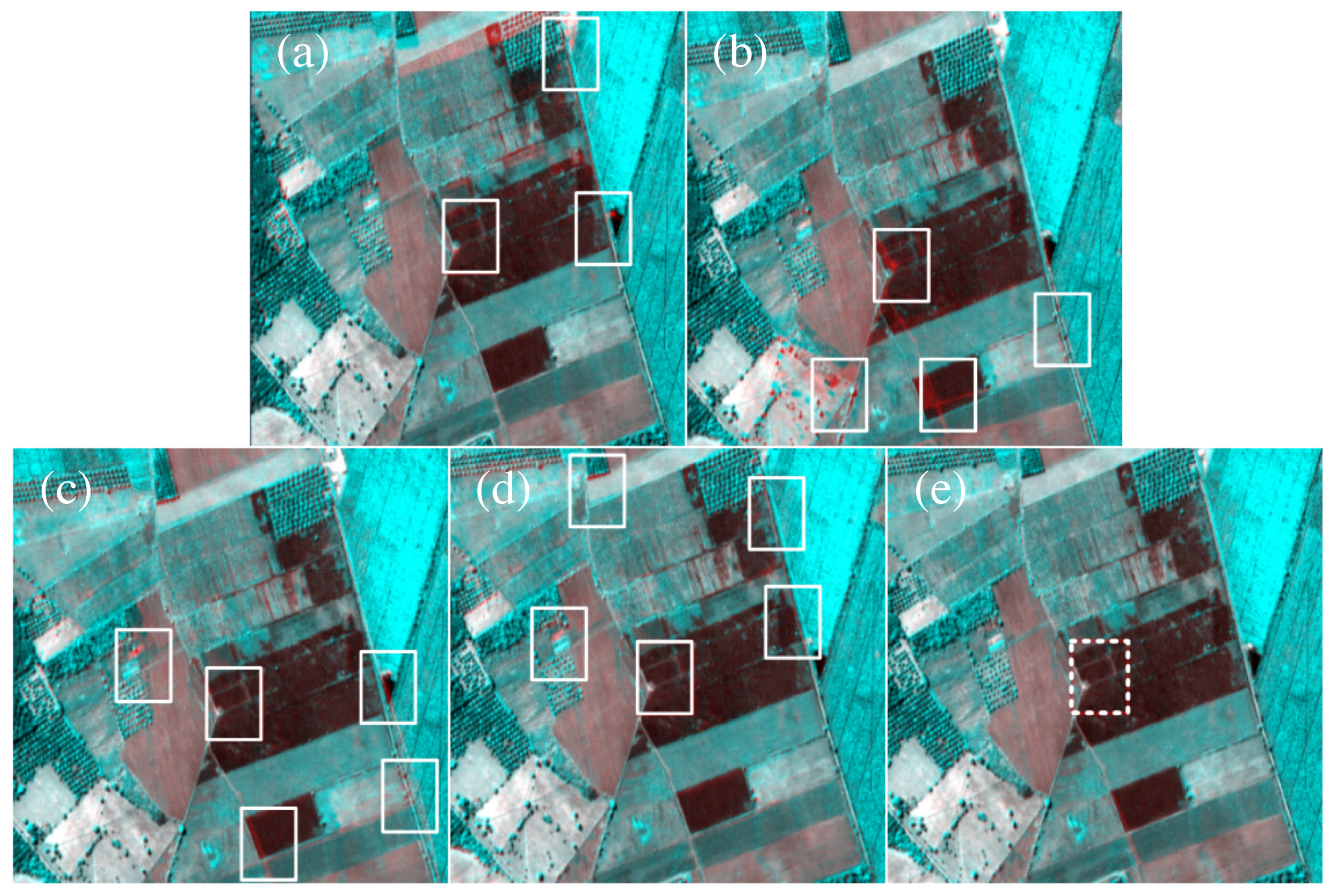

4.2. Qualitative Analysis

5. Conclusions

- Accuracy: A lower RMSE indicates a more accurate registration. The proposed method yielded lower RMSE values than the conventional methods (SIFT, SURF, SSD, and NCC) and therefore provided more accurate registration results.

- Control point ratio (CPR): On all three datasets, the feature-based methods (SIFT and SURF) yielded smaller CPR values than the area-based methods (SSD and NCC). Among the area-based methods, the proposed FZNCC method achieved the highest CPR.
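The CPR values in the results are consistent with CPR = refined CPs / initial CPs, computed separately for the reference and sensed images. For example, 8235 / 24,320 ≈ 0.3386 for FZNCC on Set 1. The short Python check below recomputes the Set 1 rows from the control-point counts. The definition is inferred from the tables rather than stated in this text, and `cpr` and `SET1_COUNTS` are illustrative names.

```python
# Set 1 counts copied from the control-point table:
# method -> (initial CPs in reference image, initial CPs in sensed image, refined CPs)
SET1_COUNTS = {
    "SIFT": (616_244, 383_285, 2558),
    "SURF": (48_010, 28_528, 1052),
    "SSD": (24_320, 24_320, 3570),
    "NCC": (24_320, 24_320, 6449),
    "FZNCC": (24_320, 24_320, 8235),
}


def cpr(initial_cps: int, refined_cps: int) -> float:
    """Control point ratio, assumed here to be refined CPs / initial CPs."""
    return refined_cps / initial_cps


for method, (ref_init, sen_init, refined) in SET1_COUNTS.items():
    # Prints the same four-decimal values as the Set 1 rows of the CPR table.
    print(f"{method:<6} reference={cpr(ref_init, refined):.4f} "
          f"sensed={cpr(sen_init, refined):.4f}")
```

The area-based methods start from the same 24,320 initial CPs in both images, so their reference and sensed ratios coincide. SIFT and SURF, by contrast, detect different numbers of keypoints in each image.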

Author Contributions

Funding

Conflicts of Interest

References

- Wong, A.; Orchard, J. Efficient FFT-accelerated approach to invariant optical–LIDAR registration. IEEE Trans. Geosci. Remote Sens. 2008, 46, 3917–3925.

- Lee, I.; Seo, D.C.; Choi, T.S. Entropy-Based Block Processing for Satellite Image Registration. Entropy 2012, 14, 2397–2407.

- Huo, S.; Pan, C.; Huo, L.; Zhou, Z. Multilevel SIFT matching for large-size VHR image registration. IEEE Geosci. Remote Sens. Lett. 2012, 9, 171–175.

- Zitova, B.; Flusser, J. Image registration methods: A survey. Image Vis. Comput. 2003, 21, 977–1000.

- Hong, G.; Zhang, Y. Wavelet-based image registration technique for high-resolution remote sensing images. Comput. Geosci. 2008, 34, 1708–1720.

- Le Moigne, J.; Netanyahu, N.S.; Eastman, R.D. Image Registration for Remote Sensing; Cambridge University Press: Cambridge, UK, 2011.

- Goshtasby, A.A. Image Registration: Principles, Tools and Methods; Springer Science & Business Media: Berlin, Germany, 2012.

- Sedaghat, A.; Mokhtarzade, M.; Ebadi, H. Uniform Robust Scale-Invariant Feature Matching for Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2011, 49, 4516–4527.

- Song, R.; Szymanski, J. Well-distributed SIFT features. Electron. Lett. 2009, 45, 308–310.

- Lee, I.H.; Choi, T.S. Accurate Registration Using Adaptive Block Processing for Multispectral Images. IEEE Trans. Circuits Syst. Video Technol. 2013, 23, 1491–1501.

- Lowe, D. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157.

- Lowe, D. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Yi, Z.; Zhiguo, C.; Yang, X. Multi-spectral remote image registration based on SIFT. Electron. Lett. 2008, 44, 107–108.

- Song, Z.; Li, S.; George, T. Remote sensing image registration approach based on a retrofitted SIFT algorithm and Lissajous-curve trajectories. Opt. Express 2010, 18, 513–522.

- Mikolajczyk, K.; Schmid, C. A performance evaluation of local descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1615–1630.

- Ke, Y.; Sukthankar, R. PCA-SIFT: A more distinctive representation for local image descriptors. CVPR 2004, 4, 506–513.

- Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded up robust features. In Computer Vision–ECCV 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417.

- Juan, L.; Gwun, O. A comparison of SIFT, PCA-SIFT and SURF. Int. J. Image Process. (IJIP) 2010, 3, 143.

- Chen, H.; Arora, M.; Varshney, P. Mutual information-based image registration for remote sensing data. Int. J. Remote Sens. 2003, 24, 3701–3706.

- Ma, J.; Chan, J.; Canters, F. Fully automatic subpixel image registration of multiangle CHRIS/Proba data. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2829–2839.

- Lewis, J. Fast template matching. Vis. Interface 1995, 95, 120–123.

- Goshtasby, A.; Gage, S.; Bartholic, J. A two-stage cross correlation approach to template matching. IEEE Trans. Pattern Anal. Mach. Intell. 1984, 374–378.

- Rueckert, D.; Sonoda, L.; Hayes, C.; Hill, D.; Leach, M.; Hawkes, D. Nonrigid registration using free-form deformations: Application to breast MR images. IEEE Trans. Med. Imaging 1999, 18, 712–721.

- Keys, R. Cubic convolution interpolation for digital image processing. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 1153–1160.

- Qing, Z.; Wu, B.; Xu, Z.X. Seed point selection method for triangle constrained image matching propagation. IEEE Geosci. Remote Sens. Lett. 2006, 3, 207–211.

| CPs | Data | SIFT | SURF | SSD | NCC | FZNCC |
|---|---|---|---|---|---|---|
| Initial CPs (Reference image) | Set 1 | 616,244 | 48,010 | 24,320 | 24,320 | 24,320 |
| Initial CPs (Sensed image) | Set 1 | 383,285 | 28,528 | 24,320 | 24,320 | 24,320 |
| Corresponding CPs | Set 1 | 6970 | 5038 | 24,320 | 24,320 | 24,320 |
| Refined CPs | Set 1 | 2558 | 1052 | 3570 | 6449 | 8235 |
| Initial CPs (Reference image) | Set 2 | 67,902 | 30,897 | 24,320 | 24,320 | 24,320 |
| Initial CPs (Sensed image) | Set 2 | 66,740 | 49,253 | 24,320 | 24,320 | 24,320 |
| Corresponding CPs | Set 2 | 10,815 | 3984 | 24,320 | 24,320 | 24,320 |
| Refined CPs | Set 2 | 6465 | 1939 | 6767 | 7883 | 10,269 |
| Initial CPs (Reference image) | Set 3 | 157,492 | 37,714 | 24,320 | 24,320 | 24,320 |
| Initial CPs (Sensed image) | Set 3 | 148,849 | 43,155 | 24,320 | 24,320 | 24,320 |
| Corresponding CPs | Set 3 | 9878 | 3304 | 24,320 | 24,320 | 24,320 |
| Refined CPs | Set 3 | 4913 | 1106 | 8927 | 11,622 | 15,807 |

| Image | Data | SIFT | SURF | SSD | NCC | FZNCC |
|---|---|---|---|---|---|---|
| Reference image | Set 1 | 0.0042 | 0.0219 | 0.1468 | 0.2652 | 0.3386 |
| Sensed image | Set 1 | 0.0067 | 0.0369 | 0.1468 | 0.2652 | 0.3386 |
| Reference image | Set 2 | 0.0952 | 0.0628 | 0.2782 | 0.3241 | 0.4222 |
| Sensed image | Set 2 | 0.0969 | 0.0394 | 0.2782 | 0.3241 | 0.4222 |
| Reference image | Set 3 | 0.0312 | 0.0293 | 0.3671 | 0.4779 | 0.6500 |
| Sensed image | Set 3 | 0.0330 | 0.0256 | 0.3671 | 0.4779 | 0.6500 |

| Data | SIFT | SURF | SSD | NCC | FZNCC |
|---|---|---|---|---|---|
| Set 1 | 2.1606 | 3.5117 | 3.5218 | 2.8628 | 1.7490 |
| Set 2 | 1.3509 | 1.8455 | 2.5200 | 1.9755 | 1.0249 |
| Set 3 | 1.2126 | 2.1384 | 1.6349 | 1.3708 | 0.8797 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Mahmood, M.T.; Lee, I.H. Well-Distributed Feature Extraction for Image Registration Using Histogram Matching. Appl. Sci. 2019, 9, 3487. https://doi.org/10.3390/app9173487
